Common Docker Images

MySQL

Start a mysql server instance

Starting a MySQL instance is simple:

docker run -d --name mysql -p 3306:3306 -e MYSQL_ROOT_PASSWORD=123456 -v ~/Data\&Log/dockerdata/mysql/:/var/lib/mysql mysql

Environment Variables

When you start the mysql image, you can adjust the configuration of the MySQL instance by passing one or more environment variables on the docker run command line. Do note that none of the variables below will have any effect if you start the container with a data directory that already contains a database: any pre-existing database will always be left untouched on container startup.

See also https://dev.mysql.com/doc/refman/5.7/en/environment-variables.html for documentation of environment variables which MySQL itself respects (especially variables like MYSQL_HOST, which is known to cause issues when used with this image).

MYSQL_ROOT_PASSWORD

This variable is mandatory and specifies the password that will be set for the MySQL root superuser account. In the example above, it is set to 123456.

MYSQL_DATABASE

This variable is optional and allows you to specify the name of a database to be created on container startup. If a user/password was supplied (see below), then that user will be granted superuser access (corresponding to GRANT ALL) to this database.

MYSQL_USER,MYSQL_PASSWORD

These variables are optional, used in conjunction to create a new user and to set that user's password. This user will be granted superuser permissions (see above) for the database specified by the MYSQL_DATABASE variable. Both variables are required for a user to be created.

Note that there is no need to use this mechanism to create the root superuser; that user is created by default with the password specified by the MYSQL_ROOT_PASSWORD variable.

MYSQL_ALLOW_EMPTY_PASSWORD

This is an optional variable. Set to a non-empty value, like yes, to allow the container to be started with a blank password for the root user. NOTE: Setting this variable to yes is not recommended unless you really know what you are doing, since this will leave your MySQL instance completely unprotected, allowing anyone to gain complete superuser access.

MYSQL_RANDOM_ROOT_PASSWORD

This is an optional variable. Set to a non-empty value, like yes, to generate a random initial password for the root user (using pwgen). The generated root password will be printed to stdout (GENERATED ROOT PASSWORD: .....).

MYSQL_ONETIME_PASSWORD

Sets the root user (not the user specified in MYSQL_USER!) as expired once init is complete, forcing a password change on first login. Any non-empty value will activate this setting. NOTE: This feature is supported on MySQL 5.6+ only. Using this option on MySQL 5.5 will throw an appropriate error during initialization.

MYSQL_INITDB_SKIP_TZINFO

By default, the entrypoint script automatically loads the timezone data needed for the CONVERT_TZ() function. If it is not needed, any non-empty value disables timezone loading.
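The rules above can be checked before the container is started. The following is a minimal Python sketch, not part of the image: `check_mysql_env` is a hypothetical helper, and it assumes (as the variable descriptions suggest) that a fresh data directory needs one of MYSQL_ROOT_PASSWORD, MYSQL_ALLOW_EMPTY_PASSWORD or MYSQL_RANDOM_ROOT_PASSWORD. It ignores the _FILE variants described below.

```python
from __future__ import annotations

from typing import Mapping

# Hypothetical pre-flight check; rules paraphrased from the variable descriptions above.
ROOT_PASSWORD_OPTIONS = (
    "MYSQL_ROOT_PASSWORD",
    "MYSQL_ALLOW_EMPTY_PASSWORD",
    "MYSQL_RANDOM_ROOT_PASSWORD",
)


def check_mysql_env(env: Mapping[str, str]) -> list[str]:
    """Return a list of problems with the given environment; an empty list means OK."""
    problems = []
    # One way of setting the root password must be chosen.
    if not any(env.get(name) for name in ROOT_PASSWORD_OPTIONS):
        problems.append("set one of: " + ", ".join(ROOT_PASSWORD_OPTIONS))
    # MYSQL_USER and MYSQL_PASSWORD only create a user when both are present.
    if bool(env.get("MYSQL_USER")) != bool(env.get("MYSQL_PASSWORD")):
        problems.append("MYSQL_USER and MYSQL_PASSWORD must be set together")
    # root always exists; MYSQL_USER is meant for an additional account.
    if env.get("MYSQL_USER") == "root":
        problems.append("MYSQL_USER=root is not needed; root always exists")
    return problems
```

For example, `check_mysql_env({"MYSQL_ROOT_PASSWORD": "123456"})` returns an empty list, and `check_mysql_env(os.environ)` checks the current shell environment.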

Docker Secrets

As an alternative to passing sensitive information via environment variables, _FILE may be appended to the previously listed environment variables, causing the initialization script to load the values for those variables from files present in the container. In particular, this can be used to load passwords from Docker secrets stored in /run/secrets/<secret_name> files. For example:

$ docker run --name some-mysql -e MYSQL_ROOT_PASSWORD_FILE=/run/secrets/mysql-root -d mysql:tag

Currently, this is only supported for MYSQL_ROOT_PASSWORD, MYSQL_ROOT_HOST, MYSQL_DATABASE, MYSQL_USER, and MYSQL_PASSWORD.
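The _FILE convention can be sketched as follows. `file_env` is a hypothetical Python helper modelled on the behaviour described above; rejecting VAR and VAR_FILE when both are set, and stripping trailing newlines from the secret file, are assumptions about the entrypoint script rather than documented guarantees.

```python
from typing import Mapping, Optional


def file_env(env: Mapping[str, str], name: str, default: Optional[str] = None) -> Optional[str]:
    """Resolve a setting from NAME or from the file named by NAME_FILE (hypothetical helper)."""
    file_var = f"{name}_FILE"
    if env.get(name) and env.get(file_var):
        # Assumption: setting both forms at once is rejected as ambiguous.
        raise ValueError(f"both {name} and {file_var} are set")
    if env.get(file_var):
        # e.g. MYSQL_ROOT_PASSWORD_FILE=/run/secrets/mysql-root
        with open(env[file_var], encoding="utf-8") as fh:
            # Drop trailing newlines, as shell command substitution would.
            return fh.read().rstrip("\n")
    return env.get(name, default)
```

Passing `os.environ` as `env` resolves the variables of the current process.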

Where to Store Data

Important note: There are several ways to store data used by applications that run in Docker containers. We encourage users of the mysql images to familiarize themselves with the options available, including:

- Let Docker manage the storage of your database data by writing the database files to disk on the host system using its own internal volume management. This is the default and is easy and fairly transparent to the user. The downside is that the files may be hard to locate for tools and applications that run directly on the host system, i.e. outside containers.

- Create a data directory on the host system (outside the container) and mount this to a directory visible from inside the container. This places the database files in a known location on the host system, and makes it easy for tools and applications on the host system to access the files. The downside is that the user needs to make sure that the directory exists, and that e.g. directory permissions and other security mechanisms on the host system are set up correctly.

The Docker documentation is a good starting point for understanding the different storage options and variations, and there are multiple blogs and forum postings that discuss and give advice in this area. We will simply show the basic procedure here for the latter option above:

1. Create a data directory on a suitable volume on your host system, e.g. /my/own/datadir.

2. Start your mysql container like this:

$ docker run --name some-mysql -v /my/own/datadir:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=my-secret-pw -d mysql:tag

The -v /my/own/datadir:/var/lib/mysql part of the command mounts the /my/own/datadir directory from the underlying host system as /var/lib/mysql inside the container, where MySQL by default will write its data files.
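The ~/Data\&Log paths used throughout this article escape & because the shell would otherwise treat it as the background operator. When the command is generated by a script, the quoting can be left to Python's shlex module instead. A minimal sketch; `mysql_run_command` is a hypothetical helper and /home/me/Data&Log/... is an example path:

```python
import shlex


def mysql_run_command(data_dir: str, root_password: str, name: str = "mysql") -> str:
    """Build a shell-safe `docker run` line for a MySQL container (hypothetical helper)."""
    args = [
        "docker", "run", "-d",
        "--name", name,
        "-p", "3306:3306",
        "-e", f"MYSQL_ROOT_PASSWORD={root_password}",
        # Bind-mount the host directory over MySQL's default data directory.
        "-v", f"{data_dir}:/var/lib/mysql",
        "mysql",
    ]
    # shlex.join quotes any argument containing shell metacharacters such as '&'.
    return shlex.join(args)


print(mysql_run_command("/home/me/Data&Log/dockerdata/mysql", "123456"))
# docker run -d --name mysql -p 3306:3306 -e MYSQL_ROOT_PASSWORD=123456 -v '/home/me/Data&Log/dockerdata/mysql:/var/lib/mysql' mysql
```

As noted above for host directories, create the directory first, e.g. with `Path(data_dir).mkdir(parents=True, exist_ok=True)` from pathlib.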

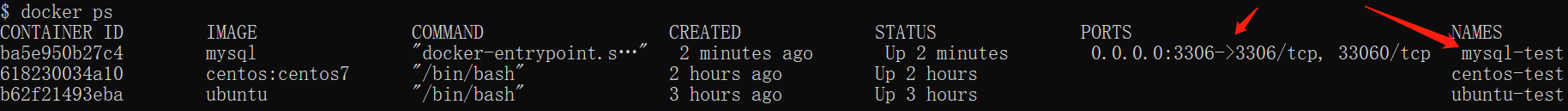

Installation succeeded

Check that the container is running with the docker ps command:

$ docker ps

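Enable remote connections so that tools on your local machine can connect to the MySQL server running in Docker:

- Open a shell in the container and log in as root with the password 123456:

$ docker exec -it mysql bash

/# mysql -u root -p

- In the MySQL client, grant root all privileges when connecting from any host ('%'):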

GRANT ALL ON *.* TO 'root'@'%';

flush privileges;
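If a local tool still cannot connect, it helps to confirm first that the published port 3306 is reachable from the client machine at all (for example, not blocked by a firewall or cloud security group). A minimal Python sketch; a successful TCP connection says nothing about whether the GRANT above took effect:

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, host unreachable, or timed out.
        return False
```

For example, `port_open("127.0.0.1", 3306)` on the Docker host, or the server's IP address from another machine.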

MongoDB

docker run --name mongo -p 27017:27017 -v ~/Data\&Log/dockerdata/mongo:/data/db -e MONGO_INITDB_ROOT_USERNAME=admin -e MONGO_INITDB_ROOT_PASSWORD=admin -d mongo

Connect to MongoDB from another Docker container

The MongoDB server in the image listens on the standard MongoDB port, 27017, so connecting via Docker networks will be the same as connecting to a remote mongod. The following example starts another MongoDB container instance and runs the mongo command line client against the original MongoDB container from the example above, allowing you to execute MongoDB statements against your database instance:

$ docker run -it --network some-network --rm mongo mongo --host some-mongo test

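... where some-mongo is the name of your original mongo container (mongo in the run command above) and some-network is a user-defined Docker network that both containers are attached to. Because the run command above sets MONGO_INITDB_ROOT_USERNAME and MONGO_INITDB_ROOT_PASSWORD, the client must also authenticate, e.g. with -u admin -p admin --authenticationDatabase admin. Images for MongoDB 6.0 and later ship mongosh instead of the legacy mongo shell.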
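From the host, the same server is reachable through the published port 27017. Because the run command sets MONGO_INITDB_ROOT_USERNAME/PASSWORD, that root user lives in the admin database, so a connection string needs authSource=admin. A small sketch that builds such a string; the helper name and the default database test are illustrative assumptions:

```python
from urllib.parse import quote_plus


def mongo_uri(user: str, password: str, host: str = "localhost", port: int = 27017,
              database: str = "test") -> str:
    """Build a connection string for the root user created by MONGO_INITDB_ROOT_*."""
    # Credentials must be percent-encoded if they contain characters such as '@' or ':'.
    creds = f"{quote_plus(user)}:{quote_plus(password)}"
    # The root user is created in the admin database, so authenticate against it.
    return f"mongodb://{creds}@{host}:{port}/{database}?authSource=admin"
```

With the credentials from the run command, `mongo_uri("admin", "admin")` yields `mongodb://admin:admin@localhost:27017/test?authSource=admin`, which can be passed to a client such as mongosh.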

Redis

start a redis instance

$ docker run --name redis -d -p 6379:6379 redis

start with persistent storage

$ docker run --name some-redis -d redis redis-server --appendonly yes

If persistence is enabled, data is stored in the VOLUME /data, which can be used with --volumes-from some-volume-container or -v /docker/host/dir:/data (see docs.docker volumes).

docker run --name some-redis -v ~/Data\&Log/dockerdata/redis:/data -d redis redis-server --appendonly yes

For more about Redis Persistence, see http://redis.io/topics/persistence.

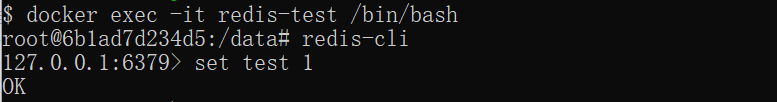

connecting via redis-cli

Next, connect with redis-cli to test the Redis service.

$ docker run -it --network some-network --rm redis redis-cli -h some-redis

Or open a shell in the container (named redis in the run command above) and run redis-cli from there:

$ docker exec -it redis /bin/bash

If Chinese (or other non-ASCII) text shows up garbled, use:

redis-cli --raw
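The garbling is not an encoding bug in Redis: without --raw, redis-cli prints string replies in a quoted, escaped form, so each UTF-8 byte of a Chinese character appears as a \xNN escape. --raw prints the bytes unchanged and lets the terminal decode them. The sketch below approximates that escaped display; it is a simplified model, not redis-cli's actual code:

```python
# Bytes that get a named escape instead of \xNN (simplified model).
_NAMED_ESCAPES = {
    ord("\n"): "\\n", ord("\r"): "\\r", ord("\t"): "\\t",
    ord("\a"): "\\a", ord("\b"): "\\b",
    ord("\\"): "\\\\", ord('"'): '\\"',
}


def redis_cli_repr(value: bytes) -> str:
    """Approximate how redis-cli shows a string reply without --raw."""
    out = []
    for byte in value:
        if byte in _NAMED_ESCAPES:
            out.append(_NAMED_ESCAPES[byte])
        elif 0x20 <= byte < 0x7F:
            # Printable ASCII is shown as-is.
            out.append(chr(byte))
        else:
            # Every other byte, including each byte of a UTF-8 character, becomes \xNN.
            out.append(f"\\x{byte:02x}")
    return '"' + "".join(out) + '"'


print(redis_cli_repr("中文".encode("utf-8")))  # "\xe4\xb8\xad\xe6\x96\x87"
```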

Additionally, if you want to use your own redis.conf:

You can create your own Dockerfile that adds a redis.conf from the build context into /usr/local/etc/redis/, like so.

FROM redis

COPY redis.conf /usr/local/etc/redis/redis.conf

CMD [ "redis-server", "/usr/local/etc/redis/redis.conf" ]

Alternatively, you can specify something along the same lines with docker run options.

$ docker run -v /myredis/conf:/usr/local/etc/redis --name myredis redis redis-server /usr/local/etc/redis/redis.conf

Where /myredis/conf/ is a local directory containing your redis.conf file. Using this method means that there is no need for you to have a Dockerfile for your redis container.

The mapped directory should be writable, as depending on the configuration and mode of operation, Redis may need to create additional configuration files or rewrite existing ones.

Zookeeper

- Start a Zookeeper server instance

$ docker run --name some-zookeeper --restart always -d zookeeper

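A better way, publishing the client port and mounting the configuration, data and transaction log directories from the host: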

docker run --name zookeeper -p 2181:2181 -d -v ~/Data\&Log/zookeeper/conf:/conf -v ~/Data\&Log/zookeeper/data:/data -v ~/Data\&Log/zookeeper/datalog:/datalog zookeeper:3.5

This image includes EXPOSE 2181 2888 3888 8080 (the ZooKeeper client port, follower port, election port, and AdminServer port, respectively), so standard container linking will make them automatically available to the linked containers. Since ZooKeeper "fails fast", it's better to always restart it.

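On the host, create the directories to be mounted and the ZooKeeper configuration file (zoo.cfg, placed in the directory mounted at /conf):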

clientPort=2181

dataDir=/data

dataLogDir=/datalog

tickTime=2000

initLimit=5

syncLimit=2

autopurge.snapRetainCount=3

autopurge.purgeInterval=0

maxClientCnxns=60

server.0=192.168.90.128:2888:3888

server.1=192.168.90.129:2888:3888

server.2=192.168.90.130:2888:3888

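Set the server id on each machine: the myid file in the data directory must contain the N of that machine's server.N line (0 on 192.168.90.128 in this example):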

cd /data/zookeeper_data/data

touch myid

echo 0 > myid
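With several nodes, zoo.cfg is identical on every machine and only myid differs. The sketch below generates both for the three hosts above; the helper names are hypothetical, and dataDir=/data with dataLogDir=/datalog is assumed to match the volumes mounted by the docker run command earlier:

```python
from typing import Dict, List


def render_zoo_cfg(servers: List[str], data_dir: str = "/data",
                   data_log_dir: str = "/datalog") -> str:
    """Render zoo.cfg with one server.N line per ensemble member (N = list index)."""
    lines = [
        "clientPort=2181",
        f"dataDir={data_dir}",
        f"dataLogDir={data_log_dir}",
        "tickTime=2000",
        "initLimit=5",
        "syncLimit=2",
        "autopurge.snapRetainCount=3",
        "autopurge.purgeInterval=0",
        "maxClientCnxns=60",
    ]
    # 2888 = follower port, 3888 = leader election port.
    lines += [f"server.{i}={host}:2888:3888" for i, host in enumerate(servers)]
    return "\n".join(lines) + "\n"


def myid_files(servers: List[str]) -> Dict[str, str]:
    """Map each host to the content of its dataDir/myid file."""
    # myid must equal the N of that host's server.N entry.
    return {host: f"{i}\n" for i, host in enumerate(servers)}


hosts = ["192.168.90.128", "192.168.90.129", "192.168.90.130"]
print(render_zoo_cfg(hosts))
print(myid_files(hosts))
```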

- Connect to Zookeeper from an application in another Docker container

$ docker run --name some-app --link some-zookeeper:zookeeper -d application-that-uses-zookeeper

- Connect to Zookeeper from the Zookeeper command line client

$ docker run -it --rm --link some-zookeeper:zookeeper zookeeper zkCli.sh -server zookeeper

- Configuration

Zookeeper configuration is located in /conf. One way to change it is mounting your config file as a volume:

$ docker run --name some-zookeeper --restart always -d -v $(pwd)/zoo.cfg:/conf/zoo.cfg zookeeper

Environment variables

ZooKeeper recommended defaults are used if zoo.cfg file is not provided. They can be overridden using the following environment variables.

$ docker run -e "ZOO_INIT_LIMIT=10" --name some-zookeeper --restart always -d zookeeper

ZOO_TICK_TIME

Defaults to 2000. ZooKeeper's tickTime

The length of a single tick, which is the basic time unit used by ZooKeeper, as measured in milliseconds. It is used to regulate heartbeats and timeouts. For example, the minimum session timeout will be two ticks.

ZOO_INIT_LIMIT

Defaults to 5. ZooKeeper's initLimit

Amount of time, in ticks (see tickTime), to allow followers to connect and sync to a leader. Increase this value as needed if the amount of data managed by ZooKeeper is large.

ZOO_SYNC_LIMIT

Defaults to 2. ZooKeeper's syncLimit

Amount of time, in ticks (see tickTime), to allow followers to sync with ZooKeeper. If followers fall too far behind a leader, they will be dropped.
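Because these limits are counted in ticks, their wall-clock values scale with tickTime. A worked example in Python for the defaults; the 2 to 20 tick session timeout range is ZooKeeper's default for minSessionTimeout/maxSessionTimeout when they are not set explicitly:

```python
def zk_timeouts(tick_time_ms: int = 2000, init_limit: int = 5, sync_limit: int = 2) -> dict:
    """Convert ZooKeeper's tick-based settings into milliseconds."""
    return {
        # Default session timeout bounds when min/maxSessionTimeout are unset: 2 and 20 ticks.
        "min_session_timeout_ms": 2 * tick_time_ms,
        "max_session_timeout_ms": 20 * tick_time_ms,
        # initLimit: time for followers to connect and sync to the leader.
        "init_limit_ms": init_limit * tick_time_ms,
        # syncLimit: how long a follower may lag before it is dropped.
        "sync_limit_ms": sync_limit * tick_time_ms,
    }


print(zk_timeouts())
# {'min_session_timeout_ms': 4000, 'max_session_timeout_ms': 40000, 'init_limit_ms': 10000, 'sync_limit_ms': 4000}
```

With ZOO_INIT_LIMIT=10 as in the docker run example above, `zk_timeouts(init_limit=10)["init_limit_ms"]` is 20000, i.e. followers get 20 seconds to connect and sync.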

ZOO_MAX_CLIENT_CNXNS

Defaults to 60. ZooKeeper's maxClientCnxns

Limits the number of concurrent connections (at the socket level) that a single client, identified by IP address, may make to a single member of the ZooKeeper ensemble.

ZOO_STANDALONE_ENABLED

Defaults to true. Zookeeper's standaloneEnabled

Prior to 3.5.0, one could run ZooKeeper in Standalone mode or in a Distributed mode. These are separate implementation stacks, and switching between them during run time is not possible. By default (for backward compatibility) standaloneEnabled is set to true. The consequence of using this default is that if started with a single server the ensemble will not be allowed to grow, and if started with more than one server it will not be allowed to shrink to contain fewer than two participants.

ZOO_ADMINSERVER_ENABLED

Defaults to true. Zookeeper's admin.enableServer

The AdminServer is an embedded Jetty server that provides an HTTP interface to the four letter word commands. By default, the server is started on port 8080, and commands are issued by going to the URL "/commands/[command name]", e.g., http://localhost:8080/commands/stat.

ZOO_AUTOPURGE_PURGEINTERVAL

Defaults to 0. ZooKeeper's autopurge.purgeInterval

The time interval in hours for which the purge task has to be triggered. Set to a positive integer (1 and above) to enable the auto purging. Defaults to 0.

ZOO_AUTOPURGE_SNAPRETAINCOUNT

Defaults to 3. ZooKeeper's autopurge.snapRetainCount

When enabled, the ZooKeeper auto purge feature retains the autopurge.snapRetainCount most recent snapshots and the corresponding transaction logs in the dataDir and dataLogDir respectively, and deletes the rest. Defaults to 3. Minimum value is 3.

ZOO_4LW_COMMANDS_WHITELIST

Defaults to srvr. ZooKeeper's 4lw.commands.whitelist

A comma-separated list of four letter word commands that the user wants to use. A valid four letter word command must be in this list, otherwise the ZooKeeper server will not enable it. By default the whitelist contains only the "srvr" command, which zkServer.sh uses; the rest of the four letter word commands are disabled by default.
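A four letter word command is just four ASCII bytes sent over the client port; the server writes its reply and closes the connection. A minimal Python client, assuming port 2181 is published as in the earlier run command. Commands other than srvr only work once they are whitelisted, e.g. with -e ZOO_4LW_COMMANDS_WHITELIST=srvr,ruok:

```python
import socket


def four_letter_word(cmd: str = "srvr", host: str = "127.0.0.1", port: int = 2181,
                     timeout: float = 3.0) -> str:
    """Send a four letter word command and return the server's full reply."""
    if len(cmd) != 4:
        raise ValueError("four letter word commands are exactly 4 characters")
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd.encode("ascii"))
        chunks = []
        # The server closes the connection after replying, so read until EOF.
        while True:
            chunk = sock.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

`print(four_letter_word())` should show the server version, latency statistics and the mode (standalone, leader or follower).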

Where to store data

This image is configured with volumes at /data and /datalog to hold the Zookeeper in-memory database snapshots and the transaction log of updates to the database, respectively.

Be careful where you put the transaction log. A dedicated transaction log device is key to consistent good performance. Putting the log on a busy device will adversely affect performance.

How to configure logging

By default, ZooKeeper redirects stdout/stderr outputs to the console. You can redirect to a file located in /logs by passing environment variable ZOO_LOG4J_PROP as follows:

$ docker run --name some-zookeeper --restart always -e ZOO_LOG4J_PROP="INFO,ROLLINGFILE" zookeeper

This will write logs to /logs/zookeeper.log. Check ZooKeeper Logging for more details.

This image is configured with a volume at /logs for your convenience.

consul

Running Consul for Development

Enable DNS lookups over UDP (publish port 8600/udp):

docker run --name consul -d -p 8500:8500 -p 8600:8600/udp consul

$ docker run -d --name=dev-consul -e CONSUL_BIND_INTERFACE=eth0 consul

This runs a completely in-memory Consul server agent with default bridge networking and no services exposed on the host, which is useful for development but should not be used in production. For example, if that server is running at internal address 172.17.0.2, you can run a three node cluster for development by starting up two more instances and telling them to join the first node.

$ docker run -d -e CONSUL_BIND_INTERFACE=eth0 consul agent -dev -join=172.17.0.2

... server 2 starts

$ docker run -d -e CONSUL_BIND_INTERFACE=eth0 consul agent -dev -join=172.17.0.2

... server 3 starts

Then we can query for all the members in the cluster by running a Consul CLI command in the first container:

$ docker exec -t dev-consul consul members

Node Address Status Type Build Protocol DC

579db72c1ae1 172.17.0.3:8301 alive server 0.6.3 2 dc1

93fe2309ef19 172.17.0.4:8301 alive server 0.6.3 2 dc1

c9caabfd4c2a 172.17.0.2:8301 alive server 0.6.3 2 dc1
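For scripting, the table printed by consul members can be turned into records. A minimal sketch that assumes every column has a value, as in the sample output above (other Consul versions may print extra columns); Consul's HTTP endpoint /v1/agent/members returns the same information as JSON and is the sturdier choice:

```python
from typing import Dict, List


def parse_members(output: str) -> List[Dict[str, str]]:
    """Parse the whitespace-aligned table printed by `consul members`."""
    lines = [line for line in output.splitlines() if line.strip()]
    header = lines[0].split()
    # Each row is assumed to have exactly one value per header column.
    return [dict(zip(header, line.split())) for line in lines[1:]]


def alive_servers(members: List[Dict[str, str]]) -> List[str]:
    """Names of nodes that are alive and run in server mode."""
    return [m["Node"] for m in members if m["Status"] == "alive" and m["Type"] == "server"]


sample = """Node Address Status Type Build Protocol DC
579db72c1ae1 172.17.0.3:8301 alive server 0.6.3 2 dc1
93fe2309ef19 172.17.0.4:8301 alive server 0.6.3 2 dc1
c9caabfd4c2a 172.17.0.2:8301 alive server 0.6.3 2 dc1
"""
print(alive_servers(parse_members(sample)))
```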

Remember that Consul doesn't use the data volume in this mode - once the container stops all of your state will be wiped out, so please don't use this mode for production. Running completely on the bridge network with the development server is useful for testing multiple instances of Consul on a single machine, which is normally difficult to do because of port conflicts.

Development mode also starts a version of Consul's web UI on port 8500. This can be added to the other Consul configurations by supplying the -ui option to Consul on the command line. The web assets are bundled inside the Consul binary in the container.

Running Consul Agent in Client Mode

$ docker run -d --net=host -e 'CONSUL_LOCAL_CONFIG={"leave_on_terminate": true}' consul agent -bind=<external ip> -retry-join=<root agent ip>

==> Starting Consul agent...

==> Starting Consul agent RPC...

==> Consul agent running!

Node name: 'linode'

Datacenter: 'dc1'

Server: false (bootstrap: false)

Client Addr: 127.0.0.1 (HTTP: 8500, HTTPS: -1, DNS: 8600, RPC: 8400)

Cluster Addr: <external ip> (LAN: 8301, WAN: 8302)

Gossip encrypt: false, RPC-TLS: false, TLS-Incoming: false

Atlas: <disabled>

...

This runs a Consul client agent sharing the host's network and advertising the external IP address to the rest of the cluster. Note that the agent defaults to binding its client interfaces to 127.0.0.1, which is the host's loopback interface. This would be a good configuration to use if other containers on the host also use --net=host, and it also exposes the agent to processes running directly on the host outside a container, such as HashiCorp's Nomad.

The -retry-join parameter specifies the external IP of one other agent in the cluster to use to join at startup. There are several ways to control how an agent joins the cluster, see the agent configuration guide for more details on the -join, -retry-join, and -atlas-join options.

Note also that we've set leave_on_terminate using the CONSUL_LOCAL_CONFIG environment variable. This is recommended for clients and defaults to true in Consul 0.7 and later, so it is no longer necessary there.

At startup, the agent will read config JSON files from /consul/config. Data will be persisted in the /consul/data volume.

Running Consul Agent in Server Mode

$ docker run -d --net=host -e 'CONSUL_LOCAL_CONFIG={"skip_leave_on_interrupt": true}' consul agent -server -bind=<external ip> -retry-join=<root agent ip> -bootstrap-expect=<number of server agents>

This runs a Consul server agent sharing the host's network. All of the network considerations and behavior we covered above for the client agent also apply to the server agent. A single server on its own won't be able to form a quorum and will be waiting for other servers to join.

Just like the client agent, the -retry-join parameter specifies the external IP of one other agent in the cluster to use to join at startup. There are several ways to control how an agent joins the cluster, see the agent configuration guide for more details on the -join, -retry-join, and -atlas-join options. The server agent also consumes a -bootstrap-expect option that specifies how many server agents to watch for before bootstrapping the cluster for the first time. This provides an easy way to get an orderly startup with a new cluster. See the agent configuration guide for more details on the -bootstrap and -bootstrap-expect options.

Note also that we've set skip_leave_on_interrupt using the CONSUL_LOCAL_CONFIG environment variable. This is recommended for servers and defaults to true in Consul 0.7 and later, so it is no longer necessary there.

At startup, the agent will read config JSON files from /consul/config. Data will be persisted in the /consul/data volume.

Once the cluster is bootstrapped and quorum is achieved, you must use care to keep the minimum number of servers operating in order to avoid an outage state for the cluster. The deployment table in the consensus guide outlines the number of servers required for different configurations. There's also an adding/removing servers guide that describes that process, which is relevant to Docker configurations as well. The outage recovery guide has steps to perform if servers are permanently lost. In general it's best to restart or replace servers one at a time, making sure servers are healthy before proceeding to the next server.

Exposing Consul's DNS Server on Port 53

By default, Consul's DNS server is exposed on port 8600. Because this is cumbersome to configure with facilities like resolv.conf, you may want to expose DNS on port 53. Consul 0.7 and later supports this by setting an environment variable that runs setcap on the Consul binary, allowing it to bind to privileged ports. Note that not all Docker storage backends support this feature (notably AUFS).

Here's an example:

$ docker run -d --net=host -e 'CONSUL_ALLOW_PRIVILEGED_PORTS=' consul -dns-port=53 -recursor=8.8.8.8

This example also includes a recursor configuration that uses Google's DNS servers for non-Consul lookups. You may want to adjust this based on your particular DNS configuration. If you are binding Consul's client interfaces to the host's loopback address, then you should be able to configure your host's resolv.conf to route DNS requests to Consul by including "127.0.0.1" as the primary DNS server. This would expose Consul's DNS to all applications running on the host, but due to Docker's built-in DNS server, you can't point to this directly from inside your containers; Docker will issue an error message if you attempt to do this. You must configure Consul to listen on a non-localhost address that is reachable from within other containers.

Once you bind Consul's client interfaces to the bridge or other network, you can use the --dns option in your other containers in order for them to use Consul's DNS server, mapped to port 53. Here's an example:

$ docker run -d --net=host -e 'CONSUL_ALLOW_PRIVILEGED_PORTS=' consul agent -dns-port=53 -recursor=8.8.8.8 -bind=<bridge ip>

Now start another container and point it at Consul's DNS, using the bridge address of the host:

$ docker run -i --dns=<bridge ip> -t ubuntu sh -c "apt-get update && apt-get install -y dnsutils && dig consul.service.consul"

...

;; ANSWER SECTION:

consul.service.consul. 0 IN A 66.175.220.234

...

In the example above, adding the bridge address to the host's /etc/resolv.conf file should expose it to all containers without running with the --dns option.
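The same lookup can be made without dig. The sketch below builds a standard DNS A query for consul.service.consul and sends it over UDP to port 8600, the port published with -p 8600:8600/udp earlier; it only reads the answer count rather than fully decoding the reply. It is roughly what dig @127.0.0.1 -p 8600 consul.service.consul does:

```python
import socket
import struct


def build_query(name: str, qid: int = 0x1234, qtype: int = 1) -> bytes:
    """Build a DNS query packet; qtype 1 asks for A records, class IN."""
    # Header: ID, flags 0x0100 (recursion desired), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0.
    header = struct.pack("!HHHHHH", qid, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed by its length; a zero byte ends the name.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii") for label in name.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE, then QCLASS 1 (IN).
    return header + qname + struct.pack("!HH", qtype, 1)


def answer_count(packet: bytes) -> int:
    """Read ANCOUNT from bytes 6-7 of the DNS header."""
    return struct.unpack("!H", packet[6:8])[0]


def query(name: str, server: str = "127.0.0.1", port: int = 8600, timeout: float = 3.0) -> bytes:
    """Send the query over UDP and return the raw response packet."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_query(name), (server, port))
        response, _ = sock.recvfrom(4096)
    return response
```

With the agent running, `answer_count(query("consul.service.consul")) > 0` indicates that Consul returned at least one address for its own service.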

Service Discovery with Containers

There are several approaches you can use to register services running in containers with Consul. For manual configuration, your containers can use the local agent's APIs to register and deregister themselves, see the Agent API for more details. Another strategy is to create a derived Consul container for each host type which includes JSON config files for Consul to parse at startup, see Services for more information. Both of these approaches are fairly cumbersome, and the configured services may fall out of sync if containers die or additional containers are started.
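For the manual approach, registration is a single HTTP call to the local agent. A minimal sketch using the agent endpoints PUT /v1/agent/service/register and PUT /v1/agent/service/deregister/<id>; the service name web, port 8080 and the agent address http://127.0.0.1:8500 are illustrative assumptions:

```python
import json
import urllib.request


def register_service_request(name: str, port: int, agent: str = "http://127.0.0.1:8500",
                             address: str = "") -> urllib.request.Request:
    """Build a PUT /v1/agent/service/register request for the local Consul agent."""
    payload = {"Name": name, "Port": port}
    if address:
        payload["Address"] = address
    return urllib.request.Request(
        f"{agent}/v1/agent/service/register",
        data=json.dumps(payload).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


def deregister_service_request(service_id: str,
                               agent: str = "http://127.0.0.1:8500") -> urllib.request.Request:
    """Build a PUT /v1/agent/service/deregister/<id> request."""
    return urllib.request.Request(f"{agent}/v1/agent/service/deregister/{service_id}", method="PUT")
```

`urllib.request.urlopen(register_service_request("web", 8080))` sends the registration. When no ID is given, Consul uses the service name as the ID, so `deregister_service_request("web")` removes it again, for example when the container stops.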

If you run your containers under HashiCorp's Nomad scheduler, it has first class support for Consul. The Nomad agent runs on each host alongside the Consul agent. When jobs are scheduled on a given host, the Nomad agent automatically takes care of syncing the Consul agent with the service information. This is very easy to manage, and even services on hosts running outside of Docker containers can be managed by Nomad and registered with Consul. You can find out more about running Docker under Nomad in the Docker Driver guide.

Other open source options include Registrator from Glider Labs and ContainerPilot from Joyent. Registrator works by running a Registrator instance on each host, alongside the Consul agent. Registrator monitors the Docker daemon for container stop and start events, and handles service registration with Consul using the container names and exposed ports as the service information. ContainerPilot manages service registration using tooling running inside the container to register services with Consul on start, manage a Consul TTL health check while running, and deregister services when the container stops.

Running Health Checks in Docker Containers

Consul has the ability to execute health checks inside containers. If the Docker daemon is exposed to the Consul agent and the DOCKER_HOST environment variable is set, then checks can be configured with the Docker container ID to execute in. See the health checks guide for more details.

nacos

Quick Start

docker run --name nacos-quick -e MODE=standalone -p 8849:8848 -d nacos/nacos-server:2.0.2

docker run --name nacos -d -p 8848:8848 -e MODE=standalone nacos/nacos-server

The default username and password are both nacos.

Advanced Usage

Tip: you can change the version of the Nacos image used by the compose files in the following file:

example/.env

NACOS_VERSION=2.0.2

Run the following command:

Clone project

git clone --depth 1 https://github.com/nacos-group/nacos-docker.git

cd nacos-docker

Standalone Derby

docker-compose -f example/standalone-derby.yaml up

Standalone Mysql

# Using mysql 5.7

docker-compose -f example/standalone-mysql-5.7.yaml up

# Using mysql 8

docker-compose -f example/standalone-mysql-8.yaml up

Cluster

docker-compose -f example/cluster-hostname.yaml up

Service registration

curl -X PUT 'http://127.0.0.1:8848/nacos/v1/ns/instance?serviceName=nacos.naming.serviceName&ip=20.18.7.10&port=8080'

Service discovery

curl -X GET 'http://127.0.0.1:8848/nacos/v1/ns/instances?serviceName=nacos.naming.serviceName'

Publish config

curl -X POST "http://127.0.0.1:8848/nacos/v1/cs/configs?dataId=nacos.cfg.dataId&group=test&content=helloWorld"

Get config

curl -X GET "http://127.0.0.1:8848/nacos/v1/cs/configs?dataId=nacos.cfg.dataId&group=test"
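The same four calls can be issued from Python's standard library, for example in a health-check script. A minimal sketch that mirrors the curl commands above, keeping their HTTP methods and query-string parameters; the base URL assumes host port 8848 is published, as in the second quick-start command:

```python
import urllib.request
from urllib.parse import urlencode

# Assumption: host port 8848 is published, as in the second quick-start command.
NACOS_BASE = "http://127.0.0.1:8848/nacos"


def nacos_request(method: str, path: str, **params: str) -> urllib.request.Request:
    """Build a Nacos v1 Open API request with the parameters in the query string."""
    return urllib.request.Request(f"{NACOS_BASE}{path}?{urlencode(params)}", method=method)


def send(req: urllib.request.Request, timeout: float = 5.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# The same four calls as the curl commands above.
register = nacos_request("PUT", "/v1/ns/instance",
                         serviceName="nacos.naming.serviceName", ip="20.18.7.10", port="8080")
discover = nacos_request("GET", "/v1/ns/instances", serviceName="nacos.naming.serviceName")
publish = nacos_request("POST", "/v1/cs/configs",
                        dataId="nacos.cfg.dataId", group="test", content="helloWorld")
get_config = nacos_request("GET", "/v1/cs/configs", dataId="nacos.cfg.dataId", group="test")
```

With the server running, `send(get_config)` should return helloWorld once `send(publish)` has succeeded.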

Open the Nacos console in your browser: http://127.0.0.1:8848/nacos/

Common property configuration

| name | description | option |
|---|---|---|
| MODE | cluster/standalone | cluster/standalone, default cluster |
| NACOS_SERVERS | nacos cluster address | e.g. ip1:port1 ip2:port2 ip3:port3 |
| PREFER_HOST_MODE | whether hostnames are supported | hostname/ip, default ip |
| NACOS_APPLICATION_PORT | nacos server port | default 8848 |
| NACOS_SERVER_IP | custom nacos server IP when the host has multiple networks | |
| SPRING_DATASOURCE_PLATFORM | standalone support for mysql | mysql / empty, default empty |
| MYSQL_SERVICE_HOST | mysql host | |
| MYSQL_SERVICE_PORT | mysql database port | default 3306 |
| MYSQL_SERVICE_DB_NAME | mysql database name | |
| MYSQL_SERVICE_USER | username of database | |
| MYSQL_SERVICE_PASSWORD | password of database | |
| MYSQL_DATABASE_NUM | number of databases | default 1 |
| MYSQL_SERVICE_DB_PARAM | database URL parameters | default characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useSSL=false |
| JVM_XMS | -Xms | default 1g |
| JVM_XMX | -Xmx | default 1g |
| JVM_XMN | -Xmn | default 512m |
| JVM_MS | -XX:MetaspaceSize | default 128m |
| JVM_MMS | -XX:MaxMetaspaceSize | default 320m |
| NACOS_DEBUG | enable remote debug | y/n, default n |
| TOMCAT_ACCESSLOG_ENABLED | server.tomcat.accesslog.enabled | default false |
| NACOS_AUTH_SYSTEM_TYPE | the auth system to use; currently only 'nacos' is supported | default nacos |
| NACOS_AUTH_ENABLE | whether to turn on the auth system | default false |
| NACOS_AUTH_TOKEN_EXPIRE_SECONDS | token expiration in seconds | default 18000 |
| NACOS_AUTH_TOKEN | the default token | default SecretKey012345678901234567890123456789012345678901234567890123456789 |
| NACOS_AUTH_CACHE_ENABLE | turn caching of auth information on/off; when on, updates to auth information take effect with a 15-second delay | default false |
| MEMBER_LIST | set the cluster list with a configuration file or command-line argument | e.g. 192.168.16.101:8847?raft_port=8807,192.168.16.101?raft_port=8808,192.168.16.101:8849?raft_port=8809 |
| EMBEDDED_STORAGE | use embedded storage in cluster mode without mysql | embedded, default none |
| NACOS_AUTH_CACHE_ENABLE | nacos.core.auth.caching.enabled | default false |
| NACOS_AUTH_USER_AGENT_AUTH_WHITE_ENABLE | nacos.core.auth.enable.userAgentAuthWhite | default false |
| NACOS_AUTH_IDENTITY_KEY | nacos.core.auth.server.identity.key | default serverIdentity |
| NACOS_AUTH_IDENTITY_VALUE | nacos.core.auth.server.identity.value | default security |
| NACOS_SECURITY_IGNORE_URLS | nacos.security.ignore.urls | default /,/error,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-fe/public/**,/v1/auth/**,/v1/console/health/**,/actuator/**,/v1/console/server/** |

Advanced configuration

If the property list above does not meet your requirements, you can mount a custom.properties file into the /home/nacos/init.d/ directory of the container. Spring properties can be configured there, and they take priority over the application.properties file.

Reference example: cluster-hostname.yaml

Nacos + Grafana + Prometheus

Usage reference: Nacos monitor-guide

Note: When Grafana creates a new data source, the data source address must be http://prometheus:9090

RabbitMQ

Running the daemon

For RabbitMQ versions below 3.9 you can use:

docker run --name rabbitmq -d -p 5672:5672 -p 15672:15672 -e RABBITMQ_DEFAULT_USER=spring -e RABBITMQ_DEFAULT_PASS=spring rabbitmq:3.7-management

One of the important things to note about RabbitMQ is that it stores data based on what it calls the "Node Name", which defaults to the hostname. What this means for usage in Docker is that we should specify -h/--hostname explicitly for each daemon so that we don't get a random hostname and can keep track of our data:

$ docker run -d --hostname my-rabbit --name rabbitmq -p 5672:5672 -p 15672:15672 rabbitmq:3

This will start a RabbitMQ container listening on the default port of 5672. If you give that a minute, then do docker logs rabbitmq, you'll see in the output a block similar to:

=INFO REPORT==== 6-Jul-2015::20:47:02 ===

node : rabbit@my-rabbit

home dir : /var/lib/rabbitmq

config file(s) : /etc/rabbitmq/rabbitmq.config

cookie hash : UoNOcDhfxW9uoZ92wh6BjA==

log : tty

sasl log : tty

database dir : /var/lib/rabbitmq/mnesia/rabbit@my-rabbit

Note the database dir there, especially that it has my "Node Name" appended to the end for the file storage. This image makes all of /var/lib/rabbitmq a volume by default.

Environment Variables

For a list of environment variables supported by RabbitMQ itself, see the Environment Variables section of rabbitmq.com/configure

WARNING: As of RabbitMQ 3.9, all of the docker-specific variables listed below are deprecated and no longer used. Please use a configuration file instead; visit rabbitmq.com/configure to learn more about the configuration file. As a starting point, the 3.8 images print out the config file they generated from the supplied environment variables.

# Unavailable in 3.9 and up

RABBITMQ_DEFAULT_PASS

RABBITMQ_DEFAULT_PASS_FILE

RABBITMQ_DEFAULT_USER

RABBITMQ_DEFAULT_USER_FILE

RABBITMQ_DEFAULT_VHOST

RABBITMQ_ERLANG_COOKIE

RABBITMQ_MANAGEMENT_SSL_CACERTFILE

RABBITMQ_MANAGEMENT_SSL_CERTFILE

RABBITMQ_MANAGEMENT_SSL_DEPTH

RABBITMQ_MANAGEMENT_SSL_FAIL_IF_NO_PEER_CERT

RABBITMQ_MANAGEMENT_SSL_KEYFILE

RABBITMQ_MANAGEMENT_SSL_VERIFY

RABBITMQ_SSL_CACERTFILE

RABBITMQ_SSL_CERTFILE

RABBITMQ_SSL_DEPTH

RABBITMQ_SSL_FAIL_IF_NO_PEER_CERT

RABBITMQ_SSL_KEYFILE

RABBITMQ_SSL_VERIFY

RABBITMQ_VM_MEMORY_HIGH_WATERMARK

With the newer images, use a configuration file instead to set these parameters:

docker-compose-rabbitmq.yml

# Environment variable reference: https://www.rabbitmq.com/configure.html
# https://github.com/rabbitmq/rabbitmq-server/blob/master/deps/rabbit/docs/rabbitmq.conf.example
version: '3'
services:
  rabbitmq:
    image: registry.cn-hangzhou.aliyuncs.com/zhengqing/rabbitmq:3.9.1-management # image `rabbitmq:3.9.1-management` (note: this version includes the web management UI)
    container_name: rabbitmq # container name is 'rabbitmq'
    hostname: my-rabbit
    restart: always # restart policy: always restart the container after it exits
    environment: # environment variables, equivalent to -e in the docker run command
      TZ: Asia/Shanghai
      LANG: en_US.UTF-8
    volumes: # volume mounts: map host directories to container directories
      - "./rabbitmq/config/rabbitmq.conf:/etc/rabbitmq/rabbitmq.conf"
      - "./rabbitmq/config/10-default-guest-user.conf:/etc/rabbitmq/conf.d/10-default-guest-user.conf"
      - "./rabbitmq/data:/var/lib/rabbitmq"
      # - "./rabbitmq/log:/var/log/rabbitmq"
    ports: # port mappings
      - "5672:5672"
      - "15672:15672"

rabbitmq.conf

loopback_users.guest = false

listeners.tcp.default = 5672

default_vhost = my_vhost

default_user = admin

default_pass = admin

default_user_tags.administrator = true

default_permissions.configure = .*

default_permissions.read = .*

default_permissions.write = .*

hipe_compile = false

management.listener.port = 15672

management.listener.ssl = false
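rabbitmq.conf uses a sysctl-like key = value format. Before mounting the file, a quick local check can catch lines that are not key = value pairs. A minimal Python sketch; it does not validate key names against RabbitMQ's configuration schema:

```python
from typing import Dict


def parse_rabbitmq_conf(text: str) -> Dict[str, str]:
    """Parse the sysctl-style `key = value` format of rabbitmq.conf."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blank lines and comment lines (starting with '#', e.g. '## ...').
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key = value line: {raw!r}")
        settings[key.strip()] = value.strip()
    return settings
```

For example, `parse_rabbitmq_conf(open("rabbitmq/config/rabbitmq.conf").read())["default_vhost"]` should return my_vhost for the file above.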

10-default-guest-user.conf

## DEFAULT SETTINGS ARE NOT MEANT TO BE TAKEN STRAIGHT INTO PRODUCTION

## see https://www.rabbitmq.com/configure.html for further information

## on configuring RabbitMQ

## allow access to the guest user from anywhere on the network

## https://www.rabbitmq.com/access-control.html#loopback-users

## https://www.rabbitmq.com/production-checklist.html#users

## loopback_users.guest = false

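Opening ports and adding users

RabbitMQ is now installed in Docker, but if you open the management page in a browser right away, you may run into two problems:

1. The address cannot be reached

This is because the demo installs Docker on a cloud server, so port 15672 has to be opened in the security group in the cloud provider's console. With Docker installed locally, this problem does not occur.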

Then enter the rabbitmq container and enable the management plugin:

rabbitmq-plugins enable rabbitmq_management

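2. As shown in the image at the beginning of the article, we have no Username and Password

This is because a user has to be added in RabbitMQ:

rabbitmqctl add_user mhlevel mhlevel # add a user; the two arguments are the username and the password

rabbitmqctl set_permissions -p / mhlevel ".*" ".*" ".*" # grant configure, write and read permissions on vhost /

rabbitmqctl set_user_tags mhlevel administrator # set the user's role (tag)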

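User tags (roles):

- administrator: can log in to the management UI, view all information, and manage RabbitMQ

- monitoring (monitor): can log in to the management UI and view all information

- policymaker (policy maker): can log in to the management UI and set policies

- management (ordinary manager): can log in to the management UI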
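The commands above are run inside the container. A minimal sketch of doing the same from the host with `docker exec` follows; the helper names `enable_management_plugin` and `create_rabbit_user` are hypothetical, and the container name `rabbitmq` comes from the compose file. Two caveats: the `-management` image tags already ship with the management plugin enabled, in which case enabling it again changes nothing; and because the rabbitmq.conf above sets `default_vhost = my_vhost`, a fresh node may have no `/` vhost, so pass `my_vhost` as the vhost argument in that setup.

```shell
# Hypothetical helpers: run the rabbitmq-plugins / rabbitmqctl commands above
# from the host via `docker exec`, without opening a shell in the container.

# Enable the management UI plugin. $1: container name (default "rabbitmq").
enable_management_plugin() {
  local container="${1:-rabbitmq}"
  docker exec "$container" rabbitmq-plugins enable rabbitmq_management
}

# Create a user, grant it full permissions on one vhost, and set its tag.
# $1 container, $2 username, $3 password, $4 vhost (default "/"),
# $5 tag: administrator | monitoring | policymaker | management (default administrator).
create_rabbit_user() {
  local container="$1" user="$2" pass="$3" vhost="${4:-/}" tag="${5:-administrator}"
  docker exec "$container" rabbitmqctl add_user "$user" "$pass" || return
  # Permission patterns are, in order: configure, write, read.
  docker exec "$container" rabbitmqctl set_permissions -p "$vhost" "$user" ".*" ".*" ".*" || return
  docker exec "$container" rabbitmqctl set_user_tags "$user" "$tag"
}
```

For example: `enable_management_plugin rabbitmq`, then `create_rabbit_user rabbitmq mhlevel mhlevel my_vhost administrator`. The helper returns at the first failing `docker exec`, for example when the user already exists or the vhost is missing, instead of carrying on with the remaining steps.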

III. Testing

Now enter ip:15672 in a browser and log in with the username and password you just set.
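The login can also be checked without a browser through the management plugin's HTTP API: a `GET /api/overview` request with HTTP basic authentication returns status 200 for accepted credentials and 401 for rejected ones. A minimal sketch using the hypothetical helper `rabbit_login_ok`; the host and credentials are placeholders.

```shell
# Hypothetical helper: succeed if the management API accepts the credentials.
# $1 host (IP or name), $2 username, $3 password; port 15672 as published above.
rabbit_login_ok() {
  local host="$1" user="$2" pass="$3" code
  # -s: no progress output, -o /dev/null: discard the body,
  # -w: print only the HTTP status code
  code=$(curl -s -o /dev/null -w '%{http_code}' -u "$user:$pass" "http://$host:15672/api/overview")
  [ "$code" = "200" ]
}
```

Usage: `rabbit_login_ok 127.0.0.1 mhlevel mhlevel && echo "login ok"`. On a cloud server, the same security-group rule for port 15672 applies.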

Memory Limits

RabbitMQ contains functionality which explicitly tracks and manages memory usage, and thus needs to be made aware of cgroup-imposed limits (e.g. docker run --memory=..).

The upstream configuration setting for this is vm_memory_high_watermark in rabbitmq.conf, and it is described under "Memory Alarms" in the documentation. If you set a relative limit via vm_memory_high_watermark.relative, RabbitMQ will calculate its limits based on the host's total memory, not the limit set by the container runtime.
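One way to act on this is to give the container a memory limit and set an absolute watermark derived from that limit instead of a relative one. The sketch below only prints the rabbitmq.conf line; the helper name `watermark_line` is hypothetical, and the default 40% ratio is an assumption that mirrors the 0.4 relative default used by RabbitMQ 3.9.

```shell
# Hypothetical helper: print an absolute memory watermark for rabbitmq.conf,
# computed as a percentage of the container's memory limit (integer bytes).
# $1 limit in bytes (the value given to `docker run --memory`), $2 percent (default 40).
watermark_line() {
  local limit_bytes="$1" percent="${2:-40}"
  echo "vm_memory_high_watermark.absolute = $(( limit_bytes * percent / 100 ))"
}
```

For a container started with `docker run --memory=1g ...` (1 GiB = 1073741824 bytes), `watermark_line 1073741824` prints `vm_memory_high_watermark.absolute = 429496729`, which can be appended to rabbitmq.conf in place of a `vm_memory_high_watermark.relative` setting.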

Additional Configuration

If configuration is required, it is recommended to supply an appropriate /etc/rabbitmq/rabbitmq.conf file (see the "Configuration File(s)" section of the RabbitMQ documentation for more details), for example via bind-mount, Docker Configs, or a short Dockerfile with a COPY instruction.

Alternatively, it is possible to use the RABBITMQ_SERVER_ADDITIONAL_ERL_ARGS environment variable, whose syntax is described in section 7.8 ("Configuring an Application") of the Erlang OTP Design Principles User's Guide (the appropriate value for -ApplName is -rabbit). This method requires a slightly different rendering of the equivalent rabbitmq.conf entry. For example, configuring channel_max would look something like -e RABBITMQ_SERVER_ADDITIONAL_ERL_ARGS="-rabbit channel_max 4007", where the space between the variable channel_max and its value 4007 becomes a comma once translated into the application environment (that is, {channel_max, 4007}).
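Passing the variable on the command line could look like the sketch below. The helper `run_with_channel_max`, the container name `rabbitmq-erl-args` and the image tag `rabbitmq:3.9-management` are assumptions; the equivalent rabbitmq.conf line would be `channel_max = 4007`.

```shell
# Hypothetical helper: start a RabbitMQ container whose channel_max is set through
# RABBITMQ_SERVER_ADDITIONAL_ERL_ARGS instead of rabbitmq.conf.
# $1 channel_max value; container name and image tag are assumptions.
run_with_channel_max() {
  local max="$1"
  docker run -d --name rabbitmq-erl-args \
    -e RABBITMQ_SERVER_ADDITIONAL_ERL_ARGS="-rabbit channel_max ${max}" \
    rabbitmq:3.9-management
}
```

Usage: `run_with_channel_max 4007`; afterwards `docker exec rabbitmq-erl-args rabbitmqctl environment` should list channel_max among the rabbit application's settings.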

