CentOS 7 + LVS-DR + keepalived lab (with sorry-server, logging, and HTTP_GET health checks)

一、简介

1、lvs-dr原理请参考原理篇

2、keepalived原理请参考原理篇

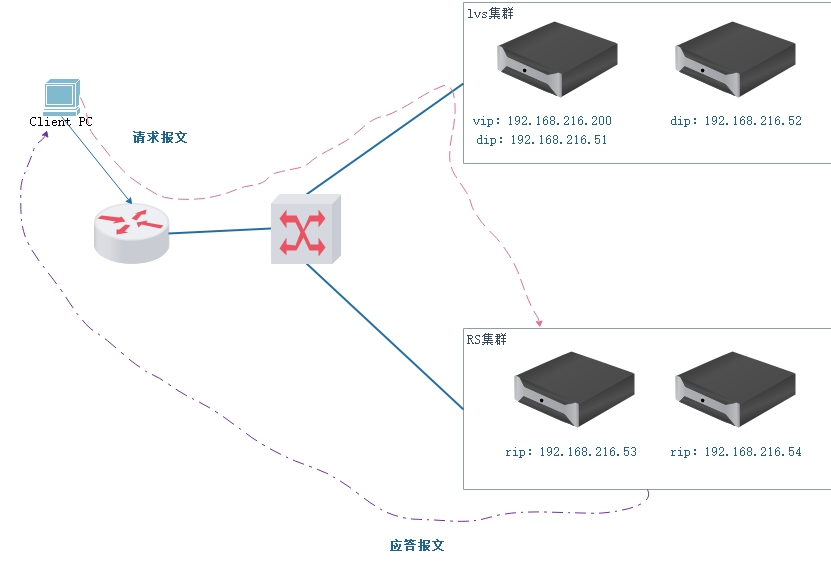

3、基于lvs-dr+keepalived故障切换架构图如下:

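II. Deployment

1. Environment

| Host | Role | IP address |
| --- | --- | --- |
| web1 | lvs + keepalived | 192.168.216.51 |
| web2 | lvs + keepalived | 192.168.216.52 |
| web3 | web | 192.168.216.53 |
| web4 | web | 192.168.216.54 |
| client | physical machine | |

Note: make sure the firewall and SELinux are disabled on every machine and that the clocks are synchronized. The VIP used throughout is 192.168.216.200.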

2. Prepare the web service on the RSs (httpd is installed here)

web3/web4

yum install httpd -y

web3

echo "welcome to web3" >/var/www/html/index.html

systemctl start httpd

systemctl enable httpd

web4

echo "welcome to web4" >/var/www/html/index.html

systemctl start httpd

systemctl enable httpd

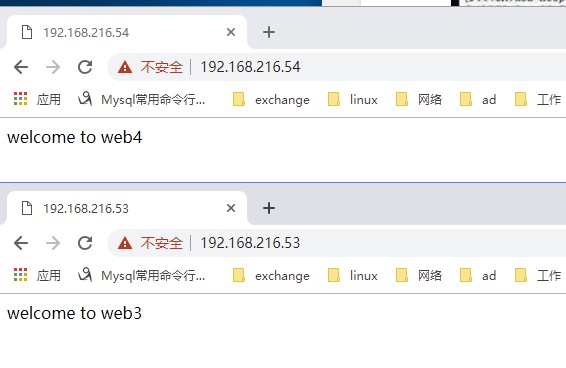

Access the sites from each RS, and also from a browser on the client machine.

[root@web3 ~]# curl 192.168.216.54

welcome to web4

[root@web3 ~]#

[root@web4 ~]# curl 192.168.216.54

welcome to web4

[root@web4 ~]#

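3. ARP suppression on the RSs: what the two settings mean

Setting arp_ignore to 1 changes the ARP reply level: the RS answers an ARP request only when the requested IP address is configured on the interface the request arrived on. Because the VIP sits on lo, the RS never answers ARP requests for the VIP that arrive on ens33.

Setting arp_announce to 2 changes the ARP announce level: the RS always uses the best local address of the outgoing interface as the source of its own ARP requests, so it never advertises the VIP (which belongs to lo) on the LAN.

Script: run it on both web3 and web4.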

[root@web3 ~]# cd /arp

[root@web3 arp]# ll

total

-rwxr-xr-x. root root Apr : arp.sh

[root@web3 arp]# cat arp.sh

    #!/bin/bash
    # Enable (start) or reset (stop) ARP suppression on an LVS-DR real server.
    case $1 in
    start)
        echo 1 >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo 1 >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 2 >/proc/sys/net/ipv4/conf/all/arp_announce
        echo 2 >/proc/sys/net/ipv4/conf/lo/arp_announce
        ;;
    stop)
        # 0 is the kernel default for both settings
        echo 0 >/proc/sys/net/ipv4/conf/all/arp_ignore
        echo 0 >/proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 0 >/proc/sys/net/ipv4/conf/all/arp_announce
        echo 0 >/proc/sys/net/ipv4/conf/lo/arp_announce
        ;;
    esac

[root@web3 arp]# chmod +x arp.sh

[root@web3 arp]# ./arp.sh start
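Values written under /proc do not survive a reboot. As a minimal sketch, the same four settings can be printed in sysctl.conf syntax and loaded with sysctl. The function name `arp_settings` and the file name `/etc/sysctl.d/lvs-rs.conf` are illustrative assumptions, not part of the original setup.

```shell
#!/bin/sh
# Print the four ARP-related kernel settings in sysctl.conf syntax.
# "start" gives the LVS-DR values described above (arp_ignore=1,
# arp_announce=2); "stop" gives the kernel defaults (0).
arp_settings() {
    case "$1" in
        start) ignore=1; announce=2 ;;
        stop)  ignore=0; announce=0 ;;
        *)     echo "usage: arp_settings start|stop" >&2; return 1 ;;
    esac
    for iface in all lo; do
        echo "net.ipv4.conf.${iface}.arp_ignore=${ignore}"
        echo "net.ipv4.conf.${iface}.arp_announce=${announce}"
    done
}

# Example (run as root on web3/web4; the file name is hypothetical):
#   arp_settings start > /etc/sysctl.d/lvs-rs.conf
#   sysctl -p /etc/sysctl.d/lvs-rs.conf
```

Keeping the settings in a file under /etc/sysctl.d/ means they are reapplied at boot, so the RS does not start answering ARP for the VIP after a restart.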

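4. Configure the VIP interface on the RSs

Configure web3 and web4 identically.

First, two questions worth answering.

Why is the VIP configured on the lo interface?

For an RS to process IP packets whose destination address is the VIP, it must first accept them. With the VIP on lo, the RS accepts those packets and returns the response directly to the client, using the VIP as the source address.

If the VIP were configured on another NIC, the RS would answer the client's ARP requests for the VIP and pollute ARP tables, which breaks load balancing.

Why is the netmask on the RS 255.255.255.255?

The VIP on an RS is not used to talk to other hosts; it only appears as the address in the IP header, so it must have a 32-bit mask. With a wider mask the RS would treat the whole subnet as directly reachable through lo.

ifconfig lo:0 192.168.216.200 netmask 255.255.255.255 broadcast 192.168.216.200 up

route add -host 192.168.216.200 dev lo:0

[root@web3 arp]# route -n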

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.216.2 0.0.0.0 UG ens33

192.168.122.0 0.0.0.0 255.255.255.0 U virbr0

192.168.216.0 0.0.0.0 255.255.255.0 U ens33

192.168.216.200 0.0.0.0 255.255.255.255 UH lo
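`ifconfig` and `route` come from the older net-tools package. As a hedged sketch, the same VIP binding can be expressed with iproute2. The helper names `run`, `add_vip_on_lo` and `del_vip_on_lo` and the `DRY_RUN` variable are made up for this illustration; by default the commands are only printed.

```shell
#!/bin/sh
# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) a command.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Bind the VIP to the loopback interface with a /32 mask.
# "label lo:0" keeps the alias name used by the ifconfig command.
add_vip_on_lo() {
    vip="$1"
    run ip addr add "${vip}/32" dev lo label lo:0
}

# Remove the VIP from the loopback interface again.
del_vip_on_lo() {
    vip="$1"
    run ip addr del "${vip}/32" dev lo
}

# Example (as root on web3/web4):
#   DRY_RUN=0 add_vip_on_lo 192.168.216.200
```

When an address is added this way the kernel generally creates the matching local route itself, so a separate host route is usually not required; check with `ip route show table local` if in doubt.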

5. Prepare ipvsadm on the directors

web1/web2

yum install ipvsadm -y

The port (80) and the weights (1 and 2) below match the virtual_server block configured in step 7.

[root@web2 keepalived]# ipvsadm -C

[root@web2 keepalived]# ipvsadm -A -t 192.168.216.200:80 -s rr

[root@web2 keepalived]# ipvsadm -a -t 192.168.216.200:80 -r 192.168.216.53 -g -w 1

[root@web2 keepalived]# ipvsadm -a -t 192.168.216.200:80 -r 192.168.216.54 -g -w 2

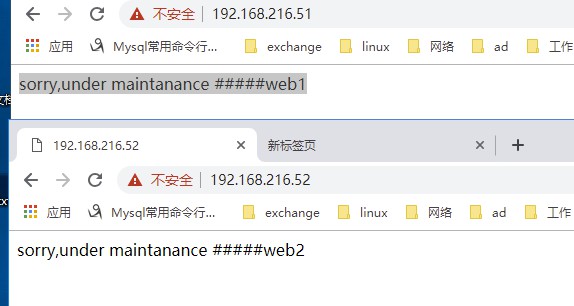
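The manual rules above follow a fixed pattern: one `-A` line for the virtual service and one `-a` line per real server. A small sketch that generates them from a list of `IP:WEIGHT` pairs is shown here; the function name `ipvs_dr_rules` is hypothetical and the function only prints commands, it does not run ipvsadm.

```shell
#!/bin/sh
# Print the ipvsadm commands for an LVS-DR virtual service.
# Usage: ipvs_dr_rules VIP PORT SCHEDULER RS_IP:WEIGHT [RS_IP:WEIGHT ...]
ipvs_dr_rules() {
    vip="$1"; port="$2"; sched="$3"
    shift 3
    echo "ipvsadm -C"
    echo "ipvsadm -A -t ${vip}:${port} -s ${sched}"
    for rs in "$@"; do
        ip="${rs%%:*}"       # text before the colon
        weight="${rs##*:}"   # text after the colon
        # -g selects gatewaying, i.e. direct routing (DR)
        echo "ipvsadm -a -t ${vip}:${port} -r ${ip}:${port} -g -w ${weight}"
    done
}

# Example: review the output first, then pipe it to sh as root on a director.
#   ipvs_dr_rules 192.168.216.200 80 wrr 192.168.216.53:1 192.168.216.54:2 | sh
```

Once keepalived is running it maintains these rules itself from the virtual_server block, so hand-written rules are mainly useful for testing the DR path before keepalived is configured.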

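6. Configure the sorry server

web1/web2: install the web server

yum install nginx -y

web1

echo "sorry,under maintanance #####web1" >/usr/share/nginx/html/index.html

web2

echo "sorry,under maintanance #####web2" >/usr/share/nginx/html/index.html

web1/web2

systemctl start nginx

systemctl enable nginx

From the client, check that the nginx page on each director is reachable.

Later, sorry_server 127.0.0.1 80 is added to the virtual_server block of the keepalived configuration file, so that this page is used when no RS is healthy.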

7. Configure keepalived with HTTP_GET health checks

web1/web2: install the software

yum install keepalived -y

web1 (master) configuration

[root@web1 keepalived]# cat keepalived.conf

    ! Configuration File for keepalived

    global_defs {
    #   notification_email {
    #     acassen@firewall.loc
    #     failover@firewall.loc
    #     sysadmin@firewall.loc
    #   }
    #   notification_email_from Alexandre.Cassen@firewall.loc
    #   smtp_server 192.168.200.1
    #   smtp_connect_timeout
        router_id LVS_DEVEL
    #   vrrp_skip_check_adv_addr
    #   vrrp_strict
    #   vrrp_garp_interval
    #   vrrp_gna_interval
    }

    vrrp_script chk_maintanance {
        script "/etc/keepalived/chkdown.sh"
        interval 1
        weight -20
    }

    #vrrp_script chk_nginx {
    #    script "/etc/keepalived/chknginx.sh"
    #    interval
    #    weight -
    #}

    #VIP1
    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        virtual_router_id 50
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.216.200
        }
        track_script {
            chk_maintanance
        }
    #    track_script {
    #        chk_nginx
    #    }
    }

    #VIP2
    #vrrp_instance VI_2 {
    #    state BACKUP
    #    interface ens33
    #    virtual_router_id
    #    priority
    #    advert_int
    #    authentication {
    #        auth_type PASS
    #        auth_pass
    #    }
    #    virtual_ipaddress {
    #        192.168.216.210
    #    }
    #    track_script {
    #        chk_maintanance
    #    }
    #    track_script {
    #        chk_nginx
    #    }
    #}

    virtual_server 192.168.216.200 80 {
        delay_loop 6
        lb_algo wrr
        lb_kind DR
        nat_mask 255.255.0.0
        protocol TCP
        sorry_server 127.0.0.1 80    # sorry server prepared in step 6

        real_server 192.168.216.53 80 {
            weight 1
            HTTP_GET {
                url {
                    path /
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }

        real_server 192.168.216.54 80 {
            weight 2
            HTTP_GET {
                url {
                    path /
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }

web2 (backup) configuration

[root@web2 keepalived]# cat keepalived.conf

    ! Configuration File for keepalived

    global_defs {
    #   notification_email {
    #     acassen@firewall.loc
    #     failover@firewall.loc
    #     sysadmin@firewall.loc
    #   }
    #   notification_email_from Alexandre.Cassen@firewall.loc
    #   smtp_server 192.168.200.1
    #   smtp_connect_timeout
        router_id LVS_DEVEL1
    #   vrrp_skip_check_adv_addr
    #   vrrp_strict
    #   vrrp_garp_interval
    #   vrrp_gna_interval
    }

    # Manual failover script; it is described in the article
    # "Centos7+nginx+keepalived cluster and dual-master architecture".
    vrrp_script chk_maintanance {
        script "/etc/keepalived/chkdown.sh"
        interval 1
        weight -20
    }

    # chk_nginx is not tracked by VI_1 below, and its interval and weight
    # values are missing from the original listing, so it stays commented out.
    #vrrp_script chk_nginx {
    #    script "/etc/keepalived/chknginx.sh"
    #    interval
    #    weight -
    #}

    #VIP1
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        virtual_router_id 50     # must match the master
        priority 90              # assumed value, the original number was lost; it must be
                                 # below the master's 100 and above 80 (100 - 20)
        advert_int 1             # must match the master
        authentication {
            auth_type PASS
            auth_pass 1111       # must match the master
        }
        virtual_ipaddress {
            192.168.216.200
        }
        track_script {
            chk_maintanance
        }
    #    track_script {
    #        chk_nginx
    #    }
    }

    #VIP2
    #vrrp_instance VI_2 {
    #    state MASTER
    #    interface ens33
    #    virtual_router_id
    #    priority
    #    advert_int
    #    authentication {
    #        auth_type PASS
    #        auth_pass
    #    }
    #    virtual_ipaddress {
    #        192.168.216.210
    #    }
    #    track_script {
    #        chk_maintanance
    #    }
    #    track_script {
    #        chk_nginx
    #    }
    #}

    virtual_server 192.168.216.200 80 {    # VIP block
        delay_loop 6                       # interval between health-check rounds, in seconds
        lb_algo wrr                        # scheduling algorithm
        lb_kind DR                         # forwarding method
        nat_mask 255.255.0.0
        protocol TCP                       # protocol of the monitored service
        sorry_server 127.0.0.1 80          # sorry server

        real_server 192.168.216.53 80 {    # real server
            weight 1                       # weight
            # Health-check type: HTTP_GET|SSL_GET|TCP_CHECK|SMTP_CHECK|MISC_CHECK.
            # HTTP_GET is used here because it verifies the HTTP response itself,
            # not just that the TCP port accepts connections as TCP_CHECK does.
            HTTP_GET {
                url {
                    path /                 # path requested on the RS
                    status_code 200        # expected status code
                }
                connect_timeout 3          # connection timeout, in seconds
                nb_get_retry 3             # number of retries
                delay_before_retry 3       # delay before each retry, in seconds
            }
        }

        real_server 192.168.216.54 80 {
            weight 2
            HTTP_GET {
                url {
                    path /
                    status_code 200
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }
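To make the three HTTP_GET timing parameters concrete, here is a rough shell imitation of one health-check round: one initial probe plus up to `nb_get_retry` retries, with `delay_before_retry` seconds between them. It is only a sketch of the behaviour visible in the logs later in this article, not keepalived's actual implementation; `check_with_retry` and `http_status_is` are hypothetical helper names.

```shell
#!/bin/sh
# Run a check command once, then retry up to $1 more times,
# sleeping $2 seconds between attempts. Returns 0 on the first success.
check_with_retry() {
    retries="$1"; delay="$2"
    shift 2
    attempt=0
    while :; do
        if "$@"; then
            return 0
        fi
        if [ "$attempt" -ge "$retries" ]; then
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}

# One HTTP probe: succeed only if the status code equals the expected one.
# --connect-timeout 3 plays the role of connect_timeout 3.
http_status_is() {
    url="$1"; expected="$2"
    code="$(curl -s -o /dev/null --connect-timeout 3 -w '%{http_code}' "$url")"
    [ "$code" = "$expected" ]
}

# Example, mirroring nb_get_retry 3 / delay_before_retry 3:
#   check_with_retry 3 3 http_status_is http://192.168.216.53/ 200 \
#       && echo "RS healthy" || echo "RS would be removed"
```

With these values a dead RS is removed only after four failed probes, which is why the log in the verification section shows four "Error connecting server" lines before "failed after 3 retry".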

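Add the keepalived "down" script. It allows a manual failover: while the file /etc/keepalived/down exists the script fails and keepalived applies the weight of -20.

[root@web1 keepalived]# cat chkdown.sh

    #!/bin/bash
    # Fail (exit 1) while the flag file exists, succeed (exit 0) otherwise.
    [[ -f /etc/keepalived/down ]] && exit 1 || exit 0

[root@web1 keepalived]#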
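The effect of the script on the VRRP election is simple arithmetic. The sketch below models how a single tracked script with a weight changes the priority, following the rule documented for keepalived (a negative weight is applied while the script fails, a positive weight while it succeeds). The function name `effective_priority` is made up for this example.

```shell
#!/bin/sh
# Effective VRRP priority for one tracked script.
#   negative weight: added (i.e. subtracted) while the script FAILS
#   positive weight: added while the script SUCCEEDS
# $1 = base priority, $2 = weight, $3 = script exit status
effective_priority() {
    base="$1"; weight="$2"; status="$3"
    if [ "$weight" -lt 0 ] && [ "$status" -ne 0 ]; then
        echo $((base + weight))
    elif [ "$weight" -gt 0 ] && [ "$status" -eq 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}

# web1 while /etc/keepalived/down exists (chkdown.sh exits 1):
effective_priority 100 -20 1    # prints 80
# web1 after the file is removed (chkdown.sh exits 0):
effective_priority 100 -20 0    # prints 100
```

For the failover to happen, the backup's priority has to sit between these two numbers: higher than 80 so that it wins while the flag file exists, lower than 100 so that web1 takes the VIP back afterwards.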

8. Enable logging

vim /etc/sysconfig/keepalived

Change KEEPALIVED_OPTIONS="-D" to KEEPALIVED_OPTIONS="-D -d -S 0"

[root@web1 keepalived]# cat /etc/sysconfig/keepalived

    # Options for keepalived. See `keepalived --help' output and keepalived(8) and
    # keepalived.conf(5) man pages for a list of all options. Here are the most
    # common ones :
    #
    # --vrrp               -P    Only run with VRRP subsystem.
    # --check              -C    Only run with Health-checker subsystem.
    # --dont-release-vrrp  -V    Dont remove VRRP VIPs & VROUTEs on daemon stop.
    # --dont-release-ipvs  -I    Dont remove IPVS topology on daemon stop.
    # --dump-conf          -d    Dump the configuration data.
    # --log-detail         -D    Detailed log messages.
    # --log-facility       -S    0-7 Set local syslog facility (default=LOG_DAEMON)
    #

    KEEPALIVED_OPTIONS="-D -d -S 0"

Configure rsyslog so that facility local0 (selected by -S 0) goes to its own file

vim /etc/rsyslog.conf

    # keepalived -S 0
    local0.*    /var/log/keepalived.log

Restart the services

systemctl restart rsyslog

systemctl enable rsyslog

systemctl restart keepalived

III. Verification

1. Verify keepalived

On web1:

touch /etc/keepalived/down

ip a #the VIP disappears

rm -rf /etc/keepalived/down

ip a #the VIP moves back automatically

[root@web1 keepalived]# touch down

[root@web1 keepalived]# ll

total

-rwxr-xr-x root root Apr : chkdown.sh

-rwxr-xr-x root root Apr : chkmysql.sh

-rwxr-xr-x root root Apr : chknginx.sh

-rw-r--r-- 1 root root 0 Apr 24 17:31 down

-rw-r--r-- root root Apr : keepalived.conf

-rw-r--r-- root root Apr : notify.sh

[root@web1 keepalived]# ip a

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN qlen

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

valid_lft forever preferred_lft forever

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether :0c::1c:8b: brd ff:ff:ff:ff:ff:ff

inet 192.168.216.51/ brd 192.168.216.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80:::e73d:1ef:2e1/ scope link

valid_lft forever preferred_lft forever

: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu qdisc noqueue state DOWN qlen

link/ether ::::a5:7c brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/ brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

: virbr0-nic: <BROADCAST,MULTICAST> mtu qdisc pfifo_fast master virbr0 state DOWN qlen

link/ether ::::a5:7c brd ff:ff:ff:ff:ff:ff

[root@web1 keepalived]# rm -rf down

[root@web1 keepalived]# ip a

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN qlen

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

valid_lft forever preferred_lft forever

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether :0c::1c:8b: brd ff:ff:ff:ff:ff:ff

inet 192.168.216.51/ brd 192.168.216.255 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80:::e73d:1ef:2e1/ scope link

valid_lft forever preferred_lft forever

: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu qdisc noqueue state DOWN qlen

link/ether ::::a5:7c brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/ brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

: virbr0-nic: <BROADCAST,MULTICAST> mtu qdisc pfifo_fast master virbr0 state DOWN qlen

link/ether ::::a5:7c brd ff:ff:ff:ff:ff:ff

[root@web1 keepalived]# ip a

: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN qlen

link/loopback ::::: brd :::::

inet 127.0.0.1/ scope host lo

valid_lft forever preferred_lft forever

inet6 ::/ scope host

valid_lft forever preferred_lft forever

: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP qlen

link/ether :0c::1c:8b: brd ff:ff:ff:ff:ff:ff

inet 192.168.216.51/ brd 192.168.216.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.216.200/32 scope global ens33 #the VIP has moved back automatically

valid_lft forever preferred_lft forever

inet6 fe80:::e73d:1ef:2e1/ scope link

valid_lft forever preferred_lft forever

: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu qdisc noqueue state DOWN qlen

link/ether ::::a5:7c brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/ brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

: virbr0-nic: <BROADCAST,MULTICAST> mtu qdisc pfifo_fast master virbr0 state DOWN qlen

link/ether ::::a5:7c brd ff:ff:ff:ff:ff:ff

[root@web1 keepalived]#

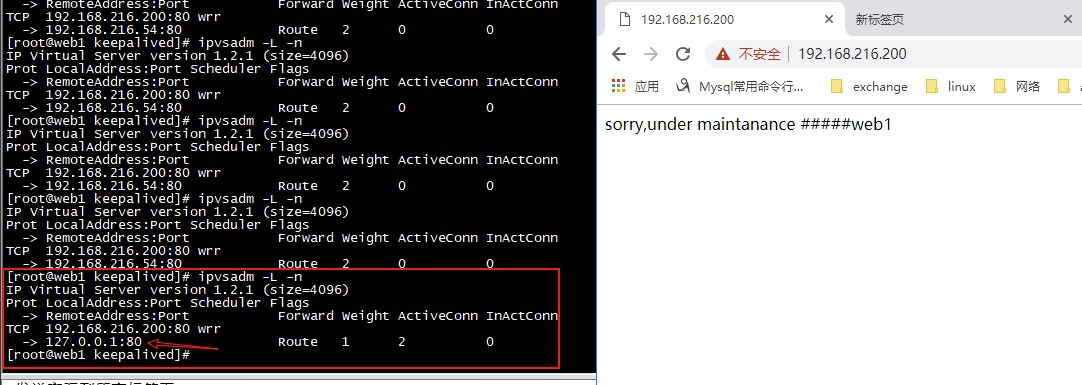
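Comparing `ip a` output by eye works, but the same check is easy to script. A minimal sketch, assuming the interface name ens33 used in this lab; the helper name `has_vip` is made up for illustration.

```shell
#!/bin/sh
# Read `ip -o -4 addr show` output on stdin and succeed if the given
# address is configured. -F makes grep treat the dots literally.
has_vip() {
    grep -qF "inet $1/"
}

# Example on a director:
#   if ip -o -4 addr show dev ens33 | has_vip 192.168.216.200; then
#       echo "this node holds the VIP"
#   else
#       echo "this node does not hold the VIP"
#   fi
```

Running it on web1 and web2 before and after `touch /etc/keepalived/down` should show the VIP moving from one director to the other.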

2. Verify the health checks

1) First check the ipvsadm rules and access the service

[root@web1 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.216.200: wrr

-> 192.168.216.53: Route

-> 192.168.216.54: Route

[root@web1 keepalived]#

Normal state
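To see the wrr weights at work from the client, it helps to count which RS answered each request. The following is a small sketch; `tally_responses` is a hypothetical helper, and the request loop in the comment assumes curl is available on the client.

```shell
#!/bin/sh
# Count identical response lines read from stdin, sorted by response text.
tally_responses() {
    awk '{ count[$0]++ } END { for (line in count) print count[line], line }' | sort -k2
}

# Example from the client: 30 requests through the VIP.
#   for i in $(seq 30); do curl -s http://192.168.216.200/; done | tally_responses
```

With weights 1 and 2 the counts for web3 and web4 should come out at roughly 1:2. After httpd is stopped on web3 and the health check has removed it, every response should come from web4.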

2) Stop httpd on web3 to test the health check

systemctl stop httpd

On web1, the IPVS rules no longer list web3, and the log file shows "Removing service".

[root@web1 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.216.200: wrr

-> 192.168.216.54: Route

[root@web1 keepalived]# cat /var/log/keepalived.log |tail -

Apr :: web1 Keepalived_vrrp[]: Sending gratuitous ARP on ens33 for 192.168.216.200

Apr :: web1 Keepalived_vrrp[]: Sending gratuitous ARP on ens33 for 192.168.216.200

Apr :: web1 Keepalived_vrrp[]: Sending gratuitous ARP on ens33 for 192.168.216.200

Apr :: web1 Keepalived_vrrp[]: Sending gratuitous ARP on ens33 for 192.168.216.200

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr 24 17:40:43 web1 Keepalived_healthcheckers[50390]: Check on service [192.168.216.53]:80 failed after 3 retry.

Apr 24 17:40:43 web1 Keepalived_healthcheckers[50390]: Removing service [192.168.216.53]:80 from VS [192.168.216.200]:0

[root@web1 keepalived]#

Restore httpd on web3

systemctl start httpd

On web1, web3 is back in the load-balancing pool, and the log file shows "HTTP status code success" and "Adding service ... to VS".

[root@web1 keepalived]# ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.216.200:80 wrr

-> 192.168.216.53:80 Route 1 0 0

-> 192.168.216.54:80 Route 2 0 0

[root@web1 keepalived]# cat /var/log/keepalived.log |tail -

Apr :: web1 Keepalived_vrrp[]: Sending gratuitous ARP on ens33 for 192.168.216.200

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Check on service [192.168.216.53]: failed after retry.

Apr :: web1 Keepalived_healthcheckers[]: Removing service [192.168.216.53]: from VS [192.168.216.200]:

Apr 24 17:44:37 web1 Keepalived_healthcheckers[50390]: HTTP status code success to [192.168.216.53]:80 url(1).

Apr 24 17:44:37 web1 Keepalived_healthcheckers[50390]: Remote Web server [192.168.216.53]:80 succeed on service.

Apr 24 17:44:37 web1 Keepalived_healthcheckers[50390]: Adding service [192.168.216.53]:80 to VS [192.168.216.200]:80

[root@web1 keepalived]#

3. Verify the sorry server

web3/web4

systemctl stop httpd

Check on web1

[root@web1 keepalived]# cat /var/log/keepalived.log |tail -

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Check on service [192.168.216.53]: failed after retry.

Apr :: web1 Keepalived_healthcheckers[]: Removing service [192.168.216.53]: from VS [192.168.216.200]:

Apr :: web1 Keepalived_healthcheckers[]: HTTP status code success to [192.168.216.53]: url().

Apr :: web1 Keepalived_healthcheckers[]: Remote Web server [192.168.216.53]: succeed on service.

Apr :: web1 Keepalived_healthcheckers[]: Adding service [192.168.216.53]: to VS [192.168.216.200]:

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.54]:.

[root@web1 keepalived]# cat /var/log/keepalived.log |tail -

Apr :: web1 Keepalived_healthcheckers[]: Adding service [192.168.216.53]: to VS [192.168.216.200]:

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.54]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.53]:.

Apr :: web1 Keepalived_healthcheckers[]: Check on service [192.168.216.53]: failed after retry.

Apr :: web1 Keepalived_healthcheckers[]: Removing service [192.168.216.53]: from VS [192.168.216.200]:

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.54]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.54]:.

[root@web1 keepalived]# cat /var/log/keepalived.log |tail -

Apr :: web1 Keepalived_healthcheckers[]: Check on service [192.168.216.53]: failed after retry.

Apr :: web1 Keepalived_healthcheckers[]: Removing service [192.168.216.53]: from VS [192.168.216.200]:

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.54]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.54]:.

Apr :: web1 Keepalived_healthcheckers[]: Error connecting server [192.168.216.54]:.

Apr :: web1 Keepalived_healthcheckers[]: Check on service [192.168.216.54]: failed after retry.

Apr :: web1 Keepalived_healthcheckers[]: Removing service [192.168.216.54]: from VS [192.168.216.200]:

Apr :: web1 Keepalived_healthcheckers[]: Lost quorum -= > for VS [192.168.216.200]:

Apr 24 17:47:46 web1 Keepalived_healthcheckers[50390]: Adding sorry server [127.0.0.1]:80 to VS [192.168.216.200]:80

Apr 24 17:47:46 web1 Keepalived_healthcheckers[50390]: Removing alive servers from the pool for VS [192.168.216.200]:80

Once both RSs have been removed, the log shows "Adding sorry server".

Please credit the source when reposting: https://www.cnblogs.com/zhangxingeng/p/10743501.html
