【深度学习】吴恩达网易公开课练习(class1 week3)

知识点梳理

python工具使用:

- sklearn: 数据挖掘,数据分析工具,内置logistic回归

- matplotlib: 做图工具,可绘制等高线等

- 绘制散点图: plt.scatter(X[0, :], X[1, :], c=np.squeeze(Y), s=40, cmap=plt.cm.Spectral); s:绘制点大小 cmap:颜色集

- 绘制等高线: 先做网格,计算结果,绘图

x_min, x_max = X[0, :].min() - 1, X[0, :].max() + 1y_min, y_max = X[1, :].min() - 1, X[1, :].max() + 1h = 0.01xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))Z = model(np.c_[xx.ravel(), yy.ravel()])Z = Z.reshape(xx.shape)#xx是x轴值, yy是y轴值, Z是预测结果值, cmap表示采用什么颜色plt.contourf(xx, yy, Z, cmap=plt.cm.Spectral)

关键变量:

- m: 训练样本数量

- n_x:一个训练样本的输入数量,输入层大小

- n_h:隐藏层大小

- 方括号上标[l]: 第l层

- 圆括号上标(i): 第i个样本

$$

X =

\left[

\begin{matrix}

\vdots & \vdots & \vdots & \vdots \\

x^{(1)} & x^{(2)} & \vdots & x^{(m)} \\

\vdots & \vdots & \vdots & \vdots \\

\end{matrix}

\right]_{(n\_x, m)}

$$

$$

W^{[1]} =

\left[

\begin{matrix}

\cdots & w^{[1] T}_1 & \cdots \\

\cdots & w^{[1] T}_2 & \cdots \\

\cdots & \cdots & \cdots \\

\cdots & w^{[1] T}_{n\_h} & \cdots \\

\end{matrix}

\right]_{(n\_h, n\_x)}

$$

$$

b^{[1]} =

\left[

\begin{matrix}

b^{[1]}_1 \\

b^{[1]}_2 \\

\vdots \\

b^{[1]}_{n\_h} \\

\end{matrix}

\right]_{(n\_h, 1)}

$$

$$

A^{[1]}=

\left[

\begin{matrix}

\vdots & \vdots & \vdots & \vdots \\

a^{[1](1)} & a^{[1](2)} & \vdots & a^{[1](m)} \\

\vdots & \vdots & \vdots & \vdots \\

\end{matrix}

\right]_{(n\_h, m)}

$$

$$

Z^{[1]}=

\left[

\begin{matrix}

\vdots & \vdots & \vdots & \vdots \\

z^{[1](1)} & z^{[1](2)} & \vdots & z^{[1](m)} \\

\vdots & \vdots & \vdots & \vdots \\

\end{matrix}

\right]_{(n\_h, m)}

$$

***

单隐层神经网络关键公式:

- 前向传播:

$$Z^{[1]}=W^{[1]}X+b^{[1]}$$

$$A^{[1]}=g^{[1]}(Z^{[1]})$$

$$Z^{[2]}=W^{[2]}A^{[1]}+b^{[2]}$$

$$A^{[2]}=g^{[2]}(Z^{[2]})$$

Z1 = np.dot(W1, X) + b1A1 = np.tanh(Z1)Z2 = np.dot(W2, A1) + b2A2 = sigmoid(Z2)

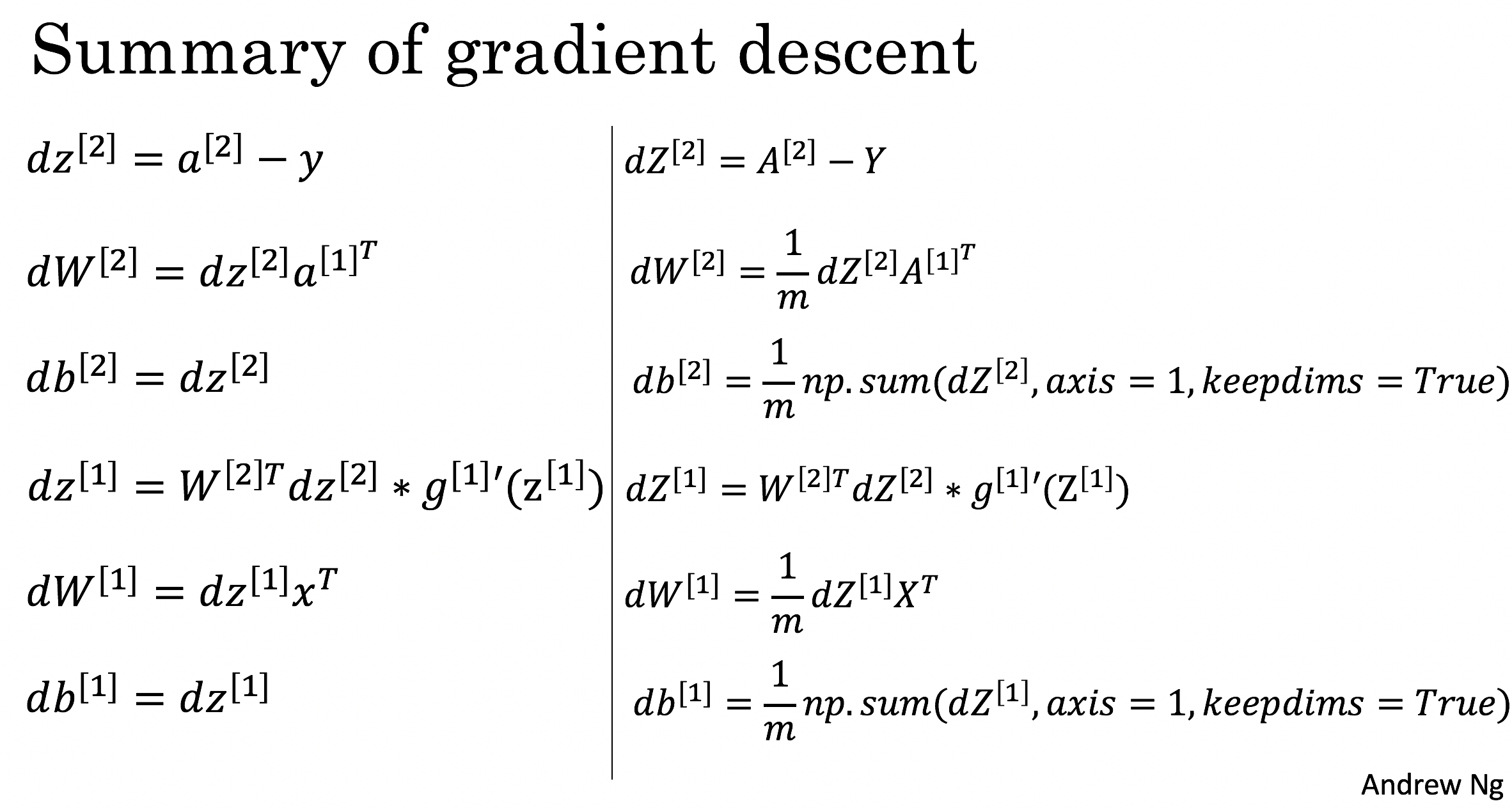

- 反向传播

dZ2 = A2 - YdW2 = 1 / m * np.dot(dZ2, A1.T)db2 = 1 / m * np.sum(dZ2, axis = 1, keepdims = True)dZ1 = np.dot(W2.T, dZ2) * (1 - np.power(A1, 2))dW1 = 1 / m * np.dot(dZ1, X.T)db1 = 1 / m * np.sum(dZ1, axis = 1, keepdims = True)

- cost计算

\]

logprobs = np.multiply(np.log(A2), Y) + np.multiply(np.log(1 - A2), 1 - Y)cost = - 1 / m * np.sum(logprobs)

单隐层神经网络代码:

# Package importsimport numpy as npimport matplotlib.pyplot as pltimport sklearnimport sklearn.datasetsimport sklearn.linear_modelfrom planar_utils import plot_decision_boundary, sigmoid, load_planar_dataset, load_extra_datasets%matplotlib inlinenp.random.seed(1) # set a seed so that the results are consistentdef initialize_parameters(n_x, n_h, n_y):np.random.seed(2) # we set up a seed so that your output matches ours although the initialization is random.W1 = np.random.randn(n_h, n_x) * 0.01b1 = np.zeros((n_h, 1))W2 = np.random.randn(n_y, n_h)b2 = np.zeros((n_y, 1))assert (W1.shape == (n_h, n_x))assert (b1.shape == (n_h, 1))assert (W2.shape == (n_y, n_h))assert (b2.shape == (n_y, 1))parameters = {"W1": W1,"b1": b1,"W2": W2,"b2": b2}return parametersdef forward_propagation(X, parameters):# Retrieve each parameter from the dictionary "parameters"W1 = parameters["W1"]b1 = parameters["b1"]W2 = parameters["W2"]b2 = parameters["b2"]# Implement Forward Propagation to calculate A2 (probabilities)Z1 = np.dot(W1, X) + b1A1 = np.tanh(Z1)Z2 = np.dot(W2, A1) + b2A2 = sigmoid(Z2)assert(A2.shape == (1, X.shape[1]))cache = {"Z1": Z1,"A1": A1,"Z2": Z2,"A2": A2}return A2, cachedef compute_cost(A2, Y, parameters):m = Y.shape[1] # number of example# Compute the cross-entropy costlogprobs = np.multiply(np.log(A2), Y) + np.multiply(np.log(1 - A2), 1 - Y)cost = - 1 / m * np.sum(logprobs)cost = np.squeeze(cost) # makes sure cost is the dimension we expect.# E.g., turns [[17]] into 17assert(isinstance(cost, float))return costdef backward_propagation(parameters, cache, X, Y):m = X.shape[1]# First, retrieve W1 and W2 from the dictionary "parameters".W1 = parameters["W1"]W2 = parameters["W2"]# Retrieve also A1 and A2 from dictionary "cache".A1 = cache["A1"]A2 = cache["A2"]# Backward propagation: calculate dW1, db1, dW2, db2.dZ2 = A2 - YdW2 = 1 / m * np.dot(dZ2, A1.T)db2 = 1 / m * np.sum(dZ2, axis = 1, keepdims = True)dZ1 = np.dot(W2.T, dZ2) * (1 - np.power(A1, 2))dW1 = 1 / m * np.dot(dZ1, X.T)db1 = 1 / m * np.sum(dZ1, axis = 1, keepdims = True)grads = {"dW1": dW1,"db1": db1,"dW2": dW2,"db2": db2}return gradsdef update_parameters(parameters, grads, learning_rate = 0.8):# Retrieve each parameter from the dictionary "parameters"W1 = parameters["W1"]b1 = parameters["b1"]W2 = parameters["W2"]b2 = parameters["b2"]# Retrieve each gradient from the dictionary "grads"dW1 = grads["dW1"]db1 = grads["db1"]dW2 = grads["dW2"]db2 = grads["db2"]# Update rule for each parameterW1 = W1 - learning_rate * dW1b1 = b1 - learning_rate * db1W2 = W2 - learning_rate * dW2b2 = b2 - learning_rate * db2parameters = {"W1": W1,"b1": b1,"W2": W2,"b2": b2}return parametersdef nn_model(X, Y, n_h, num_iterations = 10000, print_cost=False):np.random.seed(3)n_x = X.shape[0]n_y = Y.shape[0]# Initialize parameters, then retrieve W1, b1, W2, b2. Inputs: "n_x, n_h, n_y". Outputs = "W1, b1, W2, b2, parameters".parameters = initialize_parameters(n_x, n_h, n_y)W1 = parameters["W1"]b1 = parameters["b1"]W2 = parameters["W2"]b2 = parameters["b2"]# Loop (gradient descent)for i in range(0, num_iterations):# Forward propagation. Inputs: "X, parameters". Outputs: "A2, cache".A2, cache = forward_propagation(X, parameters)# Cost function. Inputs: "A2, Y, parameters". Outputs: "cost".cost = compute_cost(A2, Y, parameters)# Backpropagation. Inputs: "parameters, cache, X, Y". Outputs: "grads".grads = backward_propagation(parameters, cache, X, Y)# Gradient descent parameter update. Inputs: "parameters, grads". Outputs: "parameters".parameters = update_parameters(parameters, grads)# Print the cost every 1000 iterationsif print_cost and i % 1000 == 0:print ("Cost after iteration %i: %f" %(i, cost))return parametersdef predict(parameters, X):# Computes probabilities using forward propagation, and classifies to 0/1 using 0.5 as the threshold.A2, cache = forward_propagation(X, parameters)predictions = A2 > 0.5return predictionsX, Y = load_planar_dataset()# Build a model with a n_h-dimensional hidden layerparameters = nn_model(X, Y, n_h = 4, num_iterations = 10000, print_cost=True)# Plot the decision boundaryplot_decision_boundary(lambda x: predict(parameters, x.T), X, np.squeeze(Y))plt.title("Decision Boundary for hidden layer size " + str(4))

# planar_utils.pyimport matplotlib.pyplot as pltimport numpy as npimport sklearnimport sklearn.datasetsimport sklearn.linear_modeldef plot_decision_boundary(model, X, y):# Set min and max values and give it some paddingx_min, x_max = X[0, :].min() - 1, X[0, :].max() + 1y_min, y_max = X[1, :].min() - 1, X[1, :].max() + 1h = 0.01# Generate a grid of points with distance h between them# 创造网格,以0.01为间隔划分整个区间xx, yy = np.meshgrid(np.arange(x_min, x_max, h), np.arange(y_min, y_max, h))# Predict the function value for the whole grid# 计算每个网格点上的预测结果Z = model(np.c_[xx.ravel(), yy.ravel()])# 将预测结果变形为与网格形式一致Z = Z.reshape(xx.shape)# Plot the contour and training examples# xx是x轴值, yy是y轴值, Z是预测结果值, cmap表示采用什么颜色plt.contourf(xx, yy, Z, cmap=plt.cm.Spectral) #等位线plt.ylabel('x2')plt.xlabel('x1')plt.scatter(X[0, :], X[1, :], c=y, cmap=plt.cm.Spectral)def sigmoid(x):"""Compute the sigmoid of xArguments:x -- A scalar or numpy array of any size.Return:s -- sigmoid(x)"""s = 1/(1+np.exp(-x))return sdef load_planar_dataset():np.random.seed(1)m = 400 # number of examplesN = int(m/2) # number of points per classD = 2 # dimensionalityX = np.zeros((m,D)) # data matrix where each row is a single exampleY = np.zeros((m,1), dtype='uint8') # labels vector (0 for red, 1 for blue)a = 4 # maximum ray of the flowerfor j in range(2):ix = range(N*j,N*(j+1))t = np.linspace(j*3.12,(j+1)*3.12,N) + np.random.randn(N)*0.2 # thetar = a*np.sin(4*t) + np.random.randn(N)*0.2 # radiusX[ix] = np.c_[r*np.sin(t), r*np.cos(t)]Y[ix] = jX = X.TY = Y.Treturn X, Ydef load_extra_datasets():N = 200noisy_circles = sklearn.datasets.make_circles(n_samples=N, factor=.5, noise=.3)noisy_moons = sklearn.datasets.make_moons(n_samples=N, noise=.2)blobs = sklearn.datasets.make_blobs(n_samples=N, random_state=5, n_features=2, centers=6)gaussian_quantiles = sklearn.datasets.make_gaussian_quantiles(mean=None, cov=0.5, n_samples=N, n_features=2, n_classes=2, shuffle=True, random_state=None)no_structure = np.random.rand(N, 2), np.random.rand(N, 2)return noisy_circles, noisy_moons, blobs, gaussian_quantiles, no_structure

【深度学习】吴恩达网易公开课练习(class1 week3)的更多相关文章

- 【深度学习】吴恩达网易公开课练习(class1 week4)

概要 class1 week3的任务是实现单隐层的神经网络代码,而本次任务是实现有L层的多层深度全连接神经网络.关键点跟class3的基本相同,算清各个参数的维度即可. 关键变量: m: 训练样本数量 ...

- 【深度学习】吴恩达网易公开课练习(class1 week2)

知识点汇总 作业内容:用logistic回归对猫进行分类 numpy知识点: 查看矩阵维度: x.shape 初始化0矩阵: np.zeros((dim1, dim2)) 去掉矩阵中大小是1的维度: ...

- 【深度学习】吴恩达网易公开课练习(class2 week1 task2 task3)

正则化 定义:正则化就是在计算损失函数时,在损失函数后添加权重相关的正则项. 作用:减少过拟合现象 正则化有多种,有L1范式,L2范式等.一种常用的正则化公式 \[J_{regularized} = ...

- 【深度学习】吴恩达网易公开课练习(class2 week1)

权重初始化 参考资料: 知乎 CSDN 权重初始化不能全部为0,不能都是同一个值.原因是,如果所有的初始权重是相同的,那么根据前向和反向传播公式,之后每一个权重的迭代过程也是完全相同的.结果就是,无论 ...

- 深度学习 吴恩达深度学习课程2第三周 tensorflow实践 参数初始化的影响

博主 撸的 该节 代码 地址 :https://github.com/LemonTree1994/machine-learning/blob/master/%E5%90%B4%E6%81%A9%E8 ...

- cousera 深度学习 吴恩达 第一课 第二周 学习率对优化结果的影响

本文代码实验地址: https://github.com/guojun007/logistic_regression_learning_rate cousera 上的作业是 编写一个 logistic ...

- 2017年度好视频,吴恩达、李飞飞、Hinton、OpenAI、NIPS、CVPR、CS231n全都在

我们经常被问:机器翻译迭代了好几轮,专业翻译的饭碗都端不稳了,字幕组到底还能做什么? 对于这个问题,我们自己感受最深,却又来不及解释,就已经边感受边做地冲出去了很远,摸爬滚打了一整年. 其实,现在看来 ...

- 第19月第8天 斯坦福大学公开课机器学习 (吴恩达 Andrew Ng)

1.斯坦福大学公开课机器学习 (吴恩达 Andrew Ng) http://open.163.com/special/opencourse/machinelearning.html 笔记 http:/ ...

- 吴恩达深度学习第4课第3周编程作业 + PIL + Python3 + Anaconda环境 + Ubuntu + 导入PIL报错的解决

问题描述: 做吴恩达深度学习第4课第3周编程作业时导入PIL包报错. 我的环境: 已经安装了Tensorflow GPU 版本 Python3 Anaconda 解决办法: 安装pillow模块,而不 ...

随机推荐

- java基础_0204:运算符

掌握Java中标识符的定义: 掌握Java中数据类型的划分以及基本数据类型的使用原则: 掌握Java运算符的使用: 掌握Java分支结构.循环结构.循环控制语法的使用: 掌握方法的定义结构以及方法重载 ...

- Jmeter Md5加密操作之-------BeanShell PreProcessor

背景: 有一些登录会做一些md5校验,通过jmeter的BeanShell可以解决MD5加密情况. 1.首先需要一个解码的jar包,commons-codec-1.10.jar(网上很多),下载后,放 ...

- 源码学习之mybatis

1.先看看俩种调用方式 public static void main(String[] args) { SqlSessionFactory sqlSessionFactory; SqlSession ...

- Web项目笔记(一)JSONP跨域请求及其概念

https://blog.csdn.net/u014607184/article/details/52027879 https://blog.csdn.net/saytime/article/deta ...

- Flask网页模板的入门

#网页模板需要导入render_template from flask import Flask,render_template 方法一: #使用render_template模块来渲染模板文件 ...

- Serializable 和Parcelable 的区别

1.作用 Serializable的作用是为了保存对象的属性到本地文件.数据库.网络流.RMI(Remote Method Invocation)以方便数据传输,当然这种传输可以是程序内的也可以是两个 ...

- Linux就该这么学(1)-系统概述(学习笔记)

一.热门的Linux系统开源许可协议 GNU GPL(GNU General Public License,GNU 通用公共许可证) BSD(Berkeley Software Distributio ...

- Redis 通过 info 查看信息和状态

INFO INFO [section] 以一种易于解释(parse)且易于阅读的格式,返回关于 Redis 服务器的各种信息和统计数值. 通过给定可选的参数 section ,可以让命令只返回某一部分 ...

- requests库入门07-patch请求

使用data参数提交 设置邮件能见度,这个接口用来设置邮件是公共可见,还是私有的 import requests test_url = 'https://api.github.com' def get ...

- 关于session,cookie,Cache

昨天看了<ASP.NET 页面之间传值的几种方式>之后,对session,cookie,Cache有了更近一步的了解,以下是相关的内容 一.Session 1.Session基本操作 a. ...