opencv4 mask_rcnn模型调(c++)

昨天有人问我关于调用mask_rcnn模型的问题,忽然想到最近三个月都没用opencv调用训练好的mask_rcnn模型了,今晚做个尝试,所以重新编译了 opencv4,跑个案例试试

#include <fstream>

#include <sstream>

#include <iostream>

#include <string.h> #include <opencv2/dnn.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/highgui.hpp> using namespace cv;

using namespace dnn;

using namespace std; RNG rng1; // Initialize the parameters

float confThreshold = 0.5; // Confidence threshold

float maskThreshold = 0.3; // Mask threshold //vector<string> classes;

//vector<Scalar> colors; // Draw the predicted bounding box

void drawBox(Mat& frame, int classId, float conf, Rect box, Mat& objectMask); // Postprocess the neural network's output for each frame

void postprocess(Mat& frame, const vector<Mat>& outs); int main()

{

// Give the configuration and weight files for the model

//String textGraph = "./mask_rcnn_inception_v2_coco_2018_01_28/mask_rcnn_inception_v2_coco_2018_01_28.pbtxt";

//String modelWeights = "./mask_rcnn_inception_v2_coco_2018_01_28/frozen_inference_graph.pb"; String modelWeights = "E:\\Opencv\\model_1\\mask_rcnn_inception_v2_coco_2018_01_28\\frozen_inference_graph.pb";

String textGraph = "E:\\Opencv\\model_1\\mask_rcnn_inception_v2_coco_2018_01_28\\mask_rcnn_inception_v2_coco_2018_01_28.pbtxt";

// Load the network

Net net = readNetFromTensorflow(modelWeights, textGraph);

net.setPreferableBackend(DNN_BACKEND_OPENCV);

net.setPreferableTarget(DNN_TARGET_CPU); // Open a video file or an image file or a camera stream.

string str, outputFile;

VideoCapture cap();//根据摄像头端口id不同,修改下即可

//VideoWriter video;

Mat frame, blob; // Create a window

static const string kWinName = "Deep learning object detection in OpenCV";

namedWindow(kWinName, WINDOW_NORMAL); // Process frames.

if (>)

{

// get frame from the video

//cap >> frame;

frame = cv::imread("D:\\image\\5.png"); // Stop the program if reached end of video

if (frame.empty())

{

cout << "Done processing !!!" << endl;

cout << "Output file is stored as " << outputFile << endl;

}

// Create a 4D blob from a frame.

blobFromImage(frame, blob, 1.0, Size(frame.cols, frame.rows), Scalar(), true, false);

//blobFromImage(frame, blob); //Sets the input to the network

net.setInput(blob); // Runs the forward pass to get output from the output layers

std::vector<String> outNames();

outNames[] = "detection_out_final";

outNames[] = "detection_masks";

vector<Mat> outs;

net.forward(outs, outNames); // Extract the bounding box and mask for each of the detected objects

postprocess(frame, outs); // Put efficiency information. The function getPerfProfile returns the overall time for inference(t) and the timings for each of the layers(in layersTimes)

vector<double> layersTimes;

double freq = getTickFrequency() / ;

double t = net.getPerfProfile(layersTimes) / freq;

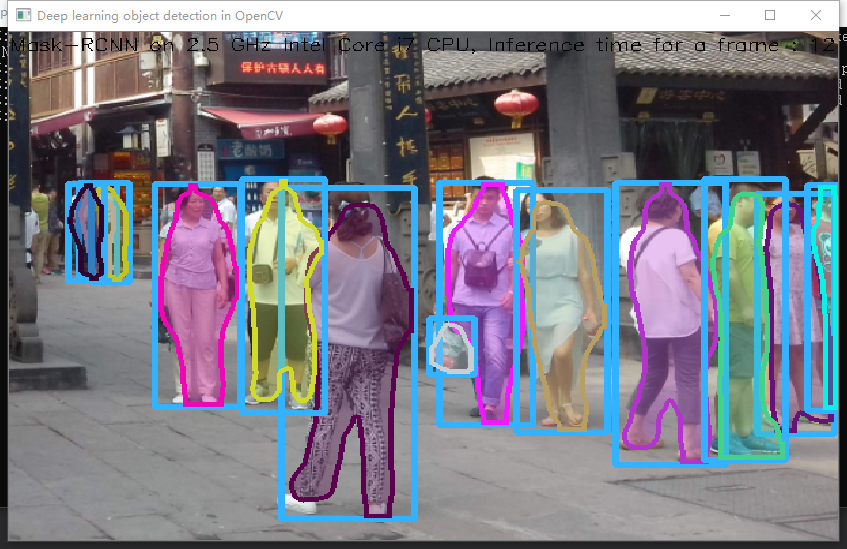

string label = format("Mask-RCNN on 2.5 GHz Intel Core i7 CPU, Inference time for a frame : %0.0f ms", t);

putText(frame, label, Point(, ), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(, , )); // Write the frame with the detection boxes

Mat detectedFrame;

frame.convertTo(detectedFrame, CV_8U); imshow(kWinName, frame); }

//cap.release();

waitKey();

return ;

} // For each frame, extract the bounding box and mask for each detected object

void postprocess(Mat& frame, const vector<Mat>& outs)

{

Mat outDetections = outs[];

Mat outMasks = outs[]; // Output size of masks is NxCxHxW where

// N - number of detected boxes

// C - number of classes (excluding background)

// HxW - segmentation shape

const int numDetections = outDetections.size[];

const int numClasses = outMasks.size[]; outDetections = outDetections.reshape(, outDetections.total() / );

for (int i = ; i < numDetections; ++i)

{

float score = outDetections.at<float>(i, );

if (score > confThreshold)

{

// Extract the bounding box

int classId = static_cast<int>(outDetections.at<float>(i, ));

int left = static_cast<int>(frame.cols * outDetections.at<float>(i, ));

int top = static_cast<int>(frame.rows * outDetections.at<float>(i, ));

int right = static_cast<int>(frame.cols * outDetections.at<float>(i, ));

int bottom = static_cast<int>(frame.rows * outDetections.at<float>(i, )); left = max(, min(left, frame.cols - ));

top = max(, min(top, frame.rows - ));

right = max(, min(right, frame.cols - ));

bottom = max(, min(bottom, frame.rows - ));

Rect box = Rect(left, top, right - left + , bottom - top + ); // Extract the mask for the object

Mat objectMask(outMasks.size[], outMasks.size[], CV_32F, outMasks.ptr<float>(i, classId)); // Draw bounding box, colorize and show the mask on the image

drawBox(frame, classId, score, box, objectMask); }

}

} // Draw the predicted bounding box, colorize and show the mask on the image

void drawBox(Mat& frame, int classId, float conf, Rect box, Mat& objectMask)

{

//Draw a rectangle displaying the bounding box

rectangle(frame, Point(box.x, box.y), Point(box.x + box.width, box.y + box.height), Scalar(, , ), ); //Get the label for the class name and its confidence

/*string label = format("%.2f", conf);

if (!classes.empty())

{

CV_Assert(classId < (int)classes.size());

label = classes[classId] + ":" + label;

}*/ //Display the label at the top of the bounding box

/*

int baseLine;

Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

box.y = max(box.y, labelSize.height);

rectangle(frame, Point(box.x, box.y - round(1.5*labelSize.height)), Point(box.x + round(1.5*labelSize.width), box.y + baseLine), Scalar(255, 255, 255), FILLED);

putText(frame, label, Point(box.x, box.y), FONT_HERSHEY_SIMPLEX, 0.75, Scalar(0, 0, 0), 1);

*/

//Scalar color = colors[classId%colors.size()];

Scalar color = Scalar(rng1.uniform(, ), rng1.uniform(, ), rng1.uniform(, )); // Resize the mask, threshold, color and apply it on the image

resize(objectMask, objectMask, Size(box.width, box.height));

Mat mask = (objectMask > maskThreshold);

Mat coloredRoi = (0.3 * color + 0.7 * frame(box));

coloredRoi.convertTo(coloredRoi, CV_8UC3); // Draw the contours on the image

vector<Mat> contours;

Mat hierarchy;

mask.convertTo(mask, CV_8U);

findContours(mask, contours, hierarchy, RETR_CCOMP, CHAIN_APPROX_SIMPLE);

drawContours(coloredRoi, contours, -, color, , LINE_8, hierarchy, );

coloredRoi.copyTo(frame(box), mask); }

检测速度和python比起来偏慢

运行日志:

[ INFO:0] global E:\Opencv\opencv-4.1.1\modules\videoio\src\videoio_registry.cpp (187) cv::`anonymous-namespace'::VideoBackendRegistry::VideoBackendRegistry VIDEOIO: Enabled backends(7, sorted by priority): FFMPEG(1000); GSTREAMER(990); INTEL_MFX(980); MSMF(970); DSHOW(960); CV_IMAGES(950); CV_MJPEG(940)

[ INFO:0] global E:\Opencv\opencv-4.1.1\modules\videoio\src\backend_plugin.cpp (340) cv::impl::getPluginCandidates Found 2 plugin(s) for GSTREAMER

[ INFO:0] global E:\Opencv\opencv-4.1.1\modules\videoio\src\backend_plugin.cpp (172) cv::impl::DynamicLib::libraryLoad load E:\Opencv\opencv_4_1_1_install\bin\opencv_videoio_gstreamer411_64.dll => FAILED

[ INFO:0] global E:\Opencv\opencv-4.1.1\modules\videoio\src\backend_plugin.cpp (172) cv::impl::DynamicLib::libraryLoad load opencv_videoio_gstreamer411_64.dll => FAILED

[ INFO:0] global E:\Opencv\opencv-4.1.1\modules\core\src\ocl.cpp (888) cv::ocl::haveOpenCL Initialize OpenCL runtime...

opencv4 mask_rcnn模型调(c++)的更多相关文章

- 使用sklearn进行数据挖掘-房价预测(6)—模型调优

通过上一节的探索,我们会得到几个相对比较满意的模型,本节我们就对模型进行调优 网格搜索 列举出参数组合,直到找到比较满意的参数组合,这是一种调优方法,当然如果手动选择并一一进行实验这是一个十分繁琐的工 ...

- 【新人赛】阿里云恶意程序检测 -- 实践记录10.27 - TF-IDF模型调参 / 数据可视化

TF-IDF模型调参 1. 调TfidfVectorizer的参数 ngram_range, min_df, max_df: 上一篇博客调了ngram_range这个参数,得出了ngram_range ...

- 深度学习模型调优方法(Deep Learning学习记录)

深度学习模型的调优,首先需要对各方面进行评估,主要包括定义函数.模型在训练集和测试集拟合效果.交叉验证.激活函数和优化算法的选择等. 那如何对我们自己的模型进行判断呢?——通过模型训练跑代码,我们可以 ...

- python的随机森林模型调参

一.一般的模型调参原则 1.调参前提:模型调参其实是没有定论,需要根据不同的数据集和不同的模型去调.但是有一些调参的思想是有规律可循的,首先我们可以知道,模型不准确只有两种情况:一是过拟合,而是欠拟合 ...

- 机器学习笔记——模型调参利器 GridSearchCV(网格搜索)参数的说明

GridSearchCV,它存在的意义就是自动调参,只要把参数输进去,就能给出最优化的结果和参数.但是这个方法适合于小数据集,一旦数据的量级上去了,很难得出结果.这个时候就是需要动脑筋了.数据量比较大 ...

- 【新人赛】阿里云恶意程序检测 -- 实践记录11.3 - n-gram模型调参

主要工作 本周主要是跑了下n-gram模型,并调了下参数.大概看了几篇论文,有几个处理方法不错,准备下周代码实现一下. xgboost参数设置为: param = {'max_depth': 6, ' ...

- 【新人赛】阿里云恶意程序检测 -- 实践记录10.20 - 数据预处理 / 训练数据分析 / TF-IDF模型调参

Colab连接与数据预处理 Colab连接方法见上一篇博客 数据预处理: import pandas as pd import pickle import numpy as np # 训练数据和测试数 ...

- tenorflow 模型调优

# Create the Timeline object, and write it to a json from tensorflow.python.client import timeline t ...

- SPSS数据分析-时间序列模型

我们在分析数据时,经常会碰到一种数据,它是由时间累积起来的,并按照时间顺序排列的一系列观测值,我们称为时间序列,它有点类似于重复测量数据,但是区别在于重复测量数据的时间点不会很多,而时间序列的时间点非 ...

随机推荐

- java发送邮件javamail, freemarker读取html模板内容

https://www.cnblogs.com/xdp-gacl/p/4216311.html 一.RFC882文档简单说明 RFC882文档规定了如何编写一封简单的邮件(纯文本邮件),一封简单的邮件 ...

- 执行DTS包将excel导入数据库

利用ssms生成dtsx文件,必须以32bit执行,到路径执行C:\Program Files (x86)\Microsoft SQL Server\110\DTS\Binn > dtexec ...

- loj #6342. 跳一跳 期望dp

令 $f[i]$ 表示已经到达 $i$ 点,为了到大 $n$ 点还期望需要的时间,随便转移一下就行. 由于本题卡空间,要记得开滚动数组. #include <bits/stdc++.h> ...

- 38、数据源Parquet之使用编程方式加载数据

一.概述 Parquet是面向分析型业务的列式存储格式,由Twitter和Cloudera合作开发,2015年5月从Apache的孵化器里毕业成为Apache顶级项目,最新的版本是1.8.0. 列式存 ...

- Angular惰性加载的特性模块

一:Angular-CLI建立应用 cmd命令:ng new lazy-app --routing (创建一个名叫 lazy-app 的应用,而 --routing 标识生成了一个名叫 app- ...

- Makefile(二)

VERSION = SOURCE = $(wildcard ./*.cpp) OBJ = $(patsubst %.cpp,%.o,$(SOURCE)) INCLUDE = -I /usr/inclu ...

- gdb tui设置默认窗口高度

gdb -p 12999 -tui 先显示win信息(输入:info win) 显示如下: SRC (35 lines) <has focus> CMD (17 lines) 我们要改的是 ...

- Lua chunk文件结构

1.lua执行经过: xx.lua源码文件------->执行(lua虚拟机) 隐式调用luac编译器 我们可以直接用luac命令去编译lua源码文件,然后用编译后的文件运行在lvm(lua虚拟 ...

- HDU 6086 Rikka with String ——(AC自动机 + DP)

这是一个AC自动机+dp的问题,在中间的串的处理可以枚举中断点来插入自动机内来实现,具体参见代码. 在这题上不止为何一直MLE,一直找不到结果(lyf相同写法的代码消耗内存较少),还好考虑到这题节点应 ...

- python中的__init__方法

init()方法意义重大的原因有两个.第一个原因是在对象生命周期中初始化是最重要的一步:每个对象必须正确初始化后才能正常工作.第二个原因是init()参数值可以有多种形式. __init__方法使用 ...