Hypothesis Testing

Hypothesis Testing

What's Hypothesis Testing(假设检验)

Hypothesis testing is the statistical assessment of a statement or idea regarding a population.

A hypothesis is a statement about the value of a population parameter level developed for the purpose of testing a theory or belief. Hypotheses are stated in terms of the population parameters to be tested, like the population mean, μ.

Hypothesis testing procedures, based on sample statistics and probability theory, are used to determine whether a hypothesis is a reasonable statement and should not be rejected or if it is an unreasonable statement and should be rejected.

Hypothesis Testing Procedures

- State the hypothesis

- Select the appropriate test statistic

- Specify the level of significance

- State the decision rule regarding the hypothesis

- Collect the sample and calculate the sample statistics

- Make a decision regarding the hypothesis

- Make a decision based on the results of the test

The Null Hypothesis(零假设/原假设) and Alternative Hypothesis(对立假设)

The null hypothesis (H0), is the hypothesis that the researcher wants to reject. It is the hypothesis that is actually tested and is the basis for the selection of the test statistics.

The null is generally stated as a simple statement about a population parameter.

The null hypothesis always includes the "equal to" condition.

The alternative hypothesis(Ha), is what is concluded if there is sufficient evidence to reject the null hypothesis. It is usually the alternative hypothesis that you are really trying to assess. Why? Since you can never really prove anything with statistics, when the null hypothesis is discredited, the implication is that the alternative hypothesis is valid.

原假设 (null hypothesis): 基于一种假设的系统模型,在这种假设下我们认为观测到的效应是有偶然因素造成的,即不是统计上显著的(statistically significant).

One-Tailed and Two-Tailed Tests of Hypothesis

A two-tailed test (双边检验)for the population mean may be structured as:

Since the alternative hypothesis allows for values above and below the hypothesized parameter, a two-tailed test uses two critical values (or rejection points).

The general decision rule for a two-tailed test is:

A one-tailed test (单边检验)of the population mean, the null and alternative hypotheses are either:

The appropriate set of hypothesis depends on whether we believe the population mean, μ, to be greater than (upper tail) or less than (lower tail) the hypothesized value, μ0.

The Choice of the Null and Alternative Hypothesis

The most common null hypothesis will be an "equal to" hypothesis. Combined with a "not equal to" alternative,, this will require a two-tailed test. The alternative if often the hoped-for hypothesis. When the null is that a coefficient is equal to zero, we hope to reject it and show the significance of the relationship.

When the null is less than or equal to, the (mutually exclusive) alternative if framed as greater than, and a one-tailed test is appropriate. If we are trying to demonstrate that a return is greater than the risk-free rate, this would be the correct formulation. We will have set up the null and alternative hypothesis so that rejection of the null will lead to acceptance of the alternative, our goal in performing the test.

Test Statistic(检验统计量)

Hypothesis testing involves two statistics: the test statistic calculated from the sample data and the critical value of the test statistic. The value of the computed test statistic relative to the critical value is a key step in assessing the validity of a hypothesis.

A test statistic is calculated by comparing the point estimate of the population parameter with the hypothesized value of the parameter (i.e., the value specified in the null hypothesis).

The test statistic is the difference between the sample statistic and the hypothesized value, scaled by the standard error of the sample statistic.

The standard error of the sample statistic is the adjusted standard deviation of the sample. When the sample statistic is the sample mean, the standard error of the sample statistic for sample size n, is calculated as:

when the population standard deviation, σ, is known, or

when the population standard deviation, σ, is not known. In this case, it is estimated using the standard deviation of the sample, s.

A test statistic is a random variable that may follow one of several distributions, depending on the characteristics of the sample and the population.

Type I and Type II Errors

- Type I error: the rejection of the null hypothesis when it is actually true. (错判/拒真)

- Type II error: the failure to reject the null hypothesis when it is actually false. (漏判/取伪)

Significance Level = P(Type I error)

The significance level (显著水平) is the probability of making a Type I error(rejecting the null when it is true) and is designated by the Geek letter alpha (α). For instance, a significance level of 5% (α=0.05) means there is 5% chance of rejecting a true null hypothesis. When conducting hypothesis test, a significance level must be specified in order to identify the critical values needed to evaluate the test statistic.

I类错误: 也称假阳性(false positive),指的是我们接受了一个本质为假的假设。也就是说,我们认为某个效应具有统计显著性,但是实际上该效应却是由偶然因素产生的。

II类错误:也称假阴性(false negative),指的是我们推翻了一个本质为真的假设。也就是说,我们将某个效应归结为随即产生的,但实际上真实存在。

假设检验中最常见的方法是为p值选择一个阈值(α), 一旦p值小于这个阈值,我们就推翻原假设。通常情况下,我们选择5%作为阈值(α)。

对于这类假设检验,我们可以得到出现假阳性的精确概率,指责该概率就是α值。

我们解释下原因,首先回顾假阳性和p值的定义:假阳性是值接受了一个不成立的假设,p值是指假设不成立时出现测量效应的概率。

两者结合起来,我们的问题是:如果选择α为显著性阈值,当假设不成立时,出现该测量效应的概率会是多少呢?答案就是α.

我们可以通过降低阈值来控制假阳性。例如如果我们设置阈值为1%,那么出现假阳性的概率就等于1%

但是降低假阳性也是有代价的。阈值的降低会导致判断效应确实存在的标准提高,这样推翻有效假设的可能性就会变大,即我们更有可能接受原假设。

一般来说,I类错误和II类错误之间存在一种权衡,同时降低这两种错误的唯一方法是增加样本数量(或者,在某些情况下降低测量误差).

The Power of a Test [ = 1-P(Type II Error)]

While the significance level of a test is the probability of rejecting the null hypothesis when it is true, the power of a test is the probability of correctly rejecting the null hypothesis when it is false. The power of a test is actually one minus probability of making a Type II error (1-P(Type II Error)). In other words, the probability of rejecting the null when it is false(power of the test) equals one minus the probability of not rejecting the null when it is false (Type II error). When more than one test statistic may be used, the power of the test for the competing test statistic may be useful in deciding which test statistic to use. Ordinarily, we wish to use the test statistic that provides the most powerful test among all possible tests.

Sample size and the choice of significance level(Type 1 error probability) will together determine the probability of a Type II error. The relation is not simple, however, and calculating the probability of a Type II error in practice is quite difficult. Decreasing the significance level(probability of a Type I error) from 5% to 1%,, for example, will increase the probability of failing to reject a false null(Type II error) and therefore reduce the power of the test. Conversely, for a given sample size, we can increase the power of a test only with the cost that the probability of rejecting a true null (Type I error) increase. For a given significance level, we can decrease the probability of a Type II error and increase the power of a test, only by increasing the sample size.

p-value

The p-value is the probability of obtaining a test statistic that would lead to a rejection of the null hypothesis, assuming the null hypothesis is true.

It is the smallest level of significance for which the null hypothesis can be rejected.

For one-tailed tests, the p-value is the probability that lies above the computed test statistic for upper tail tests or below the computed statistic for lower tail tests.

For two-tailed tests, the p-value is the probability that lies above the positive value of the computed test statistic plus the probability that lies below thee negative value of the computed test statistic.

P-value decision rule:

- Reject H0 if p-value < α

- Fail to reject H0 if p-value > α

p值(p-value): 在原假设下,出现直观效应的概率

Decision Rule

The decision for a hypothesis test is to either reject the null hypothesis or fail to reject the null hypothesis. Note that it is statistically incorrect to say "accept" the null hypothesis; it can only be supported or rejected.

The decision rule for rejecting or failing to reject the null hypothesis is based on the distribution of the test statistic. For example, if the test statistic follows a normal distribution, the decision rule is based on critical values determined from the standard normal distribution(z-distribution). Regardless of the appropriate distribution, it must be determined if a one-tailed or two-tailed hypothesis test is appropriate before a decision rule(reject rule) can be determined.

A decision rule is specific and quantitative. Once we have determined whether a one- or two-tailed test is appropriate, the significance level we require, and the distribution of the test statistic, we can calculate the exact critical value for the test statistic. Then we have a decision rule of the following form: if the test statistic is (greater, less than) the value X, reject the null.

The Relation Between Confidence Intervals and Hypothesis Tests

A confidence interval is a range of values within which the researcher believes the true population parameter may lie.

A confidence interval is determined as:

The interpretation of a confidence interval is that for a level of confidence of 95%, for example, there is a 95% probability that the true population parameter is contained in the interval.

From the previous expression, we see that a confidence interval and a hypothesis test are linked by the critical value. For example, a 95% confidence interval uses a critical value associated with a given distribution at the 5% level of significance. Similarly, a hypothesis test would compare a test statistic to a critical value at the 5% level of significance. To see this relationship more clearly, the expression for the confidence interval can be manipulated and restated as:

-critical value <= test statistic <= +critical value

This is the range within which we fail to reject the null for a two-tailed hypothesis test at a given level of significance.

Degree of Freedom

See "Degree of Freedom in Statistics"

In statistics, the number of degree of freedom is the number of values in the final calculation of a statistic that are free to vary.

The number of independent ways by which a dynamic system can move without violating any constraint imposed on it, is called degree of freedom. In other words, the degree of freedom can be defined as the minimum number of independent coordinates that can specify the position of the system completely.

Estimates of statistical parameters can be based upon different amounts of information or data. The number of independent pieces of information that go into the estimate of a parameter is called the degrees of freedom. In general, the degrees of freedom of an estimate of a parameter is equal to the number of independent scores that go into the estimate minus the number of parameters used as intermediate steps in the estimation of the parameter itself (i.e. the sample variance has N-1 degree of freedom, since it is computed from N random scores minus the only 1 parameter estimated as intermediate step, which is the sample mean).

See here for another example.

Consider, for example, the statistic S^2.

To calculate the S^2 of a random sample, we must first calculate the mean of that sample and then compute the sum of the several squared deviations from that mean. While there will be n such squared deviations, only (n-1) of them are, in fact, free to assume any value whatsoever. This is because the final squared deviation from the mean must include the one value of X such that the sum of all the Xs divided by n will equal the obtained mean of the sample. All of the other (n-ve any values whatsoever. For these reasons, the statisti1) squared deviations from the mean can, theoretically, hac S^2 is said to have only (n-1) degrees of freedom.

白话解释下这个例子,因为sample mean确定的情况下,N个数中间只有N-1个数是可以是任意值,而剩下的一个数的取值取决于另外N-1个数(因为要使得sample mean确定),所以自由度只能是N-1.

From Baidu Zhidao

自由度(degree of freedom, df)在数学中能够自由取值的变量个数,如有3个变量x、y、z,但x+y+z=18,因此其自由度等于2。在统计学中,自由度指的是计算某一统计量时,取值不受限制的变量个数。通常df=n-k。其中n为样本含量,k为被限制的条件数或变量个数,或计算某一统计量时用到其它独立统计量的个数。自由度通常用于抽样分布中。

The t-Test

When hypothesis testing, the choice between using a critical value based on the t-distribution or the z-distribution depends on sample size, distribution of the population, and whether or not the variance of the population is known.

The t-test is a widely used hypothesis test that employs a test statistic that is distributed according to a t-distribution.

Use the t-test if the population variance is unknown and either the following conditions exist:

- The sample is large (n>=30)

- The sample is small (less than 30) but the distribution of the population is normal or approximately normal.

If the sample is small and the distribution is non-normal, we have no reliable statistical test.

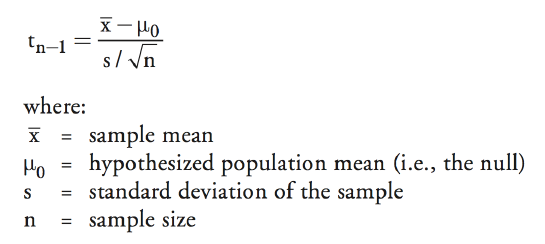

The computed value for the test statistic based on the t-distribution is referred to as the t-statistic. For hypothesis tests of a population mean, a t-statistic with n-1 degree of freedom is computed as:

To conduct a t-test, the t-statistic is compared to a critical t-value at the desired level of significance with the appropriate degree of freedom.

In the real world, the underlying variance of the population is rarely known, so the t-test enjoys widespread application.

The z-Test

The z-test is the appropriate hypothesis test of the population mean when the population is normally distributed with known variance. The computed test statistic used with the z-test is referred to as the z-statistic. The z-statistic for a hypothesis test for a population mean is computed as follows:

To test a hypothesis, the z-statistic is compared to the critical z-value corresponding to the significance of the test.

Critical z-values for the most common levels of significance are displayed in the following figure,

When the sample size is large and the population variance is unknown, the z-statistic is:

This is acceptable if the sample size is large, although the t-statistic is the more conservative measure when the population variance is unknown.

t-检验和z-检验都是用来检验总体的均值的(population mean)。

The chi-square test(卡方检验)

The chi-square test is used for hypothesis tests concerning the variance of a normally distributed population. Letting σ^2 represent the true population variance, and σ0^2 represent the hypothesized variance, the hypotheses for a two-tailed test of a single population variance are structured as:

The hypothesis for one-tailed test is structured as:

Hypothesis testing of the population variance requires the use of a chi-square distributed test statistic, denoted as  . The chi-square distribution is asymmetrical and approaches the normal distribution in shape as the degree of freedom increase.

. The chi-square distribution is asymmetrical and approaches the normal distribution in shape as the degree of freedom increase.

The chi-square test statistic,  , with n-1 degrees of freedom, is computed as:

, with n-1 degrees of freedom, is computed as:

Similar to other hypothesis tests, the chi-square test compares the test statistic to a critical chi-square value at a given level of significance and n-1 degrees of freedom. Note that since the chi-square distribution is bounded below by zero, chi-square values cannot be negative.

F-test

Testing the equality of the variances of two normally distributed populations, based on two independent random samples.

The hypotheses concerned with the equality of the variances of two populations are tested with an F-distributed test statistic. Hypothesis testing using a test statistic that follows an F-distribution is referred to as the F-test. The F-test is used under the assumption that the populations from which samples are drawn are normally distributed and that the samples are independent.

If we let σ1^2 and σ2^2 represent the variances of normal Population 1 and Population 2, respectively, the hypotheses for the two-tailed F-test of differences in the variances can be structured as:

and the one-sided test structures can be specified as:

The test statistic for the F-test is the ratio of the sample variances. The F-statistic is computed as:

Note that n1-1 and n2-1 are the degrees of the freedom used to identify the appropriate critical value from the F-table.

Always put the larger variance in the numerator. Following this convention means we only have to consider the critical value for the right-hand tail.

The F-distribution is right-skewed and is truncated at zero on the left-hand side. The shape of the F-distribution is determined by two separate degrees of freedom, the numerator degrees of freedom, df1, and the denominator degrees of freedom, df2. The rejection region is in the right-side tail of the distribution. This will always be the case as long as the F-statistic is computed with the largest sample variance in the numerator.

Hypothesis Testing的更多相关文章

- 假设检验(Hypothesis Testing)

假设检验(Hypothesis Testing) 1. 什么是假设检验呢? 假设检验又称为统计假设检验,是数理统计中根据一定假设条件由样本推断总体的一种方法. 什么意思呢,举个生活中的例子:买橘子(借 ...

- Critical-Value|Critical-Value Approach to Hypothesis Testing

9.2 Critical-Value Approach to Hypothesis Testing example: 对于mean 值 275 的假设: 有一个关于sample mean的distri ...

- [Math Review] Statistics Basics: Main Concepts in Hypothesis Testing

Case Study The case study Physicians' Reactions sought to determine whether physicians spend less ti ...

- The Most Simple Introduction to Hypothesis Testing

https://www.youtube.com/watch?v=UApFKiK4Hi8

- A/B Testing with Practice in Python (Part One)

I learned A/B testing from a Youtube vedio. The link is https://www.youtube.com/watch?v=Bu7OqjYk0jM. ...

- Null Hypotheses| Alternative Hypotheses|Hypothesis Test|Significance Level|two tailed |one tailed|

9.1 The Nature of Hypothesis Testing Over the years, however, null hypothesis has come to mean simpl ...

- 统计分析中Type I Error与Type II Error的区别

统计分析中Type I Error与Type II Error的区别 在统计分析中,经常提到Type I Error和Type II Error.他们的基本概念是什么?有什么区别? 下面的表格显示 b ...

- 《Spark 官方文档》机器学习库(MLlib)指南

spark-2.0.2 机器学习库(MLlib)指南 MLlib是Spark的机器学习(ML)库.旨在简化机器学习的工程实践工作,并方便扩展到更大规模.MLlib由一些通用的学习算法和工具组成,包括分 ...

- {ICIP2014}{收录论文列表}

This article come from HEREARS-L1: Learning Tuesday 10:30–12:30; Oral Session; Room: Leonard de Vinc ...

随机推荐

- Android控件之HorizontalScrollView 去掉滚动栏

在默认情况下.HorizontalScrollView控件里面的内容在滚动的情况下,会出现滚动栏,为了去掉滚动栏.仅仅须要在<HorizontalScrollView/>里面加一句 ...

- swift学习之元组

元组在oc中是没有的.在swift中是新加的,学oc数组概念时还在想既然能够存储同样类型的元素,那不同类型的元素有没有东西存储呢,答案非常悲伤,oc没有元组这个概念.只是swift中加入了这个东西,也 ...

- 电脑PE系统工具

自己收集的一些PE电脑维护工具 电脑店PE工具 http://u.diannaodian.com/ 通用PE工具箱 http://www.tongyongpe.com/ 大白菜PE工具 http:// ...

- uva 10721 - Bar Codes(dp)

题目链接:uva 10721 - Bar Codes 题目大意:给出n,k和m,用k个1~m的数组成n,问有几种组成方法. 解题思路:简单dp,cnt[i][j]表示用i个数组成j, cnt[i][j ...

- sql distinct 不能比较或排序 text、ntext 和 image 数据类型,除非使用 IS NULL 或 LIKE 运算符

有个文章的表内容是列项,类型是text 我查询的是内容相同的文章,把其中的一个删除 select 内容 from 文章 group by 内容 having count(*)>1 查询ID和题目 ...

- SpringMVC通过邮件找回密码功能的实现

1.最近开发一个系统,有个需求就是,忘记密码后通过邮箱找回.现在的系统在注册的时候都会强制输入邮箱,其一目的就是 通过邮件绑定找回,可以进行密码找回.通过java发送邮件的功能我就不说了,重点讲找回密 ...

- python之模块csv之CSV文件的写入(按行写入)

# -*- coding: utf-8 -*- #python 27 #xiaodeng #CSV文件的写入(按行写入) import csv #csv文件,是一种常用的文本格式,用以存储表格数据,很 ...

- getattr和setattr

>>> class MyData(): def __init__(self,name,phone): self.name=name self.phone=phone def upda ...

- cocos2dx跟eclipse交叉编译“make: * No rule to make target `all' Stop”的解决方案

cocos2dx和eclipse交叉编译“make: *** No rule to make target `all'. Stop”的解决方案 搞cocos2dx在eclipse上的交叉编译. 项目. ...

- 【laravel5.4】{{$name}}、{{name}}、@{{$name}} 和 @{{name}} 的区别

1.前面带@符号的,表示不需要laravel的blade引擎进行解析:有@的,则需要blade解析 2.{{$name}} 表示:blade解析 后台php的 name变量 {{name}} 表示:b ...