搭建Spark的单机版集群

一、创建用户

# useradd spark

# passwd spark

二、下载软件

JDK,Scala,SBT,Maven

版本信息如下:

JDK jdk-7u79-linux-x64.gz

Scala scala-2.10.5.tgz

SBT sbt-0.13.7.zip

Maven apache-maven-3.2.5-bin.tar.gz

注意:如果只是安装Spark环境,则只需JDK和Scala即可,SBT和Maven是为了后续的源码编译。

三、解压上述文件并进行环境变量配置

# cd /usr/local/

# tar xvf /root/jdk-7u79-linux-x64.gz

# tar xvf /root/scala-2.10.5.tgz

# tar xvf /root/apache-maven-3.2.5-bin.tar.gz

# unzip /root/sbt-0.13.7.zip

修改环境变量的配置文件

# vim /etc/profile

export JAVA_HOME=/usr/local/jdk1..0_79

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export SCALA_HOME=/usr/local/scala-2.10.

export MAVEN_HOME=/usr/local/apache-maven-3.2.

export SBT_HOME=/usr/local/sbt

export PATH=$PATH:$JAVA_HOME/bin:$SCALA_HOME/bin:$MAVEN_HOME/bin:$SBT_HOME/bin

使配置文件生效

# source /etc/profile

测试环境变量是否生效

# java –version

java version "1.7.0_79"

Java(TM) SE Runtime Environment (build 1.7.0_79-b15)

Java HotSpot(TM) -Bit Server VM (build 24.79-b02, mixed mode)

# scala –version

Scala code runner version 2.10. -- Copyright -, LAMP/EPFL

# mvn –version

Apache Maven 3.2. (12a6b3acb947671f09b81f49094c53f426d8cea1; --15T01::+:)

Maven home: /usr/local/apache-maven-3.2.

Java version: 1.7.0_79, vendor: Oracle Corporation

Java home: /usr/local/jdk1..0_79/jre

Default locale: en_US, platform encoding: UTF-

OS name: "linux", version: "3.10.0-229.el7.x86_64", arch: "amd64", family: "unix"

# sbt --version

sbt launcher version 0.13.

四、主机名绑定

[root@spark01 ~]# vim /etc/hosts

192.168.244.147 spark01

五、配置spark

切换到spark用户下

下载hadoop和spark,可使用wget命令下载

spark-1.4.0 http://d3kbcqa49mib13.cloudfront.net/spark-1.4.0-bin-hadoop2.6.tgz

Hadoop http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz

解压上述文件并进行环境变量配置

修改spark用户环境变量的配置文件

[spark@spark01 ~]$ vim .bash_profile

export SPARK_HOME=$HOME/spark-1.4.-bin-hadoop2.

export HADOOP_HOME=$HOME/hadoop-2.6.

export HADOOP_CONF_DIR=$HOME/hadoop-2.6./etc/hadoop

export PATH=$PATH:$SPARK_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

使配置文件生效

[spark@spark01 ~]$ source .bash_profile

修改spark配置文件

[spark@spark01 ~]$ cd spark-1.4.0-bin-hadoop2.6/conf/

[spark@spark01 conf]$ cp spark-env.sh.template spark-env.sh

[spark@spark01 conf]$ vim spark-env.sh

在后面添加如下内容:

export SCALA_HOME=/usr/local/scala-2.10.

export SPARK_MASTER_IP=spark01

export SPARK_WORKER_MEMORY=1500m

export JAVA_HOME=/usr/local/jdk1..0_79

有条件的童鞋可将SPARK_WORKER_MEMORY适当设大一点,因为我虚拟机内存是2G,所以只给了1500m。

配置slaves

[spark@spark01 conf]$ cp slaves slaves.template

[spark@spark01 conf]$ vim slaves

将localhost修改为spark01

启动master

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ sbin/start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /home/spark/spark-1.4.-bin-hadoop2./sbin/../logs/spark-spark-org.apache.spark.deploy.master.Master--spark01.out

查看上述日志的输出内容

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ cd logs/

[spark@spark01 logs]$ cat spark-spark-org.apache.spark.deploy.master.Master-1-spark01.out

Spark Command: /usr/local/jdk1..0_79/bin/java -cp /home/spark/spark-1.4.-bin-hadoop2./sbin/../conf/:/home/spark/spark-1.4.-bin-hadoop2./lib/spark-assembly-1.4.-hadoop2.6.0.jar:/home/spark/spark-1.4.-bin-hadoop2./lib/datanucleus-core-3.2..jar:/home/spark/spark-1.4.-bin-hadoop2./lib/datanucleus-api-jdo-3.2..jar:/home/spark/spark-1.4.-bin-hadoop2./lib/datanucleus-rdbms-3.2..jar:/home/spark/hadoop-2.6./etc/hadoop/ -Xms512m -Xmx512m -XX:MaxPermSize=128m org.apache.spark.deploy.master.Master --ip spark01 --port --webui-port

========================================

// :: INFO master.Master: Registered signal handlers for [TERM, HUP, INT]

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

// :: INFO spark.SecurityManager: Changing view acls to: spark

// :: INFO spark.SecurityManager: Changing modify acls to: spark

// :: INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); users with modify permissions: Set(spark)

// :: INFO slf4j.Slf4jLogger: Slf4jLogger started

// :: INFO Remoting: Starting remoting

// :: INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkMaster@spark01:7077]

// :: INFO util.Utils: Successfully started service 'sparkMaster' on port .

// :: INFO server.Server: jetty-.y.z-SNAPSHOT

// :: INFO server.AbstractConnector: Started SelectChannelConnector@spark01:

// :: INFO util.Utils: Successfully started service on port .

// :: INFO rest.StandaloneRestServer: Started REST server for submitting applications on port

// :: INFO master.Master: Starting Spark master at spark://spark01:7077

// :: INFO master.Master: Running Spark version 1.4.

// :: INFO server.Server: jetty-.y.z-SNAPSHOT

// :: INFO server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:

// :: INFO util.Utils: Successfully started service 'MasterUI' on port .

// :: INFO ui.MasterWebUI: Started MasterWebUI at http://192.168.244.147:8080

// :: INFO master.Master: I have been elected leader! New state: ALIVE

从日志中也可看出,master启动正常

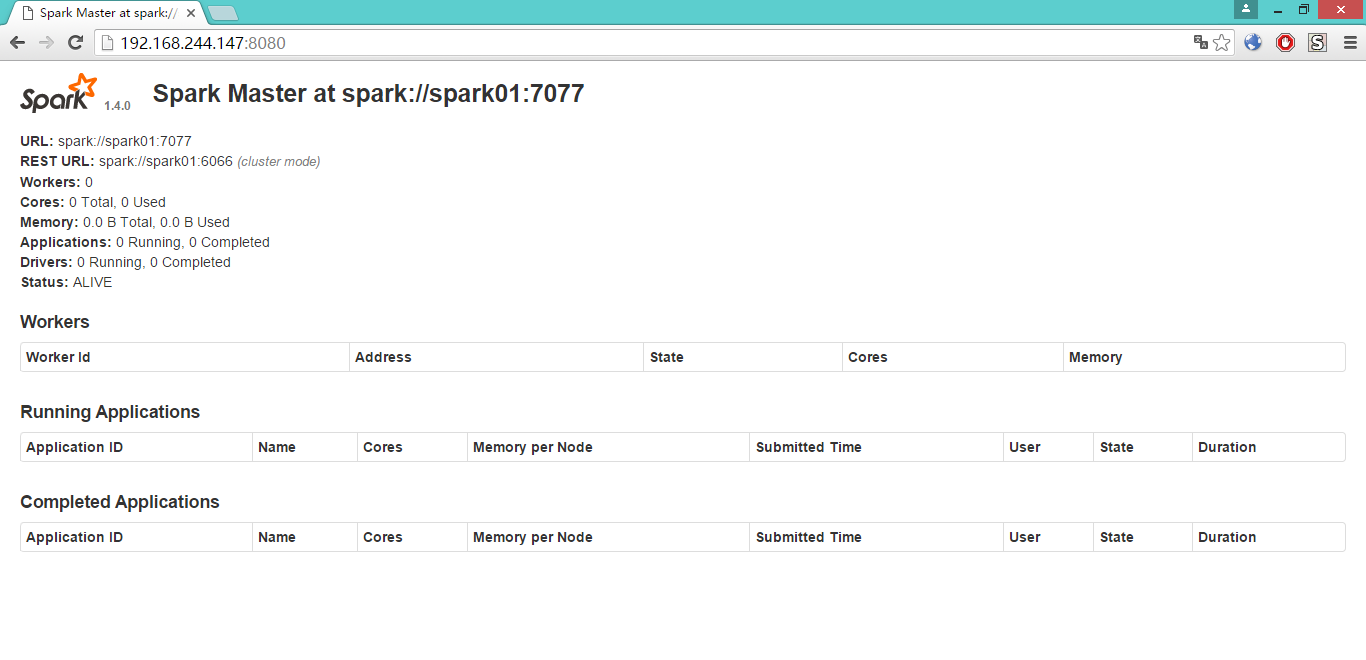

下面来看看master的 web管理界面,默认在8080端口

启动worker

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ sbin/start-slaves.sh spark://spark01:7077

spark01: Warning: Permanently added 'spark01,192.168.244.147' (ECDSA) to the list of known hosts.

spark@spark01's password:

spark01: starting org.apache.spark.deploy.worker.Worker, logging to /home/spark/spark-1.4.-bin-hadoop2./sbin/../logs/spark-spark-org.apache.spark.deploy.worker.Worker--spark01.out

输入spark01上spark用户的密码

可通过日志的信息来确认workder是否正常启动,因信息太多,在这里就不贴出了。

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ cd logs/

[spark@spark01 logs]$ cat spark-spark-org.apache.spark.deploy.worker.Worker-1-spark01.out

启动spark shell

[spark@spark01 spark-1.4.0-bin-hadoop2.6]$ bin/spark-shell --master spark://spark01:7077

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

// :: INFO spark.SecurityManager: Changing view acls to: spark

// :: INFO spark.SecurityManager: Changing modify acls to: spark

// :: INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); users with modify permissions: Set(spark)

// :: INFO spark.HttpServer: Starting HTTP Server

// :: INFO server.Server: jetty-.y.z-SNAPSHOT

// :: INFO server.AbstractConnector: Started SocketConnector@0.0.0.0:

// :: INFO util.Utils: Successfully started service 'HTTP class server' on port .

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 1.4.

/_/ Using Scala version 2.10. (Java HotSpot(TM) -Bit Server VM, Java 1.7.0_79)

Type in expressions to have them evaluated.

Type :help for more information.

// :: INFO spark.SparkContext: Running Spark version 1.4.

// :: INFO spark.SecurityManager: Changing view acls to: spark

// :: INFO spark.SecurityManager: Changing modify acls to: spark

// :: INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(spark); users with modify permissions: Set(spark)

// :: INFO slf4j.Slf4jLogger: Slf4jLogger started

// :: INFO Remoting: Starting remoting

// :: INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@192.168.244.147:43850]

// :: INFO util.Utils: Successfully started service 'sparkDriver' on port .

// :: INFO spark.SparkEnv: Registering MapOutputTracker

// :: INFO spark.SparkEnv: Registering BlockManagerMaster

// :: INFO storage.DiskBlockManager: Created local directory at /tmp/spark-7b7bd4bd-ff20-4e3d-a354-61a4ca7c4b2f/blockmgr-0e855210---b5e3-151e0c096c15

// :: INFO storage.MemoryStore: MemoryStore started with capacity 265.4 MB

// :: INFO spark.HttpFileServer: HTTP File server directory is /tmp/spark-7b7bd4bd-ff20-4e3d-a354-61a4ca7c4b2f/httpd-56ac16d2-dd82-41cb-99d7-4d11ef36b42e

// :: INFO spark.HttpServer: Starting HTTP Server

// :: INFO server.Server: jetty-.y.z-SNAPSHOT

// :: INFO server.AbstractConnector: Started SocketConnector@0.0.0.0:

// :: INFO util.Utils: Successfully started service 'HTTP file server' on port .

// :: INFO spark.SparkEnv: Registering OutputCommitCoordinator

// :: INFO server.Server: jetty-.y.z-SNAPSHOT

// :: INFO server.AbstractConnector: Started SelectChannelConnector@0.0.0.0:

// :: INFO util.Utils: Successfully started service 'SparkUI' on port .

// :: INFO ui.SparkUI: Started SparkUI at http://192.168.244.147:4040

// :: INFO client.AppClient$ClientActor: Connecting to master akka.tcp://sparkMaster@spark01:7077/user/Master...

// :: INFO cluster.SparkDeploySchedulerBackend: Connected to Spark cluster with app ID app--

// :: INFO client.AppClient$ClientActor: Executor added: app--/ on worker--192.168.244.147- (192.168.244.147:) with cores

// :: INFO cluster.SparkDeploySchedulerBackend: Granted executor ID app--/ on hostPort 192.168.244.147: with cores, 512.0 MB RAM

// :: INFO client.AppClient$ClientActor: Executor updated: app--/ is now LOADING

// :: INFO client.AppClient$ClientActor: Executor updated: app--/ is now RUNNING

// :: INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port .

// :: INFO netty.NettyBlockTransferService: Server created on

// :: INFO storage.BlockManagerMaster: Trying to register BlockManager

// :: INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.244.147: with 265.4 MB RAM, BlockManagerId(driver, 192.168.244.147, )

// :: INFO storage.BlockManagerMaster: Registered BlockManager

// :: INFO cluster.SparkDeploySchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

// :: INFO repl.SparkILoop: Created spark context..

Spark context available as sc.

// :: INFO hive.HiveContext: Initializing execution hive, version 0.13.

// :: INFO metastore.HiveMetaStore: : Opening raw store with implemenation class:org.apache.hadoop.hive.metastore.ObjectStore

// :: INFO metastore.ObjectStore: ObjectStore, initialize called

// :: INFO DataNucleus.Persistence: Property datanucleus.cache.level2 unknown - will be ignored

// :: INFO DataNucleus.Persistence: Property hive.metastore.integral.jdo.pushdown unknown - will be ignored

// :: INFO cluster.SparkDeploySchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@192.168.244.147:46741/user/Executor#-2043358626]) with ID 0

// :: WARN DataNucleus.Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)

// :: INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.244.147: with 265.4 MB RAM, BlockManagerId(, 192.168.244.147, )

// :: WARN DataNucleus.Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)

// :: INFO metastore.ObjectStore: Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

// :: INFO metastore.MetaStoreDirectSql: MySQL check failed, assuming we are not on mysql: Lexical error at line , column . Encountered: "@" (), after : "".

// :: INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

// :: INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

// :: INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MFieldSchema" is tagged as "embedded-only" so does not have its own datastore table.

// :: INFO DataNucleus.Datastore: The class "org.apache.hadoop.hive.metastore.model.MOrder" is tagged as "embedded-only" so does not have its own datastore table.

// :: INFO metastore.ObjectStore: Initialized ObjectStore

// :: WARN metastore.ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 0.13.1aa

// :: INFO metastore.HiveMetaStore: Added admin role in metastore

// :: INFO metastore.HiveMetaStore: Added public role in metastore

// :: INFO metastore.HiveMetaStore: No user is added in admin role, since config is empty

// :: INFO session.SessionState: No Tez session required at this point. hive.execution.engine=mr.

// :: INFO repl.SparkILoop: Created sql context (with Hive support)..

SQL context available as sqlContext. scala>

打开spark shell以后,可以写一个简单的程序,say hello to the world

scala> println("helloworld")

helloworld

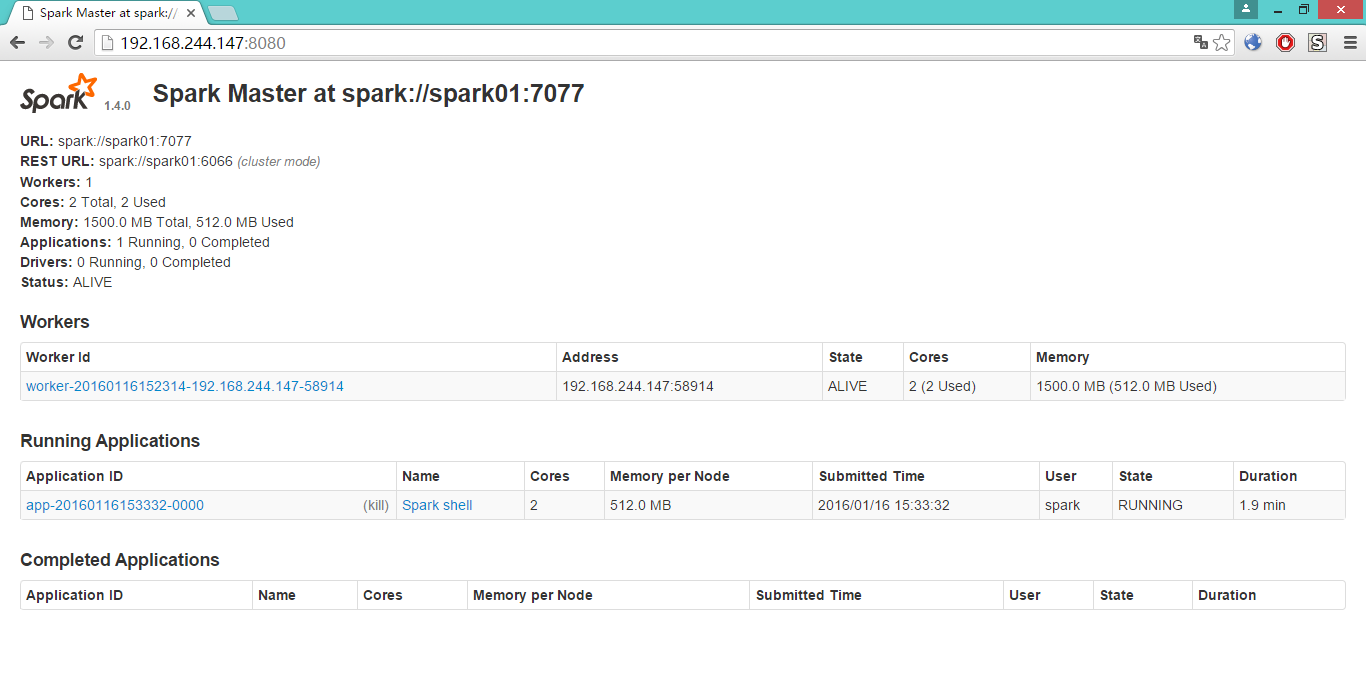

再来看看spark的web管理界面,可以看出,多了一个Workders和Running Applications的信息

至此,Spark的伪分布式环境搭建完毕,

有以下几点需要注意:

1. 上述中的Maven和SBT是非必须的,只是为了后续的源码编译,所以,如果只是单纯的搭建Spark环境,可不用下载Maven和SBT。

2. 该Spark的伪分布式环境其实是集群的基础,只需修改极少的地方,然后copy到slave节点上即可,鉴于篇幅有限,后文再表。

搭建Spark的单机版集群的更多相关文章

- 搭建Spark高可用集群

Spark简介 官网地址:http://spark.apache.org/ Apache Spark™是用于大规模数据处理的统一分析引擎. 从右侧最后一条新闻看,Spark也用于AI人工智能 sp ...

- 高效搭建Spark全然分布式集群

写在前面一: 本文具体总结Spark分布式集群的安装步骤,帮助想要学习Spark的技术爱好者高速搭建Spark的学习研究环境. 写在前面二: 使用软件说明 约定,Spark相关软件存放文件夹:/usr ...

- 基于 ZooKeeper 搭建 Spark 高可用集群

一.集群规划 二.前置条件 三.Spark集群搭建 3.1 下载解压 3.2 配置环境变量 3.3 集群配置 3.4 安装包分发 四.启 ...

- Spark学习之路(七)—— 基于ZooKeeper搭建Spark高可用集群

一.集群规划 这里搭建一个3节点的Spark集群,其中三台主机上均部署Worker服务.同时为了保证高可用,除了在hadoop001上部署主Master服务外,还在hadoop002和hadoop00 ...

- Spark 系列(七)—— 基于 ZooKeeper 搭建 Spark 高可用集群

一.集群规划 这里搭建一个 3 节点的 Spark 集群,其中三台主机上均部署 Worker 服务.同时为了保证高可用,除了在 hadoop001 上部署主 Master 服务外,还在 hadoop0 ...

- 入门大数据---基于Zookeeper搭建Spark高可用集群

一.集群规划 这里搭建一个 3 节点的 Spark 集群,其中三台主机上均部署 Worker 服务.同时为了保证高可用,除了在 hadoop001 上部署主 Master 服务外,还在 hadoop0 ...

- Spark高可用集群搭建

Spark高可用集群搭建 node1 node2 node3 1.node1修改spark-env.sh,注释掉hadoop(就不用开启Hadoop集群了),添加如下语句 export ...

- spark教程(一)-集群搭建

spark 简介 建议先阅读我的博客 大数据基础架构 spark 一个通用的计算引擎,专门为大规模数据处理而设计,与 mapreduce 类似,不同的是,mapreduce 把中间结果 写入 hdfs ...

- CentOS7.5搭建spark2.3.1集群

一 下载安装包 1 官方下载 官方下载地址:http://spark.apache.org/downloads.html 2 安装前提 Java8 安装成功 zookeeper 安 ...

随机推荐

- JS中原型链继承

当我们通过构造函数A来实现一项功能的时候,而构造函数B中需要用到构造函数A中的属性或者方法,如果我们对B中的属性或者方法进行重写就会出现冗杂的代码,同时写出来也很是麻烦.而在js中每个函数都有个原型, ...

- uploadify批量上传

js: $("#uploadify").uploadify({ 'uploader':'uploadServlet', 'swf':'image/uploadify.swf', ' ...

- dell笔记本三个系统,ubuntu16.04更新,boot分区容量不足解决办法

本人自己dell物理机上安装windows 7 .centos 1704 和ubuntu1604 三个系统的,分区当时没有使用lVM,boot单独挂/dev/sda7 分区,只有200M,随着2次li ...

- 用.net在画出镂空图片

最近的一个项目需要用到这个东西,冥思苦想了好几天.还是在同事的帮助下,完成此项难题,希望能够帮助以后的博友们 ! 废话不多说,先看看效果图吧. 首先写一下讲一下思路,首先画一张图,当你的背景,然后在图 ...

- Hadoop_常用存储与压缩格式

HDFS文件格式 file_format: TEXTFILE 默认格式 RCFILE hive 0.6.0 和以后的版本 ORC hive 0.11.0 和以后的版本 PARQUET hive 0.1 ...

- Python之路Day17-jQuery

本节内容: jQuery 参考:http://jquery.cuishifeng.cn/ 模块 <==>类库 Dom/Bom/JavaScript的类库 版本:1.x 1.12 2. ...

- C#上位机制作之串口接受数据(利用接受事件)

前面设计好了界面,现在就开始写代码了,首先定义一个串口对象.. SerialPort serialport = new SerialPort();//定义串口对象 添加串口扫描函数,扫描出来所有可用串 ...

- 使用CSS3制作三角形小图标

话不多说,直接写代码,希望能够对大家有所帮助! 1.html代码如下: <a href="#" class="usetohover"> <di ...

- <2048>调查报告心得与体会

老师这次给我们布置了一个任务,就是让我们写一份属于自己的调查报告,针对这个任务,我们小组的六个人通过积极的讨论,提出了一些关于我们产品的问题,当然这些问题并不是很全面,因为我们是从自己的角度出发,无法 ...

- 通过jquery js 实现幻灯片切换轮播效果

观察各个电商网址轮播图的效果,总结了一下主要突破点与难点 1.->封装函数的步骤与具体实现 2->this关键字的指向 3->jquery js函数熟练运用 如animate 4-& ...