吴裕雄 python 机器学习-DMT(1)

import numpy as np

import operator as op from math import log def createDataSet():

dataSet = [[1, 1, 'yes'],

[1, 1, 'yes'],

[1, 0, 'no'],

[0, 1, 'no'],

[0, 1, 'no']]

labels = ['no surfacing','flippers']

return dataSet, labels dataSet,labels = createDataSet()

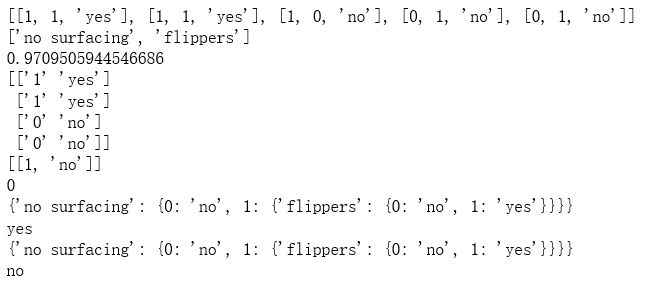

print(dataSet)

print(labels) def calcShannonEnt(dataSet):

labelCounts = {}

for featVec in dataSet:

currentLabel = featVec[-1]

if(currentLabel not in labelCounts.keys()):

labelCounts[currentLabel] = 0

labelCounts[currentLabel] += 1

shannonEnt = 0.0

rowNum = len(dataSet)

for key in labelCounts:

prob = float(labelCounts[key])/rowNum

shannonEnt -= prob * log(prob,2)

return shannonEnt shannonEnt = calcShannonEnt(dataSet)

print(shannonEnt) def splitDataSet(dataSet, axis, value):

retDataSet = []

for featVec in dataSet:

if(featVec[axis] == value):

reducedFeatVec = featVec[:axis]

reducedFeatVec.extend(featVec[axis+1:])

retDataSet.append(reducedFeatVec)

return retDataSet retDataSet = splitDataSet(dataSet,1,1)

print(np.array(retDataSet))

retDataSet = splitDataSet(dataSet,1,0)

print(retDataSet) def chooseBestFeatureToSplit(dataSet):

numFeatures = np.shape(dataSet)[1]-1

baseEntropy = calcShannonEnt(dataSet)

bestInfoGain = 0.0

bestFeature = -1

for i in range(numFeatures):

featList = [example[i] for example in dataSet]

uniqueVals = set(featList)

newEntropy = 0.0

for value in uniqueVals:

subDataSet = splitDataSet(dataSet, i, value)

prob = len(subDataSet)/float(len(dataSet))

newEntropy += prob * calcShannonEnt(subDataSet)

infoGain = baseEntropy - newEntropy

if (infoGain > bestInfoGain):

bestInfoGain = infoGain

bestFeature = i

return bestFeature bestFeature = chooseBestFeatureToSplit(dataSet)

print(bestFeature) def majorityCnt(classList):

classCount={}

for vote in classList:

if(vote not in classCount.keys()):

classCount[vote] = 0

classCount[vote] += 1

sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)

return sortedClassCount[0][0] def createTree(dataSet,labels):

classList = [example[-1] for example in dataSet]

if(classList.count(classList[0]) == len(classList)):

return classList[0]

if len(dataSet[0]) == 1:

return majorityCnt(classList)

bestFeat = chooseBestFeatureToSplit(dataSet)

bestFeatLabel = labels[bestFeat]

myTree = {bestFeatLabel:{}}

del(labels[bestFeat])

featValues = [example[bestFeat] for example in dataSet]

uniqueVals = set(featValues)

for value in uniqueVals:

subLabels = labels[:]

myTree[bestFeatLabel][value] = createTree(splitDataSet(dataSet, bestFeat, value),subLabels)

return myTree myTree = createTree(dataSet,labels)

print(myTree) def classify(inputTree,featLabels,testVec):

for i in inputTree.keys():

firstStr = i

break

secondDict = inputTree[firstStr]

featIndex = featLabels.index(firstStr)

key = testVec[featIndex]

valueOfFeat = secondDict[key]

if isinstance(valueOfFeat, dict):

classLabel = classify(valueOfFeat, featLabels, testVec)

else:

classLabel = valueOfFeat

return classLabel featLabels = ['no surfacing', 'flippers']

classLabel = classify(myTree,featLabels,[1,1])

print(classLabel) import pickle def storeTree(inputTree,filename):

fw = open(filename,'wb')

pickle.dump(inputTree,fw)

fw.close() def grabTree(filename):

fr = open(filename,'rb')

return pickle.load(fr) filename = "D:\\mytree.txt"

storeTree(myTree,filename)

mySecTree = grabTree(filename)

print(mySecTree) featLabels = ['no surfacing', 'flippers']

classLabel = classify(mySecTree,featLabels,[0,0])

print(classLabel)

吴裕雄 python 机器学习-DMT(1)的更多相关文章

- 吴裕雄 python 机器学习-DMT(2)

import matplotlib.pyplot as plt decisionNode = dict(boxstyle="sawtooth", fc="0.8" ...

- 吴裕雄 python 机器学习——分类决策树模型

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets from sklearn.model_s ...

- 吴裕雄 python 机器学习——回归决策树模型

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets from sklearn.model_s ...

- 吴裕雄 python 机器学习——线性判断分析LinearDiscriminantAnalysis

import numpy as np import matplotlib.pyplot as plt from matplotlib import cm from mpl_toolkits.mplot ...

- 吴裕雄 python 机器学习——逻辑回归

import numpy as np import matplotlib.pyplot as plt from matplotlib import cm from mpl_toolkits.mplot ...

- 吴裕雄 python 机器学习——ElasticNet回归

import numpy as np import matplotlib.pyplot as plt from matplotlib import cm from mpl_toolkits.mplot ...

- 吴裕雄 python 机器学习——Lasso回归

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets, linear_model from s ...

- 吴裕雄 python 机器学习——岭回归

import numpy as np import matplotlib.pyplot as plt from sklearn import datasets, linear_model from s ...

- 吴裕雄 python 机器学习——线性回归模型

import numpy as np from sklearn import datasets,linear_model from sklearn.model_selection import tra ...

随机推荐

- visual studio 2017调试时闪退。

解决方案: 在工程上右键--->属性--->配置属性--->连接器--->系统--->子系统(在窗口右边)--->下拉框选择控制台(/SUBSYSTEM:CONSO ...

- HBase原理和架构

HBase是什么 HBase在生态体系中的位置 HBase vs HDFS HBase表的特点 HBase是真正的分布式存储,存储级别达到TB级别,而才传统数据库就不是真正的分布式了,传统数据库在底层 ...

- Java 3-Java 基本数据类型

Java 基本数据类型 变量就是申请内存来存储值.也就是说,当创建变量的时候,需要在内存中申请空间. 内存管理系统根据变量的类型为变量分配存储空间,分配的空间只能用来储存该类型数据. 因此,通过定义不 ...

- Android Activity传递数据使用getIntent()接收不到,揭秘Intent传递数据与Activity启动模式singleTask的关系。

activity通过intent传递数据的时候,如果activity未启动,那么在这个刚启动的activity里通过getIntent()会获取到这个intent的数据.. 如果要启动的activit ...

- BZOJ2560串珠子

/* 很清新的一道题(相比上一道题) g[S]表示该 S集合中胡乱连的所有方案数, f[S] 表示S集合的答案 那么F[S] 等于G[S]减去不合法的部分方案 不合法的方案就枚举合法的部分就好了 g[ ...

- linux&php:ubuntu安装php-7.2

1.下载php源码,地址:http://www.php.net/downloads.php 这里下载的是tar.gz的包 2.解压安装 将安装包解压到/usr/local/php 安装C的编译工具 s ...

- 在虚拟机中安装ubuntu

初始安装: 1.安装新虚拟机时,选择稍后安装操作系统,这可以自己设置语言等信息 2.修改自定义硬件:为网卡生成一个mac地址,(这里需要注意,有时网卡会冲突,导致连接时好时坏,以后可以删除掉网卡,重新 ...

- ORACLE 归档日志打开关闭方法

一 设置为归档方式 1 sql> archive log list; #查看是不是归档方式 2 sql> alter system set log_archive_start=true ...

- spring boot 自定义视图路径

boot 自定义访问视图路径 . 配置文件 目录结构 启动类: html页面 访问: 覆盖boot默认路径引用. 如果没有重新配置,则在pom引用模板. 修改配置文件. 注意一定要编译工程

- 《汇编语言 基于x86处理器》第十三章高级语言接口部分的代码 part 2

▶ 书中第十三章的程序,主要讲了汇编语言和 C/++ 相互调用的方法 ● 代码,汇编中调用 C++ 函数 ; subr.asm INCLUDE Irvine32.inc askForInteger P ...