Hadoop Ecosystem: A Detailed Guide to the ZooKeeper API

Author: 尹正杰

Copyright notice: this is an original work. Reproduction is not permitted, and violators will be held legally liable.

I. Preparation Before Testing

1>. Start the cluster

[yinzhengjie@s101 ~]$ more `which xzk.sh`

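    #!/bin/bash
    #@author :yinzhengjie
    #blog:http://www.cnblogs.com/yinzhengjie
    #EMAIL:y1053419035@qq.com

    # Check that the user passed exactly one argument
    if [ $# -ne 1 ]; then
        echo "无效参数,用法为: $0 {start|stop|restart|status}"    # "Invalid argument, usage: ..."
        exit 1
    fi

    # Store the command supplied by the user
    cmd=$1

    # Dispatch on the command
    function zookeeperManger(){
        case $cmd in
        start)
            echo "启动服务"    # "Starting service"
            remoteExecution start
            ;;
        stop)
            echo "停止服务"    # "Stopping service"
            remoteExecution stop
            ;;
        restart)
            echo "重启服务"    # "Restarting service"
            remoteExecution restart
            ;;
        status)
            echo "查看状态"    # "Checking status"
            remoteExecution status
            ;;
        *)
            echo "无效参数,用法为: $0 {start|stop|restart|status}"
            ;;
        esac
    }

    # Run zkServer.sh with the given command on each ZooKeeper host (s102 to s104)
    function remoteExecution(){
        for (( i=102 ; i<=104 ; i++ )) ; do
            # The color codes were lost from the original listing;
            # 2 (green) and 7 (white) are assumed here
            tput setaf 2
            echo ========== s$i zkServer.sh $1 ================
            tput setaf 7
            ssh s$i "source /etc/profile ; zkServer.sh $1"
        done
    }

    # Call the function
    zookeeperManger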

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ xzk.sh start

启动服务

========== s102 zkServer.sh start ================

ZooKeeper JMX enabled by default

Using config: /soft/zk/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

========== s103 zkServer.sh start ================

ZooKeeper JMX enabled by default

Using config: /soft/zk/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

========== s104 zkServer.sh start ================

ZooKeeper JMX enabled by default

Using config: /soft/zk/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[yinzhengjie@s101 ~]$ xcall.sh jps

============= s101 jps ============

Jps

命令执行成功

============= s102 jps ============

Jps

QuorumPeerMain

命令执行成功

============= s103 jps ============

Jps

QuorumPeerMain

命令执行成功

============= s104 jps ============

Jps

QuorumPeerMain

命令执行成功

============= s105 jps ============

Jps

命令执行成功

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ start-dfs.sh

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Starting namenodes on [s101 s105]

s101: starting namenode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-namenode-s101.out

s105: starting namenode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-namenode-s105.out

s103: starting datanode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-datanode-s103.out

s102: starting datanode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-datanode-s102.out

s105: starting datanode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-datanode-s105.out

s104: starting datanode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-datanode-s104.out

Starting journal nodes [s102 s103 s104]

s102: starting journalnode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-journalnode-s102.out

s103: starting journalnode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-journalnode-s103.out

s104: starting journalnode, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-journalnode-s104.out

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/soft/hadoop-2.7./share/hadoop/common/lib/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/soft/apache-hive-2.1.-bin/lib/log4j-slf4j-impl-2.4..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Starting ZK Failover Controllers on NN hosts [s101 s105]

s101: starting zkfc, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-zkfc-s101.out

s105: starting zkfc, logging to /soft/hadoop-2.7./logs/hadoop-yinzhengjie-zkfc-s105.out

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ xcall.sh jps

============= s101 jps ============

Jps

DFSZKFailoverController

命令执行成功

============= s102 jps ============

Jps

DataNode

QuorumPeerMain

JournalNode

命令执行成功

============= s103 jps ============

DataNode

JournalNode

Jps

QuorumPeerMain

命令执行成功

============= s104 jps ============

JournalNode

Jps

DataNode

QuorumPeerMain

命令执行成功

============= s105 jps ============

DFSZKFailoverController

DataNode

Jps

命令执行成功

[yinzhengjie@s101 ~]$

2>. Add the Maven dependencies

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>cn.org.yinzhengjie</groupId>
        <artifactId>MyZookeep</artifactId>
        <version>1.0-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>junit</groupId>
                <artifactId>junit</artifactId>
                <version>4.12</version>
            </dependency>

            <dependency>
                <groupId>org.apache.zookeeper</groupId>
                <artifactId>zookeeper</artifactId>
                <version>3.4.6</version>
            </dependency>
        </dependencies>
    </project>

II. Common ZooKeeper API Methods

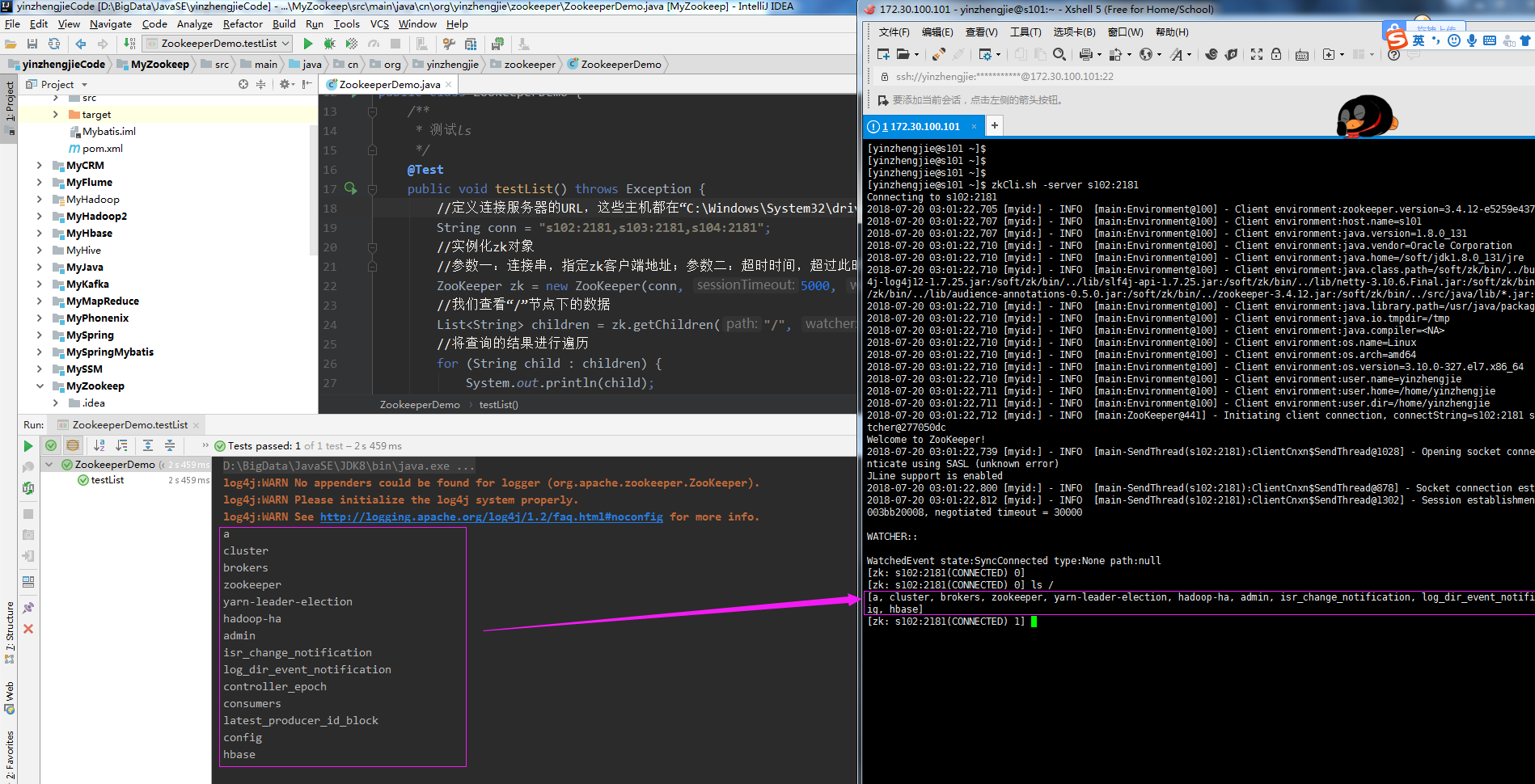

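1>. Testing the ls command (listing child nodes)

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;

    import java.util.List;

    public class ZookeeperDemo {
        /**
         * Test ls: list the children of a node
         */
        @Test
        public void testList() throws Exception {
            // Connection string for the ZooKeeper servers. These hostnames are mapped in
            // "C:\Windows\System32\drivers\etc\HOSTS" on the client machine.
            String conn = "s102:2181,s103:2181,s104:2181";
            // Create the ZooKeeper client.
            // Argument 1: connection string, a comma-separated list of host:port pairs of the ZooKeeper servers
            // Argument 2: session timeout in milliseconds
            // Argument 3: default Watcher; null here because this test does not handle events
            ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            // List the children of the "/" node
            List<String> children = zk.getChildren("/", null);
            // Print each child
            for (String child : children) {
                System.out.println(child);
            }
            // Release the session
            zk.close();
        }
    }

The code above produces the following output: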

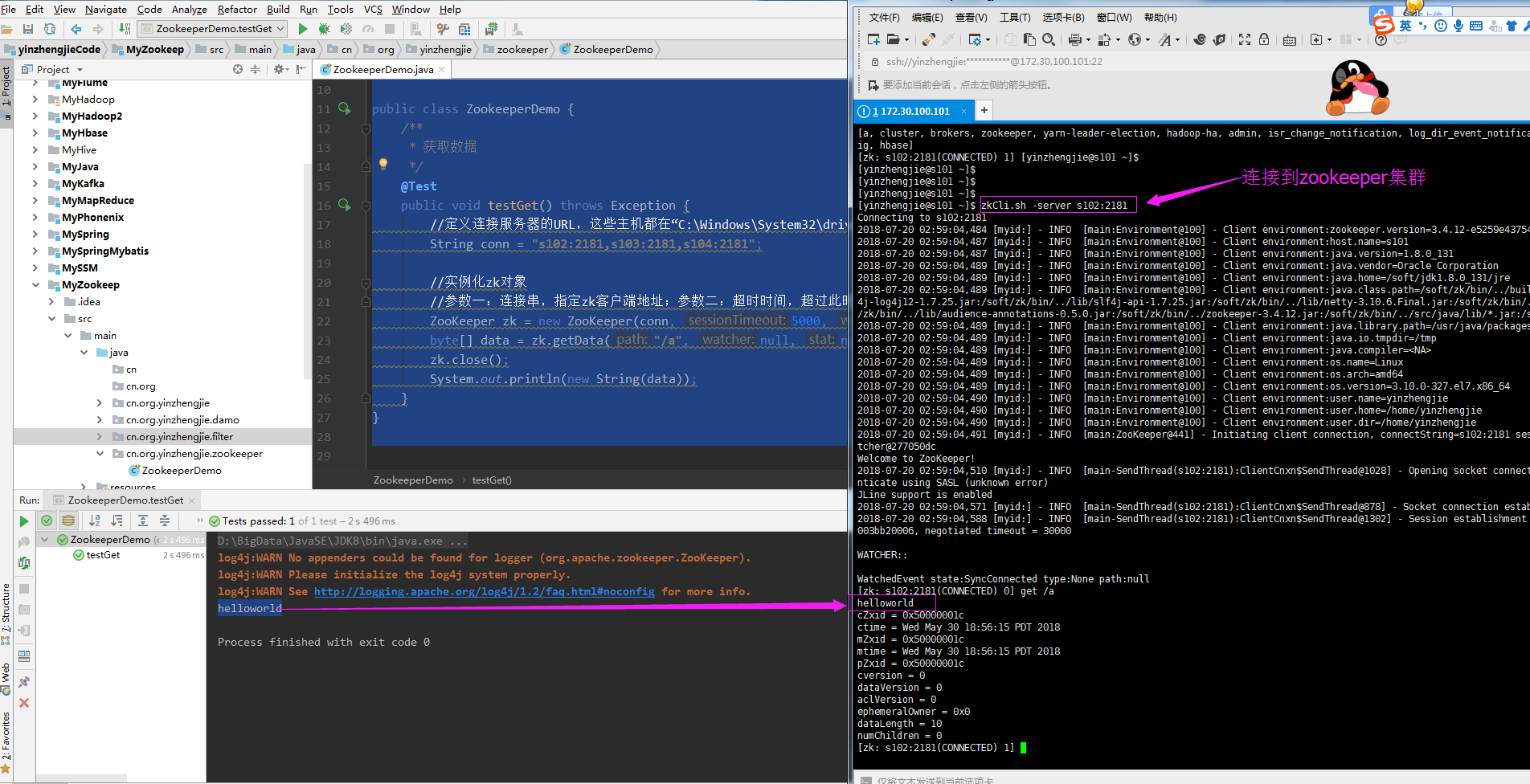

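2>. Reading a node's data

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;

    public class ZookeeperDemo {
        /**
         * Read the data stored in a node
         */
        @Test
        public void testGet() throws Exception {
            // Connection string for the ZooKeeper servers. These hostnames are mapped in
            // "C:\Windows\System32\drivers\etc\HOSTS" on the client machine.
            String conn = "s102:2181,s103:2181,s104:2181";
            // Create the ZooKeeper client.
            // Argument 1: connection string, a comma-separated list of host:port pairs of the ZooKeeper servers
            // Argument 2: session timeout in milliseconds
            // Argument 3: default Watcher; null here because this test does not handle events
            ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            // Read the data of the "/a" node (the node must already exist); no watcher, no Stat output
            byte[] data = zk.getData("/a", null, null);
            zk.close();
            System.out.println(new String(data));
        }
    }

The code above produces the following output: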

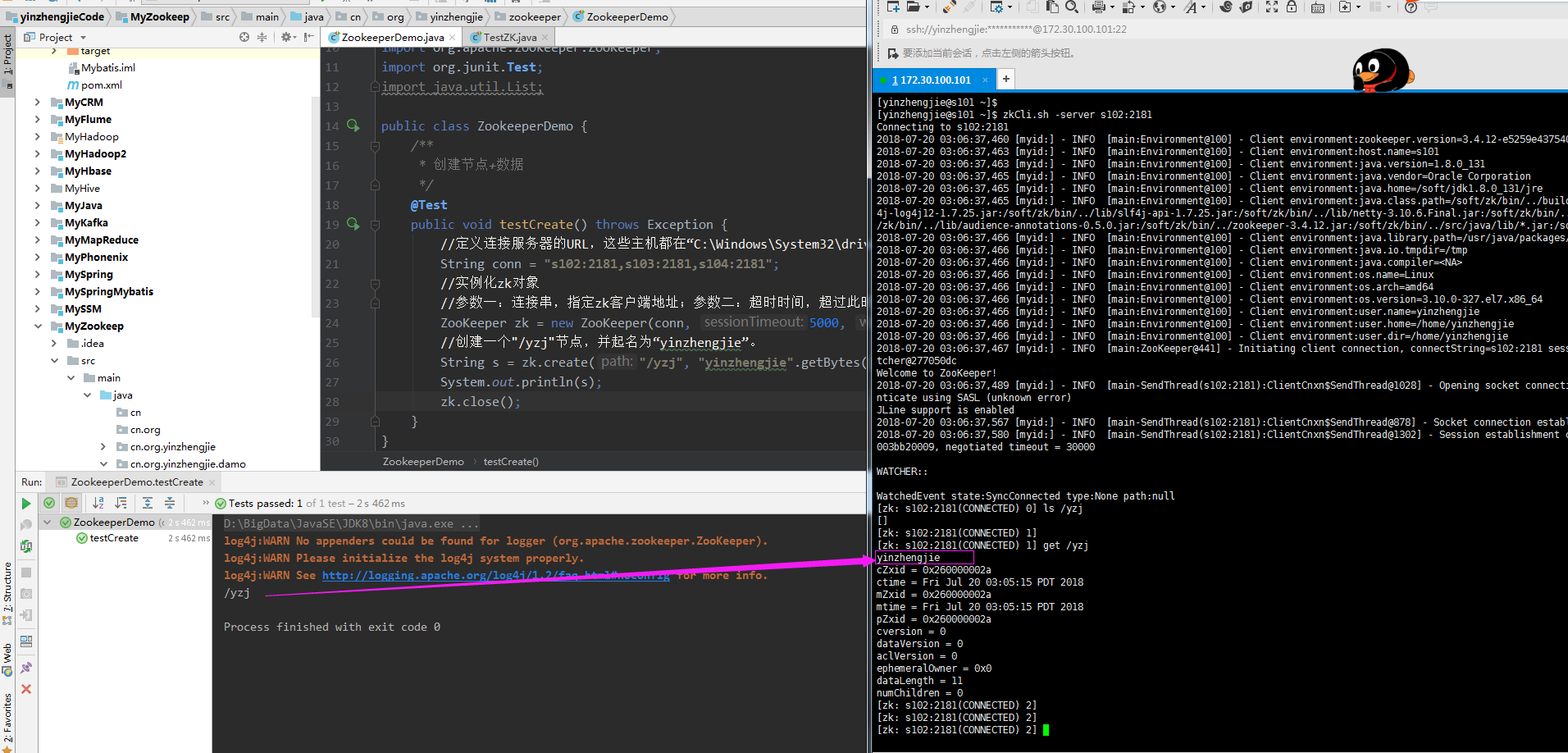
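A znode stores raw bytes, and the test above decodes them with `new String(data)`, which relies on the platform default charset. When the client runs on Windows and other clients run on Linux, the defaults may differ. The following is a minimal sketch, assuming UTF-8 is the agreed encoding; `ZnodeText`, `encode`, and `decode` are hypothetical helper names for illustration and are not part of the ZooKeeper API.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of the ZooKeeper API: converts between
// String values and the raw byte[] payload that a znode stores.
class ZnodeText {

    // Encode text as UTF-8 before passing it to a call such as zk.create(...)
    static byte[] encode(String text) {
        return text.getBytes(StandardCharsets.UTF_8);
    }

    // Decode bytes returned by a call such as zk.getData(...).
    // A node may carry no data, so null is mapped to an empty string
    // instead of letting new String(null) fail.
    static String decode(byte[] data) {
        return data == null ? "" : new String(data, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[] stored = encode("yinzhengjie");
        System.out.println(stored.length);    // 11 ASCII characters, one byte each
        System.out.println(decode(stored));   // yinzhengjie
    }
}
```

With such a helper, the last line of the test could read `System.out.println(ZnodeText.decode(data));`, which behaves the same on every platform.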

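3>. Creating a node with data

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.CreateMode;
    import org.apache.zookeeper.ZooDefs;
    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;

    public class ZookeeperDemo {
        /**
         * Create a node and write data to it
         */
        @Test
        public void testCreate() throws Exception {
            // Connection string for the ZooKeeper servers. These hostnames are mapped in
            // "C:\Windows\System32\drivers\etc\HOSTS" on the client machine.
            String conn = "s102:2181,s103:2181,s104:2181";
            // Create the ZooKeeper client.
            // Argument 1: connection string, a comma-separated list of host:port pairs of the ZooKeeper servers
            // Argument 2: session timeout in milliseconds
            // Argument 3: default Watcher; null here because this test does not handle events
            ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            // Create the persistent node "/yzj" and write "yinzhengjie" as its data.
            // The node must not already exist in the cluster, otherwise
            // KeeperException.NodeExistsException is thrown.
            String s = zk.create("/yzj", "yinzhengjie".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);
            System.out.println(s);
            zk.close();
        }
    }

create returns the path of the new node, so on success the code above prints /yzj.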

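4>. Deleting a node

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;

    public class ZookeeperDemo {
        /**
         * Delete a node
         */
        @Test
        public void testDelete() throws Exception {
            String conn = "s102:2181,s103:2181,s104:2181";
            // Create the ZooKeeper client.
            // Argument 1: connection string, a comma-separated list of host:port pairs of the ZooKeeper servers
            // Argument 2: session timeout in milliseconds
            // Argument 3: default Watcher; null here because this test does not handle events
            ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            // Delete the "/yzj" node. The node must exist, otherwise
            // KeeperException$NoNodeException is thrown.
            // The second argument is the expected version; -1 matches any version.
            zk.delete("/yzj", -1);
            zk.close();
        }
    }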

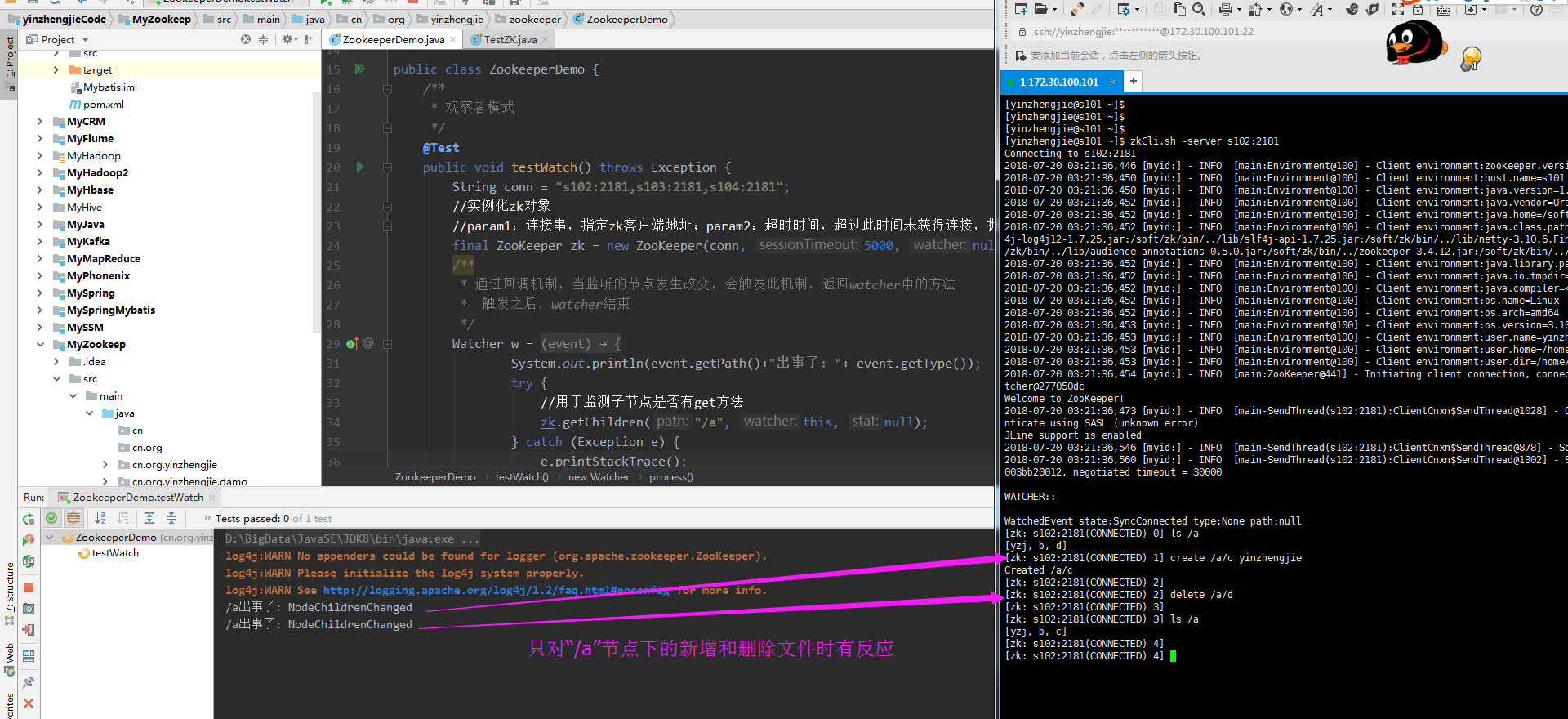
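The second argument of `delete` in the test above is the expected data version of the node, and -1 means "match any version". As a rough illustration of that check only, here is a toy in-memory model; `VersionedStore` and its methods are hypothetical names, and the class does not talk to ZooKeeper at all. Where the model returns `false`, the real client throws `KeeperException.NoNodeException` or `KeeperException.BadVersionException`.

```java
import java.util.HashMap;
import java.util.Map;

// Toy in-memory model of versioned nodes; NOT the ZooKeeper client or server.
// It only illustrates how an expected version guards a delete.
class VersionedStore {
    private final Map<String, Integer> versions = new HashMap<>();

    // A new node starts at data version 0
    void create(String path) {
        versions.put(path, 0);
    }

    // Each data update on an existing node increments its version
    void setData(String path) {
        versions.computeIfPresent(path, (p, v) -> v + 1);
    }

    // Delete succeeds only if the node exists and expectedVersion is -1
    // (match any version) or equals the node's current version.
    boolean delete(String path, int expectedVersion) {
        Integer current = versions.get(path);
        if (current == null) {
            return false;   // the real API throws NoNodeException here
        }
        if (expectedVersion != -1 && expectedVersion != current) {
            return false;   // the real API throws BadVersionException here
        }
        versions.remove(path);
        return true;
    }

    public static void main(String[] args) {
        VersionedStore store = new VersionedStore();
        store.create("/yzj");
        store.setData("/yzj");                          // version is now 1
        System.out.println(store.delete("/yzj", 0));    // false: stale version
        System.out.println(store.delete("/yzj", -1));   // true: -1 matches any version
    }
}
```

Passing a concrete version instead of -1 is useful when a node should be removed only if nobody has modified it since it was last read.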

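5>. Watcher (observer pattern): a small example that monitors the children of a node

    /*
    @author :yinzhengjie
    Blog:http://www.cnblogs.com/yinzhengjie/tag/Hadoop%E7%94%9F%E6%80%81%E5%9C%88/
    EMAIL:y1053419035@qq.com
    */
    package cn.org.yinzhengjie.zookeeper;

    import org.apache.zookeeper.WatchedEvent;
    import org.apache.zookeeper.Watcher;
    import org.apache.zookeeper.ZooKeeper;
    import org.junit.Test;

    public class ZookeeperDemo {
        /**
         * Observer pattern
         */
        @Test
        public void testWatch() throws Exception {
            String conn = "s102:2181,s103:2181,s104:2181";
            // Create the ZooKeeper client.
            // Argument 1: connection string, a comma-separated list of host:port pairs of the ZooKeeper servers
            // Argument 2: session timeout in milliseconds
            // Argument 3: default Watcher; null here because the watcher is registered per call below
            final ZooKeeper zk = new ZooKeeper(conn, 5000, null);
            /*
             * Callback mechanism: when the watched node changes, ZooKeeper invokes the
             * Watcher's process method. A watch is one-shot: once it has fired it is
             * removed, so process registers the watcher again to keep monitoring.
             */
            Watcher w = new Watcher() {
                @Override
                public void process(WatchedEvent event) {
                    System.out.println(event.getPath() + " changed: " + event.getType());
                    try {
                        // Re-register this watcher on the children of "/a". A child watch fires
                        // when a child is created or deleted, not when node data changes.
                        zk.getChildren("/a", this, null);
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                }
            };
            // Register the watcher on the children of "/a" (the node must already exist)
            zk.getChildren("/a", w, null);
            // Keep the test thread alive so the callback can keep firing
            for ( ; ; ) {
                Thread.sleep(1000);
            }
        }
    }

While the test is running, creating or deleting a child of /a (for example from zkCli.sh) prints a line such as "/a changed: NodeChildrenChanged".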
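The comment in the test above notes that a watcher ends once it has been triggered, which is why `process` calls `getChildren` again. To make that one-shot behaviour concrete, here is a small simulation using only the standard library; `OneShotWatches`, `watch`, and `change` are hypothetical names, and the class is a toy model rather than the ZooKeeper client.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Toy model of one-shot watches; NOT the ZooKeeper client.
class OneShotWatches {
    private final Map<String, List<Consumer<String>>> watches = new HashMap<>();

    // Register a callback that fires at most once for the given path
    void watch(String path, Consumer<String> callback) {
        watches.computeIfAbsent(path, p -> new ArrayList<>()).add(callback);
    }

    // Simulate a change: pending watches are removed first and then invoked,
    // so a callback only sees later changes if it registers itself again.
    void change(String path) {
        List<Consumer<String>> fired = watches.remove(path);
        if (fired != null) {
            for (Consumer<String> callback : fired) {
                callback.accept(path);
            }
        }
    }

    public static void main(String[] args) {
        OneShotWatches node = new OneShotWatches();
        List<String> once = new ArrayList<>();
        List<String> always = new ArrayList<>();

        // This callback never re-registers, so it sees only the first change
        node.watch("/a", once::add);
        // This callback re-registers itself, like the Watcher in the test above
        node.watch("/a", new Consumer<String>() {
            @Override
            public void accept(String path) {
                always.add(path);
                node.watch(path, this);
            }
        });

        node.change("/a");
        node.change("/a");
        node.change("/a");
        System.out.println(once.size());     // 1
        System.out.println(always.size());   // 3
    }
}
```

The model leaves out one detail of the real system: a change that happens between the event being delivered and the watch being registered again is not reported, so a real watcher should treat each event as a hint to re-read the current state.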
