k8s-0-Cluster

Docker review

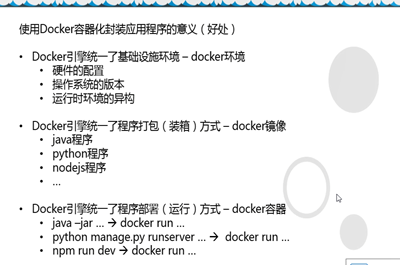

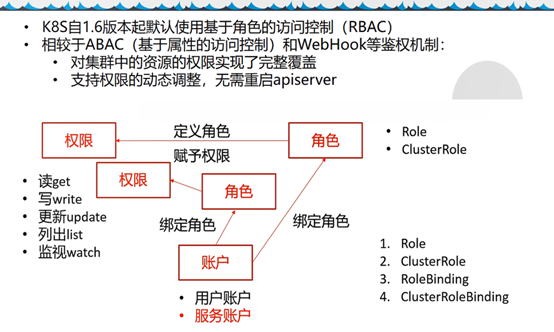

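Benefits of packaging applications in Docker containers

Docker's isolation features are only fully available on kernel 3.8 or later (so CentOS 6 is not recommended)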

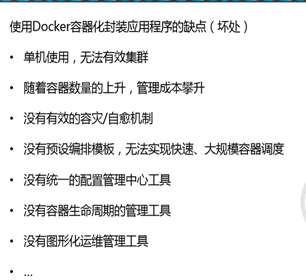

Drawbacks of packaging applications in Docker containers

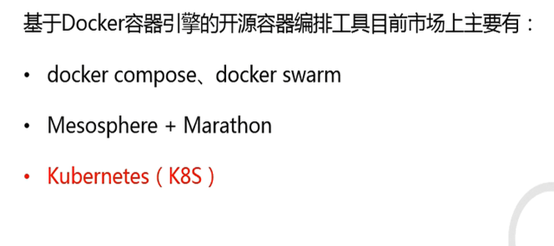

Container orchestration tools

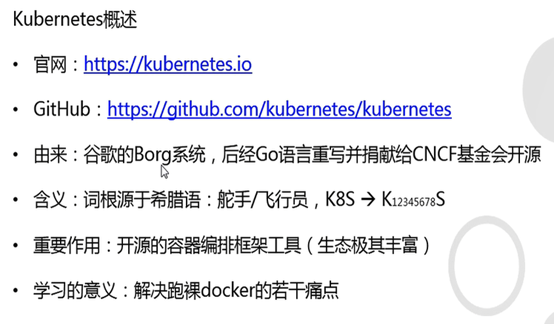

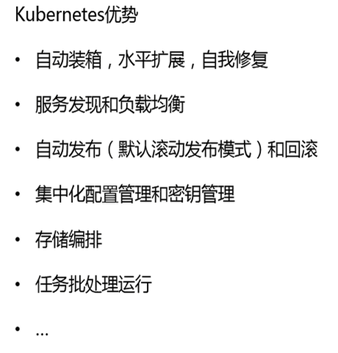

1: K8s overview

Versions 1.16 and later changed significantly, so these notes use version 1.15

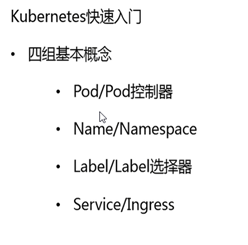

2: K8s quick start

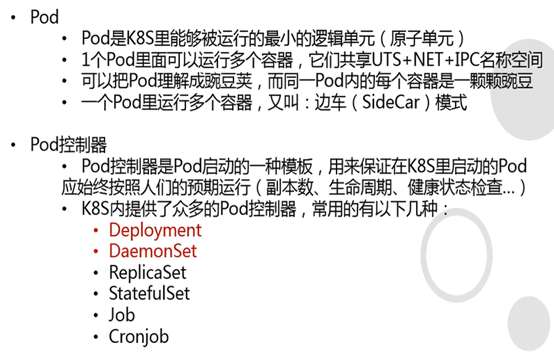

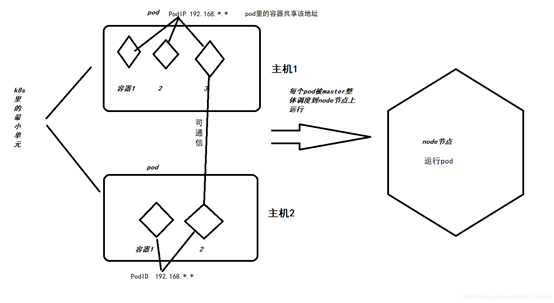

2.1: pod

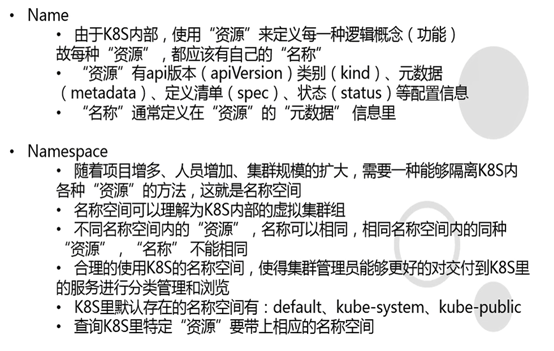

2.2: name namespace

2.3: Label and label selector

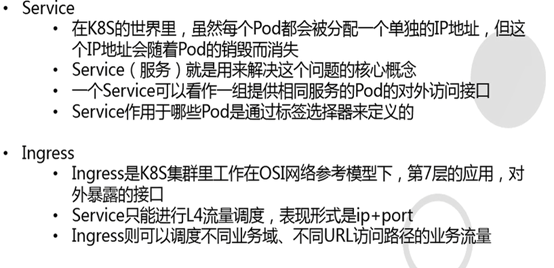

2.4: Service ingress

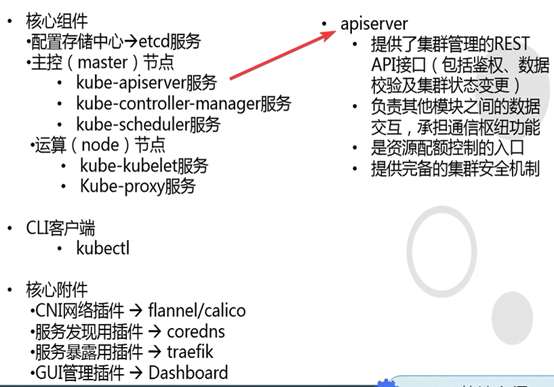

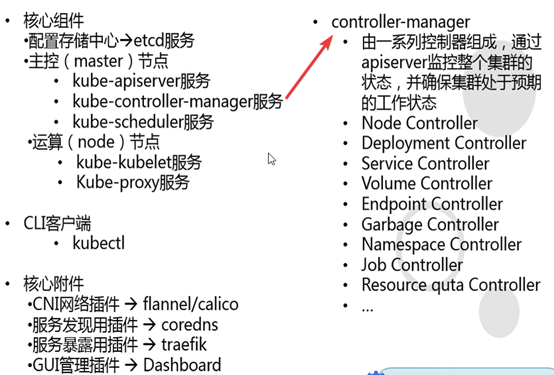

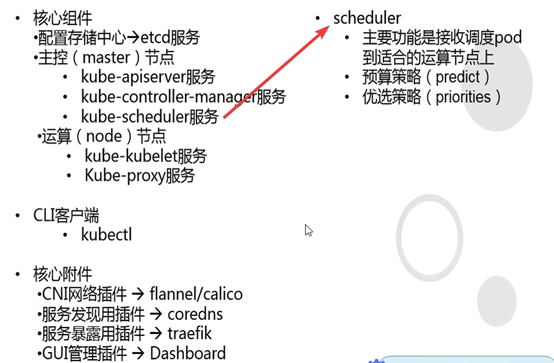

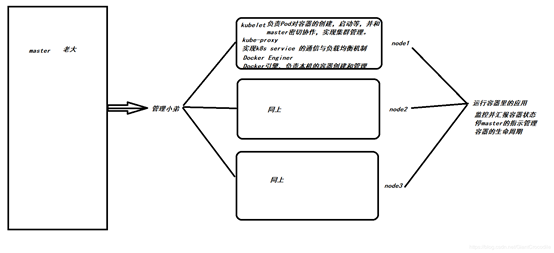

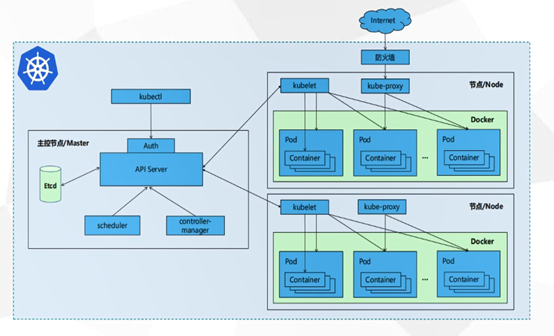

2.5: K8s core components

Apiserver

Controller-manager

Scheduler

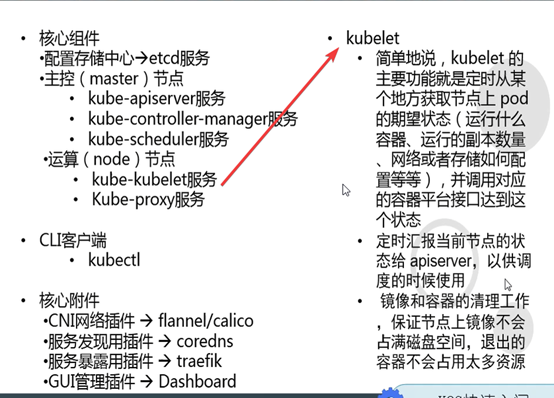

Kubelet

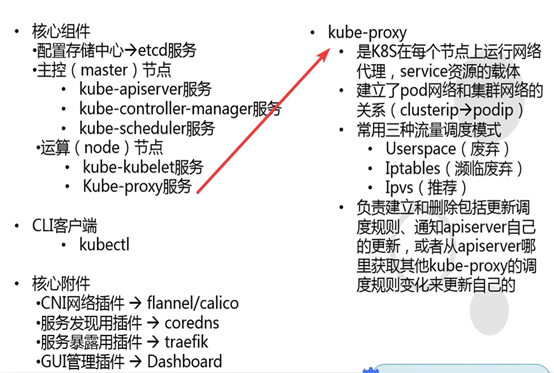

Kube-proxy

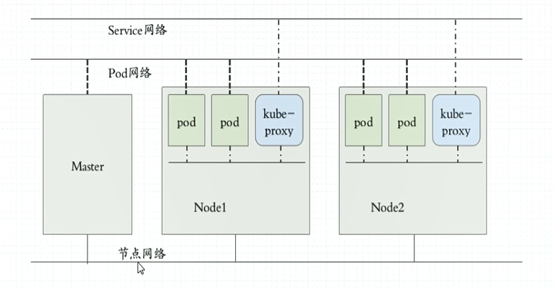

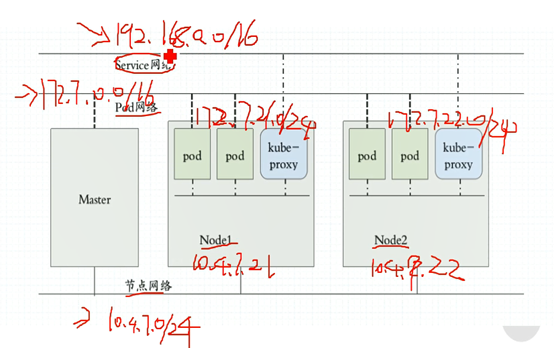

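Three networks

Configure three separate network segments (node, pod and service) so they are easy to tell apart and to troubleshoot

Core component diagram

3: K8s environment preparation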

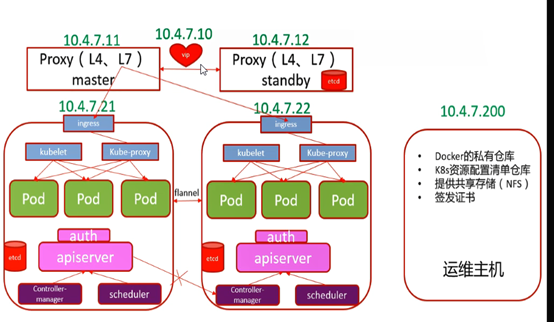

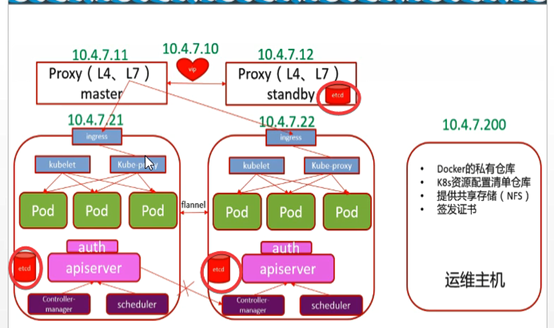

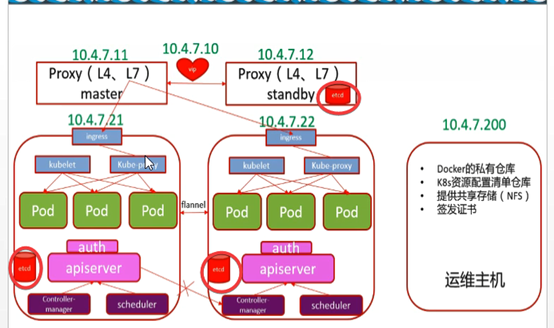

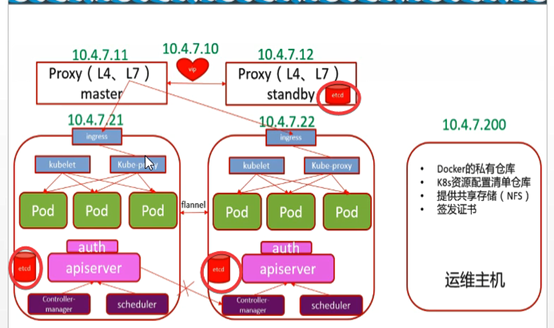

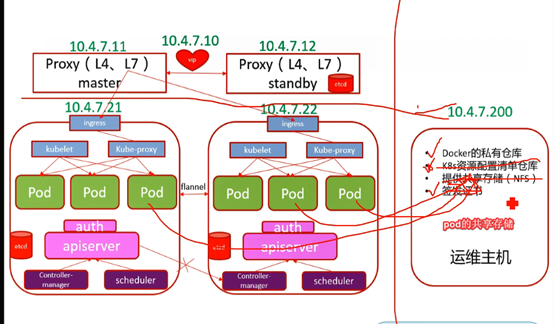

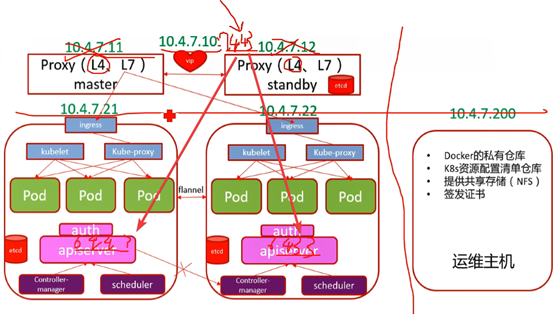

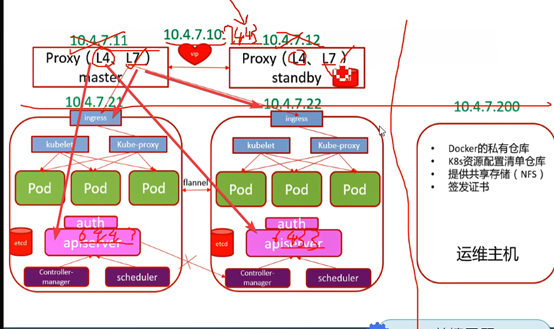

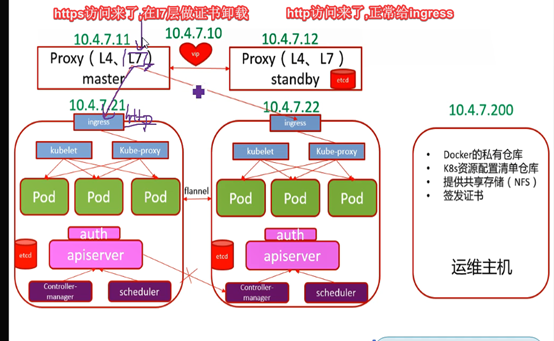

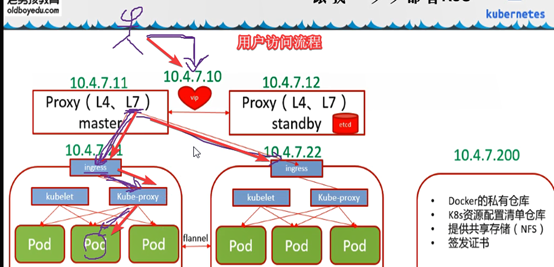

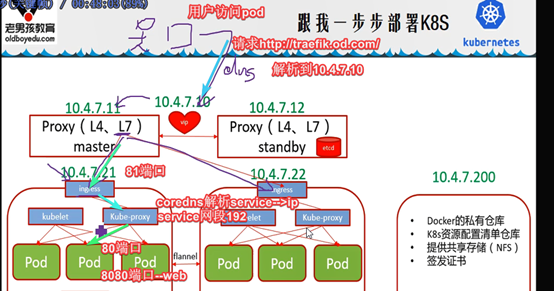

K8s service architecture

Deployment architecture

5 hosts

etcd needs an odd number of members for high availability
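The odd-number rule follows from majority voting: a cluster stays writable only while more than half of its members are up. The sketch below just does that arithmetic; the function names `quorum` and `fault_tolerance` are made up for illustration and are not part of etcd.

```shell
#!/bin/sh
# quorum N: smallest majority of an N-member cluster.
quorum() {
    echo $(( $1 / 2 + 1 ))
}

# fault_tolerance N: how many members may fail while a majority remains.
fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
    echo "members=$n quorum=$(quorum "$n") tolerated_failures=$(fault_tolerance "$n")"
done
```

A 4-member cluster tolerates one failure, the same as a 3-member cluster, which is why the extra even member adds cost without adding resilience.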

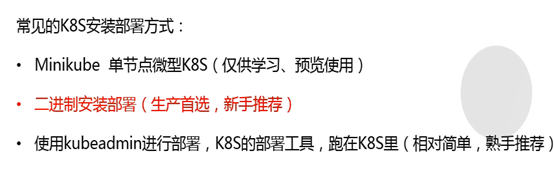

3.1: Installation and deployment methods

kubeadm: not recommended in these notes #its certificates are awkward to renew

Minikube

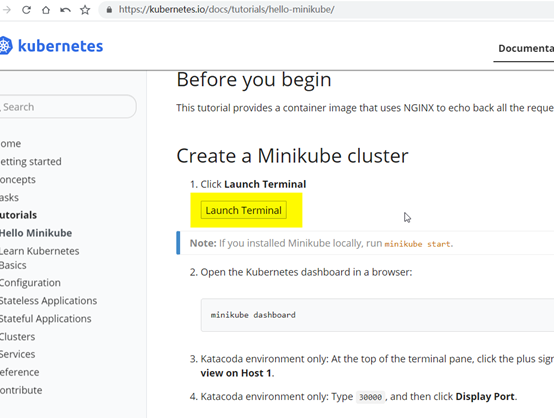

Minikube: try it from the official site

https://kubernetes.io/docs/tutorials/hello-minikube/

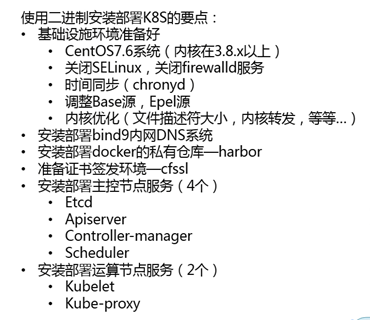

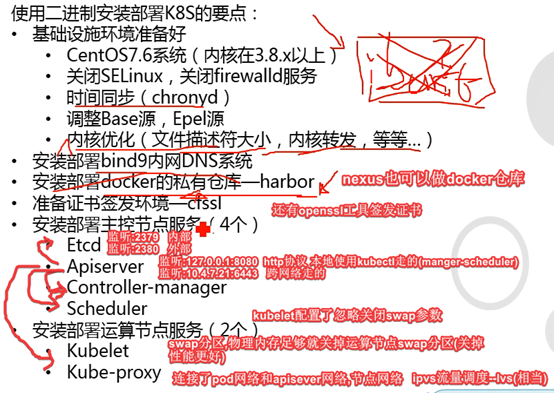

3.2: Installation and deployment (binary)

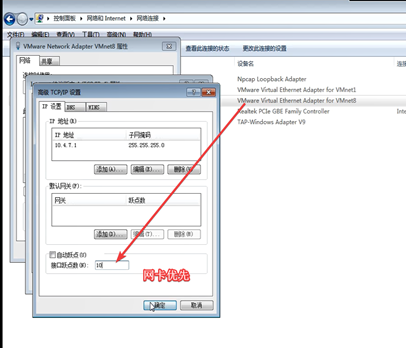

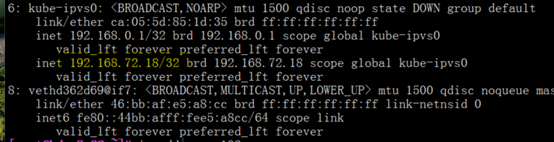

Virtual network adapter settings

| Hostname | Role | IP |
|---|---|---|
| HDSS7-11.host.com | k8s proxy node 1 | 10.4.7.11 |
| HDSS7-12.host.com | k8s proxy node 2 | 10.4.7.12 |
| HDSS7-21.host.com | k8s compute node 1 | 10.4.7.21 |
| HDSS7-22.host.com | k8s compute node 2 | 10.4.7.22 |
| HDSS7-200.host.com | k8s operations node (Docker registry) | 10.4.7.200 |

4: K8s installation and deployment

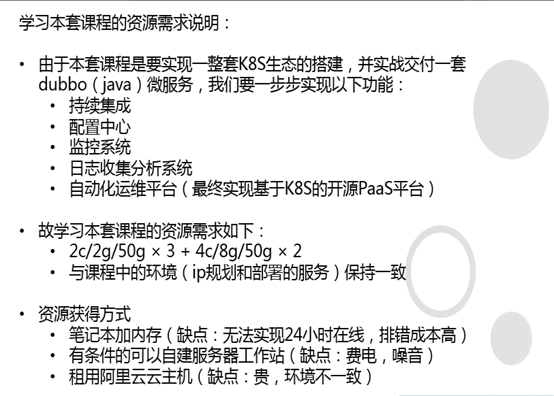

Resource requirements

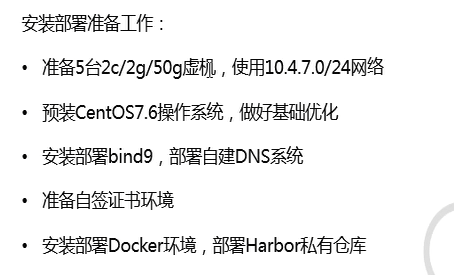

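3.2.1: Server environment preparation

5 servers with the IP addresses listed above, kernel 3.8 or later

Adjust the operating system

Set the hostname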

[root@hdss7-11 ~]# hostnamectl set-hostname hdss7-11.host.com

[root@hdss7-11 ~]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

ONBOOT=yes

IPADDR=10.4.7.11

PREFIX=24

GATEWAY=10.4.7.254

DNS1=10.4.7.254 #DNS can point at the gateway, which provides a DNS service

yum install epel-release -y #install the EPEL repository

#Install the required tools (on all hosts)

yum install wget net-tools telnet tree nmap sysstat lrzsz dos2unix bind-utils -y

3.2.2: Deploy the DNS service

#Install BIND 9

[root@hdss7-11 ~]# yum install bind -y #install on host hdss7-11

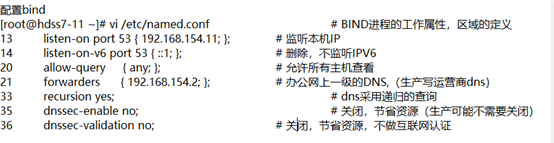

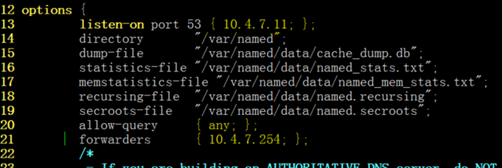

Edit the BIND DNS configuration file

#Whitespace and blank lines matter here; follow the format strictly

[root@hdss7-11 ~]# vim /etc/named.conf

options {

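listen-on port 53 { 10.4.7.11; }; #address (interface) to listen on

directory "/var/named";

allow-query { any; }; #allow DNS queries from any address

forwarders { 10.4.7.254; }; #forward names that cannot be resolved locally to this address (newly added)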

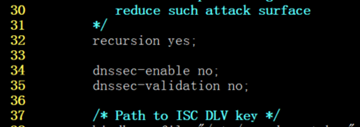

recursion yes; #resolve queries recursively

dnssec-enable no;

dnssec-validation no;

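[root@hdss7-11 ~]# named-checkconf #check the configuration

Configure the zone definitions

Hostname convention: location + IP + functional domain

[root@hdss7-11 ~]# vi /etc/named.rfc1912.zones

#Append at the end of the file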

zone "host.com" IN {

type master;

file "host.com.zone";

allow-update { 10.4.7.11; };

};

zone "od.com" IN {

type master;

file "od.com.zone";

allow-update { 10.4.7.11; };

};

Configure the zone data files

#File names must match those used in the zone definitions

host.com.zone (the host.com domain)

[root@hdss7-11 ~]# vi /var/named/host.com.zone

$ORIGIN host.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.host.com. dnsadmin.host.com. (

2020072201 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.host.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

HDSS7-11 A 10.4.7.11

HDSS7-12 A 10.4.7.12

HDSS7-21 A 10.4.7.21

HDSS7-22 A 10.4.7.22

HDSS7-200 A 10.4.7.200

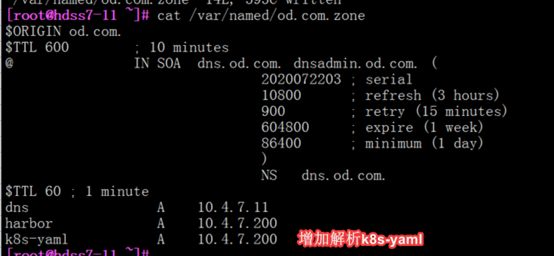

od.com.zone (the od.com domain)

[root@hdss7-11 ~]# vi /var/named/od.com.zone

$ORIGIN od.com.

$TTL 600 ; 10 minutes

@ IN SOA dns.od.com. dnsadmin.od.com. (

2020072201 ; serial

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.od.com.

$TTL 60 ; 1 minute

dns A 10.4.7.11

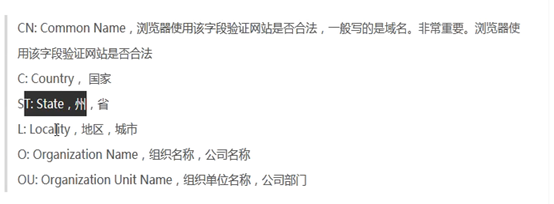

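Annotated version:

$ORIGIN host.com.

$TTL 600 ; 10 minutes # default time to live

@ IN SOA dns.host.com. dnsadmin.host.com. (

# Start of the zone's authority data: the SOA record; dnsadmin.host.com is the administrator mailbox

# Serial format: date 2019.12.09 followed by the sequence number 01

2019120901 ; serial # date of installation plus a two-digit counter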

10800 ; refresh (3 hours)

900 ; retry (15 minutes)

604800 ; expire (1 week)

86400 ; minimum (1 day)

)

NS dns.host.com. # NS record

$TTL 60 ; 1 minute

dns A 10.4.7.11 # A record

HDSS7-11 A 10.4.7.11

HDSS7-12 A 10.4.7.12

HDSS7-21 A 10.4.7.21

HDSS7-22 A 10.4.7.22

HDSS7-200 A 10.4.7.200

[root@hdss7-11 ~]# named-checkconf #check the configuration

[root@hdss7-11 ~]# systemctl start named #start the service

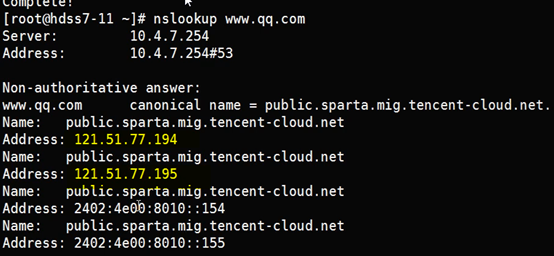

[root@hdss7-11 ~]# dig -t A hdss7-21.host.com @10.4.7.11 +short #resolution test

10.4.7.21

#On all 5 hosts, change the DNS server address to 10.4.7.11

[root@hdss7-200 ~]# sed -i 's/DNS1=10.4.7.254/DNS1=10.4.7.11/g' /etc/sysconfig/network-scripts/ifcfg-eth0

systemctl restart network

[root@hdss7-11 ~]# ping hdss7-12.host.com #ping test

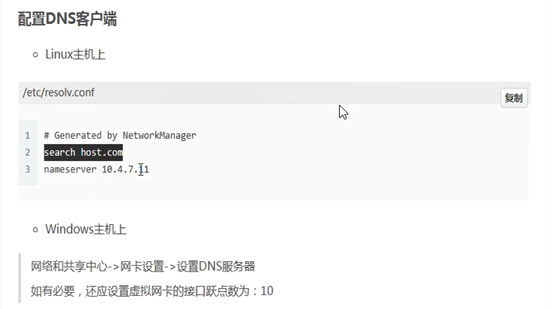

Configure the DNS client

echo 'search host.com'>>/etc/resolv.conf
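Running the `echo ... >>` command twice leaves two identical `search` lines in the file. A minimal sketch of an idempotent append follows; `ensure_line` is a hypothetical helper name, and the demo writes to a scratch file rather than the real /etc/resolv.conf.

```shell
#!/bin/sh
# ensure_line FILE LINE: append LINE to FILE only when no identical line exists.
ensure_line() {
    file=$1
    line=$2
    # -q quiet, -x whole-line match, -F fixed string (no regex)
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demo against a scratch file.
demo_file=$(mktemp)
ensure_line "$demo_file" 'search host.com'
ensure_line "$demo_file" 'search host.com'   # second call is a no-op
cat "$demo_file"
rm -f "$demo_file"
```

On a real host the call would be `ensure_line /etc/resolv.conf 'search host.com'`.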

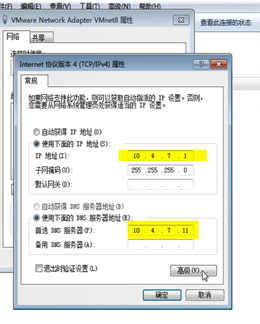

Windows configuration (for testing)

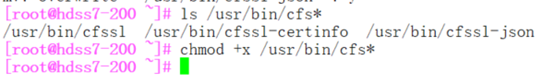

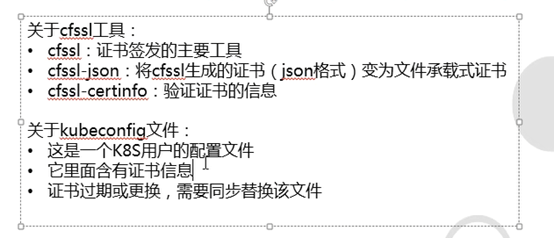

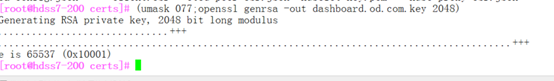

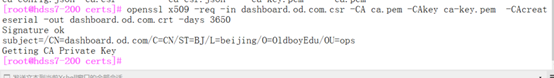

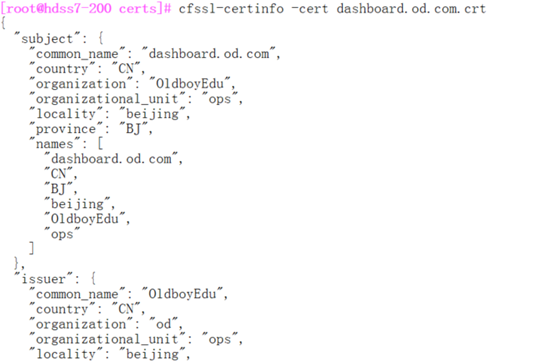

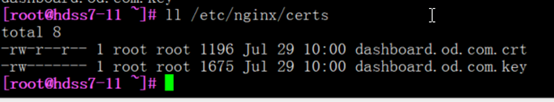

3.2.3: Prepare the certificate-signing environment

Install CFSSL

Certificate signing tool CFSSL: R1.2

cfssl download URL

cfssl-json download URL

cfssl-certinfo download URL

On server hdss7-200

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/bin/cfssl-json

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/bin/cfssl-certinfo

Make the three binaries executable (chmod +x /usr/bin/cfssl*)

[root@hdss7-200 opt]# mkdir certs

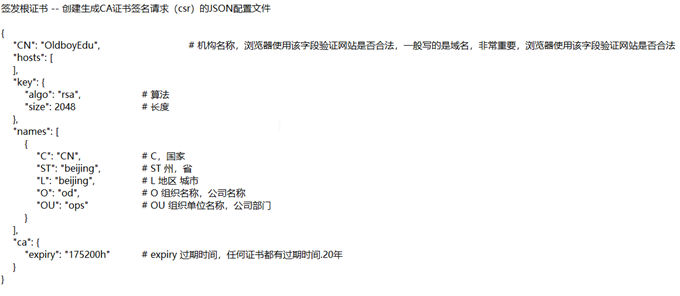

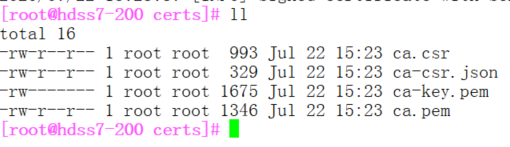

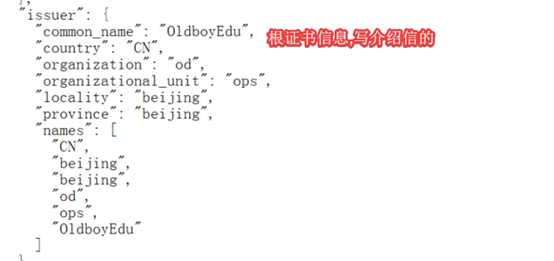

Sign the root certificate

[root@hdss7-200 certs]# vi /opt/certs/ca-csr.json

{

"CN": "OldboyEdu ",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

],

"ca": {

"expiry": "175200h"

}

}

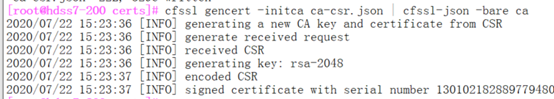

#Generate the certificate: the command before | creates it, the command after | writes it out to files

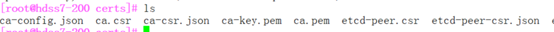

[root@hdss7-200 certs]# cfssl gencert -initca ca-csr.json | cfssl-json -bare ca

ca.pem # root certificate

ca-key.pem # root certificate private key

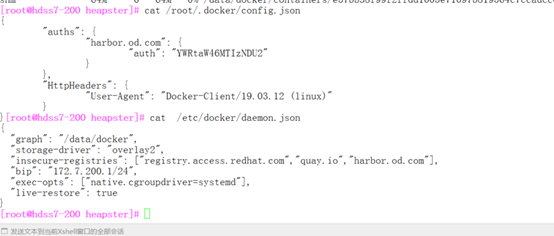

3.2.4: Deploy the Docker environment

On the node hosts and the operations host: 21, 22, 200

[root@hdss7-200 ]# curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun #online Docker install script

[root@hdss7-200 ]# mkdir -p /etc/docker

[root@hdss7-200 ]# mkdir -p /data/docker

Configure Docker

[root@hdss7-200 ]# vi /etc/docker/daemon.json

{

"graph": "/data/docker",

"storage-driver": "overlay2",

"insecure-registries": ["registry.access.redhat.com","quay.io","harbor.od.com"],

"bip": "172.7.200.1/24",

"exec-opts": ["native.cgroupdriver=systemd"],

"live-restore": true

}

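# "bip": "172.7.200.1/24" sets the Docker bridge address, i.e. the pod IP range on this host; change it on each node to match the host IP (for example 172.7.21.1/24 on 10.4.7.21)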
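The per-host `bip` value can be derived from the host address. The sketch below assumes the convention implied by these notes (host 10.4.7.X gets bridge 172.7.X.1/24, inside the 172.7.0.0/16 pod range); `bip_for_host` is a hypothetical helper, not a Docker command.

```shell
#!/bin/sh
# bip_for_host IP: map host address 10.4.7.X to the bridge address 172.7.X.1/24.
bip_for_host() {
    last_octet=${1##*.}          # strip everything up to the final dot
    echo "172.7.${last_octet}.1/24"
}

for host in 10.4.7.21 10.4.7.22 10.4.7.200; do
    echo "$host -> $(bip_for_host "$host")"
done
```

Keeping the third octet of the pod subnet equal to the last octet of the host address makes it possible to tell from a pod IP which node it runs on.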

Start

[root@hdss7-21 ~]# systemctl start docker

[root@hdss7-21 ~]# docker info

docker version

3.2.5: Deploy Harbor, a private Docker image registry

Choose version 1.7.6 or later

Upload the installation package

[root@hdss7-200 ~]# tar xvf harbor-offline-installer-v1.8.3.tgz -C /opt/

[root@hdss7-200 ~]# cd /opt/

[root@hdss7-200 opt]# mv harbor/ harbor-v1.8.3

[root@hdss7-200 opt]# ln -s harbor-v1.8.3/ harbor

Edit the Harbor configuration file

[root@hdss7-200 harbor]# mkdir /data/harbor/logs -p

[root@hdss7-200 harbor]# vim harbor.yml

hostname: harbor.od.com

port: 180

harbor_admin_password: 123456

data_volume: /data/harbor #data storage directory

location: /data/harbor/logs #log location

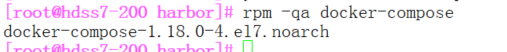

Install docker-compose

#The Harbor installer depends on docker-compose for single-host orchestration

[root@hdss7-200 harbor]# yum install docker-compose -y

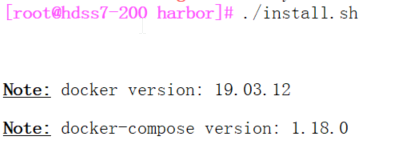

Install Harbor

#Install from the local offline package

[root@hdss7-200 harbor]# ./install.sh

[root@hdss7-200 harbor]# docker-compose ps #check that the installation is healthy

Install nginx

[root@hdss7-200 harbor]# yum install nginx -y #install nginx

[root@hdss7-200 harbor]# cat /etc/nginx/conf.d/harbor.od.com.conf #configuration file

server {

listen 80;

server_name harbor.od.com; #domain name

client_max_body_size 1000m; # 1G

location / {

proxy_pass http://127.0.0.1:180;

}

}

[root@hdss7-200 harbor]# systemctl start nginx

[root@hdss7-200 harbor]# systemctl enable nginx

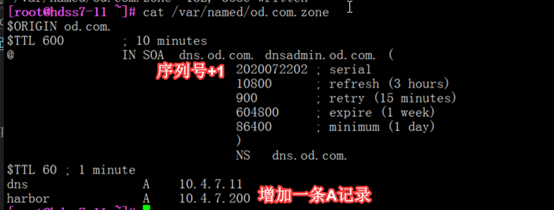

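Add the DNS record

vim /var/named/od.com.zone

#Add an A record for harbor pointing at 10.4.7.200, and increment the serial by 1 so that the zone change is recognized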
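The serial in these zone files follows the YYYYMMDDNN pattern (for example 2020072201). A small sketch of how the next value could be computed is shown here; `next_serial` is a hypothetical helper, and it does not handle the case where the two-digit counter has already reached 99.

```shell
#!/bin/sh
# next_serial CURRENT TODAY
#   CURRENT: existing serial in YYYYMMDDNN form
#   TODAY:   current date as YYYYMMDD
# Same day: increment the counter. New day: restart the counter at 01.
next_serial() {
    current=$1
    today=$2
    if [ "${current%??}" = "$today" ]; then
        echo $(( current + 1 ))
    else
        echo "${today}01"
    fi
}

next_serial 2020072201 "$(date +%Y%m%d)"
```

The result replaces the serial line in /var/named/od.com.zone before `systemctl restart named`.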

[root@hdss7-11 ~]# systemctl restart named

[root@hdss7-200 harbor]# dig -t A harbor.od.com +short #check that the name resolves

Log in to Harbor

Account: admin Password: 123456

Push the nginx image

[root@hdss7-200 harbor]# docker pull nginx:1.7.9

[root@hdss7-200 harbor]# docker tag 84581e99d807 harbor.od.com/public/nginx:v1.7.9

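[root@hdss7-200 harbor]# docker login harbor.od.com #login is required before pushing

[root@hdss7-200 harbor]# docker push harbor.od.com/public/nginx:v1.7.9

#If login fails

- Check nginx

- Run docker ps -a to see whether any Harbor container has exited

4.1: Deploy the Master node services

These are the control-plane services

4.1.1: Deploy the etcd cluster

Cluster plan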

| Hostname | Role | IP |
|---|---|---|
| HDSS7-12.host.com | etcd leader | 10.4.7.12 |
| HDSS7-21.host.com | etcd follower | 10.4.7.21 |
| HDSS7-22.host.com | etcd follower | 10.4.7.22 |

Create the JSON configuration files used to generate certificate signing requests (CSR)

[root@hdss7-200 harbor]# cd /opt/certs/

[root@hdss7-200 certs]# vi ca-config.json

{

"signing": {

"default": {

"expiry": "175200h"

},

"profiles": {

"server": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth"

]

},

"client": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"client auth"

]

},

"peer": {

"expiry": "175200h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

[root@hdss7-200 certs]# cat etcd-peer-csr.json

{

"CN": "k8s-etcd",

"hosts": [

"10.4.7.11",

"10.4.7.12",

"10.4.7.21",

"10.4.7.22"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

#The hosts field lists every host the service might be deployed on; network ranges are not supported

Sign the certificate

[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=peer etcd-peer-csr.json|cfssl-json -bare etcd-peer

Install etcd

[root@hdss7-12 ~]# useradd -s /sbin/nologin -M etcd

[root@hdss7-12 ~]# id etcd

uid=1002(etcd) gid=1002(etcd) groups=1002(etcd)

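Download URL: https://github.com/etcd-io/etcd/releases/download/v3.1.8/etcd-v3.1.8-linux-amd64.tar.gz

A 3.1.x release is recommended (the commands below use v3.1.20, so adjust the version in the URL to match)

On HDSS7-12.host.com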

cd /opt/src

[root@hdss7-12 src]# tar xf etcd-v3.1.20-linux-amd64.tar.gz

[root@hdss7-12 src]# mv etcd-v3.1.20-linux-amd64 /opt/

[root@hdss7-12 opt]# mv etcd-v3.1.20-linux-amd64/ etcd-v3.1.20

[root@hdss7-12 opt]# ln -s etcd-v3.1.20/ etcd

#Create the directories and copy the certificate and private key

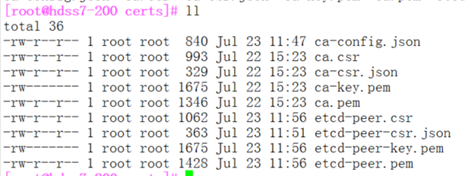

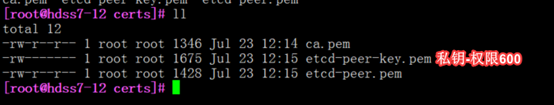

mkdir -p /opt/etcd/certs /data/etcd /data/logs/etcd-server

[root@hdss7-12 etcd]# cd certs/

[root@hdss7-12 certs]# scp hdss7-200:/opt/certs/ca.pem .

[root@hdss7-12 certs]# scp hdss7-200:/opt/certs/etcd-peer-key.pem .

[root@hdss7-12 certs]# scp hdss7-200:/opt/certs/etcd-peer.pem .

Keep the private key safe and never share it

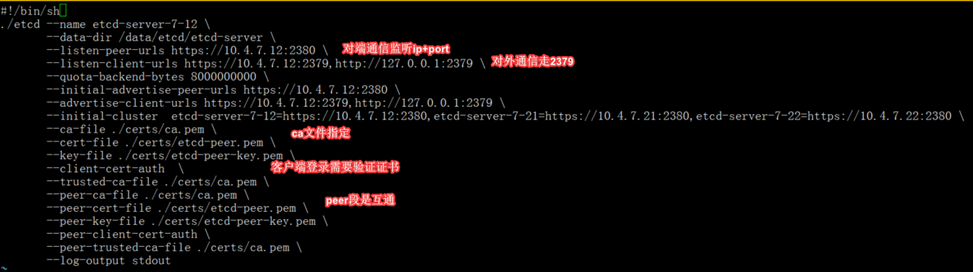

Create the startup script /opt/etcd/etcd-server-startup.sh:

#!/bin/sh

./etcd --name etcd-server-7-12 \

--data-dir /data/etcd/etcd-server \

--listen-peer-urls https://10.4.7.12:2380 \

--listen-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \

--quota-backend-bytes 8000000000 \

--initial-advertise-peer-urls https://10.4.7.12:2380 \

--advertise-client-urls https://10.4.7.12:2379,http://127.0.0.1:2379 \

--initial-cluster etcd-server-7-12=https://10.4.7.12:2380,etcd-server-7-21=https://10.4.7.21:2380,etcd-server-7-22=https://10.4.7.22:2380 \

--ca-file ./certs/ca.pem \

--cert-file ./certs/etcd-peer.pem \

--key-file ./certs/etcd-peer-key.pem \

--client-cert-auth \

--trusted-ca-file ./certs/ca.pem \

--peer-ca-file ./certs/ca.pem \

--peer-cert-file ./certs/etcd-peer.pem \

--peer-key-file ./certs/etcd-peer-key.pem \

--peer-client-cert-auth \

--peer-trusted-ca-file ./certs/ca.pem \

--log-output stdout

[root@hdss7-12 etcd]# chown -R etcd.etcd /opt/etcd-v3.1.20/

[root@hdss7-12 etcd]# chmod +x etcd-server-startup.sh

[root@hdss7-12 etcd]# chown -R etcd.etcd /data/etcd/

[root@hdss7-12 etcd]# chown -R etcd.etcd /data/logs/etcd-server/

#If the ownership is wrong, supervisor cannot start etcd

Install supervisor

#Install a process supervisor so that etcd is restarted automatically if it dies

[root@hdss7-12 etcd]# yum install supervisor -y

[root@hdss7-12 etcd]# systemctl start supervisord.service

[root@hdss7-12 etcd]# systemctl enable supervisord.service

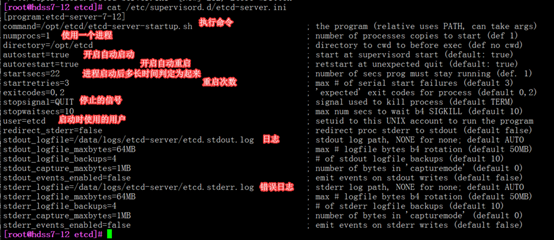

Create the supervisor configuration for etcd-server

[root@hdss7-12 etcd]# cat /etc/supervisord.d/etcd-server.ini

[program:etcd-server-7-12]

command=/opt/etcd/etcd-server-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/etcd ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; retstart at unexpected quit (default: true)

startsecs=22 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=etcd ; setuid to this UNIX account to run the program

redirect_stderr=false ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/etcd-server/etcd.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

stderr_logfile=/data/logs/etcd-server/etcd.stderr.log ; stderr log path, NONE for none; default AUTO

stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)

stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stderr_events_enabled=false ; emit events on stderr writes (default false)

#Apply the configuration

[root@hdss7-12 etcd]# supervisorctl update

etcd-server-7-12: added process group

[root@hdss7-12 etcd]# supervisorctl status

etcd-server-7-12 RUNNING pid 2143, uptime 0:00:25

[root@hdss7-12 etcd]# tail -fn 200 /data/logs/etcd-server/etcd.stdout.log

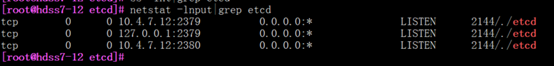

[root@hdss7-12 etcd]# netstat -lnput|grep etcd

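Deploy nodes 21 and 22 the same way as 12, changing the member name and IP addresses in the startup script and in the supervisor configuration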

tar xvf etcd-v3.1.20-linux-amd64.tar.gz -C /opt/

mv /opt/etcd-v3.1.20-linux-amd64/ /opt/etcd-v3.1.20

ln -s /opt/etcd-v3.1.20 /opt/etcd

cd /opt/etcd

(remaining steps omitted) ….
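The values that differ between the three members are the `--name` and the listen and advertise URLs, while `--initial-cluster` is identical everywhere. The sketch below derives them from the host IPs using the naming pattern of the startup script above; `etcd_name` and `initial_cluster` are hypothetical helpers, not etcd tooling.

```shell
#!/bin/sh
# etcd_name IP: 10.4.7.21 -> etcd-server-7-21 (last two octets joined by '-').
etcd_name() {
    ip=$1
    rest=${ip#*.*.}              # drop the first two octets: "7.21"
    echo "etcd-server-$(echo "$rest" | tr '.' '-')"
}

# initial_cluster IP...: build the value passed to --initial-cluster.
initial_cluster() {
    out=
    for ip in "$@"; do
        out="${out:+$out,}$(etcd_name "$ip")=https://${ip}:2380"
    done
    echo "$out"
}

for ip in 10.4.7.12 10.4.7.21 10.4.7.22; do
    echo "$ip: --name $(etcd_name "$ip") --listen-peer-urls https://${ip}:2380"
done
initial_cluster 10.4.7.12 10.4.7.21 10.4.7.22
```

The `[program:...]` name in /etc/supervisord.d/etcd-server.ini follows the same pattern on each host.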

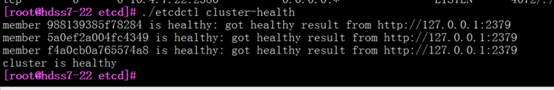

Check the etcd cluster status

#Check the health of the etcd cluster members

cd /opt/etcd

[root@hdss7-22 etcd]# ./etcdctl cluster-health

#Method two: this also shows which member is the leader

[root@hdss7-22 etcd]# ./etcdctl member list

4.1.2: Deploy apiserver

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| HDSS7-21.host.com | kube-apiserver | 10.4.7.21 |
| HDSS7-22.host.com | kube-apiserver | 10.4.7.22 |
| HDSS7-11.host.com | layer-4 load balancer | 10.4.7.11 |
| HDSS7-12.host.com | layer-4 load balancer | 10.4.7.12 |

Note: 10.4.7.11 and 10.4.7.12 run nginx as a layer-4 load balancer, and keepalived provides the VIP 10.4.7.10 in front of the two kube-apiserver instances for high availability

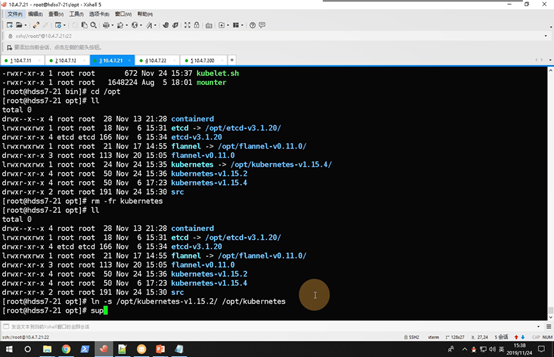

Download and install k8s

This guide uses host HDSS7-21.host.com as the example; the other compute node is deployed the same way

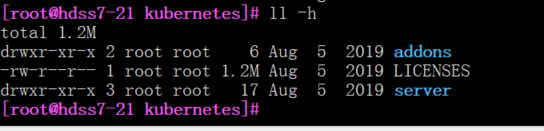

[root@hdss7-21 src]# tar xf kubernetes-server-linux-amd64-v1.15.2.tar.gz -C /opt/

[root@hdss7-21 src]# mv /opt/kubernetes /opt/kubernetes-v1.15.2

[root@hdss7-21 opt]# ln -s kubernetes-v1.15.2 kubernetes

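[root@hdss7-21 kubernetes]# rm -rf kubernetes-src.tar.gz #remove the source archive; it only takes up space

[root@hdss7-21 kubernetes]# rm server/bin/*.tar -rf #these image archives are not needed

[root@hdss7-21 kubernetes]# rm server/bin/*_tag -rf #not needed, remove

What remains of the package is quite small

Sign the client certificate

Client certificate: the certificate apiserver uses when talking to etcd

(apiserver is the client and etcd is the server, so a client certificate is issued)

On the operations host HDSS7-200.host.com:

Create the JSON configuration file used to generate the certificate signing request (CSR)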

vim /opt/certs/client-csr.json

{

"CN": "k8s-node",

"hosts": [

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

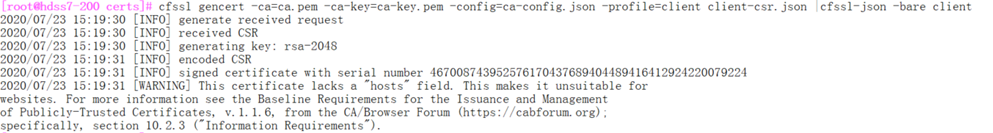

Create the certificate

# client certificate: the certificate apiserver needs to reach etcd

[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client client-csr.json |cfssl-json -bare client

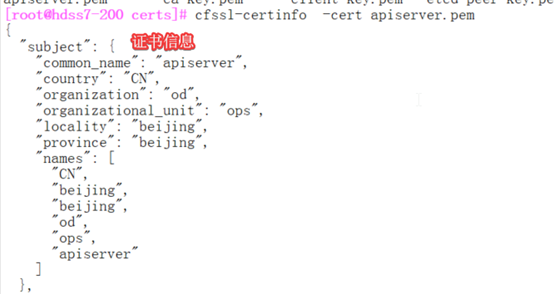

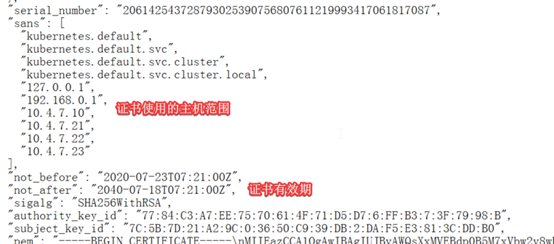

Sign the kube-apiserver certificate

# server certificate: the certificate kube-apiserver presents to its clients

vi /opt/certs/apiserver-csr.json

{

"CN": "apiserver",

"hosts": [

"127.0.0.1",

"192.168.0.1",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local",

"10.4.7.10",

"10.4.7.21",

"10.4.7.22",

"10.4.7.23"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

#Create the apiserver certificate (used by apiserver itself at startup)

[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server apiserver-csr.json |cfssl-json -bare apiserver

-profile selects the certificate type (client, server, peer)

Copy the certificates

[root@hdss7-21 kubernetes]# mkdir /opt/kubernetes/server/bin/certs -p

[root@hdss7-21 kubernetes]# cd /opt/kubernetes/server/bin/certs

scp hdss7-200:/opt/certs/ca.pem .

scp hdss7-200:/opt/certs/ca-key.pem .

scp hdss7-200:/opt/certs/client-key.pem .

scp hdss7-200:/opt/certs/client.pem .

scp hdss7-200:/opt/certs/apiserver.pem .

scp hdss7-200:/opt/certs/apiserver-key.pem .

Configure audit.yaml

The K8s audit policy file, used specifically for audit logging

[root@hdss7-21 bin]# mkdir conf

[root@hdss7-21 bin]# cd conf/

vim /opt/kubernetes/server/bin/conf/audit.yaml

apiVersion: audit.k8s.io/v1beta1 # This is required.
kind: Policy
# Don't generate audit events for all requests in RequestReceived stage.
omitStages:
  - "RequestReceived"
rules:
  # Log pod changes at RequestResponse level
  - level: RequestResponse
    resources:
    - group: ""
      # Resource "pods" doesn't match requests to any subresource of pods,
      # which is consistent with the RBAC policy.
      resources: ["pods"]
  # Log "pods/log", "pods/status" at Metadata level
  - level: Metadata
    resources:
    - group: ""
      resources: ["pods/log", "pods/status"]
  # Don't log requests to a configmap called "controller-leader"
  - level: None
    resources:
    - group: ""
      resources: ["configmaps"]
      resourceNames: ["controller-leader"]
  # Don't log watch requests by the "system:kube-proxy" on endpoints or services
  - level: None
    users: ["system:kube-proxy"]
    verbs: ["watch"]
    resources:
    - group: "" # core API group
      resources: ["endpoints", "services"]
  # Don't log authenticated requests to certain non-resource URL paths.
  - level: None
    userGroups: ["system:authenticated"]
    nonResourceURLs:
    - "/api*" # Wildcard matching.
    - "/version"
  # Log the request body of configmap changes in kube-system.
  - level: Request
    resources:
    - group: "" # core API group
      resources: ["configmaps"]
    # This rule only applies to resources in the "kube-system" namespace.
    # The empty string "" can be used to select non-namespaced resources.
    namespaces: ["kube-system"]
  # Log configmap and secret changes in all other namespaces at the Metadata level.
  - level: Metadata
    resources:
    - group: "" # core API group
      resources: ["secrets", "configmaps"]
  # Log all other resources in core and extensions at the Request level.
  - level: Request
    resources:
    - group: "" # core API group
    - group: "extensions" # Version of group should NOT be included.
  # A catch-all rule to log all other requests at the Metadata level.
  - level: Metadata
    # Long-running requests like watches that fall under this rule will not
    # generate an audit event in RequestReceived.
    omitStages:
      - "RequestReceived"

Write the startup script

#Directory for apiserver logs

[root@hdss7-21 bin]# mkdir /data/logs/kubernetes/kube-apiserver/ -p

#View the help text for a startup flag

./kube-apiserver --help|grep -A 5 target-ram-mb

#Script

[root@hdss7-21 bin]# vim /opt/kubernetes/server/bin/kube-apiserver.sh

#!/bin/bash

./kube-apiserver \

--apiserver-count 2 \

--audit-log-path /data/logs/kubernetes/kube-apiserver/audit-log \

--audit-policy-file ./conf/audit.yaml \

--authorization-mode RBAC \

--client-ca-file ./cert/ca.pem \

--requestheader-client-ca-file ./cert/ca.pem \

--enable-admission-plugins NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota \

--etcd-cafile ./cert/ca.pem \

--etcd-certfile ./cert/client.pem \

--etcd-keyfile ./cert/client-key.pem \

--etcd-servers https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--service-account-key-file ./cert/ca-key.pem \

--service-cluster-ip-range 192.168.0.0/16 \

--service-node-port-range 3000-29999 \

--target-ram-mb=1024 \

--kubelet-client-certificate ./cert/client.pem \

--kubelet-client-key ./cert/client-key.pem \

--log-dir /data/logs/kubernetes/kube-apiserver \

--tls-cert-file ./cert/apiserver.pem \

--tls-private-key-file ./cert/apiserver-key.pem \

--v 2

#Make the script executable

[root@hdss7-21 bin]# chmod +x /opt/kubernetes/server/bin/kube-apiserver.sh

Create the supervisor configuration that manages the apiserver process

[root@hdss7-21 bin]# vim /etc/supervisord.d/kube-apiserver.ini

[program:kube-apiserver]

command=/opt/kubernetes/server/bin/kube-apiserver.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; retstart at unexpected quit (default: true)

startsecs=22 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=false ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

stderr_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stderr.log ; stderr log path, NONE for none; default AUTO

stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)

stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stderr_events_enabled=false ; emit events on stderr writes (default false)

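#Load the supervisor configuration (the certs directory is first renamed to cert, the path the startup script uses)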

[root@hdss7-21 bin]# mv certs/ cert

[root@hdss7-21 bin]# supervisorctl update

[root@hdss7-21 bin]# systemctl restart supervisord.service

[root@hdss7-21 bin]# supervisorctl status

Deploy apiserver on hdss7-22

Copy the kubernetes directory from hdss7-21 to the same location on hdss7-22

mkdir /data/logs/kubernetes/kube-apiserver/ -p #log directory

[root@hdss7-22 opt]# ln -s kubernetes-v1.15.2/ kubernetes #symlink

#Start the service

[root@hdss7-22 opt]# systemctl restart supervisord.service

[root@hdss7-22 opt]# supervisorctl status

etcd-server-7-22 STARTING

kube-apiserver-7-22 STARTING

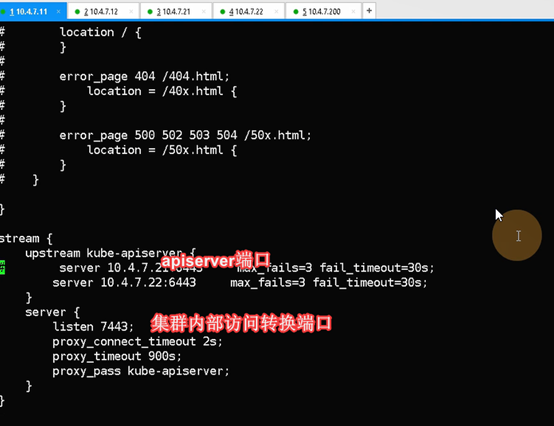

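4.1.3: Configure the layer-4 reverse proxy

Configure on HDSS7-11 and HDSS7-12:

For layer-4 load balancing, the stream block must be placed outside the http block, at the end of nginx.conf (layer-7 server blocks, by contrast, go inside http)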

yum install nginx -y

vim /etc/nginx/nginx.conf

stream {

upstream kube-apiserver {

server 10.4.7.21:6443 max_fails=3 fail_timeout=30s; #6443 is the apiserver port

server 10.4.7.22:6443 max_fails=3 fail_timeout=30s;

}

server {

listen 7443; #port that in-cluster components use to reach the apiserver

proxy_connect_timeout 2s;

proxy_timeout 900s;

proxy_pass kube-apiserver;

}

}

Check the syntax

[root@hdss7-11 opt]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@hdss7-12 opt]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

#Start nginx

[root@hdss7-12 opt]# systemctl start nginx

[root@hdss7-11 opt]# systemctl enable nginx

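Layer-7 load balancing is for external access to pods

Configure keepalived for high availability

Configure on HDSS7-11 and HDSS7-12:

yum install keepalived -y

Write the port-check script (or upload it directly)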

[root@hdss7-11 ~]# vi /etc/keepalived/check_port.sh

#!/bin/bash
#keepalived port-monitoring script
#Usage:
#In the keepalived configuration file
#vrrp_script check_port { #define a vrrp_script check
# script "/etc/keepalived/check_port.sh 7443" #port to monitor
# interval 2 #how often to run the check, in seconds
#}
CHK_PORT=$1
if [ -n "$CHK_PORT" ];then
    PORT_PROCESS=`ss -lnt|grep $CHK_PORT|wc -l`
    if [ $PORT_PROCESS -eq 0 ];then
        echo "Port $CHK_PORT Is Not Used,End."
        exit 1
    fi
else
    echo "Check Port Cant Be Empty!"
fi

[root@hdss7-12 ~]# chmod +x /etc/keepalived/check_port.sh
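The `grep $CHK_PORT` in the script matches any line containing the digits, so a listener on 17443 would also satisfy a check for 7443. A stricter variant is sketched below: it reads `ss -lnt` style output on stdin and compares only the port part of the local-address column. `port_is_listening` is a hypothetical helper, and the sample text is made-up input for illustration.

```shell
#!/bin/sh
# port_is_listening PORT: succeed if stdin (output of `ss -lnt`) has a
# listener whose local port is exactly PORT.
port_is_listening() {
    # Column 4 is "Local Address:Port"; the port is the text after the last ':'.
    awk -v p="$1" '
        NR > 1 { n = split($4, parts, ":"); if (parts[n] == p) found = 1 }
        END    { exit found ? 0 : 1 }
    '
}

# Illustrative input in the shape of `ss -lnt` output.
sample='State Recv-Q Send-Q Local Address:Port Peer Address:Port
LISTEN 0 128 *:17443 *:*
LISTEN 0 128 *:22 *:*'

if printf '%s\n' "$sample" | port_is_listening 7443; then
    echo "7443 is listening"
else
    echo "7443 is not listening"
fi
```

In check_port.sh this would be used as `ss -lnt | port_is_listening "$CHK_PORT" || exit 1`.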

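Configure keepalived (delete the original file, then upload the edited one)

keepalived master:

A VIP move in production counts as a major production incident

So the nopreempt (non-preemptive) parameter is added: once the VIP has drifted away, it does not drift back when the original master recovers

To move it back: do it during a traffic trough, first confirm that nginx on the original master is healthy, then restart keepalived on the backup (which stops its VRRP advertisements) so that the VIP drifts back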

[root@hdss7-11 ~]# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id 10.4.7.11

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 251

priority 100

advert_int 1

mcast_src_ip 10.4.7.11

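nopreempt #non-preemptive mode; the keepalived documentation notes this only takes effect when the initial state is BACKUP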

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

10.4.7.10

}

}

keepalived backup

On HDSS7-12.host.com

! Configuration File for keepalived

global_defs {

router_id 10.4.7.12

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_port.sh 7443"

interval 2

weight -20

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 251

mcast_src_ip 10.4.7.12

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 11111111

}

track_script {

chk_nginx

}

virtual_ipaddress {

10.4.7.10

}

}

Start the proxy and check

On HDSS7-11.host.com and HDSS7-12.host.com:

Start

[root@hdss7-11 ~]# systemctl start keepalived

[root@hdss7-11 ~]# systemctl enable keepalived

[root@hdss7-11 ~]# nginx -s reload

[root@hdss7-12 ~]# systemctl start keepalived

[root@hdss7-12 ~]# systemctl enable keepalived

[root@hdss7-12 ~]# nginx -s reload

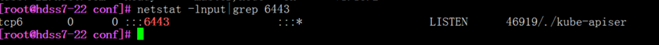

Check

[root@hdss7-11 ~]# netstat -luntp|grep 7443

tcp 0 0 0.0.0.0:7443 0.0.0.0:* LISTEN 17970/nginx: master

[root@hdss7-12 ~]# netstat -luntp|grep 7443

tcp 0 0 0.0.0.0:7443 0.0.0.0:* LISTEN 17970/nginx: master

[root@hdss7-11 ~]# ip add|grep 10.4.7.10

inet 10.4.7.10/32 scope global vir0

4.1.4: Deploy controller-manager

Controller service

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| HDSS7-21.host.com | controller-manager | 10.4.7.21 |
| HDSS7-22.host.com | controller-manager | 10.4.7.22 |

Note: this guide uses host HDSS7-21.host.com as the example; the other compute node is deployed the same way

Create the startup script

On HDSS7-21.host.com:

On HDSS7-22.host.com:

vim /opt/kubernetes/server/bin/kube-controller-manager.sh

#!/bin/sh

./kube-controller-manager \

--cluster-cidr 172.7.0.0/16 \

--leader-elect true \

--log-dir /data/logs/kubernetes/kube-controller-manager \

--master http://127.0.0.1:8080 \

--service-account-private-key-file ./cert/ca-key.pem \

--service-cluster-ip-range 192.168.0.0/16 \

--root-ca-file ./cert/ca.pem \

--v 2

Set file permissions and create directories

On HDSS7-21.host.com:

On HDSS7-22.host.com:

[root@hdss7-21 bin]# chmod +x /opt/kubernetes/server/bin/kube-controller-manager.sh

#Log directory

[root@hdss7-21 bin]# mkdir -p /data/logs/kubernetes/kube-controller-manager

Create the supervisor configuration

On HDSS7-21.host.com:

On HDSS7-22.host.com:

/etc/supervisord.d/kube-controller-manager.ini

[program:kube-controller-manager-7-21]

command=/opt/kubernetes/server/bin/kube-controller-manager.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; retstart at unexpected quit (default: true)

startsecs=22 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=false ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controll.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

stderr_logfile=/data/logs/kubernetes/kube-controller-manager/controll.stderr.log ; stderr log path, NONE for none; default AUTO

stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)

stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stderr_events_enabled=false ; emit events on stderr writes (default false)

Start the service and check

On HDSS7-21.host.com:

[root@hdss7-21 bin]# supervisorctl update

kube-controller-manager: added process group

[root@hdss7-21 bin]# supervisorctl status

etcd-server-7-21 RUNNING pid 6661, uptime 1 day, 8:41:13

kube-apiserver RUNNING pid 43765, uptime 2:09:41

kube-controller-manager RUNNING pid 44230, uptime 2:05:01

4.1.5: Deploy the kube-scheduler service

Scheduler service

Deploy kube-scheduler

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| HDSS7-21.host.com | kube-scheduler | 10.4.7.21 |
| HDSS7-22.host.com | kube-scheduler | 10.4.7.22 |

Create the startup script

On HDSS7-21.host.com:

vi /opt/kubernetes/server/bin/kube-scheduler.sh

#!/bin/sh

./kube-scheduler \

--leader-elect \

--log-dir /data/logs/kubernetes/kube-scheduler \

--master http://127.0.0.1:8080 \

--v 2

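#controller-manager and scheduler need no certificates because they only talk to the apiserver on the same host (http://127.0.0.1:8080) and never across hosts

Set file permissions and create directories

On HDSS7-21.host.com:

/opt/kubernetes/server/bin

[root@hdss7-21 bin]# chmod +x /opt/kubernetes/server/bin/kube-scheduler.sh

[root@hdss7-21 bin]# mkdir -p /data/logs/kubernetes/kube-scheduler

Create the supervisor configuration

On HDSS7-21.host.com: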

/etc/supervisord.d/kube-scheduler.ini

[program:kube-scheduler-7-21]

command=/opt/kubernetes/server/bin/kube-scheduler.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; retstart at unexpected quit (default: true)

startsecs=22 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=false ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

stderr_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stderr.log ; stderr log path, NONE for none; default AUTO

stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)

stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stderr_events_enabled=false ; emit events on stderr writes (default false)

Start the service and check

On HDSS7-21.host.com:

[root@hdss7-21 bin]# supervisorctl update

kube-scheduler: added process group

[root@hdss7-21 bin]# supervisorctl status

etcd-server-7-21 RUNNING pid 6661, uptime 1 day, 8:41:13

kube-apiserver RUNNING pid 43765, uptime 2:09:41

kube-controller-manager RUNNING pid 44230, uptime 2:05:01

kube-scheduler RUNNING pid 44779, uptime 2:02:27

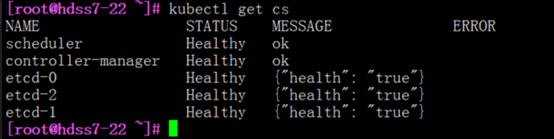

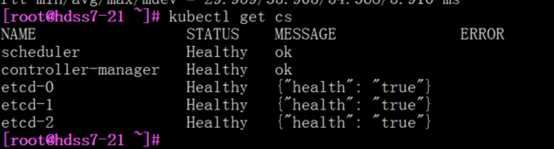

4.1.6: Check the services on all planned cluster hosts

#The following checks the etcd, controller-manager and scheduler services

[root@hdss7-21 ~]# ln -s /opt/kubernetes/server/bin/kubectl /usr/bin/kubectl

[root@hdss7-22 ~]# kubectl get cs

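4.2: Deploy the Node services

These are the compute-node services.

They comprise the kubelet service and the kube-proxy service.

4.2.1: Deploy kubelet

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| HDSS7-21.host.com | kubelet | 10.4.7.21 |
| HDSS7-22.host.com | kubelet | 10.4.7.22 |

Issue the kubelet certificate

Create the JSON configuration file for the certificate signing request (CSR)

Issue it on the 200 server (hdss7-200):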

vi kubelet-csr.json

{

"CN": "kubelet-node",

"hosts": [

"127.0.0.1",

"10.4.7.10",

"10.4.7.21",

"10.4.7.22",

"10.4.7.23",

"10.4.7.24",

"10.4.7.25",

"10.4.7.26",

"10.4.7.27",

"10.4.7.28"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

Generate the kubelet certificate and private key

In /opt/certs:

[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server kubelet-csr.json | cfssl-json -bare kubelet

Check the generated certificate and private key

[root@hdss7-200 certs]# ls -l|grep kubelet

total 88

-rw-r--r-- 1 root root 415 Jan 22 16:58 kubelet-csr.json

-rw------- 1 root root 1679 Jan 22 17:00 kubelet-key.pem

-rw-r--r-- 1 root root 1086 Jan 22 17:00 kubelet.csr

-rw-r--r-- 1 root root 1456 Jan 22 17:00 kubelet.pem

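Copy the certificates to each compute node and create the configuration

On HDSS7-21.host.com:

Copy the certificate and private key; note that the private key file must have mode 600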

[root@hdss7-21 ~]# cd /opt/kubernetes/server/bin/cert/

scp hdss7-200:/opt/certs/kubelet-key.pem .

scp hdss7-200:/opt/certs/kubelet.pem .
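The mode-600 requirement on private keys can be checked with a small helper. This is only a sketch: the function name `check_key_modes` and the `*-key.pem` naming pattern are assumptions taken from the file names in these notes, and `stat -c` assumes GNU coreutils.

```shell
#!/bin/bash
# check_key_modes DIR: warn about every *-key.pem in DIR whose mode is not 600.
# Returns 0 when all keys are 600, 1 otherwise.
check_key_modes() {
    local dir="$1" bad=0 f mode
    for f in "$dir"/*-key.pem; do
        [ -e "$f" ] || continue            # the glob matched nothing
        mode=$(stat -c '%a' "$f")          # GNU stat: octal permission bits
        if [ "$mode" != "600" ]; then
            echo "WARN: $f has mode $mode, expected 600"
            bad=1
        fi
    done
    return "$bad"
}
```

For example, `check_key_modes /opt/kubernetes/server/bin/cert` prints nothing when every key is already 600.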

/opt/kubernetes/server/bin/cert

[root@hdss7-21 cert]# ls -l /opt/kubernetes/server/bin/cert

total 40

-rw------- 1 root root 1676 Jan 21 16:39 apiserver-key.pem

-rw-r--r-- 1 root root 1599 Jan 21 16:36 apiserver.pem

-rw------- 1 root root 1675 Jan 21 13:55 ca-key.pem

-rw-r--r-- 1 root root 1354 Jan 21 13:50 ca.pem

-rw------- 1 root root 1679 Jan 21 13:53 client-key.pem

-rw-r--r-- 1 root root 1368 Jan 21 13:53 client.pem

-rw------- 1 root root 1679 Jan 22 17:00 kubelet-key.pem

-rw-r--r-- 1 root root 1456 Jan 22 17:00 kubelet.pem

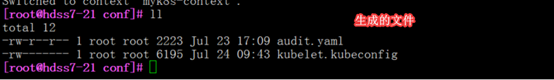

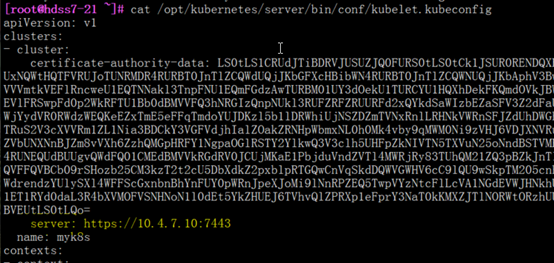

4.2.2: Create the kubelet configuration

On HDSS7-21.host.com (this only needs to be done on one machine; the result is stored in etcd):

Create a symlink for kubectl

In /opt/kubernetes/server/bin:

[root@hdss7-21 bin]# ln -s /opt/kubernetes/server/bin/kubectl /usr/bin/kubectl

[root@hdss7-21 bin]# which kubectl

/usr/bin/kubectl

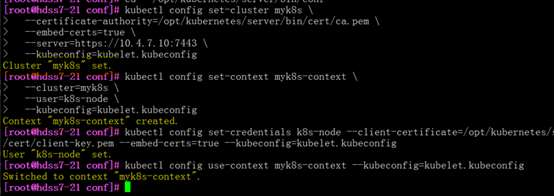

set-cluster

Note: run this in the conf directory

cd /opt/kubernetes/server/bin/conf

[root@hdss7-21 conf]# kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
  --embed-certs=true \
  --server=https://10.4.7.10:7443 \
  --kubeconfig=kubelet.kubeconfig

set-credentials

Note: run this in the conf directory

cd /opt/kubernetes/server/bin/conf

[root@hdss7-21 conf]# kubectl config set-credentials k8s-node --client-certificate=/opt/kubernetes/server/bin/cert/client.pem --client-key=/opt/kubernetes/server/bin/cert/client-key.pem --embed-certs=true --kubeconfig=kubelet.kubeconfig

# This creates a user named k8s-node

# The client and server certificates are signed by the same CA, so the client can reach the server; certificates signed by the same CA for different services can likewise talk to each other (for example etcd and kubelet)

set-context

Note: run this in the conf directory

cd /opt/kubernetes/server/bin/conf

[root@hdss7-21 conf]# kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=k8s-node \
  --kubeconfig=kubelet.kubeconfig

use-context

Note: run this in the conf directory

cd /opt/kubernetes/server/bin/conf

[root@hdss7-21 conf]# kubectl config use-context myk8s-context --kubeconfig=kubelet.kubeconfig

The result:

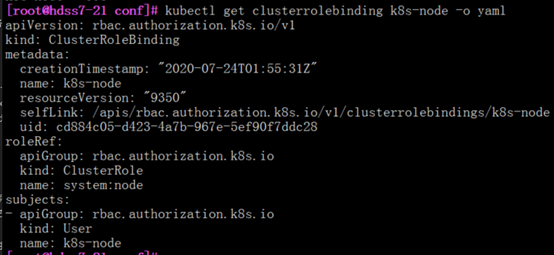

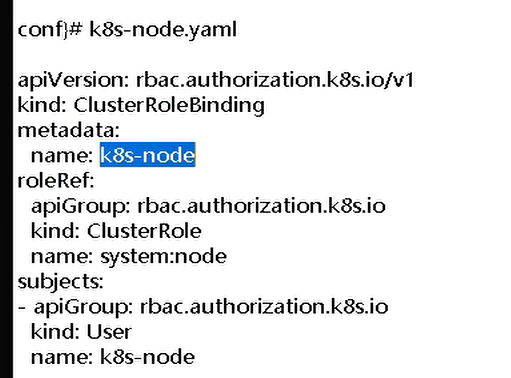

k8s-node.yaml

Create the resource manifest

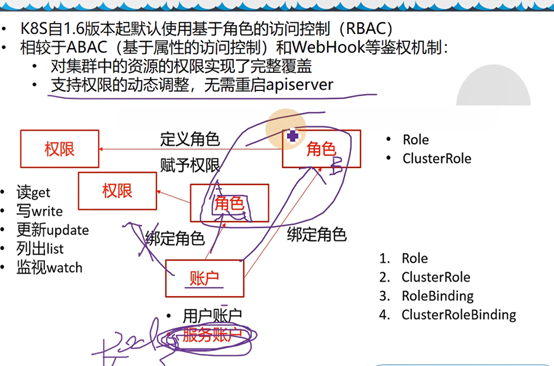

Give the k8s-node user cluster permissions (the role of a compute node)

vi /opt/kubernetes/server/bin/conf/k8s-node.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: k8s-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: k8s-node

Apply the resource manifest

[root@hdss7-21 conf]# kubectl create -f k8s-node.yaml

clusterrolebinding.rbac.authorization.k8s.io/k8s-node created

Check

[root@hdss7-21 conf]# kubectl get clusterrolebinding k8s-node

NAME AGE

k8s-node 3m

[root@hdss7-21 conf]# kubectl get clusterrolebinding k8s-node -o yaml

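ClusterRoleBinding: a cluster-level role binding resource

Resource name: k8s-node

It binds the cluster user to the cluster role

Copy the configuration file to node 22

On HDSS7-22: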

[root@hdss7-22 ~]# cd /opt/kubernetes/server/bin/conf/

[root@hdss7-22 conf]# scp hdss7-21:/opt/kubernetes/server/bin/conf/kubelet.kubeconfig .

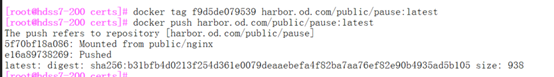

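Prepare the pause base image (sidecar pattern)

On the ops host hdss7-200.host.com:

Before the business containers of a pod start, the pause container is started first and is assigned the IP; the business containers then join it

It initializes the network, IPC, and UTS namespaces

# Push the pause image to harbor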

[root@hdss7-200 certs]# docker pull kubernetes/pause

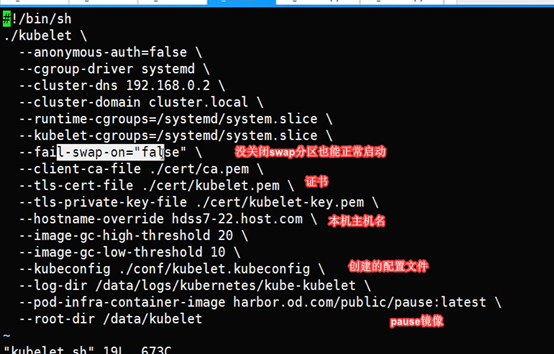

Create the kubelet startup script

On HDSS7-21.host.com and HDSS7-22.host.com (the example below is for hdss7-21; adjust the file name and --hostname-override on hdss7-22):

vi /opt/kubernetes/server/bin/kubelet-721.sh

#!/bin/sh
./kubelet \
  --anonymous-auth=false \
  --cgroup-driver systemd \
  --cluster-dns 192.168.0.2 \
  --cluster-domain cluster.local \
  --runtime-cgroups=/systemd/system.slice \
  --kubelet-cgroups=/systemd/system.slice \
  --fail-swap-on="false" \
  --client-ca-file ./cert/ca.pem \
  --tls-cert-file ./cert/kubelet.pem \
  --tls-private-key-file ./cert/kubelet-key.pem \
  --hostname-override hdss7-21.host.com \
  --image-gc-high-threshold 20 \
  --image-gc-low-threshold 10 \
  --kubeconfig ./conf/kubelet.kubeconfig \
  --log-dir /data/logs/kubernetes/kube-kubelet \
  --pod-infra-container-image harbor.od.com/public/pause:latest \
  --root-dir /data/kubelet

[root@hdss7-21 conf]# ls -l|grep kubelet.kubeconfig

-rw------- 1 root root 6471 Jan 22 17:33 kubelet.kubeconfig

# Add execute permission and create the directories

[root@hdss7-21 conf]# chmod +x /opt/kubernetes/server/bin/kubelet-721.sh

[root@hdss7-21 conf]# mkdir -p /data/logs/kubernetes/kube-kubelet /data/kubelet

Create the supervisor configuration

On HDSS7-21.host.com and HDSS7-22.host.com:

vi /etc/supervisord.d/kube-kubelet.ini

[program:kube-kubelet-7-21]

command=/opt/kubernetes/server/bin/kubelet-721.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=22 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=false ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

stderr_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stderr.log ; stderr log path, NONE for none; default AUTO

stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)

stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stderr_events_enabled=false ; emit events on stderr writes (default false)

Start the service and check

On HDSS7-21.host.com:

[root@hdss7-21 bin]# supervisorctl update

kube-kubelet: added process group

[root@hdss7-21 bin]# supervisorctl status

etcd-server-7-21 RUNNING pid 9507, uptime 22:44:48

kube-apiserver RUNNING pid 9770, uptime 21:10:49

kube-controller-manager RUNNING pid 10048, uptime 18:22:10

kube-kubelet STARTING

kube-scheduler RUNNING pid 10041, uptime 18:22:13

# Start a specific service that failed to start

[root@hdss7-22 cert]# supervisorctl start kube-kubelet-7-22

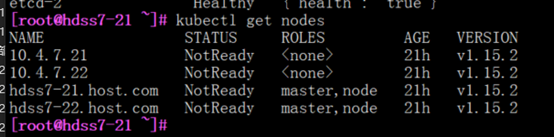

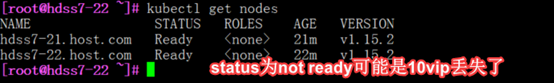

Check the compute nodes

On HDSS7-21.host.com:

[root@hdss7-21 bin]# kubectl get node

NAME STATUS ROLES AGE VERSION

10.4.7.21 Ready <none> 3m v1.13.2

Add role labels

Labels can be used to filter nodes

[root@hdss7-21 cert]# kubectl label node hdss7-21.host.com node-role.kubernetes.io/master=

[root@hdss7-21 cert]# kubectl label node hdss7-21.host.com node-role.kubernetes.io/node=

[root@hdss7-22 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

hdss7-21.host.com Ready master,node 15h v1.15.2

hdss7-22.host.com Ready master,node 12m v1.15.2

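4.2.3: Deploy kube-proxy

Cluster plan

| Hostname | Role | IP |
|---|---|---|
| HDSS7-21.host.com | kube-proxy | 10.4.7.21 |
| HDSS7-22.host.com | kube-proxy | 10.4.7.22 |

Note: this walkthrough uses HDSS7-21.host.com as the example; the other compute node is deployed the same way

Create the JSON configuration file for the certificate signing request (CSR)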

/opt/certs/kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "beijing",

"L": "beijing",

"O": "od",

"OU": "ops"

}

]

}

Generate the kube-proxy certificate and private key

In /opt/certs:

[root@hdss7-200 certs]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client kube-proxy-csr.json | cfssl-json -bare kube-proxy-client

Check the generated certificate and private key

[root@hdss7-200 certs]# ls -l|grep kube-proxy

Copy the certificates to each compute node and create the configuration

On HDSS7-21.host.com:

[root@hdss7-21 cert]# ls -l /opt/kubernetes/server/bin/cert

total 40

-rw------- 1 root root 1676 Jan 21 16:39 apiserver-key.pem

-rw-r--r-- 1 root root 1599 Jan 21 16:36 apiserver.pem

-rw------- 1 root root 1675 Jan 21 13:55 ca-key.pem

-rw-r--r-- 1 root root 1354 Jan 21 13:50 ca.pem

-rw------- 1 root root 1679 Jan 21 13:53 client-key.pem

-rw-r--r-- 1 root root 1368 Jan 21 13:53 client.pem

-rw------- 1 root root 1679 Jan 22 17:00 kubelet-key.pem

-rw-r--r-- 1 root root 1456 Jan 22 17:00 kubelet.pem

-rw------- 1 root root 1679 Jan 22 17:31 kube-proxy-client-key.pem

-rw-r--r-- 1 root root 1383 Jan 22 17:31 kube-proxy-client.pem

Copy the certificates to each compute node and create the configuration

[root@hdss7-200 certs]# ls -l|grep kube-proxy

-rw------- 1 root root 1679 Jan 22 17:31 kube-proxy-client-key.pem

-rw-r--r-- 1 root root 1005 Jan 22 17:31 kube-proxy-client.csr

-rw-r--r-- 1 root root 1383 Jan 22 17:31 kube-proxy-client.pem

-rw-r--r-- 1 root root 268 Jan 22 17:23 kube-proxy-csr.json

On HDSS7-21.host.com:

Copy the certificate and private key; note that the private key file must have mode 600

cd /opt/kubernetes/server/bin/cert

scp hdss7-200:/opt/certs/kube-proxy-client* .

rm -rf kube-proxy-client.csr

4.2.4: Create the kube-proxy configuration

set-cluster

Note: run this in the conf directory

cd /opt/kubernetes/server/bin/conf

[root@hdss7-21 conf]# kubectl config set-cluster myk8s \
  --certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \
  --embed-certs=true \
  --server=https://10.4.7.10:7443 \
  --kubeconfig=kube-proxy.kubeconfig

set-credentials

Note: run this in the conf directory, /opt/kubernetes/server/bin/conf

[root@hdss7-21 conf]# kubectl config set-credentials kube-proxy \
  --client-certificate=/opt/kubernetes/server/bin/cert/kube-proxy-client.pem \
  --client-key=/opt/kubernetes/server/bin/cert/kube-proxy-client-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

set-context

Note: run this in the conf directory, /opt/kubernetes/server/bin/conf

[root@hdss7-21 conf]# kubectl config set-context myk8s-context \
  --cluster=myk8s \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

use-context

Note: run this in the conf directory, /opt/kubernetes/server/bin/conf

[root@hdss7-21 conf]# kubectl config use-context myk8s-context --kubeconfig=kube-proxy.kubeconfig

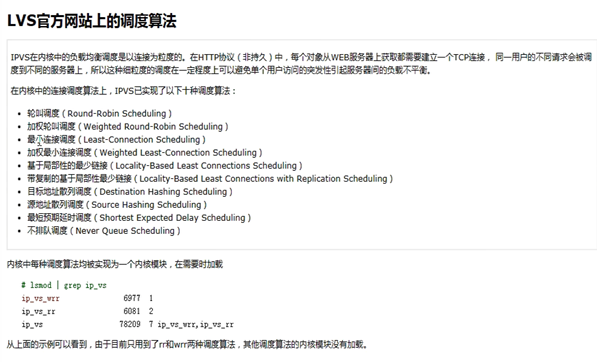

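Load the ipvs modules

The script needs to be set to run automatically at boot

Without ipvs, kube-proxy falls back to iptables to schedule traffic by default

Commonly used algorithms:

Shortest expected delay scheduling (sed)

Never-queue scheduling, built on top of shortest expected delay (nq, a dynamic algorithm)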
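To make the two algorithms concrete, here is a simplified sketch of the selection logic, not the kernel implementation. It assumes the usual LVS definitions: sed picks the server with the smallest (active connections + 1) / weight, and nq sends the request to an idle server if one exists, otherwise falls back to sed. The function name `pick_nq` and the input format are made up for illustration.

```shell
#!/bin/bash
# pick_nq: read "name active_conns weight" lines on stdin, print the chosen server.
pick_nq() {
    awk '
        $2 == 0 && idle == "" { idle = $1 }      # nq: remember the first idle server
        {
            cost = ($2 + 1) / $3                 # sed: expected delay of this server
            if (best == "" || cost < min) { best = $1; min = cost }
        }
        END { print (idle != "" ? idle : best) } # idle server wins, else lowest sed cost
    '
}
```

With input `a 2 1` and `b 3 2`, no server is idle, so the sed cost decides: a costs 3, b costs 2, and b is chosen.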

[root@hdss7-21 conf]# vi /root/ipvs.sh

#!/bin/bash
ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"
for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")
do
  /sbin/modinfo -F filename $i &>/dev/null
  if [ $? -eq 0 ];then
    /sbin/modprobe $i
  fi
done

[root@hdss7-21 conf]# chmod +x /root/ipvs.sh

Run the script

[root@hdss7-21 conf]# /root/ipvs.sh

Check whether the kernel has loaded the ipvs modules

[root@hdss7-21 conf]# lsmod | grep ip_vs
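Instead of reading the `lsmod` output by eye, the check can be scripted. A minimal sketch, assuming the modules of interest are `ip_vs`, `ip_vs_nq`, and `ip_vs_sed` (this list and the function name `missing_ipvs_mods` are assumptions, not part of the original notes):

```shell
#!/bin/bash
# missing_ipvs_mods: read full `lsmod` output on stdin (header line included)
# and print each required module that is not loaded.
missing_ipvs_mods() {
    local loaded mod
    loaded=$(awk 'NR > 1 { print $1 }')      # first column, header skipped
    for mod in ip_vs ip_vs_nq ip_vs_sed; do
        echo "$loaded" | grep -qx "$mod" || echo "$mod"
    done
}
```

Usage: `lsmod | missing_ipvs_mods`; empty output means all three modules are loaded.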

Enable running the script at boot (see the "enable boot-time startup script" file in this folder for details)

[root@hdss7-21 ~]# chmod +x /etc/rc.d/rc.local

[root@hdss7-21 ~]# mkdir -p /usr/lib/systemd/system/

[root@hdss7-21 ~]# vim /usr/lib/systemd/system/rc-local.service

[Install]

WantedBy=multi-user.target

[root@hdss7-21 ~]# ln -s '/lib/systemd/system/rc-local.service' '/etc/systemd/system/multi-user.target.wants/rc-local.service'

Start the rc-local.service service:

[root@hdss7-21 ~]# systemctl start rc-local.service

[root@hdss7-21 ~]# systemctl enable rc-local.service

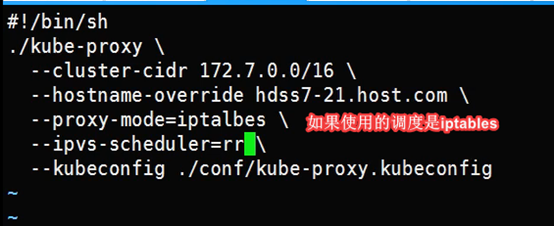

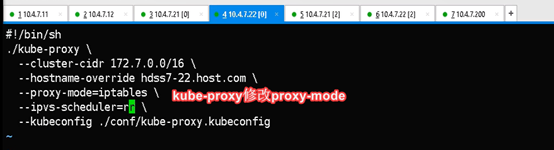

Create the kube-proxy startup script

On HDSS7-21.host.com:

vi /opt/kubernetes/server/bin/kube-proxy-721.sh

#!/bin/bash
./kube-proxy \
  --cluster-cidr 172.7.0.0/16 \
  --hostname-override hdss7-21.host.com \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig ./conf/kube-proxy.kubeconfig

The rr algorithm is round-robin scheduling, which is less efficient

chmod +x /opt/kubernetes/server/bin/kube-proxy-721.sh

mkdir -p /data/logs/kubernetes/kube-proxy

Create the supervisor configuration

On HDSS7-21.host.com:

vi /etc/supervisord.d/kube-proxy.ini

[program:kube-proxy-7-21]

command=/opt/kubernetes/server/bin/kube-proxy-721.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/server/bin ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=22 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=false ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false ; emit events on stdout writes (default false)

stderr_logfile=/data/logs/kubernetes/kube-proxy/proxy.stderr.log ; stderr log path, NONE for none; default AUTO

stderr_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stderr_logfile_backups=4 ; # of stderr logfile backups (default 10)

stderr_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stderr_events_enabled=false

Start the service and check

On HDSS7-21.host.com:

[root@hdss7-21 bin]# supervisorctl update

kube-proxy: added process group

[root@hdss7-21 bin]# supervisorctl status

etcd-server-7-21 RUNNING pid 9507, uptime 22:44:48

kube-apiserver RUNNING pid 9770, uptime 21:10:49

kube-controller-manager RUNNING pid 10048, uptime 18:22:10

kube-kubelet RUNNING pid 14597, uptime 0:32:59

kube-proxy STARTING

kube-scheduler RUNNING pid 10041, uptime 18:22:13

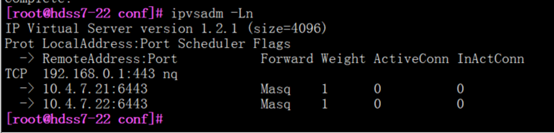

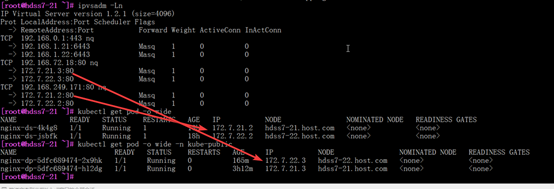

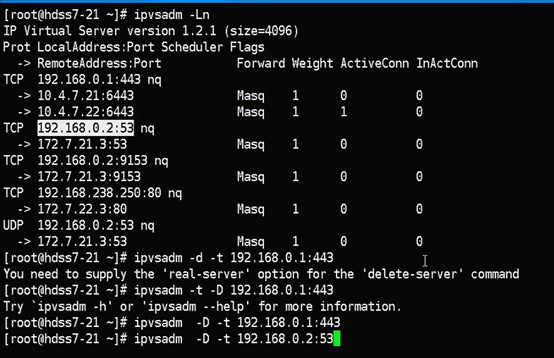

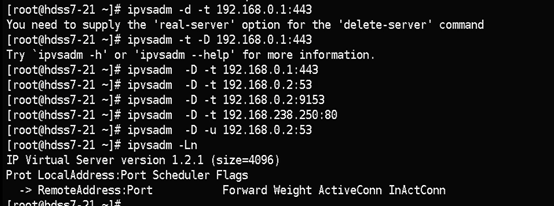

Install ipvsadm

[root@hdss7-21 conf]# yum install ipvsadm -y

View the ipvs forwarding table

ipvsadm -Ln

# 192.168.0.1:443 points to the apiserver service

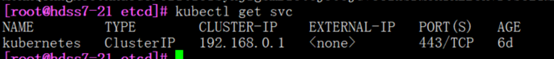

kubectl get svc

Install and deploy the kube-proxy service on the other planned cluster hosts

Configure the hdss7-22 machine the same way

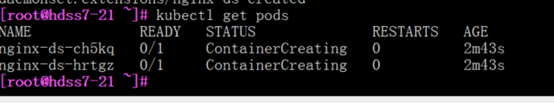

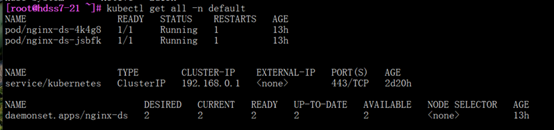

4.2.5: Verify the kubernetes cluster

On any compute node, create a resource manifest

Here we use the HDSS7-21.host.com host

# The k8s cluster uses the nginx image uploaded to the harbor registry

vi /root/nginx-ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:v1.7.9
        ports:
        - containerPort: 80

[root@hdss7-21 ~]# kubectl create -f nginx-ds.yaml

daemonset.extensions/nginx-ds created

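This host has the docker container network interface, so it can reach the pod

Pod management commands

# List the nginx containers created in the k8s cluster

kubectl get pods

# Show details of how the nginx container was set up

kubectl describe pod nginx

# Show which node each pod runs on

kubectl get pods -o wide

# Export an existing pod as a manifest file that can recreate it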

[root@host131 ~]# kubectl get pod test-pod -n default -o yaml >ttt.yml

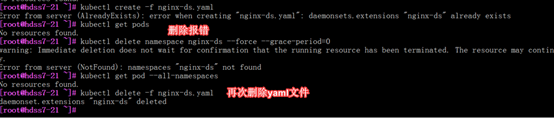

Delete

# Delete a specific pod

[root@host131 ~]# kubectl delete pod test-pod

# Create a new pod from the yml file

[root@host131 ~]# kubectl create -f ttt.yml

# Use the replace command to swap the old pod for a new one

kubectl replace --force -f busybox-pod-test.yaml

Force-delete a pod

[root@hdss7-22 ~]# kubectl delete pod nginx-ds-lphxq --force --grace-period=0

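# Delete a pod (the part highlighted in the original is optional)

kubectl delete pod [pod name] --force --grace-period=0 -n [namespace]

# Delete a namespace

kubectl delete namespace NAMESPACENAME --force --grace-period=0

If an error says the resource from the yaml file still exists after a forced delete, delete it through the yaml file: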

[root@hdss7-21 ~]# kubectl delete -f nginx-ds.yaml

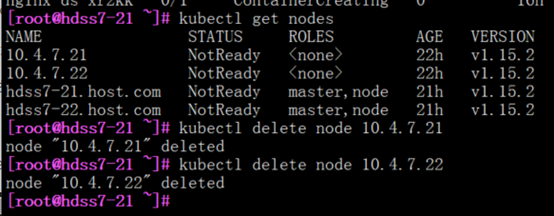

Cluster management commands

# Check the cluster component status

kubectl get cs

# List the nodes

kubectl get nodes

# Delete a node that is no longer needed

kubectl delete node 10.4.7.21

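Troubleshooting

1. Check that docker is running on nodes 21, 22, and 200

2. Check that /root/ipvs.sh has been run on nodes 21 and 22

3. Use supervisorctl status to see which startup scripts failed

4. Use the cluster management commands to check the cluster state

5. After docker restarts, the node services and the harbor registry have to be restarted

6. If the node logs show communication failures with 10.4.7.10, keepalived may be at fault (hosts 11 and 12)

7. Restarting the network interfaces at the same time can break keepalived

8. Make sure all 3 machines can docker login harbor.od.com

9. Read the logs of the core components

10. Three common problems: the harbor container is down, 10.4.7.10 has dropped, or the kubelet startup script is wrong

11. The registry URL in the yaml is wrong; it must match the insecure registry entry

12. A startup script has other characters after a trailing \

13. iptables has a reject rule enabled

14. A pod does not come up because the port is in use

15. Changing iptables rules affects docker's own iptables chains, so the docker service needs a restart (kubelet reports iptables errors)

16. A pod misbehaves: delete the pod and let it be recreated automatically

17. Images cannot be pulled: also check the image address in the yaml

18. traefik returns 404: nginx forwarded the request without the host name, or the pod is not up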
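For the `supervisorctl status` check in the list above, the failed programs can be filtered out mechanically. A minimal sketch; the function name `not_running` is hypothetical, and it only relies on the two-column "name STATE" layout shown in the status output earlier in these notes:

```shell
#!/bin/bash
# not_running: read `supervisorctl status` output on stdin and print the
# names of programs whose state column is anything other than RUNNING.
not_running() {
    awk 'NF >= 2 && $2 != "RUNNING" { print $1 }'
}
```

Usage: `supervisorctl status | not_running`, then inspect the matching stdout/stderr logs under /data/logs/kubernetes/.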

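4.3: Review

4.3.1: Review of the installation and deployment

4.3.2: Review of cfssl certificates

When a certificate expires, the kubeconfig file has to be regenerated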

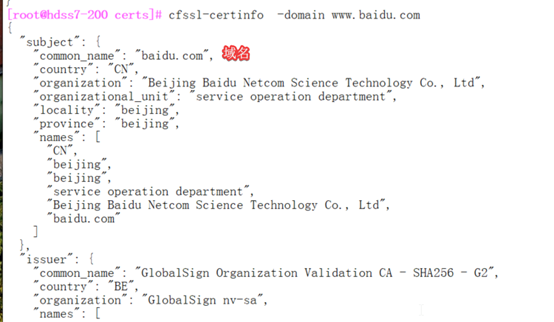

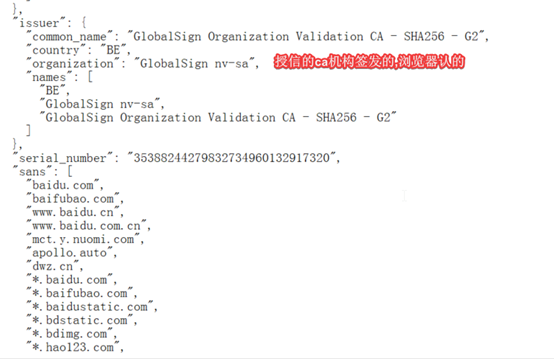

[root@hdss7-200 certs]# cfssl-certinfo -cert apiserver.pem

View the certificate information of baidu

[root@hdss7-200 certs]# cfssl-certinfo -domain www.baidu.com

All components that talk to each other must share the same root certificate (the CA certificate, issued by the same authority)

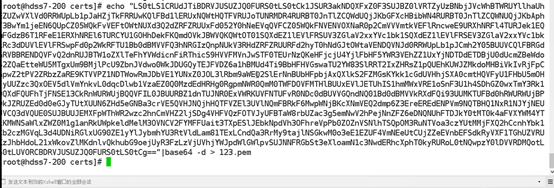

Decode a certificate

echo "<certificate data copied from kubelet.kubeconfig>" | base64 -d > 123.pem

Parse the certificate information

cfssl-certinfo -cert 123.pem
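The copy-and-paste step can be avoided by pulling the base64 field straight out of the kubeconfig. A sketch only, assuming the certificate was embedded with `--embed-certs=true` so that the file contains a single-line `client-certificate-data:` field; the function name `extract_client_cert` is made up here:

```shell
#!/bin/bash
# extract_client_cert KUBECONFIG: print the decoded PEM stored in the
# client-certificate-data field of the given kubeconfig file.
extract_client_cert() {
    awk '$1 == "client-certificate-data:" { print $2 }' "$1" | base64 -d
}
```

Usage: `extract_client_cert kubelet.kubeconfig > 123.pem`, followed by `cfssl-certinfo -cert 123.pem` as above.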

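Alibaba Cloud k8s:

With this method, the CA certificate of Alibaba Cloud can be obtained from the kubelet.kubeconfig that Alibaba Cloud k8s provides, and that CA certificate can then be used to generate certificates able to communicate with it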

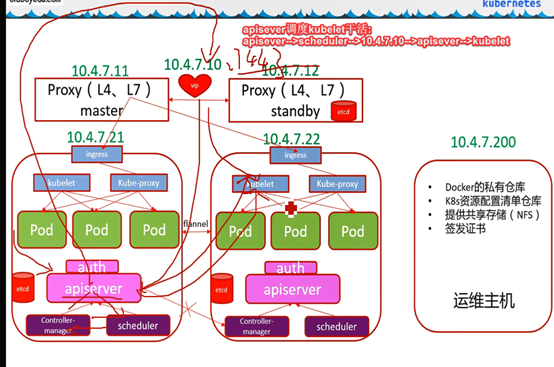

4.3.3: Review of the architecture

10.4.7.200

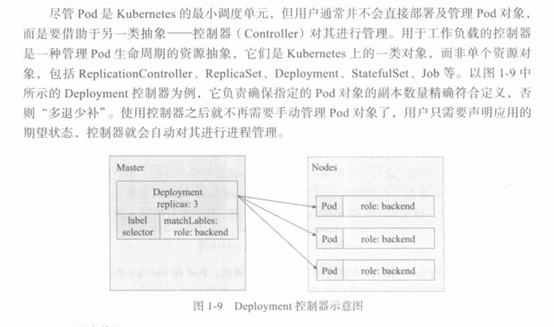

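Pod creation flow

The basic flow for creating a Pod in a Kubernetes cluster is as follows:

The user creates a Pod through the REST API

The apiserver writes it to etcd

The scheduler detects a Pod that is not yet bound to a Node, schedules it, and updates the Pod's Node binding

kubelet detects that a new Pod has been scheduled to it and runs the Pod through the container runtime

kubelet obtains the Pod status through the container runtime and updates it in the apiserver

Component communication

How the apiserver gets kubelet to do work:

apiserver-->scheduler-->10.4.7.10-->apiserver-->kubelet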


kubelet.kubeconfig specifies that the server endpoint must be 10.4.7.10:7443

5: kubectl in detail

kubectl accesses resources through the apiserver

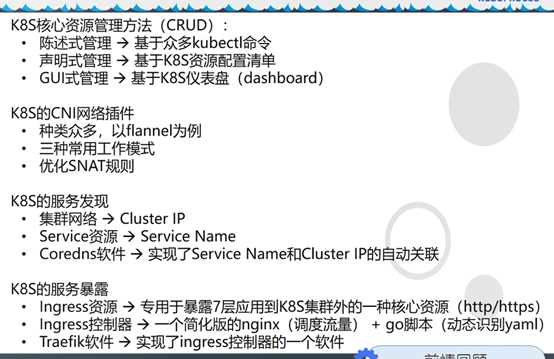

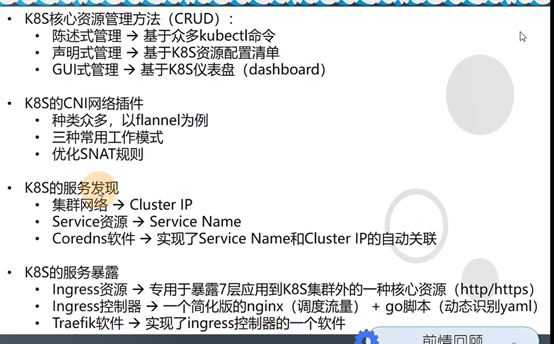

Three ways to manage the core K8s resources

The 4 core resources: pod, pod controller, service, ingress

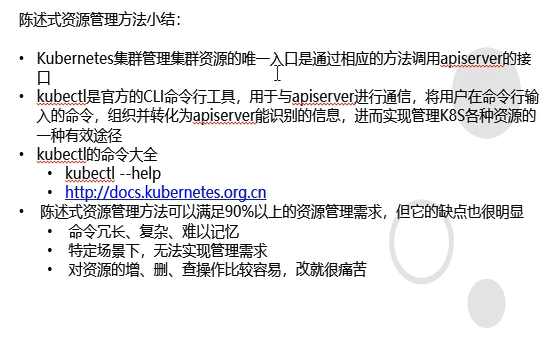

5.1: Imperative resource management

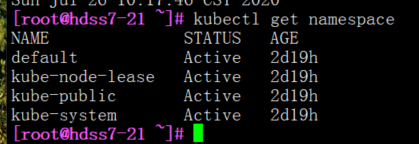

5.1.0: The namespace resource

Get the namespaces

# List the namespaces

[root@hdss7-21 ~]# kubectl get namespace

[root@hdss7-21 ~]# kubectl get ns

# Most of the above are system namespaces

# Show all resources in the default namespace

[root@hdss7-21 ~]# kubectl get all [-n default]

# A daemonset is a pod controller

Create a namespace

[root@hdss7-21 ~]# kubectl create namespace app

[root@hdss7-21 ~]# kubectl get namespaces

Delete a namespace

[root@hdss7-21 ~]# kubectl delete namespace app

[root@hdss7-21 ~]# kubectl get namespaces

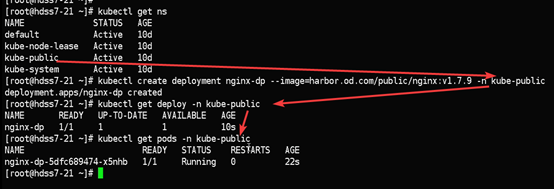

5.1.1: Managing the deployment resource

# Create a deployment

kubectl get deploy # uses the default namespace unless told otherwise

[root@hdss7-21 ~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.7.9 -n kube-public

# Show the pod controllers in the kube-public namespace

[root@hdss7-21 ~]# kubectl get deploy -n kube-public

[-o wide]

# Show the pods in the kube-public namespace

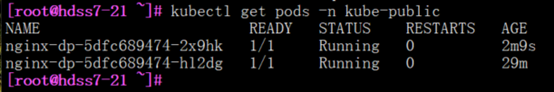

[root@hdss7-21 ~]# kubectl get pods -n kube-public

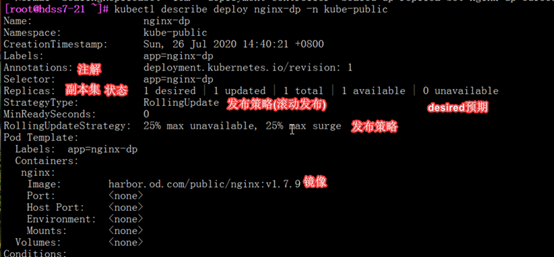

Show the details of a specific deployment

[root@hdss7-21 ~]# kubectl describe deploy nginx-dp -n kube-public

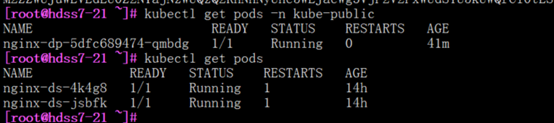

5.1.2: Viewing pod resources

If no namespace is specified, the default namespace is used

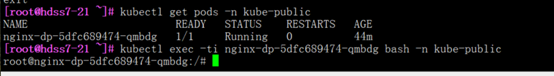

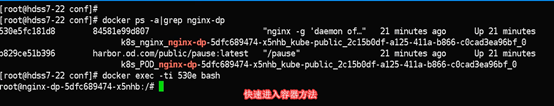

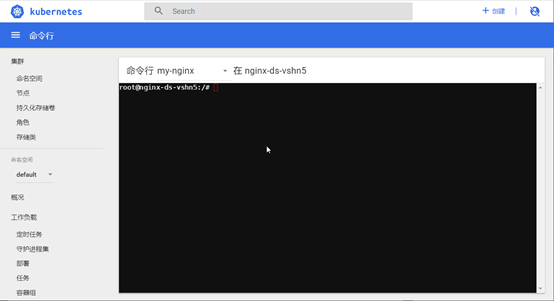

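Enter a pod

[root@hdss7-21 ~]# kubectl exec -ti nginx-dp-5dfc689474-qmbdg bash -n kube-public

# The difference between kubectl exec and docker exec: the former works across hosts, while the latter requires switching to the host where the container runs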

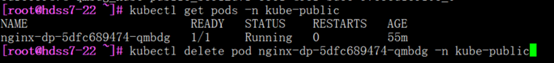

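Delete a pod

[root@hdss7-22 ~]# kubectl delete pod nginx-dp-5dfc689474-qmbdg -n kube-public

The options --force --grace-period=0 force the deletion

# Because this pod is owned by a deployment, the deployment brings up a new pod after the deletion, so deleting a pod is a good way to restart it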

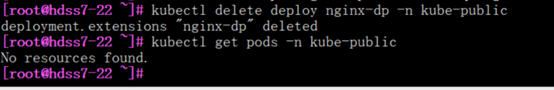

Delete the deployment controller

# After the deployment is deleted, its pods disappear

[root@hdss7-22 ~]# kubectl delete deploy nginx-dp -n kube-public

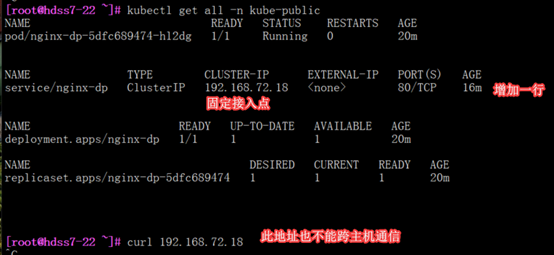

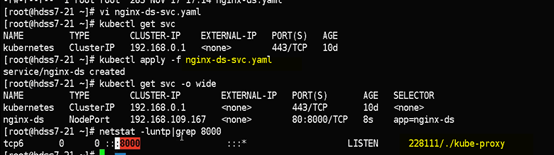

5.1.3: Managing the service resource

# Recreate the deployment

[root@hdss7-21 ~]# kubectl create deployment nginx-dp --image=harbor.od.com/public/nginx:v1.7.9 -n kube-public

Create a service

# Gives the pods a stable IP and port

[root@hdss7-22 ~]# kubectl expose deployment nginx-dp --port=80 -n kube-public

# Scale out the deployment

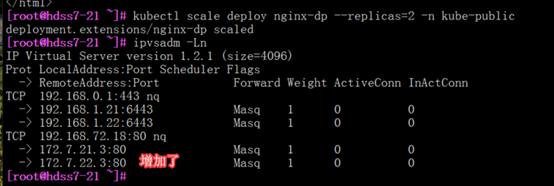

[root@hdss7-21 ~]# kubectl scale deploy nginx-dp --replicas=2 -n kube-public

deployment.extensions/nginx-dp scaled

# A service simply provides a stable access point

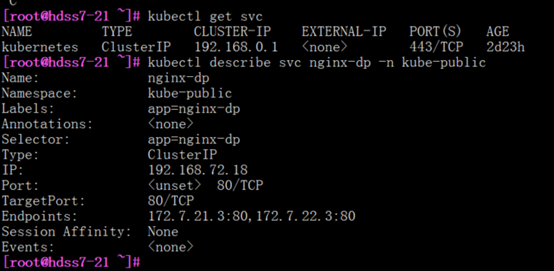

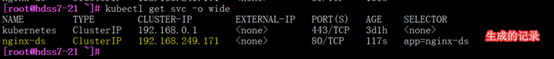

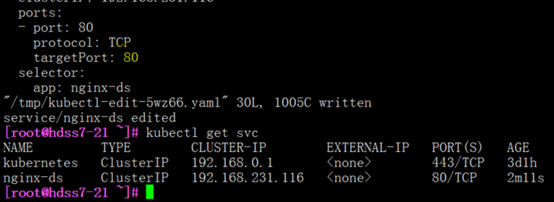

View the service

[root@hdss7-21 ~]# kubectl get svc

[root@hdss7-21 ~]# kubectl describe svc nginx-dp -n kube-public

5.1.4: Summary

Limitation of imperative resource management: modifications are hard to carry out

docs.kubernetes.org.cn: Chinese community documentation

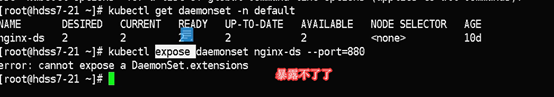

Limitations of kubectl

# A daemonset is a controller

[root@hdss7-22 ~]# kubectl get daemonset -n default

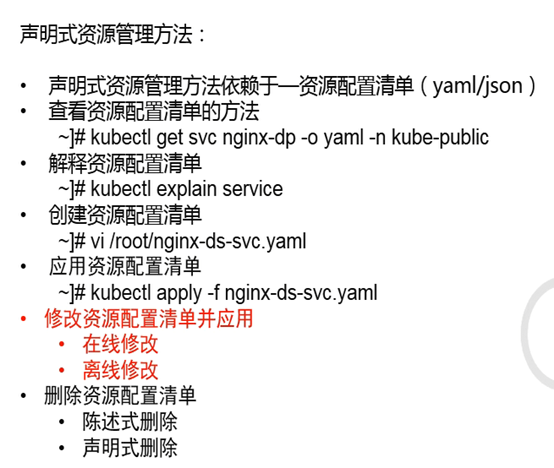

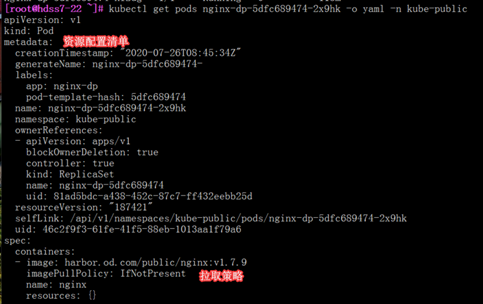

5.2: 声明式资源管理

yaml格式和json格式互通的(yaml看着更舒服)

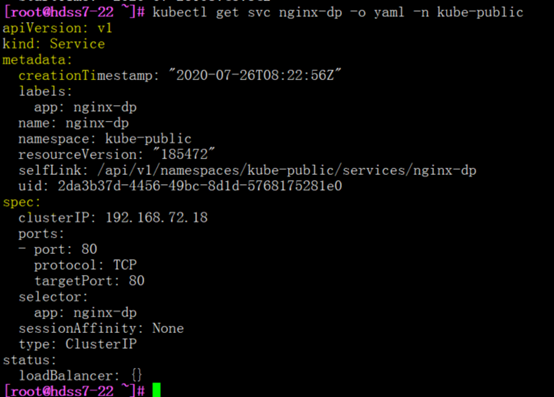

查看资源配置清单

[root@hdss7-22 ~]# kubectl get pods nginx-dp-5dfc689474-2x9hk -o yaml -n kube-public

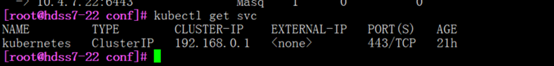

[root@hdss7-22 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 192.168.0.1 <none> 443/TCP 3d

[root@hdss7-22 ~]# kubectl get svc nginx-dp -o yaml -n kube-public

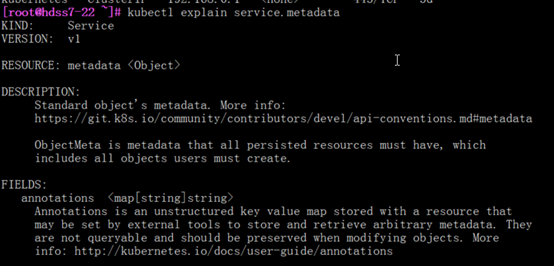

Get help with explain

[root@hdss7-22 ~]# kubectl explain service.metadata

Create a resource manifest

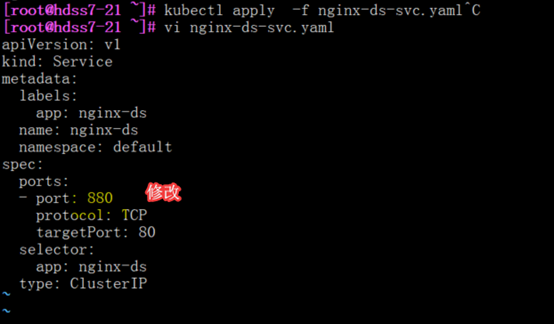

[root@hdss7-21 ~]# vi nginx-ds-svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-ds
  name: nginx-ds
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80 # maps to port 80 on the container (by number, not by port name)
  selector:
    app: nginx-ds
  type: ClusterIP

# Create the svc and view it

[root@hdss7-21 ~]# kubectl create -f nginx-ds-svc.yaml

[root@hdss7-21 ~]# kubectl get svc nginx-ds -o yaml

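Modify a resource manifest

Offline modification (recommended): edit the file, then apply it

[root@hdss7-21 ~]# kubectl apply -f nginx-ds-svc.yaml --force # --force must be used together with -f

Online modification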

[root@hdss7-21 ~]# kubectl edit svc nginx-ds

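Delete a resource

Imperative deletion

That is, directly delete the created resource

kubectl delete svc nginx-ds -n default

Declarative deletion

That is, specify the configuration file and delete the resources that were created from it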

kubectl delete -f nginx-ds-svc.yaml

Summary

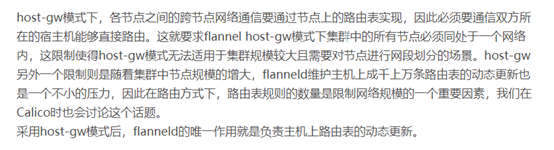

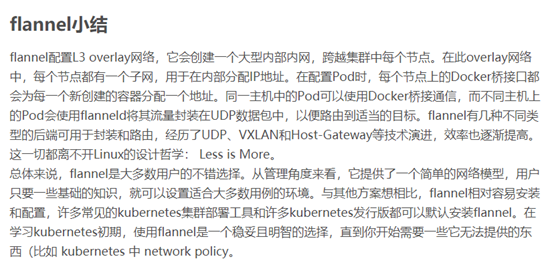

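6: The core K8s network plugin Flannel

Flannel also communicates with the apiserver

- It solves cross-host container communication

k8s defines the network model but leaves the implementation to CNI network plugins, whose main purpose is to let pods communicate across hosts

Common network plugins are flannel, calico, and canal; the simplest one, flannel, fully meets our needs, so the others are not considered here

Introduction to the Flannel network plugin: https://www.kubernetes.org.cn/3682.html

etcdctl get "" --prefix --keys-only | grep -Ev "^$" | grep "flannel"

Besides flannel, the keys under which Calico and Canal store their configuration data in etcd can be queried the same way

6.1: Installation and deployment

6.1.1: Preparation

Download the software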

wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

[root@hdss7-21 src]# mkdir /opt/flannel-v0.11.0

[root@hdss7-21 src]# tar xf flannel-v0.11.0-linux-amd64.tar.gz -C /opt/flannel-v0.11.0/

[root@hdss7-21 src]# ln -s /opt/flannel-v0.11.0/ /opt/flannel

Copy the certificates

# flannel talks to etcd, so it needs the client certificate, and of course the CA public certificate

scp hdss7-200:/opt/certs/ca.pem cert/

scp hdss7-200:/opt/certs/client.pem cert/

scp hdss7-200:/opt/certs/client-key.pem cert/

Configure the subnet information

cat >/opt/flannel/subnet.env <<EOF

Note: the subnet information must be changed on every host; 21 and 22 differ (configure it on both 21 and 22)
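The per-host difference follows the convention visible elsewhere in these notes: host 10.4.7.21 owns pod subnet 172.7.21.0/24 and host 10.4.7.22 owns 172.7.22.0/24. A small sketch of that mapping (the function name `pod_subnet_for` is hypothetical, and the mapping itself is inferred from the addresses in this document, not a flannel rule):

```shell
#!/bin/bash
# pod_subnet_for HOST_IP: map a host address 10.4.7.N to its pod subnet
# 172.7.N.0/24, following the addressing convention used in these notes.
pod_subnet_for() {
    local last="${1##*.}"      # last octet of the host IP
    echo "172.7.${last}.0/24"
}
```

For instance, `pod_subnet_for 10.4.7.22` prints the subnet that belongs in the configuration on hdss7-22.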

6.1.2: Start the flannel service

Create the flannel startup script

cat >/opt/flannel/flanneld.sh <<'EOF'

--etcd-endpoints=https://10.4.7.12:2379,https://10.4.7.21:2379,https://10.4.7.22:2379 \

--etcd-keyfile=./cert/client-key.pem \

--etcd-certfile=./cert/client.pem \

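Update etcd to add the host-gw mode

# flannel depends on information stored in etcd (writing it on one machine is enough)

# Write the network information into etcd; the following can be run on any etcd node

[root@hdss7-12 ~]# /opt/etcd/etcdctl member list # see which member is the leader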

[root@hdss7-12 ~]# /opt/etcd/etcdctl get /coreos.com/network/config

{"Network": "172.7.0.0/16", "Backend": {"Type": "host-gw"}}

Create the supervisor startup configuration

cat >/etc/supervisord.d/flannel.ini <<EOF

command=sh /opt/flannel/flanneld.sh

stdout_logfile=/data/logs/flanneld/flanneld.stdout.log

[root@hdss7-22 ~]# mkdir -p /data/logs/flanneld/

Start the flannel service and verify

[root@hdss7-21 flannel]# tail -fn 200 /data/logs/flanneld/flanneld.stdout.log

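Deploy host 22 the same way

6.1.3: flannel SNAT rule optimization

Background

We use the host-gw network model, which only creates route entries to the pod networks on the other hosts.

When host A accesses a pod on host B, the source IP should be host A's IP

When POD-A01 on host A accesses a pod on host B, the source IP should be the container IP of POD-A01

Think of it as devices on two switches with different subnets, under one router, communicating through that router (the gateway)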

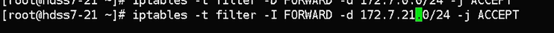

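Docker relies on iptables for network isolation and communication between containers

So although our K8s uses ipvs scheduling, there are still iptables rules on the hosts

Before a packet leaves the host, the rule first checks whether the outgoing device is the local docker0 device (the container network)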

[root@hdss7-21 ~]# iptables-save |grep -i postrouting|grep docker0

-A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

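Traffic in host-gw mode leaves through eth0 rather than docker0, so it is not excluded by this rule and gets masqueraded to the host IP

Modify this iptables rule by adding a destination condition, so that traffic destined for the pod network (172.7.0.0/16) is no longer masqueraded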
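The effect of the modified rule (`-s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE`) can be reasoned about with a small model. This is only an illustration of the three match conditions in bash arithmetic, not how the kernel evaluates rules; the helper names `ip2int`, `in_cidr`, and `would_masquerade` are made up.

```shell
#!/bin/bash
# ip2int A.B.C.D: convert a dotted IPv4 address to an integer.
ip2int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# in_cidr IP NET/PREFIX: succeed when IP lies inside the given network.
in_cidr() {
    local ip net prefix mask
    ip=$(ip2int "$1")
    net=$(ip2int "${2%/*}")
    prefix="${2#*/}"
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# would_masquerade SRC DST OUT_IF: succeed when the modified rule would match,
# i.e. source in 172.7.21.0/24, destination outside 172.7.0.0/16,
# and outgoing interface other than docker0.
would_masquerade() {
    in_cidr "$1" 172.7.21.0/24 && ! in_cidr "$2" 172.7.0.0/16 && [ "$3" != "docker0" ]
}
```

With this model, `would_masquerade 172.7.21.3 172.7.22.2 eth0` fails (pod-to-pod traffic keeps the container IP), while `would_masquerade 172.7.21.3 10.4.7.200 eth0` succeeds (traffic leaving the pod network is still SNATed).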

Reproducing the problem

Start a busybox container on host 7-21, enter it, and access 172.7.22.2

docker run --rm -it busybox sh

[root@hdss7-22 ~]# kubectl logs nginx-ds-j777c --tail=2

10.4.7.21 - - [xxx] "GET / HTTP/1.1" 200 612 "-" "curl/7.29.0" "-"

10.4.7.21 - - [xxx] "GET / HTTP/1.1" 200 612 "-" "Wget" "-"

The optimization step by step

[root@hdss7-21 ~]# iptables-save |grep -i postrouting|grep docker0

-A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

[root@hdss7-21 flannel]# yum install iptables-services -y

iptables -t nat -D POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

Masquerade only traffic whose source is 172.7.21.0/24, whose destination is not 172.7.0.0/16, and that does not leave through docker0:

iptables -t nat -I POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

[root@hdss7-21 bin]# iptables-save |grep -i reject

-A INPUT -j REJECT --reject-with icmp-host-prohibited # delete this rule

-A FORWARD -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-21 bin]# iptables -t filter -D INPUT -j REJECT --reject-with icmp-host-prohibited

[root@hdss7-21 bin]# iptables -t filter -D FORWARD -j REJECT --reject-with icmp-host-prohibited

# Verify the rule and save the configuration

[root@hdss7-21 ~]# iptables-save |grep -i postrouting|grep docker0

-A POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

[root@hdss7-21 ~]# iptables-save > /etc/sysconfig/iptables

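What to do after docker restarts

After the docker service restarts, it adds the original rule again, so remember to delete that rule after every docker restart

The change affects docker's own iptables chains, so the docker service needs to be restarted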

[root@hdss7-21 ~]# systemctl restart docker

[root@hdss7-21 ~]# iptables-save |grep -i postrouting|grep docker0

-A POSTROUTING -s 172.7.21.0/24 ! -o docker0 -j MASQUERADE

-A POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

# Either re-apply the saved rules with iptables-restore, or simply delete the rule again

[root@hdss7-21 ~]# iptables-restore /etc/sysconfig/iptables

[root@hdss7-21 ~]# iptables-save |grep -i postrouting|grep docker0

-A POSTROUTING -s 172.7.21.0/24 ! -d 172.7.0.0/16 ! -o docker0 -j MASQUERADE

Verify the result

[root@hdss7-21 ~]# docker run --rm -it busybox sh

[root@hdss7-22 ~]# kubectl logs nginx-ds-j777c --tail=1

172.7.21.3 - - [xxxx] "GET / HTTP/1.1" 200 612 "-" "Wget" "-"

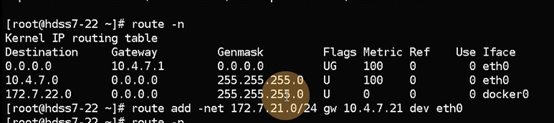

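6.2: Implementing flannel manually

1. Set up route forwarding on each node

# On node 22, add a route for 172.7.21.0/24 that points to host 21; if there are other nodes, add the corresponding route on every node

2. If the firewall has been enabled, add rules that allow the traffic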
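The routes from step 1 can be generated rather than typed per node. A sketch under two assumptions taken from these notes: each host 10.4.7.N owns pod subnet 172.7.N.0/24, and the host interface is eth0. The function name `print_peer_routes` is hypothetical; it only prints the `ip route add` commands so they can be reviewed before running them.

```shell
#!/bin/bash
# print_peer_routes SELF_IP NODE_IP...: print one `ip route add` command for
# every node other than SELF_IP, routing that node's pod subnet via its host IP.
print_peer_routes() {
    local self="$1" peer
    shift
    for peer in "$@"; do
        [ "$peer" = "$self" ] && continue     # no route needed for ourselves
        echo "ip route add 172.7.${peer##*.}.0/24 via ${peer} dev eth0"
    done
}
```

On node 22, `print_peer_routes 10.4.7.22 10.4.7.21 10.4.7.22` prints the single route toward host 21 described in the comment above.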

7: K8s service discovery with coredns

7.1: Install and deploy coredns

Add the DNS record

[root@hdss7-11 ~]# vi /var/named/od.com.zone

[root@hdss7-11 ~]# systemctl restart named

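[root@hdss7-11 ~]# dig -t A k8s-yaml.od.com @10.4.7.11 +short # resolves without problems

Configure nginx on host 200

On host 200, configure an nginx virtual host to provide a single access point for the k8s resource manifests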

[root@hdss7-200 conf.d]# cat k8s-yaml.od.com.conf

[root@hdss7-200 conf.d]# mkdir -p /data/k8s-yaml

[root@hdss7-200 conf.d]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

[root@hdss7-200 conf.d]# nginx -s reload

[root@hdss7-200 conf.d]# cd /data/k8s-yaml/

[root@hdss7-200 k8s-yaml]# mkdir coredns

Deploy coredns (docker)

https://github.com/coredns/coredns

coredns_1.6.5_linux_amd64.tgz # this is the binary installation package

[root@hdss7-200 k8s-yaml]# cd coredns/

[root@hdss7-200 coredns]# pwd

/data/k8s-yaml/coredns

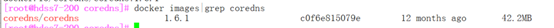

[root@hdss7-200 coredns]# docker pull docker.io/coredns/coredns:1.6.1

[root@hdss7-200 coredns]# docker tag c0f6e815079e harbor.od.com/public/coredns:v1.6.1

# Push it to the harbor registry

[root@hdss7-200 coredns]# docker push harbor.od.com/public/coredns:v1.6.1

7.2: Prepare the resource manifests

On host 200

[root@hdss7-200 coredns]# pwd

/data/k8s-yaml/coredns

There are reference manifests on GitHub

RBAC cluster permission manifest

RBAC permission resources

cat >/data/k8s-yaml/coredns/rbac.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
EOF

ConfigMap manifest

It carries the coredns configuration

cat >/data/k8s-yaml/coredns/cm.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        log
        health
        ready
        kubernetes cluster.local 192.168.0.0/16 # cluster address range of the service resources
        forward . 10.4.7.11 # upstream DNS address
        cache 30
        loop
        reload
        loadbalance
    }
EOF

Deployment controller manifest

# It names the image to pull (from the harbor registry) and creates the pod

cat >/data/k8s-yaml/coredns/dp.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system # namespace
  labels:
    k8s-app: coredns
    kubernetes.io/name: "CoreDNS"
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      containers:
      - name: coredns
        image: harbor.od.com/public/coredns:v1.6.1
        args:
        - -conf
        - /etc/coredns/Corefile
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
      dnsPolicy: Default
      volumes:
      - name: config-volume
        configMap:
          name: coredns
          items:
          - key: Corefile
            path: Corefile
EOF

Service manifest

It exposes the ports

cat >/data/k8s-yaml/coredns/svc.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 192.168.0.2 # cluster DNS address
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
  - name: metrics
    port: 9153
    protocol: TCP
EOF

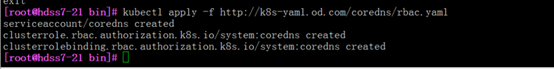

Apply the resource manifests

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/coredns/rbac.yaml

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/coredns/cm.yaml

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/coredns/dp.yaml

[root@hdss7-21 bin]# kubectl apply -f http://k8s-yaml.od.com/coredns/svc.yaml

# To delete, give the same full path that was used at creation

# Check the status

[root@hdss7-21 bin]# kubectl get all -n kube-system -o wide

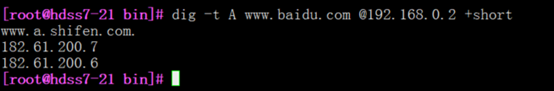

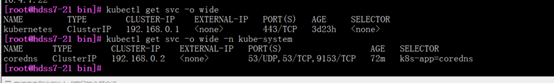

7.3: coredns原理

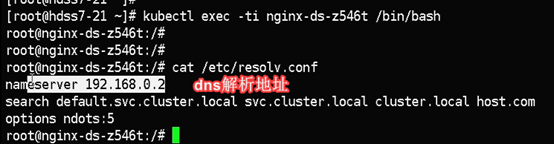

Kublet指定的集群Dns地址

使用coredns解析

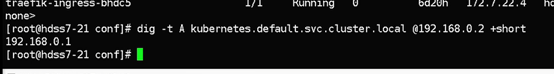

[root@hdss7-21 bin]# dig -t A www.baidu.com @192.168.0.2 +short

Coredns的上层dns是10.4.7.11

(资源配置清单中制定了)

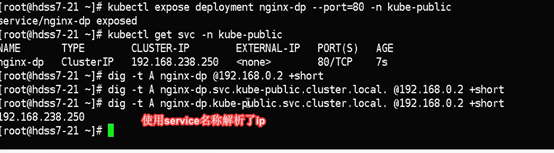

Service名称与ip建立联系

给pod创建一个service

kubectl expose deployment nginx-dp --port=80 -n kube-public

~]# kubectl -n kube-public get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-dp ClusterIP 192.168.63.255 <none> 80/TCP 11s

Verify that it resolves

~]# dig -t A nginx-dp @192.168.0.2 +short

# Nothing is returned; can it not be resolved?

# The full domain name is required: <service>.<namespace>.svc.cluster.local.

~]# dig -t A nginx-dp.kube-public.svc.cluster.local. @192.168.0.2 +short

192.168.63.255
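The naming pattern above is mechanical, so the full name can be built from the service and namespace. A tiny sketch; the function name `svc_fqdn` is made up, and `cluster.local` is the cluster domain configured for kubelet in these notes:

```shell
#!/bin/bash
# svc_fqdn SERVICE [NAMESPACE] [CLUSTER_DOMAIN]: print the absolute DNS name
# of a service; namespace defaults to "default", domain to "cluster.local".
svc_fqdn() {
    echo "${1}.${2:-default}.svc.${3:-cluster.local}."
}
```

Usage: `dig -t A "$(svc_fqdn nginx-dp kube-public)" @192.168.0.2 +short`.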

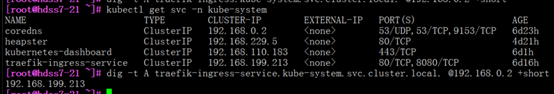

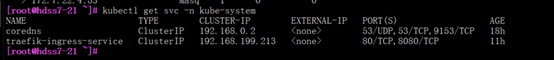

[root@hdss7-21 ~]# dig -t A traefik-ingress-service.kube-system.svc.cluster.local. @192.168.0.2 +short

192.168.199.213

# No record was added by hand, yet the IPs of our Service resources (nginx-dp, for example) already resolve.

Verify again from inside a pod

~]# kubectl -n kube-public exec -it nginx-dp-568f8dc55-rxvx2 -- /bin/bash

-qjwmz:/# apt update && apt install curl

-qjwmz:/# ping nginx-dp

PING nginx-dp.kube-public.svc.cluster.local (192.168.191.232): 56 data bytes

64 bytes from 192.168.191.232: icmp_seq=0 ttl=64 time=0.184 ms

64 bytes from 192.168.191.232: icmp_seq=1 ttl=64 time=0.225 ms

Why is the full domain name not needed inside the container?

-qjwmz:/# cat /etc/resolv.conf

nameserver 192.168.0.2

search kube-public.svc.cluster.local svc.cluster.local cluster.local host.com

options ndots:5

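Inside the pod, the DNS server is the CoreDNS address, and the search list already contains kube-public.svc.cluster.local, so the short name nginx-dp is expanded to the full name automatically.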
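To see why the short name works, it helps to list the names a resolver tries. The sketch below follows the usual resolver behavior for `search` and `ndots` (names with fewer dots than `ndots` go through the search list first); the function name `candidates` is hypothetical and the code only prints names, it performs no lookups.

```shell
# Print, in order, the names a resolver would try for a lookup, given the
# ndots value and the search list from /etc/resolv.conf.
# candidates is a hypothetical helper for illustration only.
candidates() {
  name=$1
  ndots=$2
  shift 2                                  # remaining arguments: search domains
  case $name in
    *.) printf '%s\n' "$name"; return ;;   # trailing dot: already absolute
  esac
  # Count the dots in the name.
  dots=$(( $(printf '%s' "$name" | tr -cd '.' | wc -c) ))
  if [ "$dots" -ge "$ndots" ]; then
    printf '%s.\n' "$name"                 # enough dots: try the name as-is first
  fi
  for domain in "$@"; do
    printf '%s.%s.\n' "$name" "$domain"
  done
  if [ "$dots" -lt "$ndots" ]; then
    printf '%s.\n' "$name"                 # too few dots: the bare name comes last
  fi
}

# Usage with the values from the resolv.conf above:
#   candidates nginx-dp 5 kube-public.svc.cluster.local svc.cluster.local cluster.local host.com
```

With the values shown above, the first candidate is nginx-dp.kube-public.svc.cluster.local., which is the name CoreDNS answers for.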

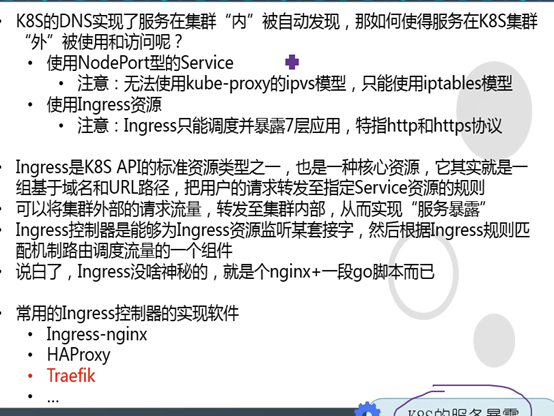

8. Service exposure

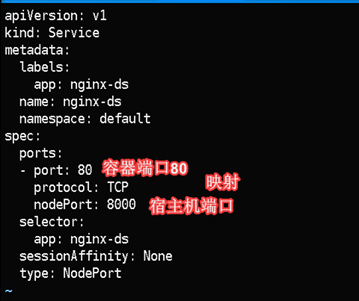

8.1: Using a NodePort Service

Based on iptables

A NodePort Service works much like port mapping: a port inside the container is mapped to a port on the host.

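For this NodePort demo the cluster does not use ipvs scheduling; it uses iptables, which only offers rr-style balancing. (kube-proxy's ipvs mode can also serve NodePort Services, so this is a choice made for this demo, not a hard limit.)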

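Remove the ipvs rules and use iptables rules

Run this on both the 21 and 22 machines

vi nginx-ds-svc.yaml

In the manifest, the container port is mapped to a fixed port on the host

kubectl apply -f nginx-ds-svc.yaml

How the exposure works

What gets exposed is the Service; the pods, whose IPs are not fixed, are then reached through the Service.

Port 8000 on every host is forwarded to port 80 of the Service. The Service stands for a group of pods and forwards from its port 80 to port 80 of a container.

Pod IPs keep changing. The selector acts like a group name: each time, the current pod IPs are found through that group name.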
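The 8000 → 80 → 80 chain described above maps onto three fields of a NodePort Service. The notes do not show the contents of nginx-ds-svc.yaml, so the following is only a sketch: the function name `nodeport_svc`, the Service name, and the `app` selector label are assumptions and must be adapted to the labels on your own pods.

```shell
# Print a NodePort Service manifest.
# nodeport_svc, the Service name and the "app" label are hypothetical;
# the three port numbers follow the 8000 -> 80 -> 80 chain in the text.
nodeport_svc() {
  name=$1
  app=$2
  node_port=${3:-8000}
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $name
spec:
  type: NodePort
  selector:
    app: $app
  ports:
  - protocol: TCP
    port: 80                # Service port
    targetPort: 80          # container port
    nodePort: $node_port    # port opened on every node
EOF
}

# Usage:
#   nodeport_svc nginx-ds nginx-ds > nginx-ds-svc.yaml
```

Kubernetes allocates node ports from 30000-32767 by default, so a value such as 8000 is only accepted if the API server was started with a wider `--service-node-port-range`.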

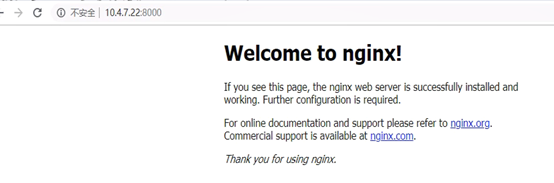

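Open 10.4.7.21:8000 in a browser to reach nginx

Open 10.4.7.22:8000 in a browser to reach nginx

Then put a load balancer in front

# This is a container service in k8s, now reachable from outside the cluster

With Traefik there is no such per-Service port mapping

Roughly, the difference between Ingress and NodePort is that Ingress goes through a load balancer, while NodePort is iptables port forwarding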

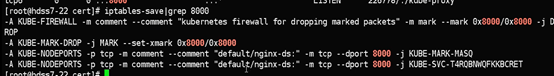

Inspect the iptables rules

# It is simply port forwarding

8.2: Ingress (Traefik)

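Traefik runs as a container and serves as the carrier of Ingress resources, that is, the ingress controller

Software that implements ingress: HAProxy, ingress-nginx, Traefik

Deploying Traefik

1. It can be thought of as a simplified nginx

2. An Ingress controller is a component that listens on a socket on behalf of Ingress resources and routes traffic according to the matching rules of each Ingress

3. It works only at layer 7. Exposing HTTP is recommended; for HTTPS, the front-end nginx can handle certificate offloading

4. The ingress controller used here is Traefik

5. Traefik comes with a web management UI

6. It can run as a container or be installed as a binary on the host

# Download location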

https://github.com/containous/traefik

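# Pull the image and push it to the registry

As before, first pull the docker image and create the manifests on 7.200, then apply the manifests from any master node

docker pull traefik:v1.7.2-alpine   # if the pull fails, retry a few times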

docker tag traefik:v1.7.2-alpine harbor.od.com/public/traefik:v1.7.2

docker push harbor.od.com/public/traefik:v1.7.2

Prepare the manifests

Reference manifests on GitHub

https://github.com/containous/traefik/tree/v1.5/examples/k8s

RBAC manifest

cat >/data/k8s-yaml/traefik/rbac.yaml <<EOF
apiVersion: v1
kind: ServiceAccount                  # declares a service account
metadata:
  name: traefik-ingress-controller    # service account name
  namespace: kube-system              # lives in the kube-system namespace
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole                     # declares a cluster role
metadata:
  name: traefik-ingress-controller    # cluster role name
rules:                                # API groups, resources, and the verbs allowed on them
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding              # binds the cluster role to the service account
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller    # binding name
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole                   # the cluster role
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount                # the service account
  name: traefik-ingress-controller
  namespace: kube-system
EOF

# The ClusterRoleBinding ties the role to the service account
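Once the binding exists, the grant can be checked by impersonating the service account. RBAC identifies a service account by the user name `system:serviceaccount:<namespace>:<name>`; the helper below, whose name `sa_subject` is made up for illustration, builds that string for use with `kubectl auth can-i`.

```shell
# Build the RBAC user name of a service account.
# sa_subject is a hypothetical helper; the name format itself is the one
# Kubernetes uses for service accounts.
sa_subject() {
  ns=$1
  name=$2
  printf 'system:serviceaccount:%s:%s\n' "$ns" "$name"
}

# Usage (needs kubectl and a live cluster):
#   kubectl auth can-i list ingresses --as="$(sa_subject kube-system traefik-ingress-controller)"
#   kubectl auth can-i delete pods    --as="$(sa_subject kube-system traefik-ingress-controller)"
```

With the ClusterRole above, the first check should be allowed and the second denied, since the role grants only get, list and watch on a few resources.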

DaemonSet manifest

cat >/data/k8s-yaml/traefik/ds.yaml <<EOF
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
    spec:
      serviceAccountName: traefik-ingress-controller   # the service account declared in rbac.yaml
      terminationGracePeriodSeconds: 60
      containers:
      - image: harbor.od.com/public/traefik:v1.7.2
        name: traefik-ingress
        ports:
        - name: controller          # ingress controller
          containerPort: 80         # traefik port
          hostPort: 81              # port on the host
        - name: admin-web           # two ports, told apart by name
          containerPort: 8080       # web UI port
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
        - --insecureskipverify=true
        - --kubernetes.endpoint=https://10.4.7.10:7443   # address used to reach the apiserver
        - --accesslog
        - --accesslog.filepath=/var/log/traefik_access.log
        - --traefiklog
        - --traefiklog.filepath=/var/log/traefik.log
        - --metrics.prometheus
EOF

Service manifest

The Service acts as a proxy that forwards traffic to the pods behind it

cat >/data/k8s-yaml/traefik/svc.yaml <<EOF
kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service   # the proxy_pass target
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress
  ports:
    - protocol: TCP
      port: 80
      name: controller
    - protocol: TCP
      port: 8080
      name: admin-web   # the name tells it apart from port 80; targetPort defaults to port, so this reaches containerPort 8080 (targetPort may also be set to a container port name)
EOF

Ingress manifest (comparable to an nginx virtual-host configuration); it exposes the Service

cat >/data/k8s-yaml/traefik/ingress.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui   # top-level unique name; the Service sits below it
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefik.od.com   # like nginx server_name
    http:
      paths:
      - path: /            # like nginx location
        backend:
          serviceName: traefik-ingress-service   # like proxy_pass; the Service is looked up by name in the same namespace
          servicePort: 8080                       # forward to port 8080 of the Service
EOF

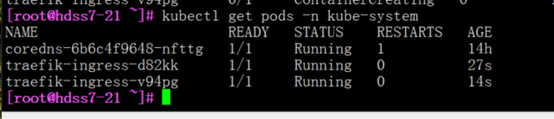

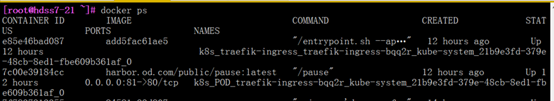

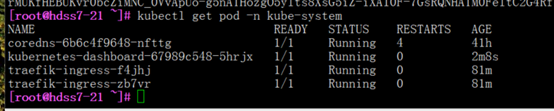

Apply the manifests

kubectl create -f http://k8s-yaml.od.com/traefik/rbac.yaml

kubectl create -f http://k8s-yaml.od.com/traefik/ds.yaml

kubectl create -f http://k8s-yaml.od.com/traefik/svc.yaml

kubectl create -f http://k8s-yaml.od.com/traefik/ingress.yaml

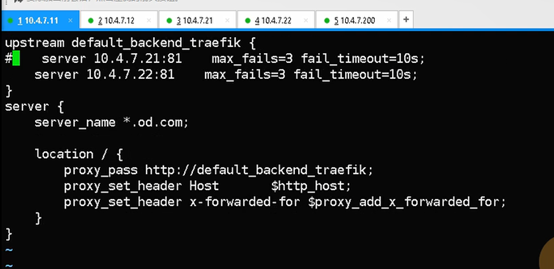

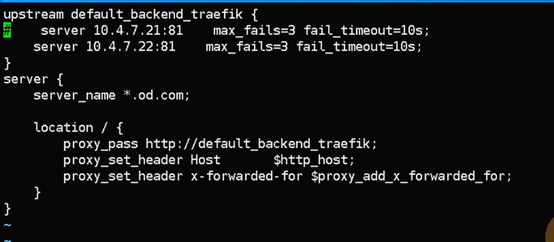

Set up a reverse proxy on the front-end nginx

On both 7.11 and 7.12, configure a reverse proxy that forwards all requests for the wildcard domain to traefik

cat >/etc/nginx/conf.d/od.com.conf <<'EOF'
upstream default_backend_traefik {
    server 10.4.7.21:81    max_fails=3 fail_timeout=10s;
    server 10.4.7.22:81    max_fails=3 fail_timeout=10s;
}
server {
    server_name *.od.com;   # traffic for this wildcard domain goes to the ingress
    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}
EOF

# Check the configuration, then reload nginx

nginx -t

nginx -s reload
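Which host names end up at traefik depends on the wildcard `*.od.com`. In nginx such a name matches any name ending in `.od.com`, including deeper subdomains, but not the bare `od.com`. The sketch below approximates that rule with a shell pattern; the function name `matches_od_wildcard` is hypothetical and nothing here talks to nginx.

```shell
# Approximate nginx's "*.od.com" server_name match with a shell pattern.
# matches_od_wildcard is a hypothetical helper for illustration only.
matches_od_wildcard() {
  case $1 in
    *.od.com) echo yes ;;   # traefik.od.com, a.b.od.com, ...
    *)        echo no ;;    # od.com itself and unrelated names
  esac
}

# Usage:
#   matches_od_wildcard traefik.od.com
#   matches_od_wildcard od.com
```

Names that do not match are handled by whichever other server block applies, so a new service only needs a DNS record under od.com and an Ingress rule to become reachable.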

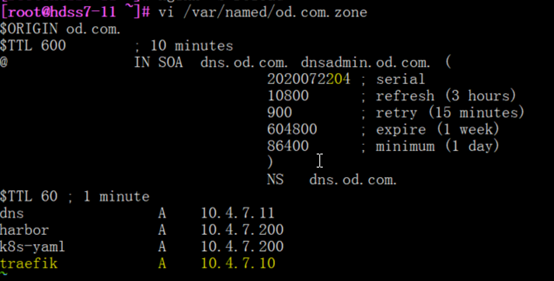

Add the domain record in bind9

On host 11, add a record for the traefik service to DNS. Note that it points to the VIP


vi /var/named/od.com.zone

........

traefik A 10.4.7.10

Remember to increment the serial number
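Secondary servers only pick up a changed zone when its serial has increased, which is why the serial must be bumped on every edit. A minimal sketch of the bump, assuming the serial is a plain integer; the function name `next_serial` and the sample value are hypothetical.

```shell
# Return the next zone serial. next_serial is a hypothetical helper and
# assumes the serial is a plain decimal integer.
next_serial() {
  echo $(( $1 + 1 ))
}

# Usage (2020010501 is a made-up serial):
#   next_serial 2020010501
```

Before restarting named, the edited zone file can be checked with `named-checkzone od.com /var/named/od.com.zone`.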

Restart the named service

systemctl restart named

# Verify the result with dig

[root@hdss7-11 ~]# dig -t A traefik.od.com +short

10.4.7.10

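How a user request reaches a pod

Host 10.4.7.21:81 → mapped to 192.168.x.x:80 (the ingress; traefik runs next to the pod's pause container, sidecar style)

192.168.x.x:80 (ingress) → follows the matching Ingress manifest to the Service named in serviceName → the Service load-balances (nq, rr algorithms) to the backend pods → the pods belong to the Deployment

The Service port should be the same as the pod port.

Summary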

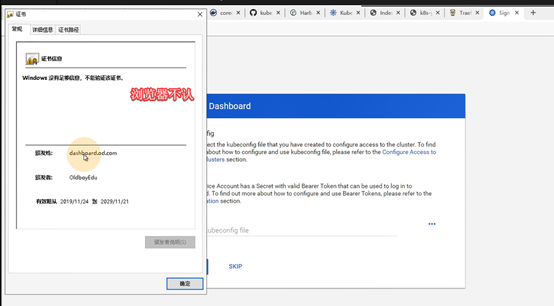

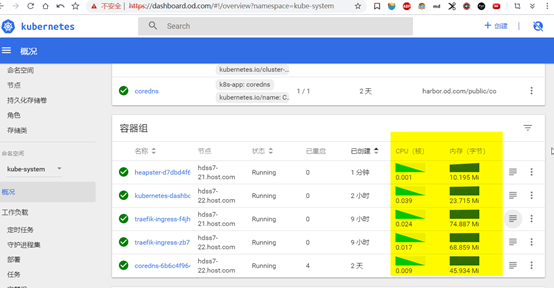

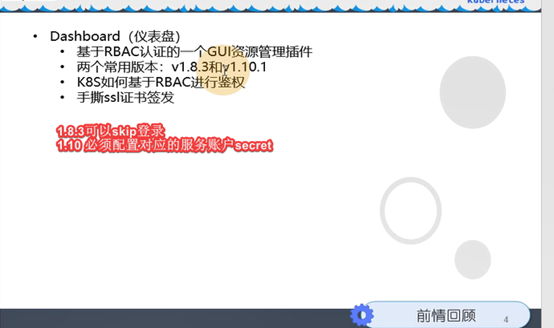

9. Web management for K8s: dashboard

9.1: Deploying dashboard

9.1.1: Getting the dashboard image

Pulling images and creating manifests follow the usual routine: do both on 7.200

Get dashboard version 1.8.3

docker pull k8scn/kubernetes-dashboard-amd64:v1.8.3

docker tag k8scn/kubernetes-dashboard-amd64:v1.8.3 harbor.od.com/public/dashboard:v1.8.3

docker push harbor.od.com/public/dashboard:v1.8.3

Get dashboard version 1.10.1

docker pull loveone/kubernetes-dashboard-amd64:v1.10.1

docker tag loveone/kubernetes-dashboard-amd64:v1.10.1 harbor.od.com/public/dashboard:v1.10.1

docker push harbor.od.com/public/dashboard:v1.10.1

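Why two versions of dashboard

Version 1.8.3 has lax authorization, which is convenient for learning

Version 1.10.1 has strict authorization, which is more hassle for learning but needed in production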

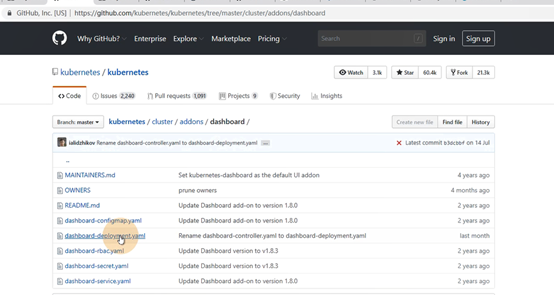

9.1.2: Creating the dashboard manifests

mkdir -p /data/k8s-yaml/dashboard

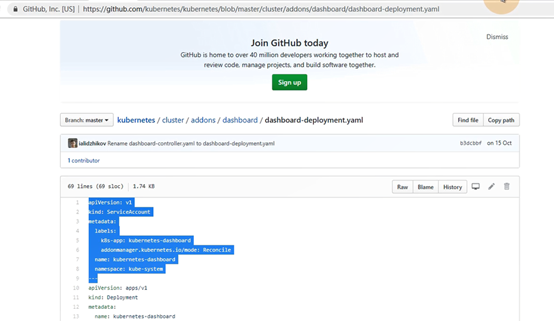

The reference manifests are in the kubernetes repository on GitHub

Create the RBAC manifest

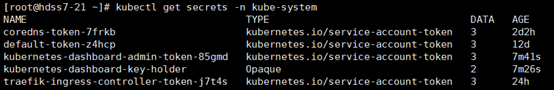

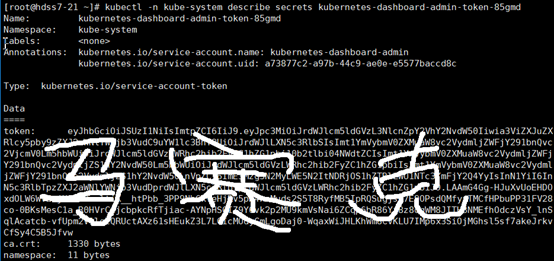

cat >/data/k8s-yaml/dashboard/rbac.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:                      # the role being referenced
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin         # built-in cluster role
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system
EOF

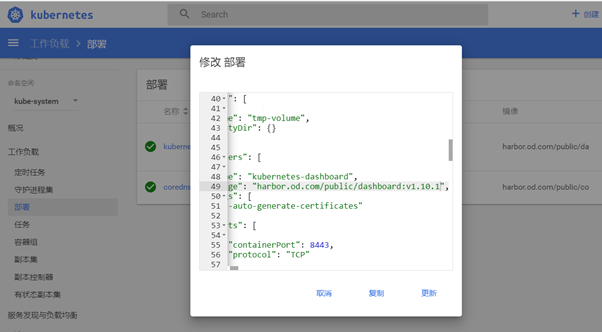

Create the Deployment manifest

cat >/data/k8s-yaml/dashboard/dp.yaml <<EOF

apiVersion: apps/v1

kind: Deployment

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

selector:

matchLabels: