Getting Started with Lucene

Preparation

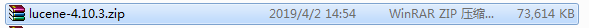

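Lucene can be downloaded from the official website. I have already downloaded it; the version used here is 4.10.3.

After unpacking the archive, the files are:

If you are not using Maven, add these three jars to the classpath to get Lucene's functionality: lucene-core, lucene-analyzers-common and lucene-queryparser (the same three Lucene artifacts that are declared in the POM below).

For my tests I used a MySQL database, so MySQL is also needed to follow along.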

Create the database

```sql
create database lucene default character set utf8;
use lucene;

create table `book` (
  `id` int(11),
  `name` varchar(192),
  `price` float,
  `pic` varchar(96),
  `description` text
);

insert into `book` (`id`, `name`, `price`, `pic`, `description`) values('1','java 编程思想','71.5','23488292934.jpg','作者简介 Bruce Eckel,是MindView公司的总裁,该公司向客户提供软件咨询和培训。他是C++标准委员会拥有表决权的成员之一,拥有应用物理学学士和计算机工程硕士学位。除本书外,他还是《C++编程思想》的作者,并与人合著了《C++编程思想第2卷》。\r\n\r\n《计算机科学丛书:Java编程思想(第4版)》赢得了全球程序员的广泛赞誉,即使是最晦涩的概念,在BruceEckel的文字亲和力和小而直接的编程示例面前也会化解于无形。从Java的基础语法到最高级特性(深入的面向对象概念、多线程、自动项目构建、单元测试和调试等),本书都能逐步指导你轻松掌握。\r\n 从《计算机科学丛书:Java编程思想(第4版)》获得的各项大奖以及来自世界各地的读者评论中,不难看出这是一本经典之作。本书的作者拥有多年教学经验,对C、C++以及Java语言都有独到、深入的见解,以通俗易懂及小而直接的示例解释了一个个晦涩抽象的概念。本书共22章,包括操作符、控制执行流程、访问权限控制、复用类、多态、接口、通过异常处理错误、字符串、泛型、数组、容器深入研究、JavaI/O系统、枚举类型、并发以及图形化用户界面等内容。这些丰富的内容,包含了Java语言基础语法以及高级特性,适合各个层次的Java程序员阅读,同时也是高等院校讲授面向对象程序设计语言以及Java语言的绝佳教材和参考书。\r\n 《计算机科学丛书:Java编程思想(第4版)》特点:\r\n 适合初学者与专业人员的经典的面向对象叙述方式,为更新的JavaSE5/6增加了新的示例和章节。\r\n 测验框架显示程序输出。\r\n 设计模式贯穿于众多示例中:适配器、桥接器、职责链、命令、装饰器、外观、工厂方法、享元、点名、数据传输对象、空对象、代理、单例、状态、策略、模板方法以及访问者。\r\n 为数据传输引入了XML,为用户界面引入了SWT和Flash。\r\n 重新撰写了有关并发的章节,有助于读者掌握线程的相关知识。\r\n 专门为第4版以及JavaSE5/6重写了700多个编译文件中的500多个程序。\r\n 支持网站包含了所有源代码、带注解的解决方案指南、网络日志以及多媒体学习资料。\r\n 覆盖了所有基础知识,同时论述了高级特性。\r\n 详细地阐述了面向对象原理。\r\n 在线可获得Java讲座CD,其中包含BruceEckel的全部多媒体讲座。\r\n 在网站上可以观看现场讲座、咨询和评论。\r\n 专门为第4版以及JavaSE5/6重写了700多个编译文件中的500多个程序。\r\n 支持网站包含了所有源代码、带注解的解决方案指南、网络日志以及多媒体学习资料。\r\n 覆盖了所有基础知识,同时论述了高级特性。\r\n 详细地阐述了面向对象原理。\r\n\r\n\r\n');

insert into `book` (`id`, `name`, `price`, `pic`, `description`) values('2','apache lucene','66.0','77373773737.jpg','lucene是apache的开源项目,是一个全文检索的工具包。\r\n# Apache Lucene README file\r\n\r\n## Introduction\r\n\r\nLucene is a Java full-text search engine. Lucene is not a complete\r\napplication, but rather a code library and API that can easily be used\r\nto add search capabilities to applications.\r\n\r\n * The Lucene web site is at: http://lucene.apache.org/\r\n * Please join the Lucene-User mailing list by sending a message to:\r\n java-user-subscribe@lucene.apache.org\r\n\r\n## Files in a binary distribution\r\n\r\nFiles are organized by module, for example in core/:\r\n\r\n* `core/lucene-core-XX.jar`:\r\n The compiled core Lucene library.\r\n\r\nTo review the documentation, read the main documentation page, located at:\r\n`docs/index.html`\r\n\r\nTo build Lucene or its documentation for a source distribution, see BUILD.txt');

insert into `book` (`id`, `name`, `price`, `pic`, `description`) values('3','mybatis','55.0','88272828282.jpg','MyBatis介绍\r\n\r\nMyBatis 本是apache的一个开源项目iBatis, 2010年这个项目由apache software foundation 迁移到了google code,并且改名为MyBatis。 \r\nMyBatis是一个优秀的持久层框架,它对jdbc的操作数据库的过程进行封装,使开发者只需要关注 SQL 本身,而不需要花费精力去处理例如注册驱动、创建connection、创建statement、手动设置参数、结果集检索等jdbc繁杂的过程代码。\r\nMybatis通过xml或注解的方式将要执行的statement配置起来,并通过java对象和statement中的sql进行映射生成最终执行的sql语句,最后由mybatis框架执行sql并将结果映射成java对象并返回。\r\n');

insert into `book` (`id`, `name`, `price`, `pic`, `description`) values('4','spring','56.0','83938383222.jpg','## Spring Framework\r\nspringmvc.txt\r\nThe Spring Framework provides a comprehensive programming and configuration model for modern\r\nJava-based enterprise applications - on any kind of deployment platform. A key element of Spring is\r\ninfrastructural support at the application level: Spring focuses on the \"plumbing\" of enterprise\r\napplications so that teams can focus on application-level business logic, without unnecessary ties\r\nto specific deployment environments.\r\n\r\nThe framework also serves as the foundation for\r\n[Spring Integration](https://github.com/SpringSource/spring-integration),\r\n[Spring Batch](https://github.com/SpringSource/spring-batch) and the rest of the Spring\r\n[family of projects](http://springsource.org/projects). Browse the repositories under the\r\n[SpringSource organization](https://github.com/SpringSource) on GitHub for a full list.\r\n\r\n[.NET](https://github.com/SpringSource/spring-net) and\r\n[Python](https://github.com/SpringSource/spring-python) variants are available as well.\r\n\r\n## Downloading artifacts\r\nInstructions on\r\n[downloading Spring artifacts](https://github.com/SpringSource/spring-framework/wiki/Downloading-Spring-artifacts)\r\nvia Maven and other build systems are available via the project wiki.\r\n\r\n## Documentation\r\nSee the current [Javadoc](http://static.springsource.org/spring-framework/docs/current/api)\r\nand [Reference docs](http://static.springsource.org/spring-framework/docs/current/reference).\r\n\r\n## Getting support\r\nCheck out the [Spring forums](http://forum.springsource.org) and the\r\n[Spring tag](http://stackoverflow.com/questions/tagged/spring) on StackOverflow.\r\n[Commercial support](http://springsource.com/support/springsupport) is available too.\r\n\r\n## Issue Tracking\r\nSpring\'s JIRA issue tracker can be found [here](http://jira.springsource.org/browse/SPR). Think\r\nyou\'ve found a bug? Please consider submitting a reproduction project via the\r\n[spring-framework-issues](https://github.com/springsource/spring-framework-issues) repository. The\r\n[readme](https://github.com/springsource/spring-framework-issues#readme) provides simple\r\nstep-by-step instructions.\r\n\r\n## Building from source\r\nInstructions on\r\n[building Spring from source](https://github.com/SpringSource/spring-framework/wiki/Building-from-source)\r\nare available via the project wiki.\r\n\r\n## Contributing\r\n[Pull requests](http://help.github.com/send-pull-requests) are welcome; you\'ll be asked to sign our\r\ncontributor license agreement ([CLA](https://support.springsource.com/spring_committer_signup)).\r\nTrivial changes like typo fixes are especially appreciated (just\r\n[fork and edit!](https://github.com/blog/844-forking-with-the-edit-button)). For larger changes,\r\nplease search through JIRA for similiar issues, creating a new one if necessary, and discuss your\r\nideas with the Spring team.\r\n\r\n## Staying in touch\r\nFollow [@springframework](http://twitter.com/springframework) and its\r\n[team members](http://twitter.com/springframework/team/members) on Twitter. In-depth articles can be\r\nfound at the SpringSource [team blog](http://blog.springsource.org), and releases are announced via\r\nour [news feed](http://www.springsource.org/news-events).\r\n\r\n## License\r\nThe Spring Framework is released under version 2.0 of the\r\n[Apache License](http://www.apache.org/licenses/LICENSE-2.0).\r\n');

insert into `book` (`id`, `name`, `price`, `pic`, `description`) values('5','solr','78.0','99999229292.jpg','solr是一个全文检索服务\r\n# Licensed to the Apache Software Foundation (ASF) under one or more\r\n# contributor license agreements. See the NOTICE file distributed with\r\n# this work for additional information regarding copyright ownership.\r\n# The ASF licenses this file to You under the Apache License, Version 2.0\r\n# (the \"License\"); you may not use this file except in compliance with\r\n# the License. You may obtain a copy of the License at\r\n#\r\n# http://www.apache.org/licenses/LICENSE-2.0\r\n#\r\n# Unless required by applicable law or agreed to in writing, software\r\n# distributed under the License is distributed on an \"AS IS\" BASIS,\r\n# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.\r\n# See the License for the specific language governing permissions and\r\n# limitations under the License.\r\n\r\n\r\nWelcome to the Apache Solr project!\r\n-----------------------------------\r\n\r\nSolr is the popular, blazing fast open source enterprise search platform\r\nfrom the Apache Lucene project.\r\n\r\nFor a complete description of the Solr project, team composition, source\r\ncode repositories, and other details, please see the Solr web site at\r\nhttp://lucene.apache.org/solr\r\n\r\n\r\nGetting Started\r\n---------------\r\n\r\nSee the \"example\" directory for an example Solr setup. A tutorial\r\nusing the example setup can be found at\r\n http://lucene.apache.org/solr/tutorial.html\r\nor linked from \"docs/index.html\" in a binary distribution.\r\nAlso, there are Solr clients for many programming languages, see \r\n http://wiki.apache.org/solr/IntegratingSolr\r\n\r\n\r\nFiles included in an Apache Solr binary distribution\r\n----------------------------------------------------\r\n\r\nexample/\r\n A self-contained example Solr instance, complete with a sample\r\n configuration, documents to index, and the Jetty Servlet container.\r\n Please see example/README.txt for information about running this\r\n example.\r\n\r\ndist/solr-XX.war\r\n The Apache Solr Application. Deploy this WAR file to any servlet\r\n container to run Apache Solr.\r\n\r\ndist/solr-<component>-XX.jar\r\n The Apache Solr libraries. To compile Apache Solr Plugins,\r\n one or more of these will be required. The core library is\r\n required at a minimum. (see http://wiki.apache.org/solr/SolrPlugins\r\n for more information).\r\n\r\ndocs/index.html\r\n The Apache Solr Javadoc API documentation and Tutorial\r\n\r\n\r\nInstructions for Building Apache Solr from Source\r\n-------------------------------------------------\r\n\r\n1. Download the Java SE 7 JDK (Java Development Kit) or later from http://java.sun.com/\r\n You will need the JDK installed, and the $JAVA_HOME/bin (Windows: %JAVA_HOME%\\bin) \r\n folder included on your command path. To test this, issue a \"java -version\" command \r\n from your shell (command prompt) and verify that the Java version is 1.7 or later.\r\n\r\n2. Download the Apache Ant binary distribution (1.8.2+) from \r\n http://ant.apache.org/ You will need Ant installed and the $ANT_HOME/bin (Windows: \r\n %ANT_HOME%\\bin) folder included on your command path. To test this, issue a \r\n \"ant -version\" command from your shell (command prompt) and verify that Ant is \r\n available. \r\n\r\n You will also need to install Apache Ivy binary distribution (2.2.0) from \r\n http://ant.apache.org/ivy/ and place ivy-2.2.0.jar file in ~/.ant/lib -- if you skip \r\n this step, the Solr build system will offer to do it for you.\r\n\r\n3. Download the Apache Solr distribution, linked from the above web site. \r\n Unzip the distribution to a folder of your choice, e.g. C:\\solr or ~/solr\r\n Alternately, you can obtain a copy of the latest Apache Solr source code\r\n directly from the Subversion repository:\r\n\r\n http://lucene.apache.org/solr/versioncontrol.html\r\n\r\n4. Navigate to the \"solr\" folder and issue an \"ant\" command to see the available options\r\n for building, testing, and packaging Solr.\r\n \r\n NOTE: \r\n To see Solr in action, you may want to use the \"ant example\" command to build\r\n and package Solr into the example/webapps directory. See also example/README.txt.\r\n\r\n\r\nExport control\r\n-------------------------------------------------\r\nThis distribution includes cryptographic software. The country in\r\nwhich you currently reside may have restrictions on the import,\r\npossession, use, and/or re-export to another country, of\r\nencryption software. BEFORE using any encryption software, please\r\ncheck your country\'s laws, regulations and policies concerning the\r\nimport, possession, or use, and re-export of encryption software, to\r\nsee if this is permitted. See <http://www.wassenaar.org/> for more\r\ninformation.\r\n\r\nThe U.S. Government Department of Commerce, Bureau of Industry and\r\nSecurity (BIS), has classified this software as Export Commodity\r\nControl Number (ECCN) 5D002.C.1, which includes information security\r\nsoftware using or performing cryptographic functions with asymmetric\r\nalgorithms. The form and manner of this Apache Software Foundation\r\ndistribution makes it eligible for export under the License Exception\r\nENC Technology Software Unrestricted (TSU) exception (see the BIS\r\nExport Administration Regulations, Section 740.13) for both object\r\ncode and source code.\r\n\r\nThe following provides more details on the included cryptographic\r\nsoftware:\r\n Apache Solr uses the Apache Tika which uses the Bouncy Castle generic encryption libraries for\r\n extracting text content and metadata from encrypted PDF files.\r\n See http://www.bouncycastle.org/ for more details on Bouncy Castle.\r\n');
```

Add the Maven dependencies

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
  <modelVersion>4.0.0</modelVersion>

  <groupId>com.qingmu</groupId>
  <artifactId>lucene_qingmu</artifactId>
  <version>1.0-SNAPSHOT</version>
  <name>lucene_qingmu</name>
  <!-- FIXME change it to the project's website -->
  <url>http://www.example.com</url>

  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <maven.compiler.source>1.8</maven.compiler.source>
    <maven.compiler.target>1.8</maven.compiler.target>
  </properties>

  <dependencies>
    <!-- MySQL JDBC driver (any 5.1.x release; 5.1.47 assumed here) -->
    <dependency>
      <groupId>mysql</groupId>
      <artifactId>mysql-connector-java</artifactId>
      <version>5.1.47</version>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.9</version>
    </dependency>
    <!-- Lucene analyzers -->
    <dependency>
      <groupId>org.apache.lucene</groupId>
      <artifactId>lucene-analyzers-common</artifactId>
      <version>4.10.3</version>
    </dependency>
    <!-- Lucene core -->
    <dependency>
      <groupId>org.apache.lucene</groupId>
      <artifactId>lucene-core</artifactId>
      <version>4.10.3</version>
    </dependency>
    <!-- Lucene query parser -->
    <dependency>
      <groupId>org.apache.lucene</groupId>
      <artifactId>lucene-queryparser</artifactId>
      <version>4.10.3</version>
    </dependency>
    <!-- IK Chinese analyzer: segments Chinese text -->
    <dependency>
      <groupId>com.janeluo</groupId>
      <artifactId>ikanalyzer</artifactId>
      <version>2012_u6</version>
    </dependency>
  </dependencies>

  <build>
    <pluginManagement><!-- lock down plugins versions to avoid using Maven defaults (may be moved to parent pom) -->
      <plugins>
        <!-- clean lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#clean_Lifecycle -->
        <plugin>
          <artifactId>maven-clean-plugin</artifactId>
          <version>3.1.0</version>
        </plugin>
        <!-- default lifecycle, jar packaging: see https://maven.apache.org/ref/current/maven-core/default-bindings.html#Plugin_bindings_for_jar_packaging -->
        <plugin>
          <artifactId>maven-resources-plugin</artifactId>
          <version>3.0.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-compiler-plugin</artifactId>
          <version>3.8.0</version>
        </plugin>
        <plugin>
          <artifactId>maven-surefire-plugin</artifactId>
          <version>2.22.1</version>
        </plugin>
        <plugin>
          <artifactId>maven-jar-plugin</artifactId>
          <version>3.0.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-install-plugin</artifactId>
          <version>2.5.2</version>
        </plugin>
        <plugin>
          <artifactId>maven-deploy-plugin</artifactId>
          <version>2.8.2</version>
        </plugin>
        <!-- site lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#site_Lifecycle -->
        <plugin>
          <artifactId>maven-site-plugin</artifactId>
          <version>3.7.1</version>
        </plugin>
        <plugin>
          <artifactId>maven-project-info-reports-plugin</artifactId>
          <version>3.0.0</version>
        </plugin>
      </plugins>
    </pluginManagement>
  </build>
</project>
```

Create the entity class

```java
package com.qingmu.domain;

/**
 * @Author: qingmu
 * @Description: Keep your feet on the ground, aim to rise above the rest
 * @Date: Created in 16:51 2019/4/2
 */
public class Book {

    private Integer id;
    private String name;
    private float price;
    private String pic;
    private String description;

    @Override
    public String toString() {
        return "Book{" +
                "id=" + id +
                ", name='" + name + '\'' +
                ", price=" + price +
                ", pic='" + pic + '\'' +
                ", description='" + description + '\'' +
                '}';
    }

    public Integer getId() {
        return id;
    }

    public void setId(Integer id) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public float getPrice() {
        return price;
    }

    public void setPrice(float price) {
        this.price = price;
    }

    public String getPic() {
        return pic;
    }

    public void setPic(String pic) {
        this.pic = pic;
    }

    public String getDescription() {
        return description;
    }

    public void setDescription(String description) {
        this.description = description;
    }
}
```

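Steps for creating the index library (an index library holds both the index and the documents):

1. Create the analyzer, StandardAnalyzer.
2. Create the documents that hold the fields.
3. Specify the location of the index with FSDirectory.
4. Create the writer configuration, IndexWriterConfig.
5. Create the index writer, IndexWriter.
6. Add the documents and commit.
7. Close the writer.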
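As the steps note, an index library holds two things. As a rough mental model (a toy written for this article, not Lucene's on-disk format), the sketch below keeps both parts side by side: an inverted index that maps field:term pairs to document ids, and a store of the original field values that a search hands back. The class name ToyIndexLibrary is hypothetical, and splitting on whitespace stands in for a real analyzer.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.SortedSet;
import java.util.TreeSet;

// Toy model of an index library: an inverted index (field:term -> doc ids)
// plus a document store (doc id -> stored field values). Not Lucene's real format.
class ToyIndexLibrary {
    private final Map<String, SortedSet<Integer>> postings = new HashMap<>();
    private final List<Map<String, String>> storedDocs = new ArrayList<>();

    // Stores a copy of the fields and indexes every lowercased,
    // whitespace-separated token of every field. Returns the new document id.
    int addDocument(Map<String, String> fields) {
        int docId = storedDocs.size();
        storedDocs.add(new HashMap<>(fields));
        for (Map.Entry<String, String> field : fields.entrySet()) {
            for (String token : field.getValue().toLowerCase(Locale.ROOT).split("\\s+")) {
                if (token.isEmpty()) {
                    continue; // split() yields "" for leading whitespace
                }
                postings.computeIfAbsent(field.getKey() + ":" + token, k -> new TreeSet<>()).add(docId);
            }
        }
        return docId;
    }

    // Step 1: look the term up in the inverted index.
    // Step 2: fetch the stored documents for the matching ids.
    List<Map<String, String>> search(String field, String term) {
        String key = field + ":" + term.toLowerCase(Locale.ROOT);
        List<Map<String, String>> hits = new ArrayList<>();
        for (int docId : postings.getOrDefault(key, new TreeSet<Integer>())) {
            hits.add(storedDocs.get(docId));
        }
        return hits;
    }
}
```

Searching never scans the stored documents; it only reads the posting list for one term, which is what makes an index faster than a table scan with LIKE.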

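Creating the index library. The test reads the rows through a JdbcDao helper whose findAll() returns the Book objects; that class is not shown in this article.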

```java
package com.qingmu.test;

import com.qingmu.dao.JdbcDao;
import com.qingmu.domain.Book;
import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StringField;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;
import org.junit.Test;

import java.io.File;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

/**
 * Creates the index library (index + documents) from the rows of the book table.
 *
 * @Author: qingmu
 * @Description: Keep your feet on the ground, aim to rise above the rest
 * @Date: Created in 17:11 2019/4/2
 */
public class CreateTest {

    @Test
    public void createTest() throws IOException {
        // Load the rows from the database
        JdbcDao jdbc = new JdbcDao();
        List<Book> bookList = jdbc.findAll();

        // The standard analyzer; it does not handle Chinese well
        Analyzer analyzer = new StandardAnalyzer();

        // One Lucene Document per database row
        List<Document> list = new ArrayList<Document>();
        for (Book book : bookList) {
            Document document = new Document();
            // A document is made of fields: one field per column of the row,
            // i.e. one per property of the Book object.
            // Field constructor arguments:
            //   1. field name, usually the column name
            //   2. field value, the column value of this row
            //   3. whether to store the value in the index (stored for now): Field.Store.YES
            //
            // Alternative with tokenized TextFields:
            // TextField idField = new TextField("id", String.valueOf(book.getId()), Field.Store.YES);
            // TextField nameField = new TextField("name", book.getName(), Field.Store.YES);
            // TextField picField = new TextField("pic", book.getPic(), Field.Store.YES);
            // TextField priceField = new TextField("price", String.valueOf(book.getPrice()), Field.Store.YES);
            // TextField descriptionField = new TextField("description", book.getDescription(), Field.Store.YES);

            // StringField: tokenized: no (the whole value is one term), indexed: yes, stored: yes
            StringField idField = new StringField("id", String.valueOf(book.getId()), Field.Store.YES);
            StringField nameField = new StringField("name", book.getName(), Field.Store.YES);
            StringField picField = new StringField("pic", book.getPic(), Field.Store.YES);
            StringField priceField = new StringField("price", String.valueOf(book.getPrice()), Field.Store.YES);
            StringField descriptionField = new StringField("description", book.getDescription(), Field.Store.YES);

            // Put the fields into the document
            document.add(idField);
            document.add(nameField);
            document.add(picField);
            document.add(priceField);
            document.add(descriptionField);

            list.add(document);
        }

        // Location of the index library (a Windows path; adjust for your system)
        FSDirectory fsDirectory = FSDirectory.open(new File("f:/dic"));

        // Writer configuration. Argument 1: Lucene version; argument 2: analyzer
        IndexWriterConfig indexWriterConfig = new IndexWriterConfig(Version.LUCENE_4_10_3, analyzer);

        // Index writer. Argument 1: index location; argument 2: writer configuration
        IndexWriter indexWriter = new IndexWriter(fsDirectory, indexWriterConfig);

        // Write the documents to the index library
        for (Document document : list) {
            indexWriter.addDocument(document);
        }

        // Commit: flush everything buffered in the writer to the index
        indexWriter.commit();

        // Release resources
        indexWriter.close();
        fsDirectory.close();
    }
}
```
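The comment in createTest says StandardAnalyzer does not handle Chinese well. The main reason is that its tokenizer emits every Han character as a separate token, so a word such as 编程 is indexed as 编 and 程. The stdlib-only sketch below imitates that behavior, plus lowercasing and a tiny stop-word set, to show roughly what ends up in the index. It is a rough model written for this article (ToyStandardAnalysis and its three stop words are hypothetical), not Lucene's StandardTokenizer, which implements the Unicode text segmentation rules and uses a larger English stop-word list.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Rough imitation of StandardAnalyzer output (NOT Lucene's implementation):
// runs of letters/digits become lowercase tokens, each Han character becomes
// its own token, and a tiny hypothetical stop-word set is removed.
class ToyStandardAnalysis {
    // Hypothetical stop words taken from the article's examples.
    static final Set<String> STOP_WORDS = new HashSet<>(Arrays.asList("a", "an", "the"));

    static List<String> analyze(String text) {
        List<String> tokens = new ArrayList<>();
        StringBuilder word = new StringBuilder();
        int i = 0;
        while (i < text.length()) {
            int cp = text.codePointAt(i);
            if (Character.UnicodeScript.of(cp) == Character.UnicodeScript.HAN) {
                flush(word, tokens);
                tokens.add(new String(Character.toChars(cp))); // one token per Han character
            } else if (Character.isLetterOrDigit(cp)) {
                word.appendCodePoint(Character.toLowerCase(cp));
            } else {
                flush(word, tokens); // whitespace and punctuation end the current word
            }
            i += Character.charCount(cp);
        }
        flush(word, tokens);
        return tokens;
    }

    // Emits the pending word unless it is a stop word, then resets the buffer.
    private static void flush(StringBuilder word, List<String> tokens) {
        if (word.length() > 0) {
            String token = word.toString();
            if (!STOP_WORDS.contains(token)) {
                tokens.add(token);
            }
            word.setLength(0);
        }
    }
}
```

For example, analyze("The Apache Lucene, a search library") keeps only apache, lucene, search and library. For Chinese text, the IK analyzer declared in the POM (com.janeluo:ikanalyzer) is meant to segment text into dictionary words instead of single characters.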

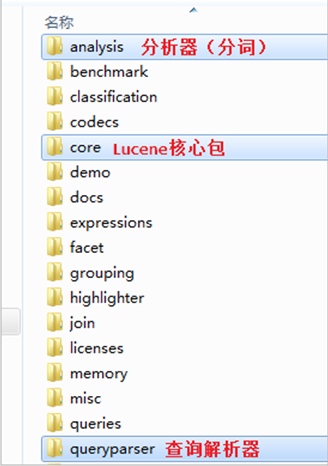

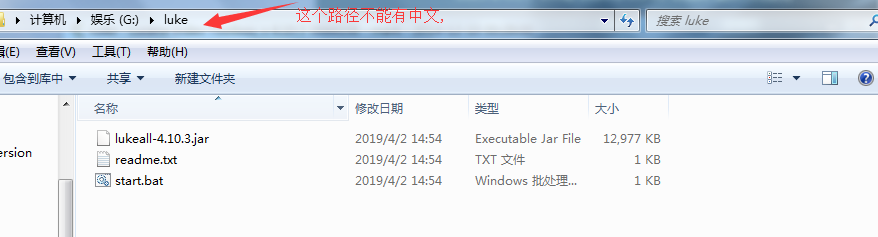

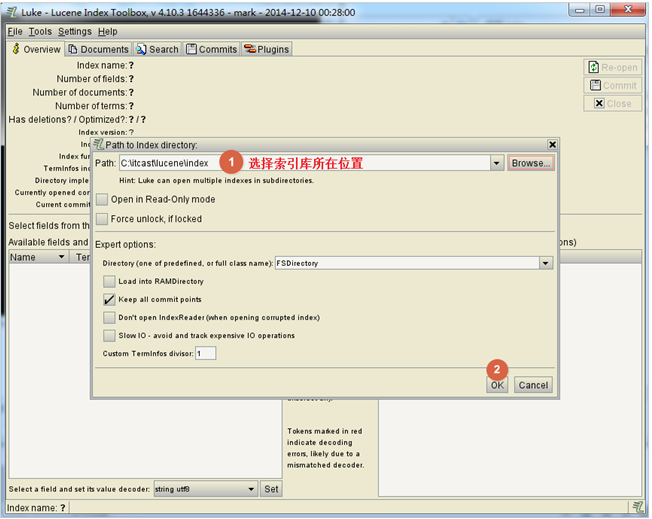

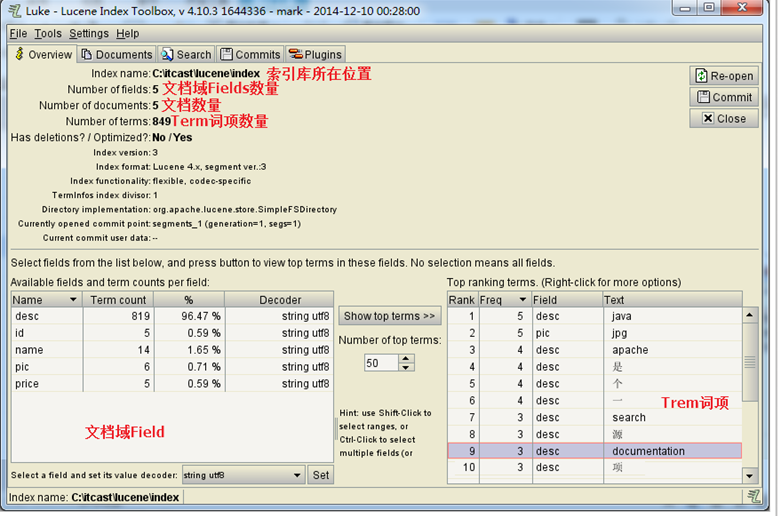

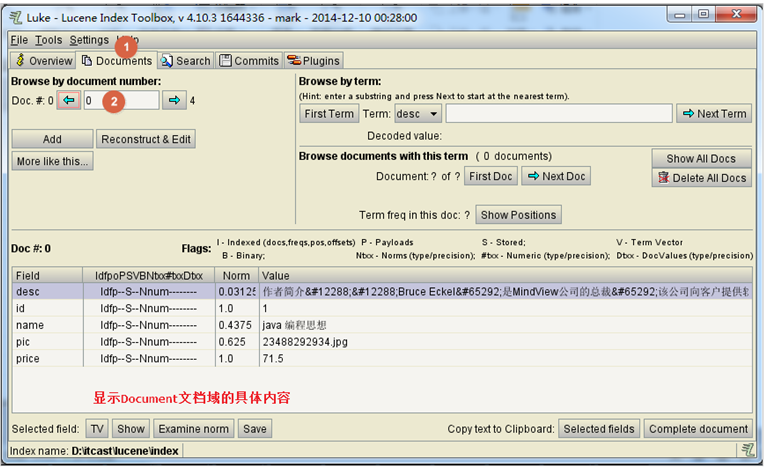

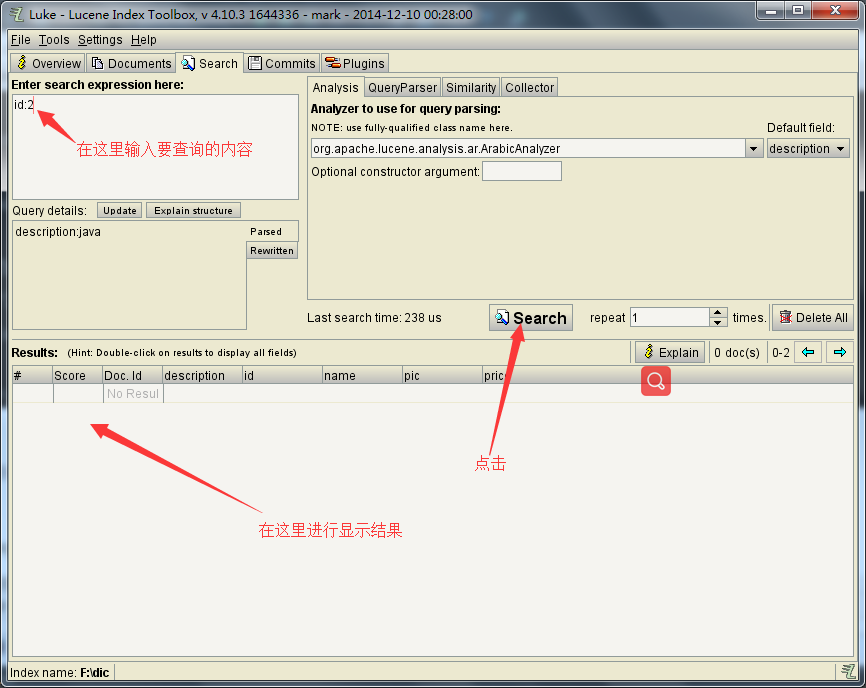

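You can inspect the index with a graphical tool called Luke (lukeall).

It is distributed as a jar file; put it in a path that contains no Chinese characters.

Then launch it from a cmd window with the command:

```
java -jar luke\lukeall-4.10.3.jar
```

Once it opens, it looks like this.

Searching:

The tool makes it easy to browse the contents of the index.

Next, let's query the index from code.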

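Steps for querying the index:

1. Use the same analyzer that was used when the index was created.
2. Specify the location of the index library.
3. Create the query parser.
4. Parse the query string into a Query object.
5. Open a reader on the index folder.
6. Search with the index searcher, IndexSearcher.
7. Take the top-scoring documents.
8. Iterate over the documents and read the values stored in their fields.
9. Close the reader.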
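The classic QueryParser is constructed with a default field ("name" in the test below); a clause written as field:value searches the named field instead, which is why the query string "id:2" searches the id field even though the default is name. The toy below only mimics that field-selection rule for whitespace-separated clauses. ToyQuerySyntax is hypothetical and is not Lucene's parser, which also runs each value through the analyzer and understands phrases, AND/OR/NOT, ranges and more; by default the real parser combines clauses with OR.

```java
import java.util.ArrayList;
import java.util.List;

// Toy illustration of how the classic QueryParser picks a field for each clause:
// "field:value" uses the named field, a bare word uses the default field.
class ToyQuerySyntax {
    // Returns {field, value} pairs; the real parser would OR these clauses together.
    static List<String[]> parse(String defaultField, String query) {
        List<String[]> clauses = new ArrayList<>();
        for (String part : query.trim().split("\\s+")) {
            if (part.isEmpty()) {
                continue; // empty query string
            }
            int colon = part.indexOf(':');
            if (colon > 0) {
                clauses.add(new String[] {part.substring(0, colon), part.substring(colon + 1)});
            } else {
                clauses.add(new String[] {defaultField, part});
            }
        }
        return clauses;
    }
}
```

With a default field of name, parse("name", "id:2 lucene") yields one clause on id and one on name.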

package com.qingmu.test; import org.apache.lucene.analysis.standard.StandardAnalyzer;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.IndexReader;

import org.apache.lucene.queryparser.classic.ParseException;

import org.apache.lucene.queryparser.classic.QueryParser;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.FSDirectory;

import org.junit.Test; import java.io.File;

import java.io.IOException; /**

* @Auther:qingmu

* @Description:脚踏实地,只为出人头地

* @Date:Created in 7:50 2019/4/4

*/ /**

* 使用索引进行查询

* 1.使用同一个abakyzer分词器.

* 2.指定库的位置

* 3.创建查询的解析对象

* 4.查询对象

* 5.创建流对象,进行读取该文件夹

* 6.使用索引库的查询对象,进行查询.

* 7.获取到查询最高分数的几个文档,

* 8.遍历文档对象,读取出来域中存着的数据,

* 9..关闭流对象.

*/

public class queryTest {

@Test

public void query() throws IOException, ParseException {

// 使用分词器对象,保证和创建时候,使用的同一个对象

StandardAnalyzer analyzer = new StandardAnalyzer();

// 指定索引库的位置

FSDirectory fsDirectory = FSDirectory.open(new File("F:/dic"));

// 创建查询的解析对象

/**

* 参数1.默认查询的域

* 参数2.分词器对象

*/

QueryParser queryParser = new QueryParser("name", analyzer);

// 查询对象

/**

* 参数:查询的关键字

*/

Query parse = queryParser.parse("id:2");

// 索引的输入流

IndexReader indexReader = IndexReader.open(fsDirectory);

// 索引库的查询对象

IndexSearcher indexSearcher = new IndexSearcher(indexReader);

// 开启查询

/**

* 参数1:查询的关键字

* 参数2:最多可以查询几条数据

*/

TopDocs search = indexSearcher.search(parse, 10);

// 获取分数文档数据

ScoreDoc[] scoreDocs = search.scoreDocs;

for (ScoreDoc scoreDoc : scoreDocs) {

// 获取文档的id索引

int docId = scoreDoc.doc;

// 根据id查询文档

Document doc = indexSearcher.doc(docId);

// 根据文档展示文档中的内容

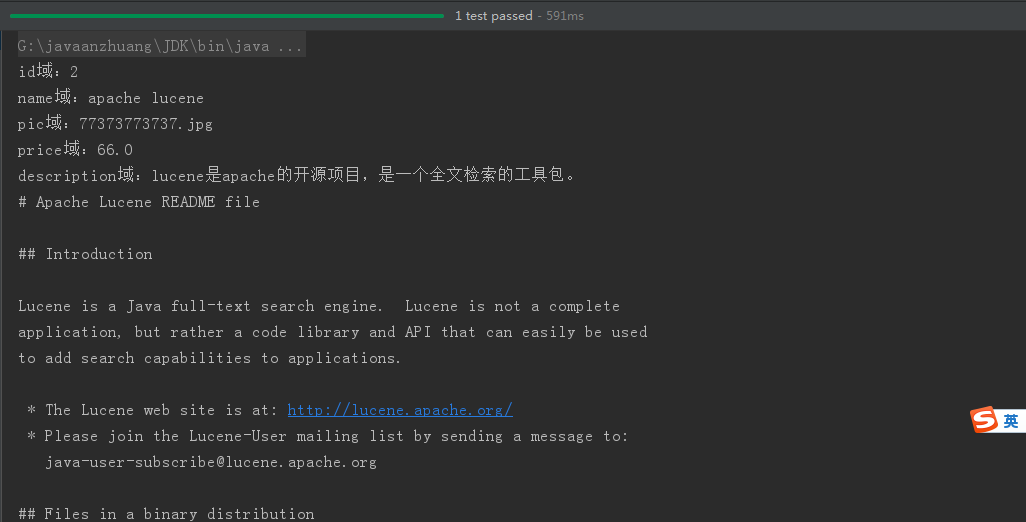

System.out.println("id域:"+doc.get("id"));

System.out.println("name域:"+doc.get("name"));

System.out.println("pic域:"+doc.get("pic"));

System.out.println("price域:"+doc.get("price"));

System.out.println("description域:"+doc.get("description")); }

// 关闭流对象

indexReader.close();

}

}

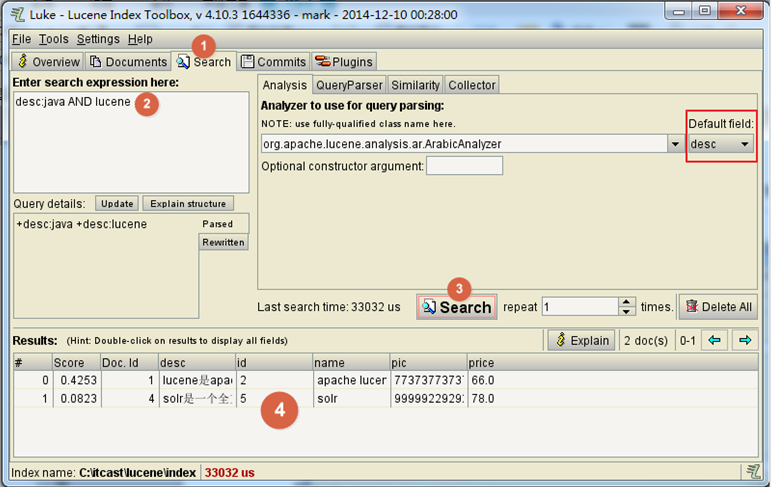
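indexSearcher.search(query, 10) returns a TopDocs whose scoreDocs array holds at most 10 hits, best score first. Conceptually that is a bounded top-n selection: keep a min-heap of the n best hits seen so far and evict the worst whenever it grows past n. The sketch below shows that technique with java.util.PriorityQueue; ToyTopDocs is a hypothetical name, and Lucene's own collector is similar only in spirit.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

// Sketch of "keep only the n best hits": a min-heap of size n holds the current
// top scores, so each candidate costs O(log n) instead of sorting every document.
class ToyTopDocs {
    static final class Hit {
        final int doc;
        final float score;

        Hit(int doc, float score) {
            this.doc = doc;
            this.score = score;
        }
    }

    // Returns at most n hits, highest score first (ties: lower doc number first).
    static List<Hit> topN(float[] scores, int n) {
        // "Smaller" means worse: lower score, or same score with a higher doc number.
        Comparator<Hit> worstFirst = (a, b) -> a.score != b.score
                ? Float.compare(a.score, b.score)
                : Integer.compare(b.doc, a.doc);
        PriorityQueue<Hit> heap = new PriorityQueue<>(Math.max(1, n), worstFirst);
        for (int doc = 0; doc < scores.length; doc++) {
            heap.offer(new Hit(doc, scores[doc]));
            if (heap.size() > n) {
                heap.poll(); // evict the current worst hit
            }
        }
        List<Hit> result = new ArrayList<>(heap);
        result.sort(worstFirst.reversed()); // best first, like TopDocs.scoreDocs
        return result;
    }
}
```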

Querying with the GUI (Luke):

Deleting documents from the index library

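Steps:

1. Create the analyzer.
2. Specify the location of the index library.
3. Specify the term to delete by.
4. Create the writer configuration.
5. Create the index writer.
6. Delete.
7. Commit.
8. Close the writer.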

```java
package com.qingmu.test;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;
import org.junit.Test;

import java.io.File;
import java.io.IOException;

/**
 * Deletes documents from the index.
 *
 * @Author: qingmu
 * @Description: Keep your feet on the ground, aim to rise above the rest
 * @Date: Created in 7:50 2019/4/4
 */
public class DelTest {

    @Test
    public void test() throws IOException {
        // Analyzer
        Analyzer analyzer = new StandardAnalyzer();

        // Location of the index library
        FSDirectory directory = FSDirectory.open(new File("f:/dic"));

        // The term to delete by: field "id", value "2"
        Term term = new Term("id", "2");

        // Writer configuration. Argument 1: Lucene version; argument 2: analyzer
        IndexWriterConfig indexWriterConfig = new IndexWriterConfig(Version.LUCENE_4_10_3, analyzer);

        // Index writer. Argument 1: index location; argument 2: writer configuration
        IndexWriter indexWriter = new IndexWriter(directory, indexWriterConfig);

        // Option 1: delete the documents that contain the term.
        // Observed result: the document is deleted, but its index terms remain.
        // indexWriter.deleteDocuments(term);

        // Option 2: delete all documents and the whole index
        indexWriter.deleteAll();

        // Commit
        indexWriter.commit();

        // Close
        indexWriter.close();
    }
}
```
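The observation in DelTest (deleteDocuments leaves the terms in the index, deleteAll wipes everything) matches how Lucene handles deletes: a deleted document is only marked as deleted, searches skip it, and its terms disappear when segments are merged (for example via IndexWriter.forceMergeDeletes()). That is why Luke can still show the terms of a deleted book. The toy below models just that idea; ToyDeletes and its merge() are hypothetical, and real merges also renumber the surviving documents.

```java
import java.util.ArrayList;
import java.util.BitSet;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Toy model: deletion only sets a bit; the term dictionary is untouched
// until a simulated merge rewrites the postings without the deleted docs.
class ToyDeletes {
    final Map<String, Set<Integer>> postings = new TreeMap<>();
    final BitSet deleted = new BitSet();
    int maxDoc = 0;

    void addDocument(String... terms) {
        int doc = maxDoc++;
        for (String t : terms) {
            postings.computeIfAbsent(t, k -> new TreeSet<>()).add(doc);
        }
    }

    // Like deleteDocuments(term): mark every doc containing the term as deleted.
    void deleteDocuments(String term) {
        for (int doc : postings.getOrDefault(term, Collections.<Integer>emptySet())) {
            deleted.set(doc);
        }
    }

    // Like deleteAll(): documents and terms are both gone.
    void deleteAll() {
        postings.clear();
        deleted.clear();
        maxDoc = 0;
    }

    // Live (not deleted) docs matching a term; deleted docs are filtered at search time.
    List<Integer> search(String term) {
        List<Integer> hits = new ArrayList<>();
        for (int doc : postings.getOrDefault(term, Collections.<Integer>emptySet())) {
            if (!deleted.get(doc)) {
                hits.add(doc);
            }
        }
        return hits;
    }

    // Simulated merge: drop deleted docs from every posting list and drop empty terms.
    void merge() {
        Iterator<Map.Entry<String, Set<Integer>>> it = postings.entrySet().iterator();
        while (it.hasNext()) {
            Set<Integer> docs = it.next().getValue();
            docs.removeIf(deleted::get);
            if (docs.isEmpty()) {
                it.remove();
            }
        }
    }
}
```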

Updating a document:

```java
package com.qingmu.test;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;
import org.junit.Test;

import java.io.File;
import java.io.IOException;

/**
 * Updates a document in the index library:
 * 1. Create the analyzer
 * 2. Specify the location of the index library
 * 3. Specify the term that identifies the document to replace
 * 4. Create the updated document
 * 5. Create the index writer and update
 * 6. Commit
 * 7. Close the writer
 *
 * @Author: qingmu
 * @Description: Keep your feet on the ground, aim to rise above the rest
 * @Date: Created in 15:28 2019/4/4
 */
public class TestUpdateIndex {

    @Test
    public void test() throws IOException {
        // Analyzer
        Analyzer analyzer = new StandardAnalyzer();

        // Location of the index library
        FSDirectory directory = FSDirectory.open(new File("f:/dic"));

        // Term that identifies the document(s) to replace
        Term term = new Term("id", "1");

        // The updated document: one field per column, i.e. per Book property.
        // Field arguments: field name, field value, whether to store the value (Field.Store.YES)
        Document doc = new Document();
        TextField idField = new TextField("id", String.valueOf(6), Field.Store.YES);
        TextField nameField = new TextField("name", "射雕英雄传", Field.Store.YES);
        TextField picField = new TextField("pic", "123124141.jpg", Field.Store.YES);
        TextField priceField = new TextField("price", String.valueOf(18.8), Field.Store.YES);
        TextField descriptionField = new TextField("description", "人和貂的故事", Field.Store.YES);

        // Add the fields to the document
        doc.add(idField);
        doc.add(nameField);
        doc.add(picField);
        doc.add(priceField);
        doc.add(descriptionField);

        // Writer configuration. Argument 1: Lucene version; argument 2: analyzer
        IndexWriterConfig indexWriterConfig = new IndexWriterConfig(Version.LUCENE_4_10_3, analyzer);

        // Index writer. Argument 1: index location; argument 2: writer configuration
        IndexWriter indexWriter = new IndexWriter(directory, indexWriterConfig);

        // Update. Argument 1: the term identifying the documents to replace;
        // argument 2: the new document.
        // Observed result: the old index terms remain, the old document is deleted,
        // and a new document is added.
        indexWriter.updateDocument(term, doc);

        // Commit
        indexWriter.commit();

        // Close
        indexWriter.close();
    }
}
```

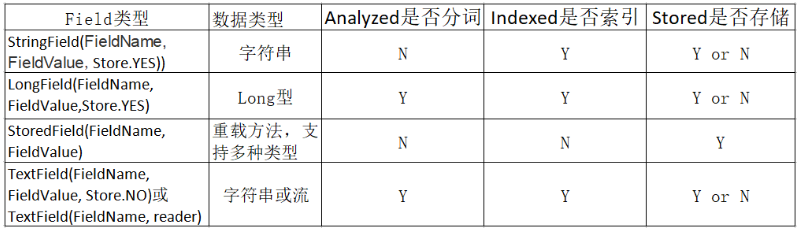
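IndexWriter.updateDocument(term, doc) behaves like "delete every document containing the term, then add the new document", done atomically by Lucene; the old version is only marked as deleted, which fits the observation that the old terms remain. The toy below models those semantics with exact field-value matching (a stand-in for matching an indexed term); ToyUpdate is a hypothetical class, not Lucene's implementation.

```java
import java.util.ArrayList;
import java.util.BitSet;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of updateDocument(term, doc): mark every document whose field equals
// the value as deleted, then append the new document with a fresh doc number.
class ToyUpdate {
    final List<Map<String, String>> docs = new ArrayList<>();
    final BitSet deleted = new BitSet();

    void addDocument(Map<String, String> doc) {
        docs.add(new HashMap<>(doc));
    }

    void updateDocument(String field, String value, Map<String, String> newDoc) {
        for (int i = 0; i < docs.size(); i++) {
            if (value.equals(docs.get(i).get(field))) {
                deleted.set(i); // the old version is only marked as deleted
            }
        }
        addDocument(newDoc); // if nothing matched, this is a plain add
    }

    List<Map<String, String>> liveDocs() {
        List<Map<String, String>> live = new ArrayList<>();
        for (int i = 0; i < docs.size(); i++) {
            if (!deleted.get(i)) {
                live.add(docs.get(i));
            }
        }
        return live;
    }
}
```

One consequence for TestUpdateIndex: the replacement document carries id 6 while the term is id 1, so running the test a second time finds no live document with id 1 and simply adds another copy of the id-6 document. Using the replacement document's own id as the term avoids such duplicates.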

Field types:

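Notes on some Lucene terms

1. Analysis (word segmentation): splits the content into terms, drops unimportant words such as 的, 地, 得, 啊, 嗯, 哦, 哎, a, an, the ..., and converts uppercase to lowercase.

2. Index: works like a table of contents that maps each term to the documents containing it.

3. Document: an object in Lucene; one document can hold one database record.

4. Index library: corresponds to a folder on disk that holds the index and the document objects.

5. Choosing the options of a field

Tokenize? Only if the resulting terms are meaningful: tokenize when they are, don't when they aren't.

Yes (meaningful), e.g. name, price, description

No (not meaningful), e.g. id, pic

Index? The purpose of indexing is searching.

Yes: the field is used in queries, e.g. id, name, price, description

No: the field is not used in queries, e.g. pic

Store? Whether to store the value in the index library. Data shown on the results page must be stored. The description field is usually long and is generally not stored; when it is needed, it can be fetched quickly from the database by id via JDBC.

Yes: needs to be displayed, e.g. id, name, price, pic

No: does not need to be displayed, e.g. description

6. Special case: a field used for range searches must be tokenized, indexed and stored; this is a special case in Lucene (in Lucene 4.x the numeric field classes such as FloatField are set up this way when created with Field.Store.YES).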
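To connect these rules to code: in Lucene 4.x each common field class fixes some of these choices. StringField is indexed but not tokenized, TextField is indexed and tokenized, StoredField is stored only, and FloatField (like IntField, LongField and DoubleField) is a numeric field that supports range queries; for StringField, TextField and the numeric fields, the Field.Store argument decides whether the value is stored. The decision table below is a hypothetical helper written for this article (FieldChoice is not a Lucene class); it only returns the name of the class to use. Applied to the book table it suggests id: StringField(Field.Store.YES), name: TextField(Field.Store.YES), price: FloatField(Field.Store.YES), pic: StoredField, description: TextField(Field.Store.NO). Note that createTest above uses StringField for every column, so name and description can only be matched by their complete, exact value.

```java
// Maps the three questions above (tokenize? index? store?) plus the range-search
// special case onto Lucene 4.x field classes. Illustrative decision table only.
class FieldChoice {
    static String choose(boolean tokenized, boolean indexed, boolean stored, boolean rangeSearch) {
        String store = stored ? "Field.Store.YES" : "Field.Store.NO";
        if (rangeSearch) {
            // Numeric field: indexed as several trie-encoded terms, which is what makes ranges fast
            return "FloatField(" + store + ")";
        }
        if (!indexed) {
            // Not searchable: either keep the value for display only, or leave the field out
            return stored ? "StoredField" : "(not added to the document)";
        }
        // Searchable text: split into terms or keep the whole value as one term
        return (tokenized ? "TextField(" : "StringField(") + store + ")";
    }
}
```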

Part of this article is not based on my own experience; it was compiled from material I looked up.
