自然语言17_Chinking with NLTK

sklearn实战-乳腺癌细胞数据挖掘(博主亲自录制视频教程)

https://study.163.com/course/introduction.htm?courseId=1005269003&utm_campaign=commission&utm_source=cp-400000000398149&utm_medium=share

https://www.pythonprogramming.net/chinking-nltk-tutorial/?completed=/chunking-nltk-tutorial/

代码

# -*- coding: utf-8 -*-

"""

Created on Sun Nov 13 09:14:13 2016 @author: daxiong

"""

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer #训练数据

train_text=state_union.raw("2005-GWBush.txt")

#测试数据

sample_text=state_union.raw("2006-GWBush.txt")

'''

Punkt is designed to learn parameters (a list of abbreviations, etc.)

unsupervised from a corpus similar to the target domain.

The pre-packaged models may therefore be unsuitable:

use PunktSentenceTokenizer(text) to learn parameters from the given text

'''

#我们现在训练punkttokenizer(分句器)

custom_sent_tokenizer=PunktSentenceTokenizer(train_text)

#训练后,我们可以使用punkttokenizer(分句器)

tokenized=custom_sent_tokenizer.tokenize(sample_text) '''

nltk.pos_tag(["fire"]) #pos_tag(列表)

Out[19]: [('fire', 'NN')]

'''

'''

#测试语句

words=nltk.word_tokenize(tokenized[0])

tagged=nltk.pos_tag(words)

chunkGram=r"""Chunk:{<RB.?>*<VB.?>*<NNP>+<NN>?}"""

chunkParser=nltk.RegexpParser(chunkGram)

chunked=chunkParser.parse(tagged)

#lambda t:t.label()=='Chunk' 包含Chunk标签的列

for subtree in chunked.subtrees(filter=lambda t:t.label()=='Chunk'):

print(subtree)

''' #文本词性标记函数

def process_content():

try:

for i in tokenized[0:5]:

words = nltk.word_tokenize(i)

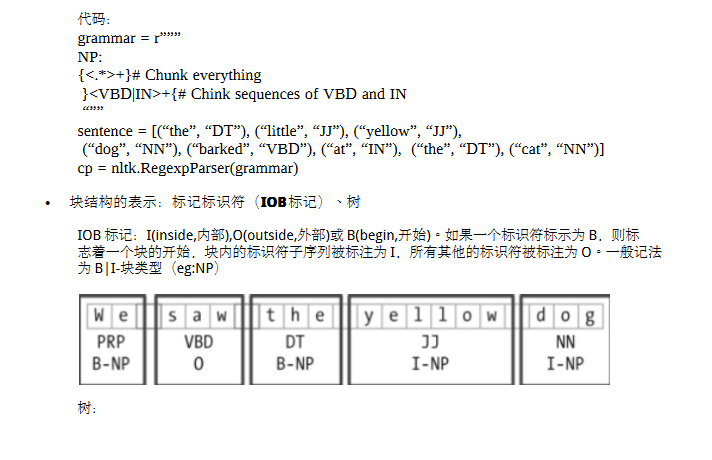

tagged = nltk.pos_tag(words) chunkGram = r"""Chunk: {<.*>+}

}<VB.?|IN|DT|TO>+{""" chunkParser = nltk.RegexpParser(chunkGram)

chunked = chunkParser.parse(tagged) chunked.draw() except Exception as e:

print(str(e)) process_content()

百度文库参考

http://wenku.baidu.com/link?url=YIrqeVS8a1zO_H0t66kj1AbUUReLUJIqId5So5Szk0JJAupyg_m2U_WqxEHqAHDy9DfmoAAPu0CdNFf-rePBsTHkx-0WDpoYTH1txFDKQxC

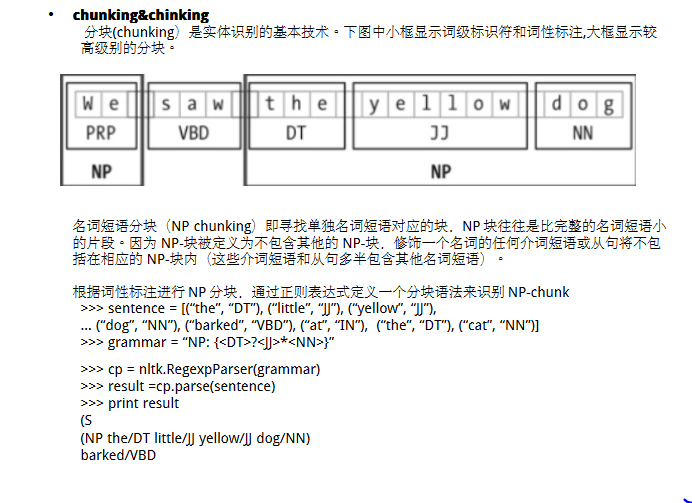

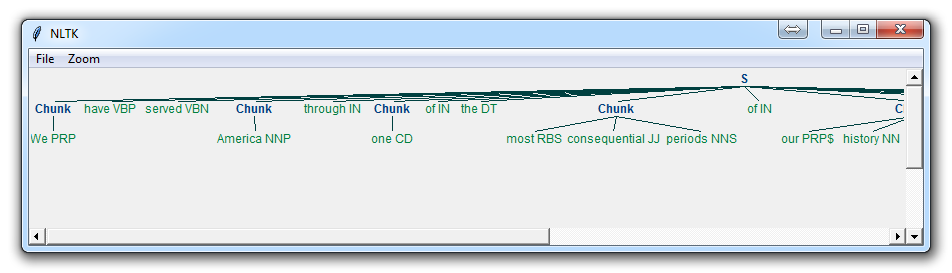

chinking可用于提取句子主干,去除不需要的修饰语

Chinking with NLTK

You may find that, after a lot of chunking, you have some words in

your chunk you still do not want, but you have no idea how to get rid

of them by chunking. You may find that chinking is your solution.

Chinking is a lot like chunking, it is basically a way for you to

remove a chunk from a chunk. The chunk that you remove from your chunk

is your chink.

The code is very similar, you just denote the chink, after the chunk, with }{ instead of the chunk's {}.

import nltk

from nltk.corpus import state_union

from nltk.tokenize import PunktSentenceTokenizer train_text = state_union.raw("2005-GWBush.txt")

sample_text = state_union.raw("2006-GWBush.txt") custom_sent_tokenizer = PunktSentenceTokenizer(train_text) tokenized = custom_sent_tokenizer.tokenize(sample_text) def process_content():

try:

for i in tokenized[5:]:

words = nltk.word_tokenize(i)

tagged = nltk.pos_tag(words) chunkGram = r"""Chunk: {<.*>+}

}<VB.?|IN|DT|TO>+{""" chunkParser = nltk.RegexpParser(chunkGram)

chunked = chunkParser.parse(tagged) chunked.draw() except Exception as e:

print(str(e)) process_content()

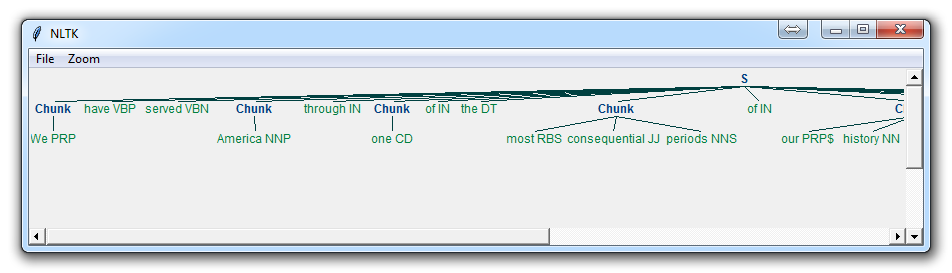

With this, you are given something like:

Now, the main difference here is:

}<VB.?|IN|DT|TO>+{

此句表示,我们移除一个或多个动词,介词,定冠词,或to

This means we're removing from the chink one or more verbs, prepositions, determiners, or the word 'to'.

Now that we've learned how to do some custom forms of chunking, and chinking, let's discuss a built-in form of chunking that comes with NLTK, and that is named entity recognition.

自然语言17_Chinking with NLTK的更多相关文章

- 转 --自然语言工具包(NLTK)小结

原作者:http://www.cnblogs.com/I-Tegulia/category/706685.html 1.自然语言工具包(NLTK) NLTK 创建于2001 年,最初是宾州大学计算机与 ...

- 自然语言22_Wordnet with NLTK

QQ:231469242 欢迎喜欢nltk朋友交流 https://www.pythonprogramming.net/wordnet-nltk-tutorial/?completed=/nltk-c ...

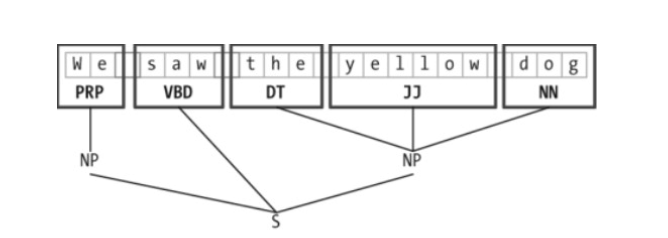

- 自然语言16_Chunking with NLTK

Chunking with NLTK 对chunk分类数据结构可以图形化输出,用于分析英语句子主干结构 # -*- coding: utf-8 -*-"""Created ...

- Python自然语言处理工具NLTK的安装FAQ

1 下载Python 首先去python的主页下载一个python版本http://www.python.org/,一路next下去,安装完毕即可 2 下载nltk包 下载地址:http://www. ...

- Python自然语言工具包(NLTK)入门

在本期文章中,小生向您介绍了自然语言工具包(Natural Language Toolkit),它是一个将学术语言技术应用于文本数据集的 Python 库.称为“文本处理”的程序设计是其基本功能:更深 ...

- Python NLTK 自然语言处理入门与例程(转)

转 https://blog.csdn.net/hzp666/article/details/79373720 Python NLTK 自然语言处理入门与例程 在这篇文章中,我们将基于 Pyt ...

- NLTK在自然语言处理

nltk-data.zip 本文主要是总结最近学习的论文.书籍相关知识,主要是Natural Language Pracessing(自然语言处理,简称NLP)和Python挖掘维基百科Infobox ...

- Python自然语言处理工具小结

Python自然语言处理工具小结 作者:白宁超 2016年11月21日21:45:26 目录 [Python NLP]干货!详述Python NLTK下如何使用stanford NLP工具包(1) [ ...

- 自然语言处理(NLP)入门学习资源清单

Melanie Tosik目前就职于旅游搜索公司WayBlazer,她的工作内容是通过自然语言请求来生产个性化旅游推荐路线.回顾她的学习历程,她为期望入门自然语言处理的初学者列出了一份学习资源清单. ...

随机推荐

- 利用ajaxfileupload.js异步上传文件

1.引入ajaxfileupload.js 2.html代码 <input type="file" id="enclosure" name="e ...

- 进程间通信方式与Binder机制原理

1, Intent隐式意图携带数据 2, AIDL(Binder) 3, 广播BroadCast 4, 内容提供者ContentProvider --------------------------- ...

- Java实现本地 fileCopy

前言: Java中流是重要的内容,基础的文件读写与拷贝知识点是很多面试的考点.故通过本文进行简单测试总结. 2.图展示[文本IO/二进制IO](这是参考自网上的一张总结图,相当经典,方便对比记忆) 3 ...

- golang学习之旅:方法、函数使用心得

假设要在$GOPATH/pkg/$GOOS_$GOARCH/basepath/ProjectName/目录下开发一个名为xxx的package.(这里basepath指的是github.com/mic ...

- 【BZOJ-4690】Never Wait For Weights 带权并查集

4690: Never Wait for Weights Time Limit: 15 Sec Memory Limit: 256 MBSubmit: 88 Solved: 41[Submit][ ...

- 【BZOJ-4631】踩气球 线段树 + STL

4631: 踩气球 Time Limit: 10 Sec Memory Limit: 256 MBSubmit: 224 Solved: 114[Submit][Status][Discuss] ...

- VS2008 查找 替换对话框无法打开的解决方法

1.今天碰到了这个窗口打不开的问题.醉了 解决方案: 窗口->重置窗口布局.

- Jenkins从2.x新建Job时多了一个文件夹的功能(注意事项)

这个job如果在一个文件夹里面,那么想要的URL就会改变,默认会带上这个文件夹上去,所以在用[参数化构建插件]的时候要留意这个点.获取的URL将会不一样.

- tomcat部署web应用的4种方法

在Tomcat中有四种部署Web应用的方式,简要的概括分别是: (1)利用Tomcat自动部署 (2)利用控制台进行部署 (3)增加自定义的Web部署文件(%Tomcat_Home%\conf\Cat ...

- Uva11538 排列组合水题

画个图就很容易推出公式: 设mn=min(m,n),mx=max(m,n) 对角线上: 横向:m*C(n,2) 纵向:n*C(m,2) 因为所有的C函数都是只拿了两个,所以可以优化下.不过不优化也过了 ...