Android: MStar Android 8.0 Platform Volume Control Flow

I. Speaker Volume and Mute Flow Analysis

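At the Java layer, volume changes start in AudioManager.java, which offers two methods for setting the volume, setStreamVolume and adjustStreamVolume:

- setStreamVolume: takes an index and sets the volume to that value directly.
- adjustStreamVolume: takes a direction and changes the volume in that direction by the step size obtained for the stream.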
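To make the difference between the two calls concrete, here is a minimal C++ sketch (not framework code). It models one stream's index with a hypothetical `StreamVolume` struct; the 0..15 range and the clamping are assumptions for illustration only.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical model of one stream's volume; not AudioService's VolumeStreamState.
struct StreamVolume {
    int minIndex = 0;
    int maxIndex = 15;  // assumed range for illustration
    int index = 5;

    // setStreamVolume-style: the caller supplies the target index directly.
    void setIndex(int newIndex) {
        index = std::max(minIndex, std::min(newIndex, maxIndex));
    }

    // adjustStreamVolume-style: the caller supplies only a direction (+1 / -1 / 0)
    // and the service decides the step. Returns true if the index actually changed.
    bool adjust(int direction, int step = 1) {
        const int old = index;
        setIndex(index + direction * step);
        return index != old;
    }
};
```

Returning "did it change" mirrors how the framework skips the rest of the work when an adjustment hits the limit.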

Volume keys are intercepted in interceptKeyBeforeDispatching() in frameworks\base\services\core\java\com\android\server\policy\PhoneWindowManager.java:

```java
......
} else if (keyCode == KeyEvent.KEYCODE_VOLUME_UP
        || keyCode == KeyEvent.KEYCODE_VOLUME_DOWN
        || keyCode == KeyEvent.KEYCODE_VOLUME_MUTE) {
    if (mUseTvRouting || mHandleVolumeKeysInWM) {
        // On TVs or when the configuration is enabled, volume keys never
        // go to the foreground app.
        // MStar Android Patch Begin
        if (mContext.getPackageManager().hasSystemFeature(PackageManager.FEATURE_HDMI_CEC)) {
            dispatchDirectAudioEvent(event);
            return -1;
        }
        return 0;
        // MStar Android Patch End
    }
......
```

dispatchDirectAudioEvent() obtains the AudioService and forwards the key to it:

```java
private void dispatchDirectAudioEvent(KeyEvent event) {
    ......
        case KeyEvent.KEYCODE_VOLUME_MUTE:
            try {
                if (event.getRepeatCount() == 0) {
                    getAudioService().adjustSuggestedStreamVolume(
                            AudioManager.ADJUST_TOGGLE_MUTE,
                            AudioManager.USE_DEFAULT_STREAM_TYPE, flags, pkgName, TAG);
                }
            } catch (Exception e) {
                Log.e(TAG, "Error dispatching mute in dispatchTvAudioEvent.", e);
            }
            break;
    }
}
```

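The call then goes through the AIDL interface to adjustSuggestedStreamVolume() in frameworks\base\services\core\java\com\android\server\audio\AudioService.java. The first parameter, direction, gives the direction of the adjustment: 1 raises the volume and -1 lowers it (for the mute key above it is ADJUST_TOGGLE_MUTE). The second parameter, suggestedStreamType, is the stream type the caller asks to adjust. The third parameter, flags, comes from AudioManager: handleKeyDown() sets two flags, FLAG_SHOW_UI and FLAG_VIBRATE, where FLAG_SHOW_UI tells AudioService to show the volume panel, and handleKeyUp() sets FLAG_PLAY_SOUND. This is why releasing a volume key "sometimes" plays a feedback tone (only for certain stream types, and only when the device is not on the lock screen).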
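The flags are a plain bitmask. The C++ sketch below shows how the key-down and key-up flags described above combine and how a single flag is tested or cleared. The constant values are assumed to mirror AudioManager's public constants (FLAG_SHOW_UI = 1, FLAG_PLAY_SOUND = 4, FLAG_VIBRATE = 16); the helper functions are hypothetical.

```cpp
#include <cassert>

// Assumed to mirror android.media.AudioManager's public flag values.
constexpr int FLAG_SHOW_UI = 1 << 0;
constexpr int FLAG_PLAY_SOUND = 1 << 2;
constexpr int FLAG_VIBRATE = 1 << 4;

// Hypothetical helpers: key down asks for the volume panel and vibration,
// key up asks for the feedback tone, as described in the text.
constexpr int keyDownFlags() { return FLAG_SHOW_UI | FLAG_VIBRATE; }
constexpr int keyUpFlags() { return FLAG_PLAY_SOUND; }

// The same bit operation adjustStreamVolume() uses to drop vibration in vibrate mode.
constexpr int dropVibrate(int flags) { return flags & ~FLAG_VIBRATE; }

// True if `flag` is set in `flags`.
constexpr bool has(int flags, int flag) { return (flags & flag) != 0; }
```

Because each flag is a separate bit, AudioService can add (`|=`) or strip (`&= ~`) individual behaviors without touching the others.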

```java
// 1. Determine which stream type to adjust. 2. Clear FLAG_PLAY_SOUND in some cases.
// 3. Call adjustStreamVolume().
private void adjustSuggestedStreamVolume(int direction, int suggestedStreamType, int flags,
        String callingPackage, int uid) {
    ......
    // AudioService can also force which stream type the volume keys control.
    // mVolumeControlStream is set by VolumePanel through forceVolumeControlStream():
    // while VolumePanel is showing, it pins later volume-key presses to the stream type
    // that made it appear, and it clears the override (sets it to -1) when it closes.
    if (mUserSelectedVolumeControlStream) { // implies mVolumeControlStream != -1
        streamType = mVolumeControlStream;
    } else {
        final int maybeActiveStreamType = getActiveStreamType(suggestedStreamType);
        final boolean activeForReal;
        if (maybeActiveStreamType == AudioSystem.STREAM_MUSIC) {
            activeForReal = isAfMusicActiveRecently(0);
        } else {
            activeForReal = AudioSystem.isStreamActive(maybeActiveStreamType, 0);
        }
        if (activeForReal || mVolumeControlStream == -1) {
            streamType = maybeActiveStreamType;
        } else {
            streamType = mVolumeControlStream;
        }
    }

    final boolean isMute = isMuteAdjust(direction);
    ......
}
```
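The stream selection above boils down to a small decision. The following C++ restatement is a sketch only; `chooseStreamType` and its parameters are hypothetical names standing in for mUserSelectedVolumeControlStream, mVolumeControlStream, getActiveStreamType() and the "really active" check. The stream constants use AudioSystem's values for RING and MUSIC.

```cpp
#include <cassert>

constexpr int STREAM_RING = 2;   // AudioSystem.STREAM_RING
constexpr int STREAM_MUSIC = 3;  // AudioSystem.STREAM_MUSIC

// userSelected    ~ mUserSelectedVolumeControlStream
// forcedStream    ~ mVolumeControlStream (-1 means "no override")
// candidate       ~ result of getActiveStreamType(suggestedStreamType)
// candidateActive ~ whether that candidate is really playing
int chooseStreamType(bool userSelected, int forcedStream, int candidate, bool candidateActive) {
    if (userSelected) {
        return forcedStream;  // user pinned the stream explicitly
    }
    if (candidateActive || forcedStream == -1) {
        return candidate;     // something is really playing, or there is no override
    }
    return forcedStream;      // nothing is playing: keep the stream VolumePanel forced
}
```

The effect is that a playing stream wins over the panel's override, unless the user explicitly picked a stream.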

Here we focus on the mute path. isMuteAdjust() checks whether direction is one of the supported mute operations: ADJUST_MUTE (mute), ADJUST_UNMUTE (unmute), or ADJUST_TOGGLE_MUTE (toggle):

```java
private boolean isMuteAdjust(int adjust) {
    return adjust == AudioManager.ADJUST_MUTE || adjust == AudioManager.ADJUST_UNMUTE
            || adjust == AudioManager.ADJUST_TOGGLE_MUTE;
}
```
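A C++ port of isMuteAdjust(), together with the rule adjustStreamVolume() uses to turn a mute direction into a target state, makes the three mute directions easy to test. The ADJUST_* values are those of android.media.AudioManager; `resolveMuteState` is a hypothetical name for logic that is inline in the Java code.

```cpp
#include <cassert>

// Direction constants with android.media.AudioManager's values.
constexpr int ADJUST_LOWER = -1;
constexpr int ADJUST_SAME = 0;
constexpr int ADJUST_RAISE = 1;
constexpr int ADJUST_MUTE = -100;
constexpr int ADJUST_UNMUTE = 100;
constexpr int ADJUST_TOGGLE_MUTE = 101;

// Port of isMuteAdjust().
constexpr bool isMuteAdjust(int adjust) {
    return adjust == ADJUST_MUTE || adjust == ADJUST_UNMUTE || adjust == ADJUST_TOGGLE_MUTE;
}

// Target state as computed in adjustStreamVolume(): toggle flips the current
// state; otherwise ADJUST_MUTE means muted and ADJUST_UNMUTE means unmuted.
constexpr bool resolveMuteState(int direction, bool currentlyMuted) {
    return direction == ADJUST_TOGGLE_MUTE ? !currentlyMuted : direction == ADJUST_MUTE;
}
```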

Next comes adjustStreamVolume(), which checks whether the request is a mute operation and, if so, applies the corresponding mute state:

```java
private void adjustStreamVolume(int streamType, int direction, int flags,
        String callingPackage, String caller, int uid) {
    if (mUseFixedVolume) {
        return;
    }
    if (DEBUG_VOL) Log.d(TAG, "adjustStreamVolume() stream=" + streamType + ", dir=" + direction
            + ", flags=" + flags + ", caller=" + caller);

    ensureValidDirection(direction);   // validate the adjustment direction
    ensureValidStreamType(streamType); // validate the stream type

    boolean isMuteAdjust = isMuteAdjust(direction); // is this a mute operation?
    if (isMuteAdjust && !isStreamAffectedByMute(streamType)) {
        // for a mute operation, return early if this stream type cannot be muted
        return;
    }

    // use stream type alias here so that streams with same alias have the same behavior,
    // including with regard to silent mode control (e.g the use of STREAM_RING below and in
    // checkForRingerModeChange() in place of STREAM_RING or STREAM_NOTIFICATION)
    int streamTypeAlias = mStreamVolumeAlias[streamType]; // stream type that streamType maps to

    // VolumeStreamState holds all volume-related information for one stream type
    VolumeStreamState streamState = mStreamStates[streamTypeAlias];

    final int device = getDeviceForStream(streamTypeAlias);

    int aliasIndex = streamState.getIndex(device); // current volume
    boolean adjustVolume = true;
    int step;
    ......
    flags &= ~AudioManager.FLAG_FIXED_VOLUME;
    if ((streamTypeAlias == AudioSystem.STREAM_MUSIC) &&
            ((device & mFixedVolumeDevices) != 0)) {
        flags |= AudioManager.FLAG_FIXED_VOLUME;

        // Always toggle between max safe volume and 0 for fixed volume devices where safe
        // volume is enforced, and max and 0 for the others.
        // This is simulated by stepping by the full allowed volume range
        if (mSafeMediaVolumeState == SAFE_MEDIA_VOLUME_ACTIVE &&
                (device & mSafeMediaVolumeDevices) != 0) {
            step = mSafeMediaVolumeIndex;
        } else {
            step = streamState.getMaxIndex();
        }
        if (aliasIndex != 0) {
            aliasIndex = step;
        }
    } else {
        // convert one UI step (+/-1) into a number of internal units on the stream alias
        // rescaleIndex() converts a volume delta from the source stream type to the target
        // stream type; this is required because stream types have different volume ranges
        step = rescaleIndex(10, streamType, streamTypeAlias);
    }

    // If either the client forces allowing ringer modes for this adjustment,
    // or the stream type is one that is affected by ringer modes
    if (((flags & AudioManager.FLAG_ALLOW_RINGER_MODES) != 0) ||
            (streamTypeAlias == getUiSoundsStreamType())) {
        int ringerMode = getRingerModeInternal();
        // do not vibrate if already in vibrate mode
        if (ringerMode == AudioManager.RINGER_MODE_VIBRATE) {
            flags &= ~AudioManager.FLAG_VIBRATE;
        }
        // Check if the ringer mode handles this adjustment. If it does we don't
        // need to adjust the volume further.
        final int result = checkForRingerModeChange(aliasIndex, direction, step,
                streamState.mIsMuted, callingPackage, flags);
        adjustVolume = (result & FLAG_ADJUST_VOLUME) != 0; // whether the volume still needs to be set
        // If suppressing a volume adjustment in silent mode, display the UI hint
        if ((result & AudioManager.FLAG_SHOW_SILENT_HINT) != 0) {
            flags |= AudioManager.FLAG_SHOW_SILENT_HINT;
        }
        // If suppressing a volume down adjustment in vibrate mode, display the UI hint
        if ((result & AudioManager.FLAG_SHOW_VIBRATE_HINT) != 0) {
            flags |= AudioManager.FLAG_SHOW_VIBRATE_HINT;
        }
    }

    // If the ringermode is suppressing media, prevent changes
    if (!volumeAdjustmentAllowedByDnd(streamTypeAlias, flags)) {
        adjustVolume = false;
    }

    int oldIndex = mStreamStates[streamType].getIndex(device);

    if (adjustVolume && (direction != AudioManager.ADJUST_SAME)) {
        mAudioHandler.removeMessages(MSG_UNMUTE_STREAM);
        ......
        if (isMuteAdjust) {
            // mute operation: work out the target state and apply it
            boolean state;
            if (direction == AudioManager.ADJUST_TOGGLE_MUTE) {
                state = !streamState.mIsMuted;
            } else {
                state = direction == AudioManager.ADJUST_MUTE;
            }
            if (streamTypeAlias == AudioSystem.STREAM_MUSIC) {
                setSystemAudioMute(state); // HDMI volume control used on TV platforms
            }
            for (int stream = 0; stream < mStreamStates.length; stream++) {
                if (streamTypeAlias == mStreamVolumeAlias[stream]) {
                    if (!(readCameraSoundForced()
                            && (mStreamStates[stream].getStreamType()
                                == AudioSystem.STREAM_SYSTEM_ENFORCED))) {
                        mStreamStates[stream].mute(state); // set the stream's mute state
                    }
                }
            }
        } else if ((direction == AudioManager.ADJUST_RAISE) &&
                !checkSafeMediaVolume(streamTypeAlias, aliasIndex + step, device)) {
            Log.e(TAG, "adjustStreamVolume() safe volume index = " + oldIndex);
            mVolumeController.postDisplaySafeVolumeWarning(flags);
        } else if (streamState.adjustIndex(direction * step, device, caller)
                || streamState.mIsMuted) {
            // adjustIndex() returns false if the volume did not change (e.g. it is already
            // at the maximum); then, unless the stream is muted, nothing more needs doing.

            // Post message to set system volume (it in turn will post a
            // message to persist).
            if (streamState.mIsMuted) {
                // Unmute the stream if it was previously muted
                if (direction == AudioManager.ADJUST_RAISE) {
                    // unmute immediately for volume up
                    streamState.mute(false);
                } else if (direction == AudioManager.ADJUST_LOWER) {
                    if (mIsSingleVolume) {
                        sendMsg(mAudioHandler, MSG_UNMUTE_STREAM, SENDMSG_QUEUE,
                                streamTypeAlias, flags, null, UNMUTE_STREAM_DELAY);
                    }
                }
            }
            // This message pushes the volume down to the lower layers and stores it in SettingsProvider
            sendMsg(mAudioHandler,
                    MSG_SET_DEVICE_VOLUME,
                    SENDMSG_QUEUE,
                    device,
                    0,
                    streamState,
                    0);
        }

        // Check if volume update should be sent to Hdmi system audio.
        int newIndex = mStreamStates[streamType].getIndex(device);
        if (streamTypeAlias == AudioSystem.STREAM_MUSIC) {
            setSystemAudioVolume(oldIndex, newIndex, getStreamMaxVolume(streamType), flags);
        }
        ......
    }

    int index = mStreamStates[streamType].getIndex(device);
    sendVolumeUpdate(streamType, oldIndex, index, flags); // notify listeners that the volume changed
}
```

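To summarize this function:

- It computes the step for one key press. The base step is 10 rather than 1 (rescaleIndex() then converts it to the alias stream's range): VolumeStreamState stores indices at 10 times their real value so that conversions between stream types keep some precision, effectively one digit after the decimal point.

- It checks whether the ringer mode needs to change; checkForRingerModeChange() implements the ringer-mode logic.

- For a mute operation it calls mute() on every stream sharing the alias; otherwise it calls adjustIndex() to update the volume stored in the VolumeStreamState object.

- It posts MSG_SET_DEVICE_VOLUME to mAudioHandler through sendMsg().

- It calls sendVolumeUpdate() to notify listeners that the volume changed.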
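The ×10 bookkeeping can be checked with a few lines of arithmetic. This C++ sketch assumes rescaleIndex() computes `(index * dstMax + srcMax / 2) / srcMax` with both maxima in internal (×10) units, which is how AOSP's AudioService implements it as far as I can tell; the stream maxima used in the test (7 and 15 UI steps) are hypothetical.

```cpp
#include <cassert>

// Convert an internal (x10) index or delta from a source stream's range to a target
// stream's range, rounding to nearest. Both maxima are in internal units (UI max * 10).
int rescaleIndex(int index, int srcMaxInternal, int dstMaxInternal) {
    return (index * dstMaxInternal + srcMaxInternal / 2) / srcMaxInternal;
}

// Convert an internal index back to a UI/native index, rounding to nearest,
// as the (index + 5) / 10 expressions in applyAllVolumes() do.
int toUiIndex(int internalIndex) {
    return (internalIndex + 5) / 10;
}
```

With a hypothetical 7-step source stream aliased to a 15-step stream, one key press (10 internal units) becomes 21 internal units on the alias, about 2.1 UI steps; a plain integer 1 would have lost that fractional part.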

Next, the implementation of mute():

```java
public void mute(boolean state) {
    boolean changed = false;
    synchronized (VolumeStreamState.class) {
        if (state != mIsMuted) {
            changed = true;
            mIsMuted = state;

            // Set the new mute volume. This propagates the values to
            // the audio system, otherwise the volume won't be changed
            // at the lower level.
            // Post a message so the change reaches the audio system
            sendMsg(mAudioHandler,
                    MSG_SET_ALL_VOLUMES,
                    SENDMSG_QUEUE,
                    0,
                    0,
                    this, 0);
        }
    }
    if (changed) {
        // Stream mute changed, fire the intent.
        // Broadcast the mute-state change
        Intent intent = new Intent(AudioManager.STREAM_MUTE_CHANGED_ACTION);
        intent.putExtra(AudioManager.EXTRA_VOLUME_STREAM_TYPE, mStreamType);
        intent.putExtra(AudioManager.EXTRA_STREAM_VOLUME_MUTED, state);
        sendBroadcastToAll(intent);
    }
}
```

mAudioHandler is created when AudioSystemThread starts:

```java
/** Thread that handles native AudioSystem control. */
private class AudioSystemThread extends Thread {
    AudioSystemThread() {
        super("AudioService");
    }

    @Override
    public void run() {
        // Set this thread up so the handler will work on it
        Looper.prepare();

        synchronized(AudioService.this) {
            mAudioHandler = new AudioHandler();

            // Notify that the handler has been created
            AudioService.this.notify();
        }

        // Listen for volume change requests that are set by VolumePanel
        Looper.loop();
    }
}
```

AudioHandler processes these messages in its overridden handleMessage():

```java
public void handleMessage(Message msg) {
    switch (msg.what) {
        case MSG_SET_DEVICE_VOLUME:
            setDeviceVolume((VolumeStreamState) msg.obj, msg.arg1);
            break;
        case MSG_SET_ALL_VOLUMES:
            setAllVolumes((VolumeStreamState) msg.obj);
            break;
        case MSG_PERSIST_VOLUME:
            persistVolume((VolumeStreamState) msg.obj, msg.arg1);
            break;
        ......
    }
```
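The AudioSystemThread/AudioHandler pair is a single-consumer message loop: any thread posts, one dedicated thread handles messages in order. The C++ sketch below is an analog built on std::thread and std::condition_variable, not Android's Looper/Handler; `HandlerThread` and the `MSG_QUIT` message are hypothetical. Unlike AudioService, which must wait() until the thread has created mAudioHandler, this queue exists before the thread starts, so no handshake is needed.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

enum Msg { MSG_SET_DEVICE_VOLUME, MSG_SET_ALL_VOLUMES, MSG_PERSIST_VOLUME, MSG_QUIT };

class HandlerThread {
public:
    explicit HandlerThread(std::function<void(Msg)> handle)
        : handle_(std::move(handle)), thread_([this] { loop(); }) {}

    // Posting MSG_QUIT last means every earlier message is handled before join() returns.
    ~HandlerThread() {
        post(MSG_QUIT);
        thread_.join();
    }

    void post(Msg m) {  // like sendMsg(..., SENDMSG_QUEUE, ...): append and wake the loop
        {
            std::lock_guard<std::mutex> lock(mu_);
            queue_.push_back(m);
        }
        cv_.notify_one();
    }

private:
    void loop() {
        for (;;) {
            std::unique_lock<std::mutex> lock(mu_);
            cv_.wait(lock, [this] { return !queue_.empty(); });
            Msg m = queue_.front();
            queue_.pop_front();
            lock.unlock();
            if (m == MSG_QUIT) return;
            handle_(m);  // plays the role of AudioHandler.handleMessage()'s switch
        }
    }

    std::function<void(Msg)> handle_;
    std::mutex mu_;
    std::condition_variable cv_;
    std::deque<Msg> queue_;
    std::thread thread_;  // declared last so the members above exist before loop() runs
};
```

Serializing everything on one thread is what guarantees that volume and mute updates reach the native layer in the order they were posted.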

The message posted by mute() is MSG_SET_ALL_VOLUMES, so let's look at setAllVolumes():

```java
private void setAllVolumes(VolumeStreamState streamState) {
    // Apply volume
    streamState.applyAllVolumes();

    // Apply change to all streams using this one as alias
    int numStreamTypes = AudioSystem.getNumStreamTypes();
    for (int streamType = numStreamTypes - 1; streamType >= 0; streamType--) {
        if (streamType != streamState.mStreamType &&
                mStreamVolumeAlias[streamType] == streamState.mStreamType) {
            mStreamStates[streamType].applyAllVolumes();
        }
    }
}
```
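The alias propagation in setAllVolumes() can be isolated as a pure function. In this C++ sketch, `alias[i]` plays the role of mStreamVolumeAlias[i] and the returned vector lists, in call order, the streams whose applyAllVolumes() would run; the function name and the alias table in the test are hypothetical.

```cpp
#include <cassert>
#include <vector>

// Streams touched when `changedStream` is applied: itself first, then every other
// stream aliased to it, scanning from the highest stream type down like the Java loop.
std::vector<int> streamsToApply(int changedStream, const std::vector<int>& alias) {
    std::vector<int> applied{changedStream};
    for (int s = static_cast<int>(alias.size()) - 1; s >= 0; --s) {
        if (s != changedStream && alias[s] == changedStream) {
            applied.push_back(s);
        }
    }
    return applied;
}
```

With a table where streams 1 and 5 alias stream 2, muting stream 2 also re-applies streams 5 and 1.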

applyAllVolumes() calls AudioSystem.setStreamVolumeIndex() to set the volume from the index:

```java
public void applyAllVolumes() {
    synchronized (VolumeStreamState.class) {
        // apply device specific volumes first
        int index;
        for (int i = 0; i < mIndexMap.size(); i++) {
            final int device = mIndexMap.keyAt(i);
            if (device != AudioSystem.DEVICE_OUT_DEFAULT) {
                if (mIsMuted) {
                    index = 0;
                } else if ((device & AudioSystem.DEVICE_OUT_ALL_A2DP) != 0 &&
                        mAvrcpAbsVolSupported) {
                    index = getAbsoluteVolumeIndex((getIndex(device) + 5)/10);
                } else if ((device & mFullVolumeDevices) != 0) {
                    index = (mIndexMax + 5)/10;
                } else {
                    index = (mIndexMap.valueAt(i) + 5)/10;
                }
                AudioSystem.setStreamVolumeIndex(mStreamType, index, device);
            }
        }
        // apply default volume last: by convention , default device volume will be used
        // by audio policy manager if no explicit volume is present for a given device type
        if (mIsMuted) {
            index = 0;
        } else {
            index = (getIndex(AudioSystem.DEVICE_OUT_DEFAULT) + 5)/10;
        }
        AudioSystem.setStreamVolumeIndex(
                mStreamType, index, AudioSystem.DEVICE_OUT_DEFAULT);
    }
}
```
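applyAllVolumes() is where a mute becomes an index of 0 for the native layer. This C++ sketch restates the per-device value it computes; `DeviceKind` and `nativeIndex` are hypothetical simplifications of the device-mask checks, and the A2DP absolute-volume branch is omitted.

```cpp
#include <cassert>

// Simplified stand-in for the (device & mask) checks in the Java code.
enum class DeviceKind { Normal, FullVolume };

// Value passed to AudioSystem.setStreamVolumeIndex() for one device.
// Internal indices are x10, so (x + 5) / 10 rounds to the nearest UI step.
int nativeIndex(bool muted, DeviceKind kind, int internalIndex, int internalMax) {
    if (muted) {
        return 0;                       // a muted stream is pushed down as index 0
    }
    if (kind == DeviceKind::FullVolume) {
        return (internalMax + 5) / 10;  // full-volume devices always get the maximum
    }
    return (internalIndex + 5) / 10;
}
```

Because mute is expressed as index 0 rather than a separate flag, the stored (unmuted) index survives in mIndexMap and is restored on unmute.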

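AudioSystem.setStreamVolumeIndex() is a native method declared in AudioSystem.java (frameworks\base\media\java\android\media\AudioSystem.java), not in AudioService.java, so from here we enter the native layer. The corresponding JNI method is registered in frameworks\base\core\jni\android_media_AudioSystem.cpp: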

```cpp
{"setStreamVolumeIndex","(III)I", (void *)android_media_AudioSystem_setStreamVolumeIndex},
```

It is implemented in frameworks\av\media\libaudioclient\AudioSystem.cpp:

```cpp
status_t AudioSystem::setStreamVolumeIndex(audio_stream_type_t stream,
                                           int index,
                                           audio_devices_t device)
{
    const sp<IAudioPolicyService>& aps = AudioSystem::get_audio_policy_service();
    if (aps == 0) return PERMISSION_DENIED;
    return aps->setStreamVolumeIndex(stream, index, device);
}
```

First, what object does AudioSystem::get_audio_policy_service() return?

```cpp
// establish binder interface to AudioPolicy service
const sp<IAudioPolicyService> AudioSystem::get_audio_policy_service()
{
    sp<IAudioPolicyService> ap;
    sp<AudioPolicyServiceClient> apc;
    {
        Mutex::Autolock _l(gLockAPS);
        if (gAudioPolicyService == 0) {
            sp<IServiceManager> sm = defaultServiceManager();
            sp<IBinder> binder;
            do {
                // Get the audio policy service from ServiceManager (see Binder basics)
                binder = sm->getService(String16("media.audio_policy"));
                if (binder != 0)
                    break;
                ALOGW("AudioPolicyService not published, waiting...");
                usleep(500000); // 0.5 s
            } while (true);
            if (gAudioPolicyServiceClient == NULL) {
                gAudioPolicyServiceClient = new AudioPolicyServiceClient();
            }
            binder->linkToDeath(gAudioPolicyServiceClient);
            // Convert the binder object with interface_cast and return it as the client-side object
            gAudioPolicyService = interface_cast<IAudioPolicyService>(binder);
            LOG_ALWAYS_FATAL_IF(gAudioPolicyService == 0);
            apc = gAudioPolicyServiceClient;
            // Make sure callbacks can be received by gAudioPolicyServiceClient
            ProcessState::self()->startThreadPool();
        }
        ap = gAudioPolicyService;
    }
    if (apc != 0) {
        ap->registerClient(apc);
    }

    return ap;
}
```
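The lookup-retry-cache pattern in get_audio_policy_service() can be expressed generically. In this C++ sketch, `CachedService` is hypothetical and `lookup` stands in for defaultServiceManager()->getService(...), returning nullptr while the service is not yet published. As in the original, the retry loop runs while holding the lock, so concurrent callers simply wait for the first lookup to finish.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <memory>
#include <mutex>
#include <thread>
#include <utility>

template <typename Service>
class CachedService {
public:
    explicit CachedService(std::function<std::shared_ptr<Service>()> lookup,
                           std::chrono::milliseconds retryDelay = std::chrono::milliseconds(500))
        : lookup_(std::move(lookup)), retryDelay_(retryDelay) {}

    // First call blocks until the service is available; later calls return the cached handle.
    std::shared_ptr<Service> get() {
        std::lock_guard<std::mutex> lock(mu_);
        while (!cached_) {
            cached_ = lookup_();
            if (!cached_) std::this_thread::sleep_for(retryDelay_);  // "not published, waiting..."
        }
        return cached_;
    }

private:
    std::function<std::shared_ptr<Service>()> lookup_;
    std::chrono::milliseconds retryDelay_;
    std::mutex mu_;
    std::shared_ptr<Service> cached_;
};
```

The real code additionally registers a death recipient (linkToDeath) so the cached proxy can be cleared if the service process dies; this sketch omits that.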

The interface_cast used in get_audio_policy_service() is a template defined in frameworks\native\include\binder\IInterface.h:

```cpp
template<typename INTERFACE>
inline sp<INTERFACE> interface_cast(const sp<IBinder>& obj)
{
    return INTERFACE::asInterface(obj);
}
```

Expanded, this returns IAudioPolicyService::asInterface(binder).

Since asInterface is resolved in the IAudioPolicyService scope, look for it in IAudioPolicyService.h.

IAudioPolicyService contains DECLARE_META_INTERFACE(AudioPolicyService);, and this macro is also defined in frameworks\native\include\binder\IInterface.h:

```cpp
#define DECLARE_META_INTERFACE(INTERFACE)                               \
    static const ::android::String16 descriptor;                        \
    static ::android::sp<I##INTERFACE> asInterface(                     \
            const ::android::sp<::android::IBinder>& obj);              \
    virtual const ::android::String16& getInterfaceDescriptor() const;  \
    I##INTERFACE();                                                     \
    virtual ~I##INTERFACE();                                            \
```

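The asInterface() defined by IMPLEMENT_META_INTERFACE (below) first asks obj->queryLocalInterface() for a local object. When there is none, as for a caller in a different process from the service, the returned intr is new Bp##INTERFACE(obj), where INTERFACE is AudioPolicyService and obj is binder.

So the expanded call returns a BpAudioPolicyService(binder).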

```cpp
#define IMPLEMENT_META_INTERFACE(INTERFACE, NAME)                       \
    const ::android::String16 I##INTERFACE::descriptor(NAME);           \
    const ::android::String16&                                          \
            I##INTERFACE::getInterfaceDescriptor() const {              \
        return I##INTERFACE::descriptor;                                \
    }                                                                   \
    ::android::sp<I##INTERFACE> I##INTERFACE::asInterface(              \
            const ::android::sp<::android::IBinder>& obj)               \
    {                                                                   \
        ::android::sp<I##INTERFACE> intr;                               \
        if (obj != NULL) {                                              \
            intr = static_cast<I##INTERFACE*>(                          \
                obj->queryLocalInterface(                               \
                        I##INTERFACE::descriptor).get());               \
            if (intr == NULL) {                                         \
                intr = new Bp##INTERFACE(obj);                          \
            }                                                           \
        }                                                               \
        return intr;                                                    \
    }                                                                   \
    I##INTERFACE::I##INTERFACE() { }                                    \
    I##INTERFACE::~I##INTERFACE() { }                                   \
```
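The essence of asInterface() is "use the object directly if it lives in this process, otherwise wrap it in a proxy". The C++ sketch below shows just that decision with hypothetical classes (`IVolumePolicy`, `Binder`, `LocalPolicy`, `ProxyPolicy`); it is not libbinder and performs no IPC.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

struct IVolumePolicy {
    virtual ~IVolumePolicy() = default;
    virtual std::string who() const = 0;
};

struct Binder {
    virtual ~Binder() = default;
    // Non-null only for a same-process object, like BBinder::queryLocalInterface().
    virtual std::shared_ptr<IVolumePolicy> queryLocalInterface() { return nullptr; }
};

// Plays the role of the service implementation (a BnXxx subclass).
struct LocalPolicy : IVolumePolicy, Binder, std::enable_shared_from_this<LocalPolicy> {
    std::string who() const override { return "local"; }
    std::shared_ptr<IVolumePolicy> queryLocalInterface() override { return shared_from_this(); }
};

// Plays the role of BpAudioPolicyService; remote_ plays the role of mRemote.
struct ProxyPolicy : IVolumePolicy {
    explicit ProxyPolicy(std::shared_ptr<Binder> remote) : remote_(std::move(remote)) {}
    std::string who() const override { return "proxy"; }
    std::shared_ptr<Binder> remote_;
};

// Same decision as the macro-generated I##INTERFACE::asInterface().
std::shared_ptr<IVolumePolicy> asInterface(const std::shared_ptr<Binder>& obj) {
    if (!obj) return nullptr;
    if (auto local = obj->queryLocalInterface()) return local;
    return std::make_shared<ProxyPolicy>(obj);
}
```

Callers always program against the interface type, so the same client code works whether the service is in-process or remote.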

Back in AudioSystem.cpp, aps->setStreamVolumeIndex() therefore calls BpAudioPolicyService(binder)->setStreamVolumeIndex(stream, index, device).

BpAudioPolicyService is defined in IAudioPolicyService.cpp:

```cpp
virtual status_t setStreamVolumeIndex(audio_stream_type_t stream,
                                      int index,
                                      audio_devices_t device)
{
    Parcel data, reply;
    // Pack the interface descriptor plus stream, index and device into data
    data.writeInterfaceToken(IAudioPolicyService::getInterfaceDescriptor());
    data.writeInt32(static_cast <uint32_t>(stream));
    data.writeInt32(index);
    data.writeInt32(static_cast <uint32_t>(device));
    // Send data with the SET_STREAM_VOLUME code via transact(); &reply receives the returned value
    remote()->transact(SET_STREAM_VOLUME, data, &reply);
    return static_cast <status_t> (reply.readInt32());
}
```

remote() comes from the inheritance chain BpAudioPolicyService -> BpInterface -> BpRefBase and is defined in class BpRefBase:

```cpp
inline IBinder* remote() { return mRemote; }
IBinder* const mRemote;
```

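remote() is inline, and mRemote is a const pointer assigned only once: it is the BpBinder object created when the remote AudioPolicyService was obtained earlier (by then ProcessState has opened the binder driver, obtained a file descriptor and memory-mapped the transaction buffer). So the call goes to transact() in BpBinder.cpp: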

```cpp
status_t BpBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    // Once a binder has died, it will never come back to life.
    if (mAlive) {
        status_t status = IPCThreadState::self()->transact(
            mHandle, code, data, reply, flags);
        if (status == DEAD_OBJECT) mAlive = 0;
        return status;
    }

    return DEAD_OBJECT;
}
```

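This calls IPCThreadState::transact() in IPCThreadState.cpp; IPCThreadState is where the actual exchange with the binder driver happens. On the client side, transact() packs the request (writeTransactionData()) and waits for the reply (waitForResponse()). On the service side, binder threads run void IPCThreadState::joinThreadPool(bool isMain), which loops on result = getAndExecuteCommand(); to process incoming commands. For a BR_TRANSACTION, status_t IPCThreadState::executeCommand(int32_t cmd) calls transact() on the target BBinder: reinterpret_cast<BBinder*>(tr.cookie)->transact(tr.code, buffer, &reply, tr.flags) for an ordinary service such as AudioPolicyService, or error = the_context_object->transact(tr.code, buffer, &reply, tr.flags); (declared as sp<BBinder> the_context_object) for the context manager. Either way the target is a BBinder, defined in Binder.cpp: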

```cpp
status_t BBinder::transact(
    uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    data.setDataPosition(0);

    status_t err = NO_ERROR;
    switch (code) {
        case PING_TRANSACTION:
            reply->writeInt32(pingBinder());
            break;
        default:
            err = onTransact(code, data, reply, flags);
            break;
    }

    if (reply != NULL) {
        reply->setDataPosition(0);
    }

    return err;
}
```

This calls err = onTransact(code, data, reply, flags);. Because of the inheritance hierarchy, the call lands in AudioPolicyService::onTransact():

```cpp
status_t AudioPolicyService::onTransact(
        uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    return BnAudioPolicyService::onTransact(code, data, reply, flags);
}
```

The SET_STREAM_VOLUME case of BnAudioPolicyService::onTransact() in IAudioPolicyService.cpp:

```cpp
case SET_STREAM_VOLUME: {
    CHECK_INTERFACE(IAudioPolicyService, data, reply);
    audio_stream_type_t stream =
            static_cast <audio_stream_type_t>(data.readInt32());
    int index = data.readInt32();
    audio_devices_t device = static_cast <audio_devices_t>(data.readInt32());
    reply->writeInt32(static_cast <uint32_t>(setStreamVolumeIndex(stream,
                                                                  index,
                                                                  device)));
    return NO_ERROR;
} break;
```
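The proxy and stub above are mirror images: the proxy writes the descriptor and arguments in a fixed order, and the stub checks the descriptor and reads them back in the same order. The C++ sketch below shows that contract with a hypothetical `ToyParcel` that holds only int32 values and a descriptor string; the transaction code and the error values (other than NO_ERROR = 0) are placeholders, the descriptor string is assumed, and "transact" is a direct function call instead of a trip through the binder driver.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for android::Parcel.
class ToyParcel {
public:
    void writeInt32(int32_t v) { ints_.push_back(v); }
    int32_t readInt32() { return pos_ < ints_.size() ? ints_[pos_++] : 0; }
    void writeInterfaceToken(const std::string& d) { token_ = d; }
    bool checkInterface(const std::string& d) const { return token_ == d; }
private:
    std::vector<int32_t> ints_;
    std::size_t pos_ = 0;
    std::string token_;
};

constexpr uint32_t SET_STREAM_VOLUME = 1;                        // placeholder code
const std::string kDescriptor = "android.media.IAudioPolicyService";
constexpr int32_t NO_ERROR = 0, BAD_VALUE = -1, UNKNOWN_TRANSACTION = -2;  // placeholders except NO_ERROR

using Impl = int32_t (*)(int32_t stream, int32_t index, int32_t device);

// Stub side (like BnAudioPolicyService::onTransact): check, unpack in order, call, reply.
int32_t onTransact(uint32_t code, ToyParcel& data, ToyParcel& reply, Impl impl) {
    if (code != SET_STREAM_VOLUME) return UNKNOWN_TRANSACTION;
    if (!data.checkInterface(kDescriptor)) return BAD_VALUE;     // like CHECK_INTERFACE
    int32_t stream = data.readInt32();
    int32_t index = data.readInt32();
    int32_t device = data.readInt32();
    reply.writeInt32(impl(stream, index, device));
    return NO_ERROR;
}

// Proxy side (like BpAudioPolicyService::setStreamVolumeIndex): pack, transact, read status.
int32_t proxySetStreamVolumeIndex(int32_t stream, int32_t index, int32_t device, Impl impl) {
    ToyParcel data, reply;
    data.writeInterfaceToken(kDescriptor);
    data.writeInt32(stream);
    data.writeInt32(index);
    data.writeInt32(device);
    onTransact(SET_STREAM_VOLUME, data, reply, impl);
    return reply.readInt32();
}
```

If the two sides ever disagree on order or types, the stub silently reads wrong values, which is why this marshalling code is normally generated or kept side by side in one file.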

In the SET_STREAM_VOLUME case, reply->writeInt32(static_cast<uint32_t>(setStreamVolumeIndex(stream, index, device))); calls setStreamVolumeIndex(). So after AudioPolicyService delegates to BnAudioPolicyService::onTransact(), control comes back to AudioPolicyService's own setStreamVolumeIndex() (a virtual call), which in Android 8.0 is implemented in AudioPolicyInterfaceImpl.cpp:

```cpp
status_t AudioPolicyService::setStreamVolumeIndex(audio_stream_type_t stream,
                                                  int index,
                                                  audio_devices_t device)
{
    if (mAudioPolicyManager == NULL) {
        return NO_INIT;
    }
    if (!settingsAllowed()) {
        return PERMISSION_DENIED;
    }
    if (uint32_t(stream) >= AUDIO_STREAM_PUBLIC_CNT) {
        return BAD_VALUE;
    }
    Mutex::Autolock _l(mLock);
    return mAudioPolicyManager->setStreamVolumeIndex(stream,
                                                    index,
                                                    device);
}
```

This calls mAudioPolicyManager->setStreamVolumeIndex(stream, index, device);, i.e. AudioPolicyManager::setStreamVolumeIndex() in AudioPolicyManager.cpp:

```cpp
status_t AudioPolicyManager::setStreamVolumeIndex(audio_stream_type_t stream,
                                                  int index,
                                                  audio_devices_t device)
{
    ......
            if (applyVolume) {
                //FIXME: workaround for truncated touch sounds
                // delayed volume change for system stream to be removed when the problem is
                // handled by system UI
                status_t volStatus =
                        checkAndSetVolume((audio_stream_type_t)curStream, index, desc, curDevice,
                            (stream == AUDIO_STREAM_SYSTEM) ? TOUCH_SOUND_FIXED_DELAY_MS : 0);
                if (volStatus != NO_ERROR) {
                    status = volStatus;
                }
            }
    .......
}
```

AudioPolicyManager performs a series of processing steps here; the key one is checkAndSetVolume():

```cpp
status_t AudioPolicyManager::checkAndSetVolume(audio_stream_type_t stream,
                                               int index,
                                               const sp<AudioOutputDescriptor>& outputDesc,
                                               audio_devices_t device,
                                               int delayMs,
                                               bool force)
{
    // do not change actual stream volume if the stream is muted
    if (outputDesc->mMuteCount[stream] != 0) {
        ALOGVV("checkAndSetVolume() stream %d muted count %d",
              stream, outputDesc->mMuteCount[stream]);
        return NO_ERROR;
    }
    audio_policy_forced_cfg_t forceUseForComm =
            mEngine->getForceUse(AUDIO_POLICY_FORCE_FOR_COMMUNICATION);
    // do not change in call volume if bluetooth is connected and vice versa
    if ((stream == AUDIO_STREAM_VOICE_CALL && forceUseForComm == AUDIO_POLICY_FORCE_BT_SCO) ||
        (stream == AUDIO_STREAM_BLUETOOTH_SCO && forceUseForComm != AUDIO_POLICY_FORCE_BT_SCO)) {
        ALOGV("checkAndSetVolume() cannot set stream %d volume with force use = %d for comm",
             stream, forceUseForComm);
        return INVALID_OPERATION;
    }

    if (device == AUDIO_DEVICE_NONE) {
        device = outputDesc->device();
    }

    float volumeDb = computeVolume(stream, index, device);
    if (outputDesc->isFixedVolume(device)) {
        volumeDb = 0.0f;
    }

    // In a real project, this is where you can hook in your own middleware
    // Sheldon Android Patch Begin
    if (stream == AUDIO_STREAM_MUSIC) {
        if ((device & AUDIO_DEVICE_OUT_SPEAKER) ||
            (device & AUDIO_DEVICE_OUT_HDMI_ARC) ||
            (device & AUDIO_DEVICE_OUT_SPDIF) ||
            (device & AUDIO_DEVICE_OUT_WIRED_HEADPHONE)) {
            sp<IAudioFlinger> af = AudioSystem::get_audio_flinger();
            float volumeDbToAmpl = Volume::DbToAmpl(volumeDb);

            if (af != NULL) {
                ALOGD("%s: index %d, device 0x%x, volume %f(Db %f)", __FUNCTION__, index, device, volumeDbToAmpl, volumeDb);
                af->setMasterVolume(volumeDbToAmpl);
                volumeDb = 0.0f;
            } else {
                ALOGE("%s: can Not set master volume because af is null", __FUNCTION__);
            }
        }
    }
    // Sheldon Android Patch End
    ......
    return NO_ERROR;
}
```

Log output when the mute key is reported while music is playing:

- ::04.399 D APM_AudioPolicyManager: checkAndSetVolume: index , device 0x2, volume 0.050119(Db -26.000000)

- ::12.820 D APM_AudioPolicyManager: checkAndSetVolume: index , device 0x2, volume 0.000002(Db -115.000000)
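The two logged pairs fit the standard amplitude relation amplitude = 10^(dB/20): -26 dB gives about 0.050119, and -115 dB gives about 1.8e-6, which "%f" prints as 0.000002. The C++ sketch below reproduces that conversion and the patch's device check. I assume Volume::DbToAmpl computes the same relation, and the device bit values are assumed to match AOSP's audio_devices_t definitions (the speaker bit 0x2 agrees with the log).

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Decibels to linear amplitude: 10^(dB / 20).
float dbToAmpl(float db) {
    return std::pow(10.0f, db / 20.0f);
}

// Output-device bits tested by the patch; values assumed from AOSP's audio_devices_t.
constexpr uint32_t OUT_SPEAKER = 0x2;
constexpr uint32_t OUT_WIRED_HEADPHONE = 0x8;
constexpr uint32_t OUT_HDMI_ARC = 0x40000;
constexpr uint32_t OUT_SPDIF = 0x80000;

// Mirrors the patch's condition: the MUSIC stream is routed through setMasterVolume()
// when the device mask contains any of these outputs.
bool routedThroughMasterVolume(uint32_t device) {
    return (device & (OUT_SPEAKER | OUT_HDMI_ARC | OUT_SPDIF | OUT_WIRED_HEADPHONE)) != 0;
}
```

Note that the patch then sets volumeDb to 0.0f, so the per-stream gain stays at unity and only the master volume attenuates.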

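As with AudioPolicyService above, checkAndSetVolume() obtains the AudioFlinger service through AudioSystem::get_audio_flinger(), and the Binder call ends up in the implementation in frameworks\av\services\audioflinger\AudioFlinger.cpp: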

```cpp
status_t AudioFlinger::setMasterVolume(float value)
{
    ......
    Mutex::Autolock _l(mLock);
    mMasterVolume = value;

    // Set master volume in the HALs which support it.
    for (size_t i = 0; i < mAudioHwDevs.size(); i++) {
        AutoMutex lock(mHardwareLock);
        AudioHwDevice *dev = mAudioHwDevs.valueAt(i);

        mHardwareStatus = AUDIO_HW_SET_MASTER_VOLUME;
        if (dev->canSetMasterVolume()) {
            dev->hwDevice()->setMasterVolume(value); // calls into the HAL, which talks to the hardware
        }
        mHardwareStatus = AUDIO_HW_IDLE;
    }
    ......
}
```

The mAudioHwDevs list is populated when AudioFlinger::loadHwModule(const char *name) calls loadHwModule_l():

```cpp
// loadHwModule_l() must be called with AudioFlinger::mLock held
audio_module_handle_t AudioFlinger::loadHwModule_l(const char *name)
{
    for (size_t i = 0; i < mAudioHwDevs.size(); i++) {
        if (strncmp(mAudioHwDevs.valueAt(i)->moduleName(), name, strlen(name)) == 0) {
            ALOGW("loadHwModule() module %s already loaded", name);
            return mAudioHwDevs.keyAt(i);
        }
    }

    sp<DeviceHalInterface> dev;
    // HIDL was introduced in Android 8.0; see the HIDL documentation for background
    int rc = mDevicesFactoryHal->openDevice(name, &dev);
    if (rc) {
        ALOGE("loadHwModule() error %d loading module %s", rc, name);
        return AUDIO_MODULE_HANDLE_NONE;
    }
    ......
    ALOGI("loadHwModule() Loaded %s audio interface, handle %d", name, handle);
    return handle;
}
```
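The "already loaded" check at the top of loadHwModule_l() is worth a closer look: strncmp(moduleName, name, strlen(name)) matches whenever a loaded module's name starts with `name`, so it is a prefix match rather than an exact one. The C++ sketch below keeps that behavior; `HwModuleRegistry` and its handle numbering are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <map>
#include <string>

class HwModuleRegistry {
public:
    // Returns the existing handle if a loaded module name starts with `name`,
    // otherwise "loads" the module and assigns a new handle.
    int load(const std::string& name) {
        for (const auto& entry : modules_) {
            if (std::strncmp(entry.second.c_str(), name.c_str(), name.size()) == 0) {
                return entry.first;             // "module %s already loaded"
            }
        }
        const int handle = nextHandle_++;
        modules_[handle] = name;                // AudioFlinger: openDevice() + new AudioHwDevice
        return handle;
    }

    std::size_t size() const { return modules_.size(); }

private:
    std::map<int, std::string> modules_;
    int nextHandle_ = 1;
};
```

In practice module names such as primary, a2dp, usb and r_submix do not prefix one another, so the quirk is harmless there.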

First, how mDevicesFactoryHal is initialized:

```cpp
mDevicesFactoryHal = DevicesFactoryHalInterface::create();
```

create() is implemented in frameworks\av\media\libaudiohal\DevicesFactoryHalHybrid.cpp, and all it does is new a DevicesFactoryHalHybrid.

As the name suggests, this object is a "hybrid" that owns both a DevicesFactoryHalLocal and a DevicesFactoryHalHidl object:

```cpp
DevicesFactoryHalHybrid::DevicesFactoryHalHybrid()
        : mLocalFactory(new DevicesFactoryHalLocal()),
          mHidlFactory(
#ifdef USE_LEGACY_LOCAL_AUDIO_HAL
                  nullptr
#else
                  new DevicesFactoryHalHidl()
#endif
                  ) {
}
```

In other words, mDevicesFactoryHal is a DevicesFactoryHalHybrid object, and the openDevice() called earlier is:

```cpp
status_t DevicesFactoryHalHybrid::openDevice(const char *name, sp<DeviceHalInterface> *device) {
    // Use HIDL if mHidlFactory exists and the device to open is not AUDIO_HARDWARE_MODULE_ID_A2DP
    if (mHidlFactory != 0 && strcmp(AUDIO_HARDWARE_MODULE_ID_A2DP, name) != 0) {
        return mHidlFactory->openDevice(name, device);
    }
    return mLocalFactory->openDevice(name, device);
}
```
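The routing rule fits in one function. This C++ sketch assumes AUDIO_HARDWARE_MODULE_ID_A2DP is the string "a2dp", which is consistent with the "Loaded a2dp audio interface" boot log; `pickFactory` and `Factory` are hypothetical names, and `hasHidlFactory == false` corresponds to a build with USE_LEGACY_LOCAL_AUDIO_HAL.

```cpp
#include <cassert>
#include <cstring>

enum class Factory { Hidl, Local };

// HIDL when a HIDL factory exists and the module is not A2DP; otherwise the
// in-process (legacy) factory.
Factory pickFactory(bool hasHidlFactory, const char* name) {
    const char* kA2dp = "a2dp";  // assumed value of AUDIO_HARDWARE_MODULE_ID_A2DP
    if (hasHidlFactory && std::strcmp(kA2dp, name) != 0) {
        return Factory::Hidl;
    }
    return Factory::Local;
}
```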

mDevicesFactory is assigned in the DevicesFactoryHalHidl constructor:

```cpp
DevicesFactoryHalHidl::DevicesFactoryHalHidl() {
    mDevicesFactory = IDevicesFactory::getService();
    ...
}
```

IDevicesFactory is a class generated from IDevicesFactory.hal, and its implementation is in:

hardware/interfaces/audio/2.0/default/DevicesFactory.cpp

```cpp
// Methods from ::android::hardware::audio::V2_0::IDevicesFactory follow.
Return<void> DevicesFactory::openDevice(IDevicesFactory::Device device, openDevice_cb _hidl_cb) {
    audio_hw_device_t *halDevice;
    Result retval(Result::INVALID_ARGUMENTS);
    sp<IDevice> result;
    const char* moduleName = deviceToString(device);
    if (moduleName != nullptr) {
        // Key point
        int halStatus = loadAudioInterface(moduleName, &halDevice);
        if (halStatus == OK) {
            if (device == IDevicesFactory::Device::PRIMARY) {
                result = new PrimaryDevice(halDevice);
            } else {
                result = new ::android::hardware::audio::V2_0::implementation::
                    Device(halDevice);
            }
            retval = Result::OK;
        } else if (halStatus == -EINVAL) {
            retval = Result::NOT_INITIALIZED;
        }
    }
    _hidl_cb(retval, result);
    return Void();
}
```

The key part:

```cpp
// static
int DevicesFactory::loadAudioInterface(const char *if_name, audio_hw_device_t **dev)
{
    ...
    hw_get_module_by_class(AUDIO_HARDWARE_MODULE_ID, if_name, &mod);
    ...
    audio_hw_device_open(mod, dev);
    ...
}
```

Now back to the other (legacy) branch of DevicesFactoryHalHybrid::openDevice(): if mHidlFactory does not exist, or the device to open is AUDIO_HARDWARE_MODULE_ID_A2DP (strcmp(AUDIO_HARDWARE_MODULE_ID_A2DP, name) == 0), the code calls mLocalFactory->openDevice(name, device);

```cpp
status_t DevicesFactoryHalLocal::openDevice(const char *name, sp<DeviceHalInterface> *device) {
    audio_hw_device_t *dev;
    status_t rc = load_audio_interface(name, &dev);
    if (rc == OK) {
        // This is the key step
        *device = new DeviceHalLocal(dev);
    }
    return rc;
}

static status_t load_audio_interface(const char *if_name, audio_hw_device_t **dev)
{
    ...
    rc = hw_get_module_by_class(AUDIO_HARDWARE_MODULE_ID, if_name, &mod);
    ...
    rc = audio_hw_device_open(mod, dev);
    ...
}
```

Both the legacy path and the HIDL path end up calling the following interface in audio.h:

./hardware/libhardware/include/hardware/audio.h

```c
static inline int audio_hw_device_open(const struct hw_module_t* module,
                                       struct audio_hw_device** device)
{
    return module->methods->open(module, AUDIO_HARDWARE_INTERFACE,
                                 TO_HW_DEVICE_T_OPEN(device));
}
```

This calls adev_open in audio_hw.c. The audio_hw shared libraries are loaded at boot:

- ::14.802 I AudioFlinger: loadHwModule() Loaded primary audio interface, handle

- ::14.884 I AudioFlinger: loadHwModule() Loaded a2dp audio interface, handle

- ::14.887 I AudioFlinger: loadHwModule() Loaded usb audio interface, handle

- ::14.890 I AudioFlinger: loadHwModule() Loaded r_submix audio interface, handle

Past this point, inside the HAL, everything depends on vendor-wrapped code and the hardware.

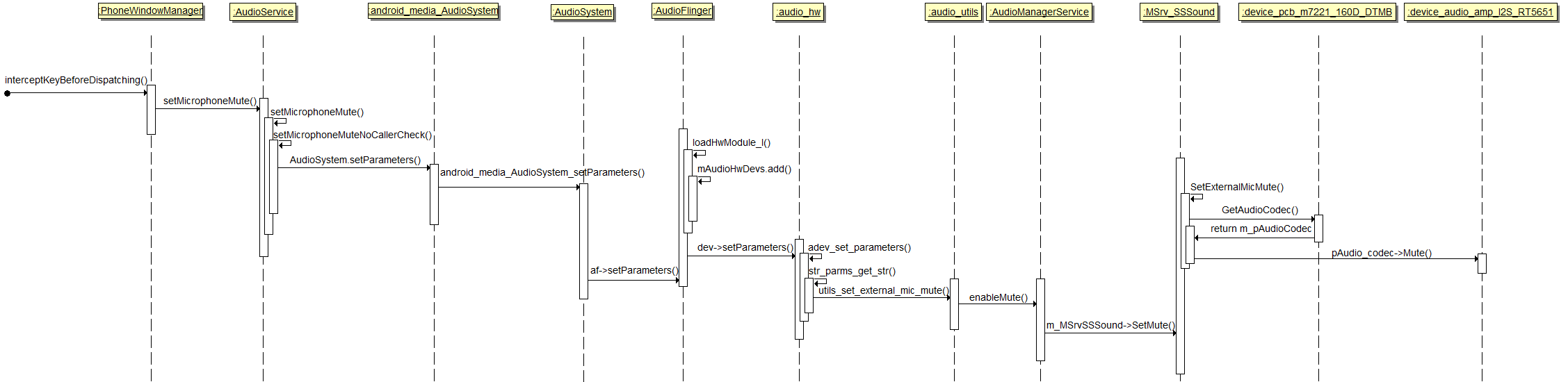
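The boundary between AudioFlinger and the vendor HAL is a table of function pointers: audio_hw_device_open() calls the module's methods->open (adev_open in audio_hw), which fills in a device struct whose own function pointers, such as the master-volume setter reached from AudioFlinger::setMasterVolume(), the framework calls later. The C++ sketch below imitates that convention with hypothetical `Toy*` structs; it is a simplification of hw_module_t, hw_module_methods_t and audio_hw_device, not their real layout, and the "audio_hw_if" id is used only as an illustrative string.

```cpp
#include <cassert>
#include <cstring>

struct ToyDevice {
    float masterVolume;
    int (*set_master_volume)(ToyDevice* dev, float volume);
};

struct ToyModule;
struct ToyModuleMethods {
    int (*open)(const ToyModule* module, const char* id, ToyDevice** device);
};

struct ToyModule {
    const char* name;
    const ToyModuleMethods* methods;
};

// "Vendor" side, playing the role of adev_open() and the HAL's master-volume setter.
static int toy_set_master_volume(ToyDevice* dev, float volume) {
    dev->masterVolume = volume;  // a real HAL would program the codec or DSP here
    return 0;
}

static int toy_open(const ToyModule*, const char* id, ToyDevice** device) {
    if (std::strcmp(id, "audio_hw_if") != 0) return -1;
    *device = new ToyDevice{1.0f, toy_set_master_volume};
    return 0;
}

static const ToyModuleMethods kToyMethods = {toy_open};
static const ToyModule kToyModule = {"primary", &kToyMethods};

// Framework side, playing the role of audio_hw_device_open().
int toy_device_open(const ToyModule* module, ToyDevice** device) {
    return module->methods->open(module, "audio_hw_if", device);
}
```

This indirection is what lets the framework stay unchanged while each vendor (here, MStar) supplies its own adev_open and device callbacks in a shared library.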

II. Microphone Mute Flow

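The MStar platform project uses an external RT5651 codec. Because the platform's ASoC framework is incomplete, the codec is controlled directly over I2C.

Below is a brief record of the control flow for the standalone codec's microphone input, from the upper layers down to the bottom: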

\frameworks\base\services\core\java\com\android\server\policy\PhoneWindowManager.java

---》interceptKeyBeforeDispatching()

---》 getAudioService().setMicrophoneMute(muteOffOn, pkgName, mCurrentUserId);

\frameworks\base\services\core\java\com\android\server\audio\AudioService.java

---》public void setMicrophoneMute(boolean on, String callingPackage, int userId)

---》setMicrophoneMuteNoCallerCheck(boolean on, int userId)

---》AudioSystem.muteMicrophone(on); // standard system path (e.g. USB sound card devices)

---》AudioSystem.setParameters(String.format("mute_external_mic_by_user=%s", on)); // you can add your own parameters for specific hardware

\frameworks\base\core\jni\android_media_AudioSystem.cpp

---》AudioSystem::setParameters(audio_io_handle_t ioHandle, const String8& keyValuePairs)

---》af->setParameters(ioHandle, keyValuePairs);

\frameworks\av\services\audioflinger\AudioFlinger.cpp

---》AudioFlinger::setParameters(audio_io_handle_t ioHandle, const String8& keyValuePairs)

---》status_t result = dev->setParameters(keyValuePairs);

\vendor\mstar\hardware\audio\audio_hw_7_0\audio_hw.cpp

---》str_parms_get_str(parms, AUDIO_PARAMETER_MUTE_EXT_MIC, value, sizeof(value)); // the HAL reads the parameter passed down and handles it

\vendor\mstar\hardware\audio\audio_hw_7_0\audio_utils.cpp

---》pAudioManagerInterface->enableMute(snMutePath);

\vendor\mstar\supernova\projects\tvos\audiomanager\libaudiomanagerservice\AudioManagerService.cpp

---》bool AudioManagerService::Client::enableMute(int32_t enMuteType)

---》m_MSrvSSSound->SetMute((SSSOUND_MUTE_TYPE)enMuteType,1);

vendor\mstar\supernova\projects\msrv\common\src\MSrv_SSSound.cpp

---》SetMute(SSSOUND_MUTE_TYPE muteType, MAPI_BOOL onOff)

---》SetMuteStatus(SSSOUND_MUTE_TYPE muteType, MAPI_BOOL onOff, MSRV_AUDIO_PROCESSOR_TYPE eType)

---》Get_mapi_audio()->SetSoundMuteStatus(_TransMSrvMuteTypeToSDKMuteType(muteType,onOff), E_AUDIOMUTESOURCE_MAINSOURCE_);

\vendor\mstar\supernova\projects\customization\MStarSDK\audio\mapi_audio_customer.cpp

---》m_pGPIOAumuteOut->SetOff();

---》SetSoundMute(SOUND_MUTE_SOURCE_ eSoundMuteSource, SOUND_MUTE_TYPE_ eOnOff)

---》void mapi_audio_customer::SetSoundMute(SOUND_MUTE_SOURCE_ eSoundMuteSource, SOUND_MUTE_TYPE_ eOnOff)

---》mapi_gpio *gptr = mapi_gpio::GetGPIO_Dev(MUTE);

vendor\mstar\supernova\projects\board\m7221\pcb\device_pcb_m7221_160D_DTMB.cpp // hardware-specific GPIO and I2C operations
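The custom mic-mute hook in this chain is just a key=value string travelling from AudioService to the HAL. The C++ sketch below shows that round trip: the framework side builds "mute_external_mic_by_user=true" (Java's String.format("%s", on) prints true or false), and the HAL side splits ';'-separated pairs and looks up the key. `parseParameters` and `shouldMuteExternalMic` are hypothetical simplifications of the str_parms helpers the real HAL uses, and treating the key as the value of AUDIO_PARAMETER_MUTE_EXT_MIC is an assumption.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>

// Framework side: the string passed to AudioSystem.setParameters().
std::string buildMuteParam(bool on) {
    return std::string("mute_external_mic_by_user=") + (on ? "true" : "false");
}

// HAL side: split "k1=v1;k2=v2" into a map.
std::map<std::string, std::string> parseParameters(const std::string& kvpairs) {
    std::map<std::string, std::string> out;
    std::stringstream ss(kvpairs);
    std::string pair;
    while (std::getline(ss, pair, ';')) {
        const auto eq = pair.find('=');
        if (eq != std::string::npos) out[pair.substr(0, eq)] = pair.substr(eq + 1);
    }
    return out;
}

// Returns the new mute state; keeps `current` when the key is absent.
bool shouldMuteExternalMic(const std::string& kvpairs, bool current) {
    const auto params = parseParameters(kvpairs);
    const auto it = params.find("mute_external_mic_by_user");  // assumed AUDIO_PARAMETER_MUTE_EXT_MIC
    if (it == params.end()) return current;
    return it->second == "true";
}
```

Unrelated parameters pass through without touching the mute state, which is why a custom key can be added without changing any framework interface.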

Sequence diagram:
