kube-router代替kube-proxy+calico

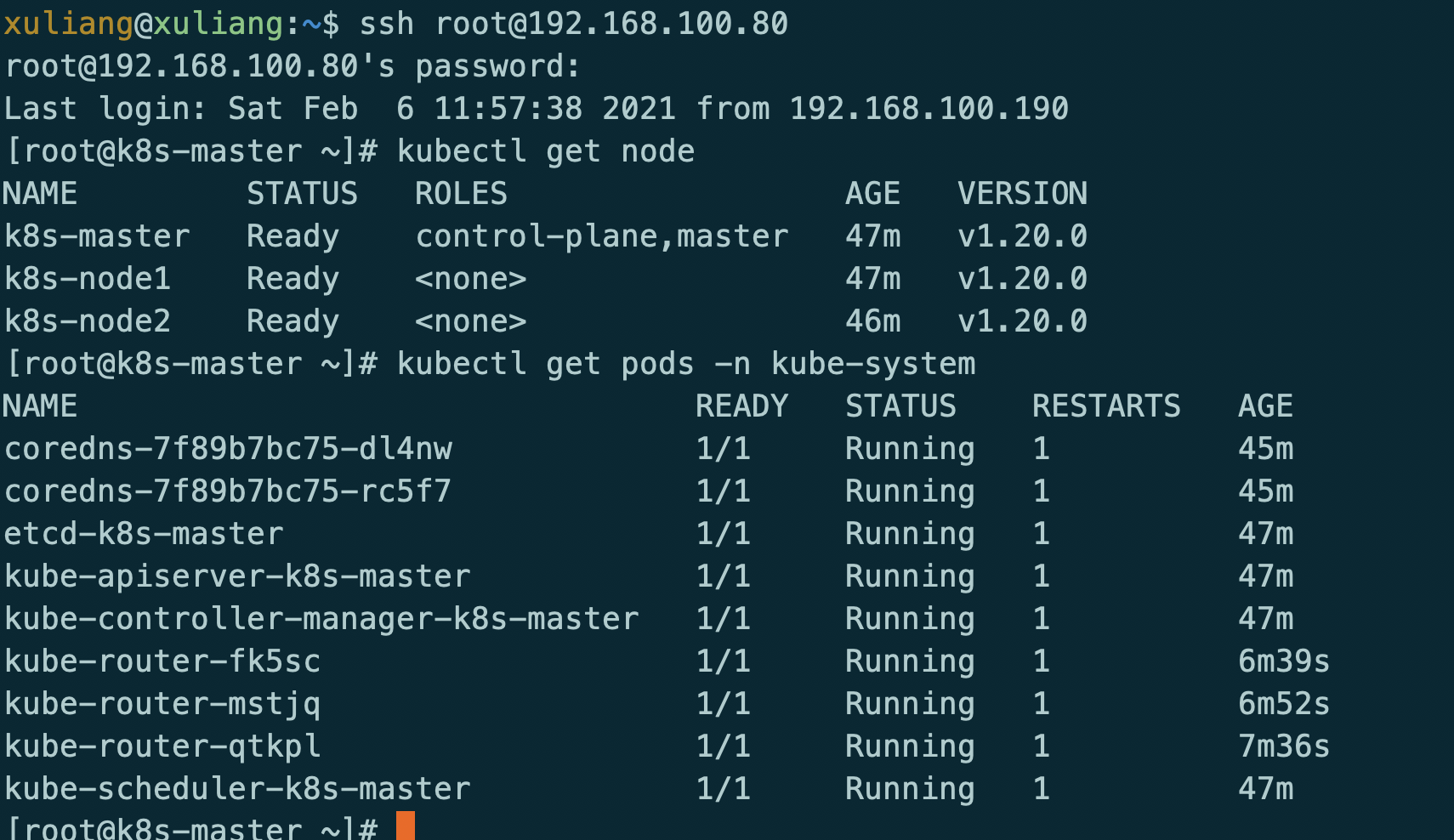

使用kubeadm安装kubernetes,并使用kube-router代替kube-proxy+calico网络。

即:kube-router providing service proxy, firewall and pod networking.

版本:kubernetes v1.20.0

安装kubernetes集群

升级linux系统内核,关闭swap,关闭防火墙,调整内核参数等自己做。

主要安装命令

yum install kubectl-1.20.0-0.x86_64 kubeadm-1.20.0-0.x86_64 kubelet-1.20.0-0.x86_64

kubeadm init --kubernetes-version=1.20.0 --apiserver-advertise-address=192.168.100.80 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16

[root@k8s-master ~]# kubeadm init --kubernetes-version=1.20.0 --apiserver-advertise-address=192.168.100.80 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.20.0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Hostname]: hostname "k8s-master" could not be reached

[WARNING Hostname]: hostname "k8s-master": lookup k8s-master on 192.168.100.96:53: no such host

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.100.80]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.100.80 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.100.80 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 13.002683 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 68mv1r.5ljn91n2yms71dth

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user: mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config Alternatively, if you are the root user, you can run: export KUBECONFIG=/etc/kubernetes/admin.conf You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root: kubeadm join 192.168.100.80:6443 --token 68mv1r.5ljn91n2yms71dth \

--discovery-token-ca-cert-hash sha256:2a29deaa942a7eacb055f608caa686d9c59cb34abb0365b32c22d959b2327dc8

[root@k8s-master ~]# rm -rf .kube/

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master ~]# kubectl get node

安装完成后

安装网络

源文件地址:https://github.com/cloudnativelabs/kube-router/blob/master/daemonset/kubeadm-kuberouter.yaml

kubectl apply -f kubeadm-kuberouter.yaml

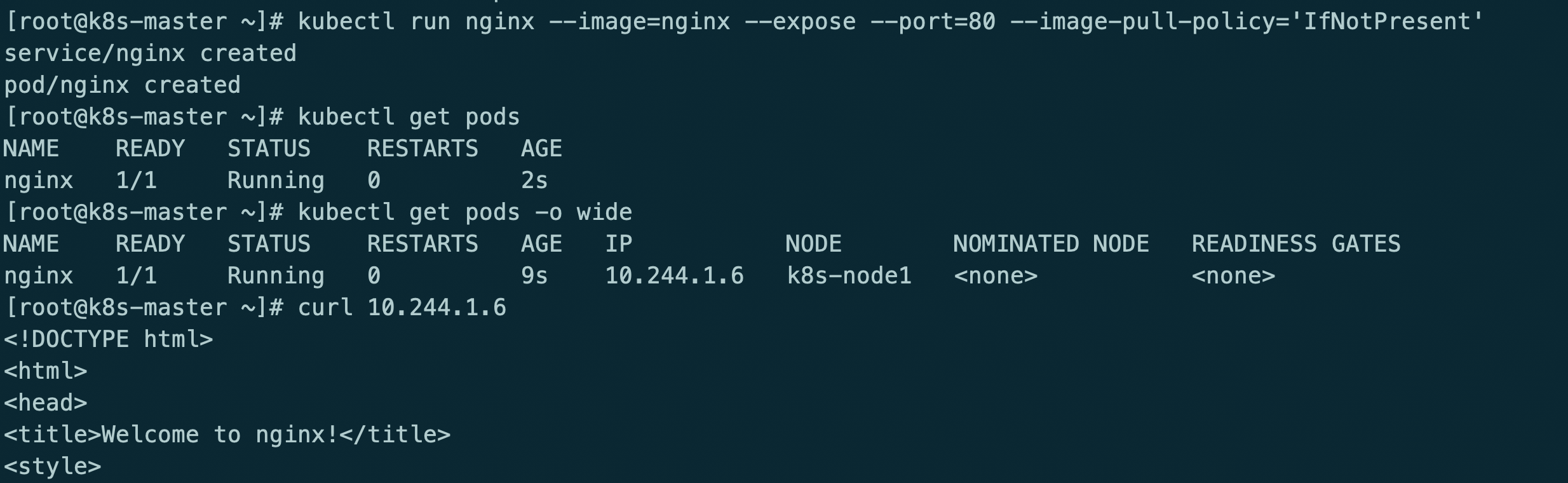

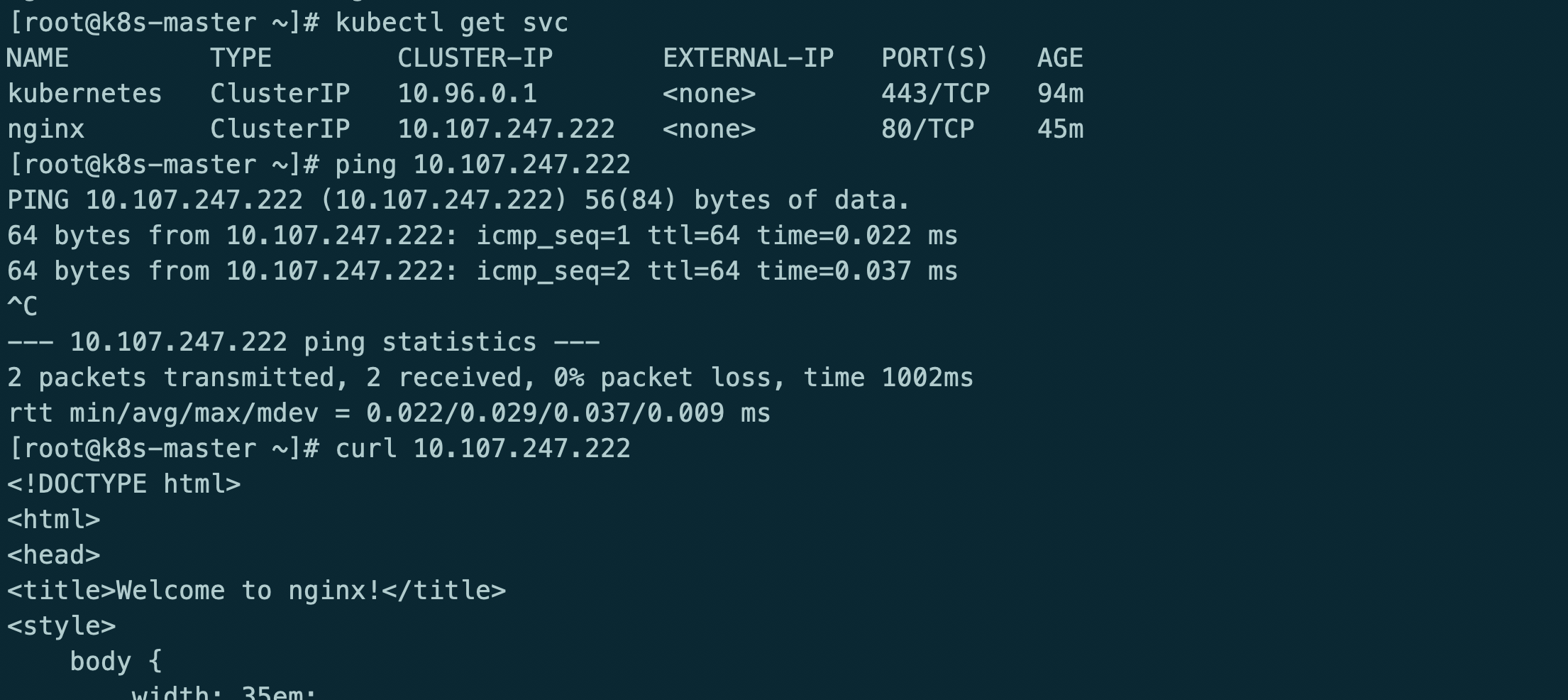

安装服务测试

kubectl run nginx --image=nginx --expose --port=80 --image-pull-policy='IfNotPresent'

此时kube-router只提供网络功能,kube-proxy提供的service和防火墙策略

kube-router providing service proxy, firewall and pod networking.

参考地址:https://github.com/cloudnativelabs/kube-router/blob/master/docs/kubeadm.md

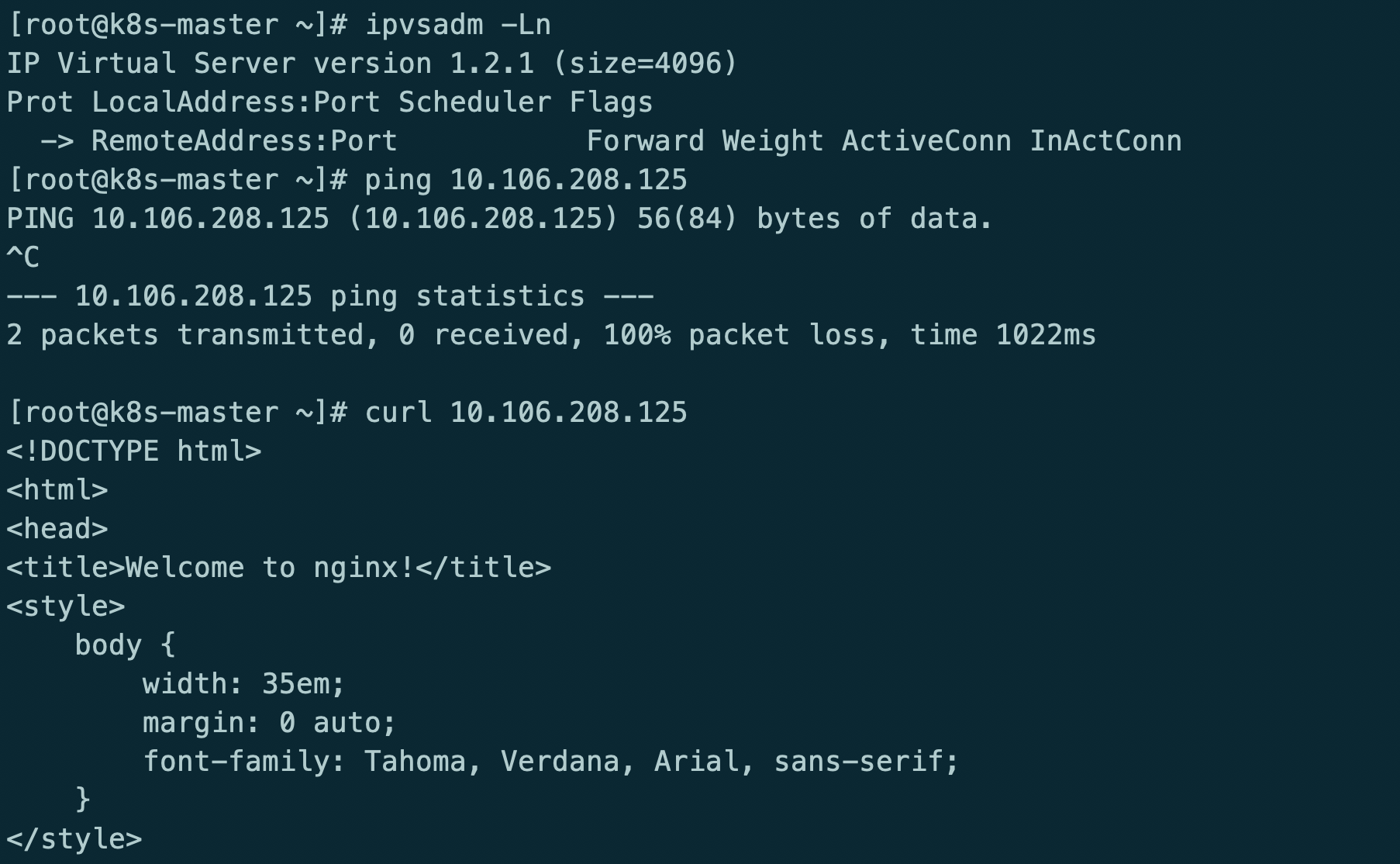

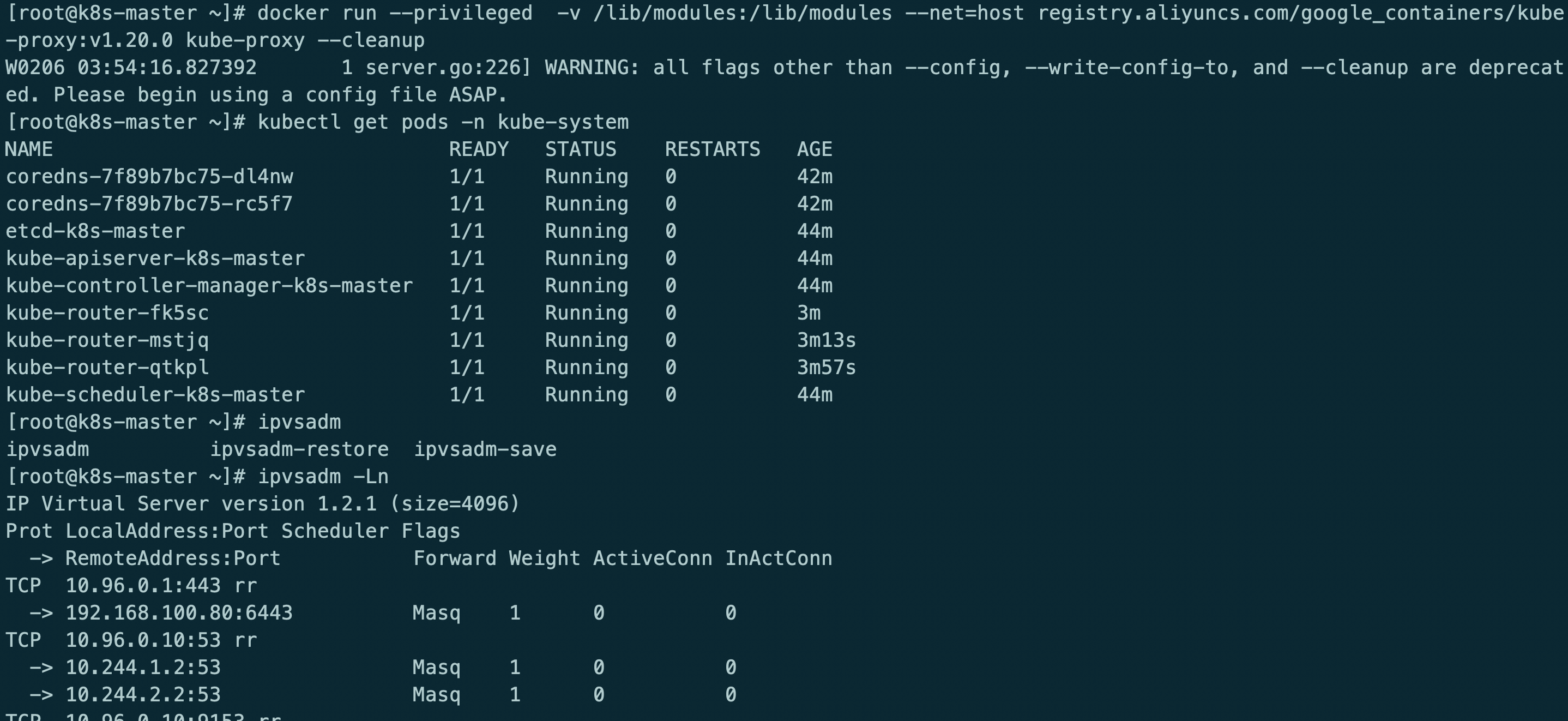

kubectl delete -f kubeadm-kuberouter.yaml

kubectl apply -f kubeadm-kuberouter-all-features.yaml

kubectl -n kube-system delete ds kube-proxy

docker run --privileged -v /lib/modules:/lib/modules --net=host registry.aliyuncs.com/google_containers/kube-proxy:v1.20.0 kube-proxy --cleanup

kube-router模式使用ipvs rr策略

最后在重启服务器

测试服务

格式化集群

kubectl drain k8s-master --delete-local-data --force --ignore-daemonsets

kubectl drain k8s-node1 --delete-local-data --force --ignore-daemonsets

kubectl drain k8s-node2 --delete-local-data --force --ignore-daemonsets

kubectl delete node k8s-master

rm -rf /etc/cni/net.d/10-kuberouter.conflist

kubeadm reset

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

ipvsadm -C

reboot

kube-router代替kube-proxy+calico的更多相关文章

- 《kubernetes + .net core 》dev ops部分

目录 1.kubernetes 预备知识 1.1 集群资源 1.1.1 role 1.1.2 namespace 1.1.3 node 1.1.4 persistent volume 1.1.5 st ...

- k8s集群部署(2)

一.利用ansible部署kubernetes准备阶段 1.集群介绍 基于二进制方式部署k8s集群和利用ansible-playbook实现自动化:二进制方式部署有助于理解系统各组件的交互原理和熟悉组 ...

- k8s1.20环境搭建部署(二进制版本)

1.前提知识 1.1 生产环境部署K8s集群的两种方式 kubeadm Kubeadm是一个K8s部署工具,提供kubeadm init和kubeadm join,用于快速部署Kubernetes集群 ...

- kubernetes入门之skydns

部署kubernetes dns服务 kubernetes可以为pod提供dns内部域名解析服务.其主要作用是为pod提供可以直接通过service的名字解析为对应service的ip的功能. 部署k ...

- CNCF CloudNative Landscape

cncf landscape CNCF Cloud Native Interactive Landscape 1. App Definition and Development 1. Database ...

- kubectl version报did you specify the right host or port

现象: [root@localhost shell]# kubectl version Client Version: version.Info{Major:", GitVersion:&q ...

- centos7.5单机yum安装kubernetes

1.系统配置 centos7.5 docker 1.13.1 centos7下安装docker 2.关闭防火墙,selinux,swapoff systemctl disable firewalld ...

- 二进制方式部署Kubernetes 1.6.0集群(开启TLS)

本节内容: Kubernetes简介 环境信息 创建TLS加密通信的证书和密钥 下载和配置 kubectl(kubecontrol) 命令行工具 创建 kubeconfig 文件 创建高可用 etcd ...

- kubernetes 集群安全配置

版本:v1.10.0-alpha.3 openssl genrsa -out ca.key 2048 openssl req -x509 -new -nodes -key ca.key -subj & ...

- kubernetes1.5.2集群部署过程--安全模式

使用https安全模式部署kubernetes集群,能保证集群通讯安全.有效限制非授权用户访问.但部署比非安全模式复杂的多. 本文为etcd.kubernetes集群中各个组件配置证书认证,所有组件通 ...

随机推荐

- 翻译:《实用的Python编程》03_00_Overview

目录 | 上一节 (2 处理数据) | 下一节 (4 类和对象) 3. 程序组织 到目前为止,我们已经学习了一些 Python 基础知识并编写了一些简短的脚本.但是,当开始编写更大的程序时,我们会想要 ...

- Nginx解析漏洞复现以及哥斯拉连接Webshell实践

Nginx解析漏洞复现以及哥斯拉连接Webshell实践 目录 1. 环境 2. 过程 2.1 vulhub镜像拉取 2.2 漏洞利用 2.3 webshell上传 2.4 哥斯拉Webshell连接 ...

- Redis之面试连环炮

目录 1.简单介绍一下Redis 2.分布式缓存常见的技术选型方案有哪些? 3.Redis和Memcached的区别和共同点 4. 缓存数据的处理流程是怎样的? 5. 为什么要用 Redis/为什么要 ...

- Codeforces 598D (ccpc-wannafly camp day1) Igor In the Museum

http://codeforces.com/problemset/problem/598/D 分析:BFS,同一连通区域的周长一样,但查询过多会导致TLE,所以要将连通区域的答案储存,下次查询到该连通 ...

- 基于角色访问控制RBAC权限模型的动态资源访问权限管理实现

RBAC权限模型(Role-Based Access Control) 前面主要介绍了元数据管理和业务数据的处理,通常一个系统都会有多个用户,不同用户具有不同的权限,本文主要介绍基于RBAC动态权限管 ...

- MindSpore:基于本地差分隐私的 Bandit 算法

摘要:本文将先简单介绍Bandit 问题和本地差分隐私的相关背景,然后介绍基于本地差分隐私的 Bandit 算法,最后通过一个简单的电影推荐场景来验证 LDP LinUCB 算法. Bandit问题是 ...

- FreeBSD 家图谱

https://cgit.freebsd.org/src/tree/share/misc/bsd-family-tree

- 如何使用jQuery $.post() 方法实现前后台数据传递

基础方法为 $.post(URL,data,callback); 参数介绍: 1.URL 参数规定您希望请求的 URL. 2.data 参数规定连同请求发送的数据. 3.callback 参数是请求成 ...

- requirejs的用法

requirejs的用法 2014年11月6日 17164次浏览 之前我的一片文章介绍过requirejs,具体地址是:http://www.haorooms.com/post/RequireJS_m ...

- hdu 4622 (hash+“map”)

题目链接:https://vjudge.net/problem/HDU-4622 题意:给定t组字符串每组m条询问--求问每条询问区间内有多少不同的子串. 题解:把每个询问区间的字符串hash一下存图 ...