精通python网络爬虫之自动爬取网页的爬虫 代码记录

items的编写

# -*- coding: utf-8 -*- # Define here the models for your scraped items

#

# See documentation in:

# https://doc.scrapy.org/en/latest/topics/items.html import scrapy class AutopjtItem(scrapy.Item):

# define the fields for your item here like:

# 用来存储商品名

name = scrapy.Field()

#用来存储商品价格

price = scrapy.Field()

# 用来存储商品链接

link = scrapy.Field()

# 用来存储商品评论数

comnum = scrapy.Field()

# 用来存储商品评论内容链接

comnum_link = scrapy.Field()

piplines的编写

# -*- coding: utf-8 -*-

import codecs

import json

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html class AutopjtPipeline(object):

def __init__(self):

self.file = codecs.open("D:/git/learn_scray/day11/1.json", "wb", encoding="utf-8") def process_item(self, item, spider):

# 爬取当前页的所有信息

for i in range(len(item["name"])):

name = item["name"][i]

price = item["price"][i]

link = item["link"][i]

comnum = item["comnum"][i]

comnum_link = item["comnum_link"][i]

current_conent = {"name":name,"price":price,"link":link,

"comnum":comnum,"comnum_link":comnum_link}

j = json.dumps(dict(current_conent),ensure_ascii=False)

# 为每条数据添加换行

line = j + '\n'

print(line)

self.file.write(line)

# for key,value in current_conent.items():

# print(key,value)

return item def close_spider(self,spider):

self.file.close()

自动爬虫编写实战

# -*- coding: utf-8 -*-

import scrapy

from autopjt.items import AutopjtItem

from scrapy.http import Request class AutospdSpider(scrapy.Spider):

name = 'autospd'

allowed_domains = ['dangdang.com']

# 当当地方特产

start_urls = ['http://category.dangdang.com/pg1-cid10010056.html'] def parse(self, response):

item = AutopjtItem()

print("进入item")

# print("获取标题:")

# 获取标题

item["name"] = response.xpath("//p[@class='name']/a/@title").extract()

# print(title) # print("获取价格:")

# 价格

item["price"] = response.xpath("//span[@class='price_n']/text()").extract()

# print(price) # print("获取商品链接:")

# 获取商品链接

item["link"] = response.xpath("//p[@class='name']/a/@href").extract()

# print(link) # print("\n")

# print("获取商品评论数:")

# 获取商品评论数

item["comnum"] = response.xpath("//a[@name='itemlist-review']/text()").extract()

# comnum = response.xpath("//a[@name='itemlist-review']/text()").extract()

# print(comnum) # print("获取商品评论数链接:")

# 获取商品评论数链接

item["comnum_link"] = response.xpath("//a[@name='itemlist-review']/@href").extract()

# comnum_link = response.xpath("//a[@name='itemlist-review']/@href").extract()

# print(comnum_link)

yield item

for i in range(1,79):

# print(i)

url = "http://category.dangdang.com/pg"+ str(i) + "-cid10010056.html"

# print(url)

yield Request(url, callback=self.parse)

yield详解:

https://stackoverflow.com/questions/231767/what-does-the-yield-keyword-do

settings的设置:

# -*- coding: utf-8 -*- # Scrapy settings for autopjt project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://doc.scrapy.org/en/latest/topics/settings.html

# https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

# https://doc.scrapy.org/en/latest/topics/spider-middleware.html BOT_NAME = 'autopjt' SPIDER_MODULES = ['autopjt.spiders']

NEWSPIDER_MODULE = 'autopjt.spiders' # Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'autopjt (+http://www.yourdomain.com)' # Obey robots.txt rules

# 默认为true遵守robots.txt协议 我试了一下能爬 为了保险设置为false

ROBOTSTXT_OBEY = True # Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32 # Configure a delay for requests for the same website (default: 0)

# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16 # Disable cookies (enabled by default)

COOKIES_ENABLED = False # Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False # Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#} # Enable or disable spider middlewares

# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'autopjt.middlewares.AutopjtSpiderMiddleware': 543,

#} # Enable or disable downloader middlewares

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'autopjt.middlewares.AutopjtDownloaderMiddleware': 543,

#} # Enable or disable extensions

# See https://doc.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#} # Configure item pipelines

# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'autopjt.pipelines.AutopjtPipeline': 300,

} # Enable and configure the AutoThrottle extension (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False # Enable and configure HTTP caching (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

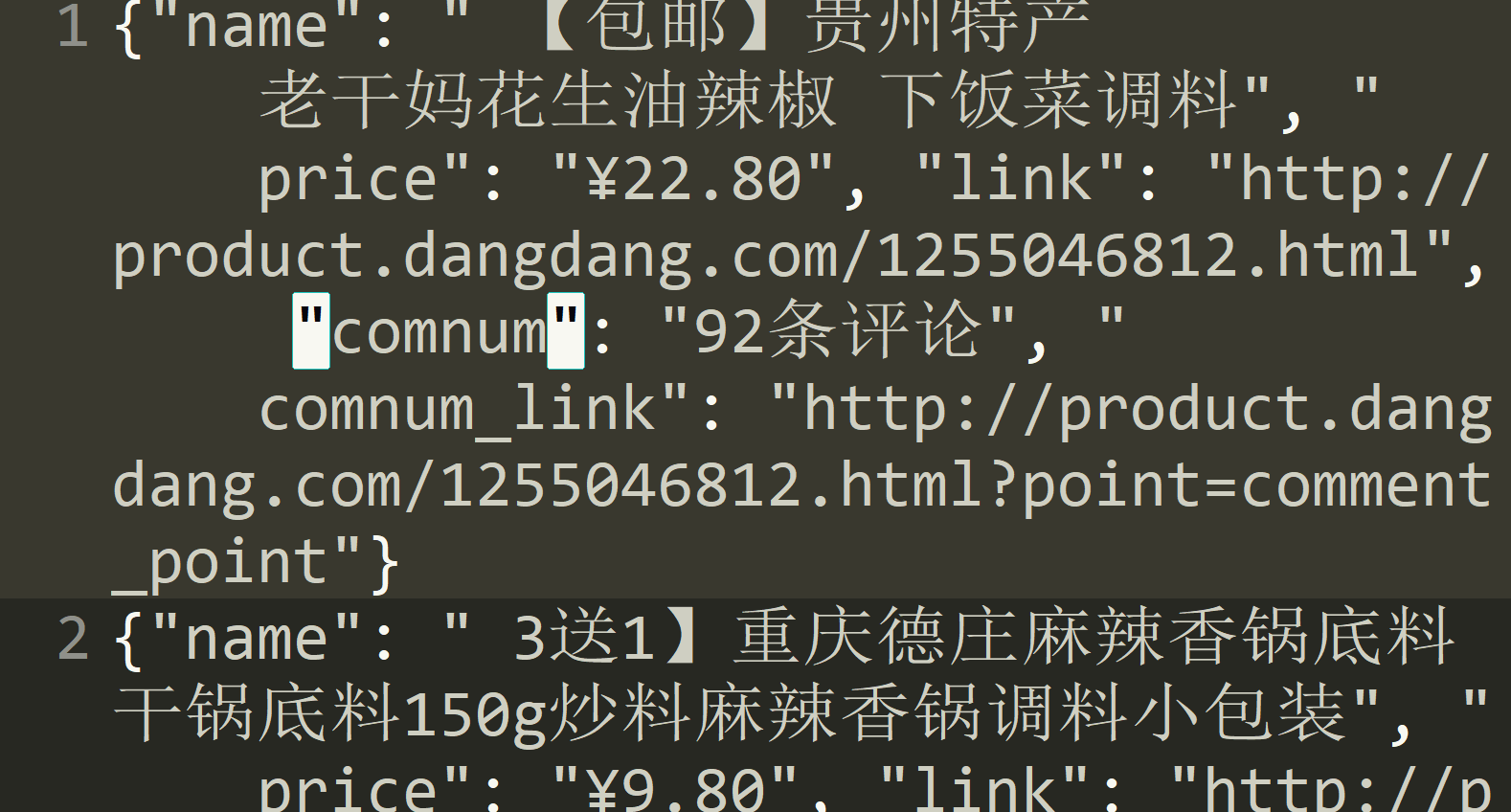

最后的效果:

精通python网络爬虫之自动爬取网页的爬虫 代码记录的更多相关文章

- python爬取网页的通用代码框架

python爬取网页的通用代码框架: def getHTMLText(url):#参数code缺省值为‘utf-8’(编码方式) try: r=requests.get(url,timeout=30) ...

- python(27)requests 爬取网页乱码,解决方法

最近遇到爬取网页乱码的情况,找了好久找到了种解决的办法: html = requests.get(url,headers = head) html.apparent_encoding html.enc ...

- 【Python网络爬虫三】 爬取网页新闻

学弟又一个自然语言处理的项目,需要在网上爬一些文章,然后进行分词,刚好牛客这周的是从一个html中找到正文,就实践了一下.写了一个爬门户网站新闻的程序 需求: 从门户网站爬取新闻,将新闻标题,作者,时 ...

- 爬虫-----selenium模块自动爬取网页资源

selenium介绍与使用 1 selenium介绍 什么是selenium?selenium是Python的一个第三方库,对外提供的接口可以操作浏览器,然后让浏览器完成自动化的操作. sel ...

- python爬虫学习(7) —— 爬取你的AC代码

上一篇文章中,我们介绍了python爬虫利器--requests,并且拿HDU做了小测试. 这篇文章,我们来爬取一下自己AC的代码. 1 确定ac代码对应的页面 如下图所示,我们一般情况可以通过该顺序 ...

- [原创]python爬虫之BeautifulSoup,爬取网页上所有图片标题并存储到本地文件

from bs4 import BeautifulSoup import requests import re import os r = requests.get("https://re. ...

- 爬虫系列----scrapy爬取网页初始

一 基本流程 创建工程,工程名称为(cmd):firstblood: scrapy startproject firstblood 进入工程目录中(cmd):cd :./firstblood 创建爬虫 ...

- Python学习--两种方法爬取网页图片(requests/urllib)

实际上,简单的图片爬虫就三个步骤: 获取网页代码 使用正则表达式,寻找图片链接 下载图片链接资源到电脑 下面以博客园为例子,不同的网站可能需要更改正则表达式形式. requests版本: import ...

- 《精通python网络爬虫》笔记

<精通python网络爬虫>韦玮 著 目录结构 第一章 什么是网络爬虫 第二章 爬虫技能概览 第三章 爬虫实现原理与实现技术 第四章 Urllib库与URLError异常处理 第五章 正则 ...

随机推荐

- Bootstrap CSS概览

HTML5文档类型(<!DOCTYPE html>) Bootstrap前端框架使用了HTML5和CSS属性,为了让这些能正常工作,您需要使用HTML5文档类型(<!DOCTYPE ...

- ios之UIPickView

以下为控制器代码,主要用到的是UIPickerView 主要步骤:新建一个Single View Application 然后,如上图所示,拖进去一个UILabel Title设置为导航,再拖进去一个 ...

- http常用状态吗以及分别代表什么意思?

http常用状态码: 状态码 定义 说明 1xx 信息 街道请求继续处理 2xx 成功 成功的收到.理解.接受 3xx 重定向 浏览器需要执行某些特殊处理一完成请求 4xx 客户端错误 请求的语法有问 ...

- 使用Spring AOP实现业务依赖解耦

Spring IOC用于解决对象依赖之间的解耦,而Spring AOP则用于解决业务依赖之间的解耦: 统一在一个地方定义[通用功能],通过声明的方式定义这些通用的功能以何种[方式][织入]到某些[特定 ...

- (14)zabbix Simple checks基本检测

1. 开始 Simple checks通常用来检查远程未安装代理或者客户端的服务 使用simple checks,被监控客户端无需安装zabbix agent客户端,zabbix server直接使用 ...

- 七:MYSQL之常用操作符

前言: 运算符连接表达式中各个操作数,其作用是用来指明对操作数所进行的运算. 常见的运算有数学计算.比较运算.位运算及逻辑运算 一:算数运算符 用于各类数值运算.包括加(+).减(-).乘(*).除( ...

- Luogu 2627 修建草坪 (动态规划Dp + 单调队列优化)

题意: 已知一个序列 { a [ i ] } ,求取出从中若干不大于 KK 的区间,求这些区间和的最大值. 细节: 没有细节???感觉没有??? 分析: 听说有两种方法!!! 好吧实际上是等价的只是看 ...

- LeetCode答案(python)

1. 两数之和 给定一个整数数组 nums 和一个目标值 target,请你在该数组中找出和为目标值的那 两个 整数,并返回他们的数组下标. 你可以假设每种输入只会对应一个答案.但是,你不能重复利用这 ...

- js中的事件委托或事件代理

一,概述 JavaScript高级程序设计上讲:事件委托就是利用事件冒泡,只指定一个事件处理程序,就可以管理某一类型的所有事件. 举一个网上大牛们讲事件委托都会举的例子:就是取快递来解释,有三个同事预 ...

- 【Python】SyntaxError: Non-ASCII character '\xe8' in file

遇到的第一个问题: SyntaxError: Non-ASCII character '\xe8' in file D:/PyCharmProject/TempConvert.py on line 2 ...