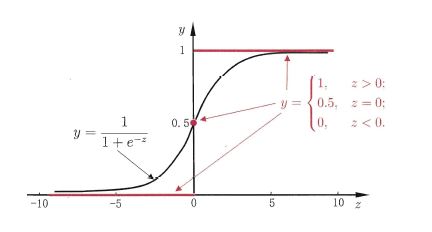

ANN神经网络——Sigmoid 激活函数编程练习 (Python实现)

# ----------

#

# There are two functions to finish:

# First, in activate(), write the sigmoid activation function.

# Second, in update(), write the gradient descent update rule. Updates should be

# performed online, revising the weights after each data point.

#

# ----------

import numpy as np

class Sigmoid:

"""

This class models an artificial neuron with sigmoid activation function.

"""

def __init__(self, weights = np.array([1])):

"""

Initialize weights based on input arguments. Note that no type-checking

is being performed here for simplicity of code.

"""

self.weights = weights

# NOTE: You do not need to worry about these two attribues for this

# programming quiz, but these will be useful for if you want to create

# a network out of these sigmoid units!

self.last_input = 0 # strength of last input

self.delta = 0 # error signal

def activate(self, values):

"""

Takes in @param values, a list of numbers equal to length of weights.

@return the output of a sigmoid unit with given inputs based on unit

weights.

"""

# YOUR CODE HERE

# First calculate the strength of the input signal.

strength = np.dot(values, self.weights)

self.last_input = strength

# TODO: Modify strength using the sigmoid activation function and

# return as output signal.

# HINT: You may want to create a helper function to compute the

# logistic function since you will need it for the update function.

result = self.logistic(strength)

return result

def logistic(self,strength):

return 1/(1+np.exp(-strength))

def update(self, values, train, eta=.1):

"""

Takes in a 2D array @param values consisting of a LIST of inputs and a

1D array @param train, consisting of a corresponding list of expected

outputs. Updates internal weights according to gradient descent using

these values and an optional learning rate, @param eta.

"""

# TODO: for each data point...

for X, y_true in zip(values, train):

# obtain the output signal for that point

y_pred = self.activate(X)

# YOUR CODE HERE

# TODO: compute derivative of logistic function at input strength

# Recall: d/dx logistic(x) = logistic(x)*(1-logistic(x))

dx = self.logistic(self.last_input)*(1 - self.logistic(self.last_input) )

print ("dx{}:".format(dx))

print ('\n')

# TODO: update self.weights based on learning rate, signal accuracy,

# function slope (derivative) and input value

delta_w = eta * (y_true - y_pred) * dx * X

print ("delta_w:{} weight before {}".format(delta_w, self.weights))

self.weights += delta_w

print ("delta_w:{} weight after {}".format(delta_w, self.weights))

print ('\n')

def test():

"""

A few tests to make sure that the perceptron class performs as expected.

Nothing should show up in the output if all the assertions pass.

"""

def sum_almost_equal(array1, array2, tol = 1e-5):

return sum(abs(array1 - array2)) < tol

u1 = Sigmoid(weights=[3,-2,1])

assert abs(u1.activate(np.array([1,2,3])) - 0.880797) < 1e-5

u1.update(np.array([[1,2,3]]),np.array([0]))

assert sum_almost_equal(u1.weights, np.array([2.990752, -2.018496, 0.972257]))

u2 = Sigmoid(weights=[0,3,-1])

u2.update(np.array([[-3,-1,2],[2,1,2]]),np.array([1,0]))

assert sum_almost_equal(u2.weights, np.array([-0.030739, 2.984961, -1.027437]))

if __name__ == "__main__":

test()

OUTPUT

Running test()...

dx0.104993585404:

delta_w:[-0.0092478 -0.01849561 -0.02774341] weight before [3, -2, 1]

delta_w:[-0.0092478 -0.01849561 -0.02774341] weight after [ 2.9907522 -2.01849561 0.97225659]

dx0.00664805667079:

delta_w:[-0.00198107 -0.00066036 0.00132071] weight before [0, 3, -1]

delta_w:[-0.00198107 -0.00066036 0.00132071] weight after [ -1.98106867e-03 2.99933964e+00 -9.98679288e-01]

dx0.196791859198:

delta_w:[-0.02875794 -0.01437897 -0.02875794] weight before [ -1.98106867e-03 2.99933964e+00 -9.98679288e-01]

delta_w:[-0.02875794 -0.01437897 -0.02875794] weight after [-0.03073901 2.98496067 -1.02743723]

All done!

ANN神经网络——Sigmoid 激活函数编程练习 (Python实现)的更多相关文章

- ANN神经网络——实现异或XOR (Python实现)

一.Introduction Perceptron can represent AND,OR,NOT 用初中的线性规划问题理解 异或的里程碑意义 想学的通透,先学历史! 据说在人工神经网络(artif ...

- 目前所有的ANN神经网络算法大全

http://blog.sina.com.cn/s/blog_98238f850102w7ik.html 目前所有的ANN神经网络算法大全 (2016-01-20 10:34:17) 转载▼ 标签: ...

- 【python实现卷积神经网络】激活层实现

代码来源:https://github.com/eriklindernoren/ML-From-Scratch 卷积神经网络中卷积层Conv2D(带stride.padding)的具体实现:https ...

- BP神经网络求解异或问题(Python实现)

反向传播算法(Back Propagation)分二步进行,即正向传播和反向传播.这两个过程简述如下: 1.正向传播 输入的样本从输入层经过隐单元一层一层进行处理,传向输出层:在逐层处理的过程中.在输 ...

- OpenCV——ANN神经网络

ANN-- Artificial Neural Networks 人工神经网络 //定义人工神经网络 CvANN_MLP bp; // Set up BPNetwork's parameters Cv ...

- 神经网络BP算法C和python代码

上面只显示代码. 详BP原理和神经网络的相关知识,请参阅:神经网络和反向传播算法推导 首先是前向传播的计算: 输入: 首先为正整数 n.m.p.t,分别代表特征个数.训练样本个数.隐藏层神经元个数.输 ...

- 神经网络的训练和测试 python

承接上一节,神经网络需要训练,那么训练集来自哪?测试的数据又来自哪? <python神经网络编程>一书给出了训练集,识别图片中的数字.测试集的链接如下: https://raw.githu ...

- 吴裕雄--天生自然神经网络与深度学习实战Python+Keras+TensorFlow:TensorFlow与神经网络的实现

import tensorflow as tf import numpy as np ''' 初始化运算图,它包含了上节提到的各个运算单元,它将为W,x,b,h构造运算部件,并将它们连接 起来 ''' ...

- 吴裕雄--天生自然神经网络与深度学习实战Python+Keras+TensorFlow:使用TensorFlow和Keras开发高级自然语言处理系统——LSTM网络原理以及使用LSTM实现人机问答系统

!mkdir '/content/gdrive/My Drive/conversation' ''' 将文本句子分解成单词,并构建词库 ''' path = '/content/gdrive/My D ...

随机推荐

- abp部署端口和域名映射配置

前引 apb部署 后端服务9900端口,域名访问地址是:http://nihao-api.hellow.com: 前端4200端口,域名访问地址是:http://nihao.hellow.com: 前 ...

- 洛谷 P3285 / loj 2212 [SCOI2014] 方伯伯的 OJ 题解【平衡树】【线段树】

平衡树分裂钛好玩辣! 题目描述 方伯伯正在做他的 OJ.现在他在处理 OJ 上的用户排名问题. OJ 上注册了 \(n\) 个用户,编号为 \(1\sim n\),一开始他们按照编号排名.方伯伯会按照 ...

- PHP 数字金额转换成中文大写金额的函数 数字转中文

/** *数字金额转换成中文大写金额的函数 *String Int $num 要转换的小写数字或小写字符串 *return 大写字母 *小数位为两位 **/ function num_to_rmb($ ...

- scrapy抓取中文后乱码解决方法

出现这种东西不是乱码,是unicode,只是人看不懂,例如: \u96a8\u6642\u66f4\u65b0> \u25a0\u25a0\u25a 我们把他解码成中文码即可,在settings ...

- Windows与linux添加用户命令

Windows 查看当前存在用户: net user 查看当前用户组: net localgroup 添加用户(以添加用户test密码test1234为例): net user test test12 ...

- 转 oracle ASM中ASM_POWER_LIMIT参数

https://daizj.iteye.com/blog/1753434 ASM_POWER_LIMIT 该初始化参数用于指定ASM例程平衡磁盘所用的最大权值,其数值范围为0~11,默认值为1.该初始 ...

- 查找Ubuntu下包的归属

今天在制作docker时,发现我的程序有些依赖的包不太好找应该安装什么. 在centos下面,可以用命令: rpm -qf <libraryname> 在Ubuntu下面,发现一个网站基本 ...

- numpy多维矩阵,取出第一行或者第一列,方法和df一样

# 定义一个多维矩阵 arr = np.array([[1,2,3], [4,5,6], [7,8,9]]) # 取出第一行 arr[0,:] # 取出第一列 arr[:,0]

- 《大数据日知录》读书笔记-ch3大数据常用的算法与数据结构

布隆过滤器(bloom filter,BF): 二进制向量数据结构,时空效率很好,尤其是空间效率极高.作用:检测某个元素在某个巨量集合中存在. 构造: 查询: 不会发生漏判(false negativ ...

- (转)Apache和Nginx运行原理解析

Apache和Nginx运行原理解析 原文:https://www.server110.com/nginx/201402/6543.html Web服务器 Web服务器也称为WWW(WORLD WID ...