吴裕雄 python 神经网络——TensorFlow训练神经网络:不使用隐藏层

import tensorflow as tf

from tensorflow.examples.tutorials.mnist import input_data INPUT_NODE = 784 # 输入节点

OUTPUT_NODE = 10 # 输出节点

BATCH_SIZE = 100 # 每次batch打包的样本个数 # 模型相关的参数

LEARNING_RATE_BASE = 0.01

LEARNING_RATE_DECAY = 0.99

REGULARAZTION_RATE = 0.0001

TRAINING_STEPS = 5000

MOVING_AVERAGE_DECAY = 0.99 def inference(input_tensor, avg_class, weights1, biases1):

# 不使用滑动平均类

if avg_class == None:

layer1 = tf.nn.relu(tf.matmul(input_tensor, weights1) + biases1)

return layer1

else:

# 使用滑动平均类

layer1 = tf.nn.relu(tf.matmul(input_tensor, avg_class.average(weights1)) + avg_class.average(biases1))

return layer1 def train(mnist):

x = tf.placeholder(tf.float32, [None, INPUT_NODE], name='x-input')

y_ = tf.placeholder(tf.float32, [None, OUTPUT_NODE], name='y-input')

# 生成输出层的参数。

weights1 = tf.Variable(tf.truncated_normal([INPUT_NODE, OUTPUT_NODE], stddev=0.1))

biases1 = tf.Variable(tf.constant(0.1, shape=[OUTPUT_NODE])) # 计算不含滑动平均类的前向传播结果

y = inference(x, None, weights1, biases1) # 定义训练轮数及相关的滑动平均类

global_step = tf.Variable(0, trainable=False)

variable_averages = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)

variables_averages_op = variable_averages.apply(tf.trainable_variables())

average_y = inference(x, variable_averages, weights1, biases1) # 计算交叉熵及其平均值

cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1))

cross_entropy_mean = tf.reduce_mean(cross_entropy) # 损失函数的计算

regularizer = tf.contrib.layers.l2_regularizer(REGULARAZTION_RATE)

regularaztion = regularizer(weights1)

loss = cross_entropy_mean + regularaztion # 设置指数衰减的学习率。

learning_rate = tf.train.exponential_decay(

LEARNING_RATE_BASE,

global_step,

mnist.train.num_examples / BATCH_SIZE,

LEARNING_RATE_DECAY,

staircase=True) # 优化损失函数

train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step=global_step) # 反向传播更新参数和更新每一个参数的滑动平均值

with tf.control_dependencies([train_step, variables_averages_op]):

train_op = tf.no_op(name='train') # 计算正确率

correct_prediction = tf.equal(tf.argmax(average_y, 1), tf.argmax(y_, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32)) # 初始化会话,并开始训练过程。

with tf.Session() as sess:

tf.global_variables_initializer().run()

validate_feed = {x: mnist.validation.images, y_: mnist.validation.labels}

test_feed = {x: mnist.test.images, y_: mnist.test.labels} # 循环的训练神经网络。

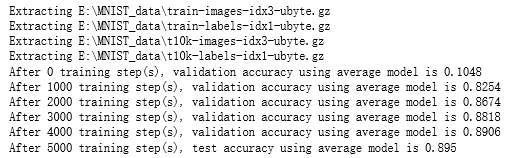

for i in range(TRAINING_STEPS):

if i % 1000 == 0:

validate_acc = sess.run(accuracy, feed_dict=validate_feed)

print("After %d training step(s), validation accuracy using average model is %g " % (i, validate_acc))

xs,ys=mnist.train.next_batch(BATCH_SIZE)

sess.run(train_op,feed_dict={x:xs,y_:ys})

test_acc=sess.run(accuracy,feed_dict=test_feed)

print(("After %d training step(s), test accuracy using average model is %g" %(TRAINING_STEPS, test_acc))) def main(argv=None):

mnist = input_data.read_data_sets("E:\\MNIST_data\\", one_hot=True)

train(mnist) if __name__=='__main__':

main()

吴裕雄 python 神经网络——TensorFlow训练神经网络:不使用隐藏层的更多相关文章

- 吴裕雄 python 神经网络——TensorFlow训练神经网络:不使用滑动平均

import tensorflow as tf from tensorflow.examples.tutorials.mnist import input_data INPUT_NODE = 784 ...

- 吴裕雄 python 神经网络——TensorFlow训练神经网络:不使用激活函数

import tensorflow as tf from tensorflow.examples.tutorials.mnist import input_data INPUT_NODE = 784 ...

- 吴裕雄 python 神经网络——TensorFlow训练神经网络:不使用指数衰减的学习率

import tensorflow as tf from tensorflow.examples.tutorials.mnist import input_data INPUT_NODE = 784 ...

- 吴裕雄 python 神经网络——TensorFlow训练神经网络:不使用正则化

import tensorflow as tf from tensorflow.examples.tutorials.mnist import input_data INPUT_NODE = 784 ...

- 吴裕雄 python 神经网络——TensorFlow训练神经网络:全模型

import tensorflow as tf from tensorflow.examples.tutorials.mnist import input_data INPUT_NODE = 784 ...

- 吴裕雄 python 神经网络——TensorFlow训练神经网络:花瓣识别

import os import glob import os.path import numpy as np import tensorflow as tf from tensorflow.pyth ...

- 吴裕雄 python 神经网络——TensorFlow训练神经网络:MNIST最佳实践

import os import tensorflow as tf from tensorflow.examples.tutorials.mnist import input_data INPUT_N ...

- 吴裕雄 python 神经网络——TensorFlow训练神经网络:卷积层、池化层样例

import numpy as np import tensorflow as tf M = np.array([ [[1],[-1],[0]], [[-1],[2],[1]], [[0],[2],[ ...

- 吴裕雄--天生自然 Tensorflow卷积神经网络:花朵图片识别

import os import numpy as np import matplotlib.pyplot as plt from PIL import Image, ImageChops from ...

随机推荐

- calloc函数的使用和对内存free的认识

#include<stdlib.h> void *calloc(size_t n, size_t size): free(); 目前的理解: n是多少个这样的size,这样的使用类似有f ...

- python文件读取:遇见的错误及解决办法

问题一: TypeError: 'str' object is not callable 产生原因: 该错误TypeError: 'str' object is not callable字面上意思:就 ...

- nmonchart 分析.nmon监控数据成html展示

下载地址:http://nmon.sourceforge.net/pmwiki.php?n=Site.Nmonchart chart安装包:http://sourceforge.net/project ...

- axios 请求中的Form Data 与 Request Payload的区别

在vue项目中使用axios发post请求时候,后台返回500. 发现是form Data 和 Request payload的问题. 后台对两者的处理方式不同,导致我们接收不到数据. 解决方案:使用 ...

- c/c++学习01

c++指针初始赋值: //指针初始赋值 int* a = new int(3); //第二种赋值 int 初始值 = 100; int *b = &初始值; //由new分配的内存块通常使用过 ...

- m大子段和 hdu1024

给出n个数,m个区间: 求选区m个区间的最大值: #include<cstdio> #include<algorithm> #include<math.h> #in ...

- java项目上有个红色感叹号(在project Explorer视图下)

启动项目时一直报错,检查也没问题,最后看到项目上有个红色感叹号,发现是jar包路径不对,把错误路径的jar包移除,然后再重新添加即可.

- docker 报错 docker: Error response from daemon: driver failed....iptables failed:

现象: [root@localhost test]# docker run --name postgres1 -e POSTGRES_PASSWORD=password -p : -d postgre ...

- Knapsack Cryptosystem 牛客团队赛

时限2s题意: 第一行包含两个整数,分别是n(1 <= n <= 36)和s(0 <= s <9 * 10 18) 第二行包含n个整数,它们是{a i }(0 <a i ...

- Go非缓冲/缓冲/双向/单向通道

1. 非缓冲和缓冲 package main import ( "fmt" "strconv" ) func main() { /* 非缓冲通道:make(ch ...