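Implementing HPA on a k8s Cluster Based on GPU Metrics

Preface

Kubernetes scales containers with the HPA (Horizontal Pod Autoscaler), which supports CPU and memory metrics out of the box. The native HPA (originally backed by Heapster) cannot scale on GPU metrics, but it can be extended with custom metrics. We can deploy the Prometheus Adapter as a CustomMetricServer. It registers Prometheus metrics with the API server through the custom.metrics.k8s.io API, where the HPA can query them. Once the HPA is configured to use these custom metrics, it can scale elastically on GPU metrics.

Alibaba Cloud Container Service Kubernetes Monitoring - GPU Monitoring

- Prepare the GPU servers in the k8s cluster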

# kubectl get node
NAME                    STATUS   ROLES    AGE    VERSION
master-11               Ready    master   466d   v1.18.20
master-12               Ready    master   466d   v1.18.20
master-13               Ready    master   466d   v1.18.20
slave-gpu-103           Ready    <none>   159d   v1.18.20
slave-gpu-105           Ready    <none>   160d   v1.18.20
slave-gpu-109           Ready    <none>   160d   v1.18.20
slave-rtx3080-gpu-111   Ready    <none>   6d3h   v1.18.20

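- Label each GPU server and add a taint

#Repeat both commands for every GPU node
kubectl label node slave-gpu-103 aliyun.accelerator/nvidia_name=yes
#The taint key must match the tolerations in the manifests below (key: server_type)
kubectl taint node slave-gpu-103 server_type=moviebook:NoSchedule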
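Labeling and tainting GPU servers one at a time is easy to get wrong across several nodes. The bash sketch below applies the same label and taint to every node name passed as an argument. It assumes the taint key server_type, which is the key tolerated by the DaemonSet and Deployment manifests in this article. The KUBECTL variable is a hypothetical addition that lets you print the commands instead of running them.

```bash
#!/usr/bin/env bash
# Label and taint every GPU node in one pass (sketch).
# Assumption: taint key server_type, matching the tolerations used in this article.
# KUBECTL is a hypothetical override: set KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

prepare_gpu_nodes() {
  local node
  for node in "$@"; do
    # --overwrite keeps the label step idempotent when the loop is re-run
    "$KUBECTL" label node "$node" aliyun.accelerator/nvidia_name=yes --overwrite
    # --overwrite replaces an existing taint with the same key instead of failing
    "$KUBECTL" taint node "$node" server_type=moviebook:NoSchedule --overwrite
  done
}
```

Usage: `prepare_gpu_nodes slave-gpu-103 slave-gpu-105 slave-gpu-109 slave-rtx3080-gpu-111`. Prefix the call with `KUBECTL=echo` to check the generated commands before touching the cluster.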

- Deploy the Prometheus GPU exporter, using hostNetwork

# cat gpu-exporter.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  namespace: monitoring
  name: ack-prometheus-gpu-exporter
spec:
  selector:
    matchLabels:
      k8s-app: ack-prometheus-gpu-exporter
  template:
    metadata:
      labels:
        k8s-app: ack-prometheus-gpu-exporter
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: aliyun.accelerator/nvidia_name
                operator: Exists
      hostNetwork: true
      hostPID: true
      containers:
      - name: node-gpu-exporter
        image: registry.cn-hangzhou.aliyuncs.com/acs/gpu-prometheus-exporter:0.1-5cc5f27
        imagePullPolicy: Always
        ports:
        - name: http-metrics
          containerPort: 9445
        env:
        - name: MY_NODE_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: spec.nodeName
        resources:
          requests:
            memory: 50Mi
            cpu: 200m
          limits:
            memory: 100Mi
            cpu: 300m
        volumeMounts:
        - mountPath: /var/run/docker.sock
          name: docker-sock
      volumes:
      - hostPath:
          path: /var/run/docker.sock
          type: File
        name: docker-sock
      tolerations:
      - effect: NoSchedule
        key: server_type
        operator: Exists
---
apiVersion: v1
kind: Service
metadata:
  name: node-gpu-exporter
  namespace: monitoring
  labels:
    k8s-app: ack-prometheus-gpu-exporter
spec:
  type: ClusterIP
  ports:
  - name: http-metrics
    port: 9445
    protocol: TCP
  selector:
    k8s-app: ack-prometheus-gpu-exporter
---
# ServiceMonitor needs the Prometheus Operator CRDs. This article scrapes the
# exporter through static_configs instead, so this object is optional.
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: ack-prometheus-gpu-exporter
  labels:
    release: ack-prometheus-operator
    app: ack-prometheus-gpu-exporter
  namespace: monitoring
spec:
  selector:
    matchLabels:
      k8s-app: ack-prometheus-gpu-exporter
  namespaceSelector:
    matchNames:
    - monitoring
  endpoints:
  - port: http-metrics
    interval: 30s

#Create the GPU exporter
kubectl apply -f gpu-exporter.yaml
#Check the Pod status
# kubectl get pod -n monitoring -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
ack-prometheus-gpu-exporter-c2kdj 1/1 Running 0 21h 10.147.100.111 slave-rtx3080-gpu-111 <none> <none>
ack-prometheus-gpu-exporter-g98zv 1/1 Running 0 21h 10.147.100.105 slave-gpu-105 <none> <none>
ack-prometheus-gpu-exporter-jn7rj 1/1 Running 0 21h 10.147.100.103 slave-gpu-103 <none> <none>
ack-prometheus-gpu-exporter-tt7cg 1/1 Running 0 21h 10.147.100.109 slave-gpu-109 <none> <none>

- Add the GPU server instances to the Prometheus scrape configuration

# kubectl edit cm -n prometheus prometheus-conf
- job_name: 'GPU服务监控'   # "GPU service monitoring"
  static_configs:
  #- targets: ['node-gpu-exporter.monitoring:9445']
  - targets:
    - 10.147.100.103:9445
    - 10.147.100.105:9445
    - 10.147.100.111:9445
    - 10.147.100.109:9445
#Restart Prometheus so the configuration takes effect
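The target list above has one entry per GPU node. Because the exporter runs with hostNetwork: true, each exporter Pod listens on its node's IP. The commented-out Service target would be load-balanced, so each scrape would reach only one node's exporter. The bash sketch below generates the entry from a list of IPs so it stays in sync as GPU nodes are added. The PORT override is a hypothetical addition; 9445 comes from the DaemonSet.

```bash
#!/usr/bin/env bash
# Print the static_configs block for the GPU exporter job (sketch).
# With hostNetwork the exporter Pod IP equals the node IP.
# PORT is a hypothetical override; 9445 is the containerPort in the DaemonSet.
gpu_scrape_targets() {
  local port="${PORT:-9445}" ip
  printf '  static_configs:\n'
  printf '  - targets:\n'
  for ip in "$@"; do
    printf '    - %s:%s\n' "$ip" "$port"
  done
}
```

Usage, assuming kubectl access to the cluster: `gpu_scrape_targets $(kubectl get pod -n monitoring -l k8s-app=ack-prometheus-gpu-exporter -o jsonpath='{.items[*].status.podIP}')`.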

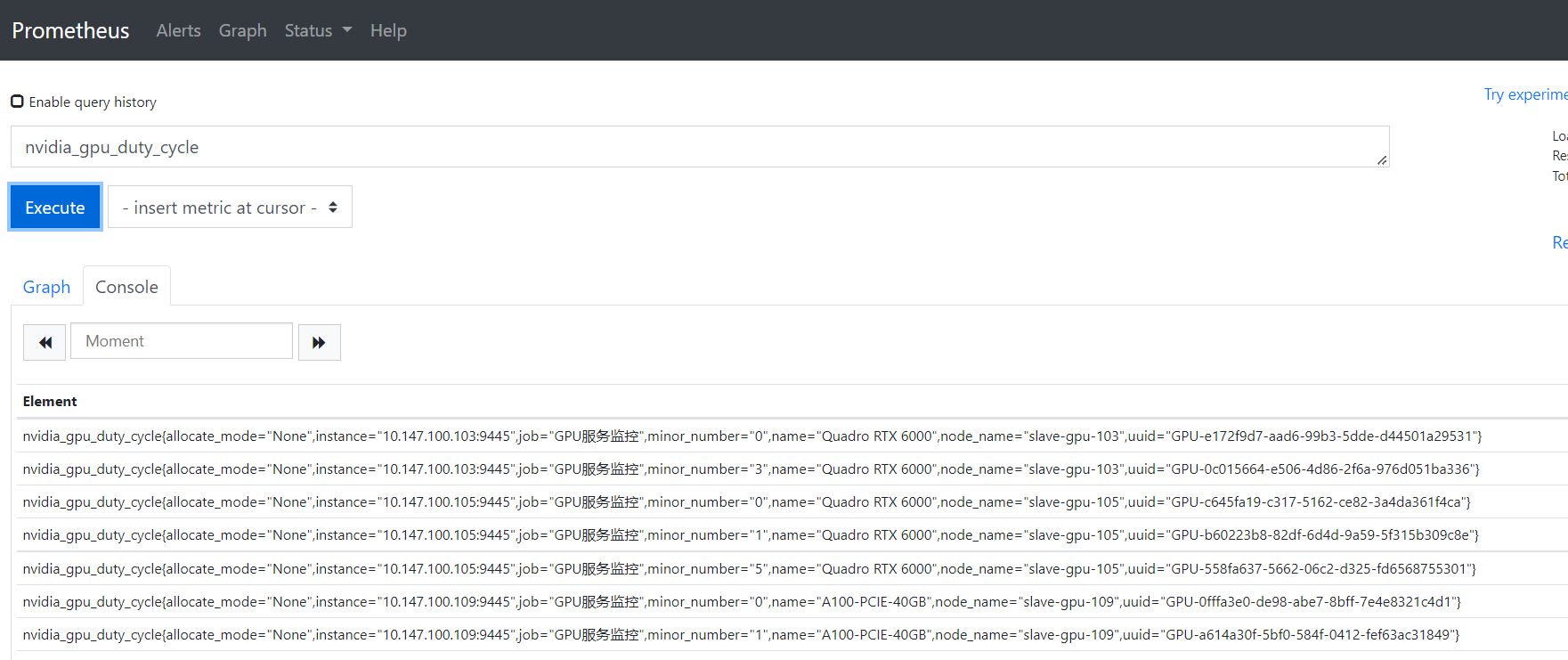

#In Prometheus, look up the GPU metrics, for example nvidia_gpu_duty_cycle
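If a metric is missing in Prometheus, it can help to read it directly from one exporter. The exporter serves the Prometheus text exposition format. This bash sketch prints the value of a named metric from that text. The value is taken as the last field because label values such as GPU model names can contain spaces. It assumes the exporter does not append timestamps, and that it serves the usual /metrics path, which this article does not confirm.

```bash
#!/usr/bin/env bash
# Print the value(s) of one metric from Prometheus text exposition on stdin (sketch).
# Matches lines that start with "<name>{" or "<name> ", so "# HELP"/"# TYPE"
# lines and longer names such as <name>_total are skipped.
# Assumes no trailing timestamp, so the value is the last field.
metric_values() {
  awk -v m="$1" 'index($0, m "{") == 1 || index($0, m " ") == 1 { print $NF }'
}
```

Usage, assuming node 10.147.100.103 is reachable and the path is /metrics: `curl -s http://10.147.100.103:9445/metrics | metric_values nvidia_gpu_duty_cycle`.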

Deploying the CustomMetricServer

- Prepare the certificates for the Prometheus Adapter

#Prepare the certificates (needs openssl, cfssl and cfssljson). Run these lines as a script:
#set -e in an interactive shell closes the shell on the first failing command.
mkdir -p /opt/gpu/
cd /opt/gpu/
set -e
set -o pipefail
set -u
b64_opts='--wrap=0'
export PURPOSE=metrics
openssl req -x509 -sha256 -new -nodes -days 365 -newkey rsa:2048 -keyout ${PURPOSE}-ca.key -out ${PURPOSE}-ca.crt -subj "/CN=ca"
echo '{"signing":{"default":{"expiry":"43800h","usages":["signing","key encipherment","'${PURPOSE}'"]}}}' > "${PURPOSE}-ca-config.json"
export SERVICE_NAME=custom-metrics-apiserver
#The adapter's Service is created in kube-system, so the certificate SANs use that namespace
export ALT_NAMES='"custom-metrics-apiserver.kube-system","custom-metrics-apiserver.kube-system.svc"'
echo "{\"CN\":\"${SERVICE_NAME}\", \"hosts\": [${ALT_NAMES}], \"key\": {\"algo\": \"rsa\",\"size\": 2048}}" | \
  cfssl gencert -ca=metrics-ca.crt -ca-key=metrics-ca.key -config=metrics-ca-config.json - | cfssljson -bare apiserver
cat <<-EOF > cm-adapter-serving-certs.yaml
apiVersion: v1
kind: Secret
metadata:
  name: cm-adapter-serving-certs
data:
  serving.crt: $(base64 ${b64_opts} < apiserver.pem)
  serving.key: $(base64 ${b64_opts} < apiserver-key.pem)
EOF

#Create the Secret
kubectl -n kube-system apply -f cm-adapter-serving-certs.yaml
#Check the Secret
# kubectl get secrets -n kube-system |grep cm-adapter-serving-certs
cm-adapter-serving-certs Opaque 2 49s
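Values under a Secret's data field must be base64 on a single line. That is why the script sets b64_opts='--wrap=0' (a GNU coreutils base64 option); a wrapped value would break the generated YAML. This bash sketch checks, for a local file, that the single-line encoding decodes back to the original bytes. The function name is illustrative.

```bash
#!/usr/bin/env bash
# Check that a file survives single-line base64 encoding (sketch; GNU base64).
b64_roundtrip_ok() {
  local file="$1" encoded
  encoded="$(base64 --wrap=0 < "$file")" || return 1
  case "$encoded" in
    *$'\n'*) return 1 ;;               # a wrapped value would break the Secret YAML
  esac
  # decode and compare byte for byte with the original file
  printf '%s' "$encoded" | base64 --decode | cmp -s - "$file"
}
```

Usage: `b64_roundtrip_ok apiserver.pem && b64_roundtrip_ok apiserver-key.pem && echo ok`. To compare against what the cluster stored: `kubectl -n kube-system get secret cm-adapter-serving-certs -o jsonpath='{.data.serving\.crt}' | base64 --decode | cmp - apiserver.pem`.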

- Deploy the Prometheus custom metrics adapter

# cat custom-metrics-apiserver.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: kube-system
  name: custom-metrics-apiserver
  labels:
    app: custom-metrics-apiserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: custom-metrics-apiserver
  template:
    metadata:
      labels:
        app: custom-metrics-apiserver
      name: custom-metrics-apiserver
    spec:
      serviceAccountName: custom-metrics-apiserver
      containers:
      - name: custom-metrics-apiserver
        #image: registry.cn-beijing.aliyuncs.com/test-hub/k8s-prometheus-adapter-amd64
        image: quay.io/coreos/k8s-prometheus-adapter-amd64:v0.5.0
        args:
        - --secure-port=6443
        - --tls-cert-file=/var/run/serving-cert/serving.crt
        - --tls-private-key-file=/var/run/serving-cert/serving.key
        - --logtostderr=true
        - --prometheus-url=http://prometheus-service.prometheus.svc.cluster.local:9090/
        - --metrics-relist-interval=1m
        - --v=10
        - --config=/etc/adapter/config.yaml
        ports:
        - containerPort: 6443
        volumeMounts:
        - mountPath: /var/run/serving-cert
          name: volume-serving-cert
          readOnly: true
        - mountPath: /etc/adapter/
          name: config
          readOnly: true
        - mountPath: /tmp
          name: tmp-vol
      volumes:
      - name: volume-serving-cert
        secret:
          secretName: cm-adapter-serving-certs
      - name: config
        configMap:
          name: adapter-config
      - name: tmp-vol
        emptyDir: {}
---
kind: ServiceAccount
apiVersion: v1
metadata:
  name: custom-metrics-apiserver
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  name: custom-metrics-apiserver
  namespace: kube-system
spec:
  ports:
  - port: 443
    targetPort: 6443
  selector:
    app: custom-metrics-apiserver
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: custom-metrics-server-resources
  namespace: kube-system
rules:
- apiGroups:
  - custom.metrics.k8s.io
  resources: ["*"]
  verbs: ["*"]
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: adapter-config
  namespace: kube-system
data:
  config.yaml: |
    rules:
    # Rule 1: expose each nvidia_gpu_* series as <name>_over_time,
    # the 3-minute average rounded up
    - seriesQuery: '{uuid!=""}'
      resources:
        overrides:
          node_name: {resource: "node"}
          pod_name: {resource: "pod"}
          namespace_name: {resource: "namespace"}
      name:
        matches: ^nvidia_gpu_(.*)$
        as: "${1}_over_time"
      metricsQuery: ceil(avg_over_time(<<.Series>>{<<.LabelMatchers>>}[3m]))
    # Rule 2: expose the same series as <name>_current, the latest raw value
    - seriesQuery: '{uuid!=""}'
      resources:
        overrides:
          node_name: {resource: "node"}
          pod_name: {resource: "pod"}
          namespace_name: {resource: "namespace"}
      name:
        matches: ^nvidia_gpu_(.*)$
        as: "${1}_current"
      metricsQuery: <<.Series>>{<<.LabelMatchers>>}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: custom-metrics-resource-reader
rules:
- apiGroups:
  - ""
  resources:
  - namespaces
  - pods
  - services
  verbs:
  - get
  - list
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: hpa-controller-custom-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: custom-metrics-server-resources
subjects:
- kind: ServiceAccount
  name: horizontal-pod-autoscaler
  namespace: kube-system

#Create the resources
kubectl apply -f custom-metrics-apiserver.yaml
#Check the Pod status
# kubectl get pod -n kube-system |grep custom-metrics-apiserver
custom-metrics-apiserver-56777c5757-b422b 1/1 Running 0 64s
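The two rules in adapter-config rename every series matching ^nvidia_gpu_(.*)$. For example, nvidia_gpu_duty_cycle becomes duty_cycle_current (latest value) and duty_cycle_over_time (3-minute average, rounded up). The bash sketch below reproduces only that renaming and the two query templates, so you can predict which names an HPA can reference. It is not the adapter's own code. The label matcher used in the tests is illustrative.

```bash
#!/usr/bin/env bash
# Predict the custom metric names produced by the adapter-config rules (sketch).
custom_metric_names() {
  # rule 2: ${1}_current ; rule 1: ${1}_over_time ; non-matching series print nothing
  printf '%s\n' "$1" | sed -nE 's/^nvidia_gpu_(.*)$/\1_current/p'
  printf '%s\n' "$1" | sed -nE 's/^nvidia_gpu_(.*)$/\1_over_time/p'
}

# Render both metricsQuery templates, substituting <<.Series>> and <<.LabelMatchers>>.
render_queries() {
  local series="$1" matchers="$2"
  printf '%s\n' "${series}{${matchers}}"
  printf '%s\n' "ceil(avg_over_time(${series}{${matchers}}[3m]))"
}
```

Series without the nvidia_gpu_ prefix produce no custom metric. This is why an HPA that references, for example, nvidia_gpu_duty_cycle by its Prometheus name would find nothing.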

- Grant RBAC roles and register the APIService

# cat custom-metrics-apiserver-rbac.yaml
apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta1.custom.metrics.k8s.io
  namespace: kube-system
spec:
  service:
    name: custom-metrics-apiserver
    namespace: kube-system
  group: custom.metrics.k8s.io
  version: v1beta1
  # Skips verifying the adapter's serving certificate; set caBundle instead to verify it
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: custom-metrics-resource-reader
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: custom-metrics-resource-reader
subjects:
- kind: ServiceAccount
  name: custom-metrics-apiserver
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: custom-metrics:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: custom-metrics-apiserver
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: custom-metrics-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: custom-metrics-apiserver
  namespace: kube-system

#Create the RBAC objects and the APIService
kubectl apply -f custom-metrics-apiserver-rbac.yaml

- Verify the deployment

#After deployment, call the custom metrics API server to confirm that the Prometheus Adapter works.
#An empty items list is fine here: the API answered, but no Pod in the default namespace reports this metric.
# kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/pods/*/temperature_celsius_current"
{"kind":"MetricValueList","apiVersion":"custom.metrics.k8s.io/v1beta1","metadata":{"selfLink":"/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/pods/%2A/temperature_celsius_current"},"items":[]}
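To see real values, query the namespace where the GPU Pods run. For a Pods-type metric, the path follows the pattern /apis/custom.metrics.k8s.io/v1beta1/namespaces/<namespace>/pods/<pod or *>/<metric>, as in the check above. The bash helper below only builds that path. The function name is illustrative, and quoting keeps the shell from expanding the "*".

```bash
#!/usr/bin/env bash
# Build the custom metrics API path for a Pods metric (sketch).
# The third argument defaults to "*" (all Pods in the namespace).
custom_metric_path() {
  local namespace="$1" metric="$2" pods="${3:-*}"
  printf '/apis/custom.metrics.k8s.io/v1beta1/namespaces/%s/pods/%s/%s\n' \
    "$namespace" "$pods" "$metric"
}
```

Usage: `kubectl get --raw "$(custom_metric_path alot-stream duty_cycle_current)"`. Run this once the test application below is running, and keep the double quotes around the command substitution.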

- Check that kube-controller-manager has the following flag enabled; enable it if it does not

#Flag
--horizontal-pod-autoscaler-use-rest-clients=true
# cat /etc/systemd/system/kube-controller-manager.service |grep horizontal-pod-autoscaler-use-rest-clients
--horizontal-pod-autoscaler-use-rest-clients=true \

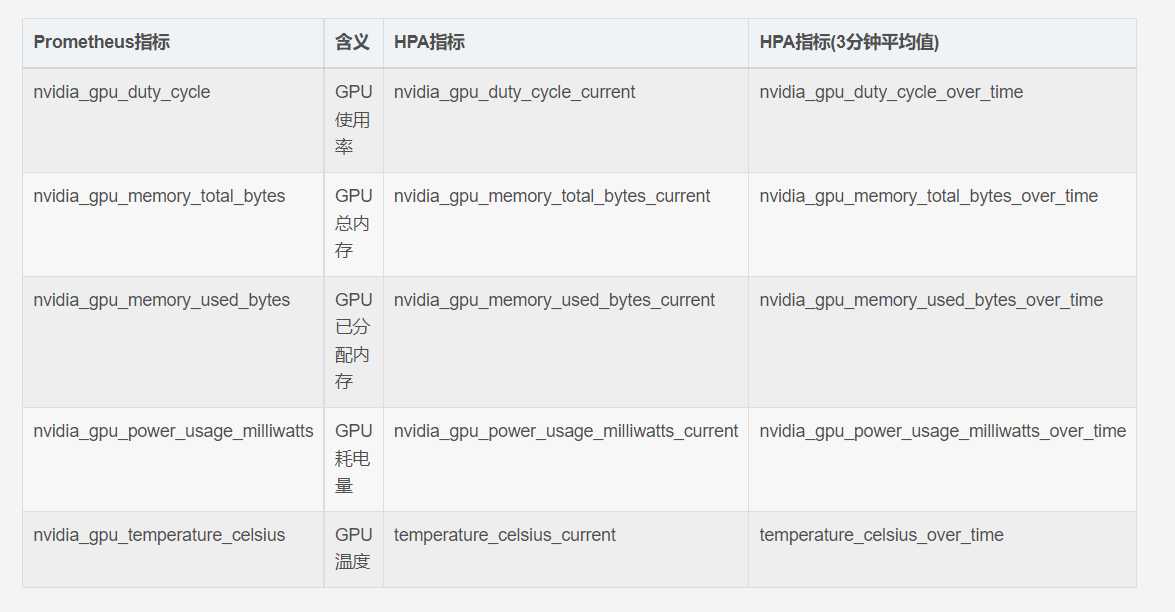

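Scaling metrics

#Available scaling metrics

| Metric name | Description | Unit |
|---|---|---|
| duty_cycle_current | GPU utilization | Percent |
| memory_used_bytes_current | GPU memory used | Bytes |

Testing elastic scaling of the GPU service

Deploying the HPA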

- Deploy a test application

# cat test-gpu-bert-container-alot.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: alot-stream-python
  name: alot-stream-python
  namespace: alot-stream
spec:
  replicas: 1
  selector:
    matchLabels:
      name: alot-stream-python
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      creationTimestamp: null
      labels:
        name: alot-stream-python
    spec:
      containers:
      - image: yz.harborxxx.com/alot/prod/000001-alot-ue4-runtime/python_test:20221114142453
      #- image: registry.cn-hangzhou.aliyuncs.com/ai-samples/bert-intent-detection:1.0.1
        imagePullPolicy: IfNotPresent
        name: alot-stream-python-anmoyi
        resources:
          limits:
            nvidia.com/gpu: "1"
        securityContext:
          runAsGroup: 0
          runAsUser: 0
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /home/wkspace
          name: workdir
        - mountPath: /etc/localtime
          name: hosttime
      dnsPolicy: ClusterFirst
      initContainers:
      - image: yz.harbor.moviebook.com/alot/test/000001-init/node_test:20221114142453
        imagePullPolicy: IfNotPresent
        name: download
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /home/wkspace
          name: workdir
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      tolerations:
      - effect: NoSchedule
        key: server_type
        operator: Exists
      volumes:
      - emptyDir: {}
        name: workdir
      - hostPath:
          path: /etc/localtime
          type: ""
        name: hosttime

#Create the test application
kubectl apply -f test-gpu-bert-container-alot.yaml
#Check the Pod status
# kubectl get pod -n alot-stream |grep -v "prod"
NAME READY STATUS RESTARTS AGE
alot-stream-python-64ffc68756-fs8lw 1/1 Running 0 38s

- Create an HPA that scales on GPU utilization

# cat test-hap.yaml
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: gpu-hpa-kangpeng
  namespace: alot-stream
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: alot-stream-python
  minReplicas: 1
  maxReplicas: 4
  metrics:
  - type: Pods
    pods:
      metricName: duty_cycle_current #GPU utilization of the Pod.
      targetAverageValue: 10 #Scale out when average GPU utilization exceeds 10%.

#Create the HPA
kubectl apply -f test-hap.yaml
#Check the HPA
# kubectl get hpa -n alot-stream
NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE
gpu-hpa-kangpeng Deployment/alot-stream-python 0/20 1 4 1 21s

#Check the HPA status for error messages
# kubectl describe hpa -n alot-stream gpu-hpa-kangpeng
Name: gpu-hpa-kangpeng
Namespace: alot-stream
Labels: <none>
Annotations:
CreationTimestamp: Thu, 01 Dec 2022 15:04:25 +0800
Reference: Deployment/alot-stream-python
Metrics: ( current / target )
  "duty_cycle_current" on pods: 43750m / 10
Min replicas: 1
Max replicas: 4
Deployment pods: 4 current / 4 desired
Conditions:
  Type Status Reason Message
  ---- ------ ------ -------
  AbleToScale True ScaleDownStabilized recent recommendations were higher than current one, applying the highest recent recommendation
  ScalingActive True ValidMetricFound the HPA was able to successfully calculate a replica count from pods metric duty_cycle_current
  ScalingLimited True TooManyReplicas the desired replica count is more than the maximum replica count
Events:
  Type Reason Age From Message
  ---- ------ ---- ---- -------
  Normal SuccessfulRescale 2m5s horizontal-pod-autoscaler New size: 4; reason: pods metric duty_cycle_current above target

#Check the Pod replicas: the Deployment has scaled out to 4 replicas automatically
# kubectl get pod -n alot-stream |grep -v "prod"
NAME READY STATUS RESTARTS AGE
alot-stream-python-64ffc68756-62rsw 1/1 Running 0 6m3s
alot-stream-python-64ffc68756-9xcgr 1/1 Running 0 6m3s
alot-stream-python-64ffc68756-drj96 1/1 Running 0 6m3s
alot-stream-python-64ffc68756-fs8lw 1/1 Running 0 8m15s
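The describe output above can be checked by hand. The Kubernetes HPA documentation gives desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)]. Scaling is skipped when the ratio is within the tolerance (kube-controller-manager flag --horizontal-pod-autoscaler-tolerance, default 0.1), and the result is clamped to minReplicas/maxReplicas. With 4 Pods averaging 43750m (43.75) against a target of 10, the result is ceil(4 × 4.375) = 18. That is above maxReplicas 4, which is why ScalingLimited reports TooManyReplicas. The bash/awk sketch below implements only this formula. It ignores stabilization windows, behavior policies, and not-yet-ready Pods, which the real controller also handles.

```bash
#!/usr/bin/env bash
# HPA replica calculation for an AverageValue target (sketch of the documented formula).
# Args: currentReplicas currentValue targetValue minReplicas maxReplicas [tolerance]
hpa_desired_replicas() {
  awk -v c="$1" -v v="$2" -v t="$3" -v lo="$4" -v hi="$5" -v tol="${6:-0.1}" 'BEGIN {
    ratio = v / t
    if (ratio >= 1 - tol && ratio <= 1 + tol) {
      d = c                                   # within tolerance: keep current count
    } else {
      x = c * ratio
      d = int(x); if (d < x) d = d + 1        # ceil() for positive values
    }
    if (d < lo) d = lo                        # clamp to minReplicas
    if (d > hi) d = hi                        # clamp to maxReplicas
    print d
  }'
}
```

Example: `hpa_desired_replicas 1 43.75 10 1 4` prints 4 (unclamped it would be 5).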

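- Create an HPA that scales on GPU memory usage

#HPA manifest for GPU memory
#If the utilization HPA above still exists, delete it first: two HPAs targeting the same Deployment compete for its replica count
# cat test-hap-kangpeng-memory.yaml
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: gpu-hpa-kangpeng-memory
  namespace: alot-stream
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: alot-stream-python
  minReplicas: 1
  maxReplicas: 3
  metrics:
  - type: Pods
    pods:
      metricName: memory_used_bytes_current #GPU memory used by the Pod, in bytes.
      targetAverageValue: 1G #Scale out when a Pod uses more than 1 GB (10^9 bytes) of GPU memory on average.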

#Create the HPA
# kubectl apply -f test-hap-kangpeng-memory.yaml
#Check the HPA
# kubectl get hpa -n alot-stream
NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE
gpu-hpa-kangpeng-memory Deployment/alot-stream-python 2562719744/1G 1 3 1 13s
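The HPA prints metric values as Kubernetes quantities. 43750m means 43.75 (m is milli). 1G is the decimal 10^9, so 2562719744/1G is roughly 2.56 times the target, and ceil(1 × 2.56) = 3 matches maxReplicas and the 3 Pods below. A binary 1Gi would be 2^30 bytes. The bash/awk sketch below converts the suffixes seen here, plus the common binary ones, to plain numbers. It is not a full parser of the Kubernetes quantity grammar (no exponent forms).

```bash
#!/usr/bin/env bash
# Convert a Kubernetes quantity such as 43750m, 1G or 1Gi to a plain number (sketch).
quantity_to_number() {
  awk -v q="$1" 'BEGIN {
    n = q
    sub(/[A-Za-z]+$/, "", n)                  # numeric part, e.g. "43750"
    s = substr(q, length(n) + 1)              # suffix, e.g. "m"
    f[""] = 1
    f["k"] = 1e3;  f["M"] = 1e6;  f["G"] = 1e9;  f["T"] = 1e12     # decimal
    f["Ki"] = 1024; f["Mi"] = 1024 ^ 2; f["Gi"] = 1024 ^ 3; f["Ti"] = 1024 ^ 4  # binary
    if (s == "m") v = n / 1000                # milli: divide to avoid 0.001 rounding
    else if (s in f) v = n * f[s]
    else { print "unsupported suffix: " s > "/dev/stderr"; exit 1 }
    printf "%.15g\n", v
  }'
}
```

Example: `quantity_to_number 1Gi` prints 1073741824, about 7% more than `1G`.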

#Watch the Pods scale out
# kubectl get pod -n alot-stream |grep -v "prod"
NAME READY STATUS RESTARTS AGE
alot-stream-python-64ffc68756-9xcgr 1/1 Running 0 16m
alot-stream-python-64ffc68756-b8tdh 0/1 Init:0/1 0 16s
alot-stream-python-64ffc68756-pjr66 0/1 Init:0/1 0 16s
# kubectl get pod -n alot-stream |grep -v "prod"
NAME READY STATUS RESTARTS AGE
alot-stream-python-64ffc68756-9xcgr 1/1 Running 0 16m
alot-stream-python-64ffc68756-b8tdh 1/1 Running 0 56s
alot-stream-python-64ffc68756-pjr66 1/1 Running 0 56s
#Once the service has finished processing its requests, the HPA scales it back in automatically

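Supplementary notes

- Q: How do I disable automatic scale-in?

A: Configure behavior when creating the HPA. The behavior field exists only in autoscaling/v2beta2 (Kubernetes 1.18 and later) and newer API versions, not in autoscaling/v2beta1. Set scaleDown.selectPolicy to Disabled; a scaling policy with value: 0 is rejected by API validation.

behavior:
  scaleDown:
    selectPolicy: Disabled

#The complete HPA manifest is as follows
apiVersion: autoscaling/v2beta2
kind: HorizontalPodAutoscaler
metadata:
  name: gpu-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: bert-intent-detection
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Pods
    pods:
      metric:
        name: duty_cycle_current #GPU utilization of the Pod.
      target:
        type: AverageValue
        averageValue: 20 #Scale out when GPU utilization exceeds 20%.
  behavior:
    scaleDown:
      selectPolicy: Disabled #Never scale in automatically.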

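- Q: How do I lengthen the scale-in time window?

A: The default scale-in stabilization window (kube-controller-manager flag --horizontal-pod-autoscaler-downscale-stabilization) is 5 minutes. To avoid scaling in because of brief traffic fluctuations, you can set a longer window per HPA through behavior (autoscaling/v2beta2 or later), for example:

behavior:
  scaleDown:
    stabilizationWindowSeconds: 600 #Wait 10 minutes before scaling in.
    policies:
    - type: Pods
      value: 5 #Remove at most 5 Pods per period.
      periodSeconds: 60 #Required for every policy; 60 seconds is an example value.

#With this configuration, when load drops the HPA scales in only after the lower recommendation has held for 600 seconds (10 minutes), and then removes at most 5 Pods per policy period (periodSeconds).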
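The stabilization window also explains the ScaleDownStabilized condition in the describe output earlier ("applying the highest recent recommendation"). The controller remembers recent replica recommendations and, when scaling in, uses the highest one still inside the window. The bash/awk sketch below models only that rule, using a made-up input format of "<unix_seconds> <replicas>" lines. The real controller also counts the current recommendation and applies the policies.

```bash
#!/usr/bin/env bash
# Scale-in stabilization (sketch): highest recommendation newer than now - window.
# Args: now window_seconds ; stdin: "<unix_seconds> <recommended_replicas>" per line.
stabilized_scale_down() {
  awk -v now="$1" -v w="$2" '
    $1 > now - w && $1 <= now {               # keep only recommendations inside the window
      if (!seen || $2 + 0 > best) { best = $2 + 0; seen = 1 }
    }
    END { if (seen) print best }'
}
```

Example: with recommendations of 4, 2 and 1 replicas at t=100, 300 and 590, a 600-second window evaluated at t=600 still yields 4. A 300-second window yields 1, so a longer window holds replicas longer after a spike.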

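- Q: What should I do if the TARGETS column shows <unknown> after running kubectl get hpa?

A: Verify the following, in order:

a. Confirm that the metric name in the HorizontalPodAutoscaler is correct.

b. Run kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1" and check that the metric appears in the result.

c. Check that the Prometheus URL configured in the metrics adapter is correct (ack-alibaba-cloud-metrics-adapter on Alibaba Cloud ACK; in this article, the --prometheus-url argument of the custom-metrics-apiserver Deployment).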
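Step b can be scripted. The discovery document of the custom metrics API lists resources named "<resource>/<metric>", for example "pods/duty_cycle_current". The bash sketch below reads that JSON on stdin and reports whether a given Pods metric is registered. It uses grep/sed rather than a JSON parser, so it assumes that "name" fields hold plain strings. The function name is illustrative.

```bash
#!/usr/bin/env bash
# Succeed if "pods/<metric>" is listed in the custom metrics API discovery JSON on stdin (sketch).
metric_registered() {
  local metric="$1"
  grep -oE '"name"[[:space:]]*:[[:space:]]*"[^"]*"' \
    | sed -E 's/.*"([^"]*)"$/\1/' \
    | grep -qxF "pods/${metric}"
}
```

Usage: `kubectl get --raw /apis/custom.metrics.k8s.io/v1beta1 | metric_registered duty_cycle_current && echo registered`. If the metric is missing, the adapter has not seen a matching nvidia_gpu_* series in Prometheus (step c). If it is present but TARGETS is still <unknown>, recheck the name in the HPA (step a).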
