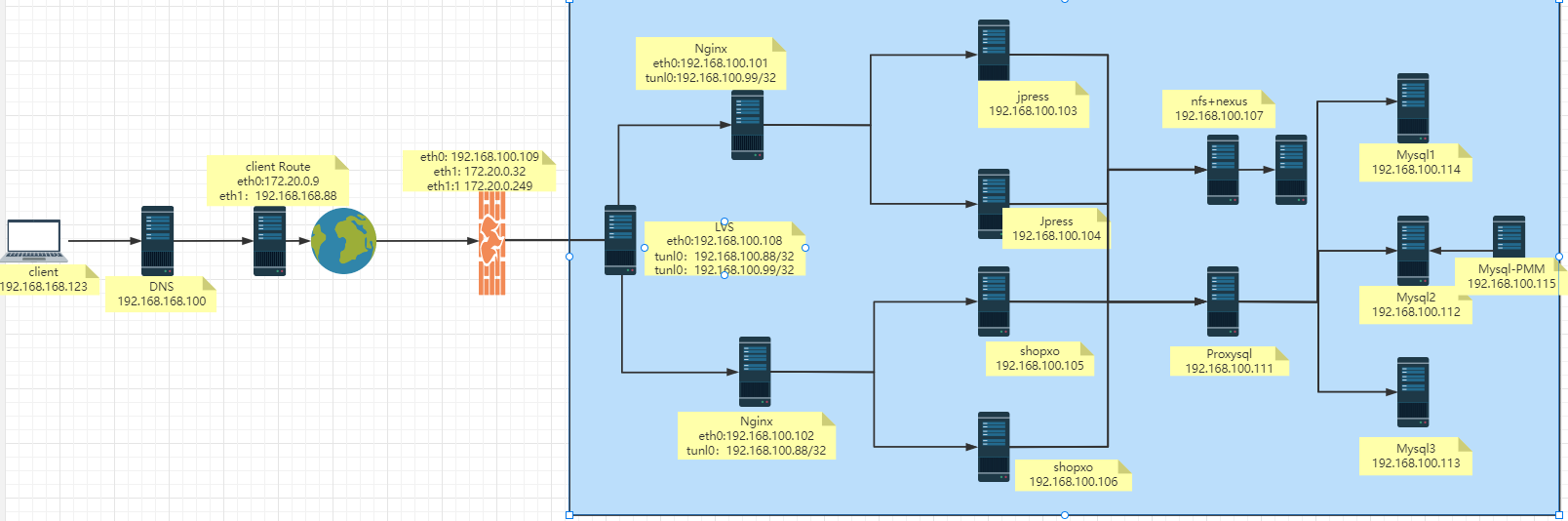

Comprehensive Project for Small and Medium-Sized Enterprises (Nginx+LVS+Tomcat+MGR+Nexus+NFS)

Nginx+Tomcat+MySQL comprehensive lab

1. Environment preparation

| Server | IP address | Role | OS version |
|---|---|---|---|
| Database server 1 | 192.168.100.112 | MGR cluster primary (master) node | Rocky 8.6 |
| Database server 2 | 192.168.100.113 | MGR cluster secondary (slave) node | Rocky 8.6 |
| Database server 3 | 192.168.100.114 | MGR cluster secondary (slave) node | Rocky 8.6 |
| Database monitoring | 192.168.100.115 | PMM server monitoring the database servers | Rocky 8.6 |
| ProxySQL | 192.168.100.111 | Read/write splitting | Rocky 8.6 |
| Nginx1 reverse proxy | 192.168.100.101 | Reverse proxy and load balancer for the JPress servers | Rocky 8.6 |
| Nginx2 reverse proxy | 192.168.100.102 | Reverse proxy and load balancer for the ShopXO servers | Rocky 8.6 |
| JPress1 server | 192.168.100.103 | JPress blog site server | Rocky 8.6 |
| JPress2 server | 192.168.100.104 | JPress blog site server | Rocky 8.6 |
| ShopXO1 server | 192.168.100.105 | ShopXO e-commerce site server | Rocky 8.6 |
| ShopXO2 server | 192.168.100.106 | ShopXO e-commerce site server | Rocky 8.6 |
| NFS+Nexus server | 192.168.100.107 | NFS server and LAN mirror/software repository server | Rocky 8.6 |
| LVS | 192.168.100.108 | Layer-4 load balancer | Rocky 8.6 |
| Firewalld | 192.168.100.109 | Firewall | Rocky 8.6 |
| Client-router | 192.168.168.88 | Client-side router | Rocky 8.6 |
| DNS | 192.168.168.100 | DNS server | Rocky 8.6 |
| client | 192.168.168.123 | Test client | Rocky 8.6 |

2. Configure MGR on the database servers

# All database nodes (192.168.100.112, 192.168.100.113, 192.168.100.114):

[root@node112 ~]# vim /etc/hosts

192.168.100.114 node114.wang.org

192.168.100.112 node112.wang.org

192.168.100.113 node113.wang.org

[root@node112 ~]# vim /etc/my.cnf.d/mysql-server.cnf

server-id=112 # must be different on every node

gtid_mode=ON

enforce_gtid_consistency=ON

default_authentication_plugin=mysql_native_password

binlog_checksum=NONE

loose-group_replication_group_name="bb6ba65e-a862-4e3f-bcd8-f247c7e3c483"

loose-group_replication_start_on_boot=OFF

loose-group_replication_local_address="192.168.100.112:24901" # this node's own IP address

loose-group_replication_group_seeds="192.168.100.112:24901,192.168.100.113:24901,192.168.100.114:24901"

loose-group_replication_bootstrap_group=OFF

loose-group_replication_recovery_use_ssl=ON
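Since only `server-id` and `loose-group_replication_local_address` differ from node to node, the per-node settings can also be generated instead of edited by hand. This is a minimal sketch: `gen_mgr_cnf` is a hypothetical helper (not part of MySQL), and it assumes the last octet of the node IP is used as the server-id (112/113/114, as in this lab) and that the group port is 24901 as above.

```shell
#!/bin/sh
# Hypothetical helper: print the MGR settings above for one node.
# server-id is taken from the last octet of the IP so it is unique per node,
# and local_address uses the node's own IP.
# Usage: gen_mgr_cnf NODE_IP [GROUP_PORT]
gen_mgr_cnf() {
  local ip="$1" port="${2:-24901}"
  local seeds="192.168.100.112:${port},192.168.100.113:${port},192.168.100.114:${port}"
  printf '%s\n' \
    "server-id=${ip##*.}" \
    "gtid_mode=ON" \
    "enforce_gtid_consistency=ON" \
    "default_authentication_plugin=mysql_native_password" \
    "binlog_checksum=NONE" \
    'loose-group_replication_group_name="bb6ba65e-a862-4e3f-bcd8-f247c7e3c483"' \
    "loose-group_replication_start_on_boot=OFF" \
    "loose-group_replication_local_address=\"${ip}:${port}\"" \
    "loose-group_replication_group_seeds=\"${seeds}\"" \
    "loose-group_replication_bootstrap_group=OFF" \
    "loose-group_replication_recovery_use_ssl=ON"
}
```

For example, on node113: `gen_mgr_cnf 192.168.100.113 >> /etc/my.cnf.d/mysql-server.cnf`, assuming `[mysqld]` is the last section in that file and the settings have not been added yet.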

[root@node112 ~]# systemctl restart mysqld.service

# On all database nodes (192.168.100.112, 192.168.100.113, 192.168.100.114):

[root@node112 ~]# mysql

mysql> set sql_log_bin=0;

mysql> create user repluser@'%' identified by '123456';

mysql> grant replication slave on *.* to repluser@'%';

mysql> flush privileges;

mysql> set sql_log_bin=1;

mysql> install plugin group_replication soname 'group_replication.so';

mysql> select * from information_schema.plugins where plugin_name='group_replication'\G

# First node only (bootstrap), 192.168.100.112:

mysql> set global group_replication_bootstrap_group=ON;

mysql> start group_replication;

mysql> set global group_replication_bootstrap_group=OFF;

mysql> select * from performance_schema.replication_group_members;

# Remaining nodes (192.168.100.113, 192.168.100.114):

mysql> change master to master_user='repluser',master_password='123456' for channel 'group_replication_recovery';

mysql> start group_replication;

mysql> select * from performance_schema.replication_group_members; # check that every member is ONLINE

+---------------------------+--------------------------------------+------------------+-------------+--------------+-------------+----------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+---------------------------+--------------------------------------+------------------+-------------+--------------+-------------+----------------+

| group_replication_applier | 5b6cd57d-3ca6-11ed-8e94-5254002f2692 | node114.wang.org | 3306 | ONLINE | SECONDARY | 8.0.26 |

| group_replication_applier | 9a3d8d76-3ca6-11ed-9ea4-525400cc151b | node113.wang.org | 3306 | ONLINE | SECONDARY | 8.0.26 |

| group_replication_applier | 9d3110ab-3ca6-11ed-8484-5254002864ca | node112.wang.org | 3306 | ONLINE | PRIMARY | 8.0.26 |

+---------------------------+--------------------------------------+------------------+-------------+--------------+-------------+----------------+
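Checking the table by eye is fine for three nodes; for a script or a cron job the same check can be automated. A minimal sketch, assuming the rows come from `mysql -N -B` (`-N` drops the header, `-B` prints tab-separated rows); `check_mgr_online` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: succeed only if exactly EXPECTED members exist and all are ONLINE.
# Reads "member_host<TAB>member_state" rows on stdin.
# Usage: ... | check_mgr_online [EXPECTED]
check_mgr_online() {
  local expected="${1:-3}" total=0 online=0 host state tab
  tab=$(printf '\t')
  while IFS="$tab" read -r host state; do
    [ -z "$host" ] && continue
    total=$((total + 1))
    if [ "$state" = "ONLINE" ]; then
      online=$((online + 1))
    else
      echo "not online: $host ($state)" >&2
    fi
  done
  echo "online=$online total=$total expected=$expected"
  [ "$online" -eq "$expected" ] && [ "$total" -eq "$expected" ]
}
```

Usage on any member: `mysql -N -B -e "select member_host, member_state from performance_schema.replication_group_members" | check_mgr_online 3`.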

3. Configure the PMM server

# PMM server (192.168.100.115):

[root@node115-wang ~]# yum install -y yum-utils

[root@node115-wang ~]# vim /etc/yum.repos.d/aliyun_docker.repo # configure the Aliyun docker-ce yum repository

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/stable

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-debuginfo]

name=Docker CE Stable - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-stable-source]

name=Docker CE Stable - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/stable

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test]

name=Docker CE Test - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-debuginfo]

name=Docker CE Test - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-test-source]

name=Docker CE Test - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/test

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly]

name=Docker CE Nightly - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-debuginfo]

name=Docker CE Nightly - Debuginfo $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/debug-$basearch/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[docker-ce-nightly-source]

name=Docker CE Nightly - Sources

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/$releasever/source/nightly

enabled=0

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

[root@node115-wang ~]# yum repolist

[root@node115-wang ~]# yum install -y device-mapper-persistent-data lvm2 # install dependencies

[root@node115-wang ~]# yum install -y docker-ce

[root@node115-wang ~]# systemctl enable --now docker

[root@node115-wang ~]# docker pull percona/pmm-server:latest

latest: Pulling from percona/pmm-server

2d473b07cdd5: Pull complete

bf7875dc8ab7: Pull complete

Digest: sha256:975ad1b212771360298c1f9e0bd67ec36d1fcfd89d58134960696750d73f4576

Status: Downloaded newer image for percona/pmm-server:latest

docker.io/percona/pmm-server:latest

[root@node115-wang ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

percona/pmm-server latest 3d3c8937808d 5 weeks ago 1.94GB

[root@node115-wang ~]# mkdir /opt/prometheus/data -p

[root@node115-wang ~]# docker create -v /opt/prometheus/data/ \

> -v /opt/consul-data \

> -v /var/lib/mysql \

> -v /var/lib/grafana \

> --name pmm-data \

> percona/pmm-server:latest /bin/true

8f7910c9eb77b627e9c36fa3477900f2173c97c88788fee1f7bf999172ce884d

[root@node115-wang ~]# docker run --detach --restart always \

> --publish 443:443 \

> --volumes-from pmm-data \

> --name pmm-server \

> percona/pmm-server:latest

8db367fac8d7f3560c82c137372db705a96bda6a4f342004541741dce6be3a36

[root@node115-wang ~]# docker ps -a

# In a browser, open https://192.168.100.115/graph/login and log in (default username admin, password admin).

4. Configure the PMM client

# PMM clients (192.168.100.112, 192.168.100.113, 192.168.100.114):

[root@node112 ~]# yum install -y https://repo.percona.com/yum/percona-release-latest.noarch.rpm

[root@node112 ~]# yum install -y pmm2-client

[root@node112 ~]# pmm-admin config --server-insecure-tls --server-url=https://admin:admin@192.168.100.115:443

# On 192.168.100.112 (the MGR primary):

mysql> create user pmm@'192.168.100.%' identified by '123456';

mysql> grant select,process,replication client,reload,backup_admin on *.* to pmm@'192.168.100.%';

mysql> UPDATE performance_schema.setup_consumers SET ENABLED = 'YES' WHERE NAME LIKE '%statements%';

mysql> select * from performance_schema.setup_consumers WHERE NAME LIKE '%statements%';

mysql> SET persist innodb_monitor_enable = all;

5. Monitor MGR with PMM

# In the browser, open https://192.168.100.115/graph/login and add the three database nodes. For the detailed steps, see: https://blog.51cto.com/dayu/5687167

6. Configure ProxySQL

# ProxySQL server (192.168.100.111):

[root@node11 ~]# yum -y localinstall proxysql-2.2.0-1-centos8.x86_64.rpm # install the previously downloaded ProxySQL package

[root@node11 ~]# systemctl enable --now proxysql

[root@node11 ~]# ss -ntlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:22 0.0.0.0:* users:(("sshd",pid=846,fd=4))

LISTEN 0 128 0.0.0.0:6032 0.0.0.0:* users:(("proxysql",pid=4491,fd=39))

LISTEN 0 128 0.0.0.0:6033 0.0.0.0:* users:(("proxysql",pid=4491,fd=34))

LISTEN 0 128 0.0.0.0:6033 0.0.0.0:* users:(("proxysql",pid=4491,fd=33))

LISTEN 0 128 0.0.0.0:6033 0.0.0.0:* users:(("proxysql",pid=4491,fd=32))

LISTEN 0 128 0.0.0.0:6033 0.0.0.0:* users:(("proxysql",pid=4491,fd=30))

LISTEN 0 128 [::]:22 [::]:* users:(("sshd",pid=846,fd=6))

[root@node11 ~]# yum install -y mysql # install the MySQL client

[root@node11 ~]# mysql -uadmin -padmin -h127.0.0.1 -P6032 # connect to the ProxySQL admin interface (port 6032); the default admin username and password are both admin

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values (10,'192.168.100.112',3306); # add a MySQL node

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values (10,'192.168.100.113',3306); # add a MySQL node

mysql> insert into mysql_servers(hostgroup_id,hostname,port) values (10,'192.168.100.114',3306); # add a MySQL node

# On the database primary (192.168.100.112): create the monitor and proxysql accounts

mysql> create user monitor@'192.168.100.%' identified by '123456';

mysql> create user proxysql@'192.168.100.%' identified by '123456';

mysql> grant all privileges on *.* to monitor@'192.168.100.%';

mysql> grant all privileges on *.* to proxysql@'192.168.100.%';

# On the ProxySQL server (192.168.100.111): set the monitoring account's username and password

mysql> set mysql-monitor_username='monitor';

mysql> set mysql-monitor_password='123456';

mysql> insert into mysql_users(username,password,active,default_hostgroup,transaction_persistent) values ('proxysql','123456',1,10,1);

# On the database primary (192.168.100.112): import the SQL functions and view that ProxySQL uses to monitor MGR

[root@node12 ~]# vim proxysql-monitor.sql

USE sys;

DELIMITER $$

CREATE FUNCTION IFZERO(a INT, b INT)

RETURNS INT

DETERMINISTIC

RETURN IF(a = 0, b, a)$$

CREATE FUNCTION LOCATE2(needle TEXT(10000), haystack TEXT(10000), offset INT)

RETURNS INT

DETERMINISTIC

RETURN IFZERO(LOCATE(needle, haystack, offset), LENGTH(haystack) + 1)$$

CREATE FUNCTION GTID_NORMALIZE(g TEXT(10000))

RETURNS TEXT(10000)

DETERMINISTIC

RETURN GTID_SUBTRACT(g, '')$$

CREATE FUNCTION GTID_COUNT(gtid_set TEXT(10000))

RETURNS INT

DETERMINISTIC

BEGIN

DECLARE result BIGINT DEFAULT 0;

DECLARE colon_pos INT;

DECLARE next_dash_pos INT;

DECLARE next_colon_pos INT;

DECLARE next_comma_pos INT;

SET gtid_set = GTID_NORMALIZE(gtid_set);

SET colon_pos = LOCATE2(':', gtid_set, 1);

WHILE colon_pos != LENGTH(gtid_set) + 1 DO

SET next_dash_pos = LOCATE2('-', gtid_set, colon_pos + 1);

SET next_colon_pos = LOCATE2(':', gtid_set, colon_pos + 1);

SET next_comma_pos = LOCATE2(',', gtid_set, colon_pos + 1);

IF next_dash_pos < next_colon_pos AND next_dash_pos < next_comma_pos THEN

SET result = result +

SUBSTR(gtid_set, next_dash_pos + 1,

LEAST(next_colon_pos, next_comma_pos) - (next_dash_pos + 1)) -

SUBSTR(gtid_set, colon_pos + 1, next_dash_pos - (colon_pos + 1)) + 1;

ELSE

SET result = result + 1;

END IF;

SET colon_pos = next_colon_pos;

END WHILE;

RETURN result;

END$$

CREATE FUNCTION gr_applier_queue_length()

RETURNS INT

DETERMINISTIC

BEGIN

RETURN (SELECT sys.gtid_count( GTID_SUBTRACT( (SELECT

Received_transaction_set FROM performance_schema.replication_connection_status

WHERE Channel_name = 'group_replication_applier' ), (SELECT

@@global.GTID_EXECUTED) )));

END$$

CREATE FUNCTION gr_member_in_primary_partition()

RETURNS VARCHAR(3)

DETERMINISTIC

BEGIN

RETURN (SELECT IF( MEMBER_STATE='ONLINE' AND ((SELECT COUNT(*) FROM

performance_schema.replication_group_members WHERE MEMBER_STATE != 'ONLINE') >=

((SELECT COUNT(*) FROM performance_schema.replication_group_members)/2) = 0),

'YES', 'NO' ) FROM performance_schema.replication_group_members JOIN

performance_schema.replication_group_member_stats USING(member_id)

where performance_schema.replication_group_members.member_host=@@hostname);

END$$

CREATE VIEW gr_member_routing_candidate_status AS

SELECT

sys.gr_member_in_primary_partition() AS viable_candidate,

IF((SELECT

(SELECT

GROUP_CONCAT(variable_value)

FROM

performance_schema.global_variables

WHERE

variable_name IN ('read_only' , 'super_read_only')) != 'OFF,OFF'

),

'YES',

'NO') AS read_only,

sys.gr_applier_queue_length() AS transactions_behind,

Count_Transactions_in_queue AS 'transactions_to_cert'

FROM

performance_schema.replication_group_member_stats a

JOIN

performance_schema.replication_group_members b ON a.member_id = b.member_id

WHERE

b.member_host IN (SELECT

variable_value

FROM

performance_schema.global_variables

WHERE

variable_name = 'hostname')$$

DELIMITER ;

[root@node12 ~]# mysql < proxysql-monitor.sql # import the ProxySQL monitoring SQL

# On the ProxySQL server (192.168.100.111): define the read/write hostgroups

mysql> insert into mysql_group_replication_hostgroups (writer_hostgroup,backup_writer_hostgroup,reader_hostgroup, offline_hostgroup,active,max_writers,writer_is_also_reader,max_transactions_behind) values (10,20,30,40,1,1,0,100);

mysql> load mysql servers to runtime;

mysql> save mysql servers to disk;

mysql> load mysql users to runtime;

mysql> save mysql users to disk;

mysql> load mysql variables to runtime;

mysql> save mysql variables to disk;

# On the ProxySQL server (192.168.100.111): set up the read/write routing rules

mysql> insert into mysql_query_rules(rule_id,active,match_digest,destination_hostgroup,apply) VALUES (1,1,'^SELECT.*FOR UPDATE$',10,1),(2,1,'^SELECT',30,1);
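To reason about where a given statement will end up, the two rules can be emulated in a few lines of shell. This is only an approximation under stated assumptions: ProxySQL evaluates active rules in `rule_id` order, `apply=1` stops evaluation, the default `re_modifiers` is `CASELESS` (hence `grep -i`), and an unmatched query goes to the user's `default_hostgroup` (10 for `proxysql` above). Real ProxySQL matches `match_digest` against the normalized query digest rather than the raw text. `route_query` is a hypothetical name.

```shell
#!/bin/sh
# Rough emulation of mysql_query_rules 1 and 2 above.
# Prints the hostgroup a statement would be routed to.
route_query() {
  local q="$1"
  if printf '%s\n' "$q" | grep -Eiq '^SELECT.*FOR UPDATE$'; then
    echo 10   # rule 1: locking reads go to the writer hostgroup
  elif printf '%s\n' "$q" | grep -Eiq '^SELECT'; then
    echo 30   # rule 2: plain reads go to the reader hostgroup
  else
    echo 10   # no rule matched: default_hostgroup of user proxysql
  fi
}
```

On the live system, the actual routing can be checked on the admin interface (port 6032) after sending a few queries through port 6033: `select hostgroup, digest_text, count_star from stats_mysql_query_digest;`.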

mysql> load mysql query rules to runtime; # query rules have their own load/save commands

mysql> save mysql query rules to disk;

mysql> load mysql variables to runtime;

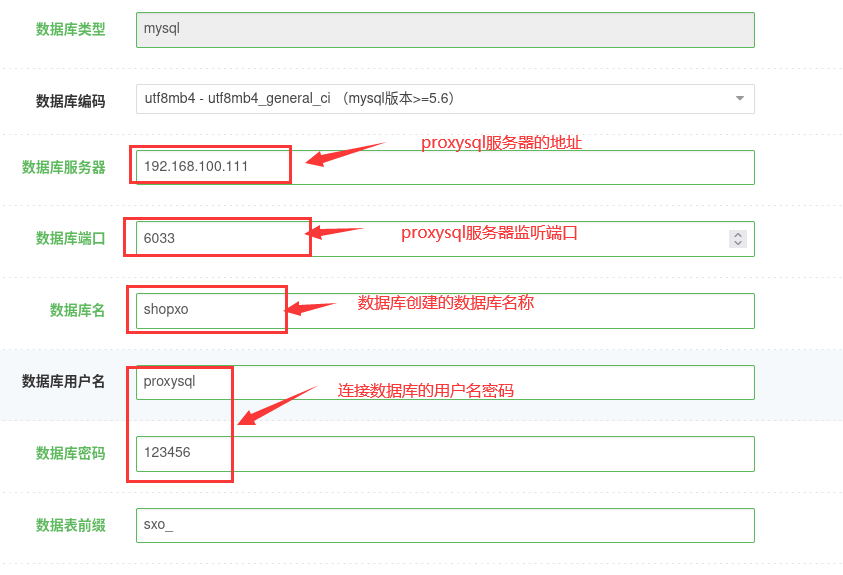

mysql> save mysql variables to disk;

7. Deploy ShopXO

# ShopXO servers (192.168.100.105, 192.168.100.106):

[root@rocky-105 ~]# wget https://mirrors.tuna.tsinghua.edu.cn/remi/enterprise/remi-release-8.rpm

[root@rocky-105 ~]# yum install -y remi-release-8.rpm

[root@rocky-105 ~]# yum -y install nginx php74 php74-php-fpm php74-php-mysqlnd php74-php-json php74-php-gd php74-php-xml php74-php-pecl-zip php74-php-mbstring

[root@rocky-105 ~]# unzip shopxov2.3.0.zip

[root@rocky-105 ~]# mkdir /data/shopxo -p

[root@rocky-105 ~]# mv /root/shopxo-v2.3.0/* /data/shopxo/

[root@rocky-105 ~]# chown -R nginx. /data/shopxo/

[root@rocky-105 ~]# cd /data/shopxo/

[root@rocky-105 shopxo]# vim /etc/opt/remi/php74/php-fpm.d/www.conf

[www]

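user = nginx ; preferably the user that nginx runs as

group = nginx ; preferably the group that nginx runs as

listen = 127.0.0.1:9000 ; listen address and port (use the NIC's IP if nginx connects over the network)

pm.status_path = /status ; uncomment

ping.path = /ping ; uncomment

ping.response = pong ; uncomment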

[root@rocky-105 shopxo]# /opt/remi/php74/root/usr/sbin/php-fpm -t # check the php-fpm configuration syntax (php74 -l only lints PHP source files)

[root@rocky-105 shopxo]# systemctl restart php74-php-fpm.service

[root@rocky-105 shopxo]# vim /etc/nginx/conf.d/shopxo.wang.org.conf

server {

listen 80;

server_name shopxo.wang.org;

root /data/shopxo;

location / {

index index.php index.html index.htm;

}

location ~ \.php$ {

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

}

[root@shopxo1 config]# vim /data/shopxo/config/shopxo.sql

....

ENGINE = MyISAM AUTO_INCREMENT

.....

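:%s/MyISAM/InnoDB/g # in vim, replace every MyISAM with InnoDB so the lines read ENGINE = InnoDB

## Add the domain to your hosts file, then open it in a browser to run the installer. For the database address, enter the ProxySQL server (192.168.100.111, port 6033, user proxysql) so that ProxySQL handles read/write splitting.

8. Deploy JPress

# JPress servers (192.168.100.103, 192.168.100.104):

[root@rocky-103 ~]# yum install -y java-1.8.0-openjdk # Java 8 is recommended; with Java 11 the captcha on the admin login page may fail to load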
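The same substitution can be done non-interactively with `sed`, which is handier when scripting the deployment. The change matters because Group Replication requires InnoDB tables (it also expects every table to have a primary key, which this sketch does not check). `to_innodb` is a hypothetical helper; it keeps the original file as `<file>.bak`.

```shell
#!/bin/sh
# Hypothetical helper: switch every MyISAM engine clause in a SQL dump to
# InnoDB in place, keep a .bak copy, and fail if any MyISAM remains.
to_innodb() {
  local sql="$1"
  sed -i.bak 's/MyISAM/InnoDB/g' "$sql" || return 1
  ! grep -q 'MyISAM' "$sql"
}
```

Usage: `to_innodb /data/shopxo/config/shopxo.sql`.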

[root@rocky-103 ~]# bash install_tomcat.sh

[root@rocky-103 ~]# vim /usr/local/tomcat/conf/server.xml

</Host>

<Host name="jpress.wang.org" appBase="/data/jpress" unpackWARs="true" autoDeploy="true">

</Host>

[root@rocky-103 ~]# mkdir -p /data/jpress

[root@rocky-103 ~]# mv /root/jpress-v5.0.2.war /data/jpress/ROOT.war

[root@rocky-103 ~]# chown -R tomcat. /data/jpress

[root@rocky-103 ~]# systemctl restart tomcat.service

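## Add the domain to your hosts file, then open it in a browser to run the installer. Note on the database address: in testing, JPress 5.0.2 could not connect through MyCAT or ProxySQL, so connect it to the database directly.

9. Deploy the Nginx reverse proxy for JPress

# Nginx server 1 (192.168.100.101):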

[root@rocky-101 ~]# vim /apps/nginx/conf/nginx.conf

include /apps/nginx/conf/conf.d/*.conf;

[root@rocky-101 ~]# mkdir /apps/nginx/conf/conf.d

[root@rocky-101 ~]# cd /apps/nginx/

[root@rocky-101 nginx]# mkdir ssl && cd ssl

[root@rocky-101 ssl]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout ca.key -x509 -days 3650 -out ca.crt

[root@rocky-101 ssl]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout jpress.wang.org.key -out jpress.wang.org.csr

[root@rocky-101 ssl]# openssl x509 -req -days 3650 -in jpress.wang.org.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out jpress.wang.org.crt

[root@rocky-101 ssl]# cat jpress.wang.org.crt ca.crt > jpress.wang.org.pem
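The `openssl` commands above prompt for the subject fields interactively. The sketch below performs the same steps non-interactively with `-subj`, and additionally adds a `subjectAltName`, which current browsers check instead of the CN. The subject names (`/CN=wang.org CA`, `/CN=<domain>`), the `.ext` file and the helper name `make_site_cert` are assumptions for illustration; the key size defaults to 4096 bits as above.

```shell
#!/bin/sh
# Hypothetical helper: self-signed CA + CA-signed site certificate, then the
# <domain>.pem chain (site cert followed by CA cert) used by ssl_certificate.
# Usage: make_site_cert DOMAIN [DIR] [BITS]
make_site_cert() {
  local domain="$1" dir="${2:-.}" bits="${3:-4096}"
  mkdir -p "$dir" || return 1
  # 1. self-signed CA certificate and key
  openssl req -newkey "rsa:$bits" -nodes -sha256 -keyout "$dir/ca.key" \
    -x509 -days 3650 -subj "/CN=wang.org CA" -out "$dir/ca.crt" || return 1
  # 2. site key and certificate signing request
  openssl req -newkey "rsa:$bits" -nodes -sha256 -keyout "$dir/$domain.key" \
    -subj "/CN=$domain" -out "$dir/$domain.csr" || return 1
  # 3. sign the CSR with the CA, adding subjectAltName=DNS:<domain>
  printf 'subjectAltName=DNS:%s\n' "$domain" > "$dir/$domain.ext"
  openssl x509 -req -days 3650 -in "$dir/$domain.csr" -CA "$dir/ca.crt" \
    -CAkey "$dir/ca.key" -CAcreateserial -extfile "$dir/$domain.ext" \
    -out "$dir/$domain.crt" || return 1
  # 4. chain file, same as the cat command above
  cat "$dir/$domain.crt" "$dir/ca.crt" > "$dir/$domain.pem"
}
```

For example, `make_site_cert jpress.wang.org /apps/nginx/ssl` on 192.168.100.101 and `make_site_cert shopxo.wang.org /apps/nginx/ssl` on 192.168.100.102. Note that every run creates a new CA in the target directory.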

[root@rocky-101 ssl]# cd

[root@rocky-101 ~]# vim /apps/nginx/conf/conf.d/jpress.wang.org.conf

upstream jpress {

hash $remote_addr;

server 192.168.100.103:8080;

server 192.168.100.104:8080;

}

server {

listen 80;

server_name jpress.wang.org;

return 302 https://$server_name$request_uri; # $server_name comes from the server_name above, i.e. jpress.wang.org

}

server {

listen 443 ssl http2;

server_name jpress.wang.org;

ssl_certificate /apps/nginx/ssl/jpress.wang.org.pem;

ssl_certificate_key /apps/nginx/ssl/jpress.wang.org.key;

client_max_body_size 20m;

location / {

proxy_pass http://jpress;

proxy_set_header host $http_host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

[root@rocky-101 ~]# nginx -s reload

10. Deploy the Nginx reverse proxy for ShopXO

# Nginx server 2 (192.168.100.102):

[root@rocky-102 ~]# vim /apps/nginx/conf/nginx.conf

include /apps/nginx/conf/conf.d/*.conf;

[root@rocky-102 ~]# mkdir /apps/nginx/conf/conf.d

[root@rocky-102 ~]# cd /apps/nginx/

[root@rocky-102 nginx]# mkdir ssl && cd ssl

[root@rocky-102 ssl]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout ca.key -x509 -days 3650 -out ca.crt

[root@rocky-102 ssl]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout shopxo.wang.org.key -out shopxo.wang.org.csr

[root@rocky-102 ssl]# openssl x509 -req -days 3650 -in shopxo.wang.org.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out shopxo.wang.org.crt

[root@rocky-102 ssl]# cat shopxo.wang.org.crt ca.crt > shopxo.wang.org.pem

[root@rocky-102 ssl]# cd

[root@rocky-102 ~]# vim /apps/nginx/conf/conf.d/shopxo.wang.org.conf # the file name must end in .conf to match the include above

upstream shopxo {

# hash $remote_addr;

server 192.168.100.105;

server 192.168.100.106;

}

server {

listen 80;

server_name shopxo.wang.org;

return 302 https://$server_name$request_uri;

}

server {

listen 443 ssl http2;

server_name shopxo.wang.org;

ssl_certificate /apps/nginx/ssl/shopxo.wang.org.pem;

ssl_certificate_key /apps/nginx/ssl/shopxo.wang.org.key;

client_max_body_size 20m;

location / {

proxy_pass http://shopxo;

proxy_set_header host $http_host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

[root@rocky-102 ~]# nginx -s reload

11. Deploy NFS + Nexus

# Set up NFS (192.168.100.107):

[root@node07 ~]# yum install -y nfs-utils

[root@node07 ~]# mkdir -p /data/jpress /data/shopxo

[root@node07 ~]# vim /etc/exports

/data/jpress 192.168.100.0/24(rw,all_squash,anonuid=997,anongid=992)

/data/shopxo 192.168.100.0/24(rw,all_squash,anonuid=997,anongid=992)

[root@node07 ~]# systemctl enable --now nfs-server.service

[root@node07 ~]# exportfs -r

[root@node07 ~]# exportfs -v

=================================================================================

# JPress servers (192.168.100.103, 192.168.100.104):

[root@node03 ~]# yum install -y rsync

[root@node03 ~]# rsync -av /data/jpress/ROOT/ root@192.168.100.107:/data/jpress/ # copy the existing site files to the NFS server (rsync must be installed there too); run on one server only

[root@node03 ~]# yum install -y nfs-utils

[root@node03 ~]# showmount -e 192.168.100.107

[root@node03 ~]# vim /etc/fstab

192.168.100.107:/data/jpress /data/jpress/ROOT nfs _netdev 0 0

[root@node03 ~]# mount -a

==============================================================================

# ShopXO servers (192.168.100.105, 192.168.100.106):

[root@node05 ~]# yum install -y rsync nfs-utils

[root@node05 ~]# rsync -av /data/shopxo/public/static/upload/ root@192.168.100.107:/data/shopxo/ # copy the existing uploaded files to the NFS server; run on one server only

[root@node05 ~]# vim /etc/fstab

192.168.100.107:/data/shopxo /data/shopxo/public/static/upload nfs _netdev 0 0

[root@node05 ~]# mount -a
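If an NFS mount fails (for example because the NFS server is unreachable at boot), the mount point silently stays an ordinary local directory, and the two web servers end up with different files. A quick check is to look the mount point up in `/proc/mounts`. `is_nfs_mount` is a hypothetical helper that reads `/proc/mounts`-style lines on stdin; NFSv4 mounts show up with the type `nfs4`.

```shell
#!/bin/sh
# Sketch: succeed if TARGET is mounted with type nfs or nfs4.
# Input lines: "source mountpoint fstype options dump pass"
# Usage: is_nfs_mount TARGET < /proc/mounts
is_nfs_mount() {
  local target="${1%/}" src mnt fstype rest
  while read -r src mnt fstype rest; do
    if [ "$mnt" = "$target" ] && { [ "$fstype" = nfs ] || [ "$fstype" = nfs4 ]; }; then
      echo "$target <- $src ($fstype)"
      return 0
    fi
  done
  echo "$target is not an NFS mount" >&2
  return 1
}
```

Usage: `is_nfs_mount /data/shopxo/public/static/upload < /proc/mounts` on the ShopXO servers and `is_nfs_mount /data/jpress/ROOT < /proc/mounts` on the JPress servers.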

==============================================================================

# Deploy Nexus

[root@node07 ~]# yum install java -y

[root@node07 ~]# tar xf nexus-3.41.1-01-unix.tar.gz -C /usr/local/

[root@node07 ~]# cd /usr/local/

[root@node07 local]# mv nexus-3.41.1-01/ nexus

[root@node07 local]# echo 'PATH=/usr/local/nexus/bin:$PATH' > /etc/profile.d/nexus.sh

[root@node07 local]# . /etc/profile.d/nexus.sh

[root@node07 local]# vim nexus/bin/nexus.vmoptions

-Xms1024m

-Xmx1024m

-XX:MaxDirectMemorySize=1500m

......

[root@node07 local]# nexus run # test run in the foreground; stop it with Ctrl+C before creating the service below

[root@node07 local]# vim /lib/systemd/system/nexus.service

[Unit]

Description=nexus service

After=network.target

[Service]

Type=forking

LimitNOFILE=65535

ExecStart=/usr/local/nexus/bin/nexus start

ExecStop=/usr/local/nexus/bin/nexus stop

User=root

Restart=on-abort

[Install]

WantedBy=multi-user.target

[root@node07 ~]# systemctl daemon-reload

[root@node07 ~]# systemctl start nexus.service

[root@node07 ~]# systemctl status nexus.service

[root@node07 ~]# cat /usr/local/sonatype-work/nexus3/admin.password # show the initial admin password

f4864636-480a-4cf6-af97-6d6977fb040a

# From any computer, open http://192.168.100.107:8081 and set up the repositories.

12. Deploy LVS

# nginx2(192.168.100.102):

[root@node02 ~]# modprobe ipip # load the IPIP module so that the tunl0 device exists

[root@node02 ~]# ip a a 192.168.100.88/32 dev tunl0

[root@node02 ~]# ip link set up tunl0

[root@node02 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@node02 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@node02 ~]# echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

[root@node02 ~]# echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

[root@node02 ~]# echo 0 > /proc/sys/net/ipv4/conf/all/rp_filter

[root@node02 ~]# echo 0 > /proc/sys/net/ipv4/conf/tunl0/rp_filter

# nginx1 (192.168.100.101):

[root@node01 ~]# modprobe ipip # load the IPIP module so that the tunl0 device exists

[root@node01 ~]# ip a a 192.168.100.99/32 dev tunl0

[root@node01 ~]# ip link set up tunl0

[root@node01 ~]# echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

[root@node01 ~]# echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

[root@node01 ~]# echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

[root@node01 ~]# echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

[root@node01 ~]# echo 0 > /proc/sys/net/ipv4/conf/all/rp_filter

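[root@node01 ~]# echo 0 > /proc/sys/net/ipv4/conf/tunl0/rp_filter

# LVS (192.168.100.108): each site currently has only one reverse proxy server, so each virtual service gets only one real server. If you add reverse proxy servers, add one more real-server entry for each of them here.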

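[root@node08 ~]# echo 1 > /proc/sys/net/ipv4/ip_forward # enable IP forwarding (temporary, lost on reboot)

[root@node08 ~]# ip a a 192.168.100.88/32 dev eth0 # the director must own both VIPs; eth0 is assumed to be its 192.168.100.0/24 interface

[root@node08 ~]# ip a a 192.168.100.99/32 dev eth0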

[root@node08 ~]# yum install ipvsadm -y

[root@node08 ~]# ipvsadm -A -t 192.168.100.88:80 -s wrr

[root@node08 ~]# ipvsadm -a -t 192.168.100.88:80 -r 192.168.100.102:80 -i

[root@node08 ~]# ipvsadm -A -t 192.168.100.99:80

[root@node08 ~]# ipvsadm -a -t 192.168.100.99:80 -r 192.168.100.101:80 -i

13. Deploy the firewall (Firewalld host)

# firewalld(192.168.100.109):

[root@node09 ~]# echo 1 > /proc/sys/net/ipv4/ip_forward

[root@node09 ~]# iptables -t nat -A PREROUTING -d 172.20.0.32 -p tcp --dport 80 -j DNAT --to-destination 192.168.100.88:80

[root@node09 ~]# iptables -t nat -A PREROUTING -d 172.20.0.249 -p tcp --dport 80 -j DNAT --to-destination 192.168.100.99:80

[root@node09 ~]# iptables -t nat -nvL
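The `echo ... > /proc/sys/...` commands in steps 12 to 14 only last until the next reboot. A minimal sketch for making them persistent is to write the same keys into `/etc/sysctl.d/`. `persist_sysctl` and the role names `router` and `lvs-rs` are hypothetical, and the file names in the usage below are arbitrary.

```shell
#!/bin/sh
# Sketch: print sysctl.d settings equivalent to the /proc/sys writes above.
#   router - LVS director, firewall and client-router: IP forwarding
#   lvs-rs - Nginx real servers behind LVS-TUN: ARP suppression, rp_filter off
persist_sysctl() {
  case "$1" in
    router)
      echo "net.ipv4.ip_forward = 1" ;;
    lvs-rs)
      printf '%s\n' \
        "net.ipv4.conf.all.arp_ignore = 1" \
        "net.ipv4.conf.all.arp_announce = 2" \
        "net.ipv4.conf.lo.arp_ignore = 1" \
        "net.ipv4.conf.lo.arp_announce = 2" \
        "net.ipv4.conf.all.rp_filter = 0" \
        "net.ipv4.conf.tunl0.rp_filter = 0" ;;
    *)
      echo "usage: persist_sysctl router|lvs-rs" >&2
      return 1 ;;
  esac
}
```

On the LVS, firewall and client-router hosts: `persist_sysctl router > /etc/sysctl.d/90-forward.conf && sysctl --system`. On the two Nginx real servers, load ipip at boot first so that the tunl0 keys exist (`echo ipip > /etc/modules-load.d/ipip.conf`), then `persist_sysctl lvs-rs > /etc/sysctl.d/90-lvs-tun.conf && sysctl --system`. The VIP addresses, the ipvsadm rules and the iptables rules need their own persistence (for example `ipvsadm-save -n > /etc/sysconfig/ipvsadm` with the ipvsadm service enabled), which this sketch does not cover.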

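# At this point, a host on the 172.20.0.0 network can be used to test whether JPress and ShopXO are reachable. If they are not, disable SSL on the Nginx reverse proxies as shown below (only port 80 is forwarded by the firewall and LVS, so the redirect to HTTPS on port 443 cannot be followed):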

upstream shopxo {

# hash $remote_addr;

server 192.168.100.105;

server 192.168.100.106;

}

server {

listen 80;

server_name shopxo.wang.org;

# return 302 https://$server_name$request_uri;

location / {

proxy_pass http://shopxo;

proxy_set_header host $http_host;

# proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

#server {

# listen 443 ssl http2;

# server_name shopxo.wang.org;

# ssl_certificate /etc/nginx/ssl/jpress.wang.org.pem;

# ssl_certificate_key /etc/nginx/ssl/jpress.wang.org.key;

# client_max_body_size 20m;

# location / {

# proxy_pass http://shopxo;

# proxy_set_header host $http_host;

# proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

# }

#}

14. Deploy the client router

# client-router (192.168.168.88):

[root@client-router ~]#echo 1 > /proc/sys/net/ipv4/ip_forward

[root@client-router ~]#iptables -t nat -A POSTROUTING -s 192.168.168.0/24 -j MASQUERADE

15. Deploy DNS

# DNS (192.168.168.100):

[root@dns ~]# vim /etc/named.conf

// listen-on port 53 { 127.0.0.1; };

// listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

secroots-file "/var/named/data/named.secroots";

recursing-file "/var/named/data/named.recursing";

// allow-query { localhost; };

[root@dns ~]# vim /etc/named.rfc1912.zones

zone "wang.org" IN {

type master;

file "wang.org.zone";

};

[root@dns ~]# cd /var/named/

[root@dns named]# cp -p named.localhost wang.org.zone

[root@dns named]# vim wang.org.zone

$TTL 1D

@ IN SOA admin admin.wang.org. (

0 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS admin

admin A 192.168.168.100

jpress A 172.20.0.249

shopxo A 172.20.0.32

[root@dns named]# named-checkconf # check the configuration file syntax; checking the zone file syntax as well requires bind-utils to be installed

[root@dns named]# systemctl restart named

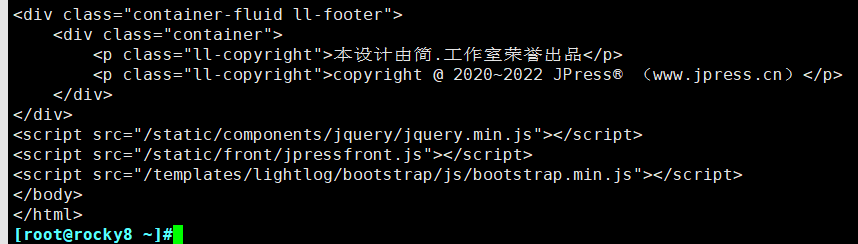

16. Client testing

[root@rocky8 ~]#vim /etc/resolv.conf

nameserver 192.168.168.100

[root@rocky8 ~]#route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.168.88 0.0.0.0 UG 100 0 0 eth0

192.168.168.0 0.0.0.0 255.255.255.0 U 100 0 0 eth0

[root@rocky8 ~]#curl jpress.wang.org

...

<p class="ll-copyright">本设计由简.工作室荣誉出品</p>

<p class="ll-copyright">copyright @ 2020~2022 JPress (www.jpress.cn)</p>

</div>

</div>

<script src="/static/components/jquery/jquery.min.js"></script>

<script src="/static/front/jpressfront.js"></script>

<script src="/templates/lightlog/bootstrap/js/bootstrap.min.js"></script>

</body>

</html>

[root@rocky8 ~]#curl shopxo.wang.org

....

演示站点请勿支付,可在后台站点配置->基础配置(底部代码)修改</div>

<!-- js钩子 -->

<!-- 公共页面底部钩子 -->
