scrapy--多爬虫

大家好,我胡汉三又回来了!!!开心QAQ 由于最近一直在忙工作的事,之前学的一些爬虫知识忘得差不多了,只能再花多一些时间来回顾,否则根本无法前进。所以在这里也像高中老师那样提醒一下大家,--每天晚上花一点时间回顾一下,会省去很多回来再看的时间。

好了,闲话扯完了,让我们开始今天学到的知识点:一次运行多个爬虫

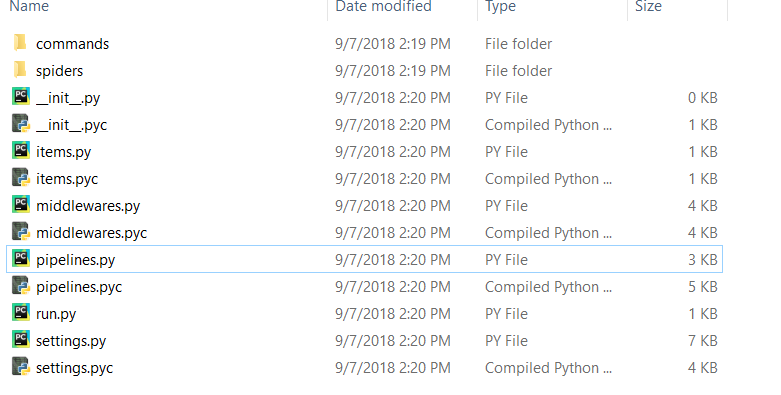

一.先给大家看下文件结构

1.1 Books文件

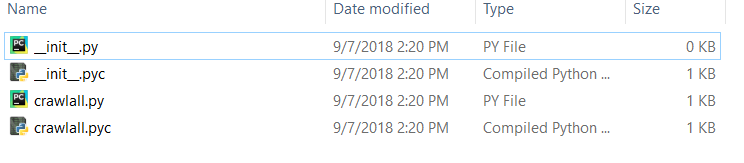

1.2 spiders文件

1.3 commands文件(__init__.py文件一定要加上,证明commands是个模块,否则报错--

__import__(name)

ImportError: No module named commands

)

二:文件详情

2.1 books.py

# -*- coding: utf-8 -*-

import scrapy

import pdb

from Books.settings import USER_AGENT

from Books.items import BooksItem

from scrapy.linkextractors import LinkExtractor

import redis class BooksSpider(scrapy.Spider):

name = 'books'

#allowed_domains = ['books.toscrape.com']

start_urls = ['http://books.toscrape.com']

#start_urls = ['https://blog.csdn.net/u011781521/article/details/70194744?locationNum=4&fps=1'] headers = {

'Accept': 'application/json, text/javascript, */*; q=0.01',

'Accept-Encoding': 'gzip, deflate',

'Accept-Language': 'zh-CN,zh;q=0.9',

'Connection': 'keep-alive',

'Content-Length': '',

'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',

'Host': 'books.toscrape.com',

'Origin': 'books.toscrape.com',

'Referer': 'http://books.toscrape.com',

'User-Agent': USER_AGENT,

'X-Requested-With': 'XMLHttpRequest',

} def parse(self, response):

#pass

sels = response.css('article.product_pod')

book = BooksItem()

for sel in sels: book['name'] = sel.css('h3 a::attr(title)').extract()[0]

book['price'] = sel.css('div.product_price p::text').extract()[0] yield book

#yield{ 'name':name,

#'price':price}

links = LinkExtractor(restrict_css='ul.pager li.next')

link = links.extract_links(response)

yield scrapy.Request(link[0].url,callback=self.parse)

2.2 login.py

# -*- coding: utf-8 -*-

import scrapy

import json

from scrapy.selector import Selector

from scrapy import FormRequest,Request

import pdb

#from Login.settings import USER_AGENT

from Books.settings import USER_AGENT

import time

from PIL import Image

import urllib

import re class ExampleLoginSpider(scrapy.Spider):

name = "login" allowed_domains = ["example.webscraping.com"]

login_url = 'http://example.webscraping.com/places/default/user/login'

headers = {

'Accept': 'application/json, text/javascript, */*; q=0.01',

'Accept-Encoding': 'gzip, deflate',

'Accept-Language': 'zh-CN,zh;q=0.9',

'Connection': 'keep-alive',

'Content-Length': '',

'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',

# 'Host': 'matplotlib.org',

# 'Origin': 'matplotlib.org',

'Referer': 'http://example.webscraping.com/places/default/user/login',

'User-Agent': USER_AGENT,

'X-Requested-With': 'XMLHttpRequest',

} # 网页主页

#def parse(self, response):

#print(response.text) def start_requests(self):

#pdb.set_trace()

#为了能使用同一个状态持续的爬取网站, 就需要保存cookie, 使用cookie保存状态,

yield scrapy.Request(self.login_url,meta={'cookiejar':1},callback=self.parse_login) #启用cookie

def parse_login(self,response):

#pdb.set_trace()

next = response.xpath('//input[@name="_next"]/@value').extract()[0]

formname = response.xpath('//input[@name="_formname"]/@value').extract()[0]

formkey = response.xpath('//input[@name="_formkey"]/@value').extract()[0]

formdata = {'email':'youremail',

'password':'yourpasswd',

'_next':next,

'_formkey':formkey,

'_formname':formname,

} yield FormRequest.from_response(response,formdata=formdata,meta={'cookiejar':response.meta['cookiejar']},#注意这里cookie的获取

callback=self.parse_after) def parse_after(self,response):

pdb.set_trace()

if 'Welcome' in response.body:

print 'login acuessfully'

2.3 crawlall.py

#! /usr/bin/python #-*- coding:utf-8 -*- from scrapy.commands import ScrapyCommand

from scrapy.utils.project import get_project_settings

import pdb class Command(ScrapyCommand):

requires_project = True #以下是可以去掉的,并不影响结果,大家可以试一试

'''

def syntax(self):

return '[options]' def short_desc(self):

return 'Runs all of the spiders'

'''

def run(self,args,opts):

spider_list = self.crawler_process.spiders.list()

for name in spider_list:

self.crawler_process.crawl(name,**opts.__dict__)

self.crawler_process.start()

三:基本配置文件

3.1 middlewares.py

from scrapy import signals

import random

from Books.settings import IPPOOL class BooksSpiderMiddleware(object):

# Not all methods need to be defined. If a method is not defined,

# scrapy acts as if the spider middleware does not modify the

# passed objects.

def __init__(self,ip=''):

self.ip = ip def process_request(self,request,spider):

#thisip = random.choice(IPPOOL)

#print('this is ip:'+ thisip['ipaddr'])

request.meta['proxy']='http://'+ IPPOOL[0]['ipaddr'] class LoginSpiderMiddleware(BooksSpiderMiddleware): #使用继承,login爬虫也可以使用同一代理

pass

3.2 pipelines.py

# -*- coding: utf-8 -*- # Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

import scrapy

import pdb

from scrapy.item import Item

from scrapy.exceptions import DropItem

import pymongo

import redis class BooksPipeline(object):

def process_item(self, item, spider):

return item class Price_convertPipeline(object):

price_rate = 8.04

def process_item(self,item,spider):

#price_old = item['price']

#pdb.set_trace()

price_new = self.price_rate * float(item['price'][1:])

item['price'] = '$%.2f'%price_new

return item class DuplicatePipeline(object):

def __init__(self):

self.set = set() def process_item(self,item,spider):

name = item['name']

if name in self.set:

raise DropItem('Duplicate book found:%s'%item) self.set.add(name)

return item class MongoDBPipeline(object):

@classmethod

def from_crawler(cls,crawler):

cls.DB_URL = crawler.settings.get('MONGO_DB_URL','mongodb://localhost:27017/')

cls.DB_NAME = crawler.settings.get('MONGO_DB_NAME','scrapy_data')

return cls()

def open_spider(self,spider):

self.client = pymongo.MongoClient(self.DB_URL)

self.db = self.client[self.DB_NAME]

def close_spider(self,spider):

self.client.close()

def process_item(self,item,spider):

#pdb.set_trace()

collections = self.db[spider.name]

post = dict(item) if isinstance(item,Item) else item

collections.insert_one(post)

return item class RedisPipeline(object):

def open_spider(self,spider):

db_host = spider.settings.get('REDIS_HOST','localhost')

db_port = spider.settings.get('REDIS_PORT',6379)

db_index = spider.settings.get('REDIS_DB_INDEX',0)

self.item_i = 0

self.db_conn = redis.StrictRedis(host=db_host,port=db_port,db=db_index) def close_spider(self,spider):

self.db_conn.connection_pool.disconnect() def process_item(self,item,spider):

post = dict(item) if isinstance(item,Item) else item

self.item_i += 1

self.db_conn.hmset('books:%s'%self.item_i,post) return item

3.3 settings.py

BOT_NAME = 'Books' SPIDER_MODULES = ['Books.spiders']

NEWSPIDER_MODULE = 'Books.spiders' USER_AGENT ={ #设置浏览器的User_agent

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",

"Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 (KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 (KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",

"Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",

"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",

"Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",

"Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",

"Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 (KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",

"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",

"Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 (KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"

} IPPOOL = [

{"ipaddr":"10.240.252.20:911"},] ROBOTSTXT_OBEY = False

CONCURRENT_REQUESTS = 16

DOWNLOAD_DELAY = 0.5

COOKIES_ENABLED = False

TELNETCONSOLE_ENABLED = False MONGO_DB_URL = 'mongodb://localhost:27017/'

MONGO_DB_NAME = 'eilinge' REDIS_HOST='localhost'

REDIS_PORT=6379

REDIS_DB_INDEX=0 COMMANDS_MODULE = 'Books.commands' #项目名.commands(scrawlall.py文件所在目录) DOWNLOADER_MIDDLEWARES = {

#'Books.middlewares.BooksDownloaderMiddleware': 543,

'scrapy.contrib.downloadermiddleware.httpproxy.HttpProxyMiddleware': 543,

'Books.middlewares.LoginSpiderMiddleware':124,

'Books.middlewares.BooksSpiderMiddleware':125, #这里在middlewares.py文件中使用继承,即可共同使用同一文件,同理,当另一个爬虫文件需要特殊的设置,使用继承即可,或重写方法

#'Books.middlewares.ProxyMiddleware':125,

} ITEM_PIPELINES = {

'scrapy.contrib.downloadermiddleware.httpproxy.HttpProxyMiddleware': 1,

'Books.pipelines.Price_convertPipeline': 300,

'Books.pipelines.DuplicatePipeline': 350,

'Books.pipelines.RedisPipeline':404,

#'Books.pipelines.MongoDBPipeline':404,

}

四:运行命令

scrapy crawlall

五:查看结果

{'name': u'In a Dark, Dark Wood', 'price': '$157.83'}

2018-09-07 07:49:12 [scrapy.core.scraper] DEBUG: Scraped from <200 http://books.toscrape.com/catalogue/page-2.html>

{'name': u'Behind Closed Doors', 'price': '$419.85'}

2018-09-07 07:49:12 [scrapy.core.scraper] DEBUG: Scraped from <200 http://books.toscrape.com/catalogue/page-2.html>

{'name': u"You can't bury them all: Poems", 'price': '$270.39'} #books.py在跑

2018-09-07 07:49:12 [scrapy.core.engine] DEBUG: Crawled (200) <POST http://example.webscraping.com/places/default/user/login> (referer: http://example.webscraping.com/places/default/user/login)

> /home/eilinge/folder/Books/Books/spiders/login.py(60)parse_after()#login.py开始跑了

-> if 'Welcome' in response.body:

scrapy--多爬虫的更多相关文章

- 爬虫学习之基于Scrapy的爬虫自动登录

###概述 在前面两篇(爬虫学习之基于Scrapy的网络爬虫和爬虫学习之简单的网络爬虫)文章中我们通过两个实际的案例,采用不同的方式进行了内容提取.我们对网络爬虫有了一个比较初级的认识,只要发起请求获 ...

- scrapy爬虫学习系列二:scrapy简单爬虫样例学习

系列文章列表: scrapy爬虫学习系列一:scrapy爬虫环境的准备: http://www.cnblogs.com/zhaojiedi1992/p/zhaojiedi_python_00 ...

- Scrapy框架-----爬虫

说明:文章是本人读了崔庆才的Python3---网络爬虫开发实战,做的简单整理,希望能帮助正在学习的小伙伴~~ 1. 准备工作: 安装Scrapy框架.MongoDB和PyMongo库,如果没有安装, ...

- Scrapy创建爬虫项目

1.打开cmd命令行工具,输入scrapy startproject 项目名称 2.使用pycharm打开项目,查看项目目录 3.创建爬虫,打开CMD,cd命令进入到爬虫项目文件夹,输入scrapy ...

- Scrapy - CrawlSpider爬虫

crawlSpider 爬虫 思路: 从response中提取满足某个条件的url地址,发送给引擎,同时能够指定callback函数. 1. 创建项目 scrapy startproject mysp ...

- 第三百五十六节,Python分布式爬虫打造搜索引擎Scrapy精讲—scrapy分布式爬虫要点

第三百五十六节,Python分布式爬虫打造搜索引擎Scrapy精讲—scrapy分布式爬虫要点 1.分布式爬虫原理 2.分布式爬虫优点 3.分布式爬虫需要解决的问题

- 第三百三十五节,web爬虫讲解2—Scrapy框架爬虫—豆瓣登录与利用打码接口实现自动识别验证码

第三百三十五节,web爬虫讲解2—Scrapy框架爬虫—豆瓣登录与利用打码接口实现自动识别验证码 打码接口文件 # -*- coding: cp936 -*- import sys import os ...

- 第三百三十四节,web爬虫讲解2—Scrapy框架爬虫—Scrapy爬取百度新闻,爬取Ajax动态生成的信息

第三百三十四节,web爬虫讲解2—Scrapy框架爬虫—Scrapy爬取百度新闻,爬取Ajax动态生成的信息 crapy爬取百度新闻,爬取Ajax动态生成的信息,抓取百度新闻首页的新闻rul地址 有多 ...

- 第三百三十三节,web爬虫讲解2—Scrapy框架爬虫—Scrapy模拟浏览器登录—获取Scrapy框架Cookies

第三百三十三节,web爬虫讲解2—Scrapy框架爬虫—Scrapy模拟浏览器登录 模拟浏览器登录 start_requests()方法,可以返回一个请求给爬虫的起始网站,这个返回的请求相当于star ...

- 第三百三十二节,web爬虫讲解2—Scrapy框架爬虫—Scrapy使用

第三百三十二节,web爬虫讲解2—Scrapy框架爬虫—Scrapy使用 xpath表达式 //x 表示向下查找n层指定标签,如://div 表示查找所有div标签 /x 表示向下查找一层指定的标签 ...

随机推荐

- Spring Bean相互依赖问题

如果是通过get,set 注入就不会有问题 如果是通过构造函数注入,SPRING就会报循环引用注入出错 循环依赖——在采用构造器注入的方式配置bean时,很有可能会产生循环依赖的情况.比如说,一个类A ...

- python面试题——网络编程和并发

1.简述 OSI 七层协议. 物理层(电信号.比特流) 基于电器特性发送高低电压(电信号) RJ45.IEEE802.3 数据链路层(数据帧) 定义了电信号的分组方式,分组方式后来形成了统一的标准,即 ...

- 一道Java集合框架题

问题:某班30个学生的学号为20070301-20070330,全部选修了Java程序设计课程,给出所有同学的成绩(可用随机数产生,范围60-100),请编写程序将本班各位同学的成绩按照从低到高排序打 ...

- ssh代理登录内网服务器

服务器 192.168.48.81 # client 192.168.48.82 # bastion 192.168.48.83 # private password方式 192.168.48.81 ...

- redis在Windows下以后台服务一键搭建哨兵(主从复制)模式(多机)

redis在Windows下以后台服务一键搭建哨兵(主从复制)模式(多机) 一.概述 此教程介绍如何在windows系统中多个服务器之间,布置redis哨兵模式(主从复制),同时要以后台服务的模式运行 ...

- 基于FPGA的VGA显示设计(一)

前言 FPGA主要运用于芯片验证.通信.图像处理.显示VGA接口的显示器是最基本的要求了. 原理 首先需要了解 : (1)VGA接口协议:VGA端子_维基百科 .VGA视频传输标准_百度 引脚1 RE ...

- 在VirtualBox ubuntu/linux虚拟机中挂载mount共享文件夹

referemce: https://www.smarthomebeginner.com/mount-virtualbox-shared-folder-on-ubuntu-linux/ 1) Virt ...

- avast从隔离区恢复后,仍无法打开被误杀文件的解决方案

从隔离区中手动恢复后,隔离区中被恢复的文件将不再展示. 此时,如果手动恢复的文件仍无法打开(图标此时也异常),请: 将avast禁用: 将avast启用. 然后尝试重新打开被误隔离并手动恢复的文件.

- C# 安装 Visual Studio IDE

官网: https://visualstudio.microsoft.com/zh-hans/ 下载社区版(免费的) .微软的软件安装都是很nice的.安装过程中选择需要的配置进行安装(比如.net桌 ...

- 页面文本超出后CSS实现隐藏的方法

text-overflow: ellipsis !important; white-space: nowrap !important; overflow: hidden !important; dis ...