k8s: installing k8s offline

1. Getting started

Goal

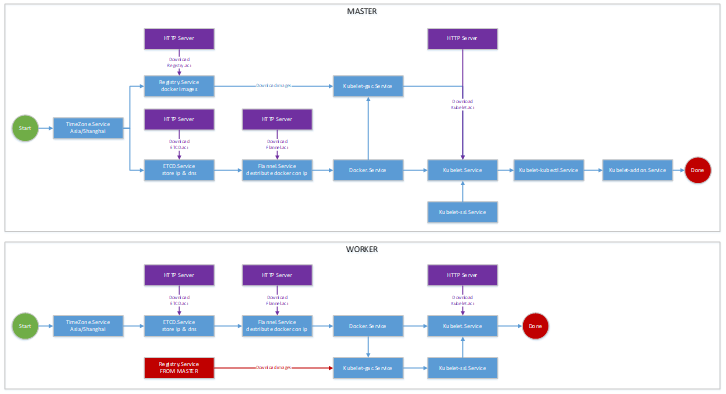

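CoreOS's ignition.json is highly configurable. By supplying an ignition.json that carries the cluster configuration at install time, you can open https://{{Master_IP}}:6443/ui as soon as the installation finishes and access the k8s cluster directly.

Background

The CoreOS website has a tutorial on installing CoreOS on bare metal and deploying k8s, CoreOS + Kubernetes Step By Step. Make sure you have read it first. The table below lists the services in the flow that is actually configured in ignition.json.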

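| Service | Description |
|---|---|
| HTTP Server | Serves the aci images and other files for download |
| TimeZone.Service | Sets the time zone to Shanghai |
| Registry.Service | Runs in an rkt container; the Docker image registry from which the whole cluster pulls the k8s images |
| ETCD.Service | Runs in an rkt container; cluster discovery service |
| Flannel.Service | Runs in an rkt container; sets up networking for the Docker containers |
| Docker.Service | |
| Kubelet.Service | Runs in an rkt container; kubelet, the base k8s service |
| kubelet-gac.Service | Pre-caches the gcr.io/google_containers images for the base k8s services |
| kubelet-ssl.Service | Generates the certificates for k8s |
| kubelet-kubectl.Service | Installs the kubectl tool on the k8s master node |
| kubelet-addon.Service | Installs dns, dashboard, heapster and other services into the k8s cluster |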

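2. Deployment

Prepare the HTTP server

| File | Description |
|---|---|
| ./registry-2.6.1.tgz | registry-2.6.1.aci |
| ./etcd-v3.2.0.tgz | etcd-v3.2.0.aci |
| ./flannel-v0.7.1.tgz | flannel-v0.7.1.aci |
| ./hyperkube-v1.7.3.tgz | hyperkube-v1.7.3.aci |
| ./registry-data-v1.7.3.tgz | ./registry/data (pause, hyperkube, dns, dashboard, heapster) |
| ./kubectl-v1.7.0.tgz | kubectl-v1.7.0 |

aci: the standard image format of rkt. CoreOS downloads the aci images to local disk at boot, so the services can run without contacting an image registry.

registry-data: the images needed to install k8s, downloaded in advance in an environment with Internet access and then exported from registry/data. Once a server has downloaded this archive and its registry service has started, it can serve the k8s images to the nodes in the cluster.

kubectl: the kubectl tool, currently version 1.7.0.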
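Before pointing the nodes at the HTTP server, it can help to confirm that all six archives from the table are present in the published directory. The following is a minimal sketch, not part of the original setup: the function name `check_k8s_files` and the idea of passing the directory as an argument are assumptions made for illustration.

```shell
#!/bin/bash
# Hypothetical helper (not from the original article): list which of the
# expected archives are missing from the directory served over HTTP.
# Returns 0 when everything is present, 1 otherwise.
check_k8s_files() {
  local dir="$1" missing=0 f
  for f in registry-2.6.1.tgz etcd-v3.2.0.tgz flannel-v0.7.1.tgz \
           hyperkube-v1.7.3.tgz registry-data-v1.7.3.tgz kubectl-v1.7.0.tgz; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f"
      missing=1
    fi
  done
  return "$missing"
}
```

The download scripts in the template default to http://192.168.3.100:8000/k8s, so one possible way to publish the files, assuming they sit in a directory named k8s, is to run `check_k8s_files ./k8s && python3 -m http.server 8000` from its parent directory on 192.168.3.100. Any other static file server would work equally well.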

Prepare a PEM-format root certificate

| File | Example |
|---|---|
| ./ca.key | -----BEGIN RSA PRIVATE KEY----- MIIEpAIBAAKCAQEA4dafEVttwUB7eXofPzmpdmR533+Imn0KuMg4XhtB0sdyjKFf … PaDJxByNh84ysmAfuadDgcXNrF/8fDupIA0wf2qGLDlttahr2DA7Ug== -----END RSA PRIVATE KEY----- |
| ./ca.pem | -----BEGIN CERTIFICATE----- MIIC+TCCAeECCQDjC0MVaVasEjANBgkqhkiG9w0BAQsFADASMRAwDgYDVQQDDAdr … RousxpNr1QvU6EwLupKYZc006snlVh4//r9QNjh5QRxRhl71XafR1S3pamBo -----END CERTIFICATE----- |

The two files can be generated with:

$ openssl genrsa -out ca.key 2048

$ openssl req -x509 -new -nodes -key ca.key -days 36500 -out ca.pem -subj "/CN=kube-ca"
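The master.yml template places the key and the certificate under an `inline: |` block, and in YAML every line of such a block has to carry the block's indentation. The sketch below shows one way to indent a PEM file before pasting it in. The function name `indent_pem` and the default width of 10 spaces are assumptions for illustration; the width depends on how deeply the `inline:` key is nested in your copy of the template.

```shell
#!/bin/bash
# Hypothetical helper (not from the original article): prefix every line of
# a PEM file with N spaces so it can be pasted under an "inline: |" block.
# The default of 10 is only an illustrative value.
indent_pem() {
  local file="$1" width="${2:-10}"
  # Build a padding string of $width spaces, then prepend it to each line.
  awk -v n="$width" 'BEGIN { pad = sprintf("%" n "s", "") } { print pad $0 }' "$file"
}
```

For example, `indent_pem ca.pem 10` prints the certificate with each line shifted right by ten spaces.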

Assign IPs to CoreOS

Match : eth0

DNS : 192.168.3.1

Gateway : 192.168.3.1

| Node | IP | HOSTNAME |
|---|---|---|
| Worker | 192.168.3.101 | systech01 |
| Master | 192.168.3.102 | systech02 |
| Worker | 192.168.3.103 | systech03 |
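All three nodes join the etcd cluster, and the `{{ ETCD_CLUSTER }}` parameter of master.yml lists each of them as `hostname=http://ip:2380`. A minimal sketch of deriving that string from the node table follows; the function name `build_etcd_cluster` and its `hostname=ip` argument format are assumptions for illustration.

```shell
#!/bin/bash
# Hypothetical helper (not from the original article): turn "hostname=ip"
# pairs into the comma-separated list used for etcd's --initial-cluster.
build_etcd_cluster() {
  local out="" pair name ip
  for pair in "$@"; do
    name="${pair%%=*}"   # text before the first "="
    ip="${pair#*=}"      # text after the first "="
    # Append with a comma only when the list is not empty yet.
    out="${out:+$out,}${name}=http://${ip}:2380"
  done
  printf '%s\n' "$out"
}
```

Calling `build_etcd_cluster systech01=192.168.3.101 systech02=192.168.3.102 systech03=192.168.3.103` prints the same value that appears as the `{{ ETCD_CLUSTER }}` example in the template parameters.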

master.yml template

| Parameter | Example |
|---|---|
| {{ MASTER_HOSTNAME }} | systech02 |
| {{ MASTER_IP }} | 192.168.3.102 |
| {{ ETCD_CLUSTER }} | systech01=http://192.168.3.101:2380,systech02=http://192.168.3.102:2380,systech03=http://192.168.3.103:2380 |
| {{ CA_KEY }} | -----BEGIN RSA PRIVATE KEY----- MIIEpAIBAAKCAQEA4dafEVttwUB7eXofPzmpdmR533+Imn0KuMg4XhtB0sdyjKFf … PaDJxByNh84ysmAfuadDgcXNrF/8fDupIA0wf2qGLDlttahr2DA7Ug== -----END RSA PRIVATE KEY----- |
| {{ CA_PEM }} | -----BEGIN CERTIFICATE----- MIIC+TCCAeECCQDjC0MVaVasEjANBgkqhkiG9w0BAQsFADASMRAwDgYDVQQDDAdr … RousxpNr1QvU6EwLupKYZc006snlVh4//r9QNjh5QRxRhl71XafR1S3pamBo -----END CERTIFICATE----- |
| {{ NETWORK_MATCH }} | eth0 |
| {{ NETWORK_DNS }} | 192.168.3.1 |
| {{ NETWORK_GATEWAY }} | 192.168.3.1 |
| {{ HTTP_SERVER_LOCAL }} | http://192.168.3.100:8000/k8s (the default that the download scripts fall back to) |
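The article does not say which tool fills in the `{{ ... }}` placeholders, so the following is only a sketch of one possible approach using sed. The function name `render_template` and its `KEY=value` argument format are assumptions. It handles single-line values only; the multi-line `{{ CA_KEY }}` and `{{ CA_PEM }}` values would need to be pasted in by hand or handled by a real template engine.

```shell
#!/bin/bash
# Hypothetical helper (not from the original article): replace every
# "{{ KEY }}" placeholder in a template with a single-line value.
# Usage: render_template master.yml KEY=value [KEY=value ...]
render_template() {
  local content kv key val
  content=$(cat "$1")
  shift
  for kv in "$@"; do
    key="${kv%%=*}"   # text before the first "="
    val="${kv#*=}"    # text after the first "=" (may itself contain "=")
    # Escape the characters that are special in a sed replacement when "|"
    # is the delimiter: backslash, ampersand and the delimiter itself.
    val=$(printf '%s' "$val" | sed 's/[\\&|]/\\&/g')
    content=$(printf '%s\n' "$content" | sed "s|{{ $key }}|$val|g")
  done
  printf '%s\n' "$content"
}
```

A call such as `render_template master.yml MASTER_HOSTNAME=systech02 MASTER_IP=192.168.3.102 NETWORK_MATCH=eth0 > master.rendered.yml` would produce a file with those three parameters filled in. The rendered Container Linux Config still has to be converted to ignition.json, for example with the CoreOS Config Transpiler; check its options against the version you have installed.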

passwd:

users:

- name: "root"

password_hash: "$1$maTXmv6V$4UuGlRDpBZtipAhlPZ2/J0"

update:

group: "stable"

server: "https://public.update.core-os.net/v1/update/"

locksmith:

reboot_strategy: "etcd-lock"

window_start: "Sun 1:00"

window_length: "2h"

storage:

files:

- filesystem: "root"

path: "/etc/hostname"

mode: 0644

contents:

inline: {{ MASTER_HOSTNAME }}

- filesystem: "root"

path: "/etc/hosts"

mode: 0644

contents:

inline: |

127.0.0.1 localhost

127.0.0.1 {{ MASTER_HOSTNAME }}

{{ MASTER_IP }} reg.local

- filesystem: "root"

path: "/etc/kubernetes/scripts/registry.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"
HTTP_SERVER="${HTTP_SERVER:-http://192.168.3.100:8000/k8s}"

mkdir -p "$KUBELET_SESSION"

cd /etc/kubernetes

# Skip everything if an earlier boot already completed this step.
if [[ -e "$KUBELET_SESSION/registry.session" ]]; then
  exit 0
fi

# Download and unpack the registry aci and the pre-exported registry data.
mkdir -p /etc/kubernetes/downloads
curl "$HTTP_SERVER/registry-2.6.1.tgz" > /etc/kubernetes/downloads/registry-2.6.1.tgz
curl "$HTTP_SERVER/registry-data-v1.7.3.tgz" > /etc/kubernetes/downloads/registry-data-v1.7.3.tgz
cd /etc/kubernetes/downloads && tar -xzf /etc/kubernetes/downloads/registry-2.6.1.tgz
cd /etc/kubernetes/downloads && tar -xzf /etc/kubernetes/downloads/registry-data-v1.7.3.tgz
mkdir -p /home/docker/registry
mv -n /etc/kubernetes/downloads/data /home/docker/registry/data

touch "$KUBELET_SESSION/registry.session"

- filesystem: "root"

path: "/etc/kubernetes/scripts/kubelet-gac.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"

mkdir -p "$KUBELET_SESSION"

cd /etc/kubernetes

# Skip everything if an earlier boot already completed this step.
if [[ -e "$KUBELET_SESSION/kubelet-gac.session" ]]; then
  exit 0
fi

# Pull the pause image from the local registry and retag it under its gcr.io name.
docker pull reg.local:5000/k8s/pause-amd64:3.0
docker tag reg.local:5000/k8s/pause-amd64:3.0 gcr.io/google_containers/pause-amd64:3.0
docker rmi reg.local:5000/k8s/pause-amd64:3.0

touch "$KUBELET_SESSION/kubelet-gac.session"

- filesystem: "root"

path: "/etc/kubernetes/scripts/etcd3.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"
HTTP_SERVER="${HTTP_SERVER:-http://192.168.3.100:8000/k8s}"

mkdir -p "$KUBELET_SESSION"

cd /etc/kubernetes

# Skip everything if an earlier boot already completed this step.
if [[ -e "$KUBELET_SESSION/etcd3.session" ]]; then
  exit 0
fi

# Download and unpack the etcd aci.
mkdir -p /etc/kubernetes/downloads
curl "$HTTP_SERVER/etcd-v3.2.0.tgz" > /etc/kubernetes/downloads/etcd-v3.2.0.tgz
cd /etc/kubernetes/downloads && tar -xzf /etc/kubernetes/downloads/etcd-v3.2.0.tgz

touch "$KUBELET_SESSION/etcd3.session"

- filesystem: "root"

path: "/etc/kubernetes/scripts/flannel.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"
HTTP_SERVER="${HTTP_SERVER:-http://192.168.3.100:8000/k8s}"

mkdir -p "$KUBELET_SESSION"

cd /etc/kubernetes

# Skip everything if an earlier boot already completed this step.
if [[ -e "$KUBELET_SESSION/flannel.session" ]]; then
  exit 0
fi

# Download and unpack the flannel aci.
mkdir -p /etc/kubernetes/downloads
curl "$HTTP_SERVER/flannel-v0.7.1.tgz" > /etc/kubernetes/downloads/flannel-v0.7.1.tgz
cd /etc/kubernetes/downloads && tar -xzf /etc/kubernetes/downloads/flannel-v0.7.1.tgz

touch "$KUBELET_SESSION/flannel.session"

- filesystem: "root"

path: "/etc/kubernetes/cni/net.d/10-flannel.conf"

mode: 0755

contents:

inline: |

{

"name": "podnet",

"type": "flannel",

"delegate": {

"isDefaultGateway": true

}

}

- filesystem: "root"

path: "/etc/kubernetes/scripts/kubelet.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"
HTTP_SERVER="${HTTP_SERVER:-http://192.168.3.100:8000/k8s}"

mkdir -p "$KUBELET_SESSION"

cd /etc/kubernetes

# Skip everything if an earlier boot already completed this step.
if [[ -e "$KUBELET_SESSION/kubelet.session" ]]; then
  exit 0
fi

# Download and unpack the hyperkube aci used to run kubelet.
mkdir -p /etc/kubernetes/downloads
curl "$HTTP_SERVER/hyperkube-v1.7.3.tgz" > /etc/kubernetes/downloads/hyperkube-v1.7.3.tgz
cd /etc/kubernetes/downloads && tar -xzf /etc/kubernetes/downloads/hyperkube-v1.7.3.tgz

touch "$KUBELET_SESSION/kubelet.session"

- filesystem: "root"

path: "/etc/kubernetes/manifests/kube-apiserver.yaml"

mode: 0644

contents:

inline: |

apiVersion: v1

kind: Pod

metadata:

name: kube-apiserver

namespace: kube-system

spec:

hostNetwork: true

containers:

- name: kube-apiserver

image: reg.local:5000/k8s/hyperkube:v1.7.3

command:

- /hyperkube

- apiserver

- --bind-address=0.0.0.0

- --etcd-servers=http://{{ MASTER_IP }}:2379

- --allow-privileged=true

- --service-cluster-ip-range=10.3.0.0/24

- --service-node-port-range=0-32767

- --secure-port=6443

- --advertise-address={{ MASTER_IP }}

- --admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota

- --authorization-mode=RBAC

- --tls-cert-file=/etc/kubernetes/ssl/apiserver.pem

- --tls-private-key-file=/etc/kubernetes/ssl/apiserver.key

- --client-ca-file=/etc/kubernetes/ssl/ca.pem

- --service-account-key-file=/etc/kubernetes/ssl/apiserver.key

- --basic-auth-file=/etc/kubernetes/ssl/admin.csv

- --anonymous-auth=false

- --runtime-config=extensions/v1beta1=true,extensions/v1beta1/networkpolicies=true,rbac.authorization.k8s.io/v1beta1=true

ports:

- containerPort: 6443

hostPort: 6443

name: https

- containerPort: 8080

hostPort: 8080

name: local

volumeMounts:

- mountPath: /etc/kubernetes/ssl

name: ssl-certs-kubernetes

readOnly: true

- mountPath: /etc/ssl/certs

name: ssl-certs-host

readOnly: true

volumes:

- hostPath:

path: /etc/kubernetes/ssl

name: ssl-certs-kubernetes

- hostPath:

path: /usr/share/ca-certificates

name: ssl-certs-host

- filesystem: "root"

path: "/etc/kubernetes/manifests/kube-proxy.yaml"

mode: 0644

contents:

inline: |

---

apiVersion: v1

kind: Pod

metadata:

name: kube-proxy

namespace: kube-system

spec:

hostNetwork: true

containers:

- name: kube-proxy

image: reg.local:5000/k8s/hyperkube:v1.7.3

command:

- /hyperkube

- proxy

- --master=http://127.0.0.1:8080

securityContext:

privileged: true

volumeMounts:

- mountPath: /etc/ssl/certs

name: ssl-certs-host

readOnly: true

volumes:

- hostPath:

path: /usr/share/ca-certificates

name: ssl-certs-host

- filesystem: "root"

path: "/etc/kubernetes/manifests/kube-controller-manager.yaml"

mode: 0644

contents:

inline: |

apiVersion: v1

kind: Pod

metadata:

name: kube-controller-manager

namespace: kube-system

spec:

hostNetwork: true

containers:

- name: kube-controller-manager

image: reg.local:5000/k8s/hyperkube:v1.7.3

command:

- /hyperkube

- controller-manager

- --master=http://127.0.0.1:8080

- --leader-elect=true

- --service-account-private-key-file=/etc/kubernetes/ssl/apiserver.key

- --root-ca-file=/etc/kubernetes/ssl/ca.pem

resources:

requests:

cpu: 200m

livenessProbe:

httpGet:

host: 127.0.0.1

path: /healthz

port: 10252

initialDelaySeconds: 15

timeoutSeconds: 15

volumeMounts:

- mountPath: /etc/kubernetes/ssl

name: ssl-certs-kubernetes

readOnly: true

- mountPath: /etc/ssl/certs

name: ssl-certs-host

readOnly: true

volumes:

- hostPath:

path: /etc/kubernetes/ssl

name: ssl-certs-kubernetes

- hostPath:

path: /usr/share/ca-certificates

name: ssl-certs-host

- filesystem: "root"

path: "/etc/kubernetes/manifests/kube-scheduler.yaml"

mode: 0644

contents:

inline: |

apiVersion: v1

kind: Pod

metadata:

name: kube-scheduler

namespace: kube-system

spec:

hostNetwork: true

containers:

- name: kube-scheduler

image: reg.local:5000/k8s/hyperkube:v1.7.3

command:

- /hyperkube

- scheduler

- --master=http://127.0.0.1:8080

- --leader-elect=true

resources:

requests:

cpu: 100m

livenessProbe:

httpGet:

host: 127.0.0.1

path: /healthz

port: 10251

initialDelaySeconds: 15

timeoutSeconds: 15

- filesystem: "root"

path: "/etc/kubernetes/ssl/admin.csv"

mode: 0644

contents:

inline: |

58772015,admin,admin

- filesystem: "root"

path: "/etc/kubernetes/ssl/ca.key"

mode: 0644

contents:

inline: |

{{ CA_KEY }}

- filesystem: "root"

path: "/etc/kubernetes/ssl/ca.pem"

mode: 0644

contents:

inline: |

{{ CA_PEM }}

- filesystem: "root"

path: "/etc/kubernetes/ssl/openssl-admin.cnf"

mode: 0644

contents:

inline: |

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

IP.1 = {{ MASTER_IP }}

- filesystem: "root"

path: "/etc/kubernetes/ssl/openssl-apiserver.cnf"

mode: 0644

contents:

inline: |

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1 = kubernetes

DNS.2 = kubernetes.default

DNS.3 = kubernetes.default.svc

DNS.4 = kubernetes.default.svc.cluster.local

IP.1 = 10.3.0.1

IP.2 = {{ MASTER_IP }}

- filesystem: "root"

path: "/etc/kubernetes/scripts/kubelet-ssl.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"

mkdir -p "$KUBELET_SESSION"

cd /etc/kubernetes/ssl

# Skip everything if an earlier boot already completed this step.
if [[ -e "$KUBELET_SESSION/kubelet-ssl.session" ]]; then
  exit 0
fi

# API server key and certificate, signed by the cluster CA.
openssl genrsa -out apiserver.key 2048
openssl req -new -key apiserver.key -out apiserver.csr -subj "/CN=apiserver/C=CN/ST=BeiJing/L=Beijing/O=k8s/OU=System" -config /etc/kubernetes/ssl/openssl-apiserver.cnf
openssl x509 -req -in apiserver.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out apiserver.pem -days 365 -extensions v3_req -extfile /etc/kubernetes/ssl/openssl-apiserver.cnf

# Admin client key and certificate (O=system:masters), signed by the same CA.
openssl genrsa -out admin.key 2048
openssl req -new -key admin.key -out admin.csr -subj "/CN=admin/C=CN/ST=BeiJing/L=Beijing/O=system:masters/OU=System"
openssl x509 -req -in admin.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out admin.pem -days 365

touch "$KUBELET_SESSION/kubelet-ssl.session"

- filesystem: "root"

path: "/etc/kubernetes/scripts/kubelet-kubectl.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"
HTTP_SERVER="${HTTP_SERVER:-http://192.168.3.100:8000/k8s}"

mkdir -p "$KUBELET_SESSION"

cd /etc/kubernetes

# Skip everything if an earlier boot already completed this step.
if [[ -e "$KUBELET_SESSION/kubelet-kubectl.session" ]]; then
  exit 0
fi

mkdir -p /opt/bin
rm -rf /opt/bin/kubectl-v1.7.0
rm -rf /opt/bin/kubectl

# Download kubectl and link it as /opt/bin/kubectl.
curl "$HTTP_SERVER/kubectl-v1.7.0.tgz" > /opt/bin/kubectl-v1.7.0.tgz
cd /opt/bin && tar -xzf /opt/bin/kubectl-v1.7.0.tgz
rm -rf /opt/bin/kubectl-v1.7.0.tgz
chmod 0744 /opt/bin/kubectl-v1.7.0
ln -s /opt/bin/kubectl-v1.7.0 /opt/bin/kubectl

MASTER_HOST={{ MASTER_IP }}
CA_CERT=/etc/kubernetes/ssl/ca.pem
ADMIN_KEY=/etc/kubernetes/ssl/admin.key
ADMIN_CERT=/etc/kubernetes/ssl/admin.pem

# Write a kubeconfig that talks to the API server with the admin client certificate.
/opt/bin/kubectl config set-cluster kubernetes --server=https://$MASTER_HOST:6443 --certificate-authority=$CA_CERT --embed-certs=true
/opt/bin/kubectl config set-credentials admin --certificate-authority=$CA_CERT --client-key=$ADMIN_KEY --client-certificate=$ADMIN_CERT --embed-certs=true
/opt/bin/kubectl config set-context kubernetes --cluster=kubernetes --user=admin
/opt/bin/kubectl config use-context kubernetes

touch "$KUBELET_SESSION/kubelet-kubectl.session"

- filesystem: "root"

path: "/etc/kubernetes/addons/dns.yml"

mode: 0644

contents:

inline: |

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-dns

namespace: kube-system

data:

stubDomains: |

{"dex.ispacesys.cn":["{{ MASTER_IP }}"]}

---

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

kubernetes.io/name: "KubeDNS"

spec:

selector:

k8s-app: kube-dns

clusterIP: 10.3.0.10

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: kube-dns

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1alpha1

metadata:

name: k8s-kube-dns

roleRef:

kind: ClusterRole

name: system:kube-dns

apiGroup: rbac.authorization.k8s.io

subjects:

- kind: ServiceAccount

name: kube-dns

namespace: kube-system

---

apiVersion: v1

kind: ReplicationController

metadata:

name: kube-dns

namespace: kube-system

labels:

k8s-app: kube-dns

version: "1.14.4"

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

replicas: 1

selector:

k8s-app: kube-dns

template:

metadata:

labels:

k8s-app: kube-dns

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ''

scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'

spec:

volumes:

- name: kube-dns-config

configMap:

name: kube-dns

optional: true

containers:

- name: kubedns

image: reg.local:5000/k8s/k8s-dns-kube-dns-amd64:1.14.4

resources:

limits:

memory: 170Mi

requests:

cpu: 100m

memory: 70Mi

livenessProbe:

httpGet:

path: /healthcheck/kubedns

port: 10054

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

readinessProbe:

httpGet:

path: /readiness

port: 8081

scheme: HTTP

initialDelaySeconds: 3

timeoutSeconds: 5

args:

- --domain=cluster.local

- --dns-port=10053

- --config-dir=/kube-dns-config

- --v=2

env:

- name: PROMETHEUS_PORT

value: "10055"

ports:

- containerPort: 10053

name: dns-local

protocol: UDP

- containerPort: 10053

name: dns-tcp-local

protocol: TCP

- containerPort: 10055

name: metrics

protocol: TCP

volumeMounts:

- name: kube-dns-config

mountPath: /kube-dns-config

- name: dnsmasq

image: reg.local:5000/k8s/k8s-dns-dnsmasq-nanny-amd64:1.14.4

livenessProbe:

httpGet:

path: /healthcheck/dnsmasq

port: 10054

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

args:

- -v=2

- -logtostderr

- -configDir=/etc/k8s/dns/dnsmasq-nanny

- -restartDnsmasq=true

- --

- -k

- --cache-size=1000

- --log-facility=-

- --server=/cluster.local/127.0.0.1#10053

- --server=/in-addr.arpa/127.0.0.1#10053

- --server=/ip6.arpa/127.0.0.1#10053

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

resources:

requests:

cpu: 150m

memory: 20Mi

volumeMounts:

- name: kube-dns-config

mountPath: /etc/k8s/dns/dnsmasq-nanny

- name: sidecar

image: reg.local:5000/k8s/k8s-dns-sidecar-amd64:1.14.4

livenessProbe:

httpGet:

path: /metrics

port: 10054

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

args:

- --v=2

- --logtostderr

- --probe=kubedns,127.0.0.1:10053,kubernetes.default.svc.cluster.local,5,A

- --probe=dnsmasq,127.0.0.1:53,kubernetes.default.svc.cluster.local,5,A

ports:

- containerPort: 10054

name: metrics

protocol: TCP

resources:

requests:

memory: 20Mi

cpu: 10m

dnsPolicy: Default

serviceAccountName: kube-dns

- filesystem: "root"

path: "/etc/kubernetes/addons/dashboard.yml"

mode: 0644

contents:

inline: |

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: dashboard

namespace: kube-system

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1alpha1

metadata:

name: dashboard-extended

roleRef:

kind: ClusterRole

name: cluster-admin

apiGroup: rbac.authorization.k8s.io

subjects:

- kind: ServiceAccount

name: dashboard

namespace: kube-system

---

apiVersion: v1

kind: Service

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

spec:

selector:

k8s-app: kubernetes-dashboard

ports:

- port: 80

targetPort: 9090

---

apiVersion: v1

kind: ReplicationController

metadata:

name: kubernetes-dashboard

namespace: kube-system

labels:

k8s-app: kubernetes-dashboard

version: "v1.6.1"

kubernetes.io/cluster-service: "true"

spec:

replicas: 1

selector:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

version: "v1.6.1"

kubernetes.io/cluster-service: "true"

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ''

scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'

spec:

serviceAccountName: dashboard

nodeSelector:

kubernetes.io/hostname: {{ MASTER_IP }}

containers:

- name: kubernetes-dashboard

image: reg.local:5000/k8s/kubernetes-dashboard-amd64:v1.6.1

resources:

limits:

cpu: 100m

memory: 50Mi

requests:

cpu: 100m

memory: 50Mi

ports:

- containerPort: 9090

livenessProbe:

httpGet:

path: /

port: 9090

initialDelaySeconds: 30

timeoutSeconds: 30

- filesystem: "root"

path: "/etc/kubernetes/addons/heapster.yml"

mode: 0644

contents:

inline: |

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: heapster

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: heapster

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:heapster

subjects:

- kind: ServiceAccount

name: heapster

namespace: kube-system

---

kind: Service

apiVersion: v1

metadata:

name: heapster

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "Heapster"

spec:

ports:

- port: 80

targetPort: 8082

selector:

k8s-app: heapster

---

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

name: heapster

namespace: kube-system

labels:

k8s-app: heapster

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

version: v1.3.0

spec:

replicas: 1

selector:

matchLabels:

k8s-app: heapster

version: v1.3.0

template:

metadata:

labels:

k8s-app: heapster

version: v1.3.0

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ''

spec:

serviceAccountName: heapster

containers:

- image: reg.local:5000/k8s/heapster-amd64:v1.3.0

name: heapster

livenessProbe:

httpGet:

path: /healthz

port: 8082

scheme: HTTP

initialDelaySeconds: 180

timeoutSeconds: 5

command:

- /heapster

- --source=kubernetes.summary_api:''

- --sink=influxdb:http://monitoring-influxdb:8086

- image: reg.local:5000/k8s/heapster-amd64:v1.3.0

name: eventer

command:

- /eventer

- --source=kubernetes:''

- --sink=influxdb:http://monitoring-influxdb:8086

- image: reg.local:5000/k8s/addon-resizer:1.7

name: heapster-nanny

resources:

limits:

cpu: 50m

memory: 93160Ki

requests:

cpu: 50m

memory: 93160Ki

env:

- name: MY_POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: MY_POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

command:

- /pod_nanny

- --cpu=80m

- --extra-cpu=0.5m

- --memory=140Mi

- --extra-memory=4Mi

- --threshold=5

- --deployment=heapster-v1.3.0

- --container=heapster

- --poll-period=300000

- --estimator=exponential

- image: reg.local:5000/k8s/addon-resizer:1.7

name: eventer-nanny

resources:

limits:

cpu: 50m

memory: 93160Ki

requests:

cpu: 50m

memory: 93160Ki

env:

- name: MY_POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: MY_POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

command:

- /pod_nanny

- --cpu=100m

- --extra-cpu=0m

- --memory=190Mi

- --extra-memory=500Ki

- --threshold=5

- --deployment=heapster-v1.3.0

- --container=eventer

- --poll-period=300000

- --estimator=exponential

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"

- filesystem: "root"

path: "/etc/kubernetes/addons/influxdb-grafana.yml"

mode: 0644

contents:

inline: |

---

apiVersion: v1

kind: Service

metadata:

name: monitoring-grafana

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "Grafana"

spec:

# On production clusters, consider setting up auth for grafana, and

# exposing Grafana either using a LoadBalancer or a public IP.

# type: LoadBalancer

ports:

- port: 80

targetPort: 3000

selector:

k8s-app: influxGrafana

---

apiVersion: v1

kind: Service

metadata:

name: monitoring-influxdb

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "InfluxDB"

spec:

ports:

- name: http

port: 8083

targetPort: 8083

- name: api

port: 8086

targetPort: 8086

selector:

k8s-app: influxGrafana

---

apiVersion: v1

kind: ReplicationController

metadata:

name: monitoring-influxdb-grafana

namespace: kube-system

labels:

k8s-app: influxGrafana

version: v4.0.2

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

replicas: 1

selector:

k8s-app: influxGrafana

version: v4.0.2

template:

metadata:

labels:

k8s-app: influxGrafana

version: v4.0.2

kubernetes.io/cluster-service: "true"

spec:

containers:

- image: reg.local:5000/k8s/heapster-influxdb-amd64:v1.1.1

name: influxdb

resources:

# keep request = limit to keep this container in guaranteed class

limits:

cpu: 100m

memory: 500Mi

requests:

cpu: 100m

memory: 500Mi

ports:

- containerPort: 8083

- containerPort: 8086

volumeMounts:

- name: influxdb-persistent-storage

mountPath: /data

- image: reg.local:5000/k8s/heapster-grafana-amd64:v4.0.2

name: grafana

resources:

# keep request = limit to keep this container in guaranteed class

limits:

cpu: 100m

memory: 100Mi

requests:

cpu: 100m

memory: 100Mi

env:

# This variable is required to setup templates in Grafana.

- name: INFLUXDB_SERVICE_URL

value: http://monitoring-influxdb:8086

# The following env variables are required to make Grafana accessible via

# the kubernetes api-server proxy. On production clusters, we recommend

# removing these env variables, setup auth for grafana, and expose the grafana

# service using a LoadBalancer or a public IP.

- name: GF_AUTH_BASIC_ENABLED

value: "false"

- name: GF_AUTH_ANONYMOUS_ENABLED

value: "true"

- name: GF_AUTH_ANONYMOUS_ORG_ROLE

value: Admin

- name: GF_SERVER_ROOT_URL

value: /api/v1/proxy/namespaces/kube-system/services/monitoring-grafana/

volumeMounts:

- name: grafana-persistent-storage

mountPath: /var

volumes:

- name: influxdb-persistent-storage

emptyDir: {}

- name: grafana-persistent-storage

emptyDir: {}

- filesystem: "root"

path: "/etc/kubernetes/addons/rbac-admin.yml"

mode: 0644

contents:

inline: |

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: k8s-admin

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: admin

- filesystem: "root"

path: "/etc/kubernetes/addons/rbac-mengkzhaoyun.yml"

mode: 0644

contents:

inline: |

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: k8s-mengkzhaoyun

namespace: kube-system

roleRef:

kind: ClusterRole

name: admin

apiGroup: rbac.authorization.k8s.io

subjects:

- kind: User

name: mengkzhaoyun@gmail.com

apiGroup: rbac.authorization.k8s.io

- filesystem: "root"

path: "/etc/kubernetes/scripts/kubelet-addon.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"

mkdir -p "$KUBELET_SESSION"

cd /etc/kubernetes

# Skip everything if an earlier boot already completed this step.
if [[ -e "$KUBELET_SESSION/kubelet-addon.session" ]]; then
  exit 0
fi

# Fails (and so aborts under set -e) until the API server is reachable.
/opt/bin/kubectl get pods --namespace=kube-system

/opt/bin/kubectl create -f /etc/kubernetes/addons/rbac-admin.yml
/opt/bin/kubectl create -f /etc/kubernetes/addons/rbac-mengkzhaoyun.yml

/opt/bin/kubectl create -f /etc/kubernetes/addons/dns.yml
/opt/bin/kubectl create -f /etc/kubernetes/addons/dashboard.yml
/opt/bin/kubectl create -f /etc/kubernetes/addons/heapster.yml
/opt/bin/kubectl create -f /etc/kubernetes/addons/influxdb-grafana.yml

touch "$KUBELET_SESSION/kubelet-addon.session"

networkd:

units:

- name: 00-static.network

contents: |

[Match]

Name={{ NETWORK_MATCH }}

[Network]

DNS={{ NETWORK_DNS }}

Address={{ MASTER_IP }}/24

Gateway={{ NETWORK_GATEWAY }}

DHCP=no

systemd:

units:

- name: "settimezone.service"

enable: true

contents: |

[Unit]

Description=time zone Asia/Shanghai

[Service]

ExecStart=/usr/bin/timedatectl set-timezone Asia/Shanghai

RemainAfterExit=yes

Type=oneshot

[Install]

WantedBy=multi-user.target

- name: "download-registry.service"

enable: true

contents: |

[Unit]

Description=download registry aci and data (HTTP)

Documentation=https://github.com/coreos/registry

[Service]

Type=oneshot

Environment=HTTP_SERVER={{ HTTP_SERVER_LOCAL }}

ExecStart=/etc/kubernetes/scripts/registry.sh

[Install]

WantedBy=multi-user.target

- name: "registry.service"

enable: true

contents: |

[Unit]

Description=registry (Docker Hub)

Documentation=https://github.com/coreos/registry

After=download-registry.service

[Service]
Environment=PATH=/opt/bin/:/usr/bin/:/usr/sbin:$PATH

ExecStartPre=/usr/bin/mkdir -p /home/docker/registry/auth
ExecStartPre=/usr/bin/mkdir -p /home/docker/registry/data
ExecStartPre=/usr/bin/mkdir --parents /var/lib/coreos
ExecStartPre=-/usr/bin/rkt rm --uuid-file=/var/lib/coreos/registry-pod.uuid

ExecStart=/usr/bin/rkt run \
  --insecure-options=image \
  --uuid-file-save=/var/lib/coreos/registry-pod.uuid \
  --port=5000-tcp:5000 \
  --volume auth,kind=host,source=/home/docker/registry/auth \
  --volume data,kind=host,source=/home/docker/registry/data \
  --mount volume=auth,target=/auth \
  --mount volume=data,target=/var/lib/registry \
  /etc/kubernetes/downloads/registry-2.6.1.aci \
  --name=registry

ExecStop=-/usr/bin/rkt stop --uuid-file=/var/lib/coreos/registry-pod.uuid

Restart=always
RestartSec=10
TimeoutSec=infinity

[Install]

WantedBy=multi-user.target

- name: "etcd2.service"

enable: false

- name: "etcd-member.service"

enable: false

- name: "download-etcd3.service"

enable: true

contents: |

[Unit]

Description=download etcd3 aci (HTTP)

Documentation=https://github.com/coreos/registry

[Service]

Type=oneshot

Environment=HTTP_SERVER={{ HTTP_SERVER_LOCAL }}

ExecStart=/etc/kubernetes/scripts/etcd3.sh

[Install]

WantedBy=multi-user.target

- name: "etcd3.service"

enable: true

contents: |

[Unit]

Description=etcd (System Application Container)

Documentation=https://github.com/coreos/etcd

Wants=network.target

Conflicts=etcd.service

Conflicts=etcd2.service

Conflicts=etcd-member.service

After=download-etcd3.service

[Service]
Type=notify
RestartSec=10s
LimitNOFILE=40000

ExecStartPre=/usr/bin/mkdir --parents /var/lib/coreos
ExecStartPre=-/usr/bin/rkt rm --uuid-file=/var/lib/coreos/etcd3-pod.uuid

Environment="ETCD_IMAGE=/etc/kubernetes/downloads/etcd-v3.2.0.aci"
Environment="ETCD_USER=etcd"
Environment="ETCD_DATA_DIR=/var/lib/etcd3"
Environment="RKT_GLOBAL_ARGS=--insecure-options=image"
Environment="RKT_RUN_ARGS=--uuid-file-save=/var/lib/coreos/etcd3-pod.uuid"
Environment="ETCD_IMAGE_ARGS=--name=etcd"

ExecStart=/usr/lib/coreos/etcd-wrapper \
  --name={{ MASTER_HOSTNAME }} \
  --initial-cluster-token=spacesystech.com \
  --initial-cluster={{ ETCD_CLUSTER }} \
  --initial-cluster-state=new \
  --advertise-client-urls=http://{{ MASTER_IP }}:2379 \
  --initial-advertise-peer-urls=http://{{ MASTER_IP }}:2380 \
  --listen-client-urls=http://{{ MASTER_IP }}:2379,http://127.0.0.1:2379 \
  --listen-peer-urls=http://{{ MASTER_IP }}:2380

ExecStop=-/usr/bin/rkt stop --uuid-file=/var/lib/coreos/etcd3-pod.uuid

[Install]
WantedBy=multi-user.target

- name: "download-flannel.service"

enable: true

contents: |

[Unit]

Description=download flannel aci (HTTP)

Documentation=https://github.com/coreos/registry

[Service]

Type=oneshot

Environment=HTTP_SERVER={{ HTTP_SERVER_LOCAL }}

ExecStart=/etc/kubernetes/scripts/flannel.sh

[Install]

WantedBy=multi-user.target

- name: "flannel-docker-opts.service"

enable: true

dropins:

- name: 10-image.conf

contents: |

[Unit]

After=download-flannel.service

[Service]

Environment="FLANNEL_IMAGE=/etc/kubernetes/downloads/flannel-v0.7.1.aci"

Environment="RKT_GLOBAL_ARGS=--insecure-options=image"

- name: "flanneld.service"

enable: true

dropins:

- name: 10-image.conf

contents: |

[Unit]

After=etcd3.service download-flannel.service

[Service]

ExecStartPre=/usr/bin/etcdctl set /spacesystech.com/network2/config '{ "Network": "10.2.0.0/16","Backend": {"Type":"vxlan"} }'

Environment="FLANNELD_IFACE={{ MASTER_IP }}"

Environment="FLANNELD_ETCD_ENDPOINTS=http://{{ MASTER_IP }}:2379"

Environment="FLANNELD_ETCD_PREFIX=/spacesystech.com/network2"

Environment="FLANNEL_IMAGE=/etc/kubernetes/downloads/flannel-v0.7.1.aci"

Environment="RKT_GLOBAL_ARGS=--insecure-options=image"

Environment="FLANNEL_IMAGE_ARGS=--name=flannel"

- name: "docker.service"

enable: true

dropins:

- name: 40-flannel.conf

contents: |

[Unit]

Requires=flanneld.service

After=flanneld.service

[Service]

Environment=DOCKER_OPT_BIP=""

Environment=DOCKER_OPT_IPMASQ=""

- name: 50-insecure-registry.conf

contents: |

[Service]

Environment=DOCKER_OPTS='--insecure-registry="reg.local:5000" --insecure-registry="registry.ispacesys.cn:5000"'

- name: "kubelet-ssl.service"

enable: true

contents: |

[Unit]

Description=kubelet-ssl

Documentation=https://kubernetes.io

[Service]

Type=oneshot

Environment=KUBELET_SESSION=/etc/kubernetes/session

ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/session

ExecStart=/etc/kubernetes/scripts/kubelet-ssl.sh

[Install]

WantedBy=multi-user.target

- name: "kubelet-gac.service"

enable: true

contents: |

[Unit]

Description=kubelet-gac

Documentation=https://kubernetes.io

Requires=docker.service

After=docker.service

[Service]

Type=oneshot

Environment=KUBELET_SESSION=/etc/kubernetes/session

ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/session

ExecStart=/etc/kubernetes/scripts/kubelet-gac.sh

[Install]

WantedBy=multi-user.target

- name: "download-kubelet.service"

enable: true

contents: |

[Unit]

Description=download kubelet aci (HTTP)

Documentation=https://github.com/coreos/registry

[Service]

Type=oneshot

Environment=HTTP_SERVER={{ HTTP_SERVER_LOCAL }}

ExecStart=/etc/kubernetes/scripts/kubelet.sh

[Install]

WantedBy=multi-user.target

- name: "kubelet.service"

enable: true

contents: |

[Unit]

Description=kubelet

Documentation=https://kubernetes.io

After=kubelet-ssl.service kubelet-gac.service docker.service registry.service download-kubelet.service

[Service]

ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/manifests

ExecStartPre=/usr/bin/mkdir -p /var/log/containers

ExecStartPre=-/usr/bin/rkt rm --uuid-file=/var/run/kubelet-pod.uuid

Environment=PATH=/opt/bin/:/usr/bin/:/usr/sbin:$PATH

Environment=KUBELET_IMAGE=/etc/kubernetes/downloads/hyperkube-v1.7.3.aci

Environment="RKT_GLOBAL_ARGS=--insecure-options=image"

Environment="RKT_OPTS=--volume modprobe,kind=host,source=/usr/sbin/modprobe \

--mount volume=modprobe,target=/usr/sbin/modprobe \

--volume lib-modules,kind=host,source=/lib/modules \

--mount volume=lib-modules,target=/lib/modules \

--uuid-file-save=/var/run/kubelet-pod.uuid \

--volume var-log,kind=host,source=/var/log \

--mount volume=var-log,target=/var/log \

--volume dns,kind=host,source=/etc/resolv.conf \

--mount volume=dns,target=/etc/resolv.conf"

ExecStart=/usr/lib/coreos/kubelet-wrapper \

--api-servers=http://127.0.0.1:8080 \

--network-plugin-dir=/etc/kubernetes/cni/net.d \

--network-plugin= \

--register-node=true \

--allow-privileged=true \

--pod-manifest-path=/etc/kubernetes/manifests \

--hostname-override={{ MASTER_IP }} \

--cluster-dns=10.3.0.10 \

--cluster-domain=cluster.local

ExecStop=-/usr/bin/rkt stop --uuid-file=/var/run/kubelet-pod.uuid

Restart=always

RestartSec=10

[Install]

WantedBy=multi-user.target

- name: "kubelet-kubectl.service"

enable: true

contents: |

[Unit]

Description=kubelet-kubectl

Documentation=https://kubernetes.io

After=kubelet-ssl.service kubelet.service

[Service]

Type=oneshot

RestartSec=20

Environment=KUBELET_SESSION=/etc/kubernetes/session

Environment=HTTP_SERVER={{ HTTP_SERVER_LOCAL }}

ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/session

ExecStart=/etc/kubernetes/scripts/kubelet-kubectl.sh

[Install]

WantedBy=multi-user.target

- name: "kubelet-addon.service"

enable: true

contents: |

[Unit]

Description=kubelet-addon

Documentation=https://kubernetes.io

After=kubelet-kubectl.service

[Service]

Restart=on-failure

RestartSec=10

Environment=KUBELET_SESSION=/etc/kubernetes/session

ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/session

ExecStart=/etc/kubernetes/scripts/kubelet-addon.sh

[Install]

WantedBy=multi-user.target


worker.yml template

| Parameter | Example |
| --- | --- |
| {{ WORKER_HOSTNAME }} | systech01 |
| {{ WORKER_IP }} | 192.168.3.101 |
| {{ MASTER_IP }} | 192.168.3.102 |
| {{ ETCD_CLUSTER }} | systech01=http://192.168.3.101:2380,systech02=http://192.168.3.102:2380,systech03=http://192.168.3.103:2380 |
| {{ CA_KEY }} | -----BEGIN RSA PRIVATE KEY----- MIIEpAIBAAKCAQEA4dafEVttwUB7eXofPzmpdmR533+Imn0KuMg4XhtB0sdyjKFf … PaDJxByNh84ysmAfuadDgcXNrF/8fDupIA0wf2qGLDlttahr2DA7Ug== -----END RSA PRIVATE KEY----- |
| {{ CA_PEM }} | -----BEGIN CERTIFICATE----- MIIC+TCCAeECCQDjC0MVaVasEjANBgkqhkiG9w0BAQsFADASMRAwDgYDVQQDDAdr … RousxpNr1QvU6EwLupKYZc006snlVh4//r9QNjh5QRxRhl71XafR1S3pamBo -----END CERTIFICATE----- |
| {{ NETWORK_MATCH }} | eth0 |
| {{ NETWORK_DNS }} | 192.168.3.1 |
| {{ NETWORK_GATEWAY }} | 192.168.3.1 |
| {{ HTTP_SERVER_LOCAL }} | |
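The `{{ ETCD_CLUSTER }}` value in the table above is a comma-separated list of `hostname=peer-URL` pairs, which is easy to mistype by hand. A minimal sketch of building it from a node inventory follows; the helper name `build_etcd_cluster` and the `NODES` dict are illustrative assumptions, not part of the original setup.

```python
def build_etcd_cluster(nodes, peer_port=2380):
    """Join hostname -> IP pairs into the string passed to etcd's --initial-cluster."""
    return ",".join(
        f"{name}=http://{ip}:{peer_port}" for name, ip in nodes.items()
    )


# Hypothetical inventory; hostnames and IPs mirror the example values in the table.
NODES = {
    "systech01": "192.168.3.101",
    "systech02": "192.168.3.102",
    "systech03": "192.168.3.103",
}

etcd_cluster = build_etcd_cluster(NODES)
print(etcd_cluster)
```

The same string has to be used on every node, since each etcd member is started with `--initial-cluster-state=new`.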

passwd:

users:

- name: "root"

password_hash: "$1$maTXmv6V$4UuGlRDpBZtipAhlPZ2/J0"

update:

group: "stable"

server: "https://public.update.core-os.net/v1/update/"

locksmith:

reboot_strategy: "etcd-lock"

window_start: "Sun 1:00"

window_length: "2h"

storage:

files:

- filesystem: "root"

path: "/etc/hostname"

mode: 0644

contents:

inline: {{ WORKER_HOSTNAME }}

- filesystem: "root"

path: "/etc/hosts"

mode: 0644

contents:

inline: |

127.0.0.1 localhost

127.0.0.1 {{ WORKER_HOSTNAME }}

{{ MASTER_IP }} reg.local

- filesystem: "root"

path: "/etc/kubernetes/scripts/kubelet-gac.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"

mkdir -p $KUBELET_SESSION

cd /etc/kubernetes

if [[ -e $KUBELET_SESSION/kubelet-gac.session ]]; then

exit 0

fi

docker pull reg.local:5000/k8s/pause-amd64:3.0

docker tag reg.local:5000/k8s/pause-amd64:3.0 gcr.io/google_containers/pause-amd64:3.0

docker rmi reg.local:5000/k8s/pause-amd64:3.0

touch $KUBELET_SESSION/kubelet-gac.session

- filesystem: "root"

path: "/etc/kubernetes/scripts/etcd3.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"

HTTP_SERVER="${HTTP_SERVER:-http://192.168.3.100:8000/k8s}"

mkdir -p $KUBELET_SESSION

cd /etc/kubernetes

if [[ -e $KUBELET_SESSION/etcd3.session ]]; then

exit 0

fi

mkdir -p /etc/kubernetes/downloads

curl $HTTP_SERVER/etcd-v3.2.0.tgz > /etc/kubernetes/downloads/etcd-v3.2.0.tgz

cd /etc/kubernetes/downloads && tar -xzf /etc/kubernetes/downloads/etcd-v3.2.0.tgz

touch $KUBELET_SESSION/etcd3.session

- filesystem: "root"

path: "/etc/kubernetes/scripts/flannel.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"

HTTP_SERVER="${HTTP_SERVER:-http://192.168.3.100:8000/k8s}"

mkdir -p $KUBELET_SESSION

cd /etc/kubernetes

if [[ -e $KUBELET_SESSION/flannel.session ]]; then

exit 0

fi

mkdir -p /etc/kubernetes/downloads

curl $HTTP_SERVER/flannel-v0.7.1.tgz > /etc/kubernetes/downloads/flannel-v0.7.1.tgz

cd /etc/kubernetes/downloads && tar -xzf /etc/kubernetes/downloads/flannel-v0.7.1.tgz

touch $KUBELET_SESSION/flannel.session

- filesystem: "root"

path: "/etc/kubernetes/scripts/kubelet.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"

HTTP_SERVER="${HTTP_SERVER:-http://192.168.3.100:8000/k8s}"

mkdir -p $KUBELET_SESSION

cd /etc/kubernetes

if [[ -e $KUBELET_SESSION/kubelet.session ]]; then

exit 0

fi

mkdir -p /etc/kubernetes/downloads

curl $HTTP_SERVER/hyperkube-v1.7.3.tgz > /etc/kubernetes/downloads/hyperkube-v1.7.3.tgz

cd /etc/kubernetes/downloads && tar -xzf /etc/kubernetes/downloads/hyperkube-v1.7.3.tgz

touch $KUBELET_SESSION/kubelet.session

- filesystem: "root"

path: "/etc/kubernetes/manifests/kube-proxy.yaml"

mode: 0644

contents:

inline: |

---

apiVersion: v1

kind: Pod

metadata:

name: kube-proxy

namespace: kube-system

spec:

hostNetwork: true

containers:

- name: kube-proxy

image: reg.local:5000/k8s/hyperkube:v1.7.3

command:

- /hyperkube

- proxy

- --master=https://{{ MASTER_IP }}:6443

- --kubeconfig=/etc/kubernetes/kubeproxy-kubeconfig.yaml

- --proxy-mode=iptables

securityContext:

privileged: true

volumeMounts:

- mountPath: /etc/ssl/certs

name: "ssl-certs"

- mountPath: /etc/kubernetes/kubeproxy-kubeconfig.yaml

name: "kubeconfig"

readOnly: true

- mountPath: /etc/kubernetes/ssl

name: "etc-kube-ssl"

readOnly: true

- mountPath: /var/run/dbus

name: dbus

readOnly: false

volumes:

- name: "ssl-certs"

hostPath:

path: "/usr/share/ca-certificates"

- name: "kubeconfig"

hostPath:

path: "/etc/kubernetes/kubeproxy-kubeconfig.yaml"

- name: "etc-kube-ssl"

hostPath:

path: "/etc/kubernetes/ssl"

- hostPath:

path: /var/run/dbus

name: dbus

- filesystem: "root"

path: "/etc/kubernetes/kubeproxy-kubeconfig.yaml"

mode: 0644

contents:

inline: |

apiVersion: v1

kind: Config

clusters:

- name: local

cluster:

certificate-authority: /etc/kubernetes/ssl/ca.pem

users:

- name: kubelet

user:

client-certificate: /etc/kubernetes/ssl/kubelet.pem

client-key: /etc/kubernetes/ssl/kubelet.key

contexts:

- context:

cluster: local

user: kubelet

name: kubelet-context

current-context: kubelet-context

- filesystem: "root"

path: "/etc/kubernetes/kubelet-kubeconfig.yaml"

mode: 0644

contents:

inline: |

---

apiVersion: v1

kind: Config

clusters:

- name: local

cluster:

certificate-authority: /etc/kubernetes/ssl/ca.pem

users:

- name: kubelet

user:

client-certificate: /etc/kubernetes/ssl/kubeproxy.pem

client-key: /etc/kubernetes/ssl/kubeproxy.key

contexts:

- context:

cluster: local

user: kubelet

name: kubelet-context

current-context: kubelet-context

- filesystem: "root"

path: "/etc/kubernetes/ssl/ca.key"

mode: 0644

contents:

inline: |

{{ CA_KEY }}

- filesystem: "root"

path: "/etc/kubernetes/ssl/ca.pem"

mode: 0644

contents:

inline: |

{{ CA_PEM }}

- filesystem: "root"

path: "/etc/kubernetes/ssl/openssl-kubelet.cnf"

mode: 0644

contents:

inline: |

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

IP.1 = {{ WORKER_IP }}

- filesystem: "root"

path: "/etc/kubernetes/ssl/openssl-kubeproxy.cnf"

mode: 0644

contents:

inline: |

[req]

req_extensions = v3_req

distinguished_name = req_distinguished_name

[req_distinguished_name]

[ v3_req ]

basicConstraints = CA:FALSE

keyUsage = nonRepudiation, digitalSignature, keyEncipherment

subjectAltName = @alt_names

[alt_names]

IP.1 = {{ WORKER_IP }}

- filesystem: "root"

path: "/etc/kubernetes/scripts/ssl.sh"

mode: 0755

contents:

inline: |

#!/bin/bash

set -e

KUBELET_SESSION="${KUBELET_SESSION:-/etc/kubernetes/session}"

mkdir -p $KUBELET_SESSION

cd /etc/kubernetes/ssl

if [[ -e $KUBELET_SESSION/kubelet-ssl.session ]]; then

exit 0

fi

openssl genrsa -out kubeproxy.key 2048

openssl req -new -key kubeproxy.key -out kubeproxy.csr -subj "/CN=admin/C=CN/ST=BeiJing/L=Beijing/O=system:masters/OU=System" -config openssl-kubeproxy.cnf

openssl x509 -req -in kubeproxy.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out kubeproxy.pem -days 365 -extensions v3_req -extfile openssl-kubeproxy.cnf

openssl genrsa -out kubelet.key 2048

openssl req -new -key kubelet.key -out kubelet.csr -subj "/CN=admin/C=CN/ST=BeiJing/L=Beijing/O=system:masters/OU=System" -config openssl-kubelet.cnf

openssl x509 -req -in kubelet.csr -CA ca.pem -CAkey ca.key -CAcreateserial -out kubelet.pem -days 365 -extensions v3_req -extfile openssl-kubelet.cnf

touch $KUBELET_SESSION/kubelet-ssl.session

networkd:

units:

- name: 00-static.network

contents: |

[Match]

Name={{ NETWORK_MATCH }}

[Network]

DNS={{ NETWORK_DNS }}

Address={{ WORKER_IP }}/24

Gateway={{ NETWORK_GATEWAY }}

DHCP=no

systemd:

units:

- name: "settimezone.service"

enable: true

contents: |

[Unit]

Description=Set the time zone Asia/Shanghai

[Service]

ExecStart=/usr/bin/timedatectl set-timezone Asia/Shanghai

RemainAfterExit=yes

Type=oneshot

[Install]

WantedBy=multi-user.target

- name: "etcd2.service"

enable: false

- name: "etcd-member.service"

enable: false

- name: "download-etcd3.service"

enable: true

contents: |

[Unit]

Description=download etcd3 aci (HTTP)

Documentation=https://github.com/coreos/registry

[Service]

Type=oneshot

Environment=HTTP_SERVER={{ HTTP_SERVER_LOCAL }}

ExecStart=/etc/kubernetes/scripts/etcd3.sh

[Install]

WantedBy=multi-user.target

- name: "etcd3.service"

enable: true

contents: |

[Unit]

Description=etcd (System Application Container)

Documentation=https://github.com/coreos/etcd

Wants=network.target

Conflicts=etcd.service

Conflicts=etcd2.service

Conflicts=etcd-member.service

Requires=download-etcd3.service

After=download-etcd3.service

[Service]

Type=notify

RestartSec=10s

LimitNOFILE=40000

ExecStartPre=/usr/bin/mkdir --parents /var/lib/coreos

ExecStartPre=-/usr/bin/rkt rm --uuid-file=/var/lib/coreos/etcd3-pod.uuid

Environment="ETCD_IMAGE=/etc/kubernetes/downloads/etcd-v3.2.0.aci"

Environment="ETCD_USER=etcd"

Environment="ETCD_DATA_DIR=/var/lib/etcd3"

Environment="RKT_GLOBAL_ARGS=--insecure-options=image"

Environment="RKT_RUN_ARGS=--uuid-file-save=/var/lib/coreos/etcd3-pod.uuid"

Environment="ETCD_IMAGE_ARGS=--name=etcd"

ExecStart=/usr/lib/coreos/etcd-wrapper \

--name={{ WORKER_HOSTNAME }} \

--initial-cluster-token=spacesystech.com \

--initial-cluster={{ ETCD_CLUSTER }} \

--initial-cluster-state=new \

--advertise-client-urls=http://{{ WORKER_IP }}:2379 \

--initial-advertise-peer-urls=http://{{ WORKER_IP }}:2380 \

--listen-client-urls=http://{{ WORKER_IP }}:2379,http://127.0.0.1:2379 \

--listen-peer-urls=http://{{ WORKER_IP }}:2380

ExecStop=-/usr/bin/rkt stop --uuid-file=/var/lib/coreos/etcd3-pod.uuid

[Install]

WantedBy=multi-user.target

- name: "download-flannel.service"

enable: true

contents: |

[Unit]

Description=download flannel aci (HTTP)

Documentation=https://github.com/coreos/registry

[Service]

Type=oneshot

Environment=HTTP_SERVER={{ HTTP_SERVER_LOCAL }}

ExecStart=/etc/kubernetes/scripts/flannel.sh

[Install]

WantedBy=multi-user.target

- name: "flannel-docker-opts.service"

enable: true

dropins:

- name: 10-image.conf

contents: |

[Unit]

Requires=download-flannel.service

After=download-flannel.service

[Service]

Environment="FLANNEL_IMAGE=/etc/kubernetes/downloads/flannel-v0.7.1.aci"

Environment="RKT_GLOBAL_ARGS=--insecure-options=image"

- name: "flanneld.service"

enable: true

dropins:

- name: 10-image.conf

contents: |

[Unit]

Requires=etcd3.service download-flannel.service

After=etcd3.service download-flannel.service

[Service]

ExecStartPre=/usr/bin/etcdctl set /spacesystech.com/network2/config '{ "Network": "10.2.0.0/16","Backend": {"Type":"vxlan"} }'

Environment="FLANNELD_IFACE={{ WORKER_IP }}"

Environment="FLANNELD_ETCD_ENDPOINTS=http://{{ WORKER_IP }}:2379"

Environment="FLANNELD_ETCD_PREFIX=/spacesystech.com/network2"

Environment="FLANNEL_IMAGE=/etc/kubernetes/downloads/flannel-v0.7.1.aci"

Environment="RKT_GLOBAL_ARGS=--insecure-options=image"

Environment="FLANNEL_IMAGE_ARGS=--name=flannel"

- name: "docker.service"

enable: true

dropins:

- name: 40-flannel.conf

contents: |

[Unit]

Requires=flanneld.service

After=flanneld.service

[Service]

Environment=DOCKER_OPT_BIP=""

Environment=DOCKER_OPT_IPMASQ=""

- name: 50-insecure-registry.conf

contents: |

[Service]

Environment=DOCKER_OPTS='--insecure-registry="reg.local:5000"'

- name: "kubelet-ssl.service"

enable: true

contents: |

[Unit]

Description=kubelet-ssl

Documentation=https://kubernetes.io

[Service]

Type=oneshot

Environment=KUBELET_SESSION=/etc/kubernetes/session

ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/session

ExecStart=/etc/kubernetes/scripts/ssl.sh

[Install]

WantedBy=multi-user.target

- name: "kubelet-gac.service"

enable: true

contents: |

[Unit]

Description=kubelet-gac

Documentation=https://kubernetes.io

Requires=docker.service

After=docker.service

[Service]

Type=oneshot

Environment=KUBELET_SESSION=/etc/kubernetes/session

ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/session

ExecStart=/etc/kubernetes/scripts/kubelet-gac.sh

[Install]

WantedBy=multi-user.target

- name: "download-kubelet.service"

enable: true

contents: |

[Unit]

Description=download kubelet aci (HTTP)

Documentation=https://github.com/coreos/registry

[Service]

Type=oneshot

Environment=HTTP_SERVER={{ HTTP_SERVER_LOCAL }}

ExecStart=/etc/kubernetes/scripts/kubelet.sh

[Install]

WantedBy=multi-user.target

- name: "kubelet.service"

enable: true

contents: |

[Unit]

Description=kubelet

Documentation=https://kubernetes.io

Requires=kubelet-ssl.service kubelet-gac.service docker.service download-kubelet.service

After=kubelet-ssl.service kubelet-gac.service docker.service download-kubelet.service

[Service]

ExecStartPre=/usr/bin/mkdir -p /etc/kubernetes/manifests

ExecStartPre=/usr/bin/mkdir -p /var/log/containers

ExecStartPre=-/usr/bin/rkt rm --uuid-file=/var/run/kubelet-pod.uuid

Environment=PATH=/opt/bin/:/usr/bin/:/usr/sbin:$PATH

Environment=KUBELET_IMAGE=/etc/kubernetes/downloads/hyperkube-v1.7.3.aci

Environment="RKT_GLOBAL_ARGS=--insecure-options=image"

Environment="RKT_OPTS=--uuid-file-save=/var/run/kubelet-pod.uuid \

--volume var-log,kind=host,source=/var/log \

--mount volume=var-log,target=/var/log \

--volume dns,kind=host,source=/etc/resolv.conf \

--mount volume=dns,target=/etc/resolv.conf"

ExecStart=/usr/lib/coreos/kubelet-wrapper \

--api-servers=https://{{ MASTER_IP }}:6443 \

--network-plugin-dir=/etc/kubernetes/cni/net.d \

--network-plugin= \

--register-node=true \

--allow-privileged=true \

--pod-manifest-path=/etc/kubernetes/manifests \

--hostname-override={{ WORKER_IP }} \

--cluster-dns=10.3.0.10 \

--cluster-domain=cluster.local \

--kubeconfig=/etc/kubernetes/kubelet-kubeconfig.yaml \

--tls-cert-file=/etc/kubernetes/ssl/kubelet.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kubelet.key

ExecStop=-/usr/bin/rkt stop --uuid-file=/var/run/kubelet-pod.uuid

Restart=always

RestartSec=10

[Install]

WantedBy=multi-user.target
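Every helper script in the templates above follows the same run-once pattern: exit early if `$KUBELET_SESSION/<name>.session` exists, otherwise do the work and `touch` the marker at the end. Because the scripts use `set -e`, a failed step leaves no marker, so the unit retries on the next boot. A minimal Python sketch of that pattern follows; the function name `run_once` and the temporary directory are illustrative assumptions, not part of the original scripts.

```python
import tempfile
from pathlib import Path


def run_once(session_dir, name, action):
    """Run action() only if <session_dir>/<name>.session does not exist yet.

    Returns True if the action ran, False if the marker was already present.
    """
    session_dir = Path(session_dir)
    session_dir.mkdir(parents=True, exist_ok=True)
    marker = session_dir / f"{name}.session"
    if marker.exists():
        return False
    action()        # if this raises, the marker is never written
    marker.touch()  # record success so later boots skip the work
    return True


# Demonstration in a throwaway directory instead of /etc/kubernetes/session.
calls = []
with tempfile.TemporaryDirectory() as tmp:
    first = run_once(tmp, "flannel", lambda: calls.append("download"))
    second = run_once(tmp, "flannel", lambda: calls.append("download"))

print(first, second, calls)
```

To force a step to run again on a node, deleting the corresponding `.session` file should be enough under this scheme.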

Generate ignition.json

Use jinja2 to render the templates above into concrete configuration files, one per node:

./systech01.yml

./systech02.yml

./systech03.yml
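The rendering step only has to substitute the `{{ NAME }}` placeholders listed in the parameter tables. The sketch below is a standard-library stand-in for jinja2, so that it runs without third-party packages; it handles plain `{{ NAME }}` placeholders only, not jinja2 filters or control blocks. With jinja2 installed, `jinja2.Template(text).render(**params)` is the equivalent call. The function name `render` and the two-line template are illustrative assumptions.

```python
import re

# Matches {{ NAME }} with optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template_text, params):
    """Replace every {{ NAME }} with params[NAME]; raise KeyError for unknown names."""
    return PLACEHOLDER.sub(lambda m: str(params[m.group(1)]), template_text)


# A small excerpt in the style of the networkd unit from the templates.
template = "Address={{ WORKER_IP }}/24\nGateway={{ NETWORK_GATEWAY }}\n"
params = {"WORKER_IP": "192.168.3.101", "NETWORK_GATEWAY": "192.168.3.1"}

rendered = render(template, params)
print(rendered)
```

Failing loudly on a missing parameter is deliberate here: a placeholder left unrendered in a unit file would only surface later, at boot time.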

Download the ct tool and use it to convert each yml file into ignition.json

# ct v0.4.2

2017.09.23

github : https://github.com/coreos/container-linux-config-transpiler
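A minimal sketch of driving the conversion for all three node files is shown here. It assumes the `ct` binary is on `PATH` and accepts `--in-file` and `--out-file` (check the help output of your ct version). The per-node output name `<node>.ignition.json` and the helper `ct_command` are hypothetical choices for illustration.

```python
from pathlib import Path


def ct_command(config_path, ct_binary="ct"):
    """Return (argv, output_path) for transpiling one Container Linux Config."""
    config_path = Path(config_path)
    # Hypothetical naming scheme: systech01.yml -> systech01.ignition.json
    output_path = config_path.with_suffix(".ignition.json")
    argv = [
        ct_binary,
        "--in-file", str(config_path),
        "--out-file", str(output_path),
    ]
    return argv, output_path


for name in ("systech01.yml", "systech02.yml", "systech03.yml"):
    argv, out = ct_command(name)
    print(" ".join(argv))
    # To actually run the conversion:
    #   import subprocess; subprocess.run(argv, check=True)
```

Building the argument list separately from running it makes it easy to review the commands before anything is written to disk.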

Install CoreOS

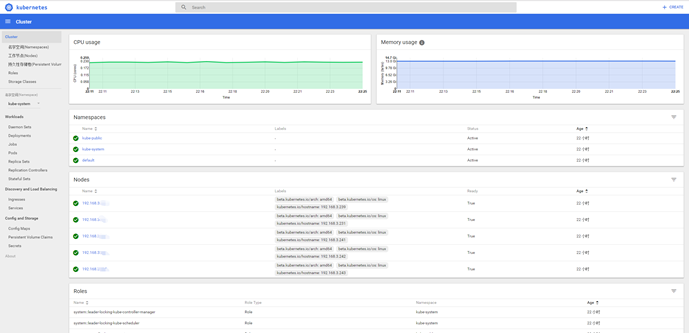

Install CoreOS with the ignition.json generated above. If everything goes smoothly, opening https://192.168.3.102:6443/ui will show the dashboard page.
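Before opening the dashboard in a browser, it can help to confirm that the API server port is reachable at all. The sketch below only tests TCP connectivity and does not validate TLS or the dashboard itself. The helper name `port_open` is an illustrative assumption, and the address in the usage comment is the example master IP from this article.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Usage on the installation network (example master address from above):
#   port_open("192.168.3.102", 6443)
```

If the port stays closed, the logs of `kubelet.service` and the units it is ordered after are a reasonable first place to look.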
