zookeeper实现分布式锁总结,看这一篇足矣(设计模式应用实战)

zk实现分布式锁纵观网络各种各样的帖子层出不穷,笔者查阅很多资料发现一个问题,有些文章只写原理并没有具体实现,有些文章虽然写了实现但是并不全面

借这个周末给大家做一个总结,代码拿来就可以用并且每一种实现都经过了测试没有bug。下面我们先从最简单的实现开始介绍:

- 简单的实现

package com.srr.lock; /**

* @Description 分布式锁的接口

*/

abstract public interface DistributedLock {

/**

* 获取锁

*/

boolean lock();

/**

* 解锁

*/

void unlock(); abstract boolean readLock();

abstract boolean writeLock();

} package com.srr.lock; /**

* 简单的zk分布式做实现策略

* 性能比较低会导致羊群效应

*/

public abstract class SimplerZKLockStrategy implements DistributedLock{

/**

* 模板方法,搭建的获取锁的框架,具体逻辑交于子类实现

* @throws Exception

*/

@Override

public boolean lock() {

//获取锁成功

if (tryLock()){

System.out.println(Thread.currentThread().getName()+"获取锁成功");

return true;

}else{ //获取锁失败

//阻塞一直等待

waitLock();

//递归,再次获取锁

return lock();

}

} /**

* 尝试获取锁,子类实现

*/

protected abstract boolean tryLock() ;

/**

* 等待获取锁,子类实现

*/

protected abstract void waitLock();

/**

* 解锁:删除key

*/

@Override

public abstract void unlock();

} package com.srr.lock; import org.I0Itec.zkclient.IZkDataListener;

import org.I0Itec.zkclient.ZkClient;

import java.util.concurrent.CountDownLatch; /**

* 分布式锁简单实现

*/

public class SimpleZKLock extends SimplerZKLockStrategy{

private static final String PATH = "/lowPerformance_zklock";

private CountDownLatch countDownLatch = null;

//zk地址和端口

public static final String ZK_ADDR = "192.168.32.129:2181";

//创建zk

protected ZkClient zkClient = new ZkClient(ZK_ADDR); @Override

protected boolean tryLock() {

//如果不存在这个节点,则创建持久节点

try{

zkClient.createEphemeral(PATH, "lock");

return true;

}catch (Exception e){

return false;

}

} @Override

protected void waitLock() {

IZkDataListener lIZkDataListener = new IZkDataListener() { @Override

public void handleDataDeleted(String dataPath) throws Exception {

if (null != countDownLatch){

countDownLatch.countDown();

}

System.out.println("listen lock unlock");

} @Override

public void handleDataChange(String dataPath, Object data) throws Exception { }

};

//监听前一个节点的变化

zkClient.subscribeDataChanges(PATH, lIZkDataListener);

if (zkClient.exists(PATH)) {

countDownLatch = new CountDownLatch(1);

try {

countDownLatch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

zkClient.unsubscribeDataChanges(PATH, lIZkDataListener); } @Override

public void unlock() {

if (null != zkClient) {

System.out.println("lock unclock");

zkClient.delete(PATH);

}

} @Override

public boolean readLock() {

return true;

} @Override

public boolean writeLock() {

return true;

}

} package com.srr.lock; import redis.clients.jedis.Jedis; import java.util.concurrent.CountDownLatch; /**

* 测试场景

* count从1加到4

* 使用简单的分布式锁在分布式环境下保证结果正确

*/

public class T { volatile int count = 1; public void inc(){

for(int i = 0;i<3;i++){

try {

Thread.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

count++;

System.out.println("count == "+count);

}

} public int getCount(){

return count;

} public static void main(String[] args) throws InterruptedException {

final T t = new T();

final Lock lock = new Lock();

final CountDownLatch countDownLatch = new CountDownLatch(5);

for(int i = 0;i<5;i++){

new Thread(new Runnable() {

@Override

public void run() {

DistributedLock distributedLock = new SimpleZKLock();

if(lock.lock(distributedLock)){

t.inc();

lock.unlock(distributedLock);

countDownLatch.countDown();

}

System.out.println("count == "+t.getCount());

}

}).start();

}

countDownLatch.await();

}

}

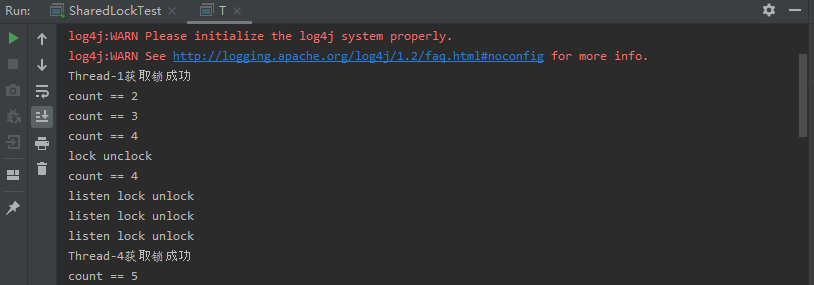

运行结果:

这种方式实现虽然简单,但是会引发羊群效应,因为每个等待锁的客户端都需要注册监听lock节点的删除事件,如果客户端并发请求很多,那么这将会非常消耗zookeeper集群

的资源,严重的化则会导致zookeeper集群宕机也不是没有可能。

- 高性能实现,解决羊群效应问题

package com.srr.lock; /**

* @Description 分布式锁的接口

*/

abstract public interface DistributedLock {

/**

* 获取锁

*/

boolean lock();

/**

* 解锁

*/

void unlock(); abstract boolean readLock();

abstract boolean writeLock();

} package com.srr.lock; public abstract class BlockingZKLockStrategy implements DistributedLock{

/**

* 模板方法,搭建的获取锁的框架,具体逻辑交于子类实现

* @throws Exception

*/

@Override

public final boolean lock() {

//获取锁成功

if (tryLock()){

System.out.println(Thread.currentThread().getName()+"获取锁成功");

return true;

}else{ //获取锁失败

//阻塞一直等待

waitLock();

//递归,再次获取锁

return true;

}

} /**

* 尝试获取锁,子类实现

*/

protected abstract boolean tryLock() ;

/**

* 等待获取锁,子类实现

*/

protected abstract void waitLock();

/**

* 解锁:删除key

*/

@Override

public abstract void unlock();

} package com.srr.lock; import org.I0Itec.zkclient.IZkDataListener;

import org.I0Itec.zkclient.ZkClient; import java.util.ArrayList;

import java.util.Collections;

import java.util.Comparator;

import java.util.List;

import java.util.concurrent.CountDownLatch; public class BlockingZKLock extends BlockingZKLockStrategy{

private static final String PATH = "/highPerformance_zklock";

//当前节点路径

private String currentPath;

//前一个节点的路径

private String beforePath;

private CountDownLatch countDownLatch = null;

//zk地址和端口

public static final String ZK_ADDR = "192.168.32.129:2181";

//超时时间

public static final int SESSION_TIMEOUT = 30000;

//创建zk

protected ZkClient zkClient = new ZkClient(ZK_ADDR, SESSION_TIMEOUT); public BlockingZKLock() {

//如果不存在这个节点,则创建持久节点

if (!zkClient.exists(PATH)) {

zkClient.createPersistent(PATH);

}

} @Override

protected boolean tryLock() {

//如果currentPath为空则为第一次尝试加锁,第一次加锁赋值currentPath

//if (null == currentPath || "".equals(currentPath)) {

//在path下创建一个临时的顺序节点

currentPath = zkClient.createEphemeralSequential(PATH+"/", "lock");

//}

try {

Thread.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

//获取所有的临时节点,并排序

List<String> childrens = zkClient.getChildren(PATH); Collections.sort(childrens); if (currentPath.equals(PATH+"/"+childrens.get(0))) {

return true;

}else {//如果当前节点不是排名第一,则获取它前面的节点名称,并赋值给beforePath

int pathLength = PATH.length();

int wz = Collections.binarySearch(childrens, currentPath.substring(pathLength+1));

beforePath = PATH+"/"+childrens.get(wz-1);

}

return false; } @Override

protected void waitLock() {

IZkDataListener lIZkDataListener = new IZkDataListener() { @Override

public void handleDataDeleted(String dataPath) throws Exception {

if (null != countDownLatch){

countDownLatch.countDown();

}

System.out.println("listen lock unlock");

} @Override

public void handleDataChange(String dataPath, Object data) throws Exception { }

};

//监听前一个节点的变化

zkClient.subscribeDataChanges(beforePath, lIZkDataListener);

if (zkClient.exists(beforePath)) {

countDownLatch = new CountDownLatch(1);

try {

countDownLatch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

zkClient.unsubscribeDataChanges(beforePath, lIZkDataListener); } @Override

public void unlock() {

if (null != zkClient) {

System.out.println("lock unclock");

zkClient.delete(currentPath);

}

} @Override

public boolean readLock() {

return true;

} @Override

public boolean writeLock() {

return true;

}

} package com.srr.lock; import java.util.concurrent.CountDownLatch; /**

* 测试场景

* count从1加到4

* 使用高性能的分布式锁在分布式环境下保证结果正确

*/

public class T { volatile int count = 1; public void inc(){

for(int i = 0;i<3;i++){

try {

Thread.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

count++;

System.out.println("count == "+count);

}

} public int getCount(){

return count;

} public static void main(String[] args) throws InterruptedException {

final T t = new T();

final Lock lock = new Lock();

final CountDownLatch countDownLatch = new CountDownLatch(5);

for(int i = 0;i<5;i++){

new Thread(new Runnable() {

@Override

public void run() {

DistributedLock distributedLock = new BlockingZKLock();

if(lock.lock(distributedLock)){

t.inc();

lock.unlock(distributedLock);

countDownLatch.countDown();

}

System.out.println("count == "+t.getCount());

}

}).start();

}

countDownLatch.await();

}

}

这种实现客户端只需监听它前一个节点的变化,不需要监听所有的节点,从而提高了zookeeper锁的性能。

- 共享锁(S锁)

- 写到这个,看了网络上很多错误的文章实现把排它锁当做共享锁

共享锁正确是实现姿势如下:

package com.srr.lock; /**

* @Description 分布式锁的接口

*/

abstract public interface DistributedLock {

/**

* 获取锁

*/

boolean lock();

/**

* 解锁

*/

void unlock(); abstract boolean readLock();

abstract boolean writeLock();

} package com.srr.lock; /**

* 共享锁策略

*/

abstract public class ZKSharedLockStrategy implements DistributedLock{

@Override

public boolean readLock() {

//获取锁成功

if (tryReadLock()){

System.out.println(Thread.currentThread().getName()+"获取读锁成功");

return true;

}else{ //获取锁失败

//阻塞一直等待

waitLock();

//递归,再次获取锁

return true;

}

} @Override

public boolean writeLock() {

//获取锁成功

if (tryWriteLock()){

System.out.println(Thread.currentThread().getName()+"获取写锁成功");

return true;

}else{ //获取锁失败

//阻塞一直等待

waitLock();

//递归,再次获取锁

return true;

}

} /**

* 尝试获取锁,子类实现

*/

protected abstract boolean tryWriteLock() ; /**

* 尝试获取锁,子类实现

*/

protected abstract boolean tryReadLock() ; /**

* 等待获取锁,子类实现

*/

protected abstract void waitLock(); /**

* 解锁:删除key

*/

@Override

public abstract void unlock();

} package com.srr.lock; import org.I0Itec.zkclient.IZkDataListener;

import org.I0Itec.zkclient.ZkClient; import java.util.Collections;

import java.util.List;

import java.util.concurrent.CountDownLatch; /**

* 共享锁

*/

public class ZKSharedLock extends ZKSharedLockStrategy{ private static final String PATH = "/zk-root-readwrite-lock";

//当前节点路径

private String currentPath;

//前一个节点的路径

private String beforePath;

private CountDownLatch countDownLatch = null;

//zk地址和端口

public static final String ZK_ADDR = "192.168.32.129:2181";

//超时时间

public static final int SESSION_TIMEOUT = 30000;

//创建zk

protected ZkClient zkClient = new ZkClient(ZK_ADDR, SESSION_TIMEOUT);

public ZKSharedLock() {

//如果不存在这个节点,则创建持久节点

if (!zkClient.exists(PATH)) {

zkClient.createPersistent(PATH);

}

} @Override

protected boolean tryWriteLock() {

//如果currentPath为空则为第一次尝试加锁,第一次加锁赋值currentPath

if (null == currentPath || "".equals(currentPath)) {

//在path下创建一个临时的顺序节点

currentPath = zkClient.createEphemeralSequential(PATH+"/w", "writelock");

}

try {

Thread.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

//获取所有的临时节点,并排序

List<String> childrens = zkClient.getChildren(PATH);

Collections.sort(childrens); if (currentPath.equals(PATH+"/"+childrens.get(0))) {

return true;

}else {//如果当前节点不是排名第一,则获取它前面的节点名称,并赋值给beforePath

int pathLength = PATH.length();

int wz = Collections.binarySearch(childrens, currentPath.substring(pathLength+1));

beforePath = PATH+"/"+childrens.get(wz-1);

}

return false;

} @Override

protected boolean tryReadLock() {

//如果currentPath为空则为第一次尝试加锁,第一次加锁赋值currentPath

if (null == currentPath || "".equals(currentPath)) {

//在path下创建一个临时的顺序节点

currentPath = zkClient.createEphemeralSequential(PATH+"/r", "readklock");

}

try {

Thread.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

//获取所有的临时节点,并排序

List<String> childrens = zkClient.getChildren(PATH);

Collections.sort(childrens); if (currentPath.equals(PATH+"/"+childrens.get(0))) {

return true;

}else if(isAllReadNodes(childrens)){

return true;

}else {//如果当前节点不是排名第一,则获取它前面的节点名称,并赋值给beforePath

int pathLength = PATH.length();

int wz = Collections.binarySearch(childrens, currentPath.substring(pathLength+1)); for (int i = wz - 1; i > 0; i--) {

// 找到了离得最近的一个写节点,那么它的后一个节点要么是一个读节点,要么就是待加锁的节点本身

if (childrens.get(i).indexOf("w") >= 0) {

beforePath = PATH + "/" + childrens.get(i);

break;

}

}

}

return false;

} // 判断比自已小的节点是否都是读节点

private boolean isAllReadNodes(List<String> sortNodes) {

int pathLength = PATH.length();

int currentIndex = Collections.binarySearch(sortNodes, currentPath.substring(pathLength+1));

for (int i = 0; i < currentIndex - 1; i++) {

// 只要有一个写锁,则不能直接获取读锁

if (sortNodes.get(i).indexOf("w") >= 0) {

return false;

}

} return true;

} @Override

protected void waitLock() {

IZkDataListener lIZkDataListener = new IZkDataListener() {

@Override

public void handleDataDeleted(String dataPath) throws Exception {

if (null != countDownLatch){

countDownLatch.countDown();

}

System.out.println("listen lock unlock");

}

@Override

public void handleDataChange(String dataPath, Object data) throws Exception { }

};

//监听前一个节点的变化

zkClient.subscribeDataChanges(beforePath, lIZkDataListener);

if (zkClient.exists(beforePath)) {

countDownLatch = new CountDownLatch(1);

try {

countDownLatch.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

zkClient.unsubscribeDataChanges(beforePath, lIZkDataListener);

} @Override

public boolean lock() {

return false;

} @Override

public void unlock() {

if (null != zkClient) {

System.out.println("lock unclock");

zkClient.delete(currentPath);

zkClient.close();

}

}

} package com.srr.lock; /**

* 锁工具类

*/

public class Lock {

/**

* 获取锁

*/

boolean lock(DistributedLock lock) {

return lock.lock();

}; /**

* 获取读锁

*/

boolean readlock(DistributedLock lock) {

return lock.readLock();

}; /**

* 获取读锁

*/

boolean writeLock(DistributedLock lock) {

return lock.writeLock();

}; /**

* 释放锁

*/

void unlock(DistributedLock lock) {

lock.unlock();

};

} package com.srr.lock; import java.util.concurrent.CountDownLatch; /**

* 测试共享锁

*/

public class SharedLockTest {

private static volatile int count = 0;

public static void main(String[] args) throws Exception {

final Lock lock = new Lock();

final CountDownLatch countDownLatch = new CountDownLatch(10); new Thread(new Runnable() {

@Override

public void run() {

testWriteLock(8);

}

}).start(); new Thread(new Runnable() {

@Override

public void run() {

testReadLock(10);

}

}).start(); new Thread(new Runnable() {

@Override

public void run() {

testReadLock(20);

}

}).start(); new Thread(new Runnable() {

@Override

public void run() {

testWriteLock(11);

}

}).start(); new Thread(new Runnable() {

@Override

public void run() {

testWriteLock(30);

}

}).start(); new Thread(new Runnable() {

@Override

public void run() {

testReadLock(9);

}

}).start(); countDownLatch.await();

} // 读锁

private static void testReadLock(long sleepTime) {

try {

Lock lock = new Lock();

DistributedLock dlock = new ZKSharedLock();

lock.readlock(dlock);

System.out.println("i get readlock ->" + sleepTime);

System.out.println("count = "+ count);

Thread.sleep(sleepTime);

lock.unlock(dlock);

} catch (Exception e) {

e.printStackTrace();

}

} // 写锁

private static void testWriteLock(long sleepTime) {

try {

Lock lock = new Lock();

DistributedLock dlock = new ZKSharedLock();

lock.writeLock(dlock);

System.out.println("i get writelock ->" + sleepTime);

count++;

Thread.sleep(sleepTime);

lock.unlock(dlock);

} catch (Exception e) {

e.printStackTrace();

}

} }

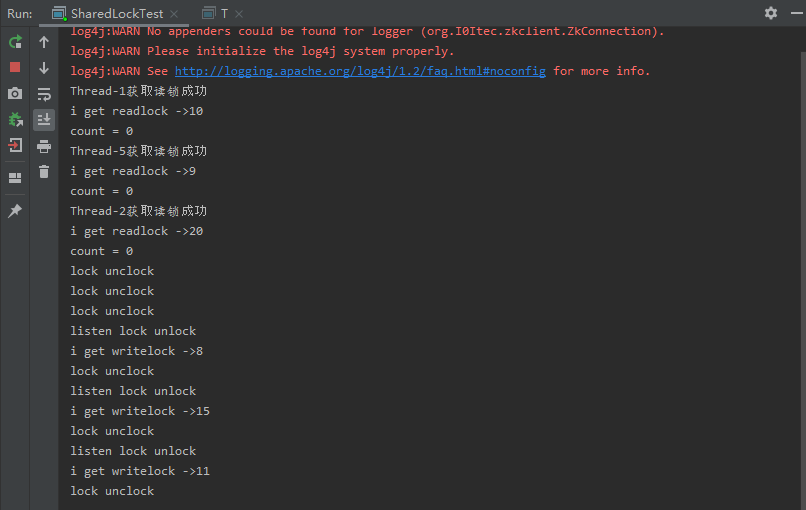

运行结果:

从结果可以看出读锁和读锁可以共享锁,而写锁必须等待读锁或者写锁释放之后才能获取锁。

最后,zk分布式锁完美解决方案:

- Apache Curator

- Apache Curator is a Java/JVM client library for Apache ZooKeeper, a distributed coordination service. It includes a highlevel API framework and utilities to make using Apache ZooKeeper much easier and more reliable. It also includes recipes for common use cases and extensions such as service discovery and a Java 8 asynchronous DSL.

- Curator n ˈkyoor͝ˌātər: a keeper or custodian of a museum or other collection - A ZooKeeper Keeper.

网上很多文章竟然标题用Curator实现分布式锁,大哥Curator框架本身已经实现了分布式锁而且提供了各种各样的锁api供大家使用,我们不用再基于Curator实现分布式锁,这不是多此一举吗?这里给出一个简单的使用案例,旨在说明意图:

package com.srr.lock; import org.apache.curator.RetryPolicy;

import org.apache.curator.framework.CuratorFramework;

import org.apache.curator.framework.CuratorFrameworkFactory;

import org.apache.curator.framework.recipes.locks.InterProcessMutex;

import org.apache.curator.retry.ExponentialBackoffRetry; import java.util.concurrent.CountDownLatch;

import java.util.concurrent.TimeUnit; /**

* 测试场景

* count从1加到101

* 使用redis分布式锁在分布式环境下保证结果正确

*/

public class CuratorDistributedLockTest {

private static final String lockPath = "/curator_lock";

//zk地址和端口

public static final String zookeeperConnectionString = "192.168.32.129:2181"; volatile int count = 1; public void inc(){

for(int i = 0;i<10;i++){

count++;

System.out.println("count == "+count);

}

} public int getCount(){

return count;

} public static void main(String[] args) throws InterruptedException {

final T t = new T();

final Lock lock = new Lock();

final CountDownLatch countDownLatch = new CountDownLatch(4);

for(int i = 0;i<4;i++){

new Thread(new Runnable() {

@Override

public void run() {

RetryPolicy retryPolicy = new ExponentialBackoffRetry(10, 5000);

CuratorFramework client = CuratorFrameworkFactory.newClient(zookeeperConnectionString, retryPolicy);

client.start();

InterProcessMutex lock = new InterProcessMutex(client, lockPath);

try {

if (lock.acquire(10 * 1000, TimeUnit.SECONDS))

{

try

{

System.out.println("get the lock");

t.inc();

}

finally

{

lock.release();

System.out.println("unlock the lock");

}

}

}catch (Exception e){

e.printStackTrace();

}

countDownLatch.countDown();

}

}).start();

} countDownLatch.await();

System.out.println("total count == "+t.getCount());

}

}

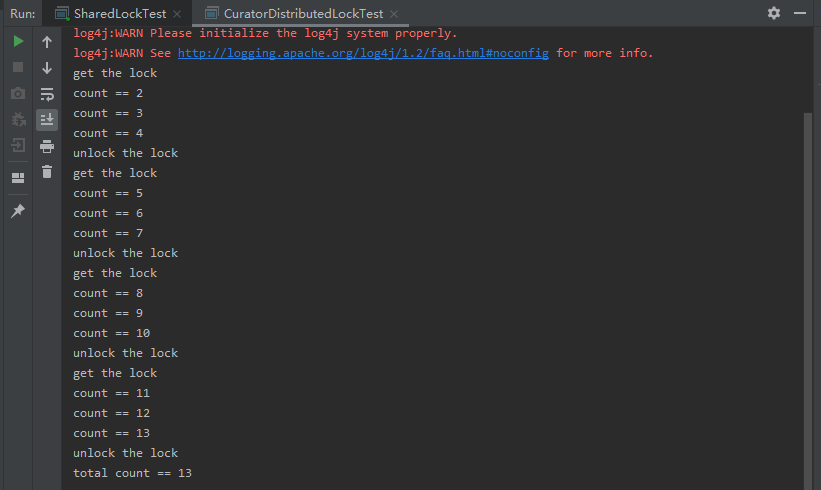

运行结果:

如果想更多了解Curator框架,请移步http://curator.apache.org/,官网给出了详细的使用案例及介绍。至此zk实现分布式锁总结完毕!

原创不易,请多多关注!

zookeeper实现分布式锁总结,看这一篇足矣(设计模式应用实战)的更多相关文章

- 10分钟看懂!基于Zookeeper的分布式锁

实现分布式锁目前有三种流行方案,分别为基于数据库.Redis.Zookeeper的方案,其中前两种方案网络上有很多资料可以参考,本文不做展开.我们来看下使用Zookeeper如何实现分布式锁. 什么是 ...

- [ZooKeeper.net] 3 ZooKeeper的分布式锁

基于ZooKeeper的分布式锁 ZooKeeper 里实现分布式锁的基本逻辑: 1.zookeeper中创建一个根节点(Locks),用于后续各个客户端的锁操作. 2.想要获取锁的client都在L ...

- 基于 Zookeeper 的分布式锁实现

1. 背景 最近在学习 Zookeeper,在刚开始接触 Zookeeper 的时候,完全不知道 Zookeeper 有什么用.且很多资料都是将 Zookeeper 描述成一个“类 Unix/Linu ...

- zookeeper的分布式锁

实现分布式锁目前有三种流行方案,分别为基于数据库.Redis.Zookeeper的方案,其中前两种方案网络上有很多资料可以参考,本文不做展开.我们来看下使用Zookeeper如何实现分布式锁. 什么是 ...

- zookeeper 实现分布式锁安全用法

zookeeper 实现分布式锁安全用法 标签: zookeeper sessionExpire connectionLoss 分布式锁 背景 ConnectionLoss 链接丢失 SessionE ...

- 基于Zookeeper的分布式锁

实现分布式锁目前有三种流行方案,分别为基于数据库.Redis.Zookeeper的方案,其中前两种方案网络上有很多资料可以参考,本文不做展开.我们来看下使用Zookeeper如何实现分布式锁. 什么是 ...

- 转载 [ZooKeeper.net] 3 ZooKeeper的分布式锁

[ZooKeeper.net] 3 ZooKeeper的分布式锁 基于ZooKeeper的分布式锁 源码分享:http://pan.baidu.com/s/1miQCDKk ZooKeeper ...

- ZooKeeper示例 分布式锁

[转载请注明作者和原文链接, 如有谬误, 欢迎在评论中指正. ] 场景描述 在分布式应用, 往往存在多个进程提供同一服务. 这些进程有可能在相同的机器上, 也有可能分布在不同的机器上. 如果这些进程 ...

- 【连载】redis库存操作,分布式锁的四种实现方式[一]--基于zookeeper实现分布式锁

一.背景 在电商系统中,库存的概念一定是有的,例如配一些商品的库存,做商品秒杀活动等,而由于库存操作频繁且要求原子性操作,所以绝大多数电商系统都用Redis来实现库存的加减,最近公司项目做架构升级,以 ...

- 【Zookeeper】分布式锁

一.概述 实现原理 实现代码 一.概述 分布式锁解决方案(目的:为了保证在分布式领域中共享数据安全问题) 数据库实现分布式锁(不推荐.效率特别低) 基于Redis实现分布式锁setNx (非常麻烦考虑 ...

随机推荐

- sed命令的正则表达式实践

1. 取系统ip [root@oldboy logs]# ifconfig eth3 eth3 Link encap:Ethernet HWaddr 08:00:27:4C:6F:AD inet ad ...

- JS基础入门篇(十)— 数组方法

1.join 作用: 将数组通过指定字符拼接成字符串.语法: string arr.join([separator = ',']);参数: separator可选,如果省略的话,默认为一个逗号.如果 ...

- 使用PHP-Beast加密你的PHP源代码

PHP-Beast是一个PHP源码加密的模块,其使用DES算法加密,用户可以自定义加密的key来加密源代码. 1. PHP-Beast的安装 $ wget https://github.com/lie ...

- Sunday算法:字符串匹配算法进阶

背景 我们第一次接触字符串匹配,想到的肯定是直接用2个循环来遍历,这样代码虽然简单,但时间复杂度却是\(Ω(m*n)\),也就是达到了字符串匹配效率的下限.于是后来人经过研究,构造出了著名的KMP算法 ...

- checked 完整版全选,单选,反选

<!DOCTYPE html> <html> <head> <meta charset="UTF-8"> <link rel= ...

- springboot rabbitmq 找不到队列

错误报告: org.springframework.amqp.rabbit.listener.BlockingQueueConsumer$DeclarationException: Failed to ...

- D. Sequence Sorting dp

D. Sequence Sorting 题目大意:给你一个序列,有一种操作就是对所有相同的数可以挪到最前面,也可以挪到最后面,问最少操作次数. 首先,对于很多个相同的数,可以缩成两个位置,一个是就是这 ...

- 【BIM】BIMFACE中实现电梯实时动效

背景 在运维场景中,电梯作为运维环节重要的一部分是不可获缺的,如果能够在三维场景中,将逼真的电梯效果,包括外观.运行状态等表现出来,无疑是产品的一大亮点.本文将从无到有介绍如何在bimface中实现逼 ...

- ASP.NET Core中结合枚举和资源文件显示列表

模型类的某些属性以枚举形式出现,我们希望在打开编辑表单时在选择列表中显示枚举值.有时我们想要枚举元素名称,但有时我们想要使用自定义名称甚至翻译. 这个例子演示了如何获取枚举元素名称以选择ASP.NET ...

- gRPC负载均衡(客户端负载均衡)

前言 上篇介绍了如何使用etcd实现服务发现,本篇将基于etcd的服务发现前提下,介绍如何实现gRPC客户端负载均衡. gRPC负载均衡 gRPC官方文档提供了关于gRPC负载均衡方案Load Bal ...