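Learning @functools.lru_cache Through Its Source Code

I. Introduction

Code often contains repeated computation, and that repetition can greatly increase running time. A recursive implementation of the Fibonacci sequence is a typical example.

For example, computing fibonacci(5) requires fibonacci(3) and fibonacci(4), and computing fibonacci(4) in turn requires fibonacci(2) and fibonacci(3), so fibonacci(3) is computed a second time. The deeper the recursion goes, the more work is repeated. The code for fibonacci(5) and its output are shown below: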

    def fibonacci(n):
        # Recursive implementation of the Fibonacci sequence
        print("n is {}".format(n))
        if n < 2:
            return n
        return fibonacci(n - 2) + fibonacci(n - 1)


    if __name__ == '__main__':
        fibonacci(5)

    # n is 5
    # n is 3
    # n is 1
    # n is 2
    # n is 0
    # n is 1
    # n is 4
    # n is 2
    # n is 0
    # n is 1
    # n is 3
    # n is 1
    # n is 2
    # n is 0
    # n is 1

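The output shows a lot of repeated computation. The larger n is, the more work is repeated and the longer the program takes. With n = 40 the run already takes a long time (about 46 seconds in the run below):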

    import time


    def fibonacci(n):
        # Recursive implementation of the Fibonacci sequence
        if n < 2:
            return n
        return fibonacci(n - 2) + fibonacci(n - 1)


    if __name__ == '__main__':
        print("Start: {}".format(time.time()))
        print("Fibonacci(40) = {}".format(fibonacci(40)))
        print("End: {}".format(time.time()))

    # Start: 1594197671.6210408
    # Fibonacci(40) = 102334155
    # End: 1594197717.8520994
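To put a number on the repetition, here is a minimal sketch that counts calls instead of printing them. The `calls` counter is a hypothetical helper added for illustration; the recursion itself is the same as above.

```python
from collections import Counter

calls = Counter()  # hypothetical helper: how many times each n is evaluated


def fibonacci(n):
    # Same naive recursion as above, with a counter instead of print
    calls[n] += 1
    if n < 2:
        return n
    return fibonacci(n - 2) + fibonacci(n - 1)


if __name__ == '__main__':
    fibonacci(20)
    print("total calls:", sum(calls.values()))
    print("calls with n = 1:", calls[1])
```

For n = 5 the total is 15, which matches the 15 lines printed in the first example. The count grows roughly as fast as the Fibonacci numbers themselves, which is why n = 40 is so slow.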

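II. @functools.lru_cache

1. Usage

@functools.lru_cache is a decorator. A decorator adds extra behavior to existing code without modifying it, for example logging or timing a call. The fibonacci example below shows how lru_cache is applied: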
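As a brief aside on the decorator idea itself, here is a minimal sketch of a timing decorator. The names `timed` and `total` are hypothetical and chosen only for illustration; `functools.wraps` and `time.perf_counter` are standard library calls.

```python
import functools
import time


def timed(func):
    """Hypothetical decorator: report how long each call to func takes."""
    @functools.wraps(func)  # keep func's name and docstring on the wrapper
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = func(*args, **kwargs)
        elapsed = time.perf_counter() - start
        print("{} took {:.6f} s".format(func.__name__, elapsed))
        return result
    return wrapper


@timed
def total(n):
    """Sum the integers from 0 to n - 1."""
    return sum(range(n))


if __name__ == '__main__':
    print(total(1000))
```

The body of `total` is untouched; the timing behavior comes entirely from the wrapper. lru_cache follows the same pattern, except that its wrapper consults a cache before calling the function.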

    import functools


    @functools.lru_cache(100)
    def fibonacci(n):
        # Recursive implementation of the Fibonacci sequence
        print("n is {}".format(n))
        if n < 2:
            return n
        return fibonacci(n - 2) + fibonacci(n - 1)


    if __name__ == '__main__':
        fibonacci(5)

    # n is 5
    # n is 3
    # n is 1
    # n is 2
    # n is 0
    # n is 4

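The output shows that each value from 0 to 5 is computed only once, with no repeated computation. How long does the program take when n = 40? The code is as follows: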

    import time
    import functools


    @functools.lru_cache(100)
    def fibonacci(n):
        # Recursive implementation of the Fibonacci sequence
        if n < 2:
            return n
        return fibonacci(n - 2) + fibonacci(n - 1)


    if __name__ == '__main__':
        print("Start: {}".format(time.time()))
        print("Fibonacci(40) = {}".format(fibonacci(40)))
        print("End: {}".format(time.time()))

    # Start: 1594197813.2185402
    # Fibonacci(40) = 102334155
    # End: 1594197813.2185402

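The result shows that without the repeated computation the running time drops dramatically: the start and end timestamps are identical at the precision printed.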
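The saving can also be read off the cache statistics. The sketch below uses `cache_info()` and `cache_clear()`, the public API mentioned in the lru_cache docstring, on the same cached function.

```python
import functools


@functools.lru_cache(100)
def fibonacci(n):
    # Recursive implementation of the Fibonacci sequence
    if n < 2:
        return n
    return fibonacci(n - 2) + fibonacci(n - 1)


if __name__ == '__main__':
    print(fibonacci(40))
    # named tuple with the fields hits, misses, maxsize, currsize
    print(fibonacci.cache_info())
    fibonacci.cache_clear()  # empties the cache and resets the counters
    print(fibonacci.cache_info())
```

Starting from an empty cache, fibonacci(40) records 41 misses, one for each distinct n from 0 to 40, and every other call is a hit.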

2. Source code walkthrough

In PyCharm, clicking through lru_cache opens its source, shown below:

    def lru_cache(maxsize=128, typed=False):
        """Least-recently-used cache decorator.

        If *maxsize* is set to None, the LRU features are disabled and the cache
        can grow without bound.

        If *typed* is True, arguments of different types will be cached separately.
        For example, f(3.0) and f(3) will be treated as distinct calls with
        distinct results.

        Arguments to the cached function must be hashable.

        View the cache statistics named tuple (hits, misses, maxsize, currsize)
        with f.cache_info().  Clear the cache and statistics with f.cache_clear().
        Access the underlying function with f.__wrapped__.

        See:  http://en.wikipedia.org/wiki/Cache_algorithms#Least_Recently_Used

        """

        # Users should only access the lru_cache through its public API:
        #       cache_info, cache_clear, and f.__wrapped__
        # The internals of the lru_cache are encapsulated for thread safety and
        # to allow the implementation to change (including a possible C version).

        # Early detection of an erroneous call to @lru_cache without any arguments
        # resulting in the inner function being passed to maxsize instead of an
        # integer or None.
        if maxsize is not None and not isinstance(maxsize, int):
            raise TypeError('Expected maxsize to be an integer or None')

        def decorating_function(user_function):
            wrapper = _lru_cache_wrapper(user_function, maxsize, typed, _CacheInfo)
            return update_wrapper(wrapper, user_function)

        return decorating_function

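The first line of the docstring states that this is an LRU cache decorator ("Least-recently-used cache decorator"). If maxsize is set to None, the LRU features are disabled and the cache can grow without bound. If typed is set to True, arguments of different types are cached separately; for example, f(3.0) and f(3) are treated as distinct calls with separately cached results.

Turning to the code: maxsize must be either None or an int, otherwise a TypeError is raised. The decorating function decorating_function then calls two functions, _lru_cache_wrapper and update_wrapper, and the main logic lives in _lru_cache_wrapper(), whose source is as follows: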
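The effect of typed can be observed through cache_info(). The following is a minimal sketch; `add` and `add_typed` are hypothetical functions. A two-argument function is used on the assumption that CPython may already cache a lone int or str argument separately even when typed is false.

```python
import functools


@functools.lru_cache(maxsize=None)
def add(a, b):
    return a + b


@functools.lru_cache(maxsize=None, typed=True)
def add_typed(a, b):
    return a + b


if __name__ == '__main__':
    add(3, 1)
    add(3.0, 1)        # 3 == 3.0 with equal hashes: served from the cache
    print(add.cache_info())

    add_typed(3, 1)
    add_typed(3.0, 1)  # int and float are cached as separate entries
    print(add_typed.cache_info())
```

With typed left at False the second call is a hit and returns the result cached for the int arguments; with typed=True both calls are misses and each gets its own cache entry.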

    def _lru_cache_wrapper(user_function, maxsize, typed, _CacheInfo):
        # Constants shared by all lru cache instances:
        sentinel = object()          # unique object used to signal cache misses
        make_key = _make_key         # build a key from the function arguments
        PREV, NEXT, KEY, RESULT = 0, 1, 2, 3   # names for the link fields

        cache = {}
        hits = misses = 0
        full = False
        cache_get = cache.get    # bound method to lookup a key or return None
        cache_len = cache.__len__  # get cache size without calling len()
        lock = RLock()           # because linkedlist updates aren't threadsafe
        root = []                # root of the circular doubly linked list
        root[:] = [root, root, None, None]     # initialize by pointing to self

        if maxsize == 0:

            def wrapper(*args, **kwds):
                # No caching -- just a statistics update after a successful call
                nonlocal misses
                result = user_function(*args, **kwds)
                misses += 1
                return result

        elif maxsize is None:

            def wrapper(*args, **kwds):
                # Simple caching without ordering or size limit
                nonlocal hits, misses
                key = make_key(args, kwds, typed)
                result = cache_get(key, sentinel)
                if result is not sentinel:
                    hits += 1
                    return result
                result = user_function(*args, **kwds)
                cache[key] = result
                misses += 1
                return result

        else:

            def wrapper(*args, **kwds):
                # Size limited caching that tracks accesses by recency
                nonlocal root, hits, misses, full
                key = make_key(args, kwds, typed)
                with lock:
                    link = cache_get(key)
                    if link is not None:
                        # Move the link to the front of the circular queue
                        link_prev, link_next, _key, result = link
                        link_prev[NEXT] = link_next
                        link_next[PREV] = link_prev
                        last = root[PREV]
                        last[NEXT] = root[PREV] = link
                        link[PREV] = last
                        link[NEXT] = root
                        hits += 1
                        return result
                result = user_function(*args, **kwds)
                with lock:
                    if key in cache:
                        # Getting here means that this same key was added to the
                        # cache while the lock was released.  Since the link
                        # update is already done, we need only return the
                        # computed result and update the count of misses.
                        pass
                    elif full:
                        # Use the old root to store the new key and result.
                        oldroot = root
                        oldroot[KEY] = key
                        oldroot[RESULT] = result
                        # Empty the oldest link and make it the new root.
                        # Keep a reference to the old key and old result to
                        # prevent their ref counts from going to zero during the
                        # update. That will prevent potentially arbitrary object
                        # clean-up code (i.e. __del__) from running while we're
                        # still adjusting the links.
                        root = oldroot[NEXT]
                        oldkey = root[KEY]
                        oldresult = root[RESULT]
                        root[KEY] = root[RESULT] = None
                        # Now update the cache dictionary.
                        del cache[oldkey]
                        # Save the potentially reentrant cache[key] assignment
                        # for last, after the root and links have been put in
                        # a consistent state.
                        cache[key] = oldroot
                    else:
                        # Put result in a new link at the front of the queue.
                        last = root[PREV]
                        link = [last, root, key, result]
                        last[NEXT] = root[PREV] = cache[key] = link
                        # Use the cache_len bound method instead of the len() function
                        # which could potentially be wrapped in an lru_cache itself.
                        full = (cache_len() >= maxsize)
                    misses += 1
                return result

        def cache_info():
            """Report cache statistics"""
            with lock:
                return _CacheInfo(hits, misses, maxsize, cache_len())

        def cache_clear():
            """Clear the cache and cache statistics"""
            nonlocal hits, misses, full
            with lock:
                cache.clear()
                root[:] = [root, root, None, None]
                hits = misses = 0
                full = False

        wrapper.cache_info = cache_info
        wrapper.cache_clear = cache_clear
        return wrapper

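As we can see, a different wrapper function is returned depending on the value of maxsize. When maxsize is 0, nothing is cached: the wrapper just calls the function and increments the misses counter on every call. When maxsize is None, the wrapper looks in the cache first; on a hit it returns the cached result, otherwise it runs the function and stores the result in the cache. When maxsize is a positive integer, at most maxsize results are cached, and a circular doubly linked list anchored at root tracks how recently each entry was used. It is initialized as follows:

    root = []                # root of the circular doubly linked list
    root[:] = [root, root, None, None]     # initialize by pointing to self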

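Here too, a call consults the cache first. On a hit, the entry's link is moved to the most recently used position next to root and the result is returned. On a miss, the function is called and the wrapper then checks whether the cache is full: if so, the least recently used entry is evicted, and root and the cache dictionary are updated to hold the new result.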
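The list-based links can be tried out in isolation. The following is a minimal sketch that reuses the field layout and pointer updates from the source above; the helper names `new_root`, `push_recent`, `touch` and `keys_oldest_first` are hypothetical and do not exist in functools.

```python
PREV, NEXT, KEY, RESULT = 0, 1, 2, 3  # same field layout as the source above


def new_root():
    root = []
    root[:] = [root, root, None, None]  # empty ring: root points to itself
    return root


def push_recent(root, key, result):
    """Insert a new link just before root, the most recently used position."""
    last = root[PREV]
    link = [last, root, key, result]
    last[NEXT] = root[PREV] = link
    return link


def touch(root, link):
    """Move an existing link to the most recently used position."""
    link_prev, link_next = link[PREV], link[NEXT]
    link_prev[NEXT] = link_next
    link_next[PREV] = link_prev
    last = root[PREV]
    last[NEXT] = root[PREV] = link
    link[PREV] = last
    link[NEXT] = root


def keys_oldest_first(root):
    """Walk the ring from the oldest link (root[NEXT]) to the newest."""
    keys = []
    link = root[NEXT]
    while link is not root:
        keys.append(link[KEY])
        link = link[NEXT]
    return keys


if __name__ == '__main__':
    root = new_root()
    links = {k: push_recent(root, k, k * k) for k in (1, 2, 3)}
    print(keys_oldest_first(root))
    touch(root, links[1])
    print(keys_oldest_first(root))
```

Because the ring is circular, the oldest entry is always root[NEXT] and the newest is always root[PREV], so both eviction and promotion are a handful of pointer assignments with no traversal.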

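III. LRU Cache

1. Basics

A computer's cache has limited capacity, so when it fills up some entries must be removed to make room for new ones. The question is which entries to remove.

LRU is one commonly used policy. LRU stands for least recently used. The policy assumes that recently used data is likely to be useful again, while data that has not been used for a long time is probably no longer needed, so when the cache is full it evicts the entries that have gone unused the longest.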
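The policy can be watched in action with functools.lru_cache itself. In this minimal sketch, `square` and the `computed` log are hypothetical names used only for illustration.

```python
import functools

computed = []  # hypothetical log of the arguments that were actually computed


@functools.lru_cache(maxsize=2)
def square(n):
    computed.append(n)
    return n * n


if __name__ == '__main__':
    for n in (1, 2, 1, 3, 1, 2):
        square(n)
    print(computed)
    print(square.cache_info())
```

With room for only two entries, the call with 3 evicts 2 rather than 1, because 1 was used more recently; the final call with 2 therefore has to be computed again.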

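2. Custom implementation

Implementing an LRU cache takes two data structures: a doubly linked list and a hash table. The doubly linked list records the order in which entries were used, so that the least recently used one can be evicted; the hash table records where each element is, so that an element can be found in O(1) time.

Two operations then need to be implemented, get and put:

1) get: look up the element's position in the hash table by key. If the key is absent, return None; otherwise fetch the element from the linked list and move it to the tail of the list;

2) put: first check whether the key already exists in the hash table. If it does, update the value and move the element to the tail of the list. If it does not, this is a new element: when the cache has reached its maximum capacity, first evict the least recently used entry, which is the head of the list, then append the new element to the tail and record it in the hash table; when there is still room, simply append it to the tail and record it.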
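Before building the linked list by hand, the two operations above can be sketched compactly with collections.OrderedDict, which combines a hash table with an ordering. This is a swapped-in technique for illustration, not the hand-written approach this article develops; the class name `OrderedDictLRU` is hypothetical.

```python
from collections import OrderedDict


class OrderedDictLRU:
    """Hypothetical compact LRU cache; most recently used keys sit at the end."""

    def __init__(self, size):
        if size < 1:
            raise ValueError("size must be at least 1")
        self.size = size
        self._data = OrderedDict()

    def get(self, key):
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        elif len(self._data) >= self.size:
            self._data.popitem(last=False)  # drop the least recently used
        self._data[key] = value

    def keys(self):
        """Keys ordered from least to most recently used."""
        return list(self._data)


if __name__ == '__main__':
    cache = OrderedDictLRU(2)
    cache.put("a", 1)
    cache.put("b", 2)
    cache.get("a")
    cache.put("c", 3)
    print(cache.keys())
```

Here the end of the OrderedDict plays the role of the list tail and the front plays the role of the list head.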

First, implement the doubly linked list:

    # Node of the list
    class Node:
        def __init__(self, val):
            self.val = val
            self.prev = None
            self.next = None

        def __str__(self):
            return "The value is " + str(self.val)


    # Doubly linked list
    class DoubleList:
        def __init__(self):
            self.head = None
            self.tail = None

        def is_empty(self):
            """
            returns true if the list is empty, false otherwise
            :return:
            """
            return self.head is None

        def append(self, value):
            """
            append element after the list
            :param value: the value of node
            :return:
            """
            node = Node(value)
            if self.is_empty():
                self.head = node
                self.tail = node
                return
            # link the new node after the current tail, no traversal needed
            node.prev = self.tail
            self.tail.next = node
            self.tail = node

        def remove(self, value):
            """
            if value in the list, remove the first node holding it
            :param value: the value of node
            :return:
            """
            cur = self.head
            while cur:
                if cur.val == value:
                    # fix the forward pointer (or the head)
                    if cur.prev:
                        cur.prev.next = cur.next
                    else:
                        self.head = cur.next
                    # fix the backward pointer (or the tail)
                    if cur.next:
                        cur.next.prev = cur.prev
                    else:
                        self.tail = cur.prev
                    return
                cur = cur.next

        def traverse(self):
            """
            iterate through the list
            :return:
            """
            cur = self.head
            index = 1
            while cur:
                print("Index: {}. {}".format(index, cur))
                cur = cur.next
                index += 1

        def __len__(self):
            count = 0
            cur = self.head
            while cur:
                count += 1
                cur = cur.next
            return count

        def __str__(self):
            vals = []
            cur = self.head
            while cur:
                vals.append(str(cur.val))
                cur = cur.next
            return "->".join(vals)

These methods (appending a node, removing a node, getting the length, and so on) are enough for the doubly linked list we need. The last step is the LRU cache itself, whose main methods are get (fetch data) and put (add data). The custom LRU cache class is as follows:

    # LRU Cache
    class LRU:
        def __init__(self, size):
            if size < 1:
                raise ValueError("size must be at least 1")
            self.size = size
            self._list = DoubleList()
            self._cache = dict()

        def _set_recent(self, node):
            """
            set the node to most recently used
            :param node: node
            :return:
            """
            lst = self._list
            # when the node is the tail of the list
            if node is lst.tail:
                return
            # unlink the node from its current position
            if node is lst.head:
                lst.head = node.next
                lst.head.prev = None
            else:
                node.prev.next = node.next
                node.next.prev = node.prev
            # set node to the tail of the list
            node.prev = lst.tail
            node.next = None
            lst.tail.next = node
            lst.tail = node

        def get(self, key):
            """
            get value of the key
            :param key: key
            :return: the value, or None if the key is not cached
            """
            node = self._cache.get(key)
            if node is None:
                return None
            self._set_recent(node)
            return node.val

        def put(self, key, value):
            """
            set value of the key and add to the cache
            :param key: key
            :param value: value
            :return:
            """
            node = self._cache.get(key)
            if node is not None:
                # existing key: update the value and mark as most recently used
                node.val = value
                self._set_recent(node)
                return
            if len(self._cache) >= self.size:
                # when the quantity reaches the maximum, delete the head node
                oldest = self._list.head
                self._list.head = oldest.next
                if self._list.head is None:
                    self._list.tail = None
                else:
                    self._list.head.prev = None
                del self._cache[oldest.key]
            self._list.append(value)
            node = self._list.tail
            # remember the key on the node so eviction needs no scan of the cache
            node.key = key
            # add to cache
            self._cache[key] = node

        def show(self):
            """
            show data of the list
            :return:
            """
            return "The list is: {}".format(self._list)

Here is the test code:

    if __name__ == '__main__':
        lru = LRU(8)
        for i in range(10):
            lru.put(str(i), i)
        print(lru.show())
        for i in range(10):
            if i % 3 == 0:
                print("Get {}: {}".format(i, lru.get(str(i))))
        print(lru.show())
        lru.put("", 22)
        lru.put("", 44)
        lru.put("", 66)
        print(lru.show())

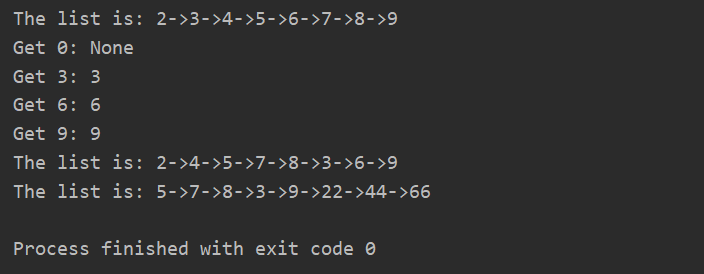

最后是运行结果的截图:

- 当插入数据时,因为最大容量为8,而插入了10个数据,那么最开始添加进去的0和1就会被删掉;

- 当获取数据时,不存在则返回None,存在则返回对应的值,并将该节点移到链表的尾部;

- 当更新数据时,会将对应节点的值进行更新,并将节点移到链表的尾部。

完整代码已上传到 GitHub!
