Convolutional Neural Networks, Week 2 Programming Assignment 2 (Residual Networks)

1. Residual Networks

Residual networks were introduced to make very deep networks easier to train.

In this assignment, you will:

Implement the basic building blocks of ResNets.

Put together these building blocks to implement and train a state-of-the-art neural network for image classification.

This assignment will be done in Keras.

1.1 Importing libraries

import numpy as np

from keras import layers

from keras.layers import Input, Add, Dense, Activation, ZeroPadding2D, BatchNormalization, Flatten, Conv2D, AveragePooling2D, MaxPooling2D, GlobalMaxPooling2D

from keras.models import Model, load_model

from keras.preprocessing import image

from keras.utils import layer_utils

from keras.utils.data_utils import get_file

from keras.applications.imagenet_utils import preprocess_input

import pydot

from IPython.display import SVG

from keras.utils.vis_utils import model_to_dot

from keras.utils import plot_model

from resnets_utils import *

from keras.initializers import glorot_uniform

import scipy.misc

from matplotlib.pyplot import imshow

%matplotlib inline

import tensorflow as tf  # needed by the test cells below (tf.Session, tf.placeholder)

import keras.backend as K

K.set_image_data_format('channels_last')

K.set_learning_phase(1)

2. The problem of very deep neural networks

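The main benefit of a very deep network is that it can represent very complex functions. It can also learn features at many different levels of abstraction, from edges (in the shallower layers) to very complex features (in the deeper layers).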

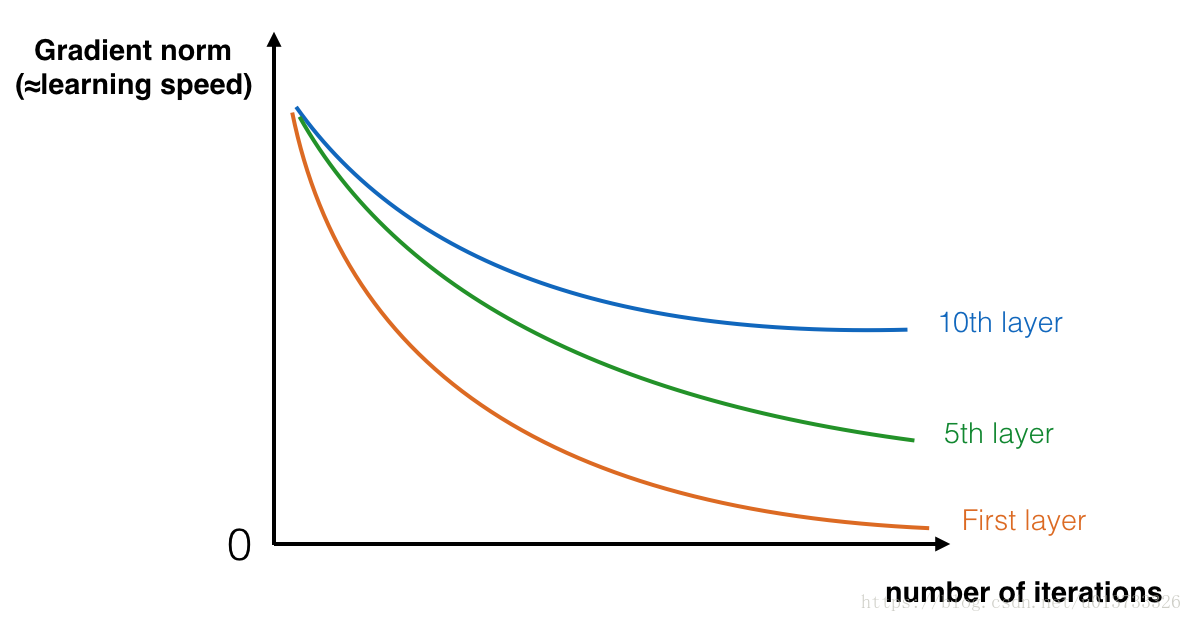

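However, very deep neural networks suffer from vanishing gradients: the gradient signal decays quickly on its way back through the network, which makes gradient descent very slow.

More specifically, as you backpropagate from the final layer to the first layer, you multiply by the weight matrix at each step, so the gradient can shrink exponentially quickly towards zero (or, in rare cases, grow exponentially quickly and "explode").

During training, you may therefore see the magnitude (or norm) of the gradient for the earlier layers drop to zero very rapidly, as shown in the figure:

In the first few layers, the speed of learning falls off very quickly as the number of iterations increases.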
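The exponential shrinkage described above can be reproduced with a toy calculation. The following is only a sketch under simplifying assumptions: the network is a purely linear stack (activations are ignored), the weights are random Gaussian matrices, and the function name `backprop_gradient_norms` and its parameters are hypothetical, not part of the assignment.

```python
import numpy as np

def backprop_gradient_norms(num_layers=50, width=64, weight_scale=0.5, seed=0):
    """Return the gradient norm after passing back through each layer.

    Toy model: a linear stack, so the backward pass simply multiplies
    the gradient by W^T at every layer.
    """
    rng = np.random.default_rng(seed)
    grad = rng.standard_normal(width)
    norms = [np.linalg.norm(grad)]
    for _ in range(num_layers):
        # Entries ~ N(0, weight_scale^2 / width), so each multiplication
        # rescales the gradient norm by roughly weight_scale.
        W = rng.standard_normal((width, width)) * weight_scale / np.sqrt(width)
        grad = W.T @ grad
        norms.append(np.linalg.norm(grad))
    return norms

norms = backprop_gradient_norms()
print("gradient norm at the last layer :", norms[0])
print("gradient norm at the first layer:", norms[-1])
```

With `weight_scale` below 1 the norm collapses towards zero after a few dozen layers; with a value above 1 (for example `weight_scale=2.0`) the same loop illustrates the exploding case.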

3. Building a Residual Network

In ResNets, a "shortcut" or a "skip connection" allows the gradient to be directly backpropagated to earlier layers:

**Figure 2** : A ResNet block showing a **skip-connection**

The image on the right shows the main path with a shortcut added. By stacking these ResNet blocks on top of each other, you can form a very deep network.
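To make the idea of a shortcut concrete, here is a minimal NumPy sketch. It is an illustration only: it uses small dense layers instead of convolutions, and the names `plain_block` and `residual_block` are hypothetical. It shows that when the main path contributes nothing (all-zero weights), a plain block wipes out the signal, whereas a block with a shortcut still passes a non-negative input through unchanged.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def plain_block(x, W1, W2):
    """Two dense layers without a shortcut: relu(W2 @ relu(W1 @ x))."""
    return relu(W2 @ relu(W1 @ x))

def residual_block(x, W1, W2):
    """Same main path, but the input x is added back before the final ReLU."""
    main_path = W2 @ relu(W1 @ x)
    return relu(main_path + x)

x = np.array([1.0, 2.0, 3.0])
W_zero = np.zeros((3, 3))

print(plain_block(x, W_zero, W_zero))     # [0. 0. 0.]
print(residual_block(x, W_zero, W_zero))  # [1. 2. 3.]
```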

There are two main types of ResNet blocks, depending mainly on whether the input and output dimensions are the same:

3.1 The identity block

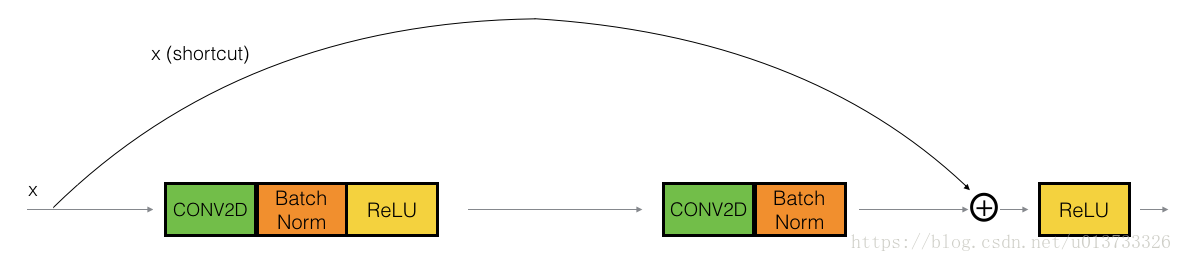

The identity block applies when the input activation \(a^{[l]}\) and the output activation \(a^{[l+2]}\) have the same dimensions.

**Figure 3** : **Identity block.** Skip connection "skips over" 2 layers.

In the diagram above, each step on the main path still includes a CONV2D and a ReLU.

To speed up training, a BatchNorm step is added to each step as well.
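As a rough illustration of what normalizing over the channels axis means for channels-last data, the sketch below computes one mean and one variance per channel over the batch, height and width axes. It covers training-time batch statistics only and ignores the moving averages a real layer keeps; the function name `batchnorm_channels_last` is hypothetical, and `eps=1e-3` is chosen to mirror the Keras default.

```python
import numpy as np

def batchnorm_channels_last(X, gamma, beta, eps=1e-3):
    """Normalize a (m, n_H, n_W, n_C) batch per channel using batch statistics."""
    mean = X.mean(axis=(0, 1, 2), keepdims=True)   # one mean per channel
    var = X.var(axis=(0, 1, 2), keepdims=True)     # one variance per channel
    X_hat = (X - mean) / np.sqrt(var + eps)
    return gamma * X_hat + beta                    # learned scale and shift

rng = np.random.default_rng(0)
X = rng.normal(loc=5.0, scale=3.0, size=(8, 4, 4, 6))
out = batchnorm_channels_last(X, gamma=np.ones(6), beta=np.zeros(6))
print(out.mean(axis=(0, 1, 2)))  # close to 0 for every channel
print(out.std(axis=(0, 1, 2)))   # close to 1 for every channel
```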

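In this exercise, you will actually implement a version in which the skip connection skips over 3 hidden layers rather than 2, as in the figure below: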

**Figure 4** : **Identity block.** Skip connection "skips over" 3 layers.

Here are the individual steps.

First component of main path:

The first CONV2D has \(F_1\) filters of shape (1,1) and a stride of (1,1). Its padding is "valid" (no padding) and its name should be conv_name_base + '2a'. Use 0 as the seed for the random initialization.

The first BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2a'.

Then apply the ReLU activation function. It has no name and no hyperparameters.

Second component of main path:

The second CONV2D has \(F_2\) filters of shape \((f,f)\) and a stride of (1,1). Its padding is "same" and its name should be conv_name_base + '2b'. Use 0 as the seed for the random initialization.

The second BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2b'.

Then apply the ReLU activation function. It has no name and no hyperparameters.

Third component of main path:

The third CONV2D has \(F_3\) filters of shape (1,1) and a stride of (1,1). Its padding is "valid" (no padding) and its name should be conv_name_base + '2c'. Use 0 as the seed for the random initialization.

The third BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2c'. Note that there is no ReLU activation function in this component.

Final step:

Add the shortcut value to the output of the main path.

Then apply the ReLU activation function. It has no name and no hyperparameters.

Exercise: Implement the ResNet identity block.

To implement Conv2D: see reference

To implement BatchNorm: see reference

For the activation, use: Activation('relu')(X)

To add the value passed forward by the shortcut: see reference

# GRADED FUNCTION: identity_block

def identity_block(X, f, filters, stage, block):
    """
    Implementation of the identity block as defined in Figure 3

    Arguments:
    X -- input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f -- integer, specifying the shape of the middle CONV's window for the main path
    filters -- python list of integers, defining the number of filters in the CONV layers of the main path
    stage -- integer, used to name the layers, depending on their position in the network
    block -- string/character, used to name the layers, depending on their position in the network

    Returns:
    X -- output of the identity block, tensor of shape (n_H, n_W, n_C)
    """

    # defining name basis
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # Retrieve Filters
    F1, F2, F3 = filters

    # Save the input value. You'll need this later to add back to the main path.
    X_shortcut = X

    # First component of main path
    X = Conv2D(filters = F1, kernel_size = (1, 1), strides = (1,1), padding = 'valid', name = conv_name_base + '2a', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2a')(X)
    X = Activation('relu')(X)

    ### START CODE HERE ###

    # Second component of main path (≈3 lines)
    X = Conv2D(filters = F2, kernel_size = (f, f), strides = (1,1), padding = 'same', name = conv_name_base + '2b', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2b')(X)
    X = Activation('relu')(X)

    # Third component of main path (≈2 lines)
    X = Conv2D(filters = F3, kernel_size = (1, 1), strides = (1,1), padding = 'valid', name = conv_name_base + '2c', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2c')(X)

    # Final step: Add shortcut value to main path, and pass it through a RELU activation (≈2 lines)
    X = Add()([X, X_shortcut])
    X = Activation('relu')(X)

    ### END CODE HERE ###

    return X

Test:

tf.reset_default_graph()

with tf.Session() as test:
    np.random.seed(1)
    A_prev = tf.placeholder("float", [3, 4, 4, 6])
    X = np.random.randn(3, 4, 4, 6)
    A = identity_block(A_prev, f = 2, filters = [2, 4, 6], stage = 1, block = 'a')
    test.run(tf.global_variables_initializer())
    out = test.run([A], feed_dict={A_prev: X, K.learning_phase(): 0})
    print("out = " + str(out[0][1][1][0]))

out = [0.94822985 0. 1.1610144 2.747859 0. 1.36677 ]

3.2 The convolutional block

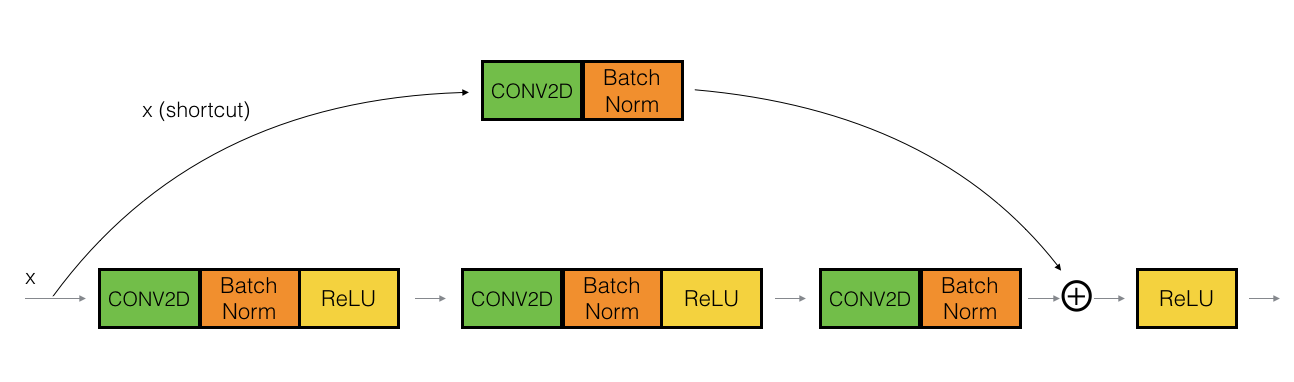

The ResNet "convolutional block" is the other type of block. You can use it when the input and output dimensions do not match. Unlike the identity block, it has a CONV2D layer in the shortcut path, as shown in the figure:

**Figure 4** : **Convolutional block**

The CONV2D layer in the shortcut path is used to resize the input \(x\) to a different dimension, so that the dimensions match up in the final addition needed to add the shortcut value back to the main path. This layer plays the role of the projection matrix \(W_s\).

For example, to reduce the height and width of the activations by a factor of 2, you can use a 1x1 convolution with a stride of 2.

The CONV2D layer on the shortcut path does not use any non-linear activation function. Its main role is to apply a learned linear function that reduces the dimension of the input, so that the dimensions match up for the later addition step.
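The effect of a strided 1x1 convolution on the shortcut can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the Keras implementation: the helper `conv1x1` and its argument layout are hypothetical, and "valid" padding is assumed.

```python
import numpy as np

def conv1x1(X, W, b, stride=1):
    """1x1 convolution on a channels-last batch.

    X: (m, n_H, n_W, n_C_in), W: (n_C_in, n_C_out), b: (n_C_out,)
    """
    # A 1x1 window with stride s visits every s-th pixel ...
    X_strided = X[:, ::stride, ::stride, :]
    # ... and applies the same linear map to the channel vector at each visited pixel.
    return X_strided @ W + b

rng = np.random.default_rng(0)
X = rng.standard_normal((3, 4, 4, 6))
W = rng.standard_normal((6, 12))
b = np.zeros(12)
print(conv1x1(X, W, b, stride=2).shape)  # (3, 2, 2, 12)
```

The height and width are halved by the stride, while the number of channels is set by the number of filters, which is how the shortcut is brought to the same shape as the main path. No activation is applied, so the mapping stays linear.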

The details of the convolutional block are as follows.

First component of main path:

The first CONV2D has \(F_1\) filters of shape (1,1) and a stride of (s,s). Its padding is "valid" (no padding) and its name should be conv_name_base + '2a'.

The first BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2a'.

Then apply the ReLU activation function. It has no name and no hyperparameters.

Second component of main path:

The second CONV2D has \(F_2\) filters of shape (f,f) and a stride of (1,1). Its padding is "same" and its name should be conv_name_base + '2b'.

The second BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2b'.

Then apply the ReLU activation function. It has no name and no hyperparameters.

Third component of main path:

The third CONV2D has \(F_3\) filters of shape (1,1) and a stride of (1,1). Its padding is "valid" (no padding) and its name should be conv_name_base + '2c'.

The third BatchNorm normalizes the channels axis. Its name should be bn_name_base + '2c'. Note that there is no ReLU activation function in this component.

Shortcut path:

The CONV2D has \(F_3\) filters of shape (1,1) and a stride of (s,s). Its padding is "valid" (no padding) and its name should be conv_name_base + '1'.

The BatchNorm normalizes the channels axis. Its name should be bn_name_base + '1'. Note that there is no ReLU activation function on the shortcut path.

Final step:

Add the shortcut value to the output of the main path.

Then apply the ReLU activation function. It has no name and no hyperparameters.

Exercise: Implement the convolutional block.

To implement Conv2D: see reference

To implement BatchNorm: see reference

For the activation, use: Activation('relu')(X)

To add the value passed forward by the shortcut: see reference

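Conv2D(filters, kernel_size, strides=(1, 1), padding='valid', ...):

filters: the number of convolution kernels (that is, the number of output channels).

kernel_size: a single integer or a list/tuple of two integers giving the height and width of the convolution window. A single integer means the same size along both spatial dimensions.

strides: a single integer or a list/tuple of two integers giving the strides of the convolution. A single integer means the same stride along both spatial dimensions. Any stride other than 1 is incompatible with any dilation_rate other than 1.

padding: the zero-padding strategy, either "valid" or "same". "valid" applies no padding, so the window only visits positions that lie fully inside the input. "same" pads the input so that, with a stride of 1, the output has the same height and width as the input.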
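The interaction between kernel_size, strides and padding can be checked with the usual output-size formulas. The helper below is a hypothetical sketch (it is not a Keras function) that assumes TensorFlow-style "valid" and "same" semantics along one spatial dimension; the printed values agree with the shapes reported by model.summary() at the end of this post.

```python
import math

def conv_output_size(n, f, s=1, padding="valid"):
    """Spatial output size of a convolution or pooling window along one dimension."""
    if padding == "valid":
        return (n - f) // s + 1
    if padding == "same":
        return math.ceil(n / s)
    raise ValueError("padding must be 'valid' or 'same'")

n = 64 + 2 * 3                      # 64x64 input after ZeroPadding2D((3, 3))
n = conv_output_size(n, f=7, s=2)   # conv1: 7x7 window, stride 2
print(n)                            # 32
n = conv_output_size(n, f=3, s=2)   # max pooling: 3x3 window, stride 2
print(n)                            # 15
n = conv_output_size(n, f=1, s=2)   # 1x1 convolution with stride 2
print(n)                            # 8
```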

# GRADED FUNCTION: convolutional_block

def convolutional_block(X, f, filters, stage, block, s = 2):
    """
    Implementation of the convolutional block as defined in Figure 4

    Arguments:
    X -- input tensor of shape (m, n_H_prev, n_W_prev, n_C_prev)
    f -- integer, specifying the shape of the middle CONV's window for the main path
    filters -- python list of integers, defining the number of filters in the CONV layers of the main path
    stage -- integer, used to name the layers, depending on their position in the network
    block -- string/character, used to name the layers, depending on their position in the network
    s -- Integer, specifying the stride to be used

    Returns:
    X -- output of the convolutional block, tensor of shape (n_H, n_W, n_C)
    """

    # defining name basis
    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # Retrieve Filters
    F1, F2, F3 = filters

    # Save the input value
    X_shortcut = X

    ##### MAIN PATH #####

    # First component of main path
    X = Conv2D(F1, (1, 1), strides = (s,s), name = conv_name_base + '2a', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2a')(X)
    X = Activation('relu')(X)

    ### START CODE HERE ###

    # Second component of main path (≈3 lines)
    X = Conv2D(F2, (f, f), strides = (1,1), padding = 'same', name = conv_name_base + '2b', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2b')(X)
    X = Activation('relu')(X)

    # Third component of main path (≈2 lines)
    X = Conv2D(F3, (1, 1), strides = (1,1), padding = 'valid', name = conv_name_base + '2c', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = bn_name_base + '2c')(X)

    ##### SHORTCUT PATH #### (≈2 lines)
    # Make X_shortcut match the shape of X after the third component.
    # Use F3 filters of shape 1x1 with stride (s, s): the main path was already strided by (s, s) in its first component.
    X_shortcut = Conv2D(F3, (1, 1), strides = (s,s), name = conv_name_base + '1', kernel_initializer = glorot_uniform(seed=0))(X_shortcut)
    X_shortcut = BatchNormalization(axis = 3, name = bn_name_base + '1')(X_shortcut)

    # Final step: Add shortcut value to main path, and pass it through a RELU activation (≈2 lines)
    X = Add()([X, X_shortcut])
    X = Activation('relu')(X)

    ### END CODE HERE ###

    return X

Test:

tf.reset_default_graph()

with tf.Session() as test:
    np.random.seed(1)
    A_prev = tf.placeholder("float", [3, 4, 4, 6])
    X = np.random.randn(3, 4, 4, 6)
    A = convolutional_block(A_prev, f = 2, filters = [2, 4, 6], stage = 1, block = 'a')
    test.run(tf.global_variables_initializer())
    out = test.run([A], feed_dict={A_prev: X, K.learning_phase(): 0})
    print("out = " + str(out[0][1][1][0]))

out = [0.09018463 1.2348977 0.46822017 0.0367176 0. 0.65516603]

4. Building your first ResNet model (50 layers)

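The figure below describes the architecture of this network in detail. "ID BLOCK" stands for the identity block, and "ID BLOCK x3" means that three identity blocks are stacked together.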

**Figure 5** : **ResNet-50 model**

The details of this ResNet-50 model are:

Zero-padding pads the input with a pad of (3,3)

Stage 1:

The 2D Convolution has 64 filters of shape (7,7) and uses a stride of (2,2). Its name is "conv1".

BatchNorm is applied to the channels axis of the input.

MaxPooling uses a (3,3) window and a (2,2) stride.

Stage 2:

The convolutional block uses three sets of filters of size [64,64,256], "f" is 3, "s" is 1 and the block is "a".

The 2 identity blocks use three sets of filters of size [64,64,256], "f" is 3 and the blocks are "b" and "c".

Stage 3:

The convolutional block uses three sets of filters of size [128,128,512], "f" is 3, "s" is 2 and the block is "a".

The 3 identity blocks use three sets of filters of size [128,128,512], "f" is 3 and the blocks are "b", "c" and "d".

Stage 4:

The convolutional block uses three sets of filters of size [256, 256, 1024], "f" is 3, "s" is 2 and the block is "a".

The 5 identity blocks use three sets of filters of size [256, 256, 1024], "f" is 3 and the blocks are "b", "c", "d", "e" and "f".

Stage 5:

The convolutional block uses three sets of filters of size [512, 512, 2048], "f" is 3, "s" is 2 and the block is "a".

The 2 identity blocks use three sets of filters of size [512, 512, 2048], "f" is 3 and the blocks are "b" and "c".

The 2D Average Pooling uses a window of shape (2,2) and its name is "avg_pool".

The flatten layer has no hyperparameters and no name.

The Fully Connected (Dense) layer reduces its input to the number of classes using a softmax activation. Its name should be 'fc' + str(classes).
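The "50" in ResNet-50 can be recovered from the stage description above with a short count. This is a sketch under the usual counting convention, which is assumed here: only layers with weights on the main path are counted, so the CONV2D on the shortcut of each convolutional block and the BatchNorm layers are left out. The variable names are illustrative.

```python
# Residual blocks per stage: 1 convolutional block + N identity blocks
blocks_per_stage = {2: 1 + 2, 3: 1 + 3, 4: 1 + 5, 5: 1 + 2}
convs_per_block = 3          # main path only: branch2a, branch2b, branch2c

stem_convs = 1               # conv1 in stage 1
dense_layers = 1             # final fully connected layer
main_path_convs = convs_per_block * sum(blocks_per_stage.values())

total = stem_convs + main_path_convs + dense_layers
print(total)  # 50
```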

Exercise: Implement the ResNet with 50 layers described in the figure above.

References:

Average pooling: see reference

Conv2D: see reference

BatchNorm: see reference

Zero padding: see reference

Max pooling: see reference

Fully connected layer: see reference

Addition: see reference

# GRADED FUNCTION: ResNet50

def ResNet50(input_shape = (64, 64, 3), classes = 6):
    """
    Implementation of the popular ResNet50 with the following architecture:
    CONV2D -> BATCHNORM -> RELU -> MAXPOOL -> CONVBLOCK -> IDBLOCK*2 -> CONVBLOCK -> IDBLOCK*3
    -> CONVBLOCK -> IDBLOCK*5 -> CONVBLOCK -> IDBLOCK*2 -> AVGPOOL -> TOPLAYER

    Arguments:
    input_shape -- shape of the images of the dataset
    classes -- integer, number of classes

    Returns:
    model -- a Model() instance in Keras
    """

    # Define the input as a tensor with shape input_shape
    X_input = Input(input_shape)

    # Zero-Padding
    X = ZeroPadding2D((3, 3))(X_input)

    # Stage 1
    X = Conv2D(64, (7, 7), strides = (2, 2), name = 'conv1', kernel_initializer = glorot_uniform(seed=0))(X)
    X = BatchNormalization(axis = 3, name = 'bn_conv1')(X)
    X = Activation('relu')(X)
    X = MaxPooling2D((3, 3), strides=(2, 2))(X)

    # Stage 2 (f = 3, with 64, 64 and 256 filters respectively)
    X = convolutional_block(X, f = 3, filters = [64, 64, 256], stage = 2, block='a', s = 1)
    X = identity_block(X, 3, [64, 64, 256], stage=2, block='b')
    X = identity_block(X, 3, [64, 64, 256], stage=2, block='c')

    ### START CODE HERE ###

    # Stage 3 (≈4 lines)
    X = convolutional_block(X, f = 3, filters = [128, 128, 512], stage = 3, block = 'a', s = 2)
    X = identity_block(X, 3, filters = [128, 128, 512], stage = 3, block = 'b')
    X = identity_block(X, 3, filters = [128, 128, 512], stage = 3, block = 'c')
    X = identity_block(X, 3, filters = [128, 128, 512], stage = 3, block = 'd')

    # Stage 4 (≈6 lines)
    X = convolutional_block(X, f = 3, filters = [256, 256, 1024], stage = 4, block = 'a', s = 2)
    X = identity_block(X, 3, filters = [256, 256, 1024], stage = 4, block = 'b')
    X = identity_block(X, 3, filters = [256, 256, 1024], stage = 4, block = 'c')
    X = identity_block(X, 3, filters = [256, 256, 1024], stage = 4, block = 'd')
    X = identity_block(X, 3, filters = [256, 256, 1024], stage = 4, block = 'e')
    X = identity_block(X, 3, filters = [256, 256, 1024], stage = 4, block = 'f')

    # Stage 5 (≈3 lines)
    X = convolutional_block(X, f = 3, filters = [512, 512, 2048], stage = 5, block='a', s = 2)
    X = identity_block(X, 3, [512, 512, 2048], stage=5, block='b')
    X = identity_block(X, 3, [512, 512, 2048], stage=5, block='c')

    # AVGPOOL (≈1 line). Use "X = AveragePooling2D(...)(X)"
    X = AveragePooling2D(pool_size=(2, 2), strides=None, padding='valid', data_format=None, name="avg_pool")(X)

    ### END CODE HERE ###

    # output layer
    X = Flatten()(X)
    X = Dense(classes, activation='softmax', name='fc' + str(classes), kernel_initializer = glorot_uniform(seed=0))(X)

    # Create model
    # from keras.layers import Lambda
    # my_concat = Lambda(lambda x: K.concatenate([x[0],x[1]],axis=-1))
    model = Model(inputs = X_input, outputs = X, name='ResNet50')

    return model

# Create the model
model = ResNet50(input_shape = (64, 64, 3), classes = 6)

# Compile the model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

Load the data

X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = load_dataset()

# Normalize image vectors

X_train = X_train_orig/255.

X_test = X_test_orig/255.

# Convert training and test labels to one hot matrices

Y_train = convert_to_one_hot(Y_train_orig, 6).T

Y_test = convert_to_one_hot(Y_test_orig, 6).T

print ("number of training examples = " + str(X_train.shape[0]))

print ("number of test examples = " + str(X_test.shape[0]))

print ("X_train shape: " + str(X_train.shape))

print ("Y_train shape: " + str(Y_train.shape))

print ("X_test shape: " + str(X_test.shape))

print ("Y_test shape: " + str(Y_test.shape))

number of training examples = 1080

number of test examples = 120

X_train shape: (1080, 64, 64, 3)

Y_train shape: (1080, 6)

X_test shape: (120, 64, 64, 3)

Y_test shape: (120, 6)
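The helper convert_to_one_hot comes from resnets_utils, whose source is not shown in this post. The sketch below is an assumption about what such a helper typically does, written under the hypothetical name `one_hot_sketch`: it turns integer labels of shape (1, m) into a (C, m) one-hot matrix, which is why the code above transposes the result to get shape (m, C).

```python
import numpy as np

def one_hot_sketch(Y, C):
    """Return a (C, m) one-hot matrix for integer labels Y of shape (1, m)."""
    # Row i of the identity matrix is the one-hot vector for class i.
    return np.eye(C)[Y.reshape(-1)].T

Y = np.array([[0, 2, 5, 1]])
Y_one_hot = one_hot_sketch(Y, 6).T   # transpose to (m, C), as in the post
print(Y_one_hot.shape)               # (4, 6)
print(Y_one_hot[1])                  # [0. 0. 1. 0. 0. 0.]
```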

# Train the model

model.fit(X_train, Y_train, epochs = 2, batch_size = 32)

Epoch 1/2

1080/1080 [==============================] - 360s - loss: 2.8792 - acc: 0.2574

Epoch 2/2

1080/1080 [==============================] - 357s - loss: 2.1027 - acc: 0.3426

# Evaluate the model

preds = model.evaluate(X_test, Y_test)

print ("Loss = " + str(preds[0]))

print ("Test Accuracy = " + str(preds[1]))

120/120 [==============================] - 11s

Loss = 2.249542538324992

Test Accuracy = 0.16666666666666666

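After completing this assignment, you can optionally continue training the ResNet. When we train for 20 epochs we get much better performance, but training on a CPU takes more than an hour.

Instead, we load the weights of an already trained ResNet50 model: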

model = load_model('ResNet50.h5', compile=False)  # compile=False is required here because of a version issue

# Recompile the model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

# Evaluate the model

preds = model.evaluate(X_test, Y_test)

print ("Loss = " + str(preds[0]))

print ("Test Accuracy = " + str(preds[1]))

120/120 [==============================] - 10s

Loss = 0.10854306469360987

Test Accuracy = 0.9666666626930237

5. Testing on your own image

import imageio

img_path = 'images/my_image.jpg'

img = image.load_img(img_path, target_size=(64, 64))

x = image.img_to_array(img)

x = np.expand_dims(x, axis=0) / 255

# # x = preprocess_input(x)

print('Input image shape:', x.shape)

# Display the image

my_image = imageio.imread(img_path)

imshow(my_image)

print("class prediction vector [p(0), p(1), p(2), p(3), p(4), p(5)] = ")

print(model.predict(x))

print(np.argmax(model.predict(x)))

Input image shape: (1, 64, 64, 3)

class prediction vector [p(0), p(1), p(2), p(3), p(4), p(5)] =

[[2.9170844e-08 4.4383746e-06 9.9998820e-01 3.6407993e-08 7.3888405e-06

4.3413018e-10]]

2

Display the model summary

model.summary()

____________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

====================================================================================================

input_1 (InputLayer) (None, 64, 64, 3) 0

____________________________________________________________________________________________________

zero_padding2d_1 (ZeroPadding2D) (None, 70, 70, 3) 0 input_1[0][0]

____________________________________________________________________________________________________

conv1 (Conv2D) (None, 32, 32, 64) 9472 zero_padding2d_1[0][0]

____________________________________________________________________________________________________

bn_conv1 (BatchNormalization) (None, 32, 32, 64) 256 conv1[0][0]

____________________________________________________________________________________________________

activation_4 (Activation) (None, 32, 32, 64) 0 bn_conv1[0][0]

____________________________________________________________________________________________________

max_pooling2d_1 (MaxPooling2D) (None, 15, 15, 64) 0 activation_4[0][0]

____________________________________________________________________________________________________

res2a_branch2a (Conv2D) (None, 15, 15, 64) 4160 max_pooling2d_1[0][0]

____________________________________________________________________________________________________

bn2a_branch2a (BatchNormalizatio (None, 15, 15, 64) 256 res2a_branch2a[0][0]

____________________________________________________________________________________________________

activation_5 (Activation) (None, 15, 15, 64) 0 bn2a_branch2a[0][0]

____________________________________________________________________________________________________

res2a_branch2b (Conv2D) (None, 15, 15, 64) 36928 activation_5[0][0]

____________________________________________________________________________________________________

bn2a_branch2b (BatchNormalizatio (None, 15, 15, 64) 256 res2a_branch2b[0][0]

____________________________________________________________________________________________________

activation_6 (Activation) (None, 15, 15, 64) 0 bn2a_branch2b[0][0]

____________________________________________________________________________________________________

res2a_branch2c (Conv2D) (None, 15, 15, 256) 16640 activation_6[0][0]

____________________________________________________________________________________________________

res2a_branch1 (Conv2D) (None, 15, 15, 256) 16640 max_pooling2d_1[0][0]

____________________________________________________________________________________________________

bn2a_branch2c (BatchNormalizatio (None, 15, 15, 256) 1024 res2a_branch2c[0][0]

____________________________________________________________________________________________________

bn2a_branch1 (BatchNormalization (None, 15, 15, 256) 1024 res2a_branch1[0][0]

____________________________________________________________________________________________________

add_2 (Add) (None, 15, 15, 256) 0 bn2a_branch2c[0][0]

bn2a_branch1[0][0]

____________________________________________________________________________________________________

activation_7 (Activation) (None, 15, 15, 256) 0 add_2[0][0]

____________________________________________________________________________________________________

res2b_branch2a (Conv2D) (None, 15, 15, 64) 16448 activation_7[0][0]

____________________________________________________________________________________________________

bn2b_branch2a (BatchNormalizatio (None, 15, 15, 64) 256 res2b_branch2a[0][0]

____________________________________________________________________________________________________

activation_8 (Activation) (None, 15, 15, 64) 0 bn2b_branch2a[0][0]

____________________________________________________________________________________________________

res2b_branch2b (Conv2D) (None, 15, 15, 64) 36928 activation_8[0][0]

____________________________________________________________________________________________________

bn2b_branch2b (BatchNormalizatio (None, 15, 15, 64) 256 res2b_branch2b[0][0]

____________________________________________________________________________________________________

activation_9 (Activation) (None, 15, 15, 64) 0 bn2b_branch2b[0][0]

____________________________________________________________________________________________________

res2b_branch2c (Conv2D) (None, 15, 15, 256) 16640 activation_9[0][0]

____________________________________________________________________________________________________

bn2b_branch2c (BatchNormalizatio (None, 15, 15, 256) 1024 res2b_branch2c[0][0]

____________________________________________________________________________________________________

add_3 (Add) (None, 15, 15, 256) 0 bn2b_branch2c[0][0]

activation_7[0][0]

____________________________________________________________________________________________________

activation_10 (Activation) (None, 15, 15, 256) 0 add_3[0][0]

____________________________________________________________________________________________________

res2c_branch2a (Conv2D) (None, 15, 15, 64) 16448 activation_10[0][0]

____________________________________________________________________________________________________

bn2c_branch2a (BatchNormalizatio (None, 15, 15, 64) 256 res2c_branch2a[0][0]

____________________________________________________________________________________________________

activation_11 (Activation) (None, 15, 15, 64) 0 bn2c_branch2a[0][0]

____________________________________________________________________________________________________

res2c_branch2b (Conv2D) (None, 15, 15, 64) 36928 activation_11[0][0]

____________________________________________________________________________________________________

bn2c_branch2b (BatchNormalizatio (None, 15, 15, 64) 256 res2c_branch2b[0][0]

____________________________________________________________________________________________________

activation_12 (Activation) (None, 15, 15, 64) 0 bn2c_branch2b[0][0]

____________________________________________________________________________________________________

res2c_branch2c (Conv2D) (None, 15, 15, 256) 16640 activation_12[0][0]

____________________________________________________________________________________________________

bn2c_branch2c (BatchNormalizatio (None, 15, 15, 256) 1024 res2c_branch2c[0][0]

____________________________________________________________________________________________________

add_4 (Add) (None, 15, 15, 256) 0 bn2c_branch2c[0][0]

activation_10[0][0]

____________________________________________________________________________________________________

activation_13 (Activation) (None, 15, 15, 256) 0 add_4[0][0]

____________________________________________________________________________________________________

res3a_branch2a (Conv2D) (None, 8, 8, 128) 32896 activation_13[0][0]

____________________________________________________________________________________________________

bn3a_branch2a (BatchNormalizatio (None, 8, 8, 128) 512 res3a_branch2a[0][0]

____________________________________________________________________________________________________

activation_14 (Activation) (None, 8, 8, 128) 0 bn3a_branch2a[0][0]

____________________________________________________________________________________________________

res3a_branch2b (Conv2D) (None, 8, 8, 128) 147584 activation_14[0][0]

____________________________________________________________________________________________________

bn3a_branch2b (BatchNormalizatio (None, 8, 8, 128) 512 res3a_branch2b[0][0]

____________________________________________________________________________________________________

activation_15 (Activation) (None, 8, 8, 128) 0 bn3a_branch2b[0][0]

____________________________________________________________________________________________________

res3a_branch2c (Conv2D) (None, 8, 8, 512) 66048 activation_15[0][0]

____________________________________________________________________________________________________

res3a_branch1 (Conv2D) (None, 8, 8, 512) 131584 activation_13[0][0]

____________________________________________________________________________________________________

bn3a_branch2c (BatchNormalizatio (None, 8, 8, 512) 2048 res3a_branch2c[0][0]

____________________________________________________________________________________________________

bn3a_branch1 (BatchNormalization (None, 8, 8, 512) 2048 res3a_branch1[0][0]

____________________________________________________________________________________________________

add_5 (Add) (None, 8, 8, 512) 0 bn3a_branch2c[0][0]

bn3a_branch1[0][0]

____________________________________________________________________________________________________

activation_16 (Activation) (None, 8, 8, 512) 0 add_5[0][0]

____________________________________________________________________________________________________

res3b_branch2a (Conv2D) (None, 8, 8, 128) 65664 activation_16[0][0]

____________________________________________________________________________________________________

bn3b_branch2a (BatchNormalizatio (None, 8, 8, 128) 512 res3b_branch2a[0][0]

____________________________________________________________________________________________________

activation_17 (Activation) (None, 8, 8, 128) 0 bn3b_branch2a[0][0]

____________________________________________________________________________________________________

res3b_branch2b (Conv2D) (None, 8, 8, 128) 147584 activation_17[0][0]

____________________________________________________________________________________________________

bn3b_branch2b (BatchNormalizatio (None, 8, 8, 128) 512 res3b_branch2b[0][0]

____________________________________________________________________________________________________

activation_18 (Activation) (None, 8, 8, 128) 0 bn3b_branch2b[0][0]

____________________________________________________________________________________________________

res3b_branch2c (Conv2D) (None, 8, 8, 512) 66048 activation_18[0][0]

____________________________________________________________________________________________________

bn3b_branch2c (BatchNormalizatio (None, 8, 8, 512) 2048 res3b_branch2c[0][0]

____________________________________________________________________________________________________

add_6 (Add) (None, 8, 8, 512) 0 bn3b_branch2c[0][0]

activation_16[0][0]

____________________________________________________________________________________________________

activation_19 (Activation) (None, 8, 8, 512) 0 add_6[0][0]

____________________________________________________________________________________________________

res3c_branch2a (Conv2D) (None, 8, 8, 128) 65664 activation_19[0][0]

____________________________________________________________________________________________________

bn3c_branch2a (BatchNormalizatio (None, 8, 8, 128) 512 res3c_branch2a[0][0]

____________________________________________________________________________________________________

activation_20 (Activation) (None, 8, 8, 128) 0 bn3c_branch2a[0][0]

____________________________________________________________________________________________________

res3c_branch2b (Conv2D) (None, 8, 8, 128) 147584 activation_20[0][0]

____________________________________________________________________________________________________

bn3c_branch2b (BatchNormalizatio (None, 8, 8, 128) 512 res3c_branch2b[0][0]

____________________________________________________________________________________________________

activation_21 (Activation) (None, 8, 8, 128) 0 bn3c_branch2b[0][0]

____________________________________________________________________________________________________

res3c_branch2c (Conv2D) (None, 8, 8, 512) 66048 activation_21[0][0]

____________________________________________________________________________________________________

bn3c_branch2c (BatchNormalizatio (None, 8, 8, 512) 2048 res3c_branch2c[0][0]

____________________________________________________________________________________________________

add_7 (Add) (None, 8, 8, 512) 0 bn3c_branch2c[0][0]

activation_19[0][0]

____________________________________________________________________________________________________

activation_22 (Activation) (None, 8, 8, 512) 0 add_7[0][0]

____________________________________________________________________________________________________

res3d_branch2a (Conv2D) (None, 8, 8, 128) 65664 activation_22[0][0]

____________________________________________________________________________________________________

bn3d_branch2a (BatchNormalizatio (None, 8, 8, 128) 512 res3d_branch2a[0][0]

____________________________________________________________________________________________________

activation_23 (Activation) (None, 8, 8, 128) 0 bn3d_branch2a[0][0]

____________________________________________________________________________________________________

res3d_branch2b (Conv2D) (None, 8, 8, 128) 147584 activation_23[0][0]

____________________________________________________________________________________________________

bn3d_branch2b (BatchNormalizatio (None, 8, 8, 128) 512 res3d_branch2b[0][0]

____________________________________________________________________________________________________

activation_24 (Activation) (None, 8, 8, 128) 0 bn3d_branch2b[0][0]

____________________________________________________________________________________________________

res3d_branch2c (Conv2D) (None, 8, 8, 512) 66048 activation_24[0][0]

____________________________________________________________________________________________________

bn3d_branch2c (BatchNormalizatio (None, 8, 8, 512) 2048 res3d_branch2c[0][0]

____________________________________________________________________________________________________

add_8 (Add) (None, 8, 8, 512) 0 bn3d_branch2c[0][0], activation_22[0][0]

____________________________________________________________________________________________________

activation_25 (Activation) (None, 8, 8, 512) 0 add_8[0][0]

____________________________________________________________________________________________________

res4a_branch2a (Conv2D) (None, 4, 4, 256) 131328 activation_25[0][0]

____________________________________________________________________________________________________

bn4a_branch2a (BatchNormalizatio (None, 4, 4, 256) 1024 res4a_branch2a[0][0]

____________________________________________________________________________________________________

activation_26 (Activation) (None, 4, 4, 256) 0 bn4a_branch2a[0][0]

____________________________________________________________________________________________________

res4a_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_26[0][0]

____________________________________________________________________________________________________

bn4a_branch2b (BatchNormalizatio (None, 4, 4, 256) 1024 res4a_branch2b[0][0]

____________________________________________________________________________________________________

activation_27 (Activation) (None, 4, 4, 256) 0 bn4a_branch2b[0][0]

____________________________________________________________________________________________________

res4a_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_27[0][0]

____________________________________________________________________________________________________

res4a_branch1 (Conv2D) (None, 4, 4, 1024) 525312 activation_25[0][0]

____________________________________________________________________________________________________

bn4a_branch2c (BatchNormalizatio (None, 4, 4, 1024) 4096 res4a_branch2c[0][0]

____________________________________________________________________________________________________

bn4a_branch1 (BatchNormalization (None, 4, 4, 1024) 4096 res4a_branch1[0][0]

____________________________________________________________________________________________________

add_9 (Add) (None, 4, 4, 1024) 0 bn4a_branch2c[0][0], bn4a_branch1[0][0]

____________________________________________________________________________________________________

activation_28 (Activation) (None, 4, 4, 1024) 0 add_9[0][0]

____________________________________________________________________________________________________

res4b_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_28[0][0]

____________________________________________________________________________________________________

bn4b_branch2a (BatchNormalizatio (None, 4, 4, 256) 1024 res4b_branch2a[0][0]

____________________________________________________________________________________________________

activation_29 (Activation) (None, 4, 4, 256) 0 bn4b_branch2a[0][0]

____________________________________________________________________________________________________

res4b_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_29[0][0]

____________________________________________________________________________________________________

bn4b_branch2b (BatchNormalizatio (None, 4, 4, 256) 1024 res4b_branch2b[0][0]

____________________________________________________________________________________________________

activation_30 (Activation) (None, 4, 4, 256) 0 bn4b_branch2b[0][0]

____________________________________________________________________________________________________

res4b_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_30[0][0]

____________________________________________________________________________________________________

bn4b_branch2c (BatchNormalizatio (None, 4, 4, 1024) 4096 res4b_branch2c[0][0]

____________________________________________________________________________________________________

add_10 (Add) (None, 4, 4, 1024) 0 bn4b_branch2c[0][0], activation_28[0][0]

____________________________________________________________________________________________________

activation_31 (Activation) (None, 4, 4, 1024) 0 add_10[0][0]

____________________________________________________________________________________________________

res4c_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_31[0][0]

____________________________________________________________________________________________________

bn4c_branch2a (BatchNormalizatio (None, 4, 4, 256) 1024 res4c_branch2a[0][0]

____________________________________________________________________________________________________

activation_32 (Activation) (None, 4, 4, 256) 0 bn4c_branch2a[0][0]

____________________________________________________________________________________________________

res4c_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_32[0][0]

____________________________________________________________________________________________________

bn4c_branch2b (BatchNormalizatio (None, 4, 4, 256) 1024 res4c_branch2b[0][0]

____________________________________________________________________________________________________

activation_33 (Activation) (None, 4, 4, 256) 0 bn4c_branch2b[0][0]

____________________________________________________________________________________________________

res4c_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_33[0][0]

____________________________________________________________________________________________________

bn4c_branch2c (BatchNormalizatio (None, 4, 4, 1024) 4096 res4c_branch2c[0][0]

____________________________________________________________________________________________________

add_11 (Add) (None, 4, 4, 1024) 0 bn4c_branch2c[0][0], activation_31[0][0]

____________________________________________________________________________________________________

activation_34 (Activation) (None, 4, 4, 1024) 0 add_11[0][0]

____________________________________________________________________________________________________

res4d_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_34[0][0]

____________________________________________________________________________________________________

bn4d_branch2a (BatchNormalizatio (None, 4, 4, 256) 1024 res4d_branch2a[0][0]

____________________________________________________________________________________________________

activation_35 (Activation) (None, 4, 4, 256) 0 bn4d_branch2a[0][0]

____________________________________________________________________________________________________

res4d_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_35[0][0]

____________________________________________________________________________________________________

bn4d_branch2b (BatchNormalizatio (None, 4, 4, 256) 1024 res4d_branch2b[0][0]

____________________________________________________________________________________________________

activation_36 (Activation) (None, 4, 4, 256) 0 bn4d_branch2b[0][0]

____________________________________________________________________________________________________

res4d_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_36[0][0]

____________________________________________________________________________________________________

bn4d_branch2c (BatchNormalizatio (None, 4, 4, 1024) 4096 res4d_branch2c[0][0]

____________________________________________________________________________________________________

add_12 (Add) (None, 4, 4, 1024) 0 bn4d_branch2c[0][0], activation_34[0][0]

____________________________________________________________________________________________________

activation_37 (Activation) (None, 4, 4, 1024) 0 add_12[0][0]

____________________________________________________________________________________________________

res4e_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_37[0][0]

____________________________________________________________________________________________________

bn4e_branch2a (BatchNormalizatio (None, 4, 4, 256) 1024 res4e_branch2a[0][0]

____________________________________________________________________________________________________

activation_38 (Activation) (None, 4, 4, 256) 0 bn4e_branch2a[0][0]

____________________________________________________________________________________________________

res4e_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_38[0][0]

____________________________________________________________________________________________________

bn4e_branch2b (BatchNormalizatio (None, 4, 4, 256) 1024 res4e_branch2b[0][0]

____________________________________________________________________________________________________

activation_39 (Activation) (None, 4, 4, 256) 0 bn4e_branch2b[0][0]

____________________________________________________________________________________________________

res4e_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_39[0][0]

____________________________________________________________________________________________________

bn4e_branch2c (BatchNormalizatio (None, 4, 4, 1024) 4096 res4e_branch2c[0][0]

____________________________________________________________________________________________________

add_13 (Add) (None, 4, 4, 1024) 0 bn4e_branch2c[0][0], activation_37[0][0]

____________________________________________________________________________________________________

activation_40 (Activation) (None, 4, 4, 1024) 0 add_13[0][0]

____________________________________________________________________________________________________

res4f_branch2a (Conv2D) (None, 4, 4, 256) 262400 activation_40[0][0]

____________________________________________________________________________________________________

bn4f_branch2a (BatchNormalizatio (None, 4, 4, 256) 1024 res4f_branch2a[0][0]

____________________________________________________________________________________________________

activation_41 (Activation) (None, 4, 4, 256) 0 bn4f_branch2a[0][0]

____________________________________________________________________________________________________

res4f_branch2b (Conv2D) (None, 4, 4, 256) 590080 activation_41[0][0]

____________________________________________________________________________________________________

bn4f_branch2b (BatchNormalizatio (None, 4, 4, 256) 1024 res4f_branch2b[0][0]

____________________________________________________________________________________________________

activation_42 (Activation) (None, 4, 4, 256) 0 bn4f_branch2b[0][0]

____________________________________________________________________________________________________

res4f_branch2c (Conv2D) (None, 4, 4, 1024) 263168 activation_42[0][0]

____________________________________________________________________________________________________

bn4f_branch2c (BatchNormalizatio (None, 4, 4, 1024) 4096 res4f_branch2c[0][0]

____________________________________________________________________________________________________

add_14 (Add) (None, 4, 4, 1024) 0 bn4f_branch2c[0][0], activation_40[0][0]

____________________________________________________________________________________________________

activation_43 (Activation) (None, 4, 4, 1024) 0 add_14[0][0]

____________________________________________________________________________________________________

res5a_branch2a (Conv2D) (None, 2, 2, 512) 524800 activation_43[0][0]

____________________________________________________________________________________________________

bn5a_branch2a (BatchNormalizatio (None, 2, 2, 512) 2048 res5a_branch2a[0][0]

____________________________________________________________________________________________________

activation_44 (Activation) (None, 2, 2, 512) 0 bn5a_branch2a[0][0]

____________________________________________________________________________________________________

res5a_branch2b (Conv2D) (None, 2, 2, 512) 2359808 activation_44[0][0]

____________________________________________________________________________________________________

bn5a_branch2b (BatchNormalizatio (None, 2, 2, 512) 2048 res5a_branch2b[0][0]

____________________________________________________________________________________________________

activation_45 (Activation) (None, 2, 2, 512) 0 bn5a_branch2b[0][0]

____________________________________________________________________________________________________

res5a_branch2c (Conv2D) (None, 2, 2, 2048) 1050624 activation_45[0][0]

____________________________________________________________________________________________________

res5a_branch1 (Conv2D) (None, 2, 2, 2048) 2099200 activation_43[0][0]

____________________________________________________________________________________________________

bn5a_branch2c (BatchNormalizatio (None, 2, 2, 2048) 8192 res5a_branch2c[0][0]

____________________________________________________________________________________________________

bn5a_branch1 (BatchNormalization (None, 2, 2, 2048) 8192 res5a_branch1[0][0]

____________________________________________________________________________________________________

add_15 (Add) (None, 2, 2, 2048) 0 bn5a_branch2c[0][0], bn5a_branch1[0][0]

____________________________________________________________________________________________________

activation_46 (Activation) (None, 2, 2, 2048) 0 add_15[0][0]

____________________________________________________________________________________________________

res5b_branch2a (Conv2D) (None, 2, 2, 512) 1049088 activation_46[0][0]

____________________________________________________________________________________________________

bn5b_branch2a (BatchNormalizatio (None, 2, 2, 512) 2048 res5b_branch2a[0][0]

____________________________________________________________________________________________________

activation_47 (Activation) (None, 2, 2, 512) 0 bn5b_branch2a[0][0]

____________________________________________________________________________________________________

res5b_branch2b (Conv2D) (None, 2, 2, 512) 2359808 activation_47[0][0]

____________________________________________________________________________________________________

bn5b_branch2b (BatchNormalizatio (None, 2, 2, 512) 2048 res5b_branch2b[0][0]

____________________________________________________________________________________________________

activation_48 (Activation) (None, 2, 2, 512) 0 bn5b_branch2b[0][0]

____________________________________________________________________________________________________

res5b_branch2c (Conv2D) (None, 2, 2, 2048) 1050624 activation_48[0][0]

____________________________________________________________________________________________________

bn5b_branch2c (BatchNormalizatio (None, 2, 2, 2048) 8192 res5b_branch2c[0][0]

____________________________________________________________________________________________________

add_16 (Add) (None, 2, 2, 2048) 0 bn5b_branch2c[0][0], activation_46[0][0]

____________________________________________________________________________________________________

activation_49 (Activation) (None, 2, 2, 2048) 0 add_16[0][0]

____________________________________________________________________________________________________

res5c_branch2a (Conv2D) (None, 2, 2, 512) 1049088 activation_49[0][0]

____________________________________________________________________________________________________

bn5c_branch2a (BatchNormalizatio (None, 2, 2, 512) 2048 res5c_branch2a[0][0]

____________________________________________________________________________________________________

activation_50 (Activation) (None, 2, 2, 512) 0 bn5c_branch2a[0][0]

____________________________________________________________________________________________________

res5c_branch2b (Conv2D) (None, 2, 2, 512) 2359808 activation_50[0][0]

____________________________________________________________________________________________________

bn5c_branch2b (BatchNormalizatio (None, 2, 2, 512) 2048 res5c_branch2b[0][0]

____________________________________________________________________________________________________

activation_51 (Activation) (None, 2, 2, 512) 0 bn5c_branch2b[0][0]

____________________________________________________________________________________________________

res5c_branch2c (Conv2D) (None, 2, 2, 2048) 1050624 activation_51[0][0]

____________________________________________________________________________________________________

bn5c_branch2c (BatchNormalizatio (None, 2, 2, 2048) 8192 res5c_branch2c[0][0]

____________________________________________________________________________________________________

add_17 (Add) (None, 2, 2, 2048) 0 bn5c_branch2c[0][0], activation_49[0][0]

____________________________________________________________________________________________________

activation_52 (Activation) (None, 2, 2, 2048) 0 add_17[0][0]

____________________________________________________________________________________________________

avg_pool (AveragePooling2D) (None, 1, 1, 2048) 0 activation_52[0][0]

____________________________________________________________________________________________________

flatten_1 (Flatten) (None, 2048) 0 avg_pool[0][0]

____________________________________________________________________________________________________

fc6 (Dense) (None, 6) 12294 flatten_1[0][0]

====================================================================================================

Total params: 23,600,006

Trainable params: 23,546,886

Non-trainable params: 53,120
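
The parameter counts in the summary can be checked by hand. The sketch below is not part of the original notebook; the helper names (`conv2d_params`, `batchnorm_params`, `dense_params`, `stage_bn_channels`) are hypothetical. It assumes the middle convolution of every block uses a 3x3 kernel, and, for the non-trainable total, that the stem has 64 filters and stage 2 has three blocks with filters [64, 64, 256], which is the usual ResNet50 layout but is not shown in this part of the summary.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Conv2D weights (k * k * in * out) plus one bias per filter."""
    return kernel_size * kernel_size * in_channels * filters + filters


def batchnorm_params(channels):
    """gamma, beta, moving mean and moving variance: 4 values per channel."""
    return 4 * channels


def dense_params(in_units, out_units):
    """Dense weights plus one bias per output unit."""
    return in_units * out_units + out_units


# layer name -> (computed count, count printed in the summary)
checks = {
    "res3d_branch2a": (conv2d_params(1, 512, 128), 65664),
    "res3d_branch2b": (conv2d_params(3, 128, 128), 147584),  # assumes a 3x3 kernel
    "res3d_branch2c": (conv2d_params(1, 128, 512), 66048),
    "res4a_branch1": (conv2d_params(1, 512, 1024), 525312),
    "res5a_branch2b": (conv2d_params(3, 512, 512), 2359808),  # assumes a 3x3 kernel
    "bn3d_branch2c": (batchnorm_params(512), 2048),
    "fc6": (dense_params(2048, 6), 12294),
}
for name, (computed, reported) in checks.items():
    assert computed == reported, name


def stage_bn_channels(filters, n_blocks):
    """Total BatchNormalization channels in one stage.

    The first (convolutional) block has an extra BN on its shortcut branch;
    the remaining blocks are identity blocks.
    """
    f1, f2, f3 = filters
    conv_block = f1 + f2 + f3 + f3
    identity_block = f1 + f2 + f3
    return conv_block + (n_blocks - 1) * identity_block


# Assumption: 64-filter stem and a 3-block stage 2 with filters [64, 64, 256].
bn_channels = (
    64
    + stage_bn_channels((64, 64, 256), 3)
    + stage_bn_channels((128, 128, 512), 4)
    + stage_bn_channels((256, 256, 1024), 6)
    + stage_bn_channels((512, 512, 2048), 3)
)

# Only the moving mean and moving variance (2 per channel) are non-trainable.
non_trainable = 2 * bn_channels
trainable = 23600006 - non_trainable
print(non_trainable, trainable)
```

Under these assumptions the non-trainable count comes entirely from the BatchNormalization moving statistics, and subtracting it from the total reproduces the trainable count shown above.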

plot_model(model, to_file='model.png')

SVG(model_to_dot(model).create(prog='dot', format='svg'))
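
The output shapes in the summary (8x8 in stage 3, 4x4 in stage 4, 2x2 in stage 5, then 1x1 after pooling) follow from the standard output-size formula for convolution and pooling. The sketch below is a hypothetical helper, not code from the notebook; it assumes the first 1x1 convolution of stages 4 and 5 uses stride 2 with no padding, the 3x3 convolutions use "same" padding, and `avg_pool` is a 2x2 window with stride 2.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling window."""
    return (n + 2 * padding - kernel) // stride + 1


size = 8  # spatial size at the end of stage 3 (activation_25)

# Stage 4: 1x1 conv with stride 2 halves the feature map (assumed stride).
size = conv_output_size(size, kernel=1, stride=2)
stage4 = size

# A 3x3 conv with "same" padding (padding=1) leaves the size unchanged.
assert conv_output_size(stage4, kernel=3, stride=1, padding=1) == stage4

# Stage 5: another stride-2 1x1 conv.
size = conv_output_size(size, kernel=1, stride=2)
stage5 = size

# avg_pool: assumed 2x2 window, stride 2, no padding.
pooled = conv_output_size(size, kernel=2, stride=2)

# Flatten: height * width * channels.
flattened = pooled * pooled * 2048

print(stage4, stage5, pooled, flattened)
```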
