Scrapy Architecture overview--官方文档

原文地址:https://doc.scrapy.org/en/latest/topics/architecture.html

This document describes the architecture of Scrapy and how its components interact.

Overview

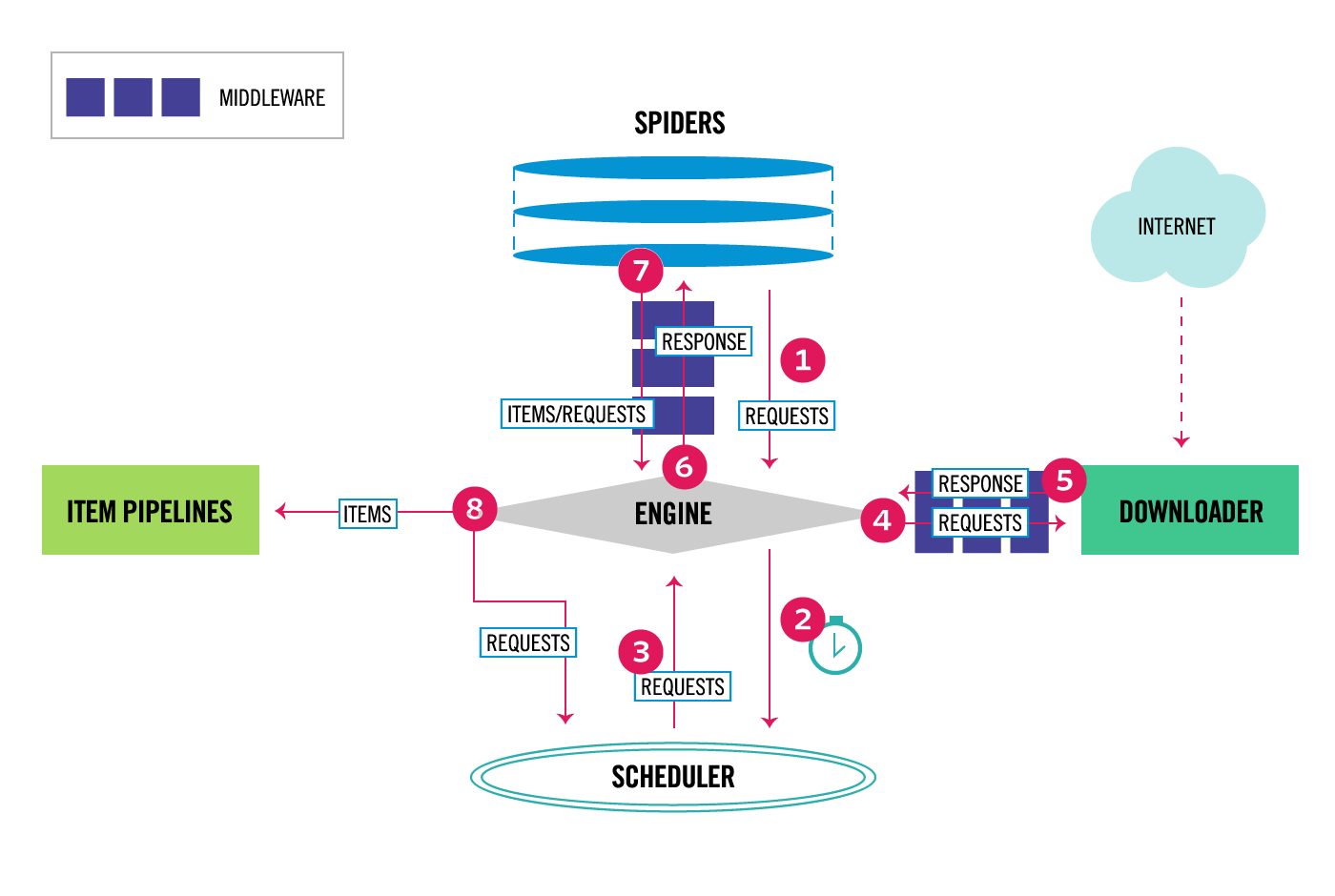

The following diagram shows an overview of the Scrapy architecture with its components and an outline of the data flow that takes place inside the system (shown by the red arrows). A brief description of the components is included below with links for more detailed information about them. The data flow is also described below.

Data flow

The data flow in Scrapy is controlled by the execution engine, and goes like this:

- The Engine gets the initial Requests to crawl from the Spider.

- The Engine schedules the Requests in the Scheduler and asks for the next Requests to crawl.

- The Scheduler returns the next Requests to the Engine.

- The Engine sends the Requests to the Downloader, passing through the Downloader Middlewares (see

process_request()). - Once the page finishes downloading the Downloader generates a Response (with that page) and sends it to the Engine, passing through the Downloader Middlewares (see

process_response()). - The Engine receives the Response from the Downloader and sends it to the Spider for processing, passing through the Spider Middleware (see

process_spider_input()). - The Spider processes the Response and returns scraped items and new Requests (to follow) to the Engine, passing through the Spider Middleware (see

process_spider_output()). - The Engine sends processed items to Item Pipelines, then send processed Requests to the Scheduler and asks for possible next Requests to crawl.

- The process repeats (from step 1) until there are no more requests from the Scheduler.

Components

Scrapy Engine

The engine is responsible for controlling the data flow between all components of the system, and triggering events when certain actions occur. See the Data Flow section above for more details.

Scheduler

The Scheduler receives requests from the engine and enqueues them for feeding them later (also to the engine) when the engine requests them.

Downloader

The Downloader is responsible for fetching web pages and feeding them to the engine which, in turn, feeds them to the spiders.

Spiders

Spiders are custom classes written by Scrapy users to parse responses and extract items (aka scraped items) from them or additional requests to follow. For more information see Spiders.

Item Pipeline

The Item Pipeline is responsible for processing the items once they have been extracted (or scraped) by the spiders. Typical tasks include cleansing, validation and persistence (like storing the item in a database). For more information see Item Pipeline.

Downloader middlewares

Downloader middlewares are specific hooks that sit between the Engine and the Downloader and process requests when they pass from the Engine to the Downloader, and responses that pass from Downloader to the Engine.

Use a Downloader middleware if you need to do one of the following:

- process a request just before it is sent to the Downloader (i.e. right before Scrapy sends the request to the website);

- change received response before passing it to a spider;

- send a new Request instead of passing received response to a spider;

- pass response to a spider without fetching a web page;

- silently drop some requests.

For more information see Downloader Middleware.

Spider middlewares

Spider middlewares are specific hooks that sit between the Engine and the Spiders and are able to process spider input (responses) and output (items and requests).

Use a Spider middleware if you need to

- post-process output of spider callbacks - change/add/remove requests or items;

- post-process start_requests;

- handle spider exceptions;

- call errback instead of callback for some of the requests based on response content.

For more information see Spider Middleware.

Event-driven networking

Scrapy is written with Twisted, a popular event-driven networking framework for Python. Thus, it’s implemented using a non-blocking (aka asynchronous) code for concurrency.

Scrapy Architecture overview--官方文档的更多相关文章

- hbase官方文档(转)

FROM:http://www.just4e.com/hbase.html Apache HBase™ 参考指南 HBase 官方文档中文版 Copyright © 2012 Apache Soft ...

- HBase官方文档

HBase官方文档 目录 序 1. 入门 1.1. 介绍 1.2. 快速开始 2. Apache HBase (TM)配置 2.1. 基础条件 2.2. HBase 运行模式: 独立和分布式 2.3. ...

- Spark官方文档 - 中文翻译

Spark官方文档 - 中文翻译 Spark版本:1.6.0 转载请注明出处:http://www.cnblogs.com/BYRans/ 1 概述(Overview) 2 引入Spark(Linki ...

- Spring 4 官方文档学习 Spring与Java EE技术的集成

本部分覆盖了以下内容: Chapter 28, Remoting and web services using Spring -- 使用Spring进行远程和web服务 Chapter 29, Ent ...

- Spark SQL 官方文档-中文翻译

Spark SQL 官方文档-中文翻译 Spark版本:Spark 1.5.2 转载请注明出处:http://www.cnblogs.com/BYRans/ 1 概述(Overview) 2 Data ...

- 人工智能系统Google开源的TensorFlow官方文档中文版

人工智能系统Google开源的TensorFlow官方文档中文版 2015年11月9日,Google发布人工智能系统TensorFlow并宣布开源,机器学习作为人工智能的一种类型,可以让软件根据大量的 ...

- Google Android官方文档进程与线程(Processes and Threads)翻译

android的多线程在开发中已经有使用过了,想再系统地学习一下,找到了android的官方文档,介绍进程与线程的介绍,试着翻译一下. 原文地址:http://developer.android.co ...

- OGR 官方文档

OGR 官方文档 http://www.gdal.org/ogr/index.html The OGR Simple Features Library is a C++ open source lib ...

- cassandra 3.x官方文档(5)---探测器

写在前面 cassandra3.x官方文档的非官方翻译.翻译内容水平全依赖本人英文水平和对cassandra的理解.所以强烈建议阅读英文版cassandra 3.x 官方文档.此文档一半是翻译,一半是 ...

- Cuda 9.2 CuDnn7.0 官方文档解读

目录 Cuda 9.2 CuDnn7.0 官方文档解读 准备工作(下载) 显卡驱动重装 CUDA安装 系统要求 处理之前安装的cuda文件 下载的deb安装过程 下载的runfile的安装过程 安装完 ...

随机推荐

- MFC常用控件之滚动条

近期学习了鸡啄米大神的博客,对其中的一些知识点做了一些自己的总结.不过,博客内容大部分来自鸡啄米.因此,这个博客算是转载博客,只是加了一些我自己的理解而已.若想学习鸡啄米大神的博客总结,请点击连接:h ...

- 电商物流仓储WMS业务流程

电商物流仓储WMS业务流程 SKU是什么意思? 一文详解电商仓储管理中SKU的含义 从货品角度看,SKU是指单独一种商品,其货品属性已经被确定.只要货品属性有所不同,那么就是不同的SKU. PO信息 ...

- vue-留言板-bootstrap

最近看完入门API,看完视频自己写了个留言板,因为主要是学习vue,所以就复习了一下bootstrap,布局更简单,先看看样式吧. 简单清晰的布局,先说一下功能, 1.输入用户名密码点击提交放入表格 ...

- boost的单例模式

template <typename T> struct singleton_default { private: struct object_creator { ...

- html img加载不同大小图像速度

最近要想法提高网页的性能,在查看图片加载时,产生了试验的想法.一直以来都没有太去深究,还是挖掘一下的好. 很简单的试验,<img>加载两个图像,一个2.3MB,5000*5000,一个22 ...

- layui table 时间戳

, { field: , title: '时间', templet: '<div>{{ laytpl.toDateString(d) }}</div>' }, 或者 , { f ...

- 添加图标:before 和 :after css中用法

#sTitle:after{ position: absolute; top: 2px; font-family: "FontAwesome"; content: "\f ...

- sqlserver重组索引,优化碎片

dbcc dbreindex('digitlab.dbo.RequestForm','',90) dbcc dbreindex('digitlab.dbo.Requestitem','',90) db ...

- javaee字节流文件复制

package Zy; import java.io.FileInputStream; import java.io.FileNotFoundException; import java.io.Fil ...

- python 中的一些小命令