ubuntu18.04 flink-1.9.0 Standalone集群搭建

集群规划

Master JobManager Standby JobManager Task Manager Zookeeper flink01 √ √ flink02 √ √ flink03 √ √ √ flink04 √ √ √ 前置准备

克隆4台虚拟机

网络配置

vim /etc/netplan/01-network-manager-all.yaml 修改配置文件

集群网络配置如下:

# flink01

network:

version: 2

renderer: NetworkManager ethernets:

ens33:

dhcp4: no

dhcp6: no

addresses: [192.168.180.160/24]

gateway4: 192.168.180.2

nameservers:

addresses: [114.114.114.114, 8.8.8.8] # flink02

network:

version: 2

renderer: NetworkManager ethernets:

ens33:

dhcp4: no

dhcp6: no

addresses: [192.168.180.161/24]

gateway4: 192.168.180.2

nameservers:

addresses: [114.114.114.114, 8.8.8.8] # flink03

network:

version: 2

renderer: NetworkManager ethernets:

ens33:

dhcp4: no

dhcp6: no

addresses: [192.168.180.162/24]

gateway4: 192.168.180.2

nameservers:

addresses: [114.114.114.114, 8.8.8.8] #flink04

network:

version: 2

renderer: NetworkManager ethernets:

ens33:

dhcp4: no

dhcp6: no

addresses: [192.168.180.163/24]

gateway4: 192.168.180.2

nameservers:

addresses: [114.114.114.114, 8.8.8.8]能 ping 通 baidu 连上 xshell 即可, 连不上的可能是防火墙问题, ubuntu 用的防火墙是ufw, selinux 默认是disabled, 正常来说应该不会连不上。

修改 hostname 及 hosts文件

vim /etc/hostname 修改 hostname 为 flink01

vim /etc/hosts 修改 host文件

127.0.0.1 localhost

192.168.180.160 flink01

192.168.180.161 flink02

192.168.180.162 flink03

192.168.180.163 flink04 # The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

JDK配置

- 如果没有配置jdk请参考https://www.cnblogs.com/ronnieyuan/p/11461377.html

- 如果之前有下过别的版本的jdk请将jdk的tar包解压到/usr/lib/jvm,然后再修改配置文件并选择jdk

- 如果没有配置jdk请参考https://www.cnblogs.com/ronnieyuan/p/11461377.html

免密登录

root@flink01:~# ssh-keygen -t rsa -P ""

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:2P9NCcuEljhcWBndG8FBoAxOeGbZDIHIdw06r3bbsug root@flink01

The key's randomart image is:

+---[RSA 2048]----+

| . . o*Oo+.=+o |

| o ++*==.. + |

| .o=o + o |

| * o o . |

| . S + o |

| . + o o . |

| o . . o o |

| . o.o . o |

| .E oo. . . |

+----[SHA256]-----+在~目录下vim .ssh/authorized_keys:

将5台虚拟机公钥都存入该文件中, 每台的authorized_keys都一致

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDZGR9+3bTIq6kZ5c+1O2mvyPBM790/0QUuVcjPSREK1pMpyyKRu6CUaBlTOM8JJzkCnEe7DVKY8lV2q7Zv7VVXTLI2dKuUmo0oMSGo5ABvfjGUJfFWdNkqgrPgT+Opl/1kIqr6wTGGCJsRx+Nfkic31WhnPij2IM/Zpqu88kiXmUbaNwBDM5jaRqB/nk7DW4aMwF5oeX6LvEuI1SVmY3DH0w6Cf1EDtOyYG1f9Vof8ao88JOwRZazTNbBdsVcPKDbtvovs/lP+CrtwmAcGFGZSjA22I0dc9ek0puQ5pNUgindpb9egJJFoGhtduut6OfmmvbB8u9PjdBkHcp3Vof4j root@flink01 ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDMgNUIzT1YRTGX5E4MiWyQ9UxfQ1pRwCSMy3wqJOuyqv/kW5VsL0BS1VAwtSS9FqCBon3lT0zfgPEkscDdKugMtpgSeREjbQQJSDYPQGyCsHcXQgne5dfaWbvLvaFbNO1G5wqW6E3zC5zy85mSdg9qg8NmqYwyz0O8WSRottuAMHfhoNkHemdrIHhVBBZeKxFbsiXxiWi1EQJUYhYWU1LAqcpiIgFhjAGJkH91qULmm8FxkbB3ytcMlkFBNbcr3UkN5EK0VGV/Ly1qgs9UmXtS7xb5Lw5RlqjUMvwc28oHZYSfPRS1TL/oaFBZSIIGPjudke5wsBwNnzHGE44sTWUt root@flink02 ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDRYkED/hA9nqmCBClIByAIcrT94edjno1HXn/KIgrCXy0aoIfsgY5FDr/3iZgaEdvnDz1woyKyj5gqkYxdQw0vLiJGOefgSC4yx7j6vKaK+2aSxJD+DLFB6toFAlyfWziTnyoPj3hPhlpSdG1P4YSEpMub5p1gVsEX9+vRvyazIabsbY9YsCsv/WDs3aAYyalfuvN+AXTrXI8di+AGgsjbwmo0/VQWQfItHY+WdTGcfBQQ8Ad9UX2wVRQPXq7gHh3LlBWKZnB+nZxavnI/G9Lo9w9MODSBgxfCfKNu6fpnpcvh8u+AqmT6rNKZg5NuZWW16siGvLNReSnWn7KwHzzD root@flink03 ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDkBQxFdKvg2MzGl1eFeDO1iqL2+fdJ9b4ktU9fjO/APEkumbftfrDTgJAnQhcXMsU7RjCJ+Zwt/dpeGhql3o7hXuhjQiUXf+8GXYre8cw9+xaYmaVhCZYbpcFiSrd/8TK3qv+gf21YIT7iQEFEl384qxPbyoU4Lm+M/1aY3m4gHvELv3jfg8oVBypxgaAJpJj9ZnnA0zN470cod1E67yVbfIkoSTy8BXd8UhVedYODD1ddaFX8MF53acUdJgLCGzh4axxivlGqdXWB7lhjbBvjllt2LYb7hW+O1qyCFNiRx/+zR9dSu5ZSW3QNg2EP/ljcCjDWa7AHgTvw6BYkdpj1 root@flink04依次测试免密登录是否成功

成功案例:

root@flink01:~# ssh root@192.168.180.161

Welcome to Ubuntu 18.04.2 LTS (GNU/Linux 4.18.0-17-generic x86_64) * Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage * Canonical Livepatch is available for installation.

- Reduce system reboots and improve kernel security. Activate at:

https://ubuntu.com/livepatch 273 packages can be updated.

272 updates are security updates. Your Hardware Enablement Stack (HWE) is supported until April 2023.

Last login: Sun Oct 27 11:11:26 2019 from 192.168.180.160

root@flink02:~#

集群搭建

Zookeeper 搭建

上传 tar 包 并解压到指定目录 并修改解压后的目录名称

tar -zxvf apache-zookeeper-3.5.5-bin.tar.gz -C /opt/ronnie/ cd /opt/ronnie mv apache-zookeeper-3.5.5-bin/ zookeeper-3.5.5

创建并修改zookeeper配置文件

cd zookeeper-3.5.5/conf/ 进入Zookeeper配置文件目录

cp zoo_sample.cfg zoo.cfg 拷贝一份zoo_sample.cfg 为 zoo.cfg

vim zoo.cfg 修改配置文件

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/opt/ronnie/zookeeper-3.5.5/data/zk

dataLogDir=/opt/ronnie/zookeeper-3.5.5/data/log

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=flink01:2888:3888

server.2=flink02:2888:3888

server.3=flink03:2888:3888

server.4=flink04:2888:3888

创建data目录:

mkdir -p /opt/ronnie/zookeeper-3.5.5/data

创建data目录下的日志目录:

mkdir -p /opt/ronnie/zookeeper-3.5.5/data/log

创建data目录下的zk目录

mkdir -p /opt/ronnie/zookeeper-3.5.5/data/zk

cd zk/, 创建myid, 四台虚拟机flink01, flink02, flink03, flink04 分别对应 myid 1,2,3,4

添加环境变量

vim ~/.bashrc , 完成后 source ~/.bashrc

# Zookeeper

export ZOOKEEPER_HOME=/opt/ronnie/zookeeper-3.5.5

export PATH=$ZOOKEEPER_HOME/bin:$PATH

将Zookeeper目录传送给其他几台虚拟机(记得改myid)

cd /opt/ronnie

scp -r zookeeper-3.5.5/ root@192.168.180.161:/opt/ronnie/

scp -r zookeeper-3.5.5/ root@192.168.180.162:/opt/ronnie/

scp -r zookeeper-3.5.5/ root@192.168.180.163:/opt/ronnie/

启动Zookeeper: zkServer.sh start

检查Zookeeper 状态: zkServer.sh status

有显示该节点状态就表示启动成功了

root@flink01:~# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/ronnie/zookeeper-3.5.5/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: follower

报错请查看日志定位错误

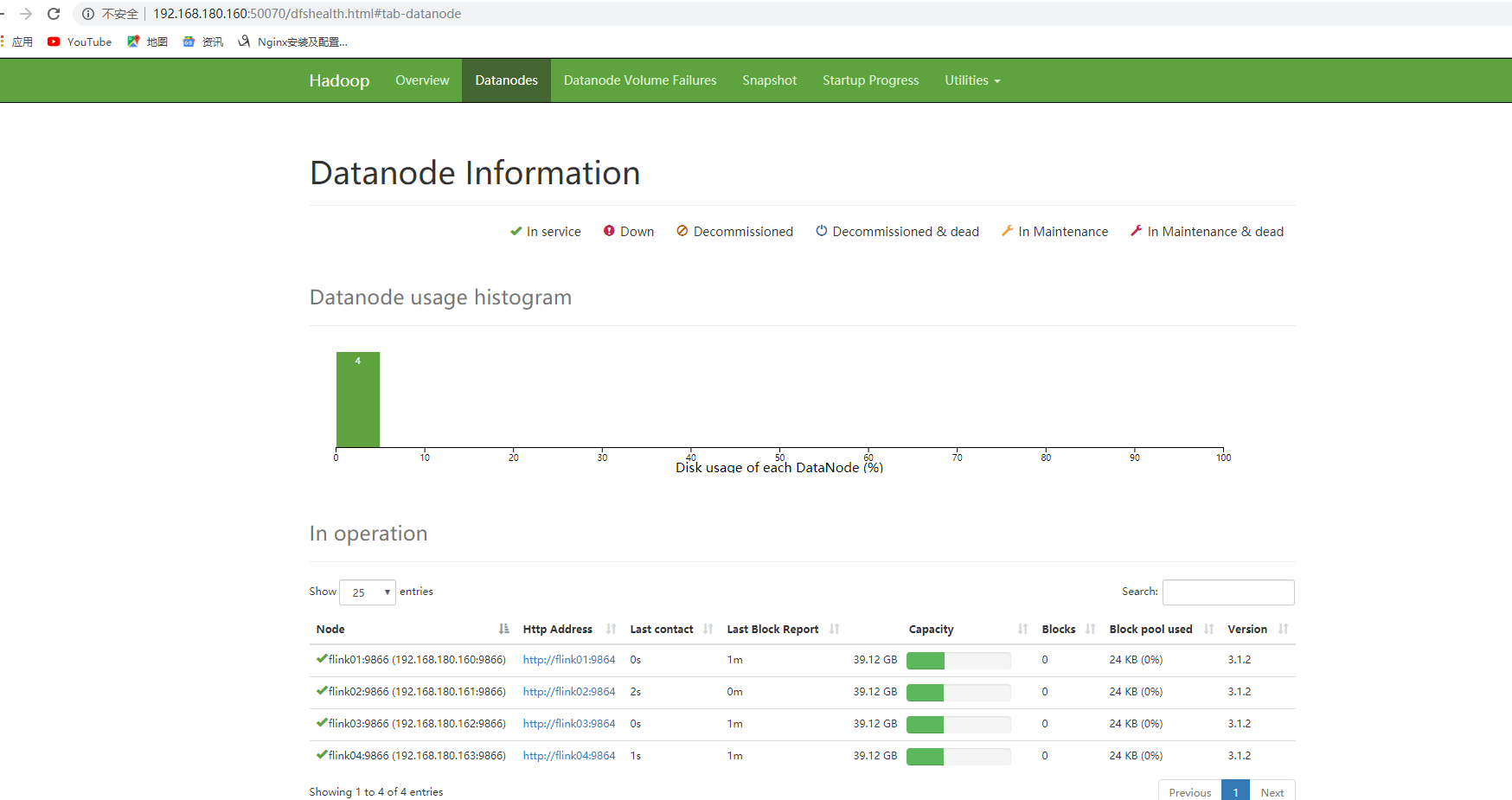

Hadoop集群搭建(主要还是为了hdfs, On-Yarn后面再弄)

集群分配

| | NN-1 | NN-2 | DN | ZK | ZKFC | JN |

| ------- | ---- | ---- | ---- | ---- | ---- | ---- |

| flink01 | * | | * | * | * | * |

| flink02 | | * | * | * | * | * |

| flink03 | | | * | * | | * |

| flink04 | | | * | * | | * |

上传tar包并解压到指定路径:

tar -zxvf hadoop-3.1.2.tar.gz -C /opt/ronnie

vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/hadoop-env.sh 将 环境文件中的jdk路径替换为本机jdk路径

export JAVA_HOME=/usr/lib/jvm/jdk1.8

vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/mapred-env.sh, 添加:

export JAVA_HOME=/usr/lib/jvm/jdk1.8

vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/yarn-env.sh,添加:

export JAVA_HOME=/usr/lib/jvm/jdk1.8

vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/core-site.xml 修改core-site文件

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://ronnie</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>flink01:2181,flink02:2181,flink03:2181,flink04:2181</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/var/ronnie/hadoop/ha</value>

</property>

</configuration>

vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/hdfs-site.xml

<configuration>

<property>

<name>dfs.nameservices</name>

<value>ronnie</value>

</property>

<property>

<name>dfs.ha.namenodes.ronnie</name>

<value>nn1,nn2</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ronnie.nn1</name>

<value>flink01:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ronnie.nn2</name>

<value>flink02:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.ronnie.nn1</name>

<value>flink01:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.ronnie.nn2</name>

<value>flink02:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://flink01:8485;flink02:8485;flink03:8485;flink04:8485/ronnie</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/var/ronnie/hadoop/ha/jn</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.ronnie</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_dsa</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

</configuration>vim /opt/ronnie/hadoop-3.1.2/etc/hadoop/workers修改工作组

flink01

flink02

flink03

flink04

vim /opt/ronnie/hadoop-3.1.2/sbin/start-dfs.sh

vim /opt/ronnie/hadoop-3.1.2/sbin/stop-dfs.sh

在文件顶部头文件之后添加:

HDFS_DATANODE_USER=root HADOOP_SECURE_DN_USER=hdfs HDFS_NAMENODE_USER=root HDFS_SECONDARYNAMENODE_USER=root HDFS_JOURNALNODE_USER=root HDFS_ZKFC_USER=root

vim /opt/ronnie/hadoop-3.1.2/sbin/start-yarn.sh

vim /opt/ronnie/hadoop-3.1.2/sbin/stop-yarn.sh

在文件顶部头文件之后添加:

YARN_RESOURCEMANAGER_USER=root HADOOP_SECURE_DN_USER=yarn YARN_NODEMANAGER_USER=root

将文件发送给其他三台虚拟机:

scp -r /opt/ronnie/hadoop-3.1.2/ root@flink02:/opt/ronnie/

scp -r /opt/ronnie/hadoop-3.1.2/ root@flink03:/opt/ronnie/

scp -r /opt/ronnie/hadoop-3.1.2/ root@flink04:/opt/ronnie/

vim ~/.bashrc 添加hadoop路径

#HADOOP VARIABLES

export HADOOP_HOME=/opt/ronnie/hadoop-3.1.2

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

source ~/.bashrc 使新配置生效(记得每台都要改)

hadoop version查看版本, 显示如下则hadoop路径配置成功:

root@flink01:~# hadoop version

Hadoop 3.1.2

Source code repository https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a

Compiled by sunilg on 2019-01-29T01:39Z

Compiled with protoc 2.5.0

From source with checksum 64b8bdd4ca6e77cce75a93eb09ab2a9

This command was run using /opt/ronnie/hadoop-3.1.2/share/hadoop/common/hadoop-common-3.1.2.jar

启动集群:

启动Zookeeper

zkServer.sh start

在 flink01, flink02, flink03, flink04上启动journalnode

hadoop-daemon.sh start journalnode

jps 查看进程

root@flink01:~# jps

2842 QuorumPeerMain

3068 Jps

3004 JournalNode在两台NameNode中选一台进行格式化(这里选flink01)

- hdfs namenode -format

- hdfs --daemon start 启动 namenode

在另一台NameNode上同步格式化后的相关信息

- hdfs namenode -bootstrapStandby

格式化zkfc

- hdfs zkfc -formatZK

启动 hdfs 集群

- start-dfs.sh

打开50070端口:

- hdfs搭建成功

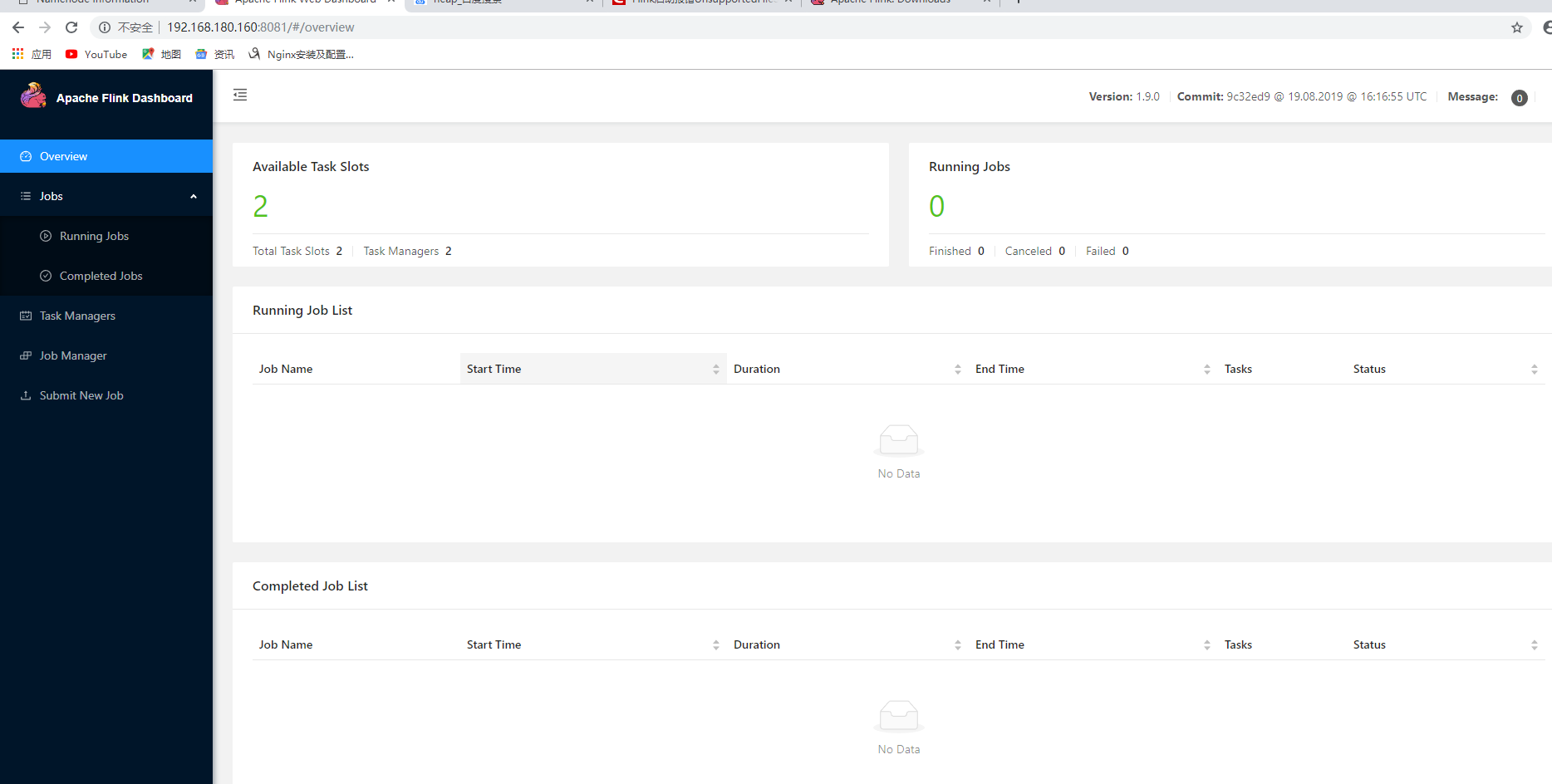

Flink 集群搭建

上传 tar 包并解压到指定目录

这次发生了一个问题, rz -E上传 乱码了, 解决方案: rz -be 上传

解压到指定目录

tar zxvf flink-1.9.0-bin-scala_2.12.tgz -C /opt/ronnie/

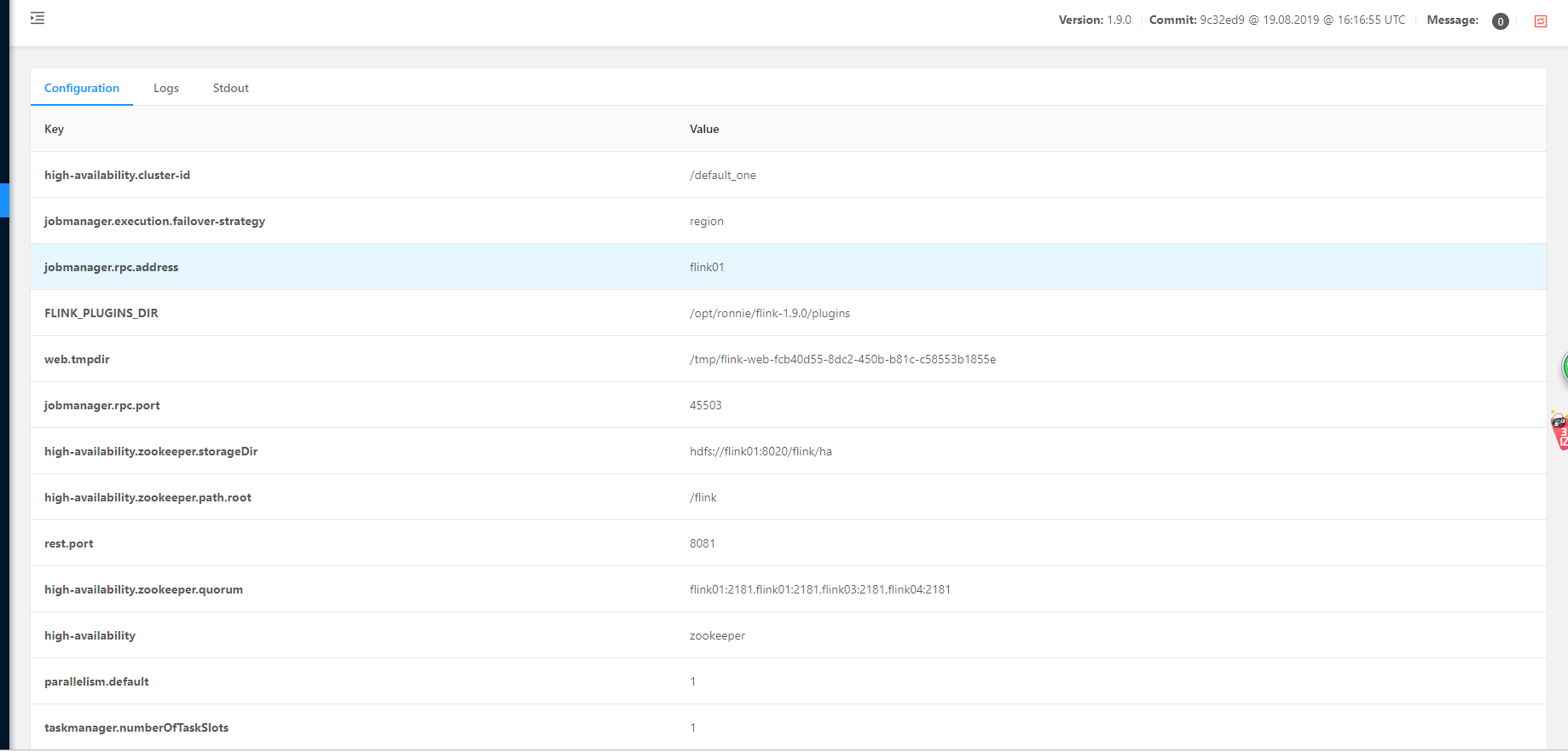

- vim /opt/ronnie/flink-1.9.0/conf/flink-conf.yaml 修改flink配置文件: ```shell

# 如果你有多个jdk那还是得指明使用的哪个版本的位置

env.java.home: /usr/lib/jvm/jdk1.8

# JobManager的rpc地址

jobmanager.rpc.address: flink01 # The RPC port where the JobManager is reachable.

# rpc 端口号 jobmanager.rpc.port: 6123 # The heap size for the JobManager JVM # JobManager的 JVM 的堆的大小 jobmanager.heap.size: 1024m # The heap size for the TaskManager JVM # TaskManager 的 堆的大小 taskmanager.heap.size: 1024m # The number of task slots that each TaskManager offers. Each slot runs one parallel pipeline. # 每个TaskManager提供的任务槽的数量, 每个槽运行一条并行的管道 taskmanager.numberOfTaskSlots: 1 # The parallelism used for programs that did not specify and other parallelism. # 程序的并行度 parallelism.default: 1 # 高可用配置 high-availability: zookeeper high-availability.zookeeper.quorum: flink01:2181,flink01:2181,flink03:2181,flink04:2181 high-availability.zookeeper.path.root: /flink high-availability.cluster-id: /default_one # 这个必须是NameNode的8020 high-availability.zookeeper.storageDir: hdfs://flink01:8020/flink/ha

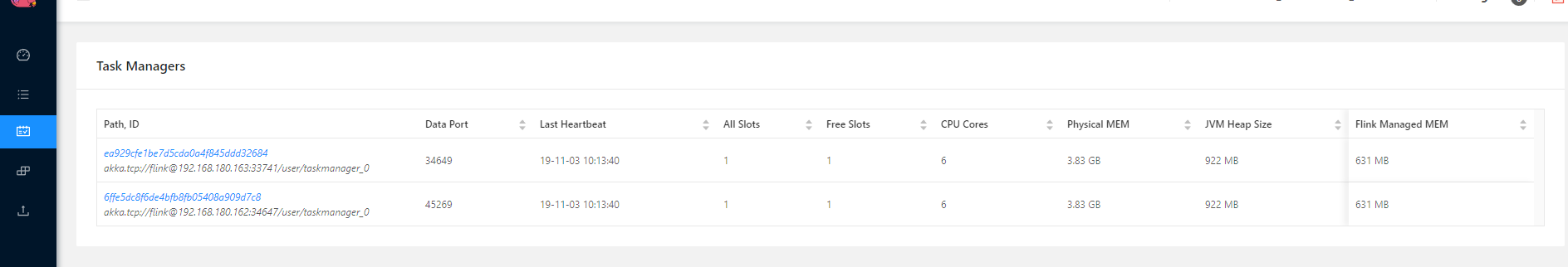

vim /opt/ronnie/flink-1.9.0/conf/masters

flink01:8081

flink02:8081

vim /opt/ronnie/flink-1.9.0/conf/slaves

flink03

flink04

vim ~/.bashrc 修改配置文件

# Flink

export FLINK_HOME=/opt/ronnie/flink-1.9.0

export PATH=$FLINK_HOME/bin:$PATH

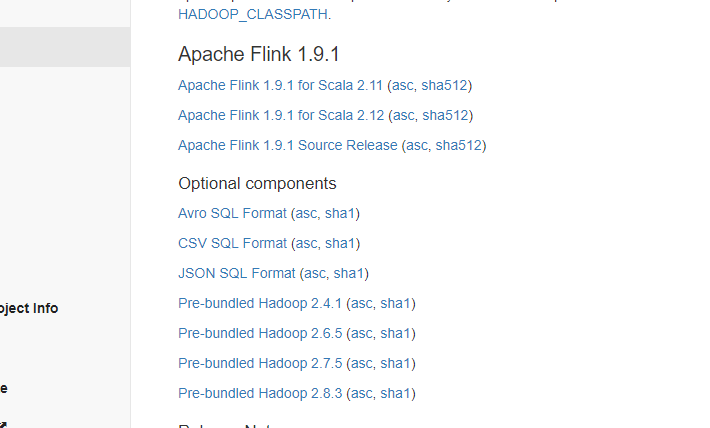

start-cluster.sh 后jps发现没有对应jobManager进程,查看flink日志发现报错:

UnsupportedFileSystemSchemeException: Hadoop is not in the classpath/dependencies.

需要去官网下载Pre-bundled Hadoop

将jar包上传至 flink 目录下的 lib目录

cd /opt/ronnie/flink-1.9.0/lib

将 jar 包 发送至其他三台虚拟机

scp flink-shaded-hadoop-2-uber-2.8.3-7.0.jar root@flink02:`pwd`

scp flink-shaded-hadoop-2-uber-2.8.3-7.0.jar root@flink03:`pwd`

scp flink-shaded-hadoop-2-uber-2.8.3-7.0.jar root@flink03:`pwd`

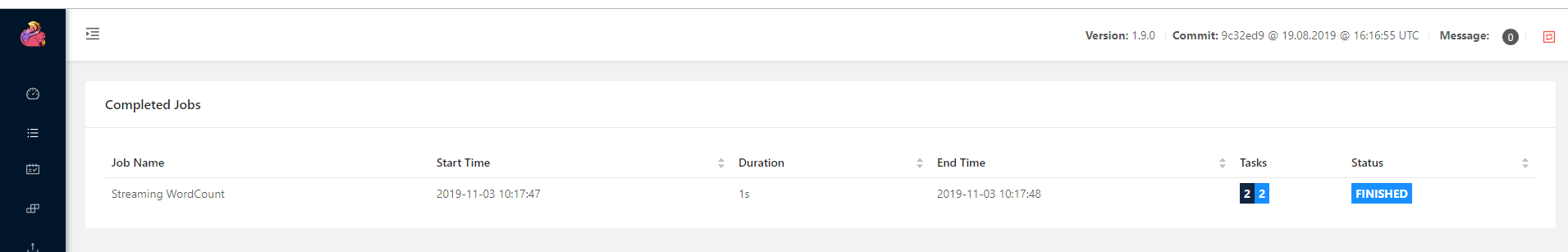

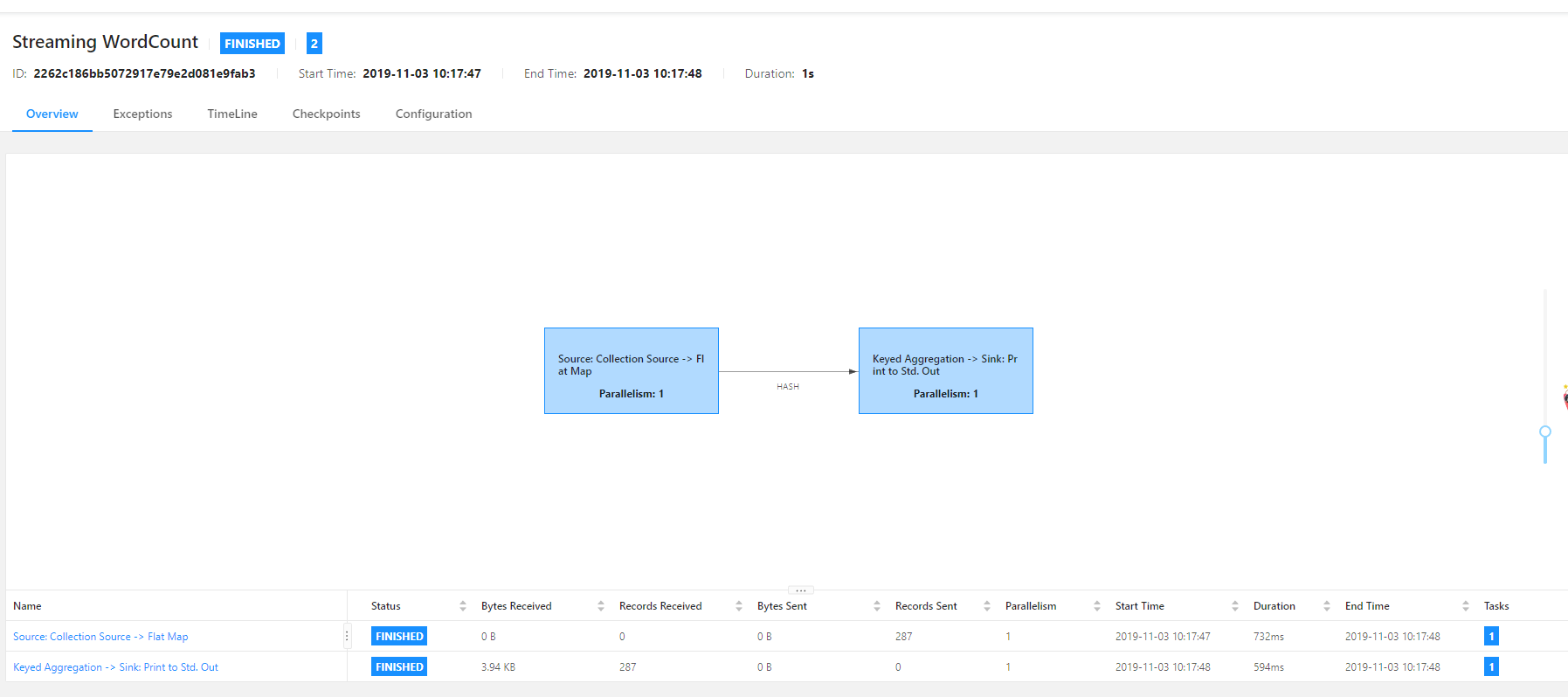

start-cluster.sh 启动 flink 集群, jps查看有对应的StandaloneSessionClusterEntrypoint进程后, 打开8081端口

运行一下自带的wordCount测试一下

cd /opt/ronnie/flink-1.9.0

flink run examples/streaming/WordCount.jar

执行结果:

Starting execution of program

Executing WordCount example with default input data set.

Use --input to specify file input.

Printing result to stdout. Use --output to specify output path.

Program execution finished

Job with JobID 2262c186bb5072917e79e2d081e9fab3 has finished.

Job Runtime: 1311 ms

UI界面

ubuntu18.04 flink-1.9.0 Standalone集群搭建的更多相关文章

- 04、Spark Standalone集群搭建

04.Spark Standalone集群搭建 4.1 集群概述 独立模式是Spark集群模式之一,需要在多台节点上安装spark软件包,并分别启动master节点和worker节点.master节点 ...

- Redis 5.0.5集群搭建

Redis 5.0.5集群搭建 一.概述 Redis3.0版本之后支持Cluster. 1.1.redis cluster的现状 目前redis支持的cluster特性: 1):节点自动发现 2):s ...

- ubuntu18.04 基于Hadoop3.1.2集群的Hbase2.0.6集群搭建

前置条件: 之前已经搭好了带有HDFS, MapReduce,Yarn 的 Hadoop 集群 链接: ubuntu18.04.2 hadoop3.1.2+zookeeper3.5.5高可用完全分布式 ...

- Hadoop2.0 HA集群搭建步骤

上一次搭建的Hadoop是一个伪分布式的,这次我们做一个用于个人的Hadoop集群(希望对大家搭建集群有所帮助): 集群节点分配: Park01 Zookeeper NameNode (active) ...

- Standalone集群搭建和Spark应用监控

注:图片如果损坏,点击文章链接:https://www.toutiao.com/i6815920501530034696/ 承接上一篇文档<Spark词频前十的统计练习> Spark on ...

- java_redis3.0.3集群搭建

redis3.0版本之后支持Cluster,具体介绍redis集群我就不多说,了解请看redis中文简介. 首先,直接访问redis.io官网,下载redis.tar.gz,现在版本3.0.3,我下面 ...

- Redis 3.0.2集群搭建以及相关问题汇总

Redis3 正式支持了 cluster,是为了解决构建redis集群时的诸多不便 (1)像操作单个redis一样操作key,不用操心key在哪个节点上(2)在线动态添加.删除redis节点,不用停止 ...

- CDH 6.0.1 集群搭建 「After install」

集群搭建完成之后其实还有很多配置工作要做,这里我列举一些我去做的一些. 首先是去把 zk 的角色重新分配一下,不知道是不是我在配置的时候遗漏了什么在启动之后就有报警说目前只能检查到一个节点.去将 zk ...

- CDH 6.0.1 集群搭建 「Before install」

从这一篇文章开始会有三篇文章依次介绍集群搭建 「Before install」 「Process」 「After install」 继上一篇使用 docker 部署单机 CDH 的文章,当我们使用 d ...

随机推荐

- 安装centos8

一. 镜像下载 国内源下载镜像:(推荐) http://mirrors.aliyun.com/centos/8.0.1905/isos/x86_64/CentOS-8-x86_64-1905- ...

- PLSQL常用设置-提高开发效率

1.登录后默认自动选中My Objects 默认情况下,PLSQL Developer登录后,Brower里会选择All objects,如果你登录的用户是dba,要展开tables目录,正常情况都需 ...

- WebGL简易教程(八):三维场景交互

目录 1. 概述 2. 实例 2.1. 重绘刷新 2.2. 鼠标事件调整参数 3. 结果 4. 参考 1. 概述 在上一篇教程<WebGL简易教程(七):绘制一个矩形体>中,通过一个绘制矩 ...

- 谷歌助力,快速实现 Java 应用容器化

原文地址:梁桂钊的博客 博客地址:http://blog.720ui.com 欢迎关注公众号:「服务端思维」.一群同频者,一起成长,一起精进,打破认知的局限性. Google 在 2018 年下旬开源 ...

- Java 学习笔记之 实例变量非线程安全

实例变量非线程安全: 如果多个线程共同访问1个对象中的实例变量,则可能出现“非线程安全”问题. public class UnSafeHasSelfPrivateNum { private int n ...

- Kafka常用运维操作

创建主题kafka-topics.sh --zookeeper localhost:2181 --create --topic my-topic --replication-factor 3 --pa ...

- Java8新特性之空指针异常的克星Optional类

Java8新特性系列我们已经介绍了Stream.Lambda表达式.DateTime日期时间处理,最后以"NullPointerException" 的克星Optional类的讲解 ...

- Python玩转人工智能最火框架 TensorFlow应用实践 ☝☝☝

Python玩转人工智能最火框架 TensorFlow应用实践 (一个人学习或许会很枯燥,但是寻找更多志同道合的朋友一起,学习将会变得更加有意义✌✌) 全民人工智能时代,不甘心只做一个旁观者,那就现在 ...

- [JZOJ100047] 【NOIP2017提高A组模拟7.14】基因变异

Description 21 世纪是生物学的世纪,以遗传与进化为代表的现代生物理论越来越多的 进入了我们的视野. 如同大家所熟知的,基因是遗传因子,它记录了生命的基本构造和性能. 因此生物进化与基因的 ...

- [JOYOI1510] 专家复仇 - Floyd

题目限制 时间限制 内存限制 评测方式 题目来源 1000ms 131072KiB 标准比较器 Local 题目背景 外星人完成对S国的考察后,准备返回,可他们的飞碟已经没燃料了……S国的专家暗自窃喜 ...