QTI EAS study notes: find_energy_efficient_cpu

Energy Aware Scheduling (EAS) is a Linux kernel scheduler enhancement developed by ARM and Linaro.

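The original CFS scheduler places tasks according to scheduling policy and throughput. For example, when a new task is created and an idle CPU is available, CFS always puts the new task on that idle CPU. From a power-saving standpoint this is not always the best decision, and EAS was designed to address exactly this problem: it saves power at scheduling time without compromising performance.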

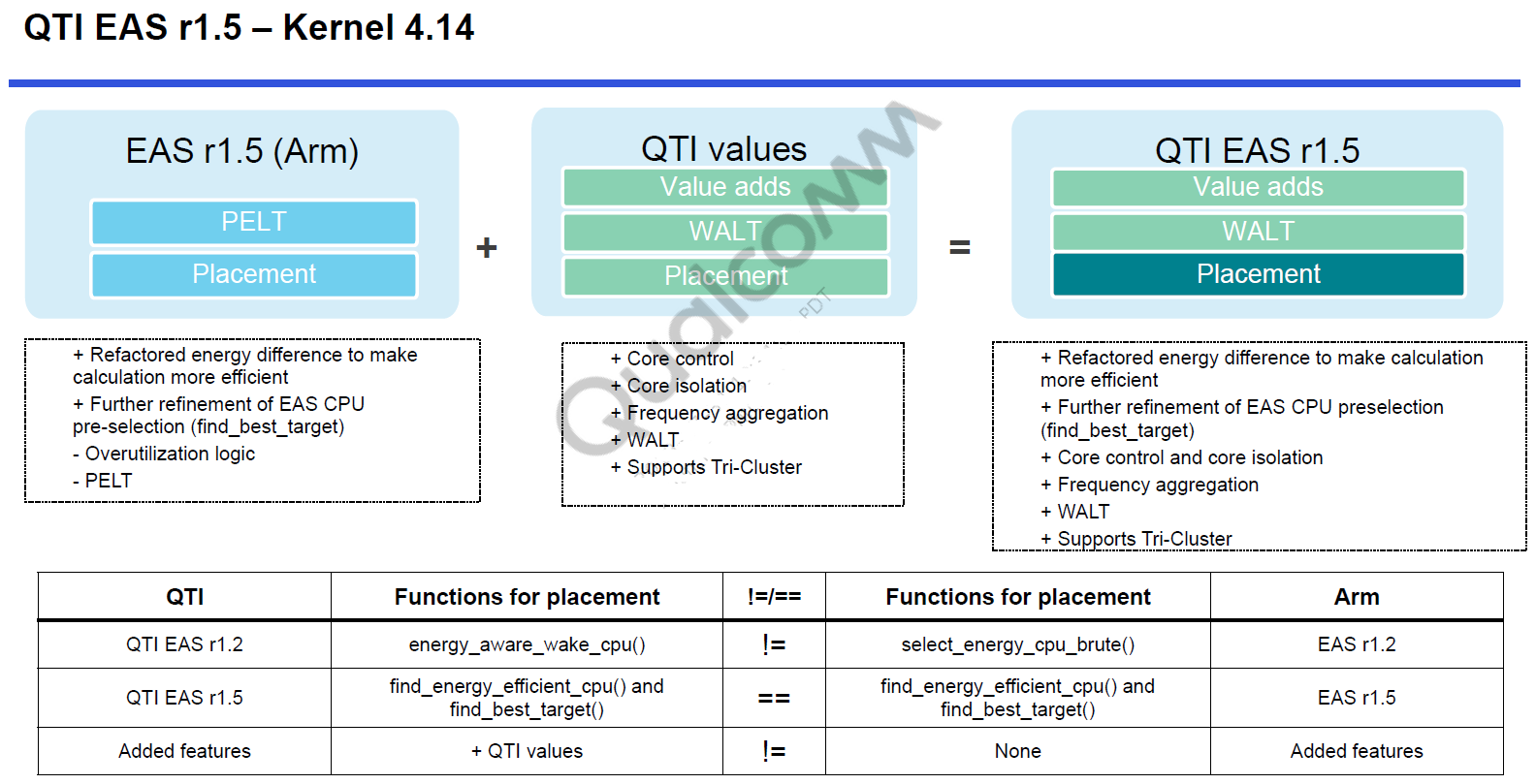

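Starting with SDM845, QTI (Qualcomm Technologies, Inc.) modified EAS to meet the needs of the mobile market, adding its own features on top of EAS to get better performance and power consumption.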

Energy model

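In the dts, a separate energy model is predefined for each CPU platform. The model consists mainly of [frequency, energy] arrays, with one entry per OPP (Operating Performance Point) of the CPU and of the cluster; it also gives the energy consumed in each idle state, the idle cost.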

```dts
CPU0: cpu@0 {
	device_type = "cpu";
	compatible = "arm,armv8";
	reg = <0x0 0x0>;
	enable-method = "psci";
	efficiency = <>;
	cache-size = <0x8000>;
	cpu-release-addr = <0x0 0x90000000>;
	qcom,lmh-dcvs = <&lmh_dcvs0>;
	#cooling-cells = <>;
	next-level-cache = <&L2_0>;
	sched-energy-costs = <&CPU_COST_0 &CLUSTER_COST_0>; // all little cores use CPU_COST_0 and CLUSTER_COST_0
	...

CPU4: cpu@400 {
	device_type = "cpu";
	compatible = "arm,armv8";
	reg = <0x0 0x400>;
	enable-method = "psci";
	efficiency = <>;
	cache-size = <0x20000>;
	cpu-release-addr = <0x0 0x90000000>;
	qcom,lmh-dcvs = <&lmh_dcvs1>;
	#cooling-cells = <>;
	next-level-cache = <&L2_400>;
	sched-energy-costs = <&CPU_COST_1 &CLUSTER_COST_1>; // all big cores use CPU_COST_1 and CLUSTER_COST_1
	...
```

The corresponding arrays are defined as follows:

```dts
energy_costs: energy-costs {
	compatible = "sched-energy";

	CPU_COST_0: core-cost0 {
		busy-cost-data = <
			/* speedbin 0,1 */
			/* speedbin 2 */
			/* speedbin 0,1 */
			/* speedbin 2 */
		>;
		idle-cost-data = <
		>;
	};

	CPU_COST_1: core-cost1 {
		busy-cost-data = <
			/* speedbin 1,2 */
			/* speedbin 1 */
			/* speedbin 2 */
			/* speedbin 2 */
		>;
		idle-cost-data = <
		>;
	};

	CLUSTER_COST_0: cluster-cost0 {
		busy-cost-data = <
			/* speedbin 0,1 */
			/* speedbin 2 */
			/* speedbin 0,1 */
			/* speedbin 2 */
		>;
		idle-cost-data = <
		>;
	};

	CLUSTER_COST_1: cluster-cost1 {
		busy-cost-data = <
			/* speedbin 1,2 */
			/* speedbin 1 */
			/* speedbin 2 */
			/* speedbin 2 */
		>;
		idle-cost-data = <
		>;
	};
}; /* energy-costs */
```

kernel/sched/energy.c iterates over all CPUs and reads this data from the dts:

```c
for_each_possible_cpu(cpu) {
	cn = of_get_cpu_node(cpu, NULL);
	if (!cn) {
		pr_warn("CPU device node missing for CPU %d\n", cpu);
		return;
	}

	if (!of_find_property(cn, "sched-energy-costs", NULL)) {
		pr_warn("CPU device node has no sched-energy-costs\n");
		return;
	}

	for_each_possible_sd_level(sd_level) {
		cp = of_parse_phandle(cn, "sched-energy-costs", sd_level);
		if (!cp)
			break;

		prop = of_find_property(cp, "busy-cost-data", NULL);
		if (!prop || !prop->value) {
			pr_warn("No busy-cost data, skipping sched_energy init\n");
			goto out;
		}

		sge = kcalloc(1, sizeof(struct sched_group_energy),
			      GFP_NOWAIT);
		if (!sge)
			goto out;

		nstates = (prop->length / sizeof(u32)) / 2;
		cap_states = kcalloc(nstates,
				     sizeof(struct capacity_state),
				     GFP_NOWAIT);
		if (!cap_states) {
			kfree(sge);
			goto out;
		}

		// store the [freq, energy] pairs read from the dts
		for (i = 0, val = prop->value; i < nstates; i++) {
			cap_states[i].cap = SCHED_CAPACITY_SCALE;
			cap_states[i].frequency = be32_to_cpup(val++);
			cap_states[i].power = be32_to_cpup(val++);
		}

		// number of states = number of [freq, energy] pairs, i.e. the
		// flattened array length divided by 2
		sge->nr_cap_states = nstates;
		sge->cap_states = cap_states;

		prop = of_find_property(cp, "idle-cost-data", NULL);
		if (!prop || !prop->value) {
			pr_warn("No idle-cost data, skipping sched_energy init\n");
			kfree(sge);
			kfree(cap_states);
			goto out;
		}

		nstates = (prop->length / sizeof(u32));
		idle_states = kcalloc(nstates,
				      sizeof(struct idle_state),
				      GFP_NOWAIT);
		if (!idle_states) {
			kfree(sge);
			kfree(cap_states);
			goto out;
		}

		for (i = 0, val = prop->value; i < nstates; i++)
			idle_states[i].power = be32_to_cpup(val++); // store the idle cost data read from the dts

		sge->nr_idle_states = nstates; // number of idle states = length of idle-cost-data
		sge->idle_states = idle_states;

		// Store this CPU's energy model in sge_array[cpu][sd_level]: cpu is the
		// CPU index, and sd_level selects the sched_domain level (CPU level or
		// cluster level)
		sge_array[cpu][sd_level] = sge;
	}
}
```
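The busy-cost-data parsing above can be tried out in user space. The sketch below is not kernel code: `struct cap_state_sketch`, `be32_at()` and `parse_busy_cost()` are hypothetical names, the byte-by-byte decode stands in for `be32_to_cpup()`, and the sample values are made up because the real dts values are not shown here. It illustrates why `nstates` is the number of u32 cells divided by 2.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the kernel's struct capacity_state. */
struct cap_state_sketch {
	uint32_t frequency;
	uint32_t power;
};

/* Decode one big-endian u32 cell from a raw DT property (like be32_to_cpup). */
static uint32_t be32_at(const uint8_t *p)
{
	return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
	       ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/*
 * Decode a busy-cost-data property of prop_len bytes into out[].
 * Each state is a <freq power> pair, so nstates = (len / sizeof(u32)) / 2.
 * The sketch assumes a well-formed property (an even number of cells).
 * Returns the number of states written (at most max_states).
 */
static size_t parse_busy_cost(const uint8_t *prop, size_t prop_len,
			      struct cap_state_sketch *out, size_t max_states)
{
	size_t nstates = (prop_len / sizeof(uint32_t)) / 2;
	size_t i;

	assert(prop_len % (2 * sizeof(uint32_t)) == 0);
	if (nstates > max_states)
		nstates = max_states;

	for (i = 0; i < nstates; i++) {
		out[i].frequency = be32_at(prop + (2 * i) * 4);
		out[i].power = be32_at(prop + (2 * i + 1) * 4);
	}
	return nstates;
}
```

Two pairs of cells (16 bytes) decode to two states, mirroring `nstates = (prop->length / sizeof(u32)) / 2` in energy.c.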

Load Tracking

QTI EAS computes load with WALT (Window Assisted Load Tracking), a window-based load accounting method; see my earlier article for details: https://www.cnblogs.com/lingjiajun/p/12317090.html

WALT tracks two key quantities: task_util and cpu_util.

During wakeup task placement, the scheduler uses both task utilization and CPU utilization.

In effect, load is converted into utilization and normalized to a scale of 1024:

Boosted task utilization = task_util + (1024 - task_util) × boost_percent, where boost_percent is the schedtune boost percentage.

CPU utilization = 1024 × (accumulated runnable average / window size). As I understand it, the accumulated runnable average is the sum of the utilization of all tasks on the rq.
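A minimal sketch of the two formulas above, assuming integer arithmetic and a boost percentage in the range 0-100. The function names are hypothetical, and the real WALT and schedtune code (rounding, negative boost, per-window bookkeeping) is more involved than this.

```c
#include <assert.h>
#include <stdint.h>

#define SCHED_CAPACITY_SCALE 1024UL

/*
 * Boosted task util, per the formula above:
 *   boosted = util + (1024 - util) * boost_pct / 100
 * util is clamped to 1024 so the result never exceeds the scale.
 */
static unsigned long boosted_util(unsigned long util, unsigned int boost_pct)
{
	assert(boost_pct <= 100);
	if (util > SCHED_CAPACITY_SCALE)
		util = SCHED_CAPACITY_SCALE;
	return util + (SCHED_CAPACITY_SCALE - util) * boost_pct / 100;
}

/*
 * Window-based CPU util: 1024 * runnable_time / window_size,
 * clamped so a fully busy window maps to 1024.
 */
static unsigned long window_cpu_util(uint64_t runnable_ns, uint64_t window_ns)
{
	assert(window_ns > 0);
	if (runnable_ns > window_ns)
		runnable_ns = window_ns;
	return (unsigned long)(SCHED_CAPACITY_SCALE * runnable_ns / window_ns);
}
```

For example, a task with util 200 and a 10% boost becomes 200 + 824 × 10 / 100 = 282, and 10 ms of runnable time in a 20 ms window gives a CPU util of 512.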

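Key concepts of task placement:

Task placement is the main module through which EAS influences scheduling. Its key points are:

1. EAS relies on the energy model to choose the CPU precisely.

2. The energy model is used to estimate the energy change caused by placing a task on a CPU, or by migrating a task from one CPU to another.

3. As long as performance is not hurt (for example, minimum latency requirements are still met), EAS prefers the CPU that consumes the least energy to run the current task.

4. EAS is applied only when the system is not overutilized.

5. QTI EAS follows the same concepts as EAS.

6. Once the system is overutilized, QTI EAS still performs energy-aware placement on the wakeup path; it does not take the overutilized state into account.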
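Points 4 and 6 hinge on the notion of an overutilized CPU. The sketch below uses an upstream-style rule with a 1280/1024 margin, so a CPU counts as overutilized once its util exceeds roughly 80% of its capacity. This margin is an assumption borrowed from upstream kernels; QTI kernels use their own tunables (for example inside `__cpu_overutilized()`), and the helper names here are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Assumed upstream-style margin: util * 1280 > capacity * 1024 (~80%). */
#define CAPACITY_MARGIN 1280UL

static bool cpu_overutilized_sketch(unsigned long capacity, unsigned long util)
{
	assert(capacity > 0);
	return capacity * 1024 < util * CAPACITY_MARGIN;
}

/*
 * Upstream-style system check: one overutilized CPU is enough to mark the
 * system overutilized and disable energy-aware placement (point 4).
 */
static bool system_overutilized(const unsigned long *cap,
				const unsigned long *util, int nr_cpus)
{
	for (int i = 0; i < nr_cpus; i++)
		if (cpu_overutilized_sketch(cap[i], util[i]))
			return true;
	return false;
}
```

With capacity 1024 the threshold falls between util 819 and 820; on a little core with capacity 400 it falls between 320 and 321.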

Core EAS scheduling algorithm

The main task placement function (for CFS tasks) in each EAS version:

Zone scheduler: select_best_cpu()

QTI EAS r1.: energy_aware_wake_cpu()

QTI EAS r1.: find_energy_efficient_cpu()

Task placement call paths in QTI EAS r1. (kernel 4.14):

Task wake-up: try_to_wake_up() → select_task_rq_fair() → find_energy_efficient_cpu()

Scheduler tick: scheduler_tick() → check_for_migration() → find_energy_efficient_cpu()

New task: do_fork() → wake_up_new_task() → select_task_rq_fair() → find_energy_efficient_cpu()

The goal of the EAS task placement code is to find a suitable CPU on which to run the current task p.

The main logic lives in find_energy_efficient_cpu():

```c
/*
 * find_energy_efficient_cpu(): Find most energy-efficient target CPU for the
 * waking task. find_energy_efficient_cpu() looks for the CPU with maximum
 * spare capacity in each performance domain and uses it as a potential
 * candidate to execute the task. Then, it uses the Energy Model to figure
 * out which of the CPU candidates is the most energy-efficient.
 *
 * The rationale for this heuristic is as follows. In a performance domain,
 * all the most energy efficient CPU candidates (according to the Energy
 * Model) are those for which we'll request a low frequency. When there are
 * several CPUs for which the frequency request will be the same, we don't
 * have enough data to break the tie between them, because the Energy Model
 * only includes active power costs. With this model, if we assume that
 * frequency requests follow utilization (e.g. using schedutil), the CPU with
 * the maximum spare capacity in a performance domain is guaranteed to be among
 * the best candidates of the performance domain.
 *
 * In practice, it could be preferable from an energy standpoint to pack
 * small tasks on a CPU in order to let other CPUs go in deeper idle states,
 * but that could also hurt our chances to go cluster idle, and we have no
 * ways to tell with the current Energy Model if this is actually a good
 * idea or not. So, find_energy_efficient_cpu() basically favors
 * cluster-packing, and spreading inside a cluster. That should at least be
 * a good thing for latency, and this is consistent with the idea that most
 * of the energy savings of EAS come from the asymmetry of the system, and
 * not so much from breaking the tie between identical CPUs. That's also the
 * reason why EAS is enabled in the topology code only for systems where
 * SD_ASYM_CPUCAPACITY is set.
 *
 * NOTE: Forkees are not accepted in the energy-aware wake-up path because
 * they don't have any useful utilization data yet and it's not possible to
 * forecast their impact on energy consumption. Consequently, they will be
 * placed by find_idlest_cpu() on the least loaded CPU, which might turn out
 * to be energy-inefficient in some use-cases. The alternative would be to
 * bias new tasks towards specific types of CPUs first, or to try to infer
 * their util_avg from the parent task, but those heuristics could hurt
 * other use-cases too. So, until someone finds a better way to solve this,
 * let's keep things simple by re-using the existing slow path.
 */
static int find_energy_efficient_cpu(struct task_struct *p, int prev_cpu,
				     int sync, int sibling_count_hint)
{
	unsigned long prev_energy = ULONG_MAX, best_energy = ULONG_MAX;
	struct root_domain *rd = cpu_rq(smp_processor_id())->rd;
	int weight, cpu = smp_processor_id(), best_energy_cpu = prev_cpu; // cpu: the CPU currently running this code
	unsigned long cur_energy;
	struct perf_domain *pd;
	struct sched_domain *sd;
	cpumask_t *candidates;
	bool is_rtg;
	struct find_best_target_env fbt_env;
	bool need_idle = wake_to_idle(p); // whether the PF_WAKE_UP_IDLE flag is set
	int placement_boost = task_boost_policy(p); // sched boost policy: none/on_big/on_all; also depends on sched_boost and schedtune settings
	u64 start_t = 0;
	int delta = 0;
	int task_boost = per_task_boost(p); // only networking enables this boost; treat it as "no boost" here
	int boosted = (schedtune_task_boost(p) > 0) || (task_boost > 0); // schedtune boost (or per-task boost) enabled for p
	int start_cpu = get_start_cpu(p); // the CPU from which the search for a target CPU starts

	if (start_cpu < 0)
		goto eas_not_ready;

	is_rtg = task_in_related_thread_group(p); // whether p belongs to a related thread group

	fbt_env.fastpath = 0;

	if (trace_sched_task_util_enabled())
		start_t = sched_clock(); // for the trace log

	/* Pre-select a set of candidate CPUs. */
	candidates = this_cpu_ptr(&energy_cpus);
	cpumask_clear(candidates);

	if (need_idle)
		sync = 0;

	// three conditions: sync hint enabled, sync == 1, and a bias towards the current CPU
	if (sysctl_sched_sync_hint_enable && sync &&
	    bias_to_this_cpu(p, cpu, start_cpu)) {
		best_energy_cpu = cpu; // the current CPU
		fbt_env.fastpath = SYNC_WAKEUP;
		goto done;
	}

	// sibling_count_hint: how many threads are woken up by this event
	if (is_many_wakeup(sibling_count_hint) && prev_cpu != cpu &&
	    bias_to_this_cpu(p, prev_cpu, start_cpu)) {
		best_energy_cpu = prev_cpu; // pick prev_cpu
		fbt_env.fastpath = MANY_WAKEUP;
		goto done;
	}

	rcu_read_lock();
	pd = rcu_dereference(rd->pd);
	if (!pd)
		goto fail;

	/*
	 * Energy-aware wake-up happens on the lowest sched_domain starting
	 * from sd_asym_cpucapacity spanning over this_cpu and prev_cpu.
	 */
	sd = rcu_dereference(*this_cpu_ptr(&sd_asym_cpucapacity));
	while (sd && !cpumask_test_cpu(prev_cpu, sched_domain_span(sd)))
		sd = sd->parent;
	if (!sd)
		goto fail;

	sync_entity_load_avg(&p->se); // update the PELT load of p's sched_entity
	if (!task_util_est(p))
		goto unlock;

	if (sched_feat(FIND_BEST_TARGET)) { // is the FIND_BEST_TARGET sched feature on? (it currently is)
		fbt_env.is_rtg = is_rtg;
		fbt_env.placement_boost = placement_boost;
		fbt_env.need_idle = need_idle;
		fbt_env.start_cpu = start_cpu;
		fbt_env.boosted = boosted;
		fbt_env.strict_max = is_rtg &&
			(task_boost == TASK_BOOST_STRICT_MAX);
		fbt_env.skip_cpu = is_many_wakeup(sibling_count_hint) ?
				   cpu : -1;

		// (1) core function: puts the target_cpu and backup_cpu it finds into candidates
		find_best_target(NULL, candidates, p, &fbt_env);
	} else {
		select_cpu_candidates(sd, candidates, pd, p, prev_cpu);
	}

	/* Bail out if no candidate was found. */
	weight = cpumask_weight(candidates); // neither a target_cpu nor a backup_cpu: goto unlock
	if (!weight)
		goto unlock;

	/* If there is only one sensible candidate, select it now. */
	// exactly one candidate, and either p is prefer_idle and the CPU is idle,
	// or the CPU is prev_cpu: it becomes best_energy_cpu
	cpu = cpumask_first(candidates);
	if (weight == 1 && ((schedtune_prefer_idle(p) && idle_cpu(cpu)) ||
			    (cpu == prev_cpu))) {
		best_energy_cpu = cpu;
		goto unlock;
	}

#ifdef CONFIG_SCHED_WALT
	if (p->state == TASK_WAKING) // for a waking task, use its task_util as delta
		delta = task_util(p);
#endif
	// If any of these holds, the first candidate becomes best_energy_cpu and no
	// energy is computed: sched_boost placement, need_idle (PF_WAKE_UP_IDLE),
	// schedtune boost, p in a related thread group, prev_cpu would be
	// overutilized with delta added, p would be a misfit on prev_cpu, or
	// prev_cpu is isolated
	if (task_placement_boost_enabled(p) || need_idle || boosted ||
	    is_rtg || __cpu_overutilized(prev_cpu, delta) ||
	    !task_fits_max(p, prev_cpu) || cpu_isolated(prev_cpu)) {
		best_energy_cpu = cpu;
		goto unlock;
	}

	if (cpumask_test_cpu(prev_cpu, &p->cpus_allowed)) // is prev_cpu in p's allowed cpumask?
		prev_energy = best_energy = compute_energy(p, prev_cpu, pd); // (2) yes: energy of p on prev_cpu
	else
		prev_energy = best_energy = ULONG_MAX; // no: maximum energy, i.e. unsuitable

	/* Select the best candidate energy-wise. */
	// compare energies to pick best_energy_cpu and best_energy
	for_each_cpu(cpu, candidates) {
		if (cpu == prev_cpu) // skip prev_cpu
			continue;
		cur_energy = compute_energy(p, cpu, pd); // energy if p moves to this candidate
		trace_sched_compute_energy(p, cpu, cur_energy, prev_energy,
					   best_energy, best_energy_cpu);
		if (cur_energy < best_energy) {
			best_energy = cur_energy;
			best_energy_cpu = cpu;
		} else if (cur_energy == best_energy) {
			// tie-break when the candidate's energy equals best_energy
			if (select_cpu_same_energy(cpu, best_energy_cpu,
						   prev_cpu)) {
				best_energy = cur_energy;
				best_energy_cpu = cpu;
			}
		}
	}

unlock:
	rcu_read_unlock();

	/*
	 * Pick the prev CPU, if best energy CPU can't saves at least 6% of
	 * the energy used by prev_cpu.
	 */
	// Leave prev_cpu only if best_energy_cpu differs from it and saves more
	// than prev_energy >> 4. Shifting right by 4 divides by 16, i.e. 6.25%,
	// which is the "6%" in the comment above.
	if ((prev_energy != ULONG_MAX) && (best_energy_cpu != prev_cpu) &&
	    ((prev_energy - best_energy) <= prev_energy >> 4))
		best_energy_cpu = prev_cpu;

done:
	trace_sched_task_util(p, cpumask_bits(candidates)[0], best_energy_cpu,
			      sync, need_idle, fbt_env.fastpath, placement_boost,
			      start_t, boosted, is_rtg, get_rtg_status(p), start_cpu);

	return best_energy_cpu;

fail:
	rcu_read_unlock();
eas_not_ready:
	return -1;
}
```
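The energy comparison at the end of find_energy_efficient_cpu() boils down to a few lines. The hypothetical `pick_energy_cpu()` below takes precomputed energy estimates (standing in for compute_energy()), keeps the first candidate on ties instead of calling select_cpu_same_energy(), and then applies the same `prev_energy >> 4` (1/16, about 6%) threshold. It is a simplified model, not the kernel implementation.

```c
#include <assert.h>
#include <limits.h>

/*
 * cand[i] is a candidate CPU and energy[i] the estimated system energy if
 * the task runs there. prev_energy is the estimate for prev_cpu, or
 * ULONG_MAX if prev_cpu is not allowed.
 */
static int pick_energy_cpu(const int *cand, const unsigned long *energy, int n,
			   int prev_cpu, unsigned long prev_energy)
{
	unsigned long best_energy = prev_energy;
	int best_cpu = prev_cpu;

	assert(n >= 0);
	for (int i = 0; i < n; i++) {
		if (cand[i] == prev_cpu)
			continue;
		if (energy[i] < best_energy) { /* ties keep the earlier choice */
			best_energy = energy[i];
			best_cpu = cand[i];
		}
	}

	/* Stay on prev_cpu unless the winner saves more than prev_energy / 16. */
	if (prev_energy != ULONG_MAX && best_cpu != prev_cpu &&
	    prev_energy - best_energy <= (prev_energy >> 4))
		best_cpu = prev_cpu;

	return best_cpu;
}
```

With prev_energy = 1000 the threshold is 62, so a candidate at 950 (saving 50) does not justify leaving prev_cpu, while one at 900 (saving 100) does.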

(1)find_best_target()

```c
static void find_best_target(struct sched_domain *sd, cpumask_t *cpus,
			     struct task_struct *p,
			     struct find_best_target_env *fbt_env)
{
	unsigned long min_util = boosted_task_util(p); // p's boosted task util
	unsigned long target_capacity = ULONG_MAX;
	unsigned long min_wake_util = ULONG_MAX;
	unsigned long target_max_spare_cap = 0;
	unsigned long best_active_util = ULONG_MAX;
	unsigned long best_active_cuml_util = ULONG_MAX;
	unsigned long best_idle_cuml_util = ULONG_MAX;
	bool prefer_idle = schedtune_prefer_idle(p); // p's prefer_idle setting
	bool boosted = fbt_env->boosted;
	/* Initialise with deepest possible cstate (INT_MAX) */
	int shallowest_idle_cstate = INT_MAX;
	struct sched_domain *start_sd;
	struct sched_group *sg;
	int best_active_cpu = -1;
	int best_idle_cpu = -1;
	int target_cpu = -1;
	int backup_cpu = -1;
	int i, start_cpu;
	long spare_wake_cap, most_spare_wake_cap = 0;
	int most_spare_cap_cpu = -1;
	int prev_cpu = task_cpu(p);
	bool next_group_higher_cap = false;
	int isolated_candidate = -1;

	/*
	 * In most cases, target_capacity tracks capacity_orig of the most
	 * energy efficient CPU candidate, thus requiring to minimise
	 * target_capacity. For these cases target_capacity is already
	 * initialized to ULONG_MAX.
	 * However, for prefer_idle and boosted tasks we look for a high
	 * performance CPU, thus requiring to maximise target_capacity. In this
	 * case we initialise target_capacity to 0.
	 */
	if (prefer_idle && boosted)
		target_capacity = 0;

	if (fbt_env->strict_max)
		most_spare_wake_cap = LONG_MIN;

	/* Find start CPU based on boost value */
	start_cpu = fbt_env->start_cpu;
	/* Find SD for the start CPU */
	// sd_asym_cpucapacity is the asymmetric-capacity level, presumably DIE,
	// so start_sd spans CPUs 0-7
	start_sd = rcu_dereference(per_cpu(sd_asym_cpucapacity, start_cpu));
	if (!start_sd)
		goto out;

	/* fast path for prev_cpu */
	// prev_cpu and start_cpu have the same capacity at the current max policy
	// freq (or are asym-capacity siblings), and prev_cpu is usable and idle
	if (((capacity_orig_of(prev_cpu) == capacity_orig_of(start_cpu)) ||
	     asym_cap_siblings(prev_cpu, start_cpu)) &&
	    !cpu_isolated(prev_cpu) && cpu_online(prev_cpu) &&
	    idle_cpu(prev_cpu)) {
		if (idle_get_state_idx(cpu_rq(prev_cpu)) <= 1) { // prev_cpu is only in a shallow idle state
			target_cpu = prev_cpu;
			fbt_env->fastpath = PREV_CPU_FASTPATH;
			goto target;
		}
	}

	/* Scan CPUs in all SDs */
	// Iterate over all sched groups of start_sd; since the domain spans CPUs
	// 0-7, the groups are the two clusters: CPUs 0-3 and CPUs 4-7
	sg = start_sd->groups;
	do {
		// CPUs that are both in p's allowed cpumask and in this group
		for_each_cpu_and(i, &p->cpus_allowed, sched_group_span(sg)) {
			unsigned long capacity_curr = capacity_curr_of(i); // capacity at the current freq
			unsigned long capacity_orig = capacity_orig_of(i); // capacity at the current max policy freq, >= capacity_curr
			unsigned long wake_util, new_util, new_util_cuml;
			long spare_cap;
			int idle_idx = INT_MAX;

			trace_sched_cpu_util(i);

			if (!cpu_online(i) || cpu_isolated(i)) // skip offline or isolated CPUs
				continue;

			if (isolated_candidate == -1) // remember the first online, unisolated CPU
				isolated_candidate = i;

			/*
			 * This CPU is the target of an active migration that's
			 * yet to complete. Avoid placing another task on it.
			 * See check_for_migration()
			 */
			if (is_reserved(i))
				continue;

			// skip CPUs with a high irq load; for irq load see the WALT article:
			// https://www.cnblogs.com/lingjiajun/p/12317090.html
			if (sched_cpu_high_irqload(i))
				continue;

			// skip_cpu is the current CPU when many threads are woken by one event
			if (fbt_env->skip_cpu == i)
				continue;

			/*
			 * p's blocked utilization is still accounted for on prev_cpu
			 * so prev_cpu will receive a negative bias due to the double
			 * accounting. However, the blocked utilization may be zero.
			 */
			wake_util = cpu_util_without(i, p); // cpu_util excluding p (equals cpu_util if p is not on this rq)
			new_util = wake_util + task_util_est(p); // plus p's task_util (WALT's demand_scaled)

			spare_wake_cap = capacity_orig - wake_util; // spare capacity without p
			if (spare_wake_cap > most_spare_wake_cap) {
				// track the CPU with the most spare capacity (the least busy one)
				most_spare_wake_cap = spare_wake_cap;
				most_spare_cap_cpu = i;
			}

			// skip CPU i if its running task has TASK_BOOST_STRICT_MAX
			if (per_task_boost(cpu_rq(i)->curr) ==
			    TASK_BOOST_STRICT_MAX)
				continue;

			/*
			 * Cumulative demand may already be accounting for the
			 * task. If so, add just the boost-utilization to
			 * the cumulative demand of the cpu.
			 */
			// new_util_cuml = cpu_util_cum + p's boosted task util; if p is already
			// counted in CPU i's cumulative window demand, subtract task_util(p)
			// (demand_scaled) so that it is not counted twice
			if (task_in_cum_window_demand(cpu_rq(i), p))
				new_util_cuml = cpu_util_cum(i, 0) +
						min_util - task_util(p);
			else
				new_util_cuml = cpu_util_cum(i, 0) + min_util;

			/*
			 * Ensure minimum capacity to grant the required boost.
			 * The target CPU can be already at a capacity level higher
			 * than the one required to boost the task.
			 */
			new_util = max(min_util, new_util); // larger of p's boosted util and cpu_util with p
			if (new_util > capacity_orig) // does not fit in capacity_orig: skip
				continue;

			/*
			 * Pre-compute the maximum possible capacity we expect
			 * to have available on this CPU once the task is
			 * enqueued here.
			 */
			spare_cap = capacity_orig - new_util;

			if (idle_cpu(i)) // idle state index (idle depth) of CPU i
				idle_idx = idle_get_state_idx(cpu_rq(i));

			/*
			 * Case A) Latency sensitive tasks
			 *
			 * Unconditionally favoring tasks that prefer idle CPU to
			 * improve latency.
			 *
			 * Looking for:
			 * - an idle CPU, whatever its idle_state is, since
			 *   the first CPUs we explore are more likely to be
			 *   reserved for latency sensitive tasks.
			 * - a non idle CPU where the task fits in its current
			 *   capacity and has the maximum spare capacity.
			 * - a non idle CPU with lower contention from other
			 *   tasks and running at the lowest possible OPP.
			 *
			 * The last two goals tries to favor a non idle CPU
			 * where the task can run as if it is "almost alone".
			 * A maximum spare capacity CPU is favoured since
			 * the task already fits into that CPU's capacity
			 * without waiting for an OPP chance.
			 *
			 * The following code path is the only one in the CPUs
			 * exploration loop which is always used by
			 * prefer_idle tasks. It exits the loop with either a
			 * best_active_cpu or a target_cpu which should
			 * represent an optimal choice for latency sensitive
			 * tasks.
			 */
			if (prefer_idle) { // latency-sensitive tasks
				/*
				 * Case A.1: IDLE CPU
				 * Return the best IDLE CPU we find:
				 * - for boosted tasks: the CPU with the highest
				 * performance (i.e. biggest capacity_orig)
				 * - for !boosted tasks: the most energy
				 * efficient CPU (i.e. smallest capacity_orig)
				 */
				if (idle_cpu(i)) {
					// boosted tasks want the largest capacity_orig
					if (boosted &&
					    capacity_orig < target_capacity)
						continue;
					// non-boosted tasks want the smallest capacity_orig
					if (!boosted &&
					    capacity_orig > target_capacity)
						continue;
					/*
					 * Minimise value of idle state: skip
					 * deeper idle states and pick the
					 * shallowest.
					 */
					if (capacity_orig == target_capacity &&
					    sysctl_sched_cstate_aware &&
					    idle_idx >= shallowest_idle_cstate)
						continue;

					target_capacity = capacity_orig;
					shallowest_idle_cstate = idle_idx;
					best_idle_cpu = i; // [prefer_idle] best_idle_cpu
					continue;
				}
				if (best_idle_cpu != -1) // an idle CPU was already found: skip the active cases
					continue;

				/*
				 * Case A.2: Target ACTIVE CPU
				 * Favor CPUs with max spare capacity.
				 */
				// capacity_curr already holds new_util, and spare_cap is the largest so far
				if (capacity_curr > new_util &&
				    spare_cap > target_max_spare_cap) {
					target_max_spare_cap = spare_cap;
					target_cpu = i; // [prefer_idle] target_cpu
					continue;
				}
				if (target_cpu != -1) // a target_cpu exists: no backup active CPU needed
					continue;

				/*
				 * Case A.3: Backup ACTIVE CPU
				 * Favor CPUs with:
				 * - lower utilization due to other tasks
				 * - lower utilization with the task in
				 */
				if (wake_util > min_wake_util) // smallest util from tasks other than p
					continue;

				/*
				 * If utilization is the same between CPUs,
				 * break the ties with WALT's cumulative
				 * demand
				 */
				// on equal new_util, pick the smallest cpu_util_cum + boosted task util
				if (new_util == best_active_util &&
				    new_util_cuml > best_active_cuml_util)
					continue;
				min_wake_util = wake_util;
				best_active_util = new_util;
				best_active_cuml_util = new_util_cuml;
				best_active_cpu = i; // [prefer_idle] best_active_cpu
				continue;
			}

			/*
			 * Skip processing placement further if we are visiting
			 * cpus with lower capacity than start cpu
			 */
			if (capacity_orig < capacity_orig_of(start_cpu))
				continue;

			/*
			 * Case B) Non latency sensitive tasks on IDLE CPUs.
			 *
			 * Find an optimal backup IDLE CPU for non latency
			 * sensitive tasks.
			 *
			 * Looking for:
			 * - minimizing the capacity_orig,
			 *   i.e. preferring LITTLE CPUs
			 * - favoring shallowest idle states
			 *   i.e. avoid to wakeup deep-idle CPUs
			 *
			 * The following code path is used by non latency
			 * sensitive tasks if IDLE CPUs are available. If at
			 * least one of such CPUs are available it sets the
			 * best_idle_cpu to the most suitable idle CPU to be
			 * selected.
			 *
			 * If idle CPUs are available, favour these CPUs to
			 * improve performances by spreading tasks.
			 * Indeed, the energy_diff() computed by the caller
			 * will take care to ensure the minimization of energy
			 * consumptions without affecting performance.
			 */
			if (idle_cpu(i)) { // non latency-sensitive task, idle CPU i
				/*
				 * Prefer shallowest over deeper idle state cpu,
				 * of same capacity cpus.
				 */
				if (capacity_orig == target_capacity &&
				    sysctl_sched_cstate_aware &&
				    idle_idx > shallowest_idle_cstate)
					continue;

				// full tie (same idle state and capacity): keep prev_cpu once it is
				// best_idle_cpu, otherwise prefer the smaller cpu_util_cum + boosted util
				if (shallowest_idle_cstate == idle_idx &&
				    target_capacity == capacity_orig &&
				    (best_idle_cpu == prev_cpu ||
				     (i != prev_cpu &&
				      new_util_cuml > best_idle_cuml_util)))
					continue;

				target_capacity = capacity_orig;
				shallowest_idle_cstate = idle_idx;
				best_idle_cuml_util = new_util_cuml;
				best_idle_cpu = i; // [normal, idle] best_idle_cpu
				continue;
			}

			/*
			 * Consider only idle CPUs for active migration.
			 */
			if (p->state == TASK_RUNNING) // p is running (active migration): stop here
				continue;

			/*
			 * Case C) Non latency sensitive tasks on ACTIVE CPUs.
			 *
			 * Pack tasks in the most energy efficient capacities.
			 *
			 * This task packing strategy prefers more energy
			 * efficient CPUs (i.e. pack on smaller maximum
			 * capacity CPUs) while also trying to spread tasks to
			 * run them all at the lower OPP.
			 *
			 * This assumes for example that it's more energy
			 * efficient to run two tasks on two CPUs at a lower
			 * OPP than packing both on a single CPU but running
			 * that CPU at an higher OPP.
			 *
			 * Thus, this case keep track of the CPU with the
			 * smallest maximum capacity and highest spare maximum
			 * capacity.
			 */

			/* Favor CPUs with maximum spare capacity */
			if (spare_cap < target_max_spare_cap) // most spare capacity after adding p
				continue;

			target_max_spare_cap = spare_cap;
			target_capacity = capacity_orig;
			target_cpu = i; // [normal, active] target_cpu
		} // end of the per-CPU loop inside one sched group (cluster)

		// does the next group (cluster) have a higher capacity?
		next_group_higher_cap = (capacity_orig_of(group_first_cpu(sg)) <
			capacity_orig_of(group_first_cpu(sg->next)));

		/*
		 * If we've found a cpu, but the boost is ON_ALL we continue
		 * visiting other clusters. If the boost is ON_BIG we visit
		 * next cluster if they are higher in capacity. If we are
		 * not in any kind of boost, we break.
		 *
		 * And always visit higher capacity group, if solo cpu group
		 * is not in idle.
		 */
		// non-prefer_idle, non-boosted task: stop once a CPU is found, unless
		// the sched_boost policy requires visiting other clusters
		if (!prefer_idle && !boosted &&
		    ((target_cpu != -1 && (sg->group_weight > 1 ||
					   !next_group_higher_cap)) ||
		     best_idle_cpu != -1) &&
		    (fbt_env->placement_boost == SCHED_BOOST_NONE ||
		     !is_full_throttle_boost() ||
		     (fbt_env->placement_boost == SCHED_BOOST_ON_BIG &&
		      !next_group_higher_cap)))
			break;

		/*
		 * if we are in prefer_idle and have found an idle cpu,
		 * break from searching more groups based on the stune.boost and
		 * group cpu capacity. For !prefer_idle && boosted case, don't
		 * iterate lower capacity CPUs unless the task can't be
		 * accommodated in the higher capacity CPUs.
		 */
		// prefer_idle found an idle CPU, or a boosted task found a CPU: stop or
		// continue depending on whether the next group has a higher capacity
		if ((prefer_idle && best_idle_cpu != -1) ||
		    (boosted && (best_idle_cpu != -1 || target_cpu != -1 ||
		     (fbt_env->strict_max && most_spare_cap_cpu != -1)))) {
			if (boosted) {
				if (!next_group_higher_cap)
					break;
			} else {
				if (next_group_higher_cap)
					break;
			}
		}
	} while (sg = sg->next, sg != start_sd->groups);

	// With a 20% margin and sched_load_boost applied, check whether the
	// current capacity of target_cpu can still hold p; also check rtg to
	// decide whether idle CPUs should be ignored
	adjust_cpus_for_packing(p, &target_cpu, &best_idle_cpu,
				shallowest_idle_cstate,
				fbt_env, boosted);

	/*
	 * For non latency sensitive tasks, cases B and C in the previous loop,
	 * we pick the best IDLE CPU only if we was not able to find a target
	 * ACTIVE CPU.
	 *
	 * Policies priorities:
	 *
	 * - prefer_idle tasks:
	 *
	 *   a) IDLE CPU available: best_idle_cpu
	 *   b) ACTIVE CPU where task fits and has the bigger maximum spare
	 *      capacity (i.e. target_cpu)
	 *   c) ACTIVE CPU with less contention due to other tasks
	 *      (i.e. best_active_cpu)
	 *
	 * - NON prefer_idle tasks:
	 *
	 *   a) ACTIVE CPU: target_cpu
	 *   b) IDLE CPU: best_idle_cpu
	 */
	if (prefer_idle && (best_idle_cpu != -1)) { // prefer_idle: take best_idle_cpu directly
		target_cpu = best_idle_cpu;
		goto target;
	}

	if (target_cpu == -1) // no target found: fall back to
		target_cpu = prefer_idle
			? best_active_cpu // best_active_cpu for prefer_idle tasks
			: best_idle_cpu;  // best_idle_cpu for the others
	else
		backup_cpu = prefer_idle // target found: choose a backup_cpu
			? best_active_cpu // best_active_cpu for prefer_idle tasks
			: best_idle_cpu;  // best_idle_cpu for the others

	// for an active migration (p is running, e.g. a misfit task), use
	// most_spare_cap_cpu only if it is idle
	if (target_cpu == -1 && most_spare_cap_cpu != -1 &&
	    /* ensure we use active cpu for active migration */
	    !(p->state == TASK_RUNNING && !idle_cpu(most_spare_cap_cpu)))
		target_cpu = most_spare_cap_cpu;

	// still no target and prev_cpu is isolated: fall back to the first
	// online, unisolated CPU seen during the scan
	if (target_cpu == -1 && isolated_candidate != -1 &&
	    cpu_isolated(prev_cpu))
		target_cpu = isolated_candidate;

	if (backup_cpu >= 0)
		cpumask_set_cpu(backup_cpu, cpus); // add backup_cpu to cpus

	if (target_cpu >= 0) {
target:
		cpumask_set_cpu(target_cpu, cpus); // add target_cpu to cpus
	}

out:
	trace_sched_find_best_target(p, prefer_idle, min_util, start_cpu,
				     best_idle_cpu, best_active_cpu,
				     most_spare_cap_cpu,
				     target_cpu, backup_cpu);
}
```
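The priority rules at the end of find_best_target() can be modeled as a small pure function. This sketch uses a hypothetical `fbt_pick_sketch()` that only combines the three loop results (-1 meaning "not found"); the `most_spare_cap_cpu` and `isolated_candidate` fallbacks and adjust_cpus_for_packing() are deliberately left out.

```c
#include <assert.h>
#include <stdbool.h>

/* Result of the hypothetical selection helper below. */
struct fbt_pick {
	int target_cpu;
	int backup_cpu;
};

/*
 * prefer_idle tasks: idle CPU > active target_cpu > best_active_cpu.
 * Other tasks:       active target_cpu > best_idle_cpu.
 */
static struct fbt_pick fbt_pick_sketch(bool prefer_idle, int target_cpu,
				       int best_idle_cpu, int best_active_cpu)
{
	struct fbt_pick r = { .target_cpu = target_cpu, .backup_cpu = -1 };

	assert(target_cpu >= -1 && best_idle_cpu >= -1 && best_active_cpu >= -1);

	if (prefer_idle && best_idle_cpu != -1) {
		/* an idle CPU wins outright; no backup is recorded */
		r.target_cpu = best_idle_cpu;
		return r;
	}

	if (target_cpu == -1)
		r.target_cpu = prefer_idle ? best_active_cpu : best_idle_cpu;
	else
		r.backup_cpu = prefer_idle ? best_active_cpu : best_idle_cpu;

	return r;
}
```

Note that a non-prefer_idle task with both an active target and an idle CPU gets the active CPU as target and the idle CPU as backup, so both end up in `candidates` for the energy comparison.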

(2) Computing energy

```c
/*
 * compute_energy(): Estimates the energy that would be consumed if @p was
 * migrated to @dst_cpu. compute_energy() predicts what will be the utilization
 * landscape of the * CPUs after the task migration, and uses the Energy Model
 * to compute what would be the energy if we decided to actually migrate that
 * task.
 */
static long
compute_energy(struct task_struct *p, int dst_cpu, struct perf_domain *pd)
{
	long util, max_util, sum_util, energy = 0;
	int cpu;

	for (; pd; pd = pd->next) {
		max_util = sum_util = 0;
		/*
		 * The capacity state of CPUs of the current rd can be driven by
		 * CPUs of another rd if they belong to the same performance
		 * domain. So, account for the utilization of these CPUs too
		 * by masking pd with cpu_online_mask instead of the rd span.
		 *
		 * If an entire performance domain is outside of the current rd,
		 * it will not appear in its pd list and will not be accounted
		 * by compute_energy().
		 */
		for_each_cpu_and(cpu, perf_domain_span(pd), cpu_online_mask) { // online CPUs of this perf domain
#ifdef CONFIG_SCHED_WALT
			util = cpu_util_next_walt(cpu, p, dst_cpu); // predicted util of each CPU after moving p to dst_cpu
#else
			util = cpu_util_next(cpu, p, dst_cpu);
			util += cpu_util_rt(cpu_rq(cpu));
			util = schedutil_energy_util(cpu, util);
#endif
			// highest util in the perf domain: within a perf domain (a cluster),
			// the busiest CPU determines the frequency
			max_util = max(util, max_util);
			sum_util += util; // total util of the perf domain after the move
		}

		// energy of this perf domain; summing over the little and big
		// clusters gives the energy of the whole system
		energy += em_pd_energy(pd->em_pd, max_util, sum_util);
	}

	return energy;
}
```
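To see what compute_energy() feeds into em_pd_energy(), here is a simplified model of the per-CPU util prediction plus the max/sum aggregation over one perf domain. It follows the shape of upstream's non-WALT cpu_util_next() (subtract the task's util from its current CPU, add it to the destination, clamp to capacity) but ignores util_est and the WALT variant cpu_util_next_walt(); `util_next()` and `pd_max_sum()` are hypothetical names.

```c
#include <assert.h>

/*
 * Predicted util of `cpu` if a task with util task_util, currently on
 * src_cpu, is placed on dst_cpu. Clamped to the CPU's capacity.
 */
static unsigned long util_next(int cpu, unsigned long cur_util,
			       unsigned long capacity, int src_cpu,
			       int dst_cpu, unsigned long task_util)
{
	unsigned long util = cur_util;

	if (cpu == src_cpu && cpu != dst_cpu)
		util = util > task_util ? util - task_util : 0; /* task leaves */
	else if (cpu != src_cpu && cpu == dst_cpu)
		util += task_util; /* task arrives */

	return util < capacity ? util : capacity;
}

/* Aggregate one perf domain the way compute_energy() does: max and sum. */
static void pd_max_sum(const int *cpus, const unsigned long *cur_util,
		       const unsigned long *capacity, int n, int src_cpu,
		       int dst_cpu, unsigned long task_util,
		       unsigned long *max_util, unsigned long *sum_util)
{
	assert(n > 0);
	*max_util = 0;
	*sum_util = 0;
	for (int i = 0; i < n; i++) {
		unsigned long u = util_next(cpus[i], cur_util[i], capacity[i],
					    src_cpu, dst_cpu, task_util);
		if (u > *max_util)
			*max_util = u;
		*sum_util += u;
	}
}
```

Moving a task inside one cluster leaves sum_util unchanged but can change max_util, which is what drives the frequency (and thus the cost) chosen in em_pd_energy().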

Computing the energy of a perf domain relies on two important structs:

```c
/**
 * em_cap_state - Capacity state of a performance domain
 * @frequency:	The CPU frequency in KHz, for consistency with CPUFreq
 * @power:	The power consumed by 1 CPU at this level, in milli-watts
 * @cost:	The cost coefficient associated with this level, used during
 *		energy calculation. Equal to: power * max_frequency / frequency
 */
struct em_cap_state {
	unsigned long frequency;
	unsigned long power;
	unsigned long cost;
};

/**
 * em_perf_domain - Performance domain
 * @table:		List of capacity states, in ascending order
 * @nr_cap_states:	Number of capacity states
 * @cpus:		Cpumask covering the CPUs of the domain
 *
 * A "performance domain" represents a group of CPUs whose performance is
 * scaled together. All CPUs of a performance domain must have the same
 * micro-architecture. Performance domains often have a 1-to-1 mapping with
 * CPUFreq policies.
 */
struct em_perf_domain {
	struct em_cap_state *table;
	int nr_cap_states;
	unsigned long cpus[];
};
```
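The `cost` field can be precomputed from the table exactly as its kernel-doc says, cost = power × max_frequency / frequency. A user-space sketch with a hypothetical `em_fill_costs()` helper; the struct is copied from above, and the frequencies and powers used with it are made up.

```c
#include <assert.h>

/* User-space copy of the struct shown above. */
struct em_cap_state {
	unsigned long frequency; /* kHz */
	unsigned long power;     /* mW */
	unsigned long cost;
};

/*
 * Fill in cost = power * max_frequency / frequency for a table sorted in
 * ascending frequency order, so the last entry holds the max frequency.
 */
static void em_fill_costs(struct em_cap_state *table, int nr)
{
	unsigned long fmax;

	assert(nr > 0);
	fmax = table[nr - 1].frequency;
	for (int i = 0; i < nr; i++) {
		assert(table[i].frequency > 0);
		table[i].cost = table[i].power * fmax / table[i].frequency;
	}
}
```

For a table {500000 kHz, 50 mW} and {1000000 kHz, 150 mW}, the costs come out as 100 and 150: the lower OPP's power is scaled up because the same amount of work keeps the CPU busy twice as long there.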

em_pd_energy() computes the energy of a perf domain:

/**
 * em_pd_energy() - Estimates the energy consumed by the CPUs of a perf. domain
 * @pd		: performance domain for which energy has to be estimated
 * @max_util	: highest utilization among CPUs of the domain
 * @sum_util	: sum of the utilization of all CPUs in the domain
 *
 * Return: the sum of the energy consumed by the CPUs of the domain assuming
 * a capacity state satisfying the max utilization of the domain.
 */
static inline unsigned long em_pd_energy(struct em_perf_domain *pd,
				unsigned long max_util, unsigned long sum_util)
{
	unsigned long freq, scale_cpu;
	struct em_cap_state *cs;
	int i, cpu;

	if (!sum_util)
		return 0;

	/*
	 * In order to predict the capacity state, map the utilization of the
	 * most utilized CPU of the performance domain to a requested frequency,
	 * like schedutil.
	 */
	cpu = cpumask_first(to_cpumask(pd->cpus));
	scale_cpu = arch_scale_cpu_capacity(NULL, cpu); // max capacity of the CPU
	// Take the last capacity state to get the highest frequency: the table
	// is sorted in ascending order, so the last entry is the top OPP.
	cs = &pd->table[pd->nr_cap_states - 1];
	// Using the highest frequency and the max capacity obtained above,
	// map the current CPU utilization to a requested frequency.
	freq = map_util_freq(max_util, cs->frequency, scale_cpu);

	/*
	 * Find the lowest capacity state of the Energy Model above the
	 * requested frequency.
	 */
	for (i = 0; i < pd->nr_cap_states; i++) {
		// The table is ascending, so the first state whose frequency
		// satisfies the request is the lowest such OPP; use its
		// capacity state.
		cs = &pd->table[i];
		if (cs->frequency >= freq)
			break;
	}

	/*

	 * The capacity of a CPU in the domain at that capacity state (cs)
	 * can be computed as:
	 *
	 *             cs->freq * scale_cpu
	 *   cs->cap = --------------------                          (1)
	 *                 cpu_max_freq
	 *
	 * So, ignoring the costs of idle states (which are not available in
	 * the EM), the energy consumed by this CPU at that capacity state is
	 * estimated as:
	 *
	 *             cs->power * cpu_util
	 *   cpu_nrg = --------------------                          (2)
	 *                   cs->cap
	 *
	 * since 'cpu_util / cs->cap' represents its percentage of busy time.
	 *
	 *   NOTE: Although the result of this computation actually is in
	 *         units of power, it can be manipulated as an energy value
	 *         over a scheduling period, since it is assumed to be
	 *         constant during that interval.
	 *
	 * By injecting (1) in (2), 'cpu_nrg' can be re-expressed as a product
	 * of two terms:
	 *
	 *             cs->power * cpu_max_freq   cpu_util
	 *   cpu_nrg = ------------------------ * ---------          (3)
	 *                    cs->freq            scale_cpu
	 *
	 * The first term is static, and is stored in the em_cap_state struct
	 * as 'cs->cost'.
	 *
	 * Since all CPUs of the domain have the same micro-architecture, they
	 * share the same 'cs->cost', and the same CPU capacity. Hence, the
	 * total energy of the domain (which is the simple sum of the energy of
	 * all of its CPUs) can be factorized as:
	 *
	 *            cs->cost * \Sum cpu_util
	 *   pd_nrg = ------------------------                       (4)
	 *                  scale_cpu
	 */

	// Apply formula (4) derived above to get the total energy of the perf domain
	return cs->cost * sum_util / scale_cpu;
}
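The sketch below re-implements em_pd_energy() in user space so the steps above can be checked with concrete numbers. It is an illustration under stated assumptions, not the kernel source:

- `pd_energy()` and `cap_state` are hypothetical names.
- The perf domain is reduced to a bare table plus `scale_cpu`.
- `map_util_freq()` reproduces the 1.25x-headroom formula of the schedutil helper of that name in mainline kernels of this era. Check your own kernel version.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical user-space stand-in for struct em_cap_state. */
struct cap_state {
	unsigned long frequency;
	unsigned long power;
	unsigned long cost; /* power * max_frequency / frequency */
};

/* 1.25 * freq * util / cap, i.e. requested frequency with 25% headroom. */
static unsigned long map_util_freq(unsigned long util, unsigned long freq,
				   unsigned long cap)
{
	return (freq + (freq >> 2)) * util / cap;
}

/*
 * Mirror of em_pd_energy(): find the lowest state whose frequency covers the
 * most utilized CPU, then apply formula (4): cost * sum_util / scale_cpu.
 */
static unsigned long pd_energy(const struct cap_state *table, size_t nr_states,
			       unsigned long scale_cpu,
			       unsigned long max_util, unsigned long sum_util)
{
	const struct cap_state *cs;
	unsigned long freq;
	size_t i;

	assert(table != NULL && nr_states > 0 && scale_cpu > 0);
	if (!sum_util)
		return 0;

	cs = &table[nr_states - 1]; /* ascending table: last entry = top OPP */
	freq = map_util_freq(max_util, cs->frequency, scale_cpu);

	for (i = 0; i < nr_states; i++) {
		cs = &table[i];
		if (cs->frequency >= freq)
			break;
	}
	/* If freq exceeds every OPP, the loop leaves cs at the last entry. */
	return cs->cost * sum_util / scale_cpu;
}
```

The following worked check compares formula (4) against formula (2). Use the sample table {500, 50, 200}, {1000, 150, 300}, {2000, 500, 500} (frequency in MHz for readability, power, cost) with two CPUs at utilization 400 and 200, so max_util = 400, sum_util = 600 and scale_cpu = 1024.

1. The requested frequency is 2500 * 400 / 1024 ≈ 976, so the 1000 state is chosen.
2. For that state, cs->cap = 1000 * 1024 / 2000 = 512.
3. Formula (2) per CPU gives 150 * 400 / 512 + 150 * 200 / 512 ≈ 175.8.
4. Formula (4) gives 300 * 600 / 1024 ≈ 175.8, which the integer code truncates to 175.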

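Summary

1. find_best_target() uses the current system state to find candidate CPUs for the task's migration (target_cpu, backup cpu, prev_cpu).

2. The second half of find_energy_efficient_cpu() computes the total system energy for migrating the task to each candidate CPU. It then compares these totals to select best_energy_cpu, the CPU that saves power without hurting performance.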
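The comparison in step 2 can be sketched as follows. This is a simplified model in the spirit of upstream compute_energy(), not QTI's find_energy_efficient_cpu(). Its assumptions are:

- All names (`perf_domain`, `total_energy()`, `pick_best_energy_cpu()`), the sample tables and the utilization values are hypothetical.
- `cpu_util[]` excludes the task being placed.
- Idle costs, capacity checks and any energy-saving margin the real scheduler may apply are omitted.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>

#define MAX_PD_CPUS 8

struct cap_state {
	unsigned long frequency, power, cost; /* cost = power * fmax / freq */
};

/* Hypothetical perf domain: an ascending OPP table and the CPUs sharing it. */
struct perf_domain {
	const struct cap_state *table;
	size_t nr_states;
	unsigned long scale_cpu; /* max capacity of each CPU in the domain */
	int cpus[MAX_PD_CPUS];
	size_t nr_cpus;
};

/* Same steps as em_pd_energy(): pick the OPP, then cost * sum / scale. */
static unsigned long pd_energy(const struct perf_domain *pd,
			       unsigned long max_util, unsigned long sum_util)
{
	const struct cap_state *cs = &pd->table[pd->nr_states - 1];
	unsigned long freq;
	size_t i;

	if (!sum_util)
		return 0;
	/* map_util_freq(): (fmax + fmax / 4) * util / capacity */
	freq = (cs->frequency + (cs->frequency >> 2)) * max_util / pd->scale_cpu;
	for (i = 0; i < pd->nr_states; i++) {
		cs = &pd->table[i];
		if (cs->frequency >= freq)
			break;
	}
	return cs->cost * sum_util / pd->scale_cpu;
}

/* Energy of all perf domains if a task of task_util is placed on dst_cpu. */
static unsigned long total_energy(const struct perf_domain *pds, size_t nr_pds,
				  const unsigned long *cpu_util,
				  unsigned long task_util, int dst_cpu)
{
	unsigned long energy = 0;
	size_t p, c;

	for (p = 0; p < nr_pds; p++) {
		unsigned long max_util = 0, sum_util = 0;

		for (c = 0; c < pds[p].nr_cpus; c++) {
			int cpu = pds[p].cpus[c];
			unsigned long util = cpu_util[cpu];

			if (cpu == dst_cpu)
				util += task_util; /* task lands here */
			if (util > max_util)
				max_util = util;
			sum_util += util;
		}
		energy += pd_energy(&pds[p], max_util, sum_util);
	}
	return energy;
}

/* Return the candidate whose placement yields the lowest total energy. */
static int pick_best_energy_cpu(const struct perf_domain *pds, size_t nr_pds,
				const unsigned long *cpu_util,
				unsigned long task_util,
				const int *candidates, size_t nr_candidates)
{
	unsigned long best_energy = ULONG_MAX;
	int best_cpu = -1;
	size_t i;

	assert(nr_candidates > 0);
	for (i = 0; i < nr_candidates; i++) {
		unsigned long e = total_energy(pds, nr_pds, cpu_util,
					       task_util, candidates[i]);

		if (e < best_energy) { /* ties keep the earlier candidate */
			best_energy = e;
			best_cpu = candidates[i];
		}
	}
	return best_cpu;
}
```

The sample data has two perf domains:

- CPUs 0-1 use the table {300, 10, 40}, {600, 30, 60}, {1200, 90, 90} with scale_cpu 400.
- CPUs 2-3 use the table {800, 100, 300}, {1600, 300, 450}, {2400, 900, 900} with scale_cpu 1024.

CPU utilizations are {100, 50, 200, 0} and the task's utilization is 80. Placing the task on CPU 1 gives a total energy of 92, versus 145 on CPU 2, so CPU 1 is returned. Moving the task to CPU 2 pushes the big domain's requested frequency up to the 1600 OPP, which costs far more than the small increase on the little domain.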

Addendum:

It is still unclear how the energy model relates to the energy calculation; this connection needs to be worked out later.
