Kubernetes Study Notes, Part 12: The Resource Metrics API and the Custom Metrics API

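Chapter 1: Introduction

Resource metrics used to be collected through Heapster, which was the only way to view them. Heapster is now being deprecated.

Since Kubernetes 1.8, resource metrics are exposed for monitoring through a dedicated resource metrics API.

Resource metrics: metrics-server (core metrics)

Custom metrics: Prometheus plus k8s-prometheus-adapter (which converts the data Prometheus collects into the metrics API format)

Kubernetes cannot consume Prometheus data directly. The data has to be converted by k8s-prometheus-adapter first.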

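The new-generation architecture:

Core metrics pipeline:

This pipeline is made up of the kubelet, metrics-server, and the API they expose through the API server. It provides cumulative CPU usage, real-time memory usage, pod resource usage, and container disk usage.

Monitoring pipeline:

This pipeline collects all kinds of metrics from the system and serves them to end users, storage systems, and the HPA. It covers the core metrics plus many non-core metrics. Kubernetes itself cannot interpret the non-core metrics.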

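Chapter 2: Installing and Deploying metrics-server

1. Download the YAML files and install

Project location: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/metrics-server. Pick the branch that matches your cluster version. My cluster runs v1.10.0, so I used the v1.10.0 branch.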

[root@k8s-master_01 manifests]# mkdir metrics-server

[root@k8s-master_01 manifests]# cd metrics-server

[root@k8s-master_01 metrics-server]# for file in auth-delegator.yaml auth-reader.yaml metrics-apiservice.yaml metrics-server-deployment.yaml metrics-server-service.yaml resource-reader.yaml;do wget https://raw.githubusercontent.com/kubernetes/kubernetes/v1.10.0/cluster/addons/metrics-server/$file;done # be sure to download the raw files

[root@k8s-master_01 metrics-server]# grep image: ./* # list the images in use. If you can reach k8s.gcr.io, skip this. Otherwise pull the images in advance and load them by editing the manifest or retagging the images. Mirrors can be found by searching on Alibaba Cloud.

./metrics-server-deployment.yaml: image: k8s.gcr.io/metrics-server-amd64:v0.2.1

./metrics-server-deployment.yaml: image: k8s.gcr.io/addon-resizer:1.8.1

[root@k8s-node_01 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/criss/addon-resizer:1.8.1 # pull the image manually on every node; note that this tag has no leading "v"

[root@k8s-node_01 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/k8s-kernelsky/metrics-server-amd64:v0.2.1

[root@k8s-master_01 metrics-server]# grep image: metrics-server-deployment.yaml

image: registry.cn-hangzhou.aliyuncs.com/k8s-kernelsky/metrics-server-amd64:v0.2.1

image: registry.cn-hangzhou.aliyuncs.com/criss/addon-resizer:1.8.1
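If you would rather not edit the manifest by hand, the image swap can be scripted. The sketch below uses hypothetical helpers (`rewrite_images` and `rewrite_file` are names made up for illustration). It assumes that each image reference sits alone on an `image:` line, as in metrics-server-deployment.yaml, and that the Aliyun mirror repositories above still carry the same tags. Only images listed in the mapping are touched.

```python
import re

# Upstream image -> mirror, taken from the images used in this walkthrough.
# Assumption: the mirror repositories still host these exact tags.
MIRRORS = {
    "k8s.gcr.io/metrics-server-amd64:v0.2.1":
        "registry.cn-hangzhou.aliyuncs.com/k8s-kernelsky/metrics-server-amd64:v0.2.1",
    "k8s.gcr.io/addon-resizer:1.8.1":
        "registry.cn-hangzhou.aliyuncs.com/criss/addon-resizer:1.8.1",
}

# Matches "image: foo", "- image: foo" and quoted values, with nothing after the value.
IMAGE_LINE = re.compile(r'^(\s*(?:-\s+)?image:\s*)"?([^"\s]+)"?\s*$')


def rewrite_images(manifest: str, mirrors: dict) -> str:
    """Return the manifest text with every image found in `mirrors` replaced."""
    out = []
    for line in manifest.splitlines():
        m = IMAGE_LINE.match(line)
        if m and m.group(2) in mirrors:
            line = m.group(1) + mirrors[m.group(2)]
        out.append(line)
    # Keep a trailing newline if the input had one.
    return "\n".join(out) + ("\n" if manifest.endswith("\n") else "")


def rewrite_file(path: str, mirrors: dict = MIRRORS) -> None:
    """Rewrite a manifest file in place (hypothetical helper)."""
    with open(path, encoding="utf-8") as f:
        text = f.read()
    with open(path, "w", encoding="utf-8") as f:
        f.write(rewrite_images(text, mirrors))
```

Usage: `rewrite_file("metrics-server-deployment.yaml")`, then check the result with the same `grep image:` as above. The nodes still need to be able to pull from the mirror registry.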

[root@k8s-master_01 metrics-server]# kubectl apply -f .

[root@k8s-master_01 metrics-server]# kubectl get pod -n kube-system

2. Verify

[root@k8s-master01 ~]# kubectl api-versions |grep metrics

metrics.k8s.io/v1beta1

[root@k8s-node01 ~]# kubectl proxy --port=8080 # open a second terminal and start the API proxy

[root@k8s-master_01 metrics-server]# curl http://localhost:8080/apis/metrics.k8s.io/v1beta1 # list the resources this API group provides

[root@k8s-master_01 metrics-server]# curl http://localhost:8080/apis/metrics.k8s.io/v1beta1/pods # data may take a little while to appear

[root@k8s-master_01 metrics-server]# curl http://localhost:8080/apis/metrics.k8s.io/v1beta1/nodes
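The same endpoint can be read from a script. The following is a minimal sketch that makes two assumptions:

- `kubectl proxy --port=8080` is running on the machine where the script runs.
- The response has the `NodeMetricsList` shape of `metrics.k8s.io/v1beta1`, that is, `items[].metadata.name`, `items[].usage.cpu` and `items[].usage.memory`.

Only the standard library is used, and the helper names are illustrative.

```python
import json
import urllib.request

# Assumption: `kubectl proxy --port=8080` is running locally, as in the step above.
PROXY = "http://localhost:8080"
NODE_METRICS_PATH = "/apis/metrics.k8s.io/v1beta1/nodes"


def fetch_json(path: str, base: str = PROXY) -> dict:
    """GET a path through the kubectl proxy and decode the JSON body."""
    with urllib.request.urlopen(base + path, timeout=10) as resp:
        return json.load(resp)


def summarize_node_metrics(node_metrics_list: dict) -> list:
    """Return (node name, cpu, memory) tuples from a NodeMetricsList object.

    The usage values stay raw Kubernetes quantity strings such as "176m".
    """
    rows = []
    for item in node_metrics_list.get("items", []):
        usage = item.get("usage", {})
        rows.append((item["metadata"]["name"], usage.get("cpu"), usage.get("memory")))
    return rows


# Usage against a live cluster:
# for name, cpu, mem in summarize_node_metrics(fetch_json(NODE_METRICS_PATH)):
#     print(f"{name:<15} {cpu:>10} {mem:>12}")
```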

```
[root@k8s-node01 ~]# kubectl top node
NAME           CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master01   176m         %      3064Mi          %
k8s-node01     62m          %      4178Mi          %
k8s-node02     65m          %      2141Mi          %
```
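`kubectl top node` reports CPU% and MEMORY% relative to what the node can allocate. The sketch below reproduces that arithmetic under the following assumptions:

- The denominator is the node's allocatable value, as shown by `kubectl describe node`.
- Only the quantity suffixes that appear in this article are handled.
- The percentage is rounded down, so it may differ by one from kubectl's own output.

```python
from decimal import Decimal

# Multipliers for the quantity suffixes seen in this article (decimal and binary).
_SUFFIXES = {
    "n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6, "G": Decimal(10) ** 9,
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30,
}


def parse_quantity(q: str) -> Decimal:
    """Convert a quantity such as "176m" (cores) or "3064Mi" (bytes) to a number."""
    # Check two-letter suffixes first so "Mi" is not mistaken for "M".
    for suffix in sorted(_SUFFIXES, key=len, reverse=True):
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * _SUFFIXES[suffix]
    return Decimal(q)


def usage_percent(usage: str, allocatable: str) -> int:
    """Share of the allocatable amount in use, rounded down to a whole percent."""
    return int(parse_quantity(usage) / parse_quantity(allocatable) * 100)
```

For example, `usage_percent("500m", "2")` gives 25, meaning half a core used on a node with two allocatable cores.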

```
[root@k8s-node01 ~]# kubectl top pods
NAME                CPU(cores)   MEMORY(bytes)
node-affinity-pod   0m           1Mi
```

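3. Caveats

1. Newer versions, such as v1.11 and later, run into a problem here. By default metrics-server reads data from the kubelet's summary API over port 10255, which is a plain-HTTP port. Presumably because HTTP is considered insecure, upstream disabled port 10255 in favor of 10250, which is an HTTPS port. The connection settings therefore have to be changed:

Change - --source=kubernetes.summary_api:''

to - --source=kubernetes.summary_api:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250&insecure=true # connect to the kubelet over HTTPS on port 10250, and skip verification of the kubelet's certificate so the connection still works when the certificate cannot be validated (the traffic is still TLS-encrypted)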

[root@k8s-node01 deploy]# grep source=kubernetes metrics-server-deployment.yaml
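The value of `--source` is a URL whose query string carries the kubelet connection options, so the `=` separator matters: the option is `insecure=true`, not `insecure-true`. The sketch below uses a hypothetical helper, `summary_api_source`, to build the flag with the standard library's `urlencode`, so the key/value pairs cannot be mistyped. It assumes the heapster-style source syntax used by the metrics-server v0.2.x manifests in this chapter.

```python
from urllib.parse import urlencode


def summary_api_source(kubelet_port: int = 10250, insecure: bool = True) -> str:
    """Build the --source flag for metrics-server v0.2.x (hypothetical helper).

    kubeletHttps=true makes the connection to the kubelet use HTTPS; insecure=true
    skips verification of the kubelet's serving certificate.
    """
    query = urlencode({
        "kubeletHttps": "true",
        "kubeletPort": kubelet_port,
        "insecure": "true" if insecure else "false",
    })
    return f"--source=kubernetes.summary_api:https://kubernetes.default?{query}"
```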

2. In newer versions the RBAC file does not grant the nodes/stats permission, so you have to add it manually:

[root@k8s-node01 deploy]# grep nodes/stats resource-reader.yaml

[root@k8s-node01 deploy]# cat resource-reader.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats   # add this line
  - namespaces
```

3. When testing on v1.12.3, I found that the following changes were needed for a successful deployment. The RBAC change above is still required, and other versions are untested.

[root@k8s-master-01 metrics-server]# vim metrics-server-deployment.yaml

```yaml
command:   # change the metrics-server arguments to the following
- /metrics-server
- --metric-resolution=30s
- --kubelet-port=10250
- --kubelet-insecure-tls
- --kubelet-preferred-address-types=InternalIP
```

```yaml
command:   # change the metrics-server-nanny arguments to the following
- /pod_nanny
- --config-dir=/etc/config
- --cpu=40m
- --extra-cpu=0.5m
- --memory=40Mi
- --extra-memory=4Mi
- --threshold=5
- --deployment=metrics-server-v0.3.1
- --container=metrics-server
- --poll-period=300000
- --estimator=exponential
```

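Chapter 3: Installing and Deploying Prometheus

Project location: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/prometheus. The prometheus addon only exists in v1.11.0 and later, so I deployed from v1.11.0.

1. Download the YAML files and prepare for deployment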

[root@k8s-node01 ~]# cd /mnt/

[root@k8s-node01 mnt]# git clone https://github.com/kubernetes/kubernetes.git # to keep things simple, I cloned the whole kubernetes repository

[root@k8s-node01 mnt]# cd kubernetes/cluster/addons/prometheus/

[root@k8s-node01 prometheus]# git checkout v1.11.0

[root@k8s-node01 prometheus]# cd ..

[root@k8s-node01 addons]# cp -r prometheus /root/manifests/

[root@k8s-node01 manifests]# cd prometheus/

[root@k8s-node01 prometheus]# grep -w "namespace: kube-system" ./* # by default prometheus uses the kube-system namespace; we deploy it into its own namespace instead, which makes it easier to manage later

./alertmanager-configmap.yaml: namespace: kube-system

......

[root@k8s-node01 prometheus]# sed -i 's/namespace: kube-system/namespace\: k8s-monitor/g' ./*

[root@k8s-node01 prometheus]# grep storage: ./* # the installation needs two PVs, which we create next

./alertmanager-pvc.yaml: storage: "2Gi"

./prometheus-statefulset.yaml: storage: "16Gi"

[root@k8s-node01 prometheus]# cat pv.yaml # note the storageClassName of the second PV

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: alertmanager
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    path: /data/volumes/v1
    server: 172.16.150.158
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: standard
spec:
  capacity:
    storage: 25Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: standard   # must match the storageClassName under volumeClaimTemplates in prometheus-statefulset.yaml
  nfs:
    path: /data/volumes/v2
    server: 172.16.150.158
```
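The second PV needs `storageClassName: standard` because a claim only binds to a PV that has the same storage class, offers the requested access modes, and has enough capacity. The sketch below is a deliberately simplified model of that static binding; the real controller also checks selectors, volume mode and more. The Prometheus claim's values come from this walkthrough: a 16Gi request that ends up bound to the `standard` PV. The class names and the "smallest fit wins" rule are my reading of the binder, not a quote of its code.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class PV:
    name: str
    capacity_gi: int
    access_modes: Set[str]
    storage_class: str = ""  # "" means no storageClassName is set


@dataclass
class PVC:
    name: str
    request_gi: int
    access_modes: Set[str]
    storage_class: str = ""


def find_match(claim: PVC, volumes: List[PV]) -> Optional[PV]:
    """Simplified static binding: same class, all requested modes offered, enough capacity.

    Among the candidates the smallest volume is chosen, so large PVs are not wasted.
    """
    candidates = [
        pv for pv in volumes
        if pv.storage_class == claim.storage_class
        and claim.access_modes <= pv.access_modes
        and pv.capacity_gi >= claim.request_gi
    ]
    return min(candidates, key=lambda pv: pv.capacity_gi, default=None)
```

With the two PVs from pv.yaml, a 16Gi `ReadWriteOnce` claim in class `standard` can only land on the 25Gi `standard` PV. The same claim without a class would match nothing, because the 5Gi `alertmanager` PV is too small.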

[root@k8s-node01 prometheus]# kubectl create namespace k8s-monitor

[root@k8s-node01 prometheus]# mkdir node-exporter kube-state-metrics alertmanager prometheus # put each component in its own directory for easier deployment and management

[root@k8s-node01 prometheus]# mv node-exporter-* node-exporter

[root@k8s-node01 prometheus]# mv alertmanager-* alertmanager

[root@k8s-node01 prometheus]# mv kube-state-metrics-* kube-state-metrics

[root@k8s-node01 prometheus]# mv prometheus-* prometheus

2. Install node-exporter (collects node-level metrics)

[root@k8s-node01 prometheus]# grep -r image: node-exporter/*

node-exporter/node-exporter-ds.yml: image: "prom/node-exporter:v0.15.2" # not a k8s.gcr.io image, so it can be pulled without a proxy; no need to pull it in advance

```
[root@k8s-node01 prometheus]# kubectl apply -f node-exporter/
daemonset.extensions "node-exporter" created
service "node-exporter" created
[root@k8s-node01 prometheus]# kubectl get pod -n k8s-monitor
NAME                  READY   STATUS    RESTARTS   AGE
node-exporter-l5zdw   1/1     Running   0          1m
node-exporter-vwknx   1/1     Running   0          1m
```

3. Install Prometheus

```
[root@k8s-master_01 prometheus]# kubectl apply -f pv.yaml
persistentvolume "alertmanager" configured
persistentvolume "standard" created
[root@k8s-master_01 prometheus]# kubectl get pv
NAME           CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
alertmanager   5Gi        RWO,RWX        Recycle          Available                                   9s
standard       25Gi       RWO            Recycle          Available                                   9s
```

[root@k8s-node01 prometheus]# grep -i image prometheus/* # check whether any image has to be pulled in advance

[root@k8s-node01 prometheus]# vim prometheus-service.yaml # the Prometheus Service defaults to type ClusterIP; change it to NodePort so it can be reached from outside the cluster

```yaml
...
  type: NodePort
  ports:
    - name: http
      port: 9090
      protocol: TCP
      targetPort: 9090
      nodePort: 30090
...
```

[root@k8s-node01 prometheus]# kubectl apply -f prometheus/

```
[root@k8s-node01 prometheus]# kubectl get pod -n k8s-monitor
NAME                  READY   STATUS    RESTARTS   AGE
node-exporter-l5zdw   1/1     Running   0          24m
node-exporter-vwknx   1/1     Running   0          24m
prometheus-0          2/2     Running   0          1m
[root@k8s-node01 prometheus]# kubectl get svc -n k8s-monitor
NAME            TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
node-exporter   ClusterIP   None          <none>        9100/TCP         25m
prometheus      NodePort    10.96.9.121   <none>        9090:30090/TCP   22m
```

```
[root@k8s-master_01 prometheus]# kubectl get pv
NAME           CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                                      STORAGECLASS   REASON   AGE
alertmanager   5Gi        RWO,RWX        Recycle          Available                                                                     1h
standard       25Gi       RWO            Recycle          Bound       k8s-monitor/prometheus-data-prometheus-0   standard                1h
```

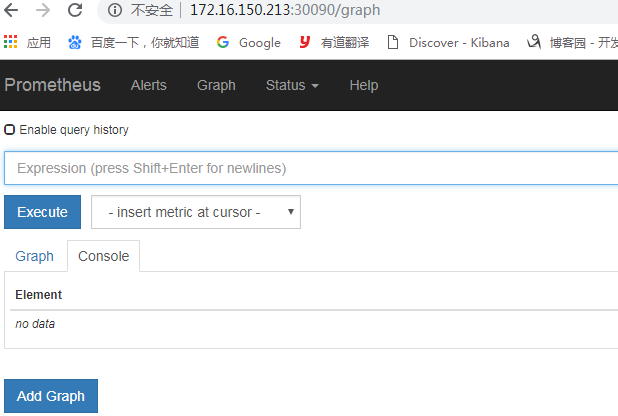

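Access Prometheus at a node's IP and the NodePort (30090 here).

4. Deploy kube-state-metrics (generates metrics about the state of Kubernetes objects for Prometheus to scrape)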
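Besides the web UI, Prometheus also answers queries over its HTTP API at `/api/v1/query`. The following is a minimal sketch that assumes the NodePort 30090 configured above; `NODE_IP` is a placeholder for any node's address. The example query `up` reports whether each scrape target, such as the node-exporter pods on port 9100, is reachable. Helper names are illustrative.

```python
import json
import urllib.parse
import urllib.request

# Assumption: replace NODE_IP with any node's address; 30090 is the NodePort set above.
PROMETHEUS = "http://NODE_IP:30090"


def instant_query_url(base: str, expr: str) -> str:
    """Build a URL for Prometheus' instant-query endpoint /api/v1/query."""
    return f"{base}/api/v1/query?" + urllib.parse.urlencode({"query": expr})


def parse_vector(body: dict) -> dict:
    """Map each series' `instance` label to its value in an instant-vector response."""
    if body.get("status") != "success":
        raise RuntimeError(body.get("error", "query failed"))
    return {
        series["metric"].get("instance", ""): float(series["value"][1])
        for series in body["data"]["result"]
    }


def query(base: str, expr: str) -> dict:
    """Run an instant query and return {instance: value}."""
    with urllib.request.urlopen(instant_query_url(base, expr), timeout=10) as resp:
        return parse_vector(json.load(resp))


# Usage against a live cluster: print(query(PROMETHEUS, "up")) after filling in NODE_IP.
```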

[root@k8s-node01 kube-state-metrics]# grep image: ./*

./kube-state-metrics-deployment.yaml: image: quay.io/coreos/kube-state-metrics:v1.3.0

./kube-state-metrics-deployment.yaml: image: k8s.gcr.io/addon-resizer:1.7

[root@k8s-node02 ~]# docker pull registry.cn-hangzhou.aliyuncs.com/ccgg/addon-resizer:1.7

[root@k8s-node01 kube-state-metrics]# vim kube-state-metrics-deployment.yaml # change the image address

```
[root@k8s-node01 kube-state-metrics]# kubectl apply -f kube-state-metrics-deployment.yaml
deployment.extensions "kube-state-metrics" configured
[root@k8s-node01 kube-state-metrics]# kubectl get pod -n k8s-monitor
NAME                                  READY   STATUS    RESTARTS   AGE
kube-state-metrics-54849b96b4-dmqtk   2/2     Running   0          23s
node-exporter-l5zdw                   1/1     Running   0          2h
node-exporter-vwknx                   1/1     Running   0          2h
prometheus-0                          2/2     Running   0          1h
```

5. Deploy k8s-prometheus-adapter (exposes the Prometheus data as an API service, the custom metrics API)

项目地址:https://github.com/DirectXMan12/k8s-prometheus-adapter

[root@k8s-master01 ~]# cd /etc/kubernetes/pki/

[root@k8s-master01 pki]# (umask 077; openssl genrsa -out serving.key 2048)

[root@k8s-master01 pki]# openssl req -new -key serving.key -out serving.csr -subj "/CN=serving" # the CN must be serving

[root@k8s-master01 pki]# openssl x509 -req -in serving.csr -CA ./ca.crt -CAkey ./ca.key -CAcreateserial -out serving.crt -days 3650

[root@k8s-master01 pki]# kubectl create secret generic cm-adapter-serving-certs --from-file=serving.crt=./serving.crt --from-file=serving.key=./serving.key -n k8s-monitor # the secret must be named cm-adapter-serving-certs, because the adapter deployment mounts a secret of that name

[root@k8s-master01 pki]# kubectl get secret -n k8s-monitor

[root@k8s-master01 pki]# cd

[root@k8s-node01 ~]# git clone https://github.com/DirectXMan12/k8s-prometheus-adapter.git

[root@k8s-node01 ~]# cd k8s-prometheus-adapter/deploy/manifests/

[root@k8s-node01 manifests]# grep namespace: ./* # change every namespace except the one in the role binding to k8s-monitor

[root@k8s-node01 manifests]# grep image: ./* # these images can be pulled directly; no need to download them in advance

[root@k8s-node01 ~]# sed -i 's/namespace\: custom-metrics/namespace\: k8s-monitor/g' ./* # do not replace the one in the RoleBinding

[root@k8s-node01 ~]# kubectl apply -f ./

[root@k8s-node01 ~]# kubectl get pod -n k8s-monitor

[root@k8s-node01 ~]# kubectl get svc -n k8s-monitor

kubectl api-versions |grep custom
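Once `custom.metrics.k8s.io` shows up, the adapter's discovery document lists the metrics it currently serves. The following is a minimal sketch that assumes `kubectl proxy --port=8080` is running. Resource names take the form `<resource>/<metric>`, for example `pods/http_requests`. Which metrics appear depends on what Prometheus is scraping and on the adapter's rules. Helper names are illustrative.

```python
import json
import urllib.request

# Assumption: `kubectl proxy --port=8080` is running, as in Chapter 2.
CUSTOM_METRICS = "http://localhost:8080/apis/custom.metrics.k8s.io/v1beta1"


def fetch_resource_list(url: str = CUSTOM_METRICS) -> dict:
    """GET the APIResourceList that the adapter publishes for discovery."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def list_metric_names(resource_list: dict, kind: str = "pods") -> list:
    """Return the metric names exposed for one object kind, sorted.

    Discovery entries look like "pods/http_requests" or "namespaces/http_requests".
    """
    prefix = kind + "/"
    return sorted(
        r["name"][len(prefix):]
        for r in resource_list.get("resources", [])
        if r["name"].startswith(prefix)
    )


# Usage against a live cluster: print(list_metric_names(fetch_resource_list()))
```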

Chapter 4: Deploying Prometheus + Grafana

[root@k8s-master01 ~]# wget https://raw.githubusercontent.com/kubernetes-retired/heapster/master/deploy/kube-config/influxdb/grafana.yaml # I couldn't find a Grafana YAML file elsewhere, so I borrowed the one from heapster

[root@k8s-master01 ~]# egrep -i "influxdb|namespace|nodeport" grafana.yaml # comment out the InfluxDB environment variables, and change the namespace and the Service type

[root@k8s-master01 ~]# kubectl apply -f grafana.yaml

[root@k8s-master01 ~]# kubectl get svc -n k8s-monitor

[root@k8s-master01 ~]# kubectl get pod -n k8s-monitor

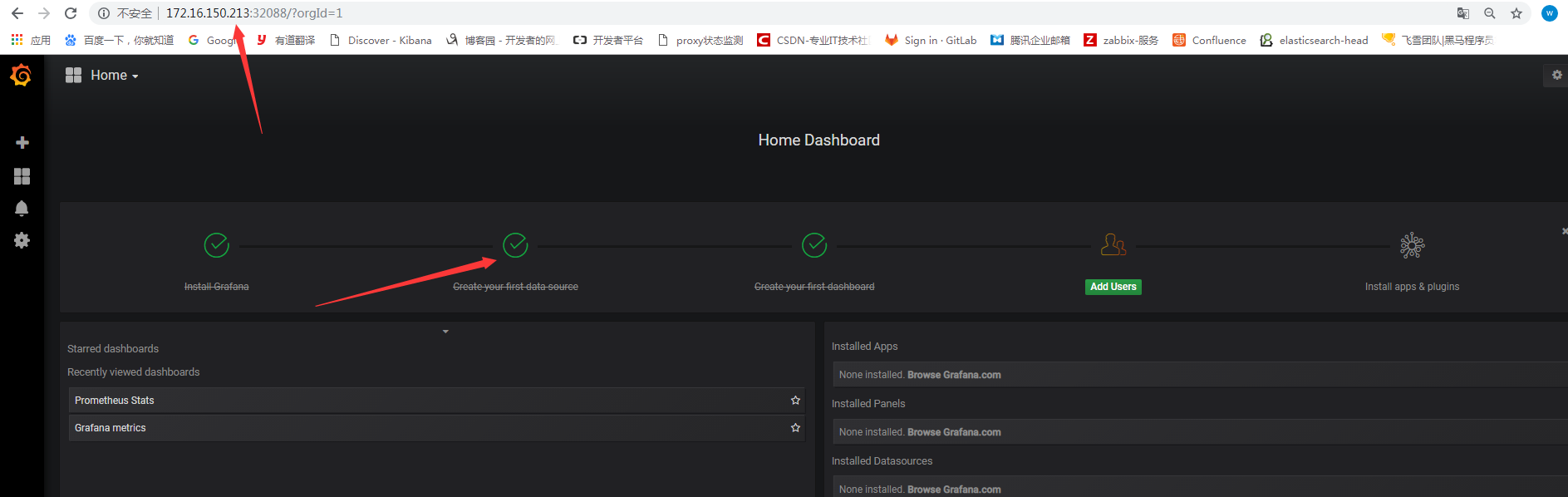

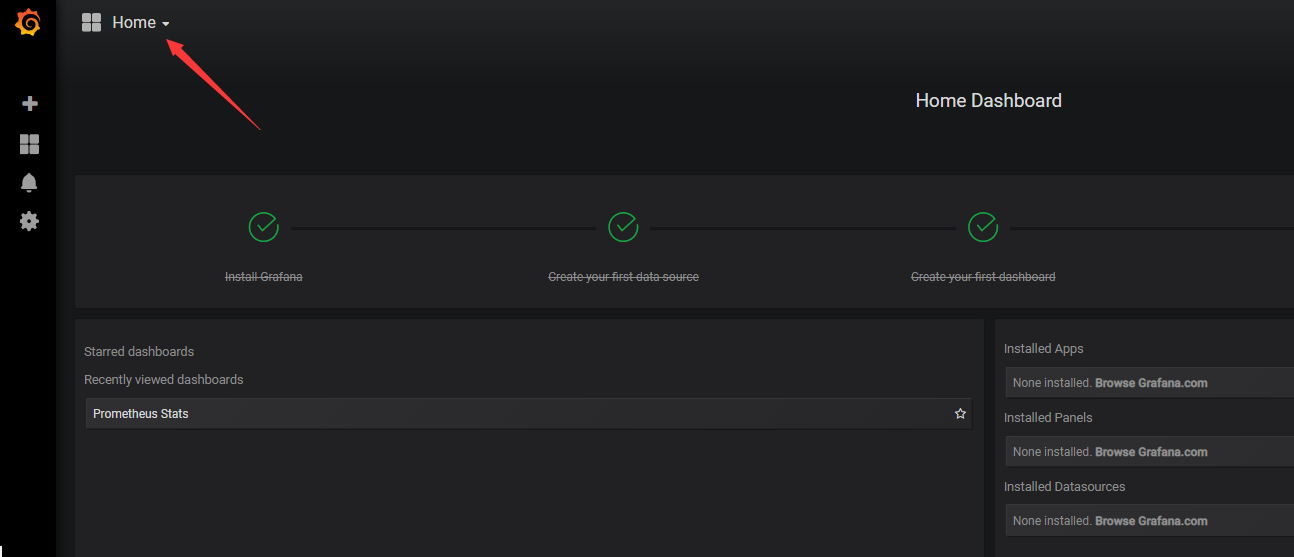

Log in to Grafana and change the data source.

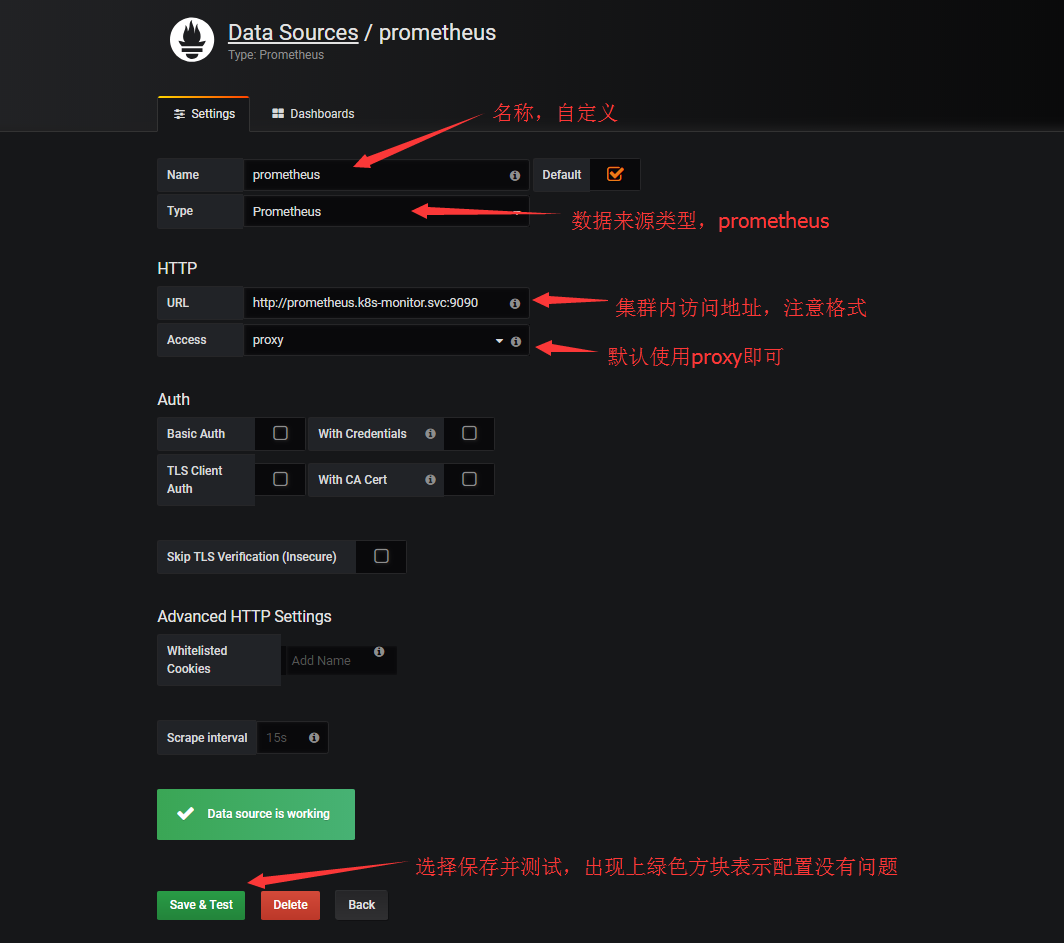

Configure the data source:

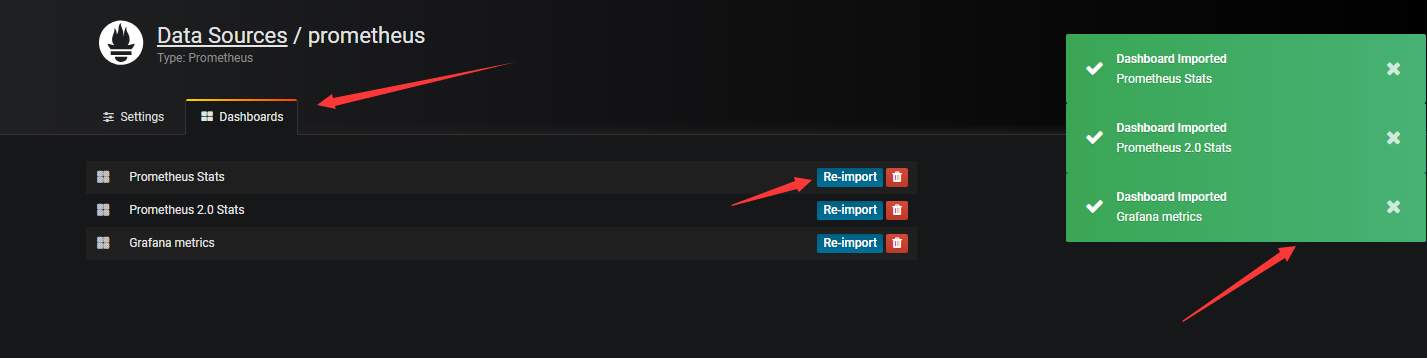

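Click Dashboards on the right to import the Prometheus dashboards that ship with Grafana.

Go back to Home and pick the corresponding dashboard from the drop-down to view the data.

For example: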

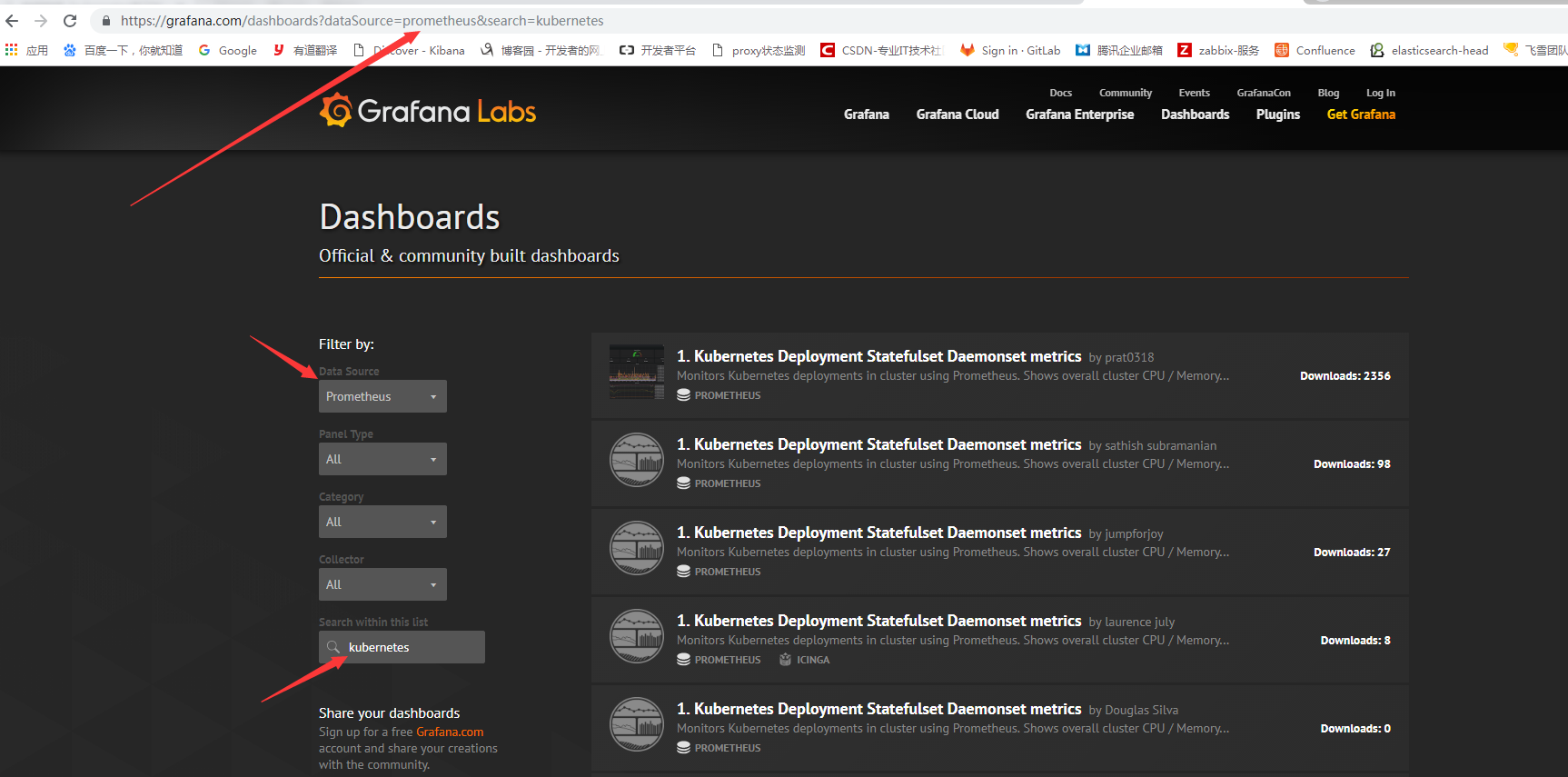

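However, the bundled dashboards do not match this data very well, so download a Kubernetes-specific dashboard from the Grafana website instead: https://grafana.com/dashboards

Search the site for Kubernetes dashboards. If the search box does not respond when clicked, append the search term to the URL directly.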

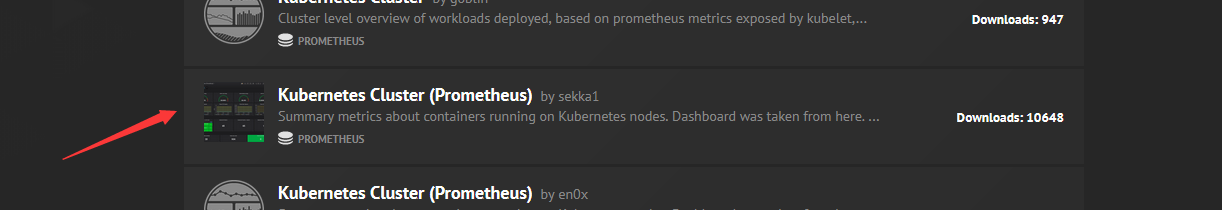

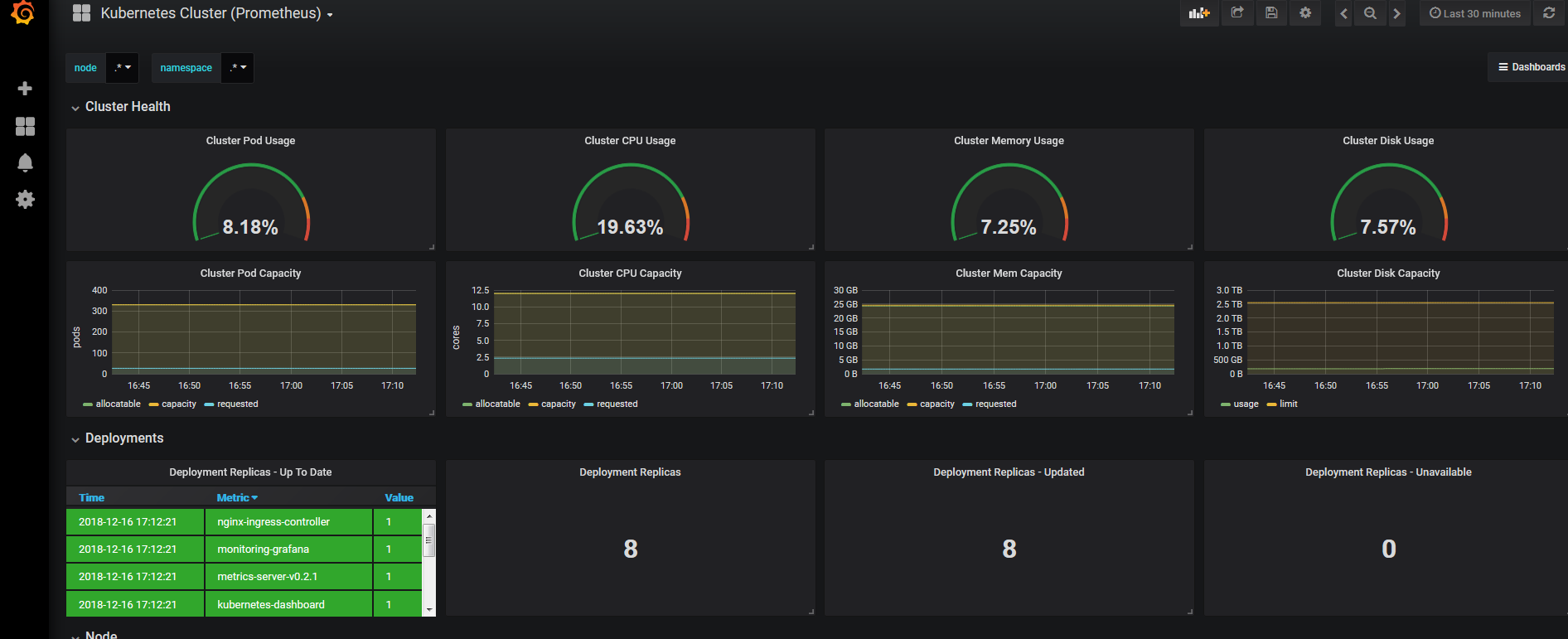

Here I use the Kubernetes cluster (Prometheus) dashboard as a test.

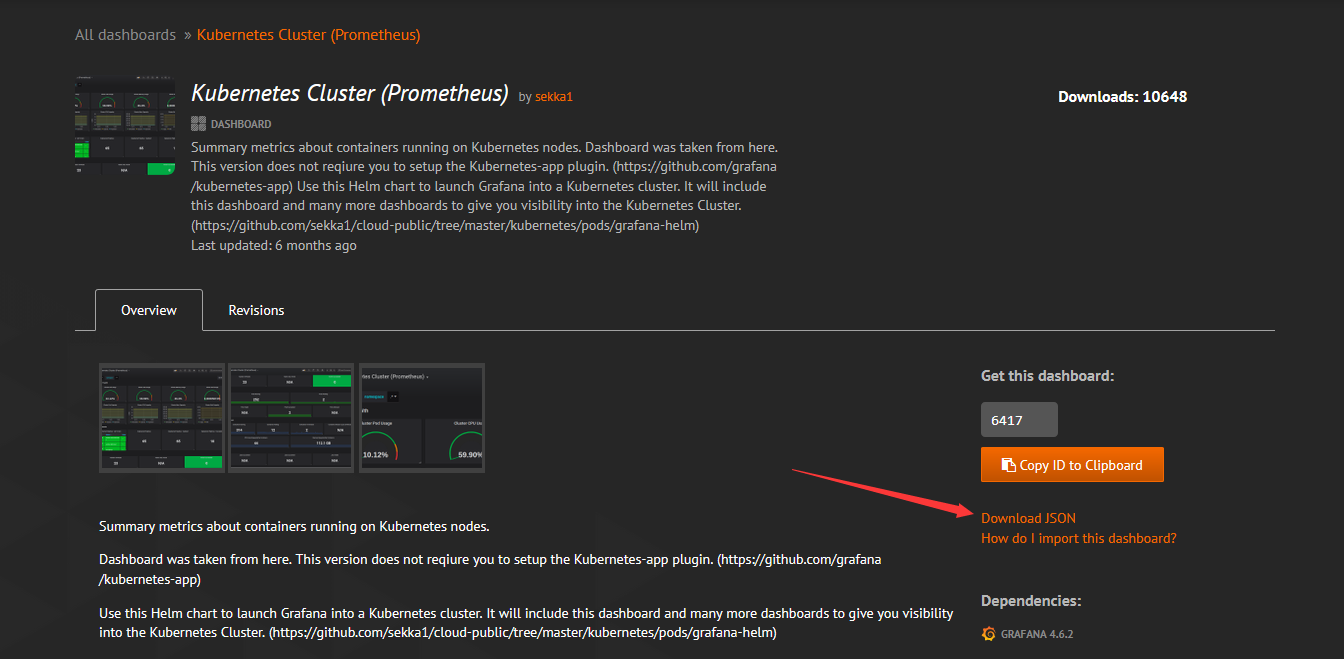

Open the dashboard you want and download its JSON file:

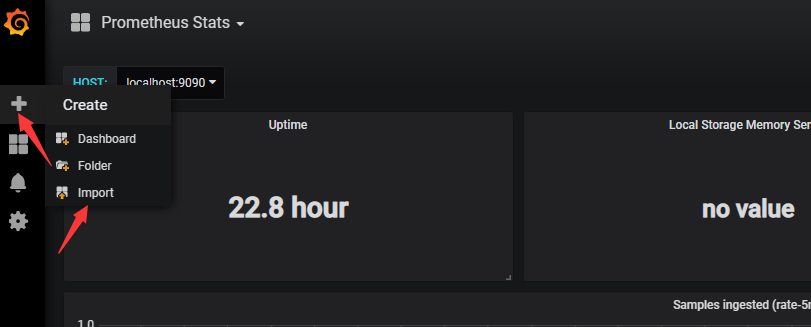

Once the download finishes, import the file:

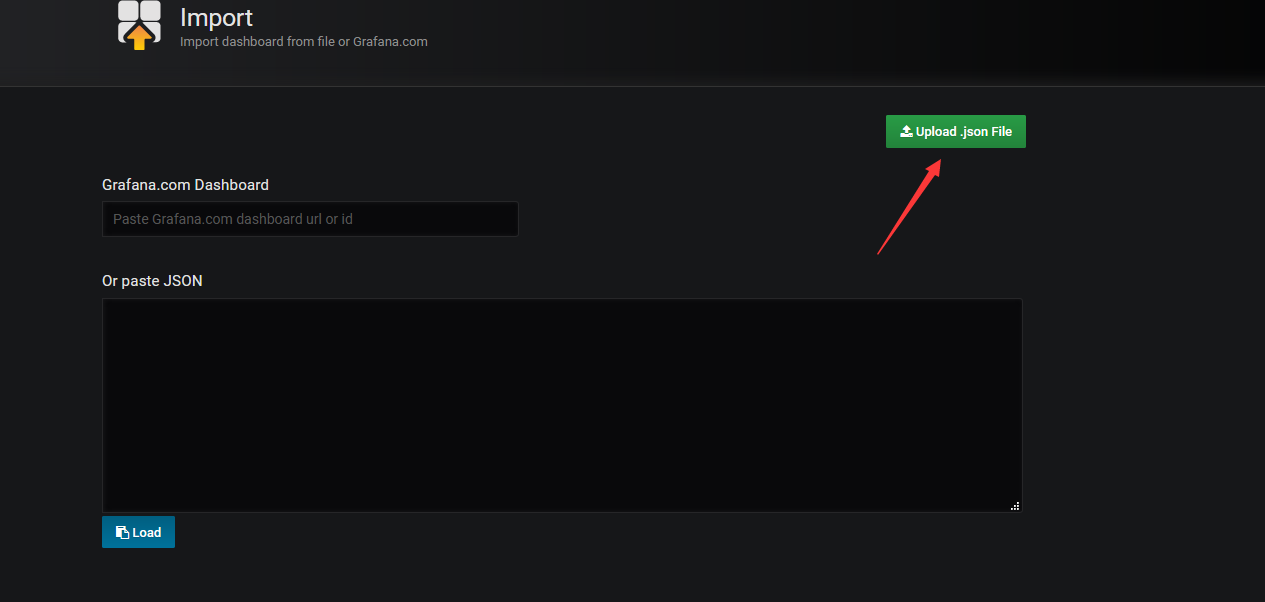

Choose to upload the file:

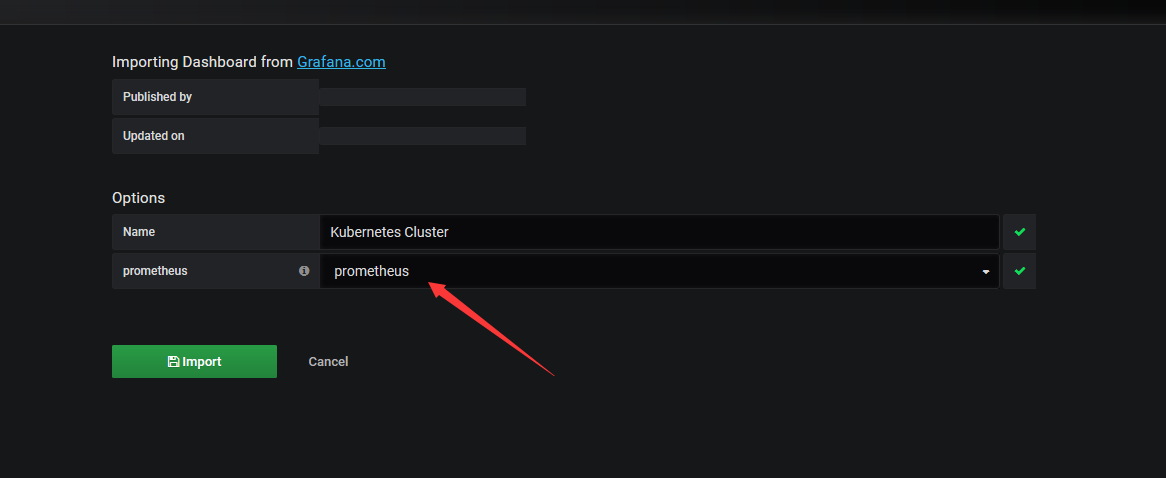

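After importing, select the data source.

The dashboard as displayed after import.

Chapter 5: Implementing HPA

1. Testing with autoscaling/v1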

[root@k8s-master01 alertmanager]# kubectl api-versions |grep autoscaling

autoscaling/v1

autoscaling/v2beta1

[root@k8s-master01 manifests]# cat deploy-demon.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp
  namespace: default
spec:
  selector:
    app: myapp
  type: NodePort
  ports:
  - name: http
    port:
    targetPort:
    nodePort:
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
spec:
  replicas:
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v2
        ports:
        - name: httpd
          containerPort:
        resources:
          requests:
            memory: "64Mi"
            cpu: "100m"
          limits:
            memory: "128Mi"
            cpu: "200m"
```

```
[root@k8s-master01 manifests]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)     AGE
kubernetes   ClusterIP   10.96.0.1       <none>        /TCP        47d
my-nginx     NodePort    10.104.13.148   <none>        :/TCP       19d
myapp        NodePort    10.100.76.180   <none>        :/TCP       16s
tomcat       ClusterIP   10.106.222.72   <none>        /TCP,/TCP   19d
[root@k8s-master01 manifests]# kubectl get pod
NAME                            READY   STATUS    RESTARTS   AGE
myapp-deploy-5db497dbfb-h7zcb   /       Running              16s
myapp-deploy-5db497dbfb-tvsf5   /       Running              16s
```

Test

```
[root@k8s-master01 manifests]# kubectl autoscale deployment myapp-deploy --min= --max= --cpu-percent=
deployment.apps "myapp-deploy" autoscaled
[root@k8s-master01 manifests]# kubectl get hpa
NAME           REFERENCE                 TARGETS       MINPODS   MAXPODS   REPLICAS   AGE
myapp-deploy   Deployment/myapp-deploy   <unknown>/%                                  22s
```

[root@k8s-master01 pod-dir]# yum install httpd-tools -y # httpd-tools provides the ab benchmarking tool

[root@k8s-master01 pod-dir]# ab -c -n http://172.16.150.213:32222/index.html

```
[root@k8s-master01 ~]# kubectl describe hpa
Name:               myapp-deploy
Namespace:          default
Labels:             <none>
Annotations:        <none>
CreationTimestamp:  Sun, Dec :: +
Reference:          Deployment/myapp-deploy
Metrics:            ( current / target )
  resource cpu on pods  (as a percentage of request):  % (178m) / %
Min replicas:
Max replicas:
Conditions:
  Type            Status  Reason            Message
  ----            ------  ------            -------
  AbleToScale     False   BackoffBoth       the time since the previous scale is still within both the downscale and upscale forbidden windows
  ScalingActive   True    ValidMetricFound  the HPA was able to successfully calculate a replica count from cpu resource utilization (percentage of request)
  ScalingLimited  True    ScaleUpLimit      the desired replica count is increasing faster than the maximum scale rate
Events:
  Type    Reason             Age  From                       Message
  ----    ------             ---  ----                       -------
  Normal  SuccessfulRescale  19m  horizontal-pod-autoscaler  New size: ; reason: All metrics below target
  Normal  SuccessfulRescale  2m   horizontal-pod-autoscaler  New size: ; reason: cpu resource utilization (percentage of request) above target
```

```
[root@k8s-master01 ~]# kubectl get pod
NAME                            READY   STATUS    RESTARTS   AGE
myapp-deploy-5db497dbfb-6kssf   /       Running              2m
myapp-deploy-5db497dbfb-h7zcb   /       Running              24m
```

```
[root@k8s-master01 ~]# kubectl get hpa
NAME           REFERENCE                 TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
myapp-deploy   Deployment/myapp-deploy   %/%                                      20m
```
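At its core, the HPA computes desired = ceil(current replicas × current value / target value). For autoscaling/v1 the value is CPU usage as a percentage of the pod's CPU request, so 178m against the 100m request in deploy-demon.yaml is 178%. The sketch below makes these simplifying assumptions:

- The controller's default tolerance is 0.1.
- Pod readiness and missing metrics are not handled.
- There are no scale-rate limits. The `ScaleUpLimit` condition above comes from such a limit.

```python
import math


def cpu_utilization(usage_millicores: float, request_millicores: float) -> float:
    """CPU usage as a percentage of the pod's CPU request (what autoscaling/v1 targets)."""
    return usage_millicores * 100 / request_millicores


def desired_replicas(current_replicas: int, current_value: float, target_value: float,
                     tolerance: float = 0.1) -> int:
    """Replica count proposed for one metric.

    ceil(current_replicas * current / target), except that no change is proposed
    while current / target stays within +/- tolerance of 1.0.
    """
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


def clamp(replicas: int, min_replicas: int, max_replicas: int) -> int:
    """Keep the proposal inside the HPA's [minReplicas, maxReplicas] range."""
    return max(min_replicas, min(replicas, max_replicas))
```

With a hypothetical 100% target, two pods averaging 178% give `desired_replicas(2, 178, 100) == 4`, which `clamp` then limits to the configured maximum.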

2. Using autoscaling/v2beta1

[root@k8s-master01 pod-dir]# cat hpa-demo.yaml

```yaml
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: myapp-hpa-v2
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: myapp-deploy
  minReplicas:
  maxReplicas:
  metrics:
  - type: Resource
    resource:
      name: cpu
      targetAverageUtilization:
  - type: Resource
    resource:
      name: memory
      targetAverageValue: 100Mi
```
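When an HPA has more than one metric, as hpa-demo.yaml does, it computes a replica proposal for each metric, scales to the largest proposal, and then clamps the result to minReplicas/maxReplicas. The sketch below covers only that combination step; tolerance handling is omitted. Both values in each pair must use the same unit, for example CPU as a percentage of the request, or memory in Mi against the 100Mi target. The metric labels are made up for illustration.

```python
import math


def proposal(current_replicas: int, current_value: float, target_value: float) -> int:
    """Per-metric proposal: ceil(current_replicas * current / target)."""
    return math.ceil(current_replicas * current_value / target_value)


def combine(current_replicas: int, metrics: dict, min_replicas: int, max_replicas: int) -> int:
    """Take the largest per-metric proposal and clamp it to [min_replicas, max_replicas].

    `metrics` maps a label to a (current, target) pair expressed in the same unit.
    """
    best = max(proposal(current_replicas, cur, tgt) for cur, tgt in metrics.values())
    return max(min_replicas, min(best, max_replicas))
```

Because the largest proposal wins, the deployment scales down only once every metric allows it. This matches the "All metrics below target" reason in the events further below.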

```
[root@k8s-master01 pod-dir]# kubectl delete hpa myapp-deploy
horizontalpodautoscaler.autoscaling "myapp-deploy" deleted
[root@k8s-master01 pod-dir]# kubectl apply -f hpa-demo.yaml
horizontalpodautoscaler.autoscaling "myapp-hpa-v2" created
[root@k8s-master01 pod-dir]# kubectl get hpa
NAME           REFERENCE                 TARGETS                        MINPODS   MAXPODS   REPLICAS   AGE
myapp-hpa-v2   Deployment/myapp-deploy   <unknown>/100Mi, <unknown>/%                                  6s
```

Test

```
[root@k8s-master01 ~]# kubectl describe hpa
Name:               myapp-hpa-v2
Namespace:          default
Labels:             <none>
Annotations:        kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"autoscaling/v2beta1","kind":"HorizontalPodAutoscaler","metadata":{"annotations":{},"name":"myapp-hpa-v2","namespace":"default"},"spec":{...
CreationTimestamp:  Sun, Dec :: +
Reference:          Deployment/myapp-deploy
Metrics:            ( current / target )
  resource memory on pods:  / 100Mi
  resource cpu on pods  (as a percentage of request):  % (200m) / %
Min replicas:
Max replicas:
Conditions:
  Type            Status  Reason              Message
  ----            ------  ------              -------
  AbleToScale     True    SucceededRescale    the HPA controller was able to update the target scale to
  ScalingActive   True    ValidMetricFound    the HPA was able to successfully calculate a replica count from cpu resource utilization (percentage of request)
  ScalingLimited  False   DesiredWithinRange  the desired count is within the acceptable range
Events:
  Type    Reason             Age  From                       Message
  ----    ------             ---  ----                       -------
  Normal  SuccessfulRescale  18s  horizontal-pod-autoscaler  New size: ; reason: cpu resource utilization (percentage of request) above target
```

```
[root@k8s-master01 ~]# kubectl get pod
NAME                            READY   STATUS    RESTARTS   AGE
myapp-deploy-5db497dbfb-5n885   /       Running              26s
myapp-deploy-5db497dbfb-h7zcb   /       Running              40m
myapp-deploy-5db497dbfb-z2tqd   /       Running              26s
myapp-deploy-5db497dbfb-zkjhw   /       Running              26s
```

```
[root@k8s-master01 ~]# kubectl describe hpa
Name:               myapp-hpa-v2
Namespace:          default
Labels:             <none>
Annotations:        kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"autoscaling/v2beta1","kind":"HorizontalPodAutoscaler","metadata":{"annotations":{},"name":"myapp-hpa-v2","namespace":"default"},"spec":{...
CreationTimestamp:  Sun, Dec :: +
Reference:          Deployment/myapp-deploy
Metrics:            ( current / target )
  resource memory on pods:  / 100Mi
  resource cpu on pods  (as a percentage of request):  % () / %
Min replicas:
Max replicas:
Conditions:
  Type            Status  Reason              Message
  ----            ------  ------              -------
  AbleToScale     False   BackoffBoth         the time since the previous scale is still within both the downscale and upscale forbidden windows
  ScalingActive   True    ValidMetricFound    the HPA was able to successfully calculate a replica count from memory resource
  ScalingLimited  False   DesiredWithinRange  the desired count is within the acceptable range
Events:
  Type    Reason             Age  From                       Message
  ----    ------             ---  ----                       -------
  Normal  SuccessfulRescale  6m   horizontal-pod-autoscaler  New size: ; reason: cpu resource utilization (percentage of request) above target
  Normal  SuccessfulRescale  34s  horizontal-pod-autoscaler  New size: ; reason: All metrics below target
```

```
[root@k8s-master01 ~]# kubectl get pod
NAME                            READY   STATUS    RESTARTS   AGE
myapp-deploy-5db497dbfb-h7zcb   /       Running              46m
```

3. Testing custom metrics with autoscaling/v2beta1

[root@k8s-master01 pod-dir]# cat ../deploy-demon-metrics.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp
  namespace: default
spec:
  selector:
    app: myapp
  type: NodePort
  ports:
  - name: http
    port:
    targetPort:
    nodePort:
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy
spec:
  replicas:
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: ikubernetes/metrics-app   # test image
        ports:
        - name: httpd
          containerPort:
```

[root@k8s-master01 pod-dir]# kubectl apply -f deploy-demon-metrics.yaml

[root@k8s-master01 pod-dir]# cat hpa-custom.yaml

```yaml
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: myapp-hpa-v2
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: myapp-deploy
  minReplicas:
  maxReplicas:
  metrics:
  - type: Pods                   # note the type
    pods:
      metricName: http_requests  # custom metric exposed by the container
      targetAverageValue: 800m   # "m" means milli: 800m = 0.8 per pod on average, not 800
```
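`800m` is a Kubernetes quantity. The `m` suffix means milli, so the target is an average of 0.8 per pod, not 800. With the adapter's default rules, a counter such as `http_requests_total` is typically exposed as a per-second rate, so this target presumably means 0.8 requests per second per pod. The sketch below uses hypothetical helpers and `Fraction` for exact arithmetic. It averages the per-pod values from a `custom.metrics.k8s.io` `MetricValueList` and computes the ratio that the HPA compares with 1.0.

```python
from fractions import Fraction


def milli(q: str) -> Fraction:
    """Parse a plain or milli-suffixed quantity: "800m" -> 4/5, "2" -> 2."""
    if q.endswith("m"):
        return Fraction(q[:-1]) / 1000
    return Fraction(q)


def average_over_pods(metric_value_list: dict) -> Fraction:
    """Average of the per-pod values in a custom.metrics.k8s.io MetricValueList.

    Raises ZeroDivisionError if the list is empty (no pod reported the metric).
    """
    values = [milli(item["value"]) for item in metric_value_list["items"]]
    return sum(values, Fraction(0)) / len(values)


def usage_ratio(metric_value_list: dict, target_average: str = "800m") -> Fraction:
    """Ratio the HPA compares with 1.0 for a Pods-type metric (above 1.0 means scale out)."""
    return average_over_pods(metric_value_list) / milli(target_average)
```

For example, two pods reporting `1200m` and `400m` average 0.8, which is exactly the target, so no scaling would be proposed.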

[root@k8s-master01 pod-dir]# kubectl apply -f hpa-custom.yaml

```
[root@k8s-master01 pod-dir]# kubectl describe hpa myapp-hpa-v2
Name:               myapp-hpa-v2
Namespace:          default
Labels:             <none>
Annotations:        kubectl.kubernetes.io/last-applied-configuration={"apiVersion":"autoscaling/v2beta1","ks":{},"name":"myapp-hpa-v2","namespace":"default"},"spec":{...
CreationTimestamp:  Sun, Dec :: +
Reference:          Deployment/myapp-deploy
Metrics:            ( current / target )
  "http_requests" on pods:  <unknown> / 800m
Min replicas:
Max replicas:
Events:             <none>
```

```
[root@k8s-master01 pod-dir]# kubectl get hpa
NAME           REFERENCE                 TARGETS          MINPODS   MAXPODS   REPLICAS   AGE
myapp-hpa-v2   Deployment/myapp-deploy   <unknown>/800m                                  5m
```

Test:

(The image seems to have a problem; this is still to be resolved.)
