Kafka:ZK+Kafka+Spark Streaming集群环境搭建(十四)定义一个avro schema使用comsumer发送avro字符流,producer接受avro字符流并解析

参考《在Kafka中使用Avro编码消息:Consumer篇》、《在Kafka中使用Avro编码消息:Producter篇》

在了解如何avro发送到kafka,再从kafka解析avro数据之前,我们可以先看下如何使用操作字符串:

producer:

package com.spark; import org.apache.kafka.clients.producer.Producer;

import org.apache.kafka.clients.producer.ProducerRecord; import java.util.Properties; /**

* Created by Administrator on 2017/8/29.

*/

public class KafkaProducer {

public static void main(String[] args) throws InterruptedException {

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("acks", "all");

props.put("retries", 0);

props.put("batch.size", 16384);

props.put("linger.ms", 1);

props.put("buffer.memory", 33554432);

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

Producer<String, String> producer = new org.apache.kafka.clients.producer.KafkaProducer(props);

int i=0;

while (true) {

producer.send(new ProducerRecord<String, String>("my-topic", Integer.toString(i), Integer.toString(i)));

System.out.println(i++);

Thread.sleep(1000);

}

// producer.close(); }

}

consumer:

package com.spark; import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer; import java.util.Arrays;

import java.util.Properties; /**

* Created by Administrator on 2017/8/29.

*/

public class MyKafkaConsumer { public static void main(String[] args) {

Properties props = new Properties();

props.put("bootstrap.servers", "localhost:9092");

props.put("group.id", "test");

props.put("enable.auto.commit", "true");

props.put("auto.commit.interval.ms", "1000");

props.put("session.timeout.ms", "30000");

props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(props);

consumer.subscribe(Arrays.asList("my-topic"));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(100);

for (ConsumerRecord<String, String> record : records){

System.out.printf("offset = %d, key = %s, value = %s \n", record.offset(), record.key(), record.value());

} } }

}

Avro操作工程pom.xml:

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>0.10.0.1</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-simple</artifactId>

<version>1.7.21</version>

</dependency>

<dependency>

<groupId>org.apache.avro</groupId>

<artifactId>avro</artifactId>

<version>1.8.0</version>

</dependency> <dependency>

<groupId>com.twitter</groupId>

<artifactId>bijection-avro_2.10</artifactId>

<version>0.9.2</version>

</dependency>

<dependency>

<groupId>org.apache.avro</groupId>

<artifactId>avro</artifactId>

<version>1.7.4</version>

</dependency>

需要依赖于avro的包,同时这里是需要使用kafka api。

在使用 Avro 之前,我们需要先定义模式(schemas)。模式通常使用 JSON 来编写,我们不需要再定义相关的类,这篇文章中,我们将使用如下的模式:

{

"fields": [

{ "name": "str1", "type": "string" },

{ "name": "str2", "type": "string" },

{ "name": "int1", "type": "int" }

],

"name": "Iteblog",

"type": "record"

}

上面的模式中,我们定义了一种 record 类型的对象,名字为 Iteblog,这个对象包含了两个字符串和一个 int 类型的fields。定义好模式之后,我们可以使用 avro 提供的相应方法来解析这个模式:

Schema.Parser parser = new Schema.Parser();

Schema schema = parser.parse(USER_SCHEMA);

这里的 USER_SCHEMA 变量存储的就是上面定义好的模式。

解析好模式定义的对象之后,我们需要将这个对象序列化成字节数组,或者将字节数组转换成对象。Avro 提供的 API 不太易于使用,所以本文使用 twitter 开源的 Bijection 库来方便地实现这些操作。我们先创建 Injection 对象来讲对象转换成字节数组:

Injection<GenericRecord, byte[]> recordInjection = GenericAvroCodecs.toBinary(schema);

现在我们可以根据之前定义好的模式来创建相关的 Record,并使用 recordInjection 来序列化这个 Record :

GenericData.Record record = new GenericData.Record(schema);

avroRecord.put("str1", "My first string");

avroRecord.put("str2", "My second string");

avroRecord.put("int1", 42); byte[] bytes = recordInjection.apply(record);

Producter实现

有了上面的介绍之后,我们现在就可以在 Kafka 中使用 Avro 来序列化我们需要发送的消息了:

package example.avro; import java.util.Properties; import org.apache.avro.Schema;

import org.apache.avro.generic.GenericData;

import org.apache.avro.generic.GenericRecord;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerRecord; import com.twitter.bijection.Injection;

import com.twitter.bijection.avro.GenericAvroCodecs; public class AvroKafkaProducter {

public static final String USER_SCHEMA =

"{"

+ "\"type\":\"record\","

+ "\"name\":\"Iteblog\","

+ "\"fields\":["

+ " { \"name\":\"str1\", \"type\":\"string\" },"

+ " { \"name\":\"str2\", \"type\":\"string\" },"

+ " { \"name\":\"int1\", \"type\":\"int\" }"

+ "]}";

public static final String TOPIC = "t-testavro"; public static void main(String[] args) throws InterruptedException {

Properties props = new Properties();

props.put("bootstrap.servers", "192.168.0.121:9092,192.168.0.122:9092");

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("value.serializer", "org.apache.kafka.common.serialization.ByteArraySerializer"); Schema.Parser parser = new Schema.Parser();

Schema schema = parser.parse(USER_SCHEMA);

Injection<GenericRecord, byte[]> recordInjection = GenericAvroCodecs.toBinary(schema); KafkaProducer<String, byte[]> producer = new KafkaProducer<String, byte[]>(props); for (int i = 0; i < 1000; i++) {

GenericData.Record avroRecord = new GenericData.Record(schema);

avroRecord.put("str1", "Str 1-" + i);

avroRecord.put("str2", "Str 2-" + i);

avroRecord.put("int1", i); byte[] bytes = recordInjection.apply(avroRecord); ProducerRecord<String, byte[]> record = new ProducerRecord<String, byte[]>(TOPIC, "" + i, bytes);

producer.send(record);

System.out.println(">>>>>>>>>>>>>>>>>>" + i);

} producer.close();

System.out.println("complete...");

}

}

因为我们使用到 Avro 和 Bijection 类库,所有我们需要在 pom.xml 文件里面引入以下依赖:

<dependency>

<groupId>org.apache.avro</groupId>

<artifactId>avro</artifactId>

<version>1.8.0</version>

</dependency> <dependency>

<groupId>com.twitter</groupId>

<artifactId>bijection-avro_2.10</artifactId>

<version>0.9.2</version>

</dependency>

从 Kafka 中读取 Avro 格式的消息

从 Kafka 中读取 Avro 格式的消息和读取其他类型的类型一样,都是创建相关的流,然后迭代:

ConsumerConnector consumer = ...;

Map<String, List<KafkaStream<byte[], byte[]>>> consumerStreams = consumer.createMessageStreams(topicCount);

List<KafkaStream<byte[], byte[]>> streams = consumerStreams.get(topic);

for (final KafkaStream stream : streams) {

....

}

关键在于如何将读出来的 Avro 类型字节数组转换成我们要的数据。这里还是使用到我们之前介绍的模式解释器:

Schema.Parser parser = new Schema.Parser();

Schema schema = parser.parse(USER_SCHEMA);

Injection<GenericRecord, byte[]> recordInjection = GenericAvroCodecs.toBinary(schema);

上面的 USER_SCHEMA 就是上边介绍的消息模式,我们创建了一个 recordInjection 对象,这个对象就可以利用刚刚解析好的模式将读出来的字节数组反序列化成我们写入的数据:

GenericRecord record = recordInjection.invert(message).get();

然后我们就可以通过下面方法获取写入的数据:

record.get("str1")

record.get("str2")

record.get("int1")

Kafka 0.9.x 版本Consumer实现

package example.avro; import java.util.Collections;

import java.util.Properties; import org.apache.avro.Schema;

import org.apache.avro.generic.GenericRecord;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.common.serialization.ByteArrayDeserializer;

import org.apache.kafka.common.serialization.StringDeserializer;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory; import com.twitter.bijection.Injection;

import com.twitter.bijection.avro.GenericAvroCodecs; public class AvroKafkaConsumer {

public static void main(String[] args) {

Logger logger = LoggerFactory.getLogger("AvroKafkaConsumer");

Properties props = new Properties();

props.put("bootstrap.servers", "192.168.0.121:9092,192.168.0.122:9092");

props.put("group.id", "testgroup");

props.put("key.deserializer", StringDeserializer.class.getName());

props.put("value.deserializer", ByteArrayDeserializer.class.getName()); KafkaConsumer<String, byte[]> consumer = new KafkaConsumer<String, byte[]>(props); consumer.subscribe(Collections.singletonList(AvroKafkaProducter.TOPIC));

Schema.Parser parser = new Schema.Parser();

Schema schema = parser.parse(AvroKafkaProducter.USER_SCHEMA);

Injection<GenericRecord, byte[]> recordInjection = GenericAvroCodecs.toBinary(schema); try {

while (true) {

ConsumerRecords<String, byte[]> records = consumer.poll(1000);

for (ConsumerRecord<String, byte[]> record : records) {

GenericRecord genericRecord = recordInjection.invert(record.value()).get();

String info = String.format(String.format("topic = %s, partition = %s, offset = %d, customer = %s,country = %s\n", record.topic(), record.partition(), record.offset(), record.key(), genericRecord.get("str1")));

logger.info(info);

}

}

} finally {

consumer.close();

}

} }

测试:

producer:

[main] INFO org.apache.kafka.common.utils.AppInfoParser - Kafka version : 0.10.0.1

[main] INFO org.apache.kafka.common.utils.AppInfoParser - Kafka commitId : a7a17cdec9eaa6c5

>>>>>>>>>>>>>>>>>>0

>>>>>>>>>>>>>>>>>>1

>>>>>>>>>>>>>>>>>>2

>>>>>>>>>>>>>>>>>>3

>>>>>>>>>>>>>>>>>>4

>>>>>>>>>>>>>>>>>>5

>>>>>>>>>>>>>>>>>>6

>>>>>>>>>>>>>>>>>>7

>>>>>>>>>>>>>>>>>>8

>>>>>>>>>>>>>>>>>>9

>>>>>>>>>>>>>>>>>>10

...

>>>>>>>>>>>>>>>>>>997

>>>>>>>>>>>>>>>>>>998

>>>>>>>>>>>>>>>>>>999

[main] INFO org.apache.kafka.clients.producer.KafkaProducer - Closing the Kafka producer with timeoutMillis = 9223372036854775807 ms.

complete...

consumer:

[main] INFO AvroKafkaConsumer - topic = t-testavro, partition = 0, offset = 4321, customer = 165,country = Str 1-165

[main] INFO AvroKafkaConsumer - topic = t-testavro, partition = 0, offset = 4322, customer = 166,country = Str 1-166

[main] INFO AvroKafkaConsumer - topic = t-testavro, partition = 0, offset = 4323, customer = 167,country = Str 1-167

[main] INFO AvroKafkaConsumer - topic = t-testavro, partition = 0, offset = 4324, customer = 168,country = Str 1-168

[main] INFO AvroKafkaConsumer - topic = t-testavro, partition = 0, offset = 4325, customer = 169,country = Str 1-169

[main] INFO AvroKafkaConsumer - topic = t-testavro, partition = 0, offset = 4326, customer = 170,country = Str 1-170

[main] INFO AvroKafkaConsumer - topic = t-testavro, partition = 0, offset = 4327, customer = 171,country = Str 1-171

GenericRecord打印:

import org.apache.avro.Schema;

import org.apache.avro.generic.GenericData;

import org.apache.avro.generic.GenericRecord; public class AvroToJson {

public static void main(String[] args) {

String avroSchema =

"{"

+ "\"type\": \"record\", "

+ "\"name\": \"LongList\","

+ "\"aliases\": [\"LinkedLongs\"],"

+ "\"fields\" : ["

+ " {\"name\": \"name\", \"type\": \"string\"},"

+ " {\"name\": \"favorite_number\", \"type\": [\"null\", \"long\"]},"

+ " {\"name\": \"favorite_color\", \"type\": [\"null\", \"string\"]}"

+ " ]"

+ "}"; Schema schema = new Schema.Parser().parse(avroSchema);

GenericRecord user1 = new GenericData.Record(schema);

user1.put("name", "Format");

user1.put("favorite_number", 666);

user1.put("favorite_color", "red"); GenericData genericData = new GenericData(); String result = genericData.toString(user1);

System.out.println(result); }

}

打印结果:

{"name": "Format", "favorite_number": , "favorite_color": "red"}

该信息在调试,想查看avro对象内容时,十分实用。

一次有趣的测试:

avro schema:

{

"type":"record",

"name":"My",

"fields":[

{"name":"id","type":["null", "string"]},

{"name":"start_time","type":["null", "string"]},

{"name":"stop_time","type":["null", "string"]},

{"name":"insert_time","type":["null", "string"]},

{"name":"eid","type":["null", "string"]},

{"name":"V_00","type":["null", "string"]},

{"name":"V_01","type":["null", "string"]},

{"name":"V_02","type":["null", "string"]},

{"name":"V_03","type":["null", "string"]},

{"name":"V_04","type":["null", "string"]},

{"name":"V_05","type":["null", "string"]},

{"name":"V_06","type":["null", "string"]},

{"name":"V_07","type":["null", "string"]},

{"name":"V_08","type":["null", "string"]},

{"name":"V_09","type":["null", "string"]}

]

}

测试程序:

public static void main(String[] args) throws StreamingQueryException, IOException {

String filePathString = "E:\\work\\my.avsc";

Schema.Parser parser = new Schema.Parser();

InputStream inputStream = new FileInputStream(filePathString);

Schema schema = parser.parse(inputStream);

inputStream.close();

Injection<GenericRecord, byte[]> recordInjection = GenericAvroCodecs.toBinary(schema);

GenericData.Record avroRecord = new GenericData.Record(schema);

avroRecord.put("id", "9238234");

avroRecord.put("start_time", "2018-08-12T12:09:04.987");

avroRecord.put("stop_time", "2018-08-12T12:09:04.987");

avroRecord.put("insert_time", "2018-08-12T12:09:04.987");

avroRecord.put("eid", "23434");

avroRecord.put("V_00", "0");

avroRecord.put("V_01", "1");

avroRecord.put("V_02", "2");

avroRecord.put("V_09", "9");

byte[] bytes = recordInjection.apply(avroRecord);

String byteString = byteArrayToStr(bytes);

//String byteString= bytes.toString();

System.out.println(">>>>>>>>>>>>>>arvo字节流转化为字符串。。。。");

System.out.println(byteString);

System.out.println(">>>>>>>>>>>>>>arvo字节流转化为字符串。。。。");

System.out.println(">>>>>>>>>>>>>>arvo字符串转化为字节流。。。");

byte[] data = strToByteArray(byteString);

GenericRecord record = recordInjection.invert(data).get();

for (Schema.Field field : schema.getFields()) {

String value = record.get(field.name()) == null ? "" : record.get(field.name()).toString();

System.out.println(field.name() + "," + value);

}

System.out.println(">>>>>>>>>>>>>>arvo字符串转化为字节流。。。");

}

public static String byteArrayToStr(byte[] byteArray) {

if (byteArray == null) {

return null;

}

String str = new String(byteArray);

return str;

}

public static byte[] strToByteArray(String str) {

if (str == null) {

return null;

}

byte[] byteArray = str.getBytes();

return byteArray;

}

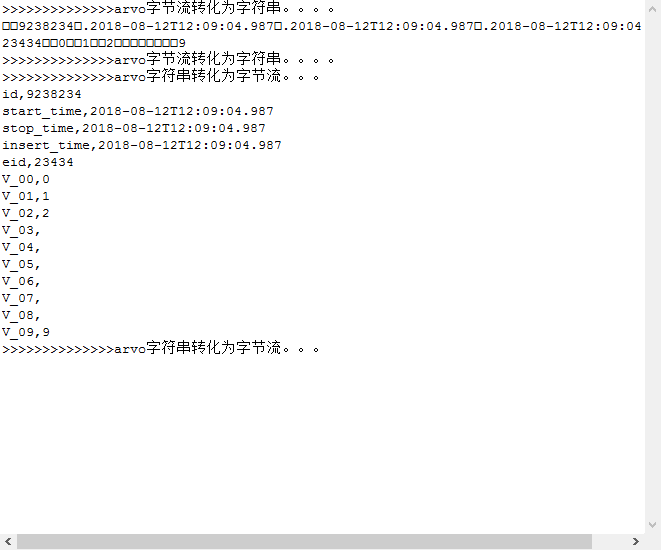

经过测试,可以正常运行,输出信息为:

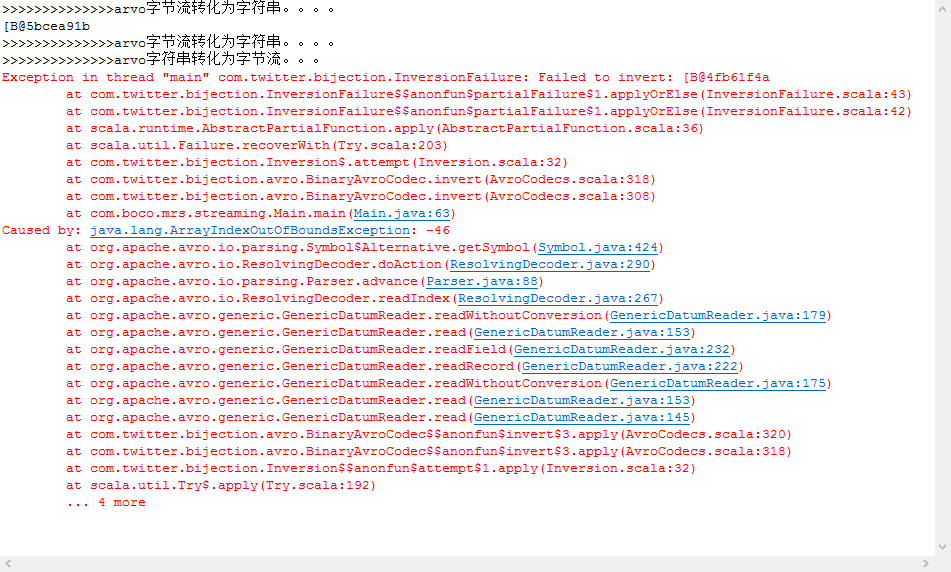

但是如果把代码中的byte转化为字符代码修改为:

//String byteString = byteArrayToStr(bytes);

String byteString= bytes.toString();

就抛出错误了:

Kafka:ZK+Kafka+Spark Streaming集群环境搭建(十四)定义一个avro schema使用comsumer发送avro字符流,producer接受avro字符流并解析的更多相关文章

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(四)针对hadoop2.9.0启动执行start-all.sh出现异常:failed to launch: nice -n 0 /bin/spark-class org.apache.spark.deploy.worker.Worker

启动问题: 执行start-all.sh出现以下异常信息: failed to launch: nice -n 0 /bin/spark-class org.apache.spark.deploy.w ...

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(二)安装hadoop2.9.0

如何搭建配置centos虚拟机请参考<Kafka:ZK+Kafka+Spark Streaming集群环境搭建(一)VMW安装四台CentOS,并实现本机与它们能交互,虚拟机内部实现可以上网.& ...

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(二十一)NIFI1.7.1安装

一.nifi基本配置 1. 修改各节点主机名,修改/etc/hosts文件内容. 192.168.0.120 master 192.168.0.121 slave1 192.168.0.122 sla ...

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(十三)kafka+spark streaming打包好的程序提交时提示虚拟内存不足(Container is running beyond virtual memory limits. Current usage: 119.5 MB of 1 GB physical memory used; 2.2 GB of 2.1 G)

异常问题:Container is running beyond virtual memory limits. Current usage: 119.5 MB of 1 GB physical mem ...

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(十二)VMW安装四台CentOS,并实现本机与它们能交互,虚拟机内部实现可以上网。

Centos7出现异常:Failed to start LSB: Bring up/down networking. 按照<Kafka:ZK+Kafka+Spark Streaming集群环境搭 ...

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(十一)定制一个arvo格式文件发送到kafka的topic,通过Structured Streaming读取kafka的数据

将arvo格式数据发送到kafka的topic 第一步:定制avro schema: { "type": "record", "name": ...

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(十)安装hadoop2.9.0搭建HA

如何搭建配置centos虚拟机请参考<Kafka:ZK+Kafka+Spark Streaming集群环境搭建(一)VMW安装四台CentOS,并实现本机与它们能交互,虚拟机内部实现可以上网.& ...

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(九)安装kafka_2.11-1.1.0

如何搭建配置centos虚拟机请参考<Kafka:ZK+Kafka+Spark Streaming集群环境搭建(一)VMW安装四台CentOS,并实现本机与它们能交互,虚拟机内部实现可以上网.& ...

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(八)安装zookeeper-3.4.12

如何搭建配置centos虚拟机请参考<Kafka:ZK+Kafka+Spark Streaming集群环境搭建(一)VMW安装四台CentOS,并实现本机与它们能交互,虚拟机内部实现可以上网.& ...

- Kafka:ZK+Kafka+Spark Streaming集群环境搭建(三)安装spark2.2.1

如何搭建配置centos虚拟机请参考<Kafka:ZK+Kafka+Spark Streaming集群环境搭建(一)VMW安装四台CentOS,并实现本机与它们能交互,虚拟机内部实现可以上网.& ...

随机推荐

- sTM32 使用TIMx_CH1作为 Tx1F_ED 计数器时钟

环境:iar arm 5.3 stm32f103vbt6 使用PA.8 外部输入10Mhz的方波.可从systick中断得到数据4. 4×5000(预分频值)×1000(tick中断时间)=20MHz ...

- [Go] 通过 17 个简短代码片段,切底弄懂 channel 基础

关于管道 Channel Channel 用来同步并发执行的函数并提供它们某种传值交流的机制. Channel 的一些特性:通过 channel 传递的元素类型.容器(或缓冲区)和 传递的方向由“&l ...

- sigmod2017.org

http://sigmod2017.org/sigmod-program/#ssession20

- Java 微服务实践 - Spring Boot 系列

https://segmentfault.com/l/1500000009515571

- mexHttpBinding协议 【发布元数据终结点】

我们需要知道很多东西才能使用微软通信基础架构(WCF)来开发应用程序.尽管这本书已经试着囊括普通开发人员需要了解的WCF所有内容,也还是有一些内容没有讨论到.附录的主要目的是填充这些罅隙. 发布元数据 ...

- Delph 两个对立程序使用消息进行控制通信

在实际应用中,总是会遇到两个独立的程序进行通信,其实通信的方式有好几种,比如进程间通信,消息通信. 项目中用到了此功能, 此功能用于锁屏程序, 下面把实现的流程和大家分享一下. 1. 在锁屏程序中,自 ...

- The main reborn ASP.NET MVC4.0: using CheckBoxListHelper and RadioBoxListHelper

The new Helpers folder in the project, to create the CheckBoxListHelper and RadioBoxListHelper class ...

- 面试题07_用两个栈实现队列——剑指offer系列

题目描写叙述: 用两个栈实现一个队列. 队列的声明例如以下,请实现它的两个函数appendTail 和 deleteHead.分别完毕在队列尾部插入结点和在队列头部删除结点的功能. 解题思路: 栈的特 ...

- 窗体的Alpha通道透明色支持

参考: http://www.delphibbs.com/delphibbs/dispq.asp?lid=2190768 Windows 2000后,为了支持类似MAC界面的Alpha通道混合效果,提 ...

- Spring boot配置多个Redis数据源操作实例

原文:https://www.jianshu.com/p/c79b65b253fa Spring boot配置多个Redis数据源操作实例 在SpringBoot是项目中整合了两个Redis的操作实例 ...