Installing and Deploying Kubernetes

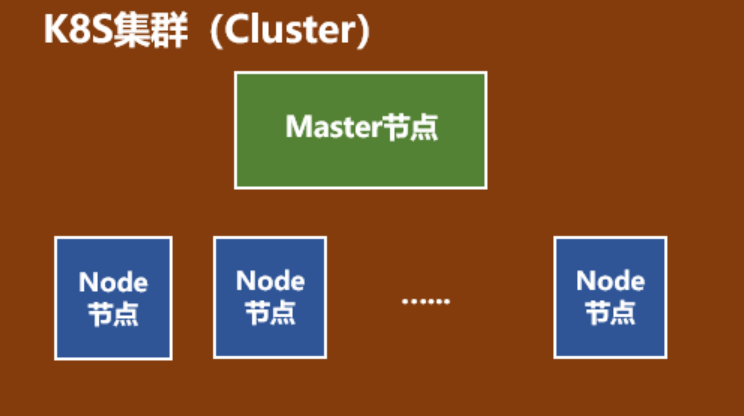

前言:简述kubernetes(k8s)集群

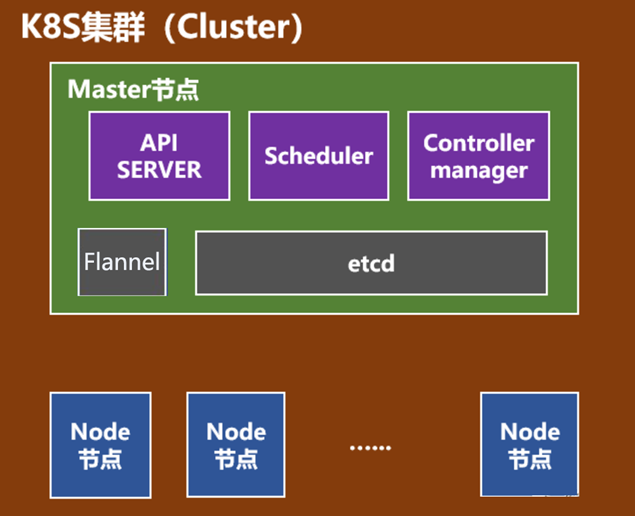

k8s集群基本功能组件由master和node组成。

master节点上主要有kube-apiserver、kube-scheduler、kube-controller-manager组件组成,及Etcd和pod网络( flannel )。

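1. Master node components:

API Server (kube-apiserver): handles interaction with users

The API Server is the front-end interface of k8s. Client tools and the other k8s components use it to manage all cluster resources (it accepts management commands and exposes the command interface to the outside).

Scheduler (kube-scheduler): handles resource scheduling

The scheduler decides which node each pod runs on. When scheduling, it takes into account the cluster topology, the current load on each node, and the application's requirements for high availability, performance and data affinity.

Controller Manager (kube-controller-manager): maintains the cluster state, e.g. failure detection, auto-scaling and rolling updates.

The Controller Manager consists of many controllers, including the replication controller, endpoints controller, namespace controller, serviceaccounts controller and others.

Different controllers manage different resources. For example, the Deployment, StatefulSet and DaemonSet controllers manage the lifecycle of their respective workloads, and the namespace controller manages Namespace resources.

etcd: stores the k8s cluster configuration and the state of all resources (similar to a database)

All node information and configuration data in k8s are stored in etcd. When data changes, etcd's watch mechanism lets the relevant k8s components (via the API Server) learn about it quickly.

Pod network (e.g. flannel): the pod network is responsible for communication between pods

A k8s cluster must have a pod network; flannel is one of the available options.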

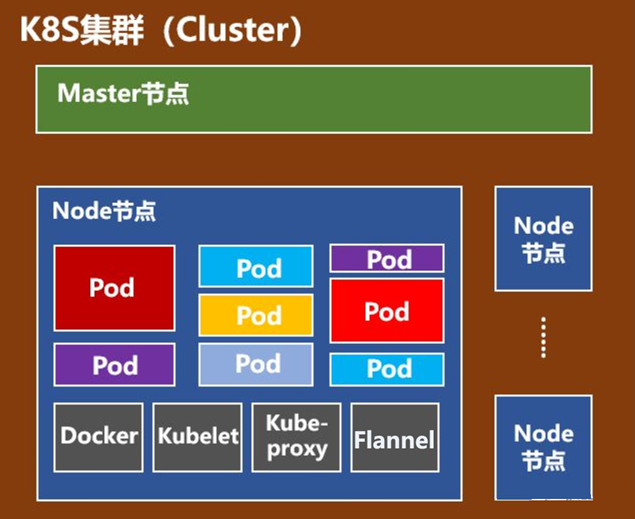

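2. Node components:

kubelet is the node's agent. Once the scheduler decides to run a pod on a node, the pod's configuration is delivered to that node's kubelet, which creates and runs the containers accordingly and reports their status back to the master.

kube-proxy: when a Service receives requests, kube-proxy works with the Service to route traffic from pods to the Service, and from an external NodePort to the Service. A Service logically represents a group of backend Pods, and clients reach those Pods through the Service.

Every node runs kube-proxy, which forwards TCP/UDP traffic addressed to a Service to the backend containers. If there are multiple replicas, kube-proxy load-balances across them.

Pod network

Already described above.

The figure below shows the overall k8s runtime architecture.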

Main text:

I. Preparation before deploying the k8s cluster

1. Plan the environment

Hostname / IP

k8smaster 192.168.217.61

k8snode1 192.168.217.63

k8snode2 192.168.217.64

2. Install Docker on every node before deploying k8s

cd /etc/yum.repos.d/

wget http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install docker-ce -y

mkdir -p /etc/docker

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://68rmyzg7.mirror.aliyuncs.com"]

}

systemctl daemon-reload

systemctl restart docker

systemctl enable docker

docker version

docker info
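The daemon.json above only configures a registry mirror, and later in this article kubeadm warns that Docker uses the "cgroupfs" cgroup driver while "systemd" is recommended. A minimal sketch, assuming you want to follow that recommendation and do so before running kubeadm init, writes daemon.json with both settings in one step; write_daemon_json is a hypothetical helper name and the mirror URL is the one used above.

```shell
#!/usr/bin/env bash
# Sketch: write a Docker daemon.json that keeps the registry mirror used in
# this article and switches the cgroup driver to systemd, as recommended by
# kubeadm's IsDockerSystemdCheck warning.
# write_daemon_json is a hypothetical helper name.
write_daemon_json() {
  local dir="${1:-/etc/docker}"   # target directory; defaults to Docker's config dir
  mkdir -p "$dir"
  cat > "$dir/daemon.json" <<'EOF'
{
  "registry-mirrors": ["https://68rmyzg7.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

Usage (as root): write_daemon_json && systemctl daemon-reload && systemctl restart docker; afterwards docker info | grep -i 'cgroup driver' should report systemd.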

3. Configure the k8s yum repository

vim /etc/yum.repos.d/k8s.repo

[k8s]

name=k8s

enabled=1

gpgcheck=0

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
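Instead of typing the file in vim, the same repository definition can be written with a heredoc. A minimal sketch; write_k8s_repo is a hypothetical helper name, and the file content is exactly the k8s.repo above (including gpgcheck=0, which skips package signature checks).

```shell
#!/usr/bin/env bash
# Sketch: create the k8s yum repo file without an editor. The content mirrors
# the k8s.repo shown above. write_k8s_repo is a hypothetical helper name.
write_k8s_repo() {
  local dir="${1:-/etc/yum.repos.d}"   # yum reads *.repo files from here
  cat > "$dir/k8s.repo" <<'EOF'
[k8s]
name=k8s
enabled=1
gpgcheck=0
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
EOF
}
```

Usage (as root): write_k8s_repo && yum makecache; yum list kubeadm --showduplicates then lists the versions the mirror offers.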

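4. Install the kubelet, kubeadm and kubectl tools

yum install kubelet kubeadm kubectl -y

(This installs the newest version in the repository. The examples below use v1.19.4; to match them, pin the version, e.g. yum install -y kubelet-1.19.4 kubeadm-1.19.4 kubectl-1.19.4.)

The cluster is not configured yet, so do not start kubelet now; only enable it to start at boot: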

systemctl enable kubelet

5. Basic configuration on every k8s node

(1) Edit the hosts file (the entries must match the planned IPs and hostnames)

vim /etc/hosts

127.0.0.1 localhost

192.168.217.61 k8smaster

192.168.217.63 k8snode1

192.168.217.64 k8snode2
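Editing /etc/hosts by hand on three machines is error-prone. A minimal sketch, assuming the IPs and hostnames from the environment plan above, appends each entry only when the hostname is not already present, so it can be re-run safely; add_cluster_hosts is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: add the planned cluster hosts to a hosts file, skipping any hostname
# that is already listed, so the function is safe to run more than once.
# add_cluster_hosts is a hypothetical helper; IPs/names come from the plan.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry ip name
  for entry in "192.168.217.61 k8smaster" \
               "192.168.217.63 k8snode1" \
               "192.168.217.64 k8snode2"; do
    ip="${entry% *}"      # text before the space
    name="${entry#* }"    # text after the space
    # Match the hostname as a whole word (preceded by whitespace).
    if ! grep -Eq "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Usage (as root, on every node): add_cluster_hosts, then ping -c1 k8smaster to confirm name resolution.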

(2) On every node, enable bridge netfilter (so bridged traffic passes through iptables)

vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

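Note: the kernel parameter /proc/sys/net/bridge/bridge-nf-call-iptables only exists once the br_netfilter kernel module is loaded. Installing and starting Docker loads it, which is why Docker must be installed and running first. Then apply the file and verify:

systemctl restart docker

sysctl --system

cat /proc/sys/net/bridge/bridge-nf-call-iptables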

1
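The settings above can also be written in one go. This sketch additionally sets net.ipv4.ip_forward = 1, because a missing ip_forward setting is exactly what makes kubeadm join fail further down in this article; write_k8s_sysctl is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: write the kernel parameters kubeadm's preflight checks expect into a
# single sysctl drop-in file. ip_forward is included because kubeadm join
# refuses to run when /proc/sys/net/ipv4/ip_forward is not 1.
# write_k8s_sysctl is a hypothetical helper name.
write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

Usage (as root): modprobe br_netfilter && write_k8s_sysctl && sysctl --system. To load br_netfilter at every boot, a file under /etc/modules-load.d/ (for example k8s.conf, a name chosen here for illustration) containing the line br_netfilter can be used.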

(3) Disable swap on every node

swapoff -a && sysctl -w vm.swappiness=0

vm.swappiness = 0

To keep swap off after a reboot, edit /etc/fstab and comment out the swap entry

vim /etc/fstab

#UUID=100389a1-8f1b-4b19-baea-523a95849ee1 swap swap defaults 0 0
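Commenting out the swap line can be scripted with sed. A minimal sketch, assuming the usual fstab layout (device, mount point, filesystem type, ...): it prefixes every active line whose third field is swap with #, leaves already-commented lines alone, and keeps a .bak copy; disable_swap_in_fstab is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: comment out every active swap entry (third field == "swap") in an
# fstab-style file. Lines already starting with '#' are not matched.
# -i.bak edits in place and keeps a backup copy next to the file.
# disable_swap_in_fstab is a hypothetical helper name.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  sed -i.bak -E \
    's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$/#\1/' \
    "$fstab"
}
```

Usage (as root): swapoff -a && disable_swap_in_fstab; free -m should then show 0 swap, also after a reboot.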

(4) Disable the firewall and SELinux

vim /etc/selinux/config

SELINUX=disabled

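setenforce 0

(setenforce 0 switches SELinux to permissive mode immediately; SELINUX=disabled in the config file takes effect after the next reboot.)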

iptables -F

systemctl stop firewalld

systemctl disable firewalld
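The SELinux config edit can likewise be done without vim. A minimal sketch; disable_selinux_config is a hypothetical helper name. It rewrites whatever SELINUX= value is present and leaves the SELINUXTYPE= line untouched.

```shell
#!/usr/bin/env bash
# Sketch: set SELINUX=disabled in the SELinux config, whatever the current
# value (enforcing or permissive). The anchored pattern "^SELINUX=" does not
# match the SELINUXTYPE= line. disable_selinux_config is hypothetical.
disable_selinux_config() {
  local conf="${1:-/etc/selinux/config}"
  sed -i -E 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}
```

Usage (as root): disable_selinux_config && setenforce 0; getenforce should then print Permissive until the next reboot.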

Summary: this completes the preparation for deploying the k8s cluster.


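II. Initialize the cluster on the master node

1. List the images needed to install k8s

kubeadm config print init-defaults

This prints the default init configuration, mainly the image repository and the version.

kubeadm config images list --image-repository registry.aliyuncs.com/google_containers

By default images are pulled from k8s.gcr.io, which is not reachable from mainland China, so specify the Aliyun mirror and check that all the required images are available there.

Example: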

[root@k8smaster ~]# kubeadm config images list --image-repository registry.aliyuncs.com/google_containers

W1203 10:42:44.376170 5104 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

registry.aliyuncs.com/google_containers/kube-apiserver:v1.19.4

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.19.4

registry.aliyuncs.com/google_containers/kube-scheduler:v1.19.4

registry.aliyuncs.com/google_containers/kube-proxy:v1.19.4

registry.aliyuncs.com/google_containers/pause:3.2

registry.aliyuncs.com/google_containers/etcd:3.4.13-0

registry.aliyuncs.com/google_containers/coredns:1.7.0
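Rather than checking the list by eye, the output can be compared against the components kubeadm needs. A sketch, assuming the seven images shown above for v1.19.4 (other versions may differ); check_image_list is a hypothetical helper name. It reads the list on stdin and prints each missing component.

```shell
#!/usr/bin/env bash
# Sketch: read "kubeadm config images list" output on stdin and report any
# expected control-plane image that is missing. Returns 1 if any is missing.
# The component names are those listed above for v1.19.4.
# check_image_list is a hypothetical helper name.
check_image_list() {
  local listing missing=0 name
  listing="$(cat)"
  for name in kube-apiserver kube-controller-manager kube-scheduler \
              kube-proxy pause etcd coredns; do
    # Match "/<name>:" so one component name cannot match another.
    if ! grep -q "/${name}:" <<<"$listing"; then
      echo "missing: ${name}"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: kubeadm config images list --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.19.4 2>/dev/null | check_image_list. If nothing is missing, kubeadm config images pull with the same two flags pre-pulls the images, as the init log below also suggests.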

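2. Initialize the k8s cluster

Command:

kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.19.4 --apiserver-advertise-address=192.168.217.61 --pod-network-cidr=10.244.0.0/16

Options:

--image-repository: the registry to pull images from (available since v1.13). The default is k8s.gcr.io; here it is set to the Aliyun mirror in China.

--kubernetes-version: the Kubernetes version. By default kubeadm looks up the latest stable version online; specifying a fixed version (v1.19.4) skips that network request.

--apiserver-advertise-address: the master network interface (IP) used to communicate with the other cluster nodes. If the master has several network interfaces, set it explicitly; otherwise kubeadm picks the interface that has the default gateway. Here it is the master's own IP.

--pod-network-cidr: the Pod network range. Kubernetes supports many network solutions; 10.244.0.0/16 is used here because it is the default Network in flannel's kube-flannel.yml. If you choose another CIDR, change net-conf.json in kube-flannel.yml to match.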
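The same settings can be kept in a kubeadm configuration file, which is easier to review and reuse than a long command line. A minimal sketch, assuming the kubeadm.k8s.io/v1beta2 config API used by kubeadm 1.19; write_kubeadm_config is a hypothetical helper name and the values are the ones from the command above.

```shell
#!/usr/bin/env bash
# Sketch: write a kubeadm config file equivalent to the kubeadm init flags
# above (kubeadm.k8s.io/v1beta2 is the config API of kubeadm 1.19).
# write_kubeadm_config is a hypothetical helper name.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  local advertise="${2:-192.168.217.61}"   # --apiserver-advertise-address
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${advertise}
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.19.4
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
EOF
}
```

Usage: write_kubeadm_config && kubeadm init --config kubeadm-config.yaml. kubeadm refuses to mix --config with most of the flags above, so keep the settings in one place only.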

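After running the command, wait for kubeadm to finish initializing. On success it prints:

Your Kubernetes control-plane has initialized successfully!

followed by the next steps. If initialization fails, clean up with the following commands and then initialize again: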

# kubeadm reset

# iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

# ifconfig cni0 down

# ip link delete cni0

# ifconfig flannel.1 down

# ip link delete flannel.1

# rm -rf /var/lib/cni/

# rm -rf /var/lib/etcd/*

In short, whenever initialization fails, run kubeadm reset (plus the cleanup above) before initializing again.
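Run one by one, the cleanup commands above print errors for interfaces that do not exist yet (for example when init failed before flannel was installed), and ifconfig is not installed on minimal systems. A minimal sketch that runs every step and only warns on failure, using ip link instead of ifconfig and kubeadm reset -f to skip the confirmation prompt; it also removes /etc/cni/net.d, which kubeadm reset itself reports it does not clean. reset_k8s_node is a hypothetical helper name; with DRY_RUN=1 it only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: the reset/cleanup steps above as one function that keeps going when
# a step fails (e.g. cni0 or flannel.1 does not exist).
# DRY_RUN=1 prints the commands instead of running them.
# reset_k8s_node is a hypothetical helper name.
reset_k8s_node() {
  local cmds=(
    "kubeadm reset -f"
    "iptables -F"
    "iptables -t nat -F"
    "iptables -t mangle -F"
    "iptables -X"
    "ip link set cni0 down"
    "ip link delete cni0"
    "ip link set flannel.1 down"
    "ip link delete flannel.1"
    "rm -rf /var/lib/cni/"
    "rm -rf /etc/cni/net.d"
    "rm -rf /var/lib/etcd/*"
  )
  local c
  for c in "${cmds[@]}"; do
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      echo "$c"
    else
      # Unquoted on purpose: each entry is a simple command; the last one
      # relies on glob expansion of /var/lib/etcd/*.
      $c || echo "warning: '$c' failed, continuing" >&2
    fi
  done
}
```

Usage (as root, on the master): DRY_RUN=1 reset_k8s_node to review the steps, then reset_k8s_node.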

Example:

[root@k8smaster ~]# kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version=v1.19.4 --pod-network-cidr=10.244.0.0/16

W1207 15:50:10.490192 2290 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.19.4

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8smaster kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.217.61]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8smaster localhost] and IPs [192.168.217.61 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8smaster localhost] and IPs [192.168.217.61 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 12.009147 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.19" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8smaster as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8smaster as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: wvq4ve.nh8wtthnsr38fija

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.217.61:6443 --token wvq4ve.nh8wtthnsr38fija \

--discovery-token-ca-cert-hash sha256:de6b5ec135657b53f766bddf9e18b47e0cca43339fba8b021ad2b49b1ea7be13

Note: save the kubeadm join command above; it is needed later to add nodes, and its token is valid for 24 hours by default.

Run the commands as instructed (here as root, so sudo is not needed):

[root@k8smaster ~]# mkdir -p $HOME/.kube

[root@k8smaster ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8smaster ~]# chown $(id -u):$(id -g) $HOME/.kube/config

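For convenience, enable kubectl command auto-completion (this relies on the bash-completion package being installed):

echo "source <(kubectl completion bash)" >> ~/.bashrc

Verify the master node: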

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster NotReady master 4m9s v1.19.4

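3. Install the pod network

For the Kubernetes cluster to work, a Pod network must be installed; otherwise Pods cannot communicate with each other.

Install the flannel network on the master node:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml (the URL may not be reachable; alternatively, download kube-flannel.yml and apply it locally)

Upload kube-flannel.yml to the master (for example with rz from the lrzsz package), then apply it:

kubectl apply -f kube-flannel.yml

Once that is done, restart kubelet on every node:

systemctl restart kubelet

After the images have been pulled, the node status changes to Ready. Check with: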

kubectl get nodes

Example:

NAME STATUS ROLES AGE VERSION

k8smaster Ready master 64m v1.19.4

k8snode1 Ready <none> 18m v1.19.4

k8snode2 Ready <none> 13m v1.19.4
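To check the node list in a script, the STATUS column can be tested directly. A minimal sketch; not_ready_nodes is a hypothetical helper name that reads kubectl get nodes output on stdin, prints every node whose status is not exactly Ready, and returns non-zero if it finds any.

```shell
#!/usr/bin/env bash
# Sketch: read "kubectl get nodes" output on stdin (header line first),
# print the name of every node whose STATUS column is not exactly "Ready",
# and exit non-zero if there is at least one such node.
# not_ready_nodes is a hypothetical helper name.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: kubectl get nodes | not_ready_nodes. A cordoned node shows Ready,SchedulingDisabled and is reported too. kubectl can also block until the nodes are ready: kubectl wait --for=condition=Ready nodes --all --timeout=300s.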

Verify the pods:

kubectl get pods -n kube-system

Example:

NAME READY STATUS RESTARTS AGE

coredns-6d56c8448f-bdkb7 1/1 Running 0 94m

coredns-6d56c8448f-z4nlq 1/1 Running 0 94m

etcd-k8smaster 1/1 Running 0 94m

kube-apiserver-k8smaster 1/1 Running 0 94m

kube-controller-manager-k8smaster 1/1 Running 0 73m

kube-flannel-ds-amd64-9rj9n 1/1 Running 0 32m

kube-flannel-ds-amd64-lzklk 1/1 Running 0 32m

kube-flannel-ds-amd64-mt5q4 1/1 Running 0 32m

kube-proxy-55wch 1/1 Running 0 43m

kube-proxy-k6dg7 1/1 Running 0 94m

kube-proxy-t98kx 1/1 Running 0 48m

kube-scheduler-k8smaster 1/1 Running 0 72m

If the flannel network is not installed, the STATUS stays NotReady:

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster NotReady master 18m v1.19.4

kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}

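This small issue needs a fix (versions after 1.18.5 are affected). The components themselves are running: kubeadm starts kube-controller-manager and kube-scheduler with --port=0, which disables the insecure HTTP ports 10252 and 10251 that kubectl get cs probes, so the check reports Unhealthy. Removing --port=0 from the static Pod manifests re-enables those ports: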

vim /etc/kubernetes/manifests/kube-controller-manager.yaml

Delete the line: - --port=0

vim /etc/kubernetes/manifests/kube-scheduler.yaml

Delete the line: - --port=0
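The two manual edits can be scripted with sed. A minimal sketch; remove_port_zero is a hypothetical helper name that deletes the "- --port=0" argument line from each file passed to it. If you back the manifests up first, keep the copies outside /etc/kubernetes/manifests, because kubelet may try to run any manifest file it finds in that directory.

```shell
#!/usr/bin/env bash
# Sketch: delete the "- --port=0" argument line from each static Pod manifest
# given as an argument. Lines such as "- --secure-port=10259" are kept.
# No backup is written here, so nothing extra lands in the manifests dir.
# remove_port_zero is a hypothetical helper name.
remove_port_zero() {
  local f
  for f in "$@"; do
    sed -i -E '/^[[:space:]]*- --port=0[[:space:]]*$/d' "$f"
  done
}
```

Usage (as root, on the master): remove_port_zero /etc/kubernetes/manifests/kube-controller-manager.yaml /etc/kubernetes/manifests/kube-scheduler.yaml.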

kubelet recreates the static Pods automatically once the manifests change. Check again:

kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

4. Join the other nodes to the cluster:

Example:

[root@k8snode1 ~]# kubeadm join 192.168.217.61:6443 --token wvq4ve.nh8wtthnsr38fija \

> --discovery-token-ca-cert-hash sha256:de6b5ec135657b53f766bddf9e18b47e0cca43339fba8b021ad2b49b1ea7be13

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

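The preflight check reports that /proc/sys/net/ipv4/ip_forward is not set to 1 (IP forwarding is disabled). Enable it:

echo "1" > /proc/sys/net/ipv4/ip_forward

This does not survive a reboot; to make it permanent, add net.ipv4.ip_forward = 1 to /etc/sysctl.d/k8s.conf and run sysctl --system.

Also note: the --token printed by kubeadm init is valid for 24 hours, so by the time you run kubeadm join on a node it may have expired. Generate a new join command on the master (--ttl 0 creates a token that never expires; omit it to keep the default 24-hour lifetime):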

kubeadm token create --ttl 0 --print-join-command

W1207 16:37:39.128892 15664 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

kubeadm join 192.168.217.61:6443 --token smfwoy.yix3lbaz436yltw5 --discovery-token-ca-cert-hash sha256:de6b5ec135657b53f766bddf9e18b47e0cca43339fba8b021ad2b49b1ea7be13
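If only the --discovery-token-ca-cert-hash value is needed (for example to combine it with a token shown by kubeadm token list), it can be computed from the cluster CA certificate with the openssl pipeline given in the kubeadm documentation. A sketch assuming an RSA CA key (kubeadm's default); the wrapper name ca_cert_hash is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compute the value for --discovery-token-ca-cert-hash, i.e. the
# SHA-256 of the DER-encoded public key of the cluster CA certificate.
# Assumes an RSA CA key. ca_cert_hash is a hypothetical helper name.
ca_cert_hash() {
  local crt="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the hex digest after "...= "
}
```

Usage (on the master): echo "sha256:$(ca_cert_hash)". The result should equal the hash in the join command above.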

Run the new command on the node again:

kubeadm join 192.168.217.61:6443 --token smfwoy.yix3lbaz436yltw5 --discovery-token-ca-cert-hash sha256:de6b5ec135657b53f766bddf9e18b47e0cca43339fba8b021ad2b49b1ea7be13

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Once the nodes have joined, run kubectl get nodes on the master to check their status.

Example:

[root@k8smaster app]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster Ready master 64m v1.19.4

k8snode1 Ready <none> 18m v1.19.4

k8snode2 Ready <none> 13m v1.19.4

[root@k8smaster app]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6d56c8448f-bdkb7 1/1 Running 0 94m

coredns-6d56c8448f-z4nlq 1/1 Running 0 94m

etcd-k8smaster 1/1 Running 0 94m

kube-apiserver-k8smaster 1/1 Running 0 94m

kube-controller-manager-k8smaster 1/1 Running 0 73m

kube-flannel-ds-amd64-9rj9n 1/1 Running 0 32m

kube-flannel-ds-amd64-lzklk 1/1 Running 0 32m

kube-flannel-ds-amd64-mt5q4 1/1 Running 0 32m

kube-proxy-55wch 1/1 Running 0 43m

kube-proxy-k6dg7 1/1 Running 0 94m

kube-proxy-t98kx 1/1 Running 0 48m

kube-scheduler-k8smaster 1/1 Running 0 72m

[root@k8smaster ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-6d56c8448f-bdkb7 1/1 Running 0 18h 10.244.2.2 k8snode2 <none> <none>

coredns-6d56c8448f-z4nlq 1/1 Running 0 18h 10.244.2.3 k8snode2 <none> <none>

etcd-k8smaster 1/1 Running 0 18h 192.168.217.61 k8smaster <none> <none>

kube-apiserver-k8smaster 1/1 Running 0 18h 192.168.217.61 k8smaster <none> <none>

kube-controller-manager-k8smaster 1/1 Running 1 18h 192.168.217.61 k8smaster <none> <none>

kube-flannel-ds-amd64-9rj9n 1/1 Running 0 17h 192.168.217.63 k8snode1 <none> <none>

kube-flannel-ds-amd64-lzklk 1/1 Running 0 17h 192.168.217.64 k8snode2 <none> <none>

kube-flannel-ds-amd64-mt5q4 1/1 Running 0 17h 192.168.217.61 k8smaster <none> <none>

kube-proxy-55wch 1/1 Running 0 17h 192.168.217.64 k8snode2 <none> <none>

kube-proxy-k6dg7 1/1 Running 0 18h 192.168.217.61 k8smaster <none> <none>

kube-proxy-t98kx 1/1 Running 0 17h 192.168.217.63 k8snode1 <none> <none>

kube-scheduler-k8smaster 1/1 Running 1 18h 192.168.217.61 k8smaster <none> <none>

5. Remove a node

Step 1: on the master, drain the node to put it into maintenance mode (k8snode1 as an example):

[root@k8smaster ~]# kubectl drain k8snode1 --delete-local-data --force --ignore-daemonsets

node/k8snode1 cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-flannel-ds-amd64-9rj9n, kube-system/kube-proxy-t98kx

node/k8snode1 drained

Step 2: delete the node:

[root@k8smaster ~]# kubectl delete node k8snode1

node "k8snode1" deleted

Step 3: check the cluster nodes:

[root@k8smaster ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster Ready master 19h v1.19.4

k8snode2 Ready <none> 18h v1.19.4

k8snode1 has been removed.
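The drain-and-delete sequence can be wrapped in one function so the node name is typed only once. A minimal sketch; remove_k8s_node is a hypothetical helper name and the flags are the ones used above (newer kubectl releases renamed --delete-local-data to --delete-emptydir-data). With DRY_RUN=1 it only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: drain a node (evicting its pods, ignoring DaemonSet pods) and then
# delete it from the cluster. DRY_RUN=1 prints the kubectl commands instead.
# remove_k8s_node is a hypothetical helper name.
remove_k8s_node() {
  local node="${1:-}"
  if [[ -z "$node" ]]; then
    echo "usage: remove_k8s_node NODE_NAME" >&2
    return 2
  fi
  local prefix=""
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    prefix="echo"   # print instead of execute
  fi
  # Stop if the drain fails, so a node with running pods is not deleted.
  $prefix kubectl drain "$node" --delete-local-data --force --ignore-daemonsets || return 1
  $prefix kubectl delete node "$node"
}
```

Usage (on the master): DRY_RUN=1 remove_k8s_node k8snode1 to review, then remove_k8s_node k8snode1.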

To add this node back later, run the following on that node:

Step 1: stop kubelet

[root@k8snode1 ~]# systemctl stop kubelet

Step 2: clean up the files created by kubeadm

[root@k8snode1 ~]# kubeadm reset

[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.

[reset] Are you sure you want to proceed? [y/N]: y

[preflight] Running pre-flight checks

W1208 10:54:38.764013 50664 removeetcdmember.go:79] [reset] No kubeadm config, using etcd pod spec to get data directory

[reset] No etcd config found. Assuming external etcd

[reset] Please, manually reset etcd to prevent further issues

[reset] Stopping the kubelet service

[reset] Unmounting mounted directories in "/var/lib/kubelet"

[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]

[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]

[reset] Deleting contents of stateful directories: [/var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]

The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d

The reset process does not reset or clean up iptables rules or IPVS tables.

If you wish to reset iptables, you must do so manually by using the "iptables" command.

If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)

to reset your system's IPVS tables.

The reset process does not clean your kubeconfig files and you must remove them manually.

Please, check the contents of the $HOME/.kube/config file.

[root@k8snode1 ~]# rm -rf /etc/cni/net.d

[root@k8snode1 ~]# iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

Step 3: join the node

[root@k8snode1 ~]# kubeadm join 192.168.217.61:6443 --token smfwoy.yix3lbaz436yltw5 --discovery-token-ca-cert-hash sha256:de6b5ec135657b53f766bddf9e18b47e0cca43339fba8b021ad2b49b1ea7be13

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Step 4: check the cluster nodes on the master:

[root@k8smaster ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster Ready master 19h v1.19.4

k8snode1 Ready <none> 15s v1.19.4

k8snode2 Ready <none> 18h v1.19.4

------------------------------

Summary:

How kubelet, kubeadm and kubectl differ

kubelet runs on every node and starts pods and containers. It runs as a system service managed by systemd.

systemctl start kubelet

systemctl enable kubelet

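kubeadm is the tool for building and managing a k8s cluster: initializing it, joining nodes, listing the required k8s images, resetting, and so on.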

kubeadm init ...

kubeadm config ...

kubeadm join ...

kubeadm token ...

kubeadm reset ...

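kubectl is the command-line client: with it you deploy and manage applications, inspect resources, and create, delete and update components.

kubectl get nodes

kubectl get pod --all-namespaces # list the pods in all namespaces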

kubectl apply ...

kubectl drain ...

kubectl delete ...
