Spark教程——(1)安装Spark

Cloudera Manager介绍

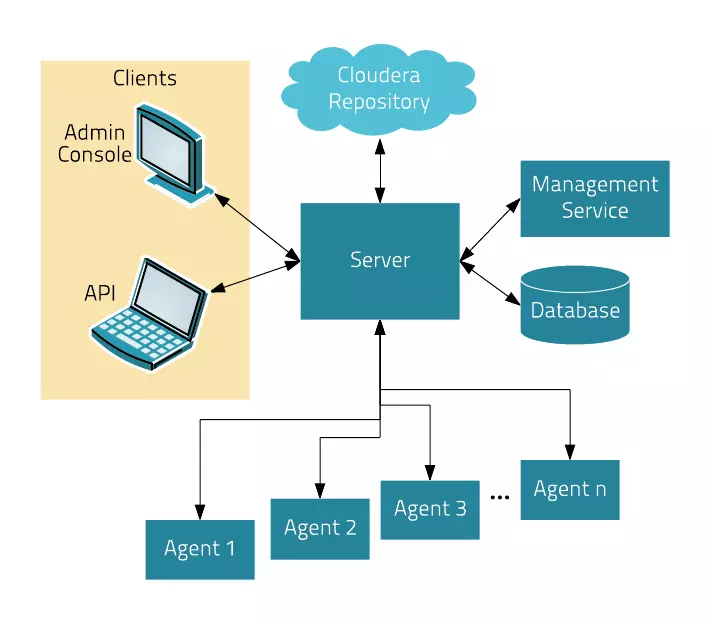

CM技术架构

Management Service:由一组执行各种监控,警报和报告功能角色的服务。

Database:存储配置和监视信息。通常情况下,多个逻辑数据库在一个或多个数据库服务器上运行。例如,Cloudera的管理服务器和监控角色使用不同的逻辑数据库。

Cloudera Repository:软件由Cloudera 管理分布存储库。

Clients:是用于与服务器进行交互的接口:

Admin Console :基于Web的用户界面与管理员管理集群和Cloudera管理。

API :与开发人员创建自定义的Cloudera Manager应用程序的API。

CM四大功能

管理:对集群进行管理,如添加、删除节点等操作。

监控:监控集群的健康情况,对设置的各种指标和系统运行情况进行全面监控。

诊断:对集群出现的问题进行诊断,对出现的问题给出建议解决方案。

集成:对hadoop的多组件进行整合。

需要安装的组件

Cloudera Manager

CDH

JDK

Mysql(主节点) + JDBC

需要注意的是CDH的版本需要等于或者小于CM的版本

相关的设置

主机名和hosts文件

关闭防火墙

ssh无密码登陆

配置NTP服务

关闭SElinux状态

配置集群

配置数据库

一些安装过程

主节点安装cloudera manager

把我们下载好的cloudera-manager-*.tar.gz包和mysql驱动包mysql-connector-java-*-bin.jar放到主节点cm0的/opt中。

Cloudera Manager建立数据库

使用命令 cp mysql-connector-java-5.1.40-bin.jar /opt/cm-5.8.2/share/cmf/lib/ 把mysql-connector-java-5.1.40-bin.jar放到/opt/cm-5.8.2/share/cmf/lib/中。

使用命令 /opt/cm-5.8.2/share/cmf/schema/scm_prepare_database.sh mysql cm -h cm0 -u root -p 123456 --scm-host cm0 scm scm scm 在主节点初始化CM5的数据库。

实际位置:/usr/share/cmf/schema/scm_prepare_database.sh

Agent配置

使用命令 vim /opt/cm-5.8.2/etc/cloudera-scm-agent/config.ini 主节点修改agent配置文件。

在主节点cm0用命令 scp -r /opt/cm-5.8.2 root@cm1:/opt/ 同步Agent到其他所有节点。

实际位置:/etc/cloudera-scm-agent/config.ini

在所有节点创建cloudera-scm用户

使用命令 useradd --system --home=/opt/cm-5.8.2/run/cloudera-scm-server/ --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

启动cm和agent

主节点cm0通过命令 /opt/cm-5.8.2/etc/init.d/cloudera-scm-server start 启动服务端。

所有节点通过命令 /opt/cm-5.8.2/etc/init.d/cloudera-scm-agent start 启动Agent服务。(所有节点都要启动Agent服务,包括服务端)

Cloudera Manager Server和Agent都启动以后,就可以进行尝试访问了。http://master:7180/cmf/login

实际位置:/etc/rc.d/init.d/cloudera-scm-server 和 /etc/rc.d/init.d/cloudera-scm-agent

补充:/etc/init.d 是 /etc/rc.d/init.d 的软链接(soft link)。/etc/init.d里的shell脚本能够响应start,stop,restart,reload命令来管理某个具体的应用。比如经常看到的命令: /etc/init.d/networking start 这些脚本也可被其他trigger直接激活执行,这些trigger被软连接在/etc/rcN.d/中。这些原理似乎可以用来写daemon程序,让某些程序在开关机时运行。

CDH的安装和集群配置

安装parcel,安装过程中有什么问题,可以用 /opt/cm-5.8.2/etc/init.d/cloudera-scm-agent status , /opt/cm-5.8.2/etc/init.d/cloudera-scm-server status 查看服务器客户端状态。

也可以通过 /var/log/cloudera-scm-server/cloudera-scm-server.log , /var/log/cloudera-scm-agent/cloudera-scm-agent.log 查看日志。

如果上面的路径找不到则在日志文件夹"/opt/cm-5.8.2/log"查看日志,里面包含server和agent的log,使用命令如下:

tail -f /opt/cm-5.8.2/log/cloudera-scm-server/cloudera-scm-server.log

tail -f /opt/cm-5.8.2/log/cloudera-scm-agent/cloudera-scm-agent.log

实际位置: /var/log/cloudera-scm-server/cloudera-scm-server.log 和 /var/log/cloudera-scm-agent/cloudera-scm-agent.log

遇到的一些问题

问题一:

- [root@node1 ~]# spark-shell

- Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/FSDataInputStream

- at org.apache.spark.deploy.SparkSubmitArguments$$anonfun$mergeDefaultSparkProperties$.apply(SparkSubmitArguments.scala:)

- at org.apache.spark.deploy.SparkSubmitArguments$$anonfun$mergeDefaultSparkProperties$.apply(SparkSubmitArguments.scala:)

- at scala.Option.getOrElse(Option.scala:)

- at org.apache.spark.deploy.SparkSubmitArguments.mergeDefaultSparkProperties(SparkSubmitArguments.scala:)

- at org.apache.spark.deploy.SparkSubmitArguments.<init>(SparkSubmitArguments.scala:)

- at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:)

- at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

- Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.fs.FSDataInputStream

- at java.net.URLClassLoader.findClass(URLClassLoader.java:)

- at java.lang.ClassLoader.loadClass(ClassLoader.java:)

- at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:)

- at java.lang.ClassLoader.loadClass(ClassLoader.java:)

- ... more

查找spark-env.sh文件的位置:

- [root@node1 /]# find / -name spark-env.sh

- ’: No such file or directory

- /run/cloudera-scm-agent/process/-spark2_on_yarn-SPARK2_YARN_HISTORY_SERVER/aux/client/spark-env.sh

- /run/cloudera-scm-agent/process/-spark2_on_yarn-SPARK2_YARN_HISTORY_SERVER/spark2-conf/spark-env.sh

- ……

- /run/cloudera-scm-agent/process/-spark_on_yarn-SPARK_YARN_HISTORY_SERVER-SparkUploadJarCommand/aux/client/spark-env.sh

- /run/cloudera-scm-agent/process/-spark_on_yarn-SPARK_YARN_HISTORY_SERVER-SparkUploadJarCommand/spark-conf/spark-env.sh

- /gvfs’: Permission denied

- /etc/spark/conf.cloudera.spark_on_yarn/spark-env.sh

- /etc/spark2/conf.cloudera.spark2_on_yarn/spark-env.sh

- /opt/cloudera/parcels/CDH--.cdh5./etc/spark/conf.dist/spark-env.sh

打开 /etc/spark2/conf.cloudera.spark2_on_yarn/spark-env.sh ,查看到一些内容:

- export SPARK_HOME=/opt/cloudera/parcels/SPARK2-.cloudera4-.cdh5./lib/spark2

- export HADOOP_HOME=/opt/cloudera/parcels/CDH--.cdh5./lib/hadoop

- export SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:$(paste -sd: "$SELF/classpath.txt")"

并在文件的最后追加内容:

- export SPARK_DIST_CLASSPATH=$(hadoop classpath)

问题没有解决!

打开 /opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/etc/spark/conf.dist/spark-env.sh ,查看到一些内容:

- export STANDALONE_SPARK_MASTER_HOST=`hostname`

- export SPARK_MASTER_IP=$STANDALONE_SPARK_MASTER_HOST

- export SPARK_MASTER_PORT=

- export SPARK_WORKER_PORT=

- export SPARK_WORKER_DIR=/var/run/spark/work

- export SPARK_LOG_DIR=/var/log/spark

- export SPARK_PID_DIR='/var/run/spark/'

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:$SPARK_LIBRARY_PATH/spark-assembly.jar"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/hadoop/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/hadoop/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/hadoop-hdfs/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/hadoop-hdfs/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/hadoop-mapreduce/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/hadoop-mapreduce/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/hadoop-yarn/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/hadoop-yarn/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/hive/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/flume-ng/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/parquet/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/usr/lib/avro/*"

- 在 /etc/spark2/conf.cloudera.spark2_on_yarn/spark-env.sh 文件的末尾追加以下内容:

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/spark/lib/spark-assembly.jar"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-hdfs/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-hdfs/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-mapreduce/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-mapreduce/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-yarn/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-yarn/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hive/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/flume-ng/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/parquet/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/avro/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/jars/*"

- export SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/jars/*"

问题依然没有解决!

在 /opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/etc/spark/conf.dist/spark-env.sh 文件的末尾追加以下内容:

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/spark/lib/spark-assembly.jar"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-hdfs/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-hdfs/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-mapreduce/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-mapreduce/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-yarn/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hadoop-yarn/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/hive/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/flume-ng/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/parquet/lib/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/lib/avro/*"

- SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/jars/*"

- export SPARK_DIST_CLASSPATH="$SPARK_DIST_CLASSPATH:/opt/cloudera/parcels/CDH-5.14.2-1.cdh5.14.2.p0.3/jars/*"

- 问题解决!执行命令 spark-shell ,返回信息如下:

- [root@node1 ~]# spark-shell

- Setting default log level to "WARN".

- To adjust logging level use sc.setLogLevel(newLevel).

- SLF4J: Class path contains multiple SLF4J bindings.

- SLF4J: Found binding -.cdh5./jars/slf4j-log4j12-.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: Found binding -.cdh5./jars/avro-tools--cdh5.14.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: Found binding -.cdh5./jars/pig--cdh5.14.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: Found binding -.cdh5./jars/slf4j-simple-.jar!/org/slf4j/impl/StaticLoggerBinder.class]

- SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

- SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

- Welcome to

- ____ __

- / __/__ ___ _____/ /__

- _\ \/ _ \/ _ `/ __/ '_/

- /___/ .__/\_,_/_/ /_/\_\ version

- /_/

- Using Scala version (Java HotSpot(TM) -Bit Server VM, Java 1.8.0_131)

- Type in expressions to have them evaluated.

- Type :help for more information.

- Spark context available as sc (master = local[*], app ).

- // :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

- SQL context available as sqlContext.

- scala>

- 执行Spark自带的案例程序:

- spark-submit --class org.apache.spark.examples.SparkPi --executor-memory 500m --total-executor-cores /opt/cloudera/parcels/SPARK2-.cloudera4-.cdh5./lib/spark2/examples/jars/spark-examples_2.-.cloudera4.jar

返回信息如下:

- [root@node1 ~]# spark-submit --class org.apache.spark.examples.SparkPi --executor-memory 500m --total-executor-cores /opt/cloudera/parcels/SPARK2-.cloudera4-.cdh5./lib/spark2/examples/jars/spark-examples_2.-.cloudera4.jar

- SLF4J: Class path contains multiple SLF4J bindings.

- ……

- // :: INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@10.200.101.131:38370]

- // :: INFO ui.SparkUI: Started SparkUI at http://10.200.101.131:4040

- // :: INFO spark.SparkContext: Added JAR .cloudera4-.cdh5./lib/spark2/examples/jars/spark-examples_2.-.cloudera4.jar at spark://10.200.101.131:37025/jars/spark-examples_2.11-2.1.0.cloudera4.jar with timestamp 1556186427964

- // :: INFO scheduler.DAGScheduler: Got job (reduce at SparkPi.scala:) with output partitions

- // :: INFO scheduler.DAGScheduler: Final stage: ResultStage (reduce at SparkPi.scala:)

- // :: INFO executor.Executor: Fetching spark://10.200.101.131:37025/jars/spark-examples_2.11-2.1.0.cloudera4.jar with timestamp 1556186427964

- // :: INFO util.Utils: Fetching spark://10.200.101.131:37025/jars/spark-examples_2.11-2.1.0.cloudera4.jar to /tmp/spark-c8bb0344-ce1a-4acc-98d8-dfdebd6b95d6/userFiles-c39c4a70-c9b0-40f7-b48f-cf0f4d46042e/fetchFileTemp8308576688554785370.tmp

- // :: INFO executor.Executor: Adding -.cloudera4.jar to class loader

- ……

- Pi is roughly 3.134555672778364

- ……

- // :: INFO ui.SparkUI: Stopped Spark web UI at http://10.200.101.131:4040

- // :: INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

- // :: INFO storage.MemoryStore: MemoryStore cleared

- // :: INFO storage.BlockManager: BlockManager stopped

- // :: INFO storage.BlockManagerMaster: BlockManagerMaster stopped

- // :: INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

- // :: INFO spark.SparkContext: Successfully stopped SparkContext

- // :: INFO util.ShutdownHookManager: Shutdown hook called

- // :: INFO util.ShutdownHookManager: Deleting directory /tmp/spark-c8bb0344-ce1a-4acc-98d8-dfdebd6b95d6

从上面的信息可以看出,案例程序的运算结果为“Pi is roughly 3.134555672778364”

参考:

Spark教程——(1)安装Spark的更多相关文章

- Ubuntu 14.04 LTS 安装 spark 1.6.0 (伪分布式)-26号开始

需要下载的软件: 1.hadoop-2.6.4.tar.gz 下载网址:http://hadoop.apache.org/releases.html 2.scala-2.11.7.tgz 下载网址:h ...

- Spark学习笔记——安装和WordCount

1.去清华的镜像站点下载文件spark-2.1.0-bin-without-hadoop.tgz,不要下spark-2.1.0-bin-hadoop2.7.tgz 2.把文件解压到/usr/local ...

- CentOS6.5 安装Spark集群

一.安装依赖软件Scala(所有节点) 1.下载Scala:http://www.scala-lang.org/files/archive/scala-2.10.4.tgz 2.解压: [root@H ...

- RedHat6.5安装Spark单机

版本号: RedHat6.5 RHEL 6.5系统安装配置图解教程(rhel-server-6.5) JDK1.8 http://blog.csdn.net/chongxin1/arti ...

- RedHat6.5安装Spark集群

版本号: RedHat6.5 RHEL 6.5系统安装配置图解教程(rhel-server-6.5) JDK1.8 http://blog.csdn.net/chongxin1/arti ...

- [转] Spark快速入门指南 – Spark安装与基础使用

[From] https://blog.csdn.net/w405722907/article/details/77943331 Spark快速入门指南 – Spark安装与基础使用 2017年09月 ...

- spark教程(九)-操作数据库

数据库也是 spark 数据源创建 df 的一种方式,因为比较重要,所以单独算一节. 本文以 postgres 为例 安装 JDBC 首先需要 安装 postgres 的客户端驱动,即 JDBC 驱动 ...

- 学习记录(安装spark)

根据林子雨老师提供的教程安装spark,用的是网盘里下载的课程软件 将文件通过ftp传到ubantu中 根据教程修改配置文件,并成功安装spark 在修改配置文件的时候出现了疏忽,导致找不到该文件,最 ...

- 2.安装Spark与Python练习

一.安装Spark <Spark2.4.0入门:Spark的安装和使用> 博客地址:http://dblab.xmu.edu.cn/blog/1307-2/ 1.1 基础环境 1.1.1 ...

- 安装spark ha集群

安装spark ha集群 1.默认安装好hadoop+zookeeper 2.安装scala 1.解压安装包 tar zxvf scala-2.11.7.tgz 2.配置环境变量 vim /etc/p ...

随机推荐

- 微信公众平台接口获取时间戳为10位,java开发需转为13位

问题1:为什么会生成13位的时间戳,13位的时间戳和10时间戳分别是怎么来的 ? java的date默认精度是毫秒,也就是说生成的时间戳就是13位的,而像c++或者php生成的时间戳默认就是10位的, ...

- Spring 的 Bean 生命周期,11 张高清流程图及代码,深度解析

在网上已经有跟多Bean的生命周期的博客,但是很多都是基于比较老的版本了,最近吧整个流程化成了一个流程图.待会儿使用流程图,说明以及代码的形式来说明整个声明周期的流程.注意因为代码比较多,这里的流程图 ...

- CSS样式的引入&区别&权重&CSS层叠性&CSS样式的来源

CSS样式的引入: 内部样式: 内部样式:写在当前页面style标签中的样式 内联样式:写在style属性中的样式 外部样式: link标签引入的CSS文件 @import引入的CSS文件,需要写在c ...

- Hibernate学习过程出现的问题

1 核心配置文件hibernate.cfg.xml添加了约束但是无法自动获取属性值 解决方案:手动将DTD文件导入 步骤:倒开Eclipse,找到[window]->[preference]- ...

- 洛谷P1301 魔鬼之城 题解

想找原题请点击这里:传送门 题目描述 在一个被分割为N*M个正方形房间的矩形魔鬼之城中,一个探险者必须遵循下列规则才能跳跃行动.他必须从(, )进入,从(N, M)走出:在每一房间的墙壁上都写了一个魔 ...

- mysql服务删除成功,依然存在

重启电脑, 这可能是缓存, mysqld remove 删除后,偶尔,mysql服务依然存在,重启电脑,解决,

- ssh paramiko && subprocess

subprocess: #!/usr/bin/python3 import paramiko import os import sys import subprocess curPath = os.p ...

- Steam 游戏 《The Vagrant(流浪者)》修改器制作-[先使用CE写,之后有时间的话改用CheatMaker](2020年寒假小目标08)

日期:2020.02.07 博客期:146 星期五 [温馨提示]: 只是想要修改器的网友,可以直接点击此链接下载: 只是想拿CT文件的网友,可以直接点击此链接下载: 没有博客园账号的网友,可以将页面下 ...

- 开发中,GA、Beta、GA、Trial到底是什么含义

前言 用过maven的都应该知道,创建maven项目时,其版本号默认会以SNAPSHOT结尾,如下: 通过英文很容易就可以知道这是一个快照版本.但是,在开发中,或者使用别的软件的时候,我们常常会见到各 ...

- 吴裕雄--天生自然ORACLE数据库学习笔记:常用SQL*Plus命令

set pause on set pause '按<enter>键继续' select user_id,username,account_status from dba_users; sh ...