Installing a Kubernetes Environment with kubeadm

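kubeadm is billed as a one-command way to install and deploy Kubernetes, and plenty of people have tried it and succeeded without trouble. In my case, though, problems with the operating system led me into quite a few pitfalls.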

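- The advantage of kubeadm is that it configures the necessary services automatically and sets up secure authentication by default. etcd, apiserver, controller-manager, scheduler, and kube-proxy all run as pods rather than operating-system processes, so their state can be monitored continuously and they can be migrated (I am not sure whether migration actually works).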

- Many of the component configurations that come with kubeadm can be used as-is.

- The drawbacks are the lack of a high-availability cluster mode and the fact that it is currently positioned as beta.

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

- Preparation

Disable SELinux

vi /etc/selinux/config

SELINUX=disabled
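Editing the file by hand works fine; the same change can also be scripted. The following is a minimal sketch, assuming the stock CentOS 7 layout in which the file contains a single `SELINUX=` line. The function name `set_selinux_disabled` is made up for illustration.

```shell
# Hypothetical helper: rewrite the SELINUX= line of a config file in place.
# The path is a parameter so the function can be tried on a copy first.
set_selinux_disabled() {
    cfg="$1"
    # Replace whatever mode is configured (enforcing/permissive) with disabled.
    # The pattern requires "=" right after SELINUX, so SELINUXTYPE= is untouched.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage on a real host (as root); the file change takes effect after a reboot:
#   set_selinux_disabled /etc/selinux/config
#   setenforce 0   # puts the running system into permissive mode until then
```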

Turn off firewalld and iptables

systemctl disable firewalld

systemctl stop firewalld

systemctl disable iptables

systemctl stop iptables

Set the hostname first

hostnamectl set-hostname k8s-

Edit the /etc/hosts file

cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

:: localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.105 k8s-

192.168.0.106 k8s-

192.168.0.107 k8s-
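Since the same entries have to be added on every machine, it can help to make the edit repeatable. Below is a small sketch of an idempotent append; `add_host_entry` and the host name `node-a` in the usage line are hypothetical and should be replaced with your own values.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already there.
add_host_entry() {
    hosts_file="$1"
    ip="$2"
    name="$3"
    # -F treats the name literally, -w matches it only as a whole word,
    # so "node1" is not considered present just because "node10" is.
    if ! grep -qwF -- "$name" "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Usage (as root):
#   add_host_entry /etc/hosts 192.168.0.105 node-a
```

Running it twice with the same arguments leaves a single entry in the file.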

Change the network configuration to a static IP, then restart the network service

service network restart

- Installing docker, kubectl, kubelet, and kubeadm

Install docker

yum install docker

Verify with docker version

[root@k8s-master1 ~]# service docker start

Redirecting to /bin/systemctl start docker.service

[root@k8s-master1 ~]# docker version

Client:

Version: 1.12.

API version: 1.24

Package version: docker-1.12.-.git85d7426.el7.centos.x86_64

Go version: go1.8.3

Git commit: 85d7426/1.12.

Built: Tue Oct ::

OS/Arch: linux/amd64

Server:

Version: 1.12.

API version: 1.24

Package version: docker-1.12.-.git85d7426.el7.centos.x86_64

Go version: go1.8.3

Git commit: 85d7426/1.12.

Built: Tue Oct ::

OS/Arch: linux/amd64

Enable start on boot

[root@k8s-master1 ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

[root@k8s-master1 ~]# systemctl start docker

Create the Kubernetes yum repository file

[root@k8s- network-scripts]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=

gpgcheck=

Install kubelet, kubectl, kubernetes-cni, and kubeadm; version 1.7.5 is installed by default

yum install kubectl kubelet kubernetes-cni kubeadm

sysctl net.bridge.bridge-nf-call-iptables=1
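A value set with `sysctl` on the command line is lost on reboot. One way to keep it is a drop-in file under `/etc/sysctl.d`; the sketch below assumes that approach, and the file name `k8s.conf` as well as the function name are arbitrary choices, not something the original setup prescribes.

```shell
# Hypothetical helper: write the bridge setting to a sysctl drop-in file.
write_bridge_sysctl() {
    conf="$1"
    cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Usage (as root):
#   write_bridge_sysctl /etc/sysctl.d/k8s.conf
#   sysctl --system   # reload all sysctl configuration files
```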

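If you need a different version, remove the installed packages with yum remove first.

Edit the kubelet startup configuration file. The main change is setting --cgroup-driver to cgroupfs (just make sure it matches the cgroup driver configured in /usr/lib/systemd/system/docker.service; if the two already match, nothing needs to be changed!)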

[root@k8s- bin]# cat /etc/systemd/system/kubelet.service.d/-kubeadm.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--kubeconfig=/etc/kubernetes/kubelet.conf --require-kubeconfig=true"

Environment="KUBELET_SYSTEM_PODS_ARGS=--pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true"

Environment="KUBELET_NETWORK_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_DNS_ARGS=--cluster-dns=10.96.0.10 --cluster-domain=cluster.local"

Environment="KUBELET_AUTHZ_ARGS=--authorization-mode=Webhook --client-ca-file=/etc/kubernetes/pki/ca.crt"

Environment="KUBELET_CADVISOR_ARGS=--cadvisor-port=0"

Environment="KUBELET_CGROUP_ARGS=--cgroup-driver=cgroupfs"

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_SYSTEM_PODS_ARGS $KUBELET_NETWORK_ARGS $KUBELET_DNS_ARGS $KUBELET_AUTHZ_ARGS $KUBELET_CADVISOR_ARGS $KUBELET_CGROUP_ARGS $KUBELET_EXTRA_ARGS
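To check whether the two cgroup drivers really match before starting anything, the values can be extracted and compared. This is a rough sketch: both function names are invented, and it assumes `docker info` prints a line of the form `Cgroup Driver: <name>`, which may differ between Docker versions.

```shell
# Print the value of --cgroup-driver found in a kubelet drop-in file.
kubelet_cgroup_driver() {
    sed -n 's/.*--cgroup-driver=\([a-z]*\).*/\1/p' "$1" | head -n 1
}

# Read `docker info` text on stdin and print the cgroup driver it reports.
docker_cgroup_driver() {
    sed -n 's/^ *Cgroup Driver: *//p' | head -n 1
}

# Usage (replace the path with your kubeadm drop-in file):
#   k=$(kubelet_cgroup_driver /etc/systemd/system/kubelet.service.d/<drop-in>.conf)
#   d=$(docker info 2>/dev/null | docker_cgroup_driver)
#   [ "$k" = "$d" ] || echo "mismatch: kubelet=$k docker=$d"
```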

Enable and start docker and kubelet

systemctl enable docker

systemctl enable kubelet

systemctl start docker

systemctl start kubelet

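- Downloading the images

Before running kubeadm, a set of images has to be downloaded locally. To find out their names and versions, run kubeadm init and then interrupt it.

The manifests are generated under /etc/kubernetes/manifests, and grep can list the images they reference, for example

grep 'gcr' etcd.yaml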

image: gcr.io/google_containers/etcd-amd64:3.0.
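Rather than grepping one manifest at a time, all images can be listed in one pass. A minimal sketch, assuming every manifest in the directory is a `.yaml` file with `image:` lines like the one above; the function name is made up.

```shell
# Print the unique image references found in all manifests of a directory.
list_manifest_images() {
    dir="$1"
    # -h drops the file-name prefix; the image reference is the last field
    # of each "image:" line, with or without a leading "- ".
    grep -h 'image:' "$dir"/*.yaml | awk '{print $NF}' | sort -u
}

# Usage:
#   list_manifest_images /etc/kubernetes/manifests
```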

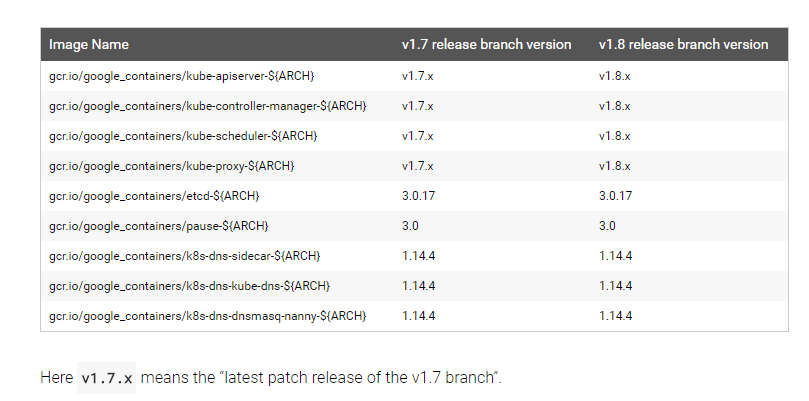

So exactly which images and versions need to be downloaded? See the Kubernetes kubeadm reference at

https://kubernetes.io/docs/admin/kubeadm/

It lists the versions and how they correspond fairly clearly.

How to get the images

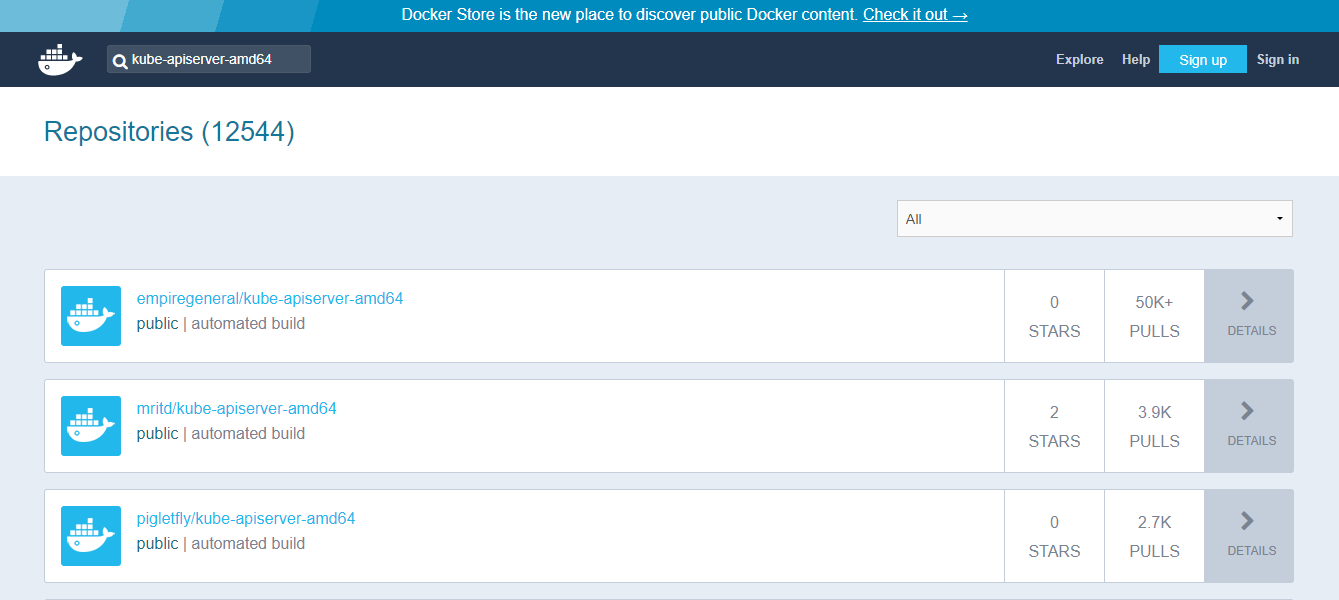

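gcr.io is blocked in mainland China, so you either go through a proxy or find another way. My approach was to visit

https://hub.docker.com/, search for kube-apiserver-amd64, and pick from the images that other people have already built.

Choose the matching versions and pull them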

docker pull cloudnil/etcd-amd64:3.0.

docker pull cloudnil/pause-amd64:3.0

docker pull cloudnil/kube-proxy-amd64:v1.7.2

docker pull cloudnil/kube-scheduler-amd64:v1.7.2

docker pull cloudnil/kube-controller-manager-amd64:v1.7.2

docker pull cloudnil/kube-apiserver-amd64:v1.7.2

docker pull cloudnil/kubernetes-dashboard-amd64:v1.6.1

docker pull cloudnil/k8s-dns-sidecar-amd64:1.14.

docker pull cloudnil/k8s-dns-kube-dns-amd64:1.14.

docker pull cloudnil/k8s-dns-dnsmasq-nanny-amd64:1.14.

docker tag cloudnil/etcd-amd64:3.0. gcr.io/google_containers/etcd-amd64:3.0.

docker tag cloudnil/pause-amd64:3.0 gcr.io/google_containers/pause-amd64:3.0

docker tag cloudnil/kube-proxy-amd64:v1.7.2 gcr.io/google_containers/kube-proxy-amd64:v1.7.2

docker tag cloudnil/kube-scheduler-amd64:v1.7.2 gcr.io/google_containers/kube-scheduler-amd64:v1.7.2

docker tag cloudnil/kube-controller-manager-amd64:v1.7.2 gcr.io/google_containers/kube-controller-manager-amd64:v1.7.2

docker tag cloudnil/kube-apiserver-amd64:v1.7.2 gcr.io/google_containers/kube-apiserver-amd64:v1.7.2

docker tag cloudnil/kubernetes-dashboard-amd64:v1.6.1 gcr.io/google_containers/kubernetes-dashboard-amd64:v1.6.1

docker tag cloudnil/k8s-dns-sidecar-amd64:1.14. gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.

docker tag cloudnil/k8s-dns-kube-dns-amd64:1.14. gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.

docker tag cloudnil/k8s-dns-dnsmasq-nanny-amd64:1.14. gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.

Finally, check the result

[root@k8s- ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

gcr.io/google_containers/kube-apiserver-amd64 v1.7.2 25c5958099a8 months ago 186.1 MB

gcr.io/google_containers/kube-controller-manager-amd64 v1.7.2 83d607ba9358 months ago MB

gcr.io/google_containers/kube-scheduler-amd64 v1.7.2 6282cca6de74 months ago 77.18 MB

gcr.io/google_containers/kube-proxy-amd64 v1.7.2 69f8faa3d08d months ago 114.7 MB

gcr.io/google_containers/k8s-dns-kube-dns-amd64 1.14. 2d6a3bea02c4 months ago 49.38 MB

gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64 1.14. 13117b1d461f months ago 41.41 MB

gcr.io/google_containers/k8s-dns-sidecar-amd64 1.14. c413c7235eb4 months ago 41.81 MB

gcr.io/google_containers/etcd-amd64 3.0. 393e48d05c4e months ago 168.9 MB

gcr.io/google_containers/kubernetes-dashboard-amd64 v1.6.1 c14ffb751676 months ago 134.4 MB

gcr.io/google_containers/pause-amd64 3.0 66c684b679d2 months ago 746.9 kB

- Initializing the master node

With the images in place, start the init

kubeadm init --kubernetes-version=v1.7.2 --pod-network-cidr=10.244.0.0/ --apiserver-advertise-address=0.0.0.0 --apiserver-cert-extra-sans=192.168.0.105,192.168.0.106,192.168.0.107,127.0.0.1,k8s-,k8s-,k8s-,192.168.0.1 --skip-preflight-checks

[root@k8s- network-scripts]# kubeadm init --kubernetes-version=v1.7.2 --pod-network-cidr=10.244.0.0/ --apiserver-advertise-address=0.0.0.0 --apiserver-cert-extra-sans=192.168.0.105,192.168.0.106,192.168.0.107,127.0.0.1,k8s-,k8s-,k8s-,192.168.0.1 --skip-preflight-checks

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

[init] Using Kubernetes version: v1.7.2

[init] Using Authorization modes: [Node RBAC]

[preflight] Skipping pre-flight checks

[kubeadm] WARNING: starting in 1.8, tokens expire after hours by default (if you require a non-expiring token use --token-ttl )

[certificates] Using the existing CA certificate and key.

[certificates] Using the existing API Server certificate and key.

[certificates] Using the existing API Server kubelet client certificate and key.

[certificates] Using the existing service account token signing key.

[certificates] Using the existing front-proxy CA certificate and key.

[certificates] Using the existing front-proxy client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Using existing up-to-date KubeConfig file: "/etc/kubernetes/scheduler.conf"

[kubeconfig] Using existing up-to-date KubeConfig file: "/etc/kubernetes/admin.conf"

[kubeconfig] Using existing up-to-date KubeConfig file: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Using existing up-to-date KubeConfig file: "/etc/kubernetes/controller-manager.conf"

[apiclient] Created API client, waiting for the control plane to become ready

Here comes the pitfall... it hung at this line. Check the log with journalctl

journalctl -xeu kubelet > a

Oct :: k8s- systemd[]: Starting kubelet: The Kubernetes Node Agent...

-- Subject: Unit kubelet.service has begun start-up

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit kubelet.service has begun starting up.

Oct :: k8s- kubelet[]: I1030 ::30.326586 feature_gate.go:] feature gates: map[]

Oct :: k8s- kubelet[]: error: failed to run Kubelet: invalid kubeconfig: stat /etc/kubernetes/kubelet.conf: no such file or directory

Oct :: k8s- systemd[]: kubelet.service: main process exited, code=exited, status=/FAILURE

Oct :: k8s- systemd[]: Unit kubelet.service entered failed state.

Oct :: k8s- systemd[]: kubelet.service failed.

Oct :: k8s- systemd[]: kubelet.service holdoff time over, scheduling restart.

Oct :: k8s- systemd[]: Started kubelet: The Kubernetes Node Agent.

-- Subject: Unit kubelet.service has finished start-up

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit kubelet.service has finished starting up.

--

-- The start-up result is done.

Oct :: k8s- systemd[]: Starting kubelet: The Kubernetes Node Agent...

-- Subject: Unit kubelet.service has begun start-up

-- Defined-By: systemd

-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel

--

-- Unit kubelet.service has begun starting up.

Oct :: k8s- kubelet[]: I1030 ::40.709684 feature_gate.go:] feature gates: map[]

Oct :: k8s- kubelet[]: I1030 ::40.712602 client.go:] Connecting to docker on unix:///var/run/docker.sock

Oct :: k8s- kubelet[]: I1030 ::40.712647 client.go:] Start docker client with request timeout=2m0s

Oct :: k8s- kubelet[]: W1030 ::40.714086 cni.go:] Unable to update cni config: No networks found in /etc/cni/net.d

Oct :: k8s- kubelet[]: I1030 ::40.725461 manager.go:] cAdvisor running in container: "/"

Oct :: k8s- kubelet[]: W1030 ::40.752809 manager.go:] unable to connect to Rkt api service: rkt: cannot tcp Dial rkt api service: dial tcp [::]:: getsockopt: connection refused

Oct :: k8s- kubelet[]: I1030 ::40.762789 fs.go:] Filesystem partitions: map[/dev/mapper/cl-root:{mountpoint:/ major: minor: fsType:xfs blockSize:} /dev/sda1:{mountpoint:/boot major: minor: fsType:xfs blockSize:}]

Oct :: k8s- kubelet[]: I1030 ::40.763579 manager.go:] Machine: {NumCores: CpuFrequency: MemoryCapacity: MachineID:a146a47b0c6b4c28a794c88309119e62 SystemUUID:B9DF3269-4A23-458F--21EC1D216DD4 BootID:62e18038-ea14-438f--e6a4abf265a1 Filesystems:[{Device:/dev/mapper/cl-root DeviceMajor: DeviceMinor: Capacity: Type:vfs Inodes: HasInodes:true} {Device:/dev/sda1 DeviceMajor: DeviceMinor: Capacity: Type:vfs Inodes: HasInodes:true}] DiskMap:map[::{Name:dm- Major: Minor: Size: Scheduler:none} ::{Name:dm- Major: Minor: Size: Scheduler:none} ::{Name:sda Major: Minor: Size: Scheduler:cfq} ::{Name:dm- Major: Minor: Size: Scheduler:none}] NetworkDevices:[{Name:enp0s3 MacAddress::::e2:ae:0a Speed: Mtu:} {Name:virbr0 MacAddress::::ed:: Speed: Mtu:} {Name:virbr0-nic MacAddress::::ed:: Speed: Mtu:}] Topology:[{Id: Memory: Cores:[{Id: Threads:[] Caches:[{Size: Type:Data Level:} {Size: Type:Instruction Level:} {Size: Type:Unified Level:}]}] Caches:[{Size: Type:Unified Level:}]}] CloudProvider:Unknown InstanceType:Unknown InstanceID:None}

Oct :: k8s- kubelet[]: I1030 ::40.765607 manager.go:] Version: {KernelVersion:3.10.-514.21..el7.x86_64 ContainerOsVersion:CentOS Linux (Core) DockerVersion:1.12. DockerAPIVersion:1.24 CadvisorVersion: CadvisorRevision:}

Oct :: k8s- kubelet[]: I1030 ::40.766218 server.go:] --cgroups-per-qos enabled, but --cgroup-root was not specified. defaulting to /

Oct :: k8s- kubelet[]: W1030 ::40.767731 container_manager_linux.go:] Running with swap on is not supported, please disable swap! This will be a fatal error by default starting in K8s v1.! In the meantime, you can opt-in to making this a fatal error by enabling --experimental-fail-swap-on.

Oct :: k8s- kubelet[]: I1030 ::40.767779 container_manager_linux.go:] container manager verified user specified cgroup-root exists: /

Oct :: k8s- kubelet[]: I1030 ::40.767789 container_manager_linux.go:] Creating Container Manager object based on Node Config: {RuntimeCgroupsName: SystemCgroupsName: KubeletCgroupsName: ContainerRuntime:docker CgroupsPerQOS:true CgroupRoot:/ CgroupDriver:cgroupfs ProtectKernelDefaults:false NodeAllocatableConfig:{KubeReservedCgroupName: SystemReservedCgroupName: EnforceNodeAllocatable:map[pods:{}] KubeReserved:map[] SystemReserved:map[] HardEvictionThresholds:[{Signal:memory.available Operator:LessThan Value:{Quantity:100Mi Percentage:} GracePeriod:0s MinReclaim:<nil>} {Signal:nodefs.available Operator:LessThan Value:{Quantity:<nil> Percentage:0.1} GracePeriod:0s MinReclaim:<nil>} {Signal:nodefs.inodesFree Operator:LessThan Value:{Quantity:<nil> Percentage:0.05} GracePeriod:0s MinReclaim:<nil>}]} ExperimentalQOSReserved:map[]}

Oct :: k8s- kubelet[]: I1030 ::40.767924 kubelet.go:] Adding manifest file: /etc/kubernetes/manifests

Oct :: k8s- kubelet[]: I1030 ::40.767935 kubelet.go:] Watching apiserver

Oct :: k8s- kubelet[]: E1030 ::40.782325 reflector.go:] k8s.io/kubernetes/pkg/kubelet/kubelet.go:: Failed to list *v1.Node: Get https://192.168.0.105:6443/api/v1/nodes?fieldSelector=metadata.name%3Dk8s-1&resourceVersion=0: dial tcp 192.168.0.105:6443: getsockopt: connection refused

Oct :: k8s- kubelet[]: E1030 ::40.782380 reflector.go:] k8s.io/kubernetes/pkg/kubelet/kubelet.go:: Failed to list *v1.Service: Get https://192.168.0.105:6443/api/v1/services?resourceVersion=0: dial tcp 192.168.0.105:6443: getsockopt: connection refused

Oct :: k8s- kubelet[]: E1030 ::40.782413 reflector.go:] k8s.io/kubernetes/pkg/kubelet/config/apiserver.go:: Failed to list *v1.Pod: Get https://192.168.0.105:6443/api/v1/pods?fieldSelector=spec.nodeName%3Dk8s-1&resourceVersion=0: dial tcp 192.168.0.105:6443: getsockopt: connection refused

Oct :: k8s- kubelet[]: W1030 ::40.783607 kubelet_network.go:] Hairpin mode set to "promiscuous-bridge" but kubenet is not enabled, falling back to "hairpin-veth"

Oct :: k8s- kubelet[]: I1030 ::40.783625 kubelet.go:] Hairpin mode set to "hairpin-veth"

Oct :: k8s- kubelet[]: W1030 ::40.784179 cni.go:] Unable to update cni config: No networks found in /etc/cni/net.d

Oct :: k8s- kubelet[]: W1030 ::40.784915 cni.go:] Unable to update cni config: No networks found in /etc/cni/net.d

Oct :: k8s- kubelet[]: W1030 ::40.793823 cni.go:] Unable to update cni config: No networks found in /etc/cni/net.d

Oct :: k8s- kubelet[]: I1030 ::40.793839 docker_service.go:] Docker cri networking managed by cni

Oct :: k8s- kubelet[]: I1030 ::40.798395 docker_service.go:] Setting cgroupDriver to cgroupfs

Oct :: k8s- kubelet[]: I1030 ::40.804276 remote_runtime.go:] Connecting to runtime service unix:///var/run/dockershim.sock

Oct :: k8s- kubelet[]: I1030 ::40.806221 kuberuntime_manager.go:] Container runtime docker initialized, version: 1.12., apiVersion: 1.24.

Oct :: k8s- kubelet[]: I1030 ::40.807620 server.go:] Started kubelet v1.7.5

Oct :: k8s- kubelet[]: E1030 ::40.808001 kubelet.go:] Image garbage collection failed once. Stats initialization may not have completed yet: unable to find data for container /

Oct :: k8s- kubelet[]: I1030 ::40.808008 kubelet_node_status.go:] Setting node annotation to enable volume controller attach/detach

Oct :: k8s- kubelet[]: I1030 ::40.808464 server.go:] Starting to listen on 0.0.0.0:

Oct :: k8s- kubelet[]: I1030 ::40.809166 server.go:] Adding debug handlers to kubelet server.

Oct :: k8s- kubelet[]: E1030 ::40.811544 event.go:] Unable to write event: 'Post https://192.168.0.105:6443/api/v1/namespaces/default/events: dial tcp 192.168.0.105:6443: getsockopt: connection refused' (may retry after sleeping)

Oct :: k8s- kubelet[]: E1030 ::40.818965 kubelet.go:] Failed to check if disk space is available for the runtime: failed to get fs info for "runtime": unable to find data for container /

Oct :: k8s- kubelet[]: E1030 ::40.818965 kubelet.go:] Failed to check if disk space is available on the root partition: failed to get fs info for "root": unable to find data for container /

Oct :: k8s- kubelet[]: I1030 ::40.826012 fs_resource_analyzer.go:] Starting FS ResourceAnalyzer

Oct :: k8s- kubelet[]: I1030 ::40.826058 status_manager.go:] Starting to sync pod status with apiserver

Oct :: k8s- kubelet[]: I1030 ::40.826130 kubelet.go:] Starting kubelet main sync loop.

Oct :: k8s- kubelet[]: I1030 ::40.826196 kubelet.go:] skipping pod synchronization - [container runtime is down PLEG is not healthy: pleg was last seen active 2562047h47m16.854775807s ago; threshold is 3m0s]

Oct :: k8s- kubelet[]: W1030 ::40.826424 container_manager_linux.go:] CPUAccounting not enabled for pid:

Oct :: k8s- kubelet[]: W1030 ::40.826429 container_manager_linux.go:] MemoryAccounting not enabled for pid:

Oct :: k8s- kubelet[]: W1030 ::40.826465 container_manager_linux.go:] CPUAccounting not enabled for pid:

Oct :: k8s- kubelet[]: W1030 ::40.826429 container_manager_linux.go:] MemoryAccounting not enabled for pid:

Oct :: k8s- kubelet[]: W1030 ::40.826465 container_manager_linux.go:] CPUAccounting not enabled for pid:

Oct :: k8s- kubelet[]: W1030 ::40.826468 container_manager_linux.go:] MemoryAccounting not enabled for pid:

Oct :: k8s- kubelet[]: E1030 ::40.826495 container_manager_linux.go:] [ContainerManager]: Fail to get rootfs information unable to find data for container /

Oct :: k8s- kubelet[]: I1030 ::40.826504 volume_manager.go:] Starting Kubelet Volume Manager

Oct :: k8s- kubelet[]: W1030 ::40.829827 cni.go:] Unable to update cni config: No networks found in /etc/cni/net.d

Oct :: k8s- kubelet[]: E1030 ::40.829892 kubelet.go:] Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

Oct :: k8s- kubelet[]: E1030 ::40.844934 factory.go:] devicemapper filesystem stats will not be reported: usage of thin_ls is disabled to preserve iops

Oct :: k8s- kubelet[]: I1030 ::40.845787 factory.go:] Registering Docker factory

It looks like a CNI initialization problem. There are plenty of posts about it online, but none of the suggested fixes worked.
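A log this long is easier to read once the informational lines are filtered out. The sketch below keeps only kubelet lines that carry an error: either a message whose severity letter is E or F (the letter in front of the MMDD stamp, as in `E1030`) or a plain `error:` line. The function name is an invention for this example.

```shell
# Keep only kubelet error lines from journal output (stdin or file arguments).
kubelet_errors() {
    # Matches "kubelet[PID]: E1030 ...", "kubelet[PID]: F1030 ..." and
    # "kubelet[PID]: error: ..."; systemd's own lines are not matched.
    grep -E 'kubelet\[[0-9]*\]: (E[0-9]{4} |F[0-9]{4} |error:)' "$@"
}

# Usage:
#   journalctl -u kubelet --no-pager | kubelet_errors
```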

=============================================================================

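After repeated attempts got nowhere, I suspected my OS was the problem and reinstalled with CentOS 7.4. Same steps, and it went through in seconds. Truly maddening: the earlier problem had cost me a whole day!

I also borrowed a script to automate the image download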

images=(etcd-amd64:3.0. pause-amd64:3.0 kube-proxy-amd64:v1.7.2 kube-scheduler-amd64:v1.7.2 kube-controller-manager-amd64:v1.7.2 kube-apiserver-amd64:v1.7.2 kubernetes-dashboard-amd64:v1.6.1 k8s-dns-sidecar-amd64:1.14. k8s-dns-kube-dns-amd64:1.14. k8s-dns-dnsmasq-nanny-amd64:1.14.)

for imageName in "${images[@]}" ; do

docker pull cloudnil/$imageName

docker tag cloudnil/$imageName gcr.io/google_containers/$imageName

docker rmi cloudnil/$imageName

done

[root@k8s- ~]# kubeadm init --kubernetes-version=v1.7.2 --pod-network-cidr=10.244.0.0/ --apiserver-advertise-address=0.0.0.0 --apiserver-cert-extra-sans=192.168.0.105,192.168.0.106,192.168.0.107,127.0.0.1,k8s-,k8s-,k8s-,192.168.0.1

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

[init] Using Kubernetes version: v1.7.2

[init] Using Authorization modes: [Node RBAC]

[preflight] Running pre-flight checks

[kubeadm] WARNING: starting in 1.8, tokens expire after hours by default (if you require a non-expiring token use --token-ttl )

[certificates] Generated CA certificate and key.

[certificates] Generated API server certificate and key.

[certificates] API Server serving cert is signed for DNS names [k8s- kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local k8s- k8s- k8s-] and IPs [192.168.0.105 192.168.0.106 192.168.0.107 127.0.0.1 192.168.0.1 10.96.0.1 192.168.0.105]

[certificates] Generated API server kubelet client certificate and key.

[certificates] Generated service account token signing key and public key.

[certificates] Generated front-proxy CA certificate and key.

[certificates] Generated front-proxy client certificate and key.

[certificates] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[apiclient] Created API client, waiting for the control plane to become ready

[apiclient] All control plane components are healthy after 55.001211 seconds

[token] Using token: 22d578.d921a7cf51352441

[apiconfig] Created RBAC rules

[addons] Applied essential addon: kube-proxy

[addons] Applied essential addon: kube-dns

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run (as a regular user):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

http://kubernetes.io/docs/admin/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join --token 22d578.d921a7cf51352441 192.168.0.105:

Then

export KUBECONFIG=/etc/kubernetes/admin.conf

[root@k8s- ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system etcd-k8s- / Running 5m

kube-system kube-apiserver-k8s- / Running 4m

kube-system kube-controller-manager-k8s- / Running 4m

kube-system kube-dns--j8mnw / Pending 5m

kube-system kube-proxy-6k4sb / Running 5m

kube-system kube-scheduler-k8s- / Running 4m

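- Installing the flannel network

The kube-dns pod cannot start because the network has not been configured yet.

Configure the flannel network

Download kube-flannel.yml and kube-flannel-rbac.yml from https://github.com/winse/docker-hadoop/tree/master/kube-deploy/kubeadm

Then run: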

[root@k8s- ~]# kubectl apply -f kube-flannel.yml

serviceaccount "flannel" created

configmap "kube-flannel-cfg" created

daemonset "kube-flannel-ds" created

[root@k8s- ~]# kubectl apply -f kube-flannel-rbac.yml

clusterrole "flannel" created

clusterrolebinding "flannel" created

After a while the pods start and the configuration is complete

[root@k8s- ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system etcd-k8s- / Running 3h

kube-system kube-apiserver-k8s- / Running 3h

kube-system kube-controller-manager-k8s- / Running 3h

kube-system kube-dns--j8mnw / Running 3h

kube-system kube-flannel-ds-j491k / Running 1h

kube-system kube-proxy-6k4sb / Running 3h

kube-system kube-scheduler-k8s- / Running 3h
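Instead of re-running the command by hand until everything is up, the wait can be polled. A minimal sketch, assuming the column layout shown above (STATUS is the fourth column of the `--all-namespaces` output); `count_not_running` is a hypothetical helper name.

```shell
# Read `kubectl get pods --all-namespaces` output on stdin and print how many
# pods are in a state other than Running. The header row is skipped.
count_not_running() {
    awk 'NR > 1 && $4 != "Running" { n++ } END { print n + 0 }'
}

# Usage: poll every 5 seconds until no pod is left in another state.
#   until [ "$(kubectl get pods --all-namespaces | count_not_running)" -eq 0 ]; do
#       sleep 5
#   done
```

Note that this simple check treats any non-Running status as "not ready yet", so it would loop forever on a pod that is stuck in an error state; a timeout is worth adding for unattended use.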

Worker nodes

Pull the images

images=(pause-amd64:3.0 kube-proxy-amd64:v1.7.2)

for imageName in "${images[@]}" ; do

docker pull cloudnil/$imageName

docker tag cloudnil/$imageName gcr.io/google_containers/$imageName

docker rmi cloudnil/$imageName

done

[root@k8s- ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

gcr.io/google_containers/kube-proxy-amd64 v1.7.2 69f8faa3d08d months ago 114.7 MB

gcr.io/google_containers/pause-amd64 3.0 66c684b679d2 months ago 746.9 kB

Join the cluster

[root@k8s- ~]# kubeadm join --token 22d578.d921a7cf51352441 192.168.0.105:

[kubeadm] WARNING: kubeadm is in beta, please do not use it for production clusters.

[preflight] Running pre-flight checks

[discovery] Trying to connect to API Server "192.168.0.105:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.0.105:6443"

[discovery] Cluster info signature and contents are valid, will use API Server "https://192.168.0.105:6443"

[discovery] Successfully established connection with API Server "192.168.0.105:6443"

[bootstrap] Detected server version: v1.7.2

[bootstrap] The server supports the Certificates API (certificates.k8s.io/v1beta1)

[csr] Created API client to obtain unique certificate for this node, generating keys and certificate signing request

[csr] Received signed certificate from the API server, generating KubeConfig...

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

Node join complete:

* Certificate signing request sent to master and response received.

* Kubelet informed of new secure connection details.

Run 'kubectl get nodes' on the master to see this machine join.

Verify

[root@k8s- ~]# kubectl get nodes

NAME STATUS AGE VERSION

k8s- Ready 4h v1.7.5

k8s- Ready 1m v1.7.5

Verify again after joining node 3

[root@k8s- ~]# kubectl get nodes

NAME STATUS AGE VERSION

k8s- Ready 4h v1.7.5

k8s- Ready 5m v1.7.5

k8s- Ready 50s v1.7.5

[root@k8s- ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

etcd-k8s- / Running 4h 192.168.0.105 k8s-

kube-apiserver-k8s- / Running 4h 192.168.0.105 k8s-

kube-controller-manager-k8s- / Running 4h 192.168.0.105 k8s-

kube-dns--j8mnw / Running 4h 10.244.0.2 k8s-

kube-flannel-ds-d8vvr / Running 1m 192.168.0.107 k8s-

kube-flannel-ds-fgvr1 / Running 5m 192.168.0.106 k8s-

kube-flannel-ds-j491k / Running 1h 192.168.0.105 k8s-

kube-proxy-6k4sb / Running 4h 192.168.0.105 k8s-

kube-proxy-p6v69 / Running 5m 192.168.0.106 k8s-

kube-proxy-tk2jq / Running 1m 192.168.0.107 k8s-

kube-scheduler-k8s- / Running 4h 192.168.0.105 k8s-

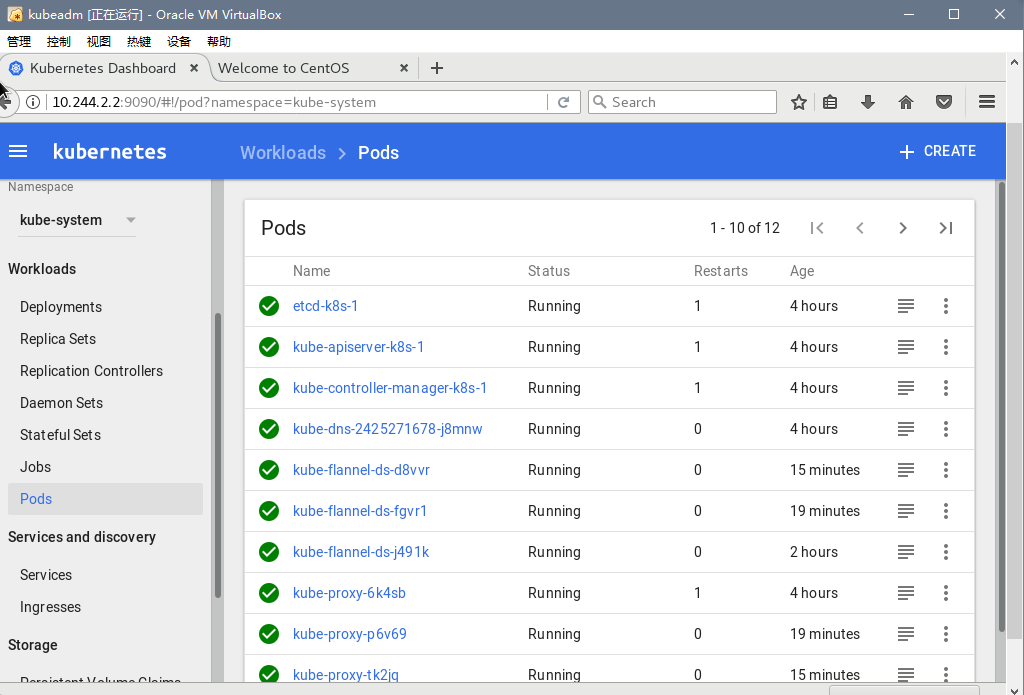

- Setting up a dashboard

Run the following on all three machines

images=(kubernetes-dashboard-amd64:v1.6.0)

for imageName in "${images[@]}" ; do

docker pull k8scn/$imageName

docker tag k8scn/$imageName gcr.io/google_containers/$imageName

docker rmi k8scn/$imageName

done

Then download the kubernetes-dashboard.yaml file from https://github.com/winse/docker-hadoop/tree/master/kube-deploy/kubeadm

[root@k8s- ~]# kubectl create -f kubernetes-dashboard.yaml

serviceaccount "kubernetes-dashboard" created

clusterrolebinding "kubernetes-dashboard" created

deployment "kubernetes-dashboard" created

service "kubernetes-dashboard" created

[root@k8s- ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

etcd-k8s- / Running 4h 192.168.0.105 k8s-

kube-apiserver-k8s- / Running 4h 192.168.0.105 k8s-

kube-controller-manager-k8s- / Running 4h 192.168.0.105 k8s-

kube-dns--j8mnw / Running 4h 10.244.0.2 k8s-

kube-flannel-ds-d8vvr / Running 13m 192.168.0.107 k8s-

kube-flannel-ds-fgvr1 / Running 18m 192.168.0.106 k8s-

kube-flannel-ds-j491k / Running 2h 192.168.0.105 k8s-

kube-proxy-6k4sb / Running 4h 192.168.0.105 k8s-

kube-proxy-p6v69 / Running 18m 192.168.0.106 k8s-

kube-proxy-tk2jq / Running 13m 192.168.0.107 k8s-

kube-scheduler-k8s- / Running 4h 192.168.0.105 k8s-

kubernetes-dashboard--42k3c / Running 4s 10.244.2.2 k8s-

Opening http://10.244.2.2:9090/ in Firefox brings up the whole list of pods in an instant.

Thanks to the experts whose posts guided me out of these pitfalls:

http://www.cnblogs.com/liangDream/p/7358847.html

http://www.winseliu.com/blog/2017/08/13/kubeadm-install-k8s-on-centos7-with-resources/
