kafka 集群安装过程

1.下载需要的安装包

http://kafka.apache.org/downloads.html

本文使用的

- Scala 2.9.2 - kafka_2.9.2-0.8.2.2.tgz (asc, md5)

其他的自由选择吧

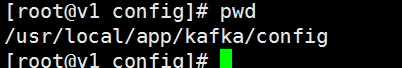

2.上传解压改名字三部曲

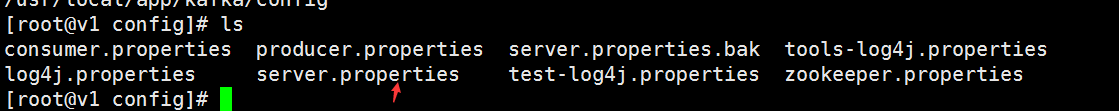

3.修改配置文件

下面就是配置文件的全文 重点修改的都写了!

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# see kafka.server.KafkaConfig for additional details and defaults ############################# Server Basics ############################# # The id of the broker. This must be set to a unique integer for each broker.

#全局唯一的id

broker.id=0 ############################# Socket Server Settings ############################# # The port the socket server listens on

#消费者和生产者 监听的端口

port=9092 # Hostname the broker will bind to. If not set, the server will bind to all interfaces

#host.name=localhost # Hostname the broker will advertise to producers and consumers. If not set, it uses the

# value for "host.name" if configured. Otherwise, it will use the value returned from

# java.net.InetAddress.getCanonicalHostName().

#advertised.host.name=<hostname routable by clients> # The port to publish to ZooKeeper for clients to use. If this is not set,

# it will publish the same port that the broker binds to.

#advertised.port=<port accessible by clients> # The number of threads handling network requests

#处理网络请求的线程数量

num.network.threads=3 # The number of threads doing disk I/O

#用来出来磁盘IO的数量

num.io.threads=8 #发送字节的缓冲大小

# The send buffer (SO_SNDBUF) used by the socket server

socket.send.buffer.bytes=102400 # The receive buffer (SO_RCVBUF) used by the socket server

socket.receive.buffer.bytes=102400 # The maximum size of a request that the socket server will accept (protection against OOM)

socket.request.max.bytes=104857600 ############################# Log Basics ############################# # A comma seperated list of directories under which to store log files

#运行的日志路径

log.dirs=/usr/local/app/kafka/los/tmp/kafka-logs # The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

#当然爱你的brokers 分片数量

num.partitions=2 # The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

# This value is recommended to be increased for installations with data dirs located in RAID array.

num.recovery.threads.per.data.dir=1 ############################# Log Flush Policy ############################# # Messages are immediately written to the filesystem but by default we only fsync() to sync

# the OS cache lazily. The following configurations control the flush of data to disk.

# There are a few important trade-offs here:

# 1. Durability: Unflushed data may be lost if you are not using replication.

# 2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.

# 3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to exceessive seeks.

# The settings below allow one to configure the flush policy to flush data after a period of time or

# every N messages (or both). This can be done globally and overridden on a per-topic basis. # The number of messages to accept before forcing a flush of data to disk

#log.flush.interval.messages=10000 # The maximum amount of time a message can sit in a log before we force a flush

#log.flush.interval.ms=1000 ############################# Log Retention Policy ############################# # The following configurations control the disposal of log segments. The policy can

# be set to delete segments after a period of time, or after a given size has accumulated.

# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens

# from the end of the log. # The minimum age of a log file to be eligible for deletion

log.retention.hours=168 # A size-based retention policy for logs. Segments are pruned from the log as long as the remaining

# segments don't drop below log.retention.bytes.

#log.retention.bytes=1073741824 # The maximum size of a log segment file. When this size is reached a new log segment will be created.

log.segment.bytes=1073741824 # The interval at which log segments are checked to see if they can be deleted according

# to the retention policies

log.retention.check.interval.ms=300000 # By default the log cleaner is disabled and the log retention policy will default to just delete segments after their retention expires.

# If log.cleaner.enable=true is set the cleaner will be enabled and individual logs can then be marked for log compaction.

log.cleaner.enable=false ############################# Zookeeper ############################# # Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

zookeeper.connect=v1:2181,v2:2181,v3:2181 # Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000

然后把kafka 发到其他机器上! 修改配置上的id ,记得创建日志目录

启动!那么坑就要冒出来了! 心中总有那句MMP !

启动前 先保证你则zk 都启动了

启动命令。后台启动

nohup bin/kafka-server-start.sh config/server.properties&

常见错误

Java HotSpot(TM) Server VM warning: INFO: os::commit_memory(0x67e00000, 1073741824, 0) failed; error='Cannot allocate memory' (errno=12) # # There is insufficient memory for the Java Runtime Environment to continue. # Native memory allocation (mmap) failed to map 1073741824 bytes for committing reserved memory. # An error report file with more information is saved as: # /opt/kafka_2.11-0.9.0.1/hs_err_pid2249.log

解决办法:

错误原因:

Kafka默认使用-Xmx1G -Xms1G的JVM内存配置,如果机器内存较小,需要调整启动配置。

打开/config/kafka-server-start.sh,修改 export KAFKA_HEAP_OPTS="-Xmx1G -Xms1G"

为适合当前服务器的配置,例如export KAFKA_HEAP_OPTS="-Xmx256M -Xms128M"

kafka 集群安装过程的更多相关文章

- KafKa集群安装详细步骤

最近在使用Spring Cloud进行分布式微服务搭建,顺便对集成KafKa的方案做了一些总结,今天详细介绍一下KafKa集群安装过程: 1. 在根目录创建kafka文件夹(service1.serv ...

- kafka集群安装部署

kafka集群安装 使用的版本 系统:centos6.5 centos6.7 jdk:1.7.0_79 zookeeper:3.4.9 kafka:2.10-0.10.1.0 一.环境准备[只列,不具 ...

- zookeeper+kafka集群安装之二

zookeeper+kafka集群安装之二 此为上一篇文章的续篇, kafka安装需要依赖zookeeper, 本文与上一篇文章都是真正分布式安装配置, 可以直接用于生产环境. zookeeper安装 ...

- zookeeper+kafka集群安装之一

zookeeper+kafka集群安装之一 准备3台虚拟机, 系统是RHEL64服务版. 1) 每台机器配置如下: $ cat /etc/hosts ... # zookeeper hostnames ...

- zookeeper+kafka集群安装之中的一个

版权声明:本文为博主原创文章.未经博主同意不得转载. https://blog.csdn.net/cheungmine/article/details/26678877 zookeeper+kafka ...

- hadoop1.2.1+zk-3.4.5+hbase-0.94.1集群安装过程详解

hadoop1.2.1+zk-3.4.5+hbase-0.94.1集群安装过程详解 一,环境: 1,主机规划: 集群中包括3个节点:hadoop01为Master,其余为Salve,节点之间局域网连接 ...

- Kafka 集群安装

Kafka 集群安装 环境: Linux 7.X kafka_2.x 在linux操作系统中,kafka安装在 /u04/app目录中 1. 下载 # wget https://mirrors.cnn ...

- KafKa集群安装、配置

一.事前准备 1.kafka官网:http://kafka.apache.org/downloads. 2.选择使用版本下载. 3.kafka集群环境准备:(linux) 192.168.145.12 ...

- Centos7.4 kafka集群安装与kafka-eagle1.3.9的安装

Centos7.4 kafka集群安装与kafka-eagle1.3.9的安装 集群规划: hostname Zookeeper Kafka kafka-eagle kafka01 √ √ √ kaf ...

随机推荐

- php开发第一课

开发环境:wampserver IDE工具:sublime2 问题记录: 1.如何避免在php中中文乱码? 在php头部加入:<meta charset="utf-8"> ...

- Android代码实现控件闪烁效果

代码地址如下:http://www.demodashi.com/demo/13162.html 前言 在项目开发过程中,我们有时会遇到需要控件闪烁和停止的问题,这个用xml是可以实现的,但是为了在使用 ...

- iOS开发-Swift获取手机设备信息(UIDevice)

使用UiDevice获取设备信息 获取设备名称 let name = UIDevice.currentDevice().name 获取设备系统名称 let systemName = UIDevice. ...

- python 利用numpy进行数据分析

一.numpy.loadtxt读取数据 data=numpy.loadtxt('数据路径.txt',delimiter=',',usecols=(0,1,2,3) , dtype=float)#读取后 ...

- Jenkins2.x Pipeline持续集成交互

原文地址:http://blog.csdn.net/aixiaoyang168/article/details/72818804 Pipeline的几个基本概念: Stage: 阶段,一个Pipeli ...

- 解决window10系统电脑插入耳机之后没有声音的问题

其实办法也是从百度百科上查到的 ⁄(⁄ ⁄•⁄ω⁄•⁄ ⁄)⁄ 可能是因为自己某个不小心的操作更改了设置 1. 首先要点开设置按钮,在搜索栏输入控制面板 (当然知道控制面板在哪里的小伙伴就不用 ...

- 将 ASP.NET Core 2.0 项目升级至 ASP.NET Core 2.1

主要升级步骤如下: 将 .csproj 项目文件中的 target framework 改为 netcoreapp2.1 <TargetFramework>netcoreapp2.1< ...

- Python技术公众号100天了

公众号100天了,是个值得一提的日子! 我从2017年10月31日开始做这个公众号,到今天2018年2月7日,差不多100天时间 .虽然公众号很早就申请了,但直到去年10月31日,我才有真正把这个公众 ...

- 在Telerik for silverlight控件radtreeview中如何通过路径得到节点(转载)

页面<telerik:RadTreeView Margin="8" x:Name="radTreeView" SelectionChanged=" ...

- vivado与modelsim的联合仿真(一)

vivado软件中也自带仿真工具,但用了几天之后感觉仿真速度有点慢,至少比modelsim慢挺多的.而modelsim是我比较熟悉的一款仿真软件,固然选它作为设计功能的验证.为了将vivado和mod ...