Deploying a Highly Available Kubernetes v1.22.10 Cluster

1. Overview

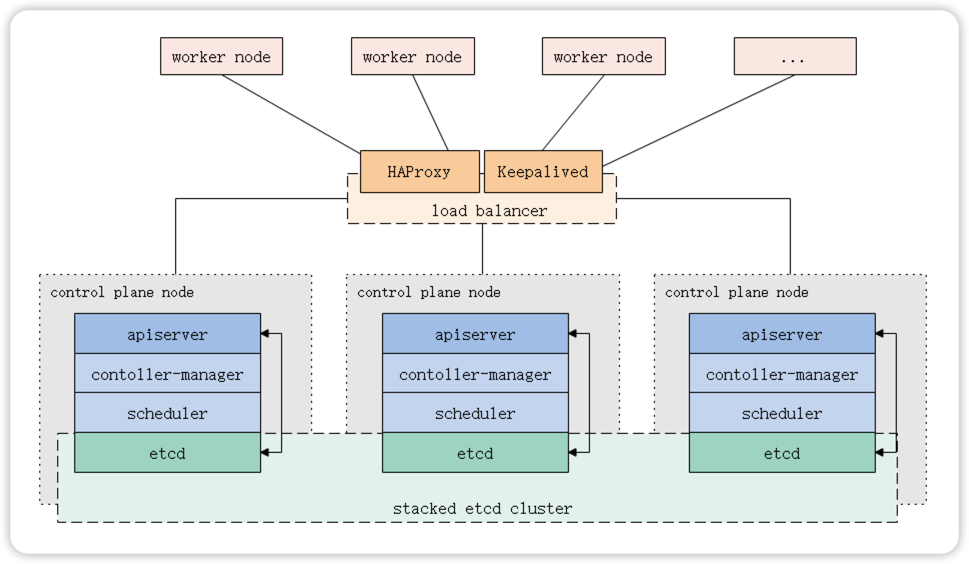

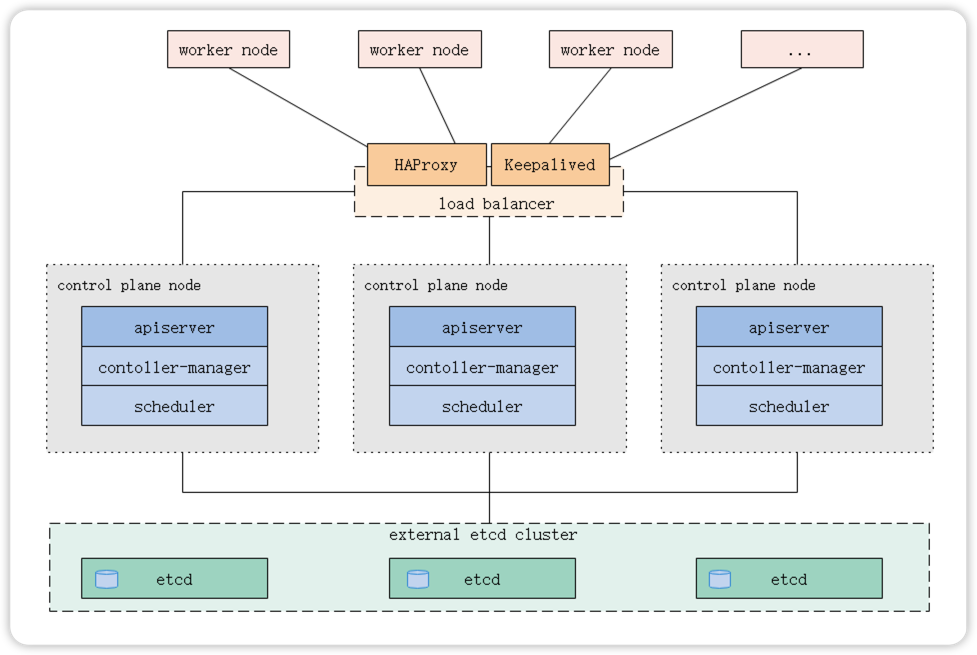

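A Kubernetes control plane (Master) node consists of the database service (etcd) plus the other service components (kube-apiserver, kube-controller-manager, kube-scheduler, and so on). All state that the cluster exchanges at run time is stored in etcd, so the availability of a Kubernetes cluster depends on the data replication that etcd sets up across multiple control plane (Master) nodes. A highly available Kubernetes cluster can therefore be deployed in one of two ways:

- With stacked control plane (Master) nodes, where etcd runs on the same machines as the other control plane components;

- With external etcd nodes, where etcd runs on separate machines from the other control plane components.

Reference: https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/high-availability/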

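1.1 Stacked etcd topology (recommended)

etcd runs alongside the other components on several control plane (Master) machines, and the etcd members form a cluster, which makes the Kubernetes cluster highly available.

Prerequisites:

- An odd number of Master nodes, at least three;

- At least three Node (worker) nodes;

- Full network connectivity between all machines in the cluster (public or private network);

- Superuser privileges;

- SSH access to every node in the cluster;

- kubeadm and kubelet installed on every machine.

This topology needs fewer machines, which lowers cost and deployment complexity. The drawbacks are that the co-located services compete for host resources, which can become a performance bottleneck, and that a failed Master host takes all of its components down with it.

In practice, deploying more than three Master hosts reduces the impact of these drawbacks.

This is the default topology in kubeadm: kubeadm automatically creates a local etcd member on each Master node.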
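The requirement for an odd number of Master nodes follows from etcd's quorum rule: a cluster of N members needs floor(N/2) + 1 of them to agree, so an even member count adds a machine without adding fault tolerance. A minimal sketch of that arithmetic (the function names are illustrative, not part of etcd or kubeadm):

```shell
#!/usr/bin/env bash
# Quorum arithmetic for an etcd cluster with N voting members.
# quorum = floor(N/2) + 1; tolerated failures = N - quorum.
# These helper names are hypothetical and exist only for this illustration.
etcd_quorum() { echo $(( $1 / 2 + 1 )); }
etcd_fault_tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

Three and four members both tolerate a single failure, which is why the prerequisites ask for 3, 5, 7, and so on.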

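1.2 External etcd topology

The etcd component of the control plane runs on separate hosts, and the other components connect to that external etcd cluster, which makes the Kubernetes cluster highly available.

Prerequisites:

- An odd number of Master hosts, at least three;

- At least three Node (worker) hosts;

- An odd number of additional etcd hosts, at least three;

- Full network connectivity between all hosts in the cluster (public or private network);

- Superuser privileges;

- SSH access to every host in the cluster;

- kubeadm and kubelet installed on every machine.

An etcd cluster on dedicated hosts has more host resources and more room to scale, and a failure affects fewer components, but the extra machines raise the deployment cost.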

2. Deployment plan

Host OS: CentOS Linux release 7.7.1908 (Core)

Kubernetes version: 1.22.10

Docker CE version: 20.10.17

Services on the management nodes: etcd, kube-apiserver, kube-scheduler, kube-controller-manager, docker, kubelet, keepalived, haproxy

Management node sizing: 4 vCPU / 8 GB RAM / 200 GB storage

| Hostname | Host address | VIP address | Host role |
| --- | --- | --- | --- |
| k8s-master01 | 192.168.0.5 | 192.168.0.10 | Master (Control Plane) |
| k8s-master02 | 192.168.0.6 | 192.168.0.10 | Master (Control Plane) |
| k8s-master03 | 192.168.0.7 | 192.168.0.10 | Master (Control Plane) |

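Note: make sure each server runs a freshly installed system with no other software on it and is dedicated to Kubernetes.

The following commands check whether the required ports are already in use:

    # Control plane ports
    $ ss -alnupt | grep -E '6443|10250|10259|10257|2379|2380'

    # Worker node ports: kubelet (10250) and the default NodePort range (30000-32767)
    $ ss -alnupt | grep -E ':(10250|3[01][0-9]{3}|32[0-6][0-9]{2}|327[0-5][0-9]|3276[0-7])[[:space:]]'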
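For a scripted pre-flight check, the same idea can be written as a filter that reports exactly which required ports are taken. This is a rough sketch, assuming `ss -lntu` style output in which the local address is the fifth column; `busy_ports` is a hypothetical helper name, not an existing tool:

```shell
#!/usr/bin/env bash
# Print every port from the argument list that shows up as a local listening
# port in `ss -lntu`-style output read from stdin.
# Assumption: column 5 is "Local Address:Port" (true when the Netid column is shown).
busy_ports() {
  local listening port
  # Keep only the text after the last ':' of the local address column.
  listening=$(awk '{ n = split($5, a, ":"); print a[n] }' | sort -u)
  for port in "$@"; do
    if echo "$listening" | grep -qx "$port"; then
      echo "$port"
    fi
  done
}

# Usage on a real host (prints nothing when all ports are free):
#   ss -lntu | busy_ports 6443 10250 10259 10257 2379 2380
```

Matching whole port numbers avoids the false positives that a plain substring `grep` can produce, for example 16443 matching 6443.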

3. Building the Kubernetes cluster

3.1 Kernel upgrade (optional)

CentOS 7.x ships with kernel 3.10 by default. That kernel has many bugs known to the Kubernetes community (for example, kernel memory leaks), so upgrading to 4.17 or later is recommended.

Download location (ELRepo archive mirror): http://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/

    # Install kernel 4.19.9-1
    $ rpm -ivh http://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-ml-4.19.9-1.el7.elrepo.x86_64.rpm
    $ rpm -ivh http://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.9-1.el7.elrepo.x86_64.rpm

    # List the kernel boot entries
    $ awk -F \' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg
    0 : CentOS Linux (3.10.0-1062.12.1.el7.x86_64) 7 (Core)
    1 : CentOS Linux (4.19.9-1.el7.elrepo.x86_64) 7 (Core)
    2 : CentOS Linux (3.10.0-862.el7.x86_64) 7 (Core)
    3 : CentOS Linux (0-rescue-ef219b153e8049718c374985be33c24e) 7 (Core)

    # Set the default boot kernel
    $ grub2-set-default "CentOS Linux (4.19.9-1.el7.elrepo.x86_64) 7 (Core)"
    $ grub2-mkconfig -o /boot/grub2/grub.cfg

    # Show the default kernel
    $ grub2-editenv list
    CentOS Linux (4.19.9-1.el7.elrepo.x86_64) 7 (Core)

    # Reboot for the change to take effect
    $ reboot

3.2 System initialization

3.2.1 Set the hostnames

    # Run on master01
    $ hostnamectl set-hostname k8s-master01

    # Run on master02
    $ hostnamectl set-hostname k8s-master02

    # Run on master03
    $ hostnamectl set-hostname k8s-master03

3.2.2 Add hosts name resolution

    ### Run on all hosts
    $ cat >> /etc/hosts << EOF
    192.168.0.5 k8s-master01
    192.168.0.6 k8s-master02
    192.168.0.7 k8s-master03
    EOF
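Appending with `>>` adds duplicate entries every time the step is repeated. One way to make it repeatable is to append only when the name is missing. A minimal sketch, where `add_host_entry` is a hypothetical helper and the optional third argument exists only so the function can be tried against a scratch file:

```shell
#!/usr/bin/env bash
# Append "IP NAME" to a hosts file only if NAME is not already listed.
# Hypothetical helper for illustration; FILE defaults to /etc/hosts.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # Match NAME as a whole word that follows whitespace (the hostname column).
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage on a real host (as root):
#   add_host_entry 192.168.0.5 k8s-master01
#   add_host_entry 192.168.0.6 k8s-master02
#   add_host_entry 192.168.0.7 k8s-master03
```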

3.2.3 Install common packages

    ### Run on all hosts
    # The ntp package provides ntpd, which section 3.2.4 relies on
    $ yum -y install epel-release.noarch nfs-utils net-tools bridge-utils \
        ntp ntpdate vim chrony wget lrzsz

3.2.4 Set up time synchronization

Configure k8s-master01 to synchronize its time from public time servers:

    [root@k8s-master01 ~]# systemctl stop ntpd
    [root@k8s-master01 ~]# timedatectl set-timezone Asia/Shanghai
    # Sync once, then write the system time to the hardware clock
    [root@k8s-master01 ~]# ntpdate ntp.aliyun.com && /usr/sbin/hwclock --systohc
    [root@k8s-master01 ~]# vim /etc/ntp.conf
    # If this node loses its network connection, fall back to the local clock
    # so that it can keep serving time to the other nodes in the cluster
    server 127.127.1.0
    fudge 127.127.1.0 stratum 10
    # Comment out the default time servers and use the following instead
    server cn.ntp.org.cn prefer iburst minpoll 4 maxpoll 10
    server ntp.aliyun.com iburst minpoll 4 maxpoll 10
    server time.ustc.edu.cn iburst minpoll 4 maxpoll 10
    server ntp.tuna.tsinghua.edu.cn iburst minpoll 4 maxpoll 10
    [root@k8s-master01 ~]# systemctl start ntpd
    [root@k8s-master01 ~]# systemctl enable ntpd
    [root@k8s-master01 ~]# ntpstat
    synchronised to NTP server (203.107.6.88) at stratum 3
       time correct to within 202 ms
       polling server every 64 s

Configure the other hosts to synchronize their time from k8s-master01:

    ### Run on every host except k8s-master01
    $ systemctl stop ntpd
    $ timedatectl set-timezone Asia/Shanghai
    $ ntpdate k8s-master01 && /usr/sbin/hwclock --systohc
    $ vim /etc/ntp.conf
    # Comment out the default time servers and use the following instead
    server k8s-master01 prefer iburst minpoll 4 maxpoll 10
    $ systemctl start ntpd
    $ systemctl enable ntpd
    $ ntpstat
    synchronised to NTP server (192.168.0.5) at stratum 4
       time correct to within 217 ms
       polling server every 16 s

3.2.5 Disable SELinux and the firewall

    ### Run on all nodes
    # Disable SELinux
    $ sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config
    $ setenforce 0

    # Disable the firewalld firewall
    $ systemctl stop firewalld.service
    $ systemctl disable firewalld.service

3.2.6 System tuning

    ### Run on all nodes
    # Disable swap
    $ swapoff -a
    $ sed -i "s/^[^#].*swap/#&/g" /etc/fstab

    # Enable bridge netfilter
    $ cat > /etc/modules-load.d/k8s.conf << EOF
    overlay
    br_netfilter
    EOF
    $ modprobe overlay && modprobe br_netfilter

    # Set kernel parameters
    $ cat > /etc/sysctl.d/k8s.conf << EOF
    # Forward IPv4 and let iptables see bridged traffic
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    # Enlarge the TCP handshake queues
    net.ipv4.tcp_max_syn_backlog = 10240
    net.core.somaxconn = 10240
    net.ipv4.tcp_syncookies = 1
    # System-wide limit on open file handles
    fs.file-max=1000000
    # ARP cache size
    net.ipv4.neigh.default.gc_thresh1 = 1024
    net.ipv4.neigh.default.gc_thresh2 = 4096
    net.ipv4.neigh.default.gc_thresh3 = 8192
    # Make TCP window and state tracking more lenient
    net.netfilter.nf_conntrack_tcp_be_liberal = 1
    net.netfilter.nf_conntrack_tcp_loose = 1
    # Maximum number of tracked connections, that is, the conntrack entries
    # netfilter can hold in kernel memory at the same time
    net.netfilter.nf_conntrack_max = 10485760
    net.netfilter.nf_conntrack_tcp_timeout_established = 300
    net.netfilter.nf_conntrack_buckets = 655360
    # Maximum number of packets queued when an interface receives packets
    # faster than the kernel can process them
    net.core.netdev_max_backlog = 10000
    # Default: 128. Upper limit on inotify instances per real user ID
    fs.inotify.max_user_instances = 524288
    # Default: 8192. Upper limit on inotify watches per real user ID
    fs.inotify.max_user_watches = 524288
    EOF
    $ sysctl --system

    # Raise the open-file and process limits
    $ ulimit -n 65535
    $ cat >> /etc/security/limits.conf << EOF
    * soft nproc 65535
    * hard nproc 65535
    * soft nofile 65535
    * hard nofile 65535
    EOF
    $ sed -i '/nproc/ s/4096/65535/' /etc/security/limits.d/20-nproc.conf

3.3 Install Docker

    ### Run on all nodes
    # Install Docker
    $ yum install -y yum-utils device-mapper-persistent-data lvm2
    $ yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    $ sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo && yum makecache fast
    $ yum -y install docker-ce-20.10.17

    # Tune the Docker configuration
    $ mkdir -p /etc/docker && cat > /etc/docker/daemon.json <<EOF
    {
      "registry-mirrors": [
        "https://hub-mirror.c.163.com",
        "https://docker.mirrors.ustc.edu.cn",
        "https://p6902cz5.mirror.aliyuncs.com"
      ],
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      },
      "storage-driver": "overlay2",
      "storage-opts": ["overlay2.override_kernel_check=true"],
      "bip": "172.38.16.1/24"
    }
    EOF

    # Start Docker and enable it at boot
    $ systemctl enable docker
    $ systemctl restart docker
    $ docker version

3.4 Install Kubernetes

    ### Run on all Master nodes
    # Configure the yum repository
    $ cat > /etc/yum.repos.d/kubernetes.repo <<EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

    # Install kubeadm, kubelet and kubectl
    $ yum install -y kubelet-1.22.10 kubeadm-1.22.10 kubectl-1.22.10 --disableexcludes=kubernetes --nogpgcheck
    $ systemctl enable --now kubelet

    # Configure kubelet arguments
    $ cat > /etc/sysconfig/kubelet <<EOF
    KUBELET_EXTRA_ARGS="--fail-swap-on=false"
    EOF

To extend the validity period of the issued certificates by modifying the kubeadm source code, see: https://www.yuque.com/wubolive/ops/ugomse

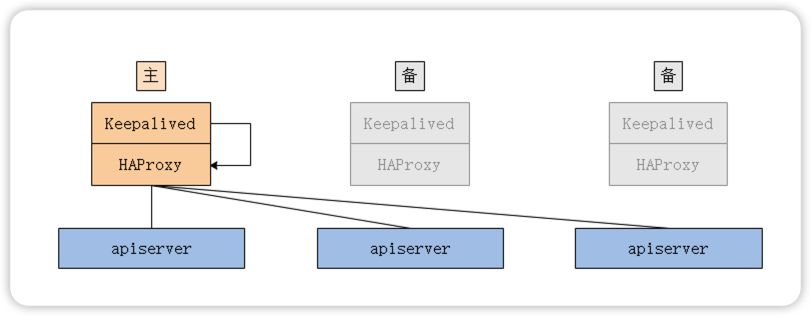

3.5 Configure HA load balancing

With multiple control planes there are also multiple kube-apiserver instances. The HAProxy + Keepalived combination can sit in front of them: HAProxy provides high-performance layer 4 load balancing, and Keepalived keeps the VIP on a healthy node.

The official documentation describes two ways to run them (this guide uses option 2):

- Option 1: run the services on the operating system

- Option 2: run the services as static pods

3.5.1 Configure keepalived

keepalived runs as a static pod: during bootstrap the kubelet starts these processes, so the cluster can use them while it comes up. This is an elegant solution, particularly for the setup described under the stacked etcd topology.

Create the keepalived.conf configuration file:

    ### On k8s-master01:
    $ mkdir -p /etc/keepalived && cat > /etc/keepalived/keepalived.conf <<EOF
    ! /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        router_id k8s-master01
    }
    vrrp_script check_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 3
        weight -2
        fall 10
        rise 2
    }
    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 51
        priority 100
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.0.10
        }
        track_script {
            check_apiserver
        }
    }
    EOF

    ### On k8s-master02:
    $ mkdir -p /etc/keepalived && cat > /etc/keepalived/keepalived.conf <<EOF
    ! /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        router_id k8s-master02
    }
    vrrp_script check_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 3
        weight -2
        fall 10
        rise 2
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 99
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.0.10
        }
        track_script {
            check_apiserver
        }
    }
    EOF

    ### On k8s-master03:
    $ mkdir -p /etc/keepalived && cat > /etc/keepalived/keepalived.conf <<EOF
    ! /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
        router_id k8s-master03
    }
    vrrp_script check_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 3
        weight -2
        fall 10
        rise 2
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface eth0
        virtual_router_id 51
        priority 98
        authentication {
            auth_type PASS
            auth_pass 123456
        }
        virtual_ipaddress {
            192.168.0.10
        }
        track_script {
            check_apiserver
        }
    }
    EOF
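The three files differ only in `router_id`, `state` and `priority`, so they can also be rendered from one template. The sketch below assumes the interface name `eth0` and VIP `192.168.0.10` used in this guide as defaults; `render_keepalived_conf` is a hypothetical helper, not something keepalived ships:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a keepalived.conf for one control plane node.
# Usage: render_keepalived_conf ROUTER_ID STATE PRIORITY [INTERFACE] [VIP]
render_keepalived_conf() {
  local router_id=$1 state=$2 priority=$3
  local iface=${4:-eth0} vip=${5:-192.168.0.10}
  cat <<EOF
! /etc/keepalived/keepalived.conf
! Configuration File for keepalived
global_defs {
    router_id ${router_id}
}
vrrp_script check_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 3
    weight -2
    fall 10
    rise 2
}
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    virtual_router_id 51
    priority ${priority}
    authentication {
        auth_type PASS
        auth_pass 123456
    }
    virtual_ipaddress {
        ${vip}
    }
    track_script {
        check_apiserver
    }
}
EOF
}

# Example: print the configuration for k8s-master02 to stdout.
# On the real host, redirect it to /etc/keepalived/keepalived.conf.
render_keepalived_conf k8s-master02 BACKUP 99
```

Keeping the priorities distinct (100, 99, 98 in this guide) gives VRRP a deterministic election order when the current VIP holder fails.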

Create the health check script:

    ### Run on all Master control nodes
    $ cat > /etc/keepalived/check_apiserver.sh << 'EOF'
    #!/bin/sh

    errorExit() {
        echo "*** $*" 1>&2
        exit 1
    }

    curl --silent --max-time 2 --insecure https://localhost:9443/ -o /dev/null || errorExit "Error GET https://localhost:9443/"
    if ip addr | grep -q 192.168.0.10; then
        curl --silent --max-time 2 --insecure https://192.168.0.10:9443/ -o /dev/null || errorExit "Error GET https://192.168.0.10:9443/"
    fi
    EOF

    # keepalived executes the script directly, so it must be executable
    $ chmod +x /etc/keepalived/check_apiserver.sh

3.5.2 Configure haproxy

    ### Run on all Master management nodes
    $ mkdir -p /etc/haproxy && cat > /etc/haproxy/haproxy.cfg << 'EOF'
    # /etc/haproxy/haproxy.cfg
    #---------------------------------------------------------------------
    # Global settings
    #---------------------------------------------------------------------
    global
        log /dev/log local0
        log /dev/log local1 notice
        daemon

    #---------------------------------------------------------------------
    # common defaults that all the 'listen' and 'backend' sections will
    # use if not designated in their block
    #---------------------------------------------------------------------
    defaults
        mode                    http
        log                     global
        option                  httplog
        option                  dontlognull
        option http-server-close
        option forwardfor       except 127.0.0.0/8
        option                  redispatch
        retries                 1
        timeout http-request    10s
        timeout queue           20s
        timeout connect         5s
        timeout client          20s
        timeout server          20s
        timeout http-keep-alive 10s
        timeout check           10s

    #---------------------------------------------------------------------
    # Haproxy Monitoring panel
    #---------------------------------------------------------------------
    listen admin_status
        bind 0.0.0.0:8888
        mode http
        log 127.0.0.1 local3 err
        stats refresh 5s
        stats uri /admin?stats
        stats realm itnihao\ welcome
        stats auth admin:admin
        stats hide-version
        stats admin if TRUE

    #---------------------------------------------------------------------
    # apiserver frontend which proxys to the control plane nodes
    #---------------------------------------------------------------------
    frontend apiserver
        bind *:9443
        mode tcp
        option tcplog
        default_backend apiserver

    #---------------------------------------------------------------------
    # round robin balancing for apiserver
    #---------------------------------------------------------------------
    backend apiserver
        option httpchk GET /healthz
        http-check expect status 200
        mode tcp
        option ssl-hello-chk
        balance roundrobin
        server k8s-master01 192.168.0.5:6443 check
        server k8s-master02 192.168.0.6:6443 check
        server k8s-master03 192.168.0.7:6443 check
    EOF

3.5.3 Run them as static Pods

For this setup, two manifest files need to be created in /etc/kubernetes/manifests (create the directory first).

    ### Create on k8s-master01 only
    $ mkdir -p /etc/kubernetes/manifests

    # keepalived manifest
    $ cat > /etc/kubernetes/manifests/keepalived.yaml << 'EOF'
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      name: keepalived
      namespace: kube-system
    spec:
      containers:
      - image: osixia/keepalived:2.0.17
        name: keepalived
        resources: {}
        securityContext:
          capabilities:
            add:
            - NET_ADMIN
            - NET_BROADCAST
            - NET_RAW
        volumeMounts:
        - mountPath: /usr/local/etc/keepalived/keepalived.conf
          name: config
        - mountPath: /etc/keepalived/check_apiserver.sh
          name: check
      hostNetwork: true
      volumes:
      - hostPath:
          path: /etc/keepalived/keepalived.conf
        name: config
      - hostPath:
          path: /etc/keepalived/check_apiserver.sh
        name: check
    status: {}
    EOF

    # haproxy manifest
    $ cat > /etc/kubernetes/manifests/haproxy.yaml << 'EOF'
    apiVersion: v1
    kind: Pod
    metadata:
      name: haproxy
      namespace: kube-system
    spec:
      containers:
      - image: haproxy:2.1.4
        name: haproxy
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: localhost
            path: /healthz
            port: 9443
            scheme: HTTPS
        volumeMounts:
        - mountPath: /usr/local/etc/haproxy/haproxy.cfg
          name: haproxyconf
          readOnly: true
      hostNetwork: true
      volumes:
      - hostPath:
          path: /etc/haproxy/haproxy.cfg
          type: FileOrCreate
        name: haproxyconf
    status: {}
    EOF

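3.6 Deploy the Kubernetes cluster

3.6.1 Prepare the images

Images on k8s.gcr.io often cannot be downloaded from within mainland China, so they can be pulled from a domestic mirror registry instead, for example the Alibaba Cloud mirror at registry.aliyuncs.com/google_containers.

    ### Run on all Master control nodes
    $ kubeadm config images pull --kubernetes-version=v1.22.10 --image-repository=registry.aliyuncs.com/google_containers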
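To see what the `--image-repository` flag changes, the sketch below maps an upstream image reference to its mirror name. It assumes the mirror keeps every image directly under the repository path, so only the last path component of the upstream name is retained; that layout is an assumption about the mirror, and `mirror_image` is a hypothetical helper rather than a kubeadm feature:

```shell
#!/usr/bin/env bash
# Map an upstream image reference to the mirror registry.
# Assumption: the mirror stores images flat, as <repo>/<name>:<tag>.
mirror_image() {
  local image=$1 repo=${2:-registry.aliyuncs.com/google_containers}
  # ${image##*/} drops everything up to and including the last '/'.
  echo "${repo}/${image##*/}"
}

# Example with one of the control plane images:
mirror_image k8s.gcr.io/kube-apiserver:v1.22.10

# On a host with kubeadm installed, the full upstream list can be mapped with:
#   kubeadm config images list --kubernetes-version=v1.22.10 | while read -r img; do
#     mirror_image "$img"
#   done
```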

3.6.2 Prepare the init configuration file

    ### Run on k8s-master01
    $ kubeadm config print init-defaults > kubeadm-init.yaml
    $ vim kubeadm-init.yaml
    apiVersion: kubeadm.k8s.io/v1beta3
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.0.5
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      imagePullPolicy: IfNotPresent
      name: k8s-master01
      taints: null
    ---
    controlPlaneEndpoint: "192.168.0.10:9443"
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: 1.22.10
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
    scheduler: {}

Configuration notes:

- localAPIEndpoint.advertiseAddress: the IP address the local apiserver listens on.

- localAPIEndpoint.bindPort: the port the local apiserver listens on.

- controlPlaneEndpoint: the control plane entry point (load balancer VIP address + load balancer port).

- imageRepository: the image registry to use when deploying the cluster.

- kubernetesVersion: the Kubernetes version to deploy.

3.6.3 Initialize the control plane node

While initializing the control plane, kubeadm writes the configuration files that the cluster components need into /etc/kubernetes, where they can be used for reference.

    ### Run on k8s-master01
    # kubeadm here was installed from source, so the kubelet service has to be configured first.
    $ kubeadm init phase kubelet-start --config kubeadm-init.yaml

    # Initialize the Kubernetes control plane
    $ kubeadm init --config kubeadm-init.yaml --upload-certs
    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of the control-plane node running the following command on each as root:

      kubeadm join 192.168.0.10:9443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:b30e986e80423da7b6b1cbf43ece58598074b2a8b86295517438942e9a47ab0d \
        --control-plane --certificate-key 57360054608fa9978864124f3195bc632454be4968b5ccb577f7bb9111d96597

    Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
    As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
    "kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.0.10:9443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:b30e986e80423da7b6b1cbf43ece58598074b2a8b86295517438942e9a47ab0d

3.6.4 Join the other nodes to the cluster

Join the control plane nodes:

    ### Run on the other two Master control nodes:
    $ kubeadm join 192.168.0.10:9443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:b30e986e80423da7b6b1cbf43ece58598074b2a8b86295517438942e9a47ab0d \
        --control-plane --certificate-key 57360054608fa9978864124f3195bc632454be4968b5ccb577f7bb9111d96597

Join the worker nodes (optional):

    ### If there are Node (worker) nodes, join them with:
    $ kubeadm join 192.168.0.10:9443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:b30e986e80423da7b6b1cbf43ece58598074b2a8b86295517438942e9a47ab0d
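The bootstrap token in this guide has a 24-hour TTL, so nodes added later need a fresh join command; `kubeadm token create --print-join-command` prints one on a control plane node. The hash itself can also be recomputed from the cluster CA with the openssl pipeline given in the kubeadm documentation. The wrapper below is a sketch that assumes an RSA CA key (the kubeadm default) and `openssl` on the PATH; the function name `ca_cert_hash` is made up for this example:

```shell
#!/usr/bin/env bash
# Print the value for --discovery-token-ca-cert-hash from a CA certificate.
# Assumptions: RSA CA key, openssl available; the path defaults to the kubeadm CA.
ca_cert_hash() {
  local ca_cert=${1:-/etc/kubernetes/pki/ca.crt}
  local digest
  # SHA-256 over the DER-encoded public key of the CA certificate.
  digest=$(openssl x509 -pubkey -noout -in "$ca_cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')
  echo "sha256:${digest}"
}

# Usage on a control plane node:
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
```

The printed value should match the `sha256:...` string shown in the `kubeadm init` output for the same cluster.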

Copy the keepalived and haproxy manifests to the other Master control nodes:

    $ scp /etc/kubernetes/manifests/{haproxy.yaml,keepalived.yaml} root@k8s-master02:/etc/kubernetes/manifests/
    $ scp /etc/kubernetes/manifests/{haproxy.yaml,keepalived.yaml} root@k8s-master03:/etc/kubernetes/manifests/

Remove the master taint (optional):

    $ kubectl taint nodes --all node-role.kubernetes.io/master-

3.6.5 Verify the cluster status

    ### Can be run on any Master control node
    # Configure kubectl credentials
    $ mkdir -p $HOME/.kube
    $ cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

    # Check node status
    $ kubectl get nodes
    NAME           STATUS     ROLES                  AGE     VERSION
    k8s-master01   NotReady   control-plane,master   13m     v1.22.10
    k8s-master02   NotReady   control-plane,master   3m55s   v1.22.10
    k8s-master03   NotReady   control-plane,master   113s    v1.22.10

    # Check pod status
    $ kubectl get pod -n kube-system
    NAMESPACE     NAME                                   READY   STATUS    RESTARTS   AGE
    kube-system   coredns-7f6cbbb7b8-96hp9               0/1     Pending   0          18m
    kube-system   coredns-7f6cbbb7b8-kfmnn               0/1     Pending   0          18m
    kube-system   etcd-k8s-master01                      1/1     Running   0          18m
    kube-system   etcd-k8s-master02                      1/1     Running   0          9m21s
    kube-system   etcd-k8s-master03                      1/1     Running   0          7m18s
    kube-system   haproxy-k8s-master01                   1/1     Running   0          18m
    kube-system   haproxy-k8s-master02                   1/1     Running   0          3m27s
    kube-system   haproxy-k8s-master03                   1/1     Running   0          3m16s
    kube-system   keepalived-k8s-master01                1/1     Running   0          18m
    kube-system   keepalived-k8s-master02                1/1     Running   0          3m27s
    kube-system   keepalived-k8s-master03                1/1     Running   0          3m16s
    kube-system   kube-apiserver-k8s-master01            1/1     Running   0          18m
    kube-system   kube-apiserver-k8s-master02            1/1     Running   0          9m24s
    kube-system   kube-apiserver-k8s-master03            1/1     Running   0          7m23s
    kube-system   kube-controller-manager-k8s-master01   1/1     Running   0          18m
    kube-system   kube-controller-manager-k8s-master02   1/1     Running   0          9m24s
    kube-system   kube-controller-manager-k8s-master03   1/1     Running   0          7m22s
    kube-system   kube-proxy-cvdlr                       1/1     Running   0          7m23s
    kube-system   kube-proxy-gnl7t                       1/1     Running   0          9m25s
    kube-system   kube-proxy-xnrt7                       1/1     Running   0          18m
    kube-system   kube-scheduler-k8s-master01            1/1     Running   0          18m
    kube-system   kube-scheduler-k8s-master02            1/1     Running   0          9m24s
    kube-system   kube-scheduler-k8s-master03            1/1     Running   0          7m22s

    # Check the validity period of the Kubernetes certificates
    $ kubeadm certs check-expiration
    CERTIFICATE                EXPIRES                  RESIDUAL TIME   CERTIFICATE AUTHORITY   EXTERNALLY MANAGED
    admin.conf                 Oct 25, 2122 07:40 UTC   99y             ca                      no
    apiserver                  Oct 25, 2122 07:40 UTC   99y             ca                      no
    apiserver-etcd-client      Oct 25, 2122 07:40 UTC   99y             etcd-ca                 no
    apiserver-kubelet-client   Oct 25, 2122 07:40 UTC   99y             ca                      no
    controller-manager.conf    Oct 25, 2122 07:40 UTC   99y             ca                      no
    etcd-healthcheck-client    Oct 25, 2122 07:40 UTC   99y             etcd-ca                 no
    etcd-peer                  Oct 25, 2122 07:40 UTC   99y             etcd-ca                 no
    etcd-server                Oct 25, 2122 07:40 UTC   99y             etcd-ca                 no
    front-proxy-client         Oct 25, 2122 07:40 UTC   99y             front-proxy-ca          no
    scheduler.conf             Oct 25, 2122 07:40 UTC   99y             ca                      no

    CERTIFICATE AUTHORITY   EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
    ca                      Oct 22, 2032 07:40 UTC   99y             no
    etcd-ca                 Oct 22, 2032 07:40 UTC   99y             no
    front-proxy-ca          Oct 22, 2032 07:40 UTC   99y             no

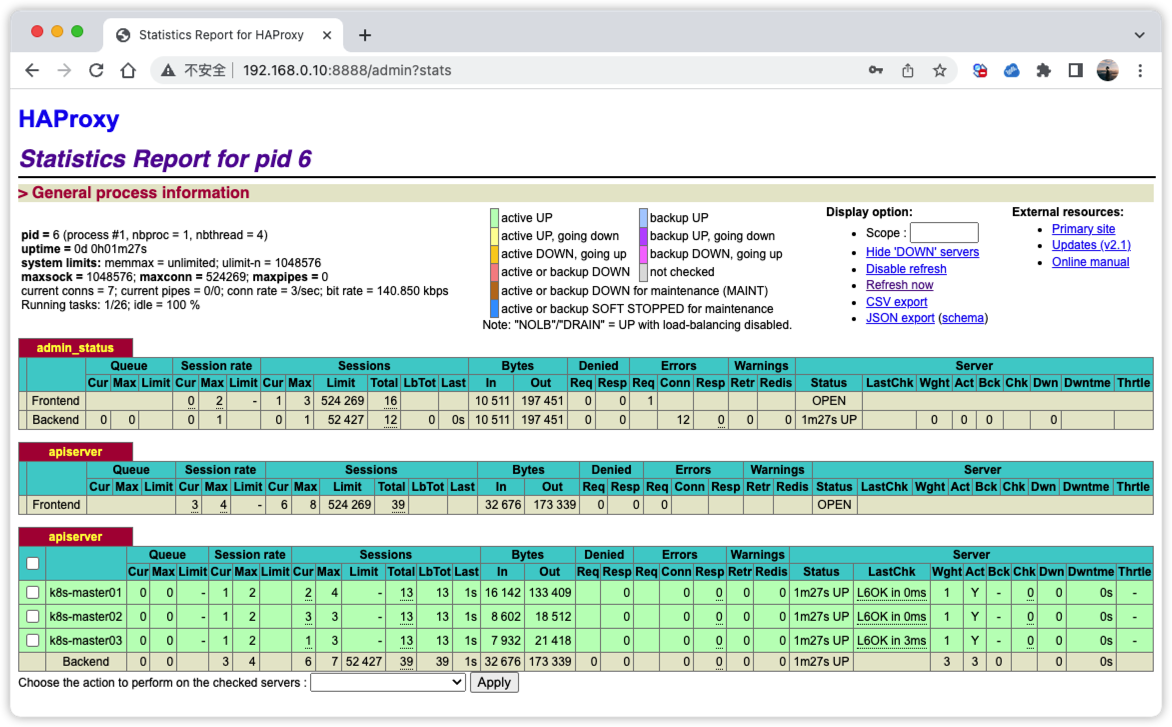

Check the cluster status in the HAProxy console

Visit http://192.168.0.10:8888/admin?stats; the username and password are both admin.

3.6.6 Install the CNI plugin (Calico)

Calico is an open-source networking solution that provides basic Pod networking and network policy.

Official documentation: https://projectcalico.docs.tigera.io/getting-started/kubernetes/quickstart

    ### Run on any Master control node
    # Download the latest manifest
    $ curl https://docs.projectcalico.org/manifests/calico.yaml -O

    # Or download a specific version (optional)
    $ curl https://raw.githubusercontent.com/projectcalico/calico/v3.24.0/manifests/calico.yaml -O

    # Deploy Calico
    $ kubectl apply -f calico.yaml

    # Verify the installation
    $ kubectl get pod -n kube-system | grep calico
    calico-kube-controllers-86c9c65c67-j7pv4   1/1   Running   0   17m
    calico-node-8mzpk                          1/1   Running   0   17m
    calico-node-tkzs2                          1/1   Running   0   17m
    calico-node-xbwvp                          1/1   Running   0   17m
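Nodes report NotReady and the coredns Pods stay Pending until a CNI plugin is running, so node status is worth re-checking once the Calico Pods are up. A small sketch of that check as a filter over `kubectl get nodes` output (`not_ready_nodes` is a hypothetical helper; it treats any STATUS other than exactly `Ready`, including `Ready,SchedulingDisabled`, as not ready):

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on a host with cluster access:
#   kubectl get nodes | not_ready_nodes
# An empty result means every node is Ready.
```

kubectl can also block until the condition holds, for example `kubectl wait --for=condition=Ready nodes --all --timeout=300s`.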

4. Cluster tuning and add-on installation

4.1 Cluster tuning

4.1.1 Change the NodePort port range (optional)

    ### Run on all Master management nodes
    $ sed -i '/- --secure-port=6443/a\    - --service-node-port-range=1-32767' /etc/kubernetes/manifests/kube-apiserver.yaml
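A range that starts at 1 lets a NodePort Service ask for ports that host services in this guide already use, such as 6443, 9443, 8888 and 10250. The sketch below checks whether given ports fall inside a `LOW-HIGH` range; `port_in_range` is an illustrative helper, and the port list is simply the set of ports mentioned in this guide plus SSH:

```shell
#!/usr/bin/env bash
# Succeed (exit 0) when PORT lies inside RANGE, written as LOW-HIGH.
port_in_range() {
  local port=$1 range=$2
  local low=${range%-*} high=${range#*-}
  [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}

# Host ports used in this guide that a 1-32767 NodePort range would overlap.
for p in 22 2379 2380 6443 8888 9443 10250 10257 10259; do
  if port_in_range "$p" 1-32767; then
    echo "port $p is inside the NodePort range"
  fi
done
```

With the default range 30000-32767 none of these ports overlap, which is the trade-off to weigh before widening it.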

4.1.2 Fix the abnormal output of kubectl get cs

    ### Run on all Master management nodes
    $ sed -i 's/^[^#].*--port=0$/#&/g' /etc/kubernetes/manifests/{kube-scheduler.yaml,kube-controller-manager.yaml}

    # Verify
    $ kubectl get cs
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS    MESSAGE                         ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health":"true","reason":""}

4.1.3 Fix scheduler and controller-manager metrics not showing up in monitoring

    ### Run on all Master management nodes
    $ sed -i 's#bind-address=127.0.0.1#bind-address=0.0.0.0#g' /etc/kubernetes/manifests/kube-controller-manager.yaml
    $ sed -i 's#bind-address=127.0.0.1#bind-address=0.0.0.0#g' /etc/kubernetes/manifests/kube-scheduler.yaml

4.2 Install Metrics Server

Metrics Server is a commonly used Kubernetes add-on. Much like the top command, it shows the CPU and memory usage of Nodes and Pods. It collects metrics every 15 seconds from the kubelet on each node and scales to clusters of up to 5000 nodes.

Reference: https://github.com/kubernetes-sigs/metrics-server

    ### Run on any Master management node
    $ wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml -O metrics-server.yaml

    # Edit the configuration
    $ vim metrics-server.yaml
    ......
        spec:
          containers:
          - args:
            - --cert-dir=/tmp
            - --secure-port=4443
            - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
            - --kubelet-use-node-status-port
            - --metric-resolution=15s
            - --kubelet-insecure-tls # Do not verify the CA of the serving certificates presented by the kubelets
            image: bitnami/metrics-server:0.6.1 # Changed to an image hosted on docker.io
            imagePullPolicy: IfNotPresent
    ......

    # Deploy metrics-server
    $ kubectl apply -f metrics-server.yaml

    # Watch the startup status
    $ kubectl get pod -n kube-system -l k8s-app=metrics-server -w
    NAME                             READY   STATUS    RESTARTS   AGE
    metrics-server-655d65c95-lvb7z   1/1     Running   0          103s

    # Check cluster resource usage
    $ kubectl top nodes
    NAME           CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    k8s-master01   193m         4%     2144Mi          27%
    k8s-master02   189m         4%     1858Mi          23%
    k8s-master03   268m         6%     1934Mi          24%

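5. Appendix

5.1 Reset a node (dangerous)

If kubeadm init or kubeadm join fails while deploying a node, the node can be reset with the commands below.

Note: a reset returns the node to its pre-deployment state. Do not reset a cluster that is working normally, because doing so causes an unrecoverable cluster failure.

    $ kubeadm reset -f
    $ ipvsadm --clear
    $ iptables -F && iptables -X && iptables -Z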

5.2 Common query commands

    # List tokens
    $ kubeadm token list
    TOKEN                     TTL   EXPIRES                USAGES                   DESCRIPTION                                           EXTRA GROUPS
    abcdef.0123456789abcdef   22h   2022-10-26T07:43:01Z   authentication,signing   <none>                                                system:bootstrappers:kubeadm:default-node-token
    jgqg88.6mskuadei41o0s2d   40m   2022-10-25T09:43:01Z   <none>                   Proxy for managing TTL for the kubeadm-certs secret   <none>

    # Check node status
    $ kubectl get nodes
    NAME           STATUS   ROLES                  AGE   VERSION
    k8s-master01   Ready    control-plane,master   81m   v1.22.10
    k8s-master02   Ready    control-plane,master   71m   v1.22.10
    k8s-master03   Ready    control-plane,master   69m   v1.22.10

    # Check certificate expiration
    $ kubeadm certs check-expiration
    CERTIFICATE                EXPIRES                  RESIDUAL TIME   CERTIFICATE AUTHORITY   EXTERNALLY MANAGED
    admin.conf                 Oct 25, 2122 07:40 UTC   99y             ca                      no
    apiserver                  Oct 25, 2122 07:40 UTC   99y             ca                      no
    apiserver-etcd-client      Oct 25, 2122 07:40 UTC   99y             etcd-ca                 no
    apiserver-kubelet-client   Oct 25, 2122 07:40 UTC   99y             ca                      no
    controller-manager.conf    Oct 25, 2122 07:40 UTC   99y             ca                      no
    etcd-healthcheck-client    Oct 25, 2122 07:40 UTC   99y             etcd-ca                 no
    etcd-peer                  Oct 25, 2122 07:40 UTC   99y             etcd-ca                 no
    etcd-server                Oct 25, 2122 07:40 UTC   99y             etcd-ca                 no
    front-proxy-client         Oct 25, 2122 07:40 UTC   99y             front-proxy-ca          no
    scheduler.conf             Oct 25, 2122 07:40 UTC   99y             ca                      no

    CERTIFICATE AUTHORITY   EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
    ca                      Oct 22, 2032 07:40 UTC   99y             no
    etcd-ca                 Oct 22, 2032 07:40 UTC   99y             no
    front-proxy-ca          Oct 22, 2032 07:40 UTC   99y             no

    # Print the default kubeadm control plane init configuration
    $ kubeadm config print init-defaults
    apiVersion: kubeadm.k8s.io/v1beta3
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 1.2.3.4
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      imagePullPolicy: IfNotPresent
      name: node
      taints: null
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta3
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns: {}
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: k8s.gcr.io
    kind: ClusterConfiguration
    kubernetesVersion: 1.22.0
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/12
    scheduler: {}

    # Check Pod status in the kube-system namespace
    $ kubectl get pod --namespace=kube-system
    NAME                                       READY   STATUS    RESTARTS   AGE
    calico-kube-controllers-86c9c65c67-j7pv4   1/1     Running   0          47m
    calico-node-8mzpk                          1/1     Running   0          47m
    calico-node-tkzs2                          1/1     Running   0          47m
    calico-node-xbwvp                          1/1     Running   0          47m
    coredns-7f6cbbb7b8-96hp9                   1/1     Running   0          82m
    coredns-7f6cbbb7b8-kfmnn                   1/1     Running   0          82m
    etcd-k8s-master01                          1/1     Running   0          82m
    etcd-k8s-master02                          1/1     Running   0          72m
    etcd-k8s-master03                          1/1     Running   0          70m
    haproxy-k8s-master01                       1/1     Running   0          36m
    haproxy-k8s-master02                       1/1     Running   0          67m
    haproxy-k8s-master03                       1/1     Running   0          66m
    keepalived-k8s-master01                    1/1     Running   0          82m
    keepalived-k8s-master02                    1/1     Running   0          67m
    keepalived-k8s-master03                    1/1     Running   0          66m
    kube-apiserver-k8s-master01                1/1     Running   0          82m
    kube-apiserver-k8s-master02                1/1     Running   0          72m
    kube-apiserver-k8s-master03                1/1     Running   0          70m
    kube-controller-manager-k8s-master01       1/1     Running   0          23m
    kube-controller-manager-k8s-master02       1/1     Running   0          23m
    kube-controller-manager-k8s-master03       1/1     Running   0          23m
    kube-proxy-cvdlr                           1/1     Running   0          70m
    kube-proxy-gnl7t                           1/1     Running   0          72m
    kube-proxy-xnrt7                           1/1     Running   0          82m
    kube-scheduler-k8s-master01                1/1     Running   0          23m
    kube-scheduler-k8s-master02                1/1     Running   0          23m
    kube-scheduler-k8s-master03                1/1     Running   0          23m
    metrics-server-5786d84b7c-5v4rv            1/1     Running   0          8m38s
