【转载】 机器学习的高维数据可视化技术(t-SNE 介绍) 外文博客原文:How t-SNE works and Dimensionality Reduction

原文地址:

https://www.displayr.com/using-t-sne-to-visualize-data-before-prediction/

该文是网上传的比较多的一个 t-SNE 技术介绍的博客,原文是英文,国内的很多博客将其翻译成中文,这里直接将原文转过来了,以备以后学习使用时查找。

========================================

t-SNE is a machine learning technique for dimensionality reduction that helps you to identify relevant patterns. The main advantage of t-SNE is the ability to preserve local structure. This means, roughly, that points which are close to one another in the high-dimensional data set will tend to be close to one another in the chart. t-SNE also produces beautiful looking visualizations.

When setting up a predictive model, the first step should always be to understand the data. Although scanning raw data and calculating basic statistics can lead to some insights, nothing beats a chart. However, fitting multiple dimensions of data into a simple chart is always a challenge (dimensionality reduction). This is where t-SNE (or, t-distributed stochastic neighbor embedding for long) comes in.

In this blog post, I explain how t-SNE works, and how to conduct and interpret your own t-SNE.

The t-SNE algorithm explained

This post is about how to use t-SNE so I'll be brief with the details here. You can easily skip this section and still produce beautiful visualizations.

The t-SNE algorithm models the probability distribution of neighbors around each point. Here, the term neighbors refers to the set of points which are closest to each point. In the original, high-dimensional space this is modeled as a Gaussian distribution. In the 2-dimensional output space this is modeled as a t-distribution. The goal of the procedure is to find a mapping onto the 2-dimensional space that minimizes the differences between these two distributions over all points. The fatter tails of a t-distribution compared to a Gaussian help to spread the points more evenly in the 2-dimensional space.

The main parameter controlling the fitting is called perplexity. Perplexity is roughly equivalent to the number of nearest neighbors considered when matching the original and fitted distributions for each point. A low perplexity means we care about local scale and focus on the closest other points. High perplexity takes more of a "big picture" approach.

Because the distributions are distance based, all the data must be numeric. You should convert categorical variables to numeric ones by binary encoding or a similar method. It is also often useful to normalize the data, so each variable is on the same scale. This avoids variables with a larger numeric range dominating the analysis.

Note that t-SNE only works with the data it is given. It does not produce a model that you can then apply to new data.

t-SNE visualizations

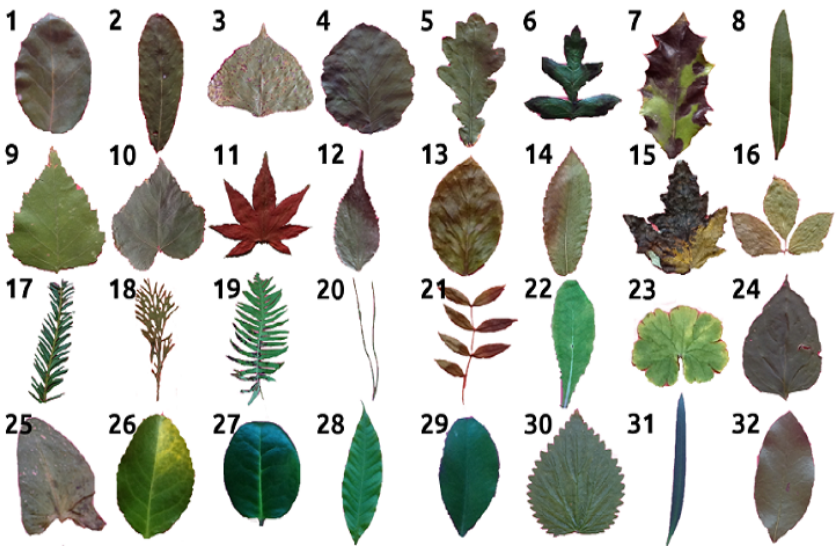

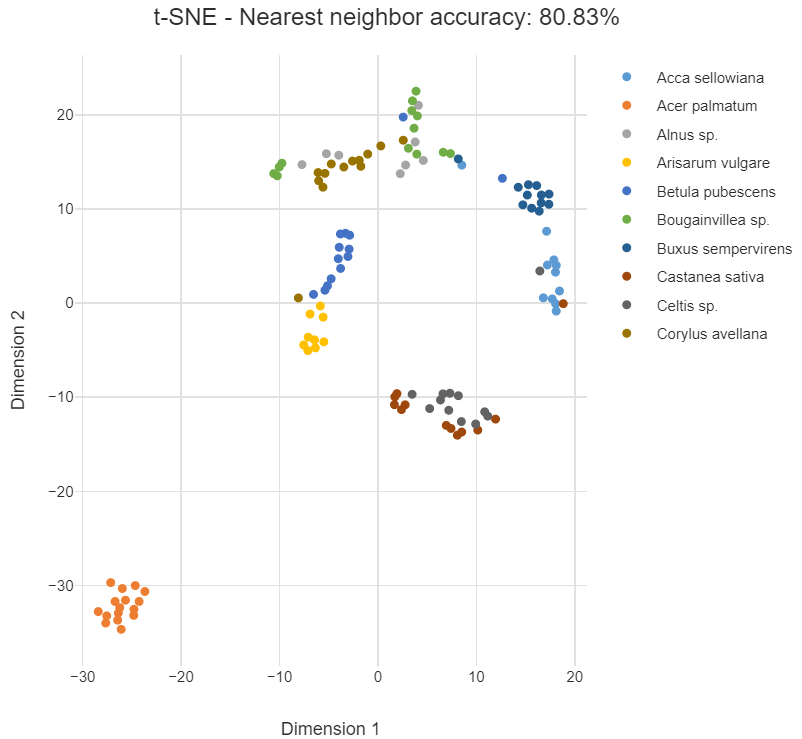

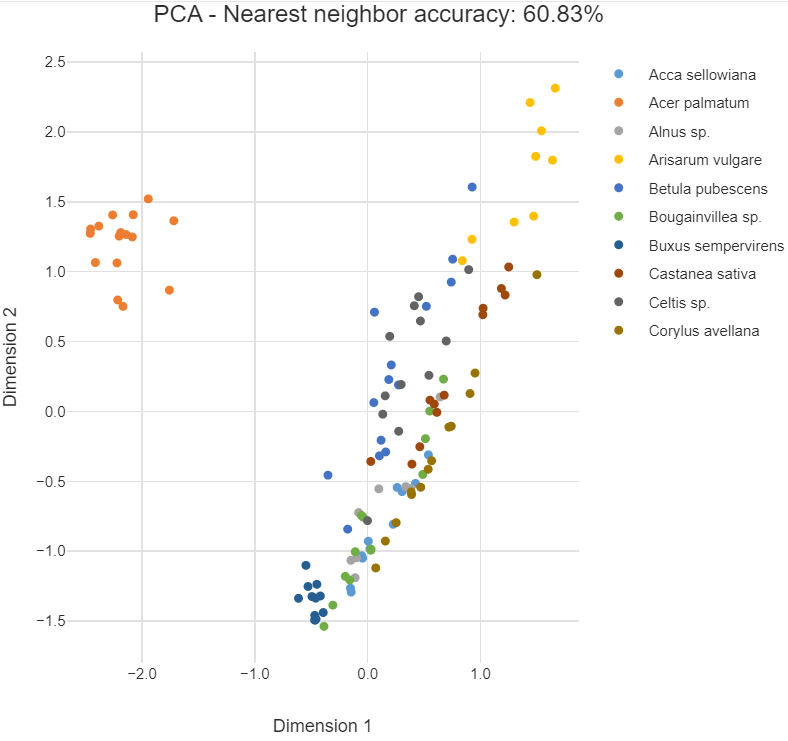

The first data set I am going to use contains the classification of 10 different types of leaf based on their physical characteristics. In this case t-SNE takes as input 14 numeric variables. These include the elongation and aspect ratio of the leaves. The following chart shows the 2-dimensional output. The species of the plant determines the labels (and colors) of the points.

The data points for the species Acer palmatum form a cluster of orange points in the lower left. This indicates that those leaves are quite distinct from the leaves of the other species. The categories in this example are generally well grouped. Points from the same species (same color) tend to be grouped close to one another. However, in the middle points from Castanea sativa and Celtis sp. overlap, implying that they are similar.

The nearest neighbor accuracy gives the probability that a random point has the same species as its closest neighbor. This would be close to 100% if the points were perfectly grouped according to their species. A high nearest neighbor accuracy implies that the data can be cleanly separated into groups.

Perplexity

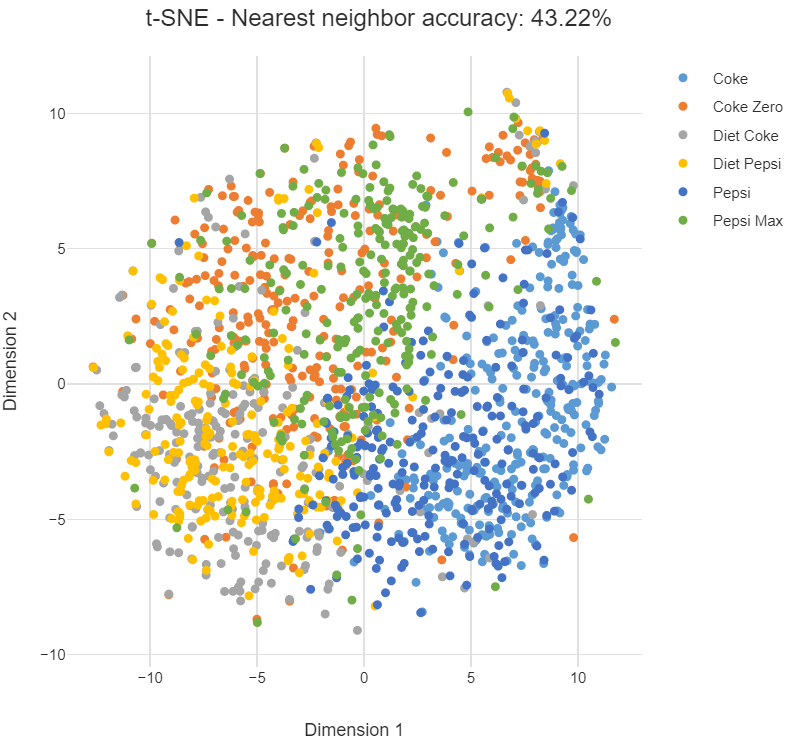

Next, I perform a similar analysis with cola brand data. In this example, the data corresponds to whether or not people in a survey associated 30 or so attributes with the different cola brands. To demonstrate the impact of perplexity, I start by setting it to a low value of 2. The mapping of each point considers only its very closest neighbors. We tend to see many small groups of a few points.

Now I'll rerun the t-SNE with a high perplexity of 100. Below we see the points are more evenly spread out, as though they are less-strongly attracted to each other.

In either case, the cola data is less separable than the leaves. Although there are regions where one brand is more concentrated, there are no clear boundaries.

Note that there is no "correct" value for perplexity, although numbers in the range from 5 to 50 often produce the most appealing output. Within this range of perplexity, t-SNE is known for being relatively robust.

Insights into prediction

Measuring the distances or angles between points in these charts do not allow us to deduce anything specific and quantitative about the data. So is there more to this than pretty visualizations? Absolutely yes.

Discovering patterns at an early stage helps to guide the next steps of data science. If categories are well-separated by t-SNE, machine learning is likely to be able to find a mapping from an unseen new data point to its category. Given the right prediction algorithm, we can then expect to achieve high accuracy.

In the Acer palmatum example above one category is isolated. This can mean that if all we want to do is distinguish this category from the remainder, a simple model will suffice.

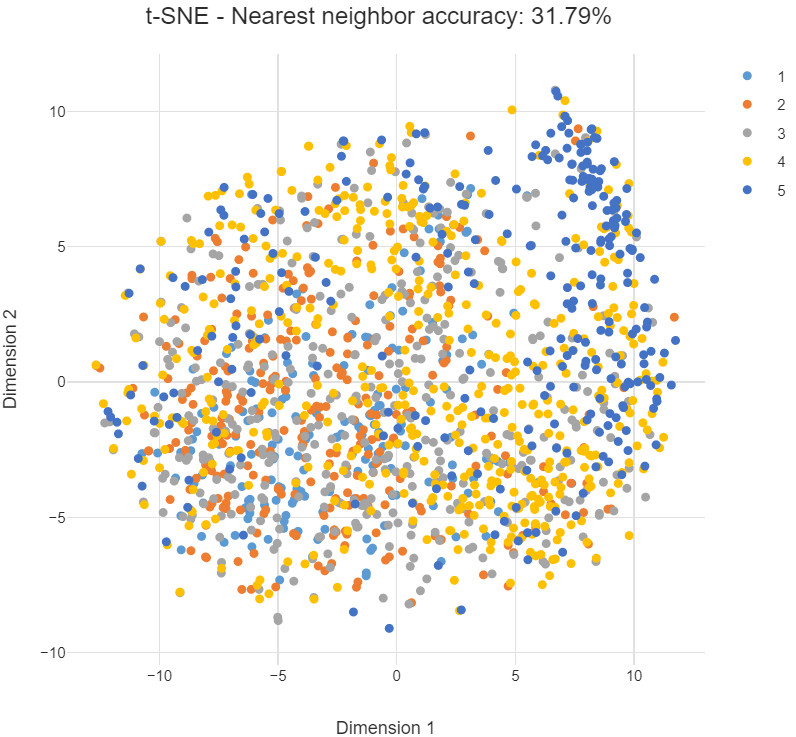

In contrast, if the categories are overlapping, machine learning may not be so successful. At the very least you can expect to have to work harder and be more creative to make decent predictions. This is the case below, which is the same as the previous plot except that now we are grouping by the strength of preference for a brand (on a scale from 1 to 5). The fact that the categories are more diffuse suggests that strength of preference will be harder to predict than cola brand. The nearest neighbor accuracy is also lower.

Comparison to PCA

It's natural to ask how t-SNE compares to other dimension reduction techniques. The most popular of these is principal components analysis (PCA). PCA finds new dimensions that explain most of the variance in the data. It is best at positioning those points that are far apart from each other because they are the drivers of the variance.

The chart below plots the first 2 dimensions of PCA for the leaf data. We see that Acer palmatum is also isolated but the other categories are more diffuse. This is because PCA cares relatively little about local neighbors. It is also a linear method, meaning that if the relationship between the variables is nonlinear it performs poorly. Such an example is where the data are on the surface of a sphere in 3 dimensions. All is not lost, however, as PCA is more useful than t-SNE for compressing data to create a smaller number of features for input to predictive algorithms.

Summary

t-SNE is a user-friendly method for visualizing high dimensional space. It often produces more insightful charts than the alternatives. Next time you have new data to analyze, try t-SNE first and see where it leads you!

=======================================

【转载】 机器学习的高维数据可视化技术(t-SNE 介绍) 外文博客原文:How t-SNE works and Dimensionality Reduction的更多相关文章

- 前端er必须掌握的数据可视化技术

又是一月结束,打工人准时准点的汇报工作如期和大家见面啦.提到汇报,必不可少的一部分就是数据的汇总.分析. 作为一名合格的社会人,我们每天都在工作.生活.学习中和数字打交道.小到量化的工作内容,大到具体 ...

- 新鲜:阿里云的DataV数据可视化技术可以用起来

直接通过拖拽+关联的方式就可以比较方便的做出下面这种大屏展示数据的界面 只要阿里云上购买DataV数据可视化套件(https://data.aliyun.com/experience/case8? ...

- 用Python的Plotly画出炫酷的数据可视化(含各类图介绍,附代码)

前言 本文的文字及图片来源于网络,仅供学习.交流使用,不具有任何商业用途,版权归原作者所有,如有问题请及时联系我们以作处理. 作者: 我被狗咬了 在谈及数据可视化的时候,我们通常都会使用到matplo ...

- 数据可视化 gojs 简单使用介绍

目录 1. gojs 简介 2. gojs 应用场景 3. 为什么选用 gojs: 4. gojs 上手指南 5. 小技巧(非常实用哦) 6. 实践:实现节点分组关系可视化交互图 最后 本文是关于如何 ...

- iOS开发数据持久化技术02——plist介绍

有疑问的请加qq交流群:390438081 我的QQ:604886384(注明来意) 微信:niuting823 1. 简单介绍:属性列表是一种xml格式的文件.扩展名.plist: 2. 特性:pl ...

- 自动驾驶汽车数据不再封闭,Uber 开源新的数据可视化系统

日前,Uber 开源了基于 web 的自动驾驶可视化系统(AVS),称该系统为自动驾驶行业带来理解和共享数据的新方式.AVS 由Uber旗下负责自动驾驶汽车研发的技术事业群(ATG)开发,目前该系统已 ...

- 地理数据可视化:Simple,Not Easy

如果要给2015年的地理信息行业打一个标签,地理大数据一定是其中之一.在信息技术飞速发展的今天,“大数据”作为一种潮流铺天盖地的席卷了各行各业,从央视的春运迁徙图到旅游热点预测,从大数据工程师奇货可居 ...

- Android实现数据存储技术

转载:Android实现数据存储技术 本文介绍Android中的5种数据存储方式. 数据存储在开发中是使用最频繁的,在这里主要介绍Android平台中实现数据存储的5种方式,分别是: 1 使用Shar ...

- Python调用matplotlib实现交互式数据可视化图表案例

交互式的数据可视化图表是 New IT 新技术的一个应用方向,在过去,用户要在网页上查看数据,基本的实现方式就是在页面上显示一个表格出来,的而且确,用表格的方式来展示数据,显示的数据量会比较大,但是, ...

- PoPo数据可视化周刊第4期

PoPo数据可视化 聚焦于Web数据可视化与可视化交互领域,发现可视化领域有意思的内容.不想错过可视化领域的精彩内容, 就快快关注我们吧 :) 微信号:popodv_com 由于国庆节的原因,累计 ...

随机推荐

- 别想宰我,怎么查看云厂商是否超卖?详解 cpu steal time

据说有些云厂商会超卖,宿主有 96 个核心,结果卖出去 100 多个 vCPU,如果这些虚机负载都不高,大家相安无事,如果这些虚机同时运行一些高负载的任务,相互之间就会抢占 CPU,对应用程序有较大影 ...

- Nuxt 3组件开发与管理

title: Nuxt 3组件开发与管理 date: 2024/6/20 updated: 2024/6/20 author: cmdragon excerpt: 摘要:本文深入探讨了Nuxt 3的组 ...

- python重拾第十一天-REDIS缓存数据库

缓存数据库介绍 NoSQL(NoSQL = Not Only SQL ),意即"不仅仅是SQL",泛指非关系型的数据库,随着互联网web2.0网站的兴起,传统的关系数据库在应付we ...

- Kubernetes(七)数据存储

数据存储 容器的生命周期可能很短,会被频繁地创建和销毁.容器在销毁时,保存在容器中的数据也会被清除.这种结果对用户来说,在某些情况下是不乐意看到的.为了持久化保存容器的数据,kubernetes引入了 ...

- Swin Transformer:最佳论文,准确率和性能双佳的视觉Transformer | ICCV 2021

论文提出了经典的Vision Transormer模型Swin Transformer,能够构建层级特征提高任务准确率,而且其计算复杂度经过各种加速设计,能够与输入图片大小成线性关系.从实验结果来看, ...

- 全志科技T3国产工业评估板规格书(四核ARM Cortex-A7,主频1.2GHz)

1 评估板简介 创龙科技TLT3-EVM是一款基于全志科技T3处理器设计的4核ARM Cortex-A7高性能低功耗国产评估板,每核主频高达1.2GHz,由核心板和评估底板组成. 评估板接口资源丰富, ...

- Swagger注解说明

常用注解: - @Api()用于类: 表示标识这个类是swagger的资源 - @ApiOperation()用于方法: 表示一个http请求的操作 - @ApiParam()用于方法,参数,字段说明 ...

- 基于vsftpd搭建项目文件服务器

vsftpd 是"very secure FTP daemon"的缩写,安全性是它的一个最大的特点.vsftpd 是一个 UNIX 类操作系统上运行的服务器的名字,它可以运行在诸如 ...

- Linux自己制作rpm包

制作rpm包 由源码包---->rpm包 安装制作rpm包工具包rpm-build 在制作过程中需要源码包和配置文件 rpmbuild制作rpm包的原理: 1.首先rpmbuild会先将源码包进 ...

- oeasy教您玩转vim - 1 - # 存活下来 🥊

存活下来 更新 apt 源,升级 vim vim 是什么 vim 是类 unix 系统上的一个文本编辑神器,在 Linux 系统环境中也被许多程序员使用,书写程序和文档. 我们本次课程将围绕 Vim ...