使用kubeadm安装Kubernetes 1.15.3 并开启 ipvs

一、安装前准备

机器列表

| 主机名 | IP |

|---|---|

| node-1(master) | 1.1.1.101 |

| node-2(node) | 1.1.1.102 |

| node-3(node) | 1.1.1.103 |

设置时区

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

timedatectl set-timezone Asia/Shanghai

关闭防火墙及SELINUX

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i 's/=enforcing/=disabled/g' /etc/selinux/config

关闭 swap 分区

swapoff -a

sed -i '/swap/s/^/#/' /etc/fstab

默认情况下,Kubelet不允许所在的主机存在交换分区,后期规划的时候,可以考虑在系统安装的时候不创建交换分区,针对已经存在交换分区的可以设置忽略禁止使用Swap的限制,不然无法启动Kubelet。

[root@node-1 ~]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

加载 br_netfilter 模块,并创建/etc/sysctl.d/k8s.conf文件

lsmod | grep br_netfilter

modprobe br_netfilter

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system

ipvs准备

由于ipvs已经加入到了内核的主干,所以为kube-proxy开启ipvs的前提需要加载以下的内核模块:

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

在所有的Kubernetes节点上执行以下脚本:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

上面脚本创建了的/etc/sysconfig/modules/ipvs.modules文件,保证在节点重启后能自动加载所需模块。 使用lsmod | grep -e ip_vs -e nf_conntrack_ipv4命令查看是否已经正确加载所需的内核模块。

# 检查加载的模块

lsmod | grep -e ipvs -e nf_conntrack_ipv4

# 或者

cut -f1 -d " " /proc/modules | grep -e ip_vs -e nf_conntrack_ipv4

接下来还需要确保各个节点上已经安装了ipset软件包。 为了便于查看ipvs的代理规则,最好安装一下管理工具ipvsadm。

yum install ipset ipvsadm -y

如果以上前提条件如果不满足,则即使kube-proxy的配置开启了ipvs模式,也会退回到iptables模式。

准备 k8s的yum仓库

[root@node-1 yum.repos.d]# cat kubernetes.repo

[kubernetes]

name=Kubernetes Repo

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

enabled=1

准备docker-ce 的仓库

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

二、集群部署

安装 docker-ce 和 kubeadm

yum install docker-ce kubelet kubeadm kubectl

启动docker、kubelet并设置开机启动

注意,此时kubelet是无法正常启动的,可以查看/var/log/messages有报错信息,等待执行初始化之后即可正常,为正常现象。

systemctl enable docker && systemctl start docker

systemctl enable kubelet && systemctl start kubelet

各节点提前下载镜像

[root@node-2 opt]# cat k8s-image-download.sh

#!/bin/bash

# liyongjian5179@163.com

# download k8s 1.15.3 images

# get image-list by 'kubeadm config images list --kubernetes-version=v1.15.3'

# gcr.azk8s.cn/google-containers == k8s.gcr.io

if [ $# -ne 1 ];then

echo "please user in: ./`basename $0` KUBERNETES-VERSION"

exit 1

fi

version=$1

images=`kubeadm config images list --kubernetes-version=${version} |awk -F'/' '{print $2}'`

for imageName in ${images[@]};do

docker pull gcr.azk8s.cn/google-containers/$imageName

docker tag gcr.azk8s.cn/google-containers/$imageName k8s.gcr.io/$imageName

docker rmi gcr.azk8s.cn/google-containers/$imageName

done

[root@node-2 opt]#./k8s-image-download.sh 1.15.3

主节点执行

kubeadm init --kubernetes-version=v1.15.3 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap --ignore-preflight-errors=NumCPU --apiserver-advertise-address=1.1.1.101

- --apiserver-advertise-address:指定用 Master 的哪个IP地址与 Cluster的其他节点通信。

- --service-cidr:指定Service网络的范围,即负载均衡VIP使用的IP地址段。

- --pod-network-cidr:指定Pod网络的范围,即Pod的IP地址段。

- --image-repository:Kubenetes默认Registries地址是 k8s.gcr.io,一般在国内并不能访问 gcr.io,可以将其指定为阿里云镜像地址:registry.aliyuncs.com/google_containers。

- --kubernetes-version=v1.15.3:指定要安装的版本号。

- --ignore-preflight-errors=:忽略运行时的错误,例如执行时存在[ERROR NumCPU]和[ERROR Swap],忽略这两个报错就是增加--ignore-preflight-errors=NumCPU 和--ignore-preflight-errors=Swap的配置即可。

如果有多个网卡,最好指定一下 apiserver-advertise 地址

[root@node-1 ~]# kubeadm init --kubernetes-version=v1.15.3 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap --ignore-preflight-errors=NumCPU --apiserver-advertise-address=1.1.1.101

[init] Using Kubernetes version: v1.15.3

[preflight] Running pre-flight checks

[WARNING NumCPU]: the number of available CPUs 1 is less than the required 2

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [node-1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 1.1.1.101]

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [node-1 localhost] and IPs [1.1.1.101 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [node-1 localhost] and IPs [1.1.1.101 127.0.0.1 ::1]

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 22.503724 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.15" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node node-1 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node node-1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: z1609x.bg2tkrsrfwlrl3rb

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 1.1.1.101:6443 --token z1609x.bg2tkrsrfwlrl3rb \

--discovery-token-ca-cert-hash sha256:0753a3d2f04c6c34c5ad88d4be3bc508b1e5b9d00908b29442f7068645521703

初始化操作主要经历了下面15个步骤,每个阶段均输出均使用[步骤名称]作为开头:

- [init]:指定版本进行初始化操作

- [preflight] :初始化前的检查和下载所需要的Docker镜像文件。

- [kubelet-start] :生成kubelet的配置文件”/var/lib/kubelet/config.yaml”,没有这个文件kubelet无法启动,所以初始化之前的kubelet实际上启动失败。

- [certificates]:生成Kubernetes使用的证书,存放在/etc/kubernetes/pki目录中。

- [kubeconfig] :生成 KubeConfig 文件,存放在/etc/kubernetes目录中,组件之间通信需要使用对应文件。

- [control-plane]:使用/etc/kubernetes/manifest目录下的YAML文件,安装 Master 组件。

- [etcd]:使用/etc/kubernetes/manifest/etcd.yaml安装Etcd服务。

- [wait-control-plane]:等待control-plan部署的Master组件启动。

- [apiclient]:检查Master组件服务状态。

- [uploadconfig]:更新配置

- [kubelet]:使用configMap配置kubelet。

- [patchnode]:更新CNI信息到Node上,通过注释的方式记录。

- [mark-control-plane]:为当前节点打标签,打了角色Master,和不可调度标签,这样默认就不会使用Master节点来运行Pod。

- [bootstrap-token]:生成token记录下来,后边使用kubeadm join往集群中添加节点时会用到

- [addons]:安装附加组件CoreDNS和kube-proxy

kubectl默认会在执行的用户家目录下面的.kube目录下寻找config文件。这里是将在初始化时[kubeconfig]步骤生成的admin.conf拷贝到.kube/config。

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

安装网络插件

在此处,安装 flannel

[root@node-1 ~]# mkdir k8s

[root@node-1 ~]# cd k8s/ && wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

--2019-08-27 11:51:28-- https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.0.133, 151.101.64.133, 151.101.128.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.0.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 12487 (12K) [text/plain]

Saving to: ‘kube-flannel.yml’

100%[=========================================================================>] 12,487 --.-K/s in 0s

2019-08-27 11:51:29 (52.9 MB/s) - ‘kube-flannel.yml’ saved [12487/12487]

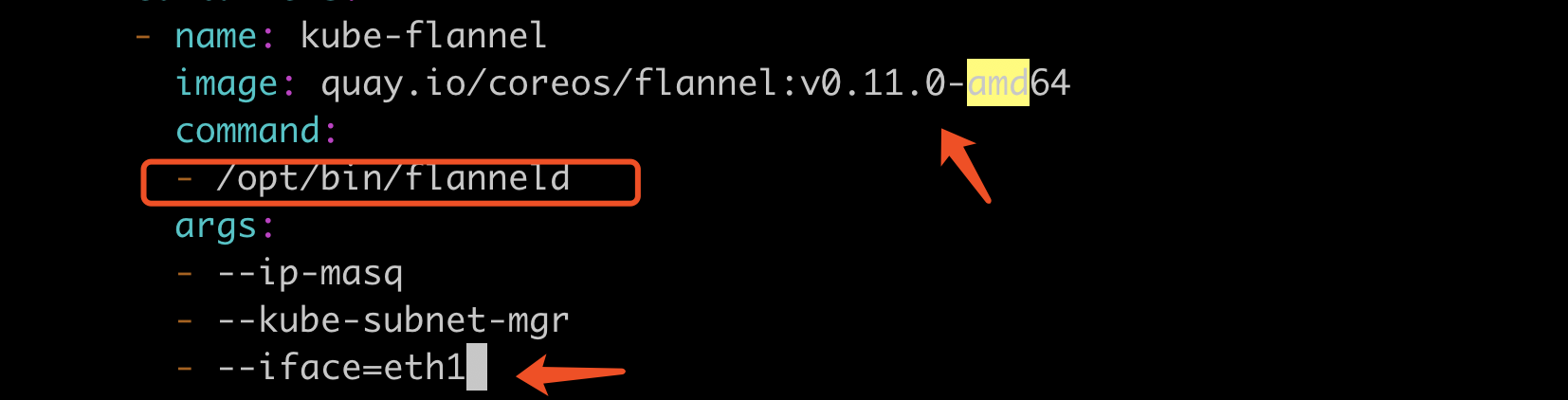

如果Node有多个网卡的话,参考flannel issues 39701,目前需要在kube-flannel.yml中使用--iface参数指定集群主机内网网卡的名称,否则可能会出现dns无法解析。需要将kube-flannel.yml下载到本地,flanneld启动参数加上--iface=<iface-name>

[root@node-1 k8s]# vim kube-flannel.yml

找到 /opt/bin/flanneld 这一行,在下面加入

“- --iface=eth1” 指定使用eth1网卡 (有很多个)

启动 flannel

[root@node-1 k8s]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

node-2 节点加入集群

[root@node-2 ~]# kubeadm join 1.1.1.101:6443 --token z1609x.bg2tkrsrfwlrl3rb \

> --discovery-token-ca-cert-hash sha256:0753a3d2f04c6c34c5ad88d4be3bc508b1e5b9d00908b29442f7068645521703

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

node-3节点加入集群

[root@node-3 opt]# kubeadm join 1.1.1.101:6443 --token z1609x.bg2tkrsrfwlrl3rb \

> --discovery-token-ca-cert-hash sha256:0753a3d2f04c6c34c5ad88d4be3bc508b1e5b9d00908b29442f7068645521703

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.15" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

查看集群健康状态

确认个组件都处于healthy状态

[root@node-1 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

集群初始化如果遇到问题,可以使用下面的命令进行清理:

kubeadm reset

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

查看集群节点状态, 已经都变成 Ready 状态了

[root@node-1 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 99m v1.15.3

node-2 Ready <none> 23m v1.15.3

node-3 Ready <none> 23m v1.15.3

查看集群 pod 状态都是Running状态

[root@node-1 k8s]# kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-5c98db65d4-2n6vq 1/1 Running 0 100m 10.244.0.2 node-1 <none> <none>

kube-system coredns-5c98db65d4-6lpr2 1/1 Running 0 100m 10.244.1.2 node-3 <none> <none>

kube-system etcd-node-1 1/1 Running 0 99m 1.1.1.101 node-1 <none> <none>

kube-system kube-apiserver-node-1 1/1 Running 0 99m 1.1.1.101 node-1 <none> <none>

kube-system kube-controller-manager-node-1 1/1 Running 0 99m 1.1.1.101 node-1 <none> <none>

kube-system kube-flannel-ds-amd64-clrjj 1/1 Running 0 2m19s 1.1.1.102 node-2 <none> <none>

kube-system kube-flannel-ds-amd64-hth2m 1/1 Running 0 2m19s 1.1.1.101 node-1 <none> <none>

kube-system kube-flannel-ds-amd64-wmqnf 1/1 Running 0 2m19s 1.1.1.103 node-3 <none> <none>

kube-system kube-proxy-8kgdr 1/1 Running 0 25m 1.1.1.102 node-2 <none> <none>

kube-system kube-proxy-g456v 1/1 Running 0 25m 1.1.1.103 node-3 <none> <none>

kube-system kube-proxy-gdtqx 1/1 Running 0 100m 1.1.1.101 node-1 <none> <none>

kube-system kube-scheduler-node-1 1/1 Running 0 99m 1.1.1.101 node-1 <none> <none>

测试 dns 解析是否正常

[root@node-1 k8s]# kubectl run -it busybox --image=radial/busyboxplus:curl

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

If you don't see a command prompt, try pressing enter.

[ root@busybox-c86c47799-qr9wq:/ ]$ nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

[ root@busybox-c86c47799-qr9wq:/ ]$ nslookup kubernetes.default

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

删除节点

在master节点上执行:kubectl drain node-3 --delete-local-data --force --ignore-daemonsets

当节点变成不可调度状态时候 SchedulingDisabled,执行 kubectl delete node node-3

[root@node-1 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 125m v1.15.3

node-2 Ready <none> 49m v1.15.3

node-3 Ready <none> 49m v1.15.3

[root@node-1 k8s]# kubectl drain node-3 --delete-local-data --force --ignore-daemonsets

node/node-3 cordoned

WARNING: ignoring DaemonSet-managed Pods: kube-system/kube-flannel-ds-amd64-wmqnf, kube-system/kube-proxy-g456v

evicting pod "coredns-5c98db65d4-6lpr2"

evicting pod "nginx-deploy-7689897d8d-kfc7v"

pod/nginx-deploy-7689897d8d-kfc7v evicted

pod/coredns-5c98db65d4-6lpr2 evicted

node/node-3 evicted

[root@node-1 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 125m v1.15.3

node-2 Ready <none> 50m v1.15.3

node-3 Ready,SchedulingDisabled <none> 50m v1.15.3

[root@node-1 k8s]# kubectl delete node node-3

node "node-3" deleted

[root@node-1 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node-1 Ready master 126m v1.15.3

node-2 Ready <none> 50m v1.15.3

在 node-3 上执行

kubeadm reset

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

systemctl stop kubelet

kube-proxy 开启 ipvs

修改ConfigMap的kube-system/kube-proxy中的config.conf,mode: “ipvs”

[root@node-1 k8s]# kubectl edit cm kube-proxy -n kube-system

...

ipvs:

excludeCIDRs: null

minSyncPeriod: 0s

scheduler: ""

strictARP: false

syncPeriod: 30s

kind: KubeProxyConfiguration

metricsBindAddress: 127.0.0.1:10249

mode: "ipvs"

nodePortAddresses: null

oomScoreAdj: -999

portRange: ""

resourceContainer: /kube-proxy

...

configmap/kube-proxy edited

对于Kubernetes来说,可以直接将这三个Pod删除之后,会自动重建。

[root@node-1 k8s]# kubectl get pods -n kube-system|grep proxy

kube-proxy-8kgdr 1/1 Running 0 79m

kube-proxy-dq8zz 1/1 Running 0 24m

kube-proxy-gdtqx 1/1 Running 0 155m

批量删除 kube-proxy

kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'

由于你已经通过ConfigMap修改了kube-proxy的配置,所以后期增加的Node节点,会直接使用ipvs模式。

查看日志

[root@node-1 k8s]# kubectl get pods -n kube-system|grep proxy

kube-proxy-84mgz 1/1 Running 0 16s

kube-proxy-r8sxj 1/1 Running 0 15s

kube-proxy-wjdmp 1/1 Running 0 12s

[root@node-1 k8s]# kubectl logs kube-proxy-84mgz -n kube-system

I0827 04:59:16.916862 1 server_others.go:170] Using ipvs Proxier.

W0827 04:59:16.917140 1 proxier.go:401] IPVS scheduler not specified, use rr by default

I0827 04:59:16.917748 1 server.go:534] Version: v1.15.3

I0827 04:59:16.927407 1 conntrack.go:52] Setting nf_conntrack_max to 131072

I0827 04:59:16.929217 1 config.go:187] Starting service config controller

I0827 04:59:16.929236 1 controller_utils.go:1029] Waiting for caches to sync for service config controller

I0827 04:59:16.929561 1 config.go:96] Starting endpoints config controller

I0827 04:59:16.929577 1 controller_utils.go:1029] Waiting for caches to sync for endpoints config controller

I0827 04:59:17.029899 1 controller_utils.go:1036] Caches are synced for endpoints config controller

I0827 04:59:17.029954 1 controller_utils.go:1036] Caches are synced for service config controller

日志中打印出了Using ipvs Proxier,说明ipvs模式已经开启。

使用ipvsadm测试,可以查看之前创建的Service已经使用LVS创建了集群。

[root@node-1 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.96.0.1:443 rr

-> 1.1.1.101:6443 Masq 1 0 0

TCP 10.96.0.10:53 rr

-> 10.244.0.2:53 Masq 1 0 0

-> 10.244.2.8:53 Masq 1 0 0

TCP 10.96.0.10:9153 rr

-> 10.244.0.2:9153 Masq 1 0 0

-> 10.244.2.8:9153 Masq 1 0 0

UDP 10.96.0.10:53 rr

-> 10.244.0.2:53 Masq 1 0 0

-> 10.244.2.8:53 Masq 1 0 0

附:通过配置文件启动主节点

在 master 节点配置 kubeadm 初始化文件,可以通过如下命令导出默认的初始化配置:

[root@node-1 ~]# kubeadm config print init-defaults > kubeadm.yaml

然后根据我们自己的需求修改配置,比如修改 imageRepository 的值,kube-proxy 的模式为 ipvs,另外需要注意的是我们这里是准备安装 flannel 网络插件的,需要将 networking.podSubnet 设置为 10.244.0.0/16:

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 1.1.1.101

bindPort: 6443

#controlPlaneEndpoint: 1.1.1.100 #如果前面配置了负载均衡,此处填写vip地址

nodeRegistration:

criSocket: /var/run/dockershim.sock

name: node-1

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

type: CoreDNS #dns 类型

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: gcr.azk8s.cn/google_containers

kind: ClusterConfiguration

kubernetesVersion: v1.15.3

networking:

dnsDomain: cluster.local

podSubnet: 10.244.0.0/16

serviceSubnet: 10.96.0.0/12

scheduler: {}

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs # kube-proxy 模式

执行

kubeadm init --config kubeadm.yaml

如果忘记了执行后的 join 命令,可以使用命令 kubeadm token create--print-join-command重新获取。

使用kubeadm安装Kubernetes 1.15.3 并开启 ipvs的更多相关文章

- 使用kubeadm 安装 kubernetes 1.15.1

简介: Kubernetes作为Google开源的容器运行平台,受到了大家的热捧.搭建一套完整的kubernetes平台,也成为试用这套平台必须迈过的坎儿.kubernetes1.5版本以及之前,安装 ...

- 在CentOS 7.6 以 kubeadm 安装 Kubernetes 1.15 最佳实践

前言 Kubernetes作为容器编排工具,简化容器管理,提升工作效率而颇受青睐.很多新手部署Kubernetes由于"scientifically上网"问题举步维艰,本文以实战经 ...

- Centos 使用kubeadm安装Kubernetes 1.15.3

本来没打算搞这个文章的,第一里面有瑕疵(没搞定的地方),第二在我的Ubuntu 18 Kubernetes集群的安装和部署 以及Helm的安装 也有安装,第三 和社区的问文章比较雷同 https:// ...

- Kubeadm安装Kubernetes 1.15.1

一.实验环境准备 服务器虚拟机准备 IP CPU 内存 hostname 192.168.198.200 >=2c >=2G master 192.168.198.201 >=2c ...

- kubeadm安装Kubernetes 1.15 实践

原地址参考github 一.环境准备(在全部设备上进行) 3 台 centos7.5 服务器,网络使用 Calico. IP地址 节点角色 CPU 内存 Hostname 10.0.1.45 mast ...

- 使用kubeadm安装kubernetes 1.15

1.主机准备篇 使用vmware Workstation 10创建一台虚拟机,配置为2C/2G/50G,操作系统为CentOS Linux release 7.6.1810 (Core). IP地址为 ...

- Centos7 使用 kubeadm 安装Kubernetes 1.13.3

目录 目录 什么是Kubeadm? 什么是容器存储接口(CSI)? 什么是CoreDNS? 1.环境准备 1.1.网络配置 1.2.更改 hostname 1.3.配置 SSH 免密码登录登录 1.4 ...

- 使用 kubeadm 安装 kubernetes v1.16.0

近日通过kubeadm 安装 kubernetes v1.16.0,踩过不少坑,现记录下安装过程. 安装环境: 系 统:CentOS Linux release 7.6 Docke ...

- kubeadm安装kubernetes V1.11.1 集群

之前测试了离线环境下使用二进制方法安装配置Kubernetes集群的方法,安装的过程中听说 kubeadm 安装配置集群更加方便,因此试着折腾了一下.安装过程中,也有一些坑,相对来说操作上要比二进制方 ...

随机推荐

- js 测试题

//身份证号码为15位或者18位,15位时全为数字,18位前17位为数字,最后一位是校验位,可能为数字或字母x function isCardNo(card) { }$)|(^\d{}(\d|X|x) ...

- Docker 部署web项目

1.查找Docker Hub上的tomcat镜像 # docker search tomcat 2.拉取官方的镜像 # docker pull tomcat 提示:Using default ...

- Python 的 Pandas 对矩阵的行进行求和

Python 的 Pandas 对矩阵的行进行求和: 若使用 df.apply(sum) 方法的话,只能对矩阵的列进行求和,要对矩阵的行求和,可以先将矩阵转置,然后应用 df.apply(sum) 即 ...

- ArcGIS Server浏览地图服务无响应原因分析说明

1.问题描述 从4月17号下午5时起,至18号晚9点,客户单位部分通过ArcGIS Server发布的地图服务(该部分地图服务的数据源为数据库SJZX)无法加载浏览,表现为长时间无响应.同时,通过Ar ...

- 利用setenv进行tomcat 内存设置

part.1 系统环境及版本 系统环境: centos 7 版本: tomcat 7.0.78 part.2 步骤流程 2.1 新建setenv.sh # cd /usr/local/tomcat/b ...

- Sitecore 内容版本设计

Sitecore内容变化的跟踪显着偏离既定规范.了解Sitecore中版本控制和工作流程的细节,该产品是对这些发布工具的回答. 在出版界,实时跟踪内容变化很常见,可能是由于Microsoft Word ...

- MarkDown添加图片的三种方式【华为云技术分享】

Markdown插图片有三种方法,各种Markdown编辑器的插图方式也都包含在这三种方法之内. 插图最基础的格式就是: