Paper Reading - Deep Captioning with Multimodal Recurrent Neural Networks ( m-RNN ) ( ICLR 2015 ) ★

Link of the Paper: https://arxiv.org/pdf/1412.6632.pdf

Main Points:

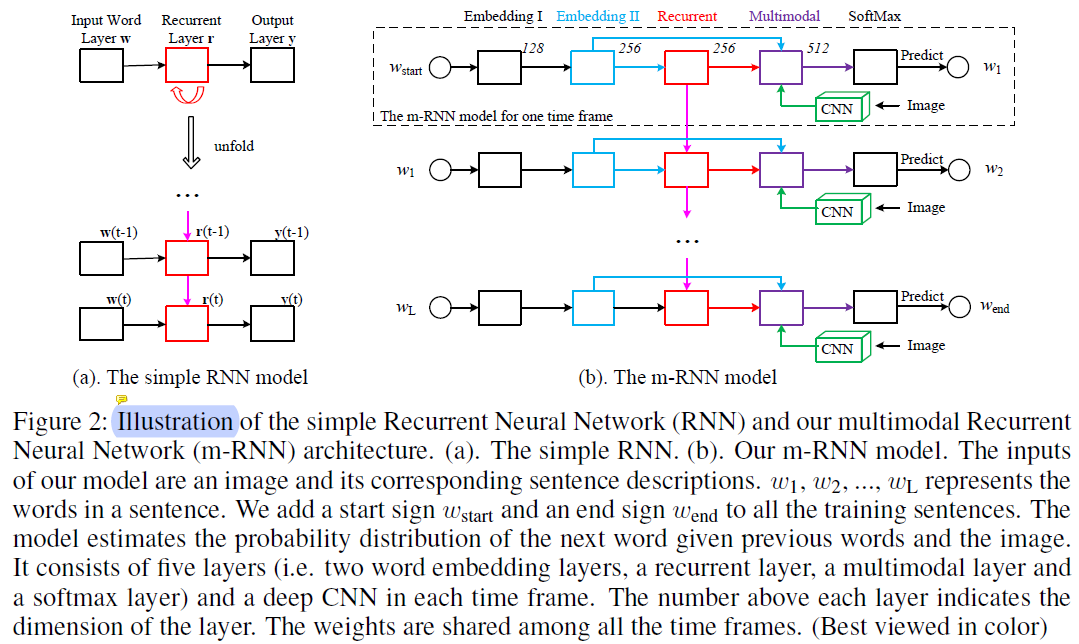

- The authors propose a multimodal Recurrent Neural Networks ( AlexNet/VGGNet + a multimodal layer + RNNs ). Their work has two major differences from these methods. Firstly, they incorporate a two-layer word embedding system in the m-RNN network structure which learns the word representation more efficiently than the single-layer word embedding. Secondly, they do not use the recurrent layer to store the visual information. The image representation is inputted to the m-RNN model along with every word in the sentence description.

- Most of the sentence-image multimodal models use pre-computed word embedding vectors as the initialization of their models. In contrast, the authors randomly initialize their word embedding layers and learn them from the training data.

- The m-RNN model is trained using a log-likelihood cost function. The errors can be backpropagated to the three parts ( the vision part, the language part, and the ) of the m-RNN model to update the model parameters simultaneously.

- The hyperparameters, such as layer dimensions and the choice of the non-linear activation functions, are tuned via cross-validation on Flickr8K dataset and are then fixed across all the experiments.

Other Key Points:

- Applications for Image Captioning: early childhood education, image retrieval, and navigation for the blind.

- There are generally three categories of methods for generating novel sentence descriptions for images. The first category assumes a specific rule of the language grammer. They parse the sentence and divide it into several parts. This kind of method generates sentences that are syntactically correct. The second category retrieves similar captioned images, and generates new descriptions by generalizing and re-composing the retrieved captions. The third category of methods, which is more related to our method, learns a probability density over the space of multimodal inputs, using for example, Deep Boltzmann Machines, and topic models. They generate sentences with richer and more flexible structure than the first group. The probability of generating sentences using the model can serve as the affinity metric for retrieval.

- Many previous methods treat the task of describing images as a retrieval task and formulate the problem as a ranking or embedding learning problem. They first extract the word and sentence features ( e.g. Socher et al.(2014) uses dependency tree Recursive Neural Network to extract sentence features ) as well as the image features. Then they optimize a ranking cost to learn an embedding model that maps both the sentence feature and the image feature to a common semantic feature space ( the same semantic space ). In this way, they can directly calculate the distance between images and sentences. These methods genarate image captions by retrieving them from a sentence database. Thus, they lack the ability of generating novel sentences or describing images that contain novel combinations of objects and scenes.

- Benchmark datasets for Image Captioning: IAPR TC-12 ( Grubinger et al.(2006) ), Flickr8K ( Rashtchian et al.(2010) ), Flickr30K ( Young et al.(2014) ) and MS COCO ( Lin et al.(2014) ).

- Evaluation Metrics for Sentence Generation: Sentence perplexity and BLUE scores.

- Tasks related to Image Captioning: Generating Novel Sentences, Retrieving Images Given a Sentence, Retrieving Sentences Given an Image.

- The m-RNN model is trained using Baidu's internal deep learning platform PADDLE.

Paper Reading - Deep Captioning with Multimodal Recurrent Neural Networks ( m-RNN ) ( ICLR 2015 ) ★的更多相关文章

- Paper Reading - Sequence to Sequence Learning with Neural Networks ( NIPS 2014 )

Link of the Paper: https://arxiv.org/pdf/1409.3215.pdf Main Points: Encoder-Decoder Model: Input seq ...

- 递归神经网络(Recurrent Neural Networks,RNN)

在深度学习领域,传统的多层感知机(MLP)具有出色的表现,取得了许多成功,它曾在许多不同的任务上——包括手写数字识别和目标分类上创造了记录.甚至到了今天,MLP在解决分类任务上始终都比其他方法要略胜一 ...

- Paper Reading - Deep Visual-Semantic Alignments for Generating Image Descriptions ( CVPR 2015 )

Link of the Paper: https://arxiv.org/abs/1412.2306 Main Points: An Alignment Model: Convolutional Ne ...

- Paper Reading - Mind’s Eye: A Recurrent Visual Representation for Image Caption Generation ( CVPR 2015 )

Link of the Paper: https://ieeexplore.ieee.org/document/7298856/ A Correlative Paper: Learning a Rec ...

- Hyperspectral Image Classification Using Similarity Measurements-Based Deep Recurrent Neural Networks

用RNN来做像素分类,输入是一系列相近的像素,长度人为指定为l,相近是利用像素相似度或是范围相似度得到的,计算个欧氏距离或是SAM. 数据是两个高光谱数据 1.Pavia University,Ref ...

- Attention and Augmented Recurrent Neural Networks

Attention and Augmented Recurrent Neural Networks CHRIS OLAHGoogle Brain SHAN CARTERGoogle Brain Sep ...

- The Unreasonable Effectiveness of Recurrent Neural Networks (RNN)

http://karpathy.github.io/2015/05/21/rnn-effectiveness/ There’s something magical about Recurrent Ne ...

- 课程五(Sequence Models),第一 周(Recurrent Neural Networks) —— 1.Programming assignments:Building a recurrent neural network - step by step

Building your Recurrent Neural Network - Step by Step Welcome to Course 5's first assignment! In thi ...

- (zhuan) Attention in Long Short-Term Memory Recurrent Neural Networks

Attention in Long Short-Term Memory Recurrent Neural Networks by Jason Brownlee on June 30, 2017 in ...

随机推荐

- springmvc整合mybatis框架源码 bootstrap html5 mysql oracle maven SSM

A 调用摄像头拍照,自定义裁剪编辑头像 [新录针对本系统的视频教程,手把手教开发一个模块,快速掌握本系统]B 集成代码生成器 [正反双向](单表.主表.明细表.树形表,开发利器)+快速构建表单; 技 ...

- JDK的跳表源码分析

JDK源码中的跳表实现类: ConcurrentSkipListMap和ConcurrentSkipListSet. 其中ConcurrentSkipListSet的实现是基于ConcurrentSk ...

- Android系统架构(一)

一.Android系统版本简介 Android操作系统已占据了手机操作系统的大半壁江山,截至本文写作时,Android操作系统系统版本及其详细信息,已发生了变化,具体信息见下表,当然也可以访问http ...

- 如何使用tomcat,使用域名直接访问javaweb项目首页

准备工作: 1:一台虚拟机 2:配置好jdk,将tomcat上传到服务器并解压 3:将项目上传到tomcat的webaap目录下 4:配置tomcat的conf目录下的server.xml文件 确保8 ...

- Oracle 执行计划的查看方式

访问数据的方法:一.访问表的方法:1.全表扫描,2.ROWID扫描 二.访问索引的方法:1.索引唯一性扫描,2.索引范围扫描,3.索引全扫 ...

- volatile、static

谈到 volatile.static 就必须说多线程. 1.一个线程在开始执行的时候,会开启一片自己的工作内存(自己线程私有),同时将主内存中的数据复制到自己 的工作内存,从此读写数据都是自己的工作内 ...

- MySQL学习【第十二篇事务中的锁与隔离级别】

一.事务中的锁 1.啥是锁? 顾名思义,锁就是锁定的意思 2.锁的作用是什么? 在事务ACID的过程中,‘锁’和‘隔离级别’一起来实现‘I’隔离性的作用 3.锁的种类 共享锁:保证在多事务工作期间,数 ...

- 将变量做为一个对象的key,push新增进一个数组

var orgnIdListValue=["0","2"]; function arrayField(a,b){ let arrayMes=[]; for(va ...

- 树莓派3B+学习笔记:13、不间断会话服务screen

screen是一款能够实现多窗口远程控制的开源服务程序,简单来说就是为了解决网络异常中断或为了同时控制多个远程终端窗口而设计的程序.用户还可以使用screen服务程序同时在多个远程会话中自由切换,能够 ...

- CVE-2018-8174 EXP 0day python

usage: CVE-2018-8174.py [-h] -u URL -o OUTPUT [-i IP] [-p PORT] Exploit for CVE-2018-8174 optional a ...