Docker Container Networking

Author: Yin Zhengjie (尹正杰)

Copyright notice: this is an original work and may not be reproduced; violations will be pursued legally.

I. Overview of Docker's network models

As shown in the figure above, Docker has four network models:

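Closed network (Closed container):

A closed container has only a loopback interface (Loopback interface, similar to the lo interface you see on a server) and cannot communicate with the outside world.

Bridged network (Bridge container A):

Besides its loopback interface, a bridged container has a private interface (Private interface). That interface connects through a container virtual interface (Container virtual interface) to the Docker bridge (Docker bridge virtual interface). The bridge in turn reaches the host's physical network (Physical network interface) through the logical host interface (Logical host interface).

By default, bridged interfaces are assigned addresses from the 172.17.0.0/16 range.

If no network model is specified when a container is created, the (NAT) bridged network is used by default. That is why a container you log in to usually has an IP address in the 172.17.0.0/16 range.

Joined network (Joined container A | Joined container B):

Each joined container keeps some namespaces of its own (Mount, PID, User) and shares the others (UTS, Net, IPC). Because the network namespace is shared, the containers can talk to each other over the loopback interface. Besides that shared loopback interface, they also share one private interface (Private interface). It connects through the joined container virtual interface (Joined container virtual interface) to the Docker bridge (Docker bridge virtual interface), and from there through the logical host interface (Logical host interface) to the host's physical network (Physical network interface).

Open network (Open container):

An open container goes one step further than the joined model. Joined containers share a network (Net) namespace with one another, whereas an open container shares the host's network namespace directly. It therefore sees every network interface the host has. The open container can be thought of as a variant of the joined container.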
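The four models correspond to values of `docker run --network`:

- `none`: the closed model.
- `bridge`: the bridged model, which is the default.
- `container:<name|id>`: the joined model.
- `host`: the open model.

The sketch below starts a throwaway container under each model so you can compare the interfaces each one sees. It assumes a working Docker daemon and the `busybox:latest` image used later in this article. The helper container name `base` and the `DRY_RUN` switch are hypothetical conveniences, not part of Docker.

```shell
#!/bin/sh
# Sketch: show the interfaces a container sees under each of Docker's four
# network models. Set DRY_RUN=1 to print the commands instead of running them.

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

demo_network_models() {
    image=${1:-busybox:latest}

    # Closed container: only the loopback interface exists.
    run docker run --rm --network none "$image" ip addr

    # Bridged container (the default): lo plus an eth0 attached to docker0.
    run docker run --rm --network bridge "$image" ip addr

    # Joined container: reuse the Net namespace of a running container.
    # "base" is a hypothetical helper container started just for this demo.
    run docker run -d --name base --network bridge "$image" sleep 300
    run docker run --rm --network container:base "$image" ip addr
    run docker rm -f base

    # Open container: shares the host's network namespace, so every host
    # interface is listed.
    run docker run --rm --network host "$image" ip addr
}
```

To review the commands first, run `DRY_RUN=1 demo_network_models`. In the joined case, the second container's `ip addr` should list the same eth0 address as `base`.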

II. Overview of container network virtualization

1>. Listing the network models supported by Docker

[root@node102.yinzhengjie.org.cn ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

33d001d8b94d bridge bridge local #If no network model is specified when a container is created, this bridge network is used by default.

9f539144f682 host host local

e10670abb710 none null local

[root@node102.yinzhengjie.org.cn ~]#

[root@node102.yinzhengjie.org.cn ~]#
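The DRIVER column ties each network back to the models above: `bridge` uses the bridge driver, `host` the host driver, and `none` the `null` driver (the closed model). The illustrative helper below cuts plain `docker network ls` output down to name/driver pairs. It assumes the default column layout. Running `docker network ls --format '{{.Name}} {{.Driver}}'` gives similar output directly.

```shell
#!/bin/sh
# Sketch: reduce "docker network ls" output to "NAME -> DRIVER" pairs.
# Assumes the default columns: NETWORK ID, NAME, DRIVER, SCOPE.

network_drivers() {
    # Skip the header; in data rows $1 is the ID, $2 the name, $3 the driver.
    awk 'NR > 1 { print $2 " -> " $3 }'
}

# Usage: docker network ls | network_drivers
```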

2>. Viewing the bridge network's metadata

[root@node102.yinzhengjie.org.cn ~]# docker network inspect bridge

[

{

"Name": "bridge",

"Id": "33d001d8b94d4080411e06c711a1b6d322115aebbe1253ecef58a9a70e05bdd7",

"Created": "2019-10-18T17:27:49.282236251+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16", #The subnet of the default bridge network; being a default, it can be changed.

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0", #Note: the default bridge's interface is named "docker0"

"com.docker.network.driver.mtu": ""

},

"Labels": {}

}

]

[root@node102.yinzhengjie.org.cn ~]#
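The comment on `Subnet` notes that the default range can be changed. One way to do that uses the daemon's `bip` option, which sets docker0's own address and prefix length. This assumes the daemon reads `/etc/docker/daemon.json`, the default location on Linux. Keys already in that file must be merged by hand, and the daemon must be restarted afterwards. The sketch below reads the current subnet with a Go template and prints such a fragment. The value `10.10.0.1/16` is only an example.

```shell
#!/bin/sh
# Sketch: read the default bridge's subnet and print a daemon.json fragment
# that would move docker0 to another range.

# Print the subnet of the "bridge" network, e.g. 172.17.0.0/16.
bridge_subnet() {
    docker network inspect --format '{{range .IPAM.Config}}{{.Subnet}}{{end}}' bridge
}

# Print a daemon.json fragment; $1 is docker0's address with prefix length.
bip_config() {
    printf '{\n  "bip": "%s"\n}\n' "$1"
}

# Usage (example value):
#   bip_config 10.10.0.1/16    # merge into /etc/docker/daemon.json, then
#   systemctl restart docker   # restart the daemon to apply it
```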

III. Simulating container-to-container communication with network namespaces (netns) and the ip command

1>. Viewing the help information

[root@node101.yinzhengjie.org.cn ~]# rpm -q iproute

iproute-4.11.-.el7.x86_64

[root@node101.yinzhengjie.org.cn ~]#

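[root@node101.yinzhengjie.org.cn ~]# ip netns help #Note: a namespace managed with the ip command isolates only the network; the UTS, IPC, PID and user namespaces stay shared with the host. This differs from a container, where all six namespaces are isolated.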

Usage: ip netns list

ip netns add NAME

ip netns set NAME NETNSID

ip [-all] netns delete [NAME]

ip netns identify [PID]

ip netns pids NAME

ip [-all] netns exec [NAME] cmd ...

ip netns monitor

ip netns list-id

[root@node101.yinzhengjie.org.cn ~]#
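The comment above says `ip netns` isolates only the network. The sketch below checks this by comparing the entries under `/proc/self/ns` outside and inside a namespace. It assumes root and iproute2. Only `net` should differ, while `uts`, `ipc` and `pid` should match. The mount namespace is left out on purpose: `ip netns exec` runs its command in a fresh mount namespace of its own so it can remount `/sys`. The namespace name `demo` and the `DRY_RUN` switch are hypothetical.

```shell
#!/bin/sh
# Sketch: show that "ip netns exec" switches the network namespace only.
# Needs root and iproute2; set DRY_RUN=1 to just print the commands.

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

compare_namespaces() {
    ns=${1:-demo}    # hypothetical, temporary namespace name
    run ip netns add "$ns"
    for kind in net uts ipc pid; do
        # The same link target on both lines means the namespace is shared.
        run readlink "/proc/self/ns/$kind"
        run ip netns exec "$ns" readlink "/proc/self/ns/$kind"
    done
    run ip netns delete "$ns"
}
```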

2>. Adding two network namespaces

[root@node103.yinzhengjie.org.cn ~]# ip netns add r1 #Add a network namespace named r1

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns add r2

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns list #List the existing network namespaces

r2

r1

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r1 ifconfig -a #The new r1 namespace has no network interface apart from the loopback device; no other network device is attached yet.

lo: flags=<LOOPBACK> mtu

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r2 ifconfig -a #Same as for r1.

lo: flags=<LOOPBACK> mtu

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]#
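In the output above, the loopback interface of each new namespace lacks the UP flag, so it is down. The sketch below creates namespaces and brings their loopback up. It assumes root and iproute2. The names passed in (`r1 r2` in the usage line) simply mirror this article.

```shell
#!/bin/sh
# Sketch: create network namespaces and activate their loopback interfaces.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

setup_namespaces() {
    for ns in "$@"; do
        run ip netns add "$ns"                       # new, empty network namespace
        run ip netns exec "$ns" ip link set lo up    # lo starts out DOWN
        run ip netns exec "$ns" ip addr show lo      # should now report UP
    done
}

# Usage: setup_namespaces r1 r2
```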

3>. Creating a virtual NIC (veth) pair

[root@node103.yinzhengjie.org.cn ~]# ip link help #View this command's help

Usage: ip link add [link DEV] [ name ] NAME

[ txqueuelen PACKETS ]

[ address LLADDR ]

[ broadcast LLADDR ]

[ mtu MTU ] [index IDX ]

[ numtxqueues QUEUE_COUNT ]

[ numrxqueues QUEUE_COUNT ]

type TYPE [ ARGS ]

ip link delete { DEVICE | dev DEVICE | group DEVGROUP } type TYPE [ ARGS ]

ip link set { DEVICE | dev DEVICE | group DEVGROUP }

[ { up | down } ]

[ type TYPE ARGS ]

[ arp { on | off } ]

[ dynamic { on | off } ]

[ multicast { on | off } ]

[ allmulticast { on | off } ]

[ promisc { on | off } ]

[ trailers { on | off } ]

[ carrier { on | off } ]

[ txqueuelen PACKETS ]

[ name NEWNAME ]

[ address LLADDR ]

[ broadcast LLADDR ]

[ mtu MTU ]

[ netns { PID | NAME } ]

[ link-netnsid ID ]

[ alias NAME ]

[ vf NUM [ mac LLADDR ]

[ vlan VLANID [ qos VLAN-QOS ] [ proto VLAN-PROTO ] ]

[ rate TXRATE ]

[ max_tx_rate TXRATE ]

[ min_tx_rate TXRATE ]

[ spoofchk { on | off} ]

[ query_rss { on | off} ]

[ state { auto | enable | disable} ] ]

[ trust { on | off} ] ]

[ node_guid { eui64 } ]

[ port_guid { eui64 } ]

[ xdp { off |

object FILE [ section NAME ] [ verbose ] |

pinned FILE } ]

[ master DEVICE ][ vrf NAME ]

[ nomaster ]

[ addrgenmode { eui64 | none | stable_secret | random } ]

[ protodown { on | off } ]

ip link show [ DEVICE | group GROUP ] [up] [master DEV] [vrf NAME] [type TYPE]

ip link xstats type TYPE [ ARGS ]

ip link afstats [ dev DEVICE ]

ip link help [ TYPE ]

TYPE := { vlan | veth | vcan | dummy | ifb | macvlan | macvtap |

bridge | bond | team | ipoib | ip6tnl | ipip | sit | vxlan |

gre | gretap | ip6gre | ip6gretap | vti | nlmon | team_slave |

bond_slave | ipvlan | geneve | bridge_slave | vrf | macsec }

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip link add name veth1.1 type veth peer name veth1.2 #Create a pair of virtual Ethernet (veth) interfaces whose two ends are named veth1.1 and veth1.2

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip link show #"veth1.1@veth1.2" means veth1.1 and veth1.2 form a pair; both ends are still in the host namespace and in the DOWN state.

1: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN mode DEFAULT group default qlen

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::ef:: brd ff:ff:ff:ff:ff:ff

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::3a:da:a7 brd ff:ff:ff:ff:ff:ff

4: veth1.2@veth1.1: <BROADCAST,MULTICAST,M-DOWN> mtu qdisc noop state DOWN mode DEFAULT group default qlen

link/ether :1e:::: brd ff:ff:ff:ff:ff:ff

5: veth1.1@veth1.2: <BROADCAST,MULTICAST,M-DOWN> mtu qdisc noop state DOWN mode DEFAULT group default qlen

link/ether ::e0:::cd brd ff:ff:ff:ff:ff:ff

[root@node103.yinzhengjie.org.cn ~]#

4>. Moving a virtual NIC into a namespace and establishing connectivity

[root@node103.yinzhengjie.org.cn ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN mode DEFAULT group default qlen

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::ef:: brd ff:ff:ff:ff:ff:ff

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::3a:da:a7 brd ff:ff:ff:ff:ff:ff

4: veth1.2@veth1.1: <BROADCAST,MULTICAST,M-DOWN> mtu qdisc noop state DOWN mode DEFAULT group default qlen

link/ether :1e:::: brd ff:ff:ff:ff:ff:ff

5: veth1.1@veth1.2: <BROADCAST,MULTICAST,M-DOWN> mtu qdisc noop state DOWN mode DEFAULT group default qlen

link/ether ::e0:::cd brd ff:ff:ff:ff:ff:ff

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip link set dev veth1.2 netns r2 #Move the veth1.2 virtual NIC into the r2 namespace.

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip link show #veth1.2 has disappeared from the host, because an interface can belong to only one namespace at a time. Note that veth1.1 is now shown as "veth1.1@if4" instead of "veth1.1@veth1.2".

1: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN mode DEFAULT group default qlen

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::ef:: brd ff:ff:ff:ff:ff:ff

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::3a:da:a7 brd ff:ff:ff:ff:ff:ff

5: veth1.1@if4: <BROADCAST,MULTICAST> mtu qdisc noop state DOWN mode DEFAULT group default qlen

link/ether ::e0:::cd brd ff:ff:ff:ff:ff:ff link-netnsid

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r2 ifconfig -a #veth1.2 was moved from the host into r2, so look inside r2: it is indeed there.

lo: flags=<LOOPBACK> mtu

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

veth1.2: flags=<BROADCAST,MULTICAST> mtu #Sure enough, the virtual NIC is here.

ether :1e:::: txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r2 ip link set dev veth1.2 name eth0 #Also rename veth1.2 to eth0 to follow the usual naming convention.

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r2 ifconfig -a #veth1.2 is now eth0. The interface is not activated by default, so the -a option is needed to list it.

eth0: flags=<BROADCAST,MULTICAST> mtu

ether :1e:::: txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

lo: flags=<LOOPBACK> mtu

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN mode DEFAULT group default qlen

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::ef:: brd ff:ff:ff:ff:ff:ff

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::3a:da:a7 brd ff:ff:ff:ff:ff:ff

5: veth1.1@if4: <BROADCAST,MULTICAST> mtu qdisc noop state DOWN mode DEFAULT group default qlen

link/ether ::e0:::cd brd ff:ff:ff:ff:ff:ff link-netnsid

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ifconfig veth1.1 10.1.0.1/24 up #Give the host-side veth1.1 a temporary IP address and bring it up

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ifconfig #ifconfig now shows veth1.1's configuration

enp0s3: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.0.2.15 netmask 255.255.255.0 broadcast 10.0.2.255

ether :::ef:: txqueuelen (Ethernet)

RX packets bytes (3.1 KiB)

RX errors dropped overruns frame

TX packets bytes (7.9 KiB)

TX errors dropped overruns carrier collisions

enp0s8: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 172.30.1.103 netmask 255.255.255.0 broadcast 172.30.1.255

ether :::3a:da:a7 txqueuelen (Ethernet)

RX packets bytes (293.6 KiB)

RX errors dropped overruns frame

TX packets bytes (1.1 MiB)

TX errors dropped overruns carrier collisions

lo: flags=<UP,LOOPBACK,RUNNING> mtu

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

veth1.1: flags=<UP,BROADCAST,MULTICAST> mtu

inet 10.1.0.1 netmask 255.255.255.0 broadcast 10.1.0.255

ether ::e0:::cd txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r2 ifconfig -a

eth0: flags=<BROADCAST,MULTICAST> mtu

ether :1e:::: txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

lo: flags=<LOOPBACK> mtu

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r2 ifconfig eth0 10.1.0.102/24 up #Give eth0 in the r2 namespace a temporary IP address and bring it up

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r2 ifconfig

eth0: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.1.0.102 netmask 255.255.255.0 broadcast 10.1.0.255

inet6 fe80::341e:54ff:fe37: prefixlen scopeid 0x20<link>

ether :1e:::: txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (516.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ifconfig #Active interfaces on the host

enp0s3: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.0.2.15 netmask 255.255.255.0 broadcast 10.0.2.255

ether :::ef:: txqueuelen (Ethernet)

RX packets bytes (3.1 KiB)

RX errors dropped overruns frame

TX packets bytes (7.9 KiB)

TX errors dropped overruns carrier collisions

enp0s8: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 172.30.1.103 netmask 255.255.255.0 broadcast 172.30.1.255

ether :::3a:da:a7 txqueuelen (Ethernet)

RX packets bytes (323.7 KiB)

RX errors dropped overruns frame

TX packets bytes (1.1 MiB)

TX errors dropped overruns carrier collisions

lo: flags=<UP,LOOPBACK,RUNNING> mtu

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

veth1.1: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.1.0.1 netmask 255.255.255.0 broadcast 10.1.0.255

ether ::e0:::cd txqueuelen (Ethernet)

RX packets bytes (1.4 KiB)

RX errors dropped overruns frame

TX packets bytes (868.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r2 ifconfig #Active interfaces in the r2 namespace

eth0: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.1.0.102 netmask 255.255.255.0 broadcast 10.1.0.255

inet6 fe80::341e:54ff:fe37: prefixlen scopeid 0x20<link>

ether :1e:::: txqueuelen (Ethernet)

RX packets bytes (868.0 B)

RX errors dropped overruns frame

TX packets bytes (1.4 KiB)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ping 10.1.0.102 #The host can now communicate with eth0 in the r2 namespace.

PING 10.1.0.102 (10.1.0.102) () bytes of data.

bytes from 10.1.0.102: icmp_seq= ttl= time=0.037 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.018 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.022 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.020 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.019 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.040 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.022 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.047 ms

^C

--- 10.1.0.102 ping statistics ---

packets transmitted, received, % packet loss, time 7024ms

rtt min/avg/max/mdev = 0.018/0.028/0.047/0.010 ms

[root@node103.yinzhengjie.org.cn ~]#
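`ifconfig` comes from the older net-tools package, and the same steps can be written with `ip` alone. The sketch below covers step 4: move one end of the pair into r2, rename it, address both ends, and ping. It assumes root, and that the veth1.1/veth1.2 pair and namespace r2 already exist as created above.

```shell
#!/bin/sh
# Sketch: iproute2-only version of the "host <-> r2" steps above.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

connect_host_to_r2() {
    run ip link set dev veth1.2 netns r2                    # move one end into r2
    run ip netns exec r2 ip link set dev veth1.2 name eth0  # rename while still down
    run ip netns exec r2 ip addr add 10.1.0.102/24 dev eth0
    run ip netns exec r2 ip link set dev eth0 up
    run ip addr add 10.1.0.1/24 dev veth1.1                 # host-side end
    run ip link set dev veth1.1 up
    run ping -c 3 10.1.0.102                                # host -> r2
}
```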

5>. Connecting the two network namespaces

[root@node103.yinzhengjie.org.cn ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN mode DEFAULT group default qlen

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::ef:: brd ff:ff:ff:ff:ff:ff

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::3a:da:a7 brd ff:ff:ff:ff:ff:ff

5: veth1.1@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc noqueue state UP mode DEFAULT group default qlen

link/ether ::e0:::cd brd ff:ff:ff:ff:ff:ff link-netnsid

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip link set dev veth1.1 netns r1 #Move the veth1.1 virtual NIC into the r1 network namespace

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu qdisc noqueue state UNKNOWN mode DEFAULT group default qlen

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::ef:: brd ff:ff:ff:ff:ff:ff

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu qdisc pfifo_fast state UP mode DEFAULT group default qlen

link/ether :::3a:da:a7 brd ff:ff:ff:ff:ff:ff

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r1 ifconfig -a

lo: flags=<LOOPBACK> mtu

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

veth1.1: flags=<BROADCAST,MULTICAST> mtu

ether ::e0:::cd txqueuelen (Ethernet)

RX packets bytes (1.4 KiB)

RX errors dropped overruns frame

TX packets bytes (868.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r1 ifconfig -a

lo: flags=<LOOPBACK> mtu

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

veth1.1: flags=<BROADCAST,MULTICAST> mtu

ether ::e0:::cd txqueuelen (Ethernet)

RX packets bytes (1.4 KiB)

RX errors dropped overruns frame

TX packets bytes (868.0 B)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r1 ifconfig veth1.1 10.1.0.101/24 up #Give veth1.1 in the r1 namespace an IP address and bring it up

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r1 ifconfig

veth1.1: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.1.0.101 netmask 255.255.255.0 broadcast 10.1.0.255

inet6 fe80:::e0ff:fe83:48cd prefixlen scopeid 0x20<link>

ether ::e0:::cd txqueuelen (Ethernet)

RX packets bytes (1.4 KiB)

RX errors dropped overruns frame

TX packets bytes (1.3 KiB)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r1 ifconfig

veth1.1: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.1.0.101 netmask 255.255.255.0 broadcast 10.1.0.255

inet6 fe80:::e0ff:fe83:48cd prefixlen scopeid 0x20<link>

ether ::e0:::cd txqueuelen (Ethernet)

RX packets bytes (2.1 KiB)

RX errors dropped overruns frame

TX packets bytes (2.1 KiB)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r2 ifconfig

eth0: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.1.0.102 netmask 255.255.255.0 broadcast 10.1.0.255

inet6 fe80::341e:54ff:fe37: prefixlen scopeid 0x20<link>

ether :1e:::: txqueuelen (Ethernet)

RX packets bytes (2.1 KiB)

RX errors dropped overruns frame

TX packets bytes (2.1 KiB)

TX errors dropped overruns carrier collisions

[root@node103.yinzhengjie.org.cn ~]#

[root@node103.yinzhengjie.org.cn ~]# ip netns exec r1 ping 10.1.0.102 #Ping r2's virtual NIC address from the r1 namespace: the two namespaces can communicate!

PING 10.1.0.102 (10.1.0.102) () bytes of data.

bytes from 10.1.0.102: icmp_seq= ttl= time=0.017 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.019 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.041 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.019 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.048 ms

bytes from 10.1.0.102: icmp_seq= ttl= time=0.020 ms

^C

--- 10.1.0.102 ping statistics ---

packets transmitted, received, % packet loss, time 4999ms

rtt min/avg/max/mdev = 0.017/0.027/0.048/0.013 ms

[root@node103.yinzhengjie.org.cn ~]#
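The sketch below writes step 5 in the same iproute2 style and adds a cleanup step. It assumes the state reached in step 4: veth1.1 on the host and eth0 in r2. Moving an interface into another namespace drops its addresses and leaves it down, which is why the output above shows veth1.1 in r1 without an inet address. Deleting a namespace destroys the veth end inside it once no process still uses the namespace. Because removing one end of a veth pair removes the other, cleanup needs no separate `ip link delete`.

```shell
#!/bin/sh
# Sketch: iproute2-only version of "r1 <-> r2" plus cleanup.
# Set DRY_RUN=1 to print the commands instead of running them.

run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

connect_r1_to_r2() {
    # The move drops veth1.1's address and state, so it is re-addressed in r1.
    run ip link set dev veth1.1 netns r1
    run ip netns exec r1 ip addr add 10.1.0.101/24 dev veth1.1
    run ip netns exec r1 ip link set dev veth1.1 up
    run ip netns exec r1 ping -c 3 10.1.0.102               # r1 -> r2
}

cleanup_namespaces() {
    # Removing the namespaces also destroys the veth ends inside them.
    run ip netns delete r1
    run ip netns delete r2
}
```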

IV. Examples of configuring the hostname and host-name resolution

1>. Specifying the network model when starting a container

[root@node101.yinzhengjie.org.cn ~]# docker run --name test1 -it --network bridge --rm busybox:latest #Start a container named test1 on the bridge network; "-it" opens an interactive terminal and "--rm" removes the container automatically once it exits.

/ #

/ # hostname #The hostname may look like a random string, but by default it is the container ID (CONTAINER ID). You can confirm this by running "docker ps" in another terminal.

9441fef2264c

/ #

/ # exit

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name test1 -it --network bridge -h node102.yinzhengjie.org.cn --rm busybox:latest #"-h" sets the container's hostname at startup; it does not change the CONTAINER ID shown by "docker ps".

/ #

/ # hostname

node102.yinzhengjie.org.cn

/ #
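The default hostname is the short, 12-character form of the container ID. The sketch below checks this without an interactive shell, assuming `busybox:latest` is available. `short_id` and `check_default_hostname` are hypothetical helper names. The 12-character truncation matches the IDs that `docker ps` and `docker network ls` print in this article.

```shell
#!/bin/sh
# Sketch: compare a container's default hostname with its short ID.

# First 12 characters of a full 64-hex-digit Docker ID.
short_id() {
    printf '%.12s\n' "$1"
}

check_default_hostname() {
    cid=$(docker run -d --rm busybox:latest sleep 60)   # full container ID
    name=$(docker exec "$cid" hostname)
    docker rm -f "$cid" >/dev/null
    if [ "$name" = "$(short_id "$cid")" ]; then
        echo "hostname matches short ID: $name"
    else
        echo "hostname differs: $name"
    fi
}

# With -h the hostname is whatever you pass, e.g.:
#   docker run --rm -h node102.yinzhengjie.org.cn busybox:latest hostname
```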

2>. Specifying the DNS server when starting a container

[root@node101.yinzhengjie.org.cn ~]# docker run --name test1 -it --network bridge -h node102.yinzhengjie.org.cn --rm busybox:latest

/ #

/ # hostname

node102.yinzhengjie.org.cn

/ #

/ # cat /etc/resolv.conf

# Generated by NetworkManager

search yinzhengjie.org.cn

nameserver 172.30.1.254

/ #

/ # exit

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name test1 -it --network bridge -h node102.yinzhengjie.org.cn --dns 114.114.114.114 --rm busybox:latest

/ #

/ # cat /etc/resolv.conf

search yinzhengjie.org.cn

nameserver 114.114.114.114

/ #
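`--dns` replaces the `nameserver` lines that would otherwise come from the host's `/etc/resolv.conf`. As the output shows, the `search` line is still inherited. The sketch below prints the nameservers a container ends up with, assuming busybox. `nameservers` and `container_nameservers` are hypothetical helper names.

```shell
#!/bin/sh
# Sketch: list the nameserver entries in a container's resolv.conf.

# Read resolv.conf text on stdin; print one nameserver address per line.
nameservers() {
    awk '$1 == "nameserver" { print $2 }'
}

container_nameservers() {
    # Extra arguments (e.g. --dns 114.114.114.114) are passed to docker run.
    docker run --rm "$@" busybox:latest cat /etc/resolv.conf | nameservers
}

# Usage: container_nameservers --dns 114.114.114.114
```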

3>. Customizing the DNS search domain when starting a container

[root@node101.yinzhengjie.org.cn ~]# docker run --name test1 -it --network bridge -h node102.yinzhengjie.org.cn --dns 114.114.114.114 --rm busybox:latest

/ #

/ # cat /etc/resolv.conf

search yinzhengjie.org.cn

nameserver 114.114.114.114

/ #

/ #

/ # exit

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name test1 -it --network bridge -h node102.yinzhengjie.org.cn --dns 114.114.114.114 --dns-search ilinux.io --rm busybox:latest

/ #

/ # cat /etc/resolv.conf

search ilinux.io

nameserver 114.114.114.114

/ #
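`--dns-search` replaces the inherited `search` line, and the option can be repeated to list several domains. The sketch below extracts the search list from resolv.conf text. `search_domains` is a hypothetical helper, and `example.com` is only a placeholder second domain.

```shell
#!/bin/sh
# Sketch: print the search domains from resolv.conf text, one per line.
search_domains() {
    awk '$1 == "search" { for (i = 2; i <= NF; i++) print $i }'
}

# Usage (example.com is a placeholder):
#   docker run --rm --dns-search ilinux.io --dns-search example.com \
#       busybox:latest cat /etc/resolv.conf | search_domains
```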

4>. Customizing the host resolution list (/etc/hosts) when starting a container

[root@node101.yinzhengjie.org.cn ~]# docker run --name test1 -it --network bridge -h node102.yinzhengjie.org.cn --dns 114.114.114.114 --dns-search ilinux.io --rm busybox:latest

/ #

/ # cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 node102.yinzhengjie.org.cn node102

/ #

/ # exit

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name test1 -it --network bridge -h node102.yinzhengjie.org.cn --dns 114.114.114.114 --dns-search ilinux.io --add-host node101.yinzhengjie.org.cn:172.30.1.101 --rm busybox:latest

/ #

/ # cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.30.1.101 node101.yinzhengjie.org.cn

172.17.0.2 node102.yinzhengjie.org.cn node102

/ #

/ #
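Each `--add-host name:ip` option adds one line to the container's `/etc/hosts`, and the option may be repeated. The sketch below builds the options from a list of `name:ip` pairs and looks a name up in hosts-file text. Both helpers are hypothetical.

```shell
#!/bin/sh
# Sketch: build repeated --add-host options and resolve a name from hosts text.

# Print one "--add-host=name:ip" option per argument.
add_host_opts() {
    for entry in "$@"; do
        printf '%s\n' "--add-host=$entry"
    done
}

# Read hosts-file text on stdin and print the first address for name $1.
hosts_lookup() {
    awk -v name="$1" '/^#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }'
}

# Usage (the unquoted substitution is split into one option per line):
#   docker run --rm $(add_host_opts node101.yinzhengjie.org.cn:172.30.1.101) \
#       busybox:latest cat /etc/hosts
```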

V. Opening inbound communication

1>. Usage formats of the “-p” option

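-p <containerPort>

Maps the specified container port to a dynamic port on all of the host's addresses.

-p <hostPort>:<containerPort>

Maps container port <containerPort> to the specified host port <hostPort>.

-p <ip>::<containerPort>

Maps container port <containerPort> to a dynamic port on the specified host address <ip>.

-p <ip>:<hostPort>:<containerPort>

Maps container port <containerPort> to port <hostPort> on the specified host address <ip>.

Note: a "dynamic port" is a randomly assigned port; the actual mapping can be checked with the "docker port" command. The uppercase -P option, by contrast, takes no argument and publishes every port the image EXPOSEs to a dynamic host port.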
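The four forms differ only in whether `<ip>` and `<hostPort>` are given. The hypothetical helper below picks the matching form, with an empty argument meaning "not specified". The host port `8080` in the tests is only an example value.

```shell
#!/bin/sh
# Sketch: build the value for "-p" from an optional host IP, an optional
# host port and a required container port.
publish_arg() {
    ip=$1 host_port=$2 container_port=$3
    if [ -z "$ip" ] && [ -z "$host_port" ]; then
        echo "$container_port"                  # -p <containerPort>
    elif [ -z "$ip" ]; then
        echo "$host_port:$container_port"       # -p <hostPort>:<containerPort>
    elif [ -z "$host_port" ]; then
        echo "$ip::$container_port"             # -p <ip>::<containerPort>
    else
        echo "$ip:$host_port:$container_port"   # -p <ip>:<hostPort>:<containerPort>
    fi
}

# Usage: docker run --rm -p "$(publish_arg 172.30.1.101 '' 80)" jason/httpd:v0.2
```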

2>. Example: “-p <containerPort>”

[root@node101.yinzhengjie.org.cn ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

jason/httpd v0. 78fb6601880f hours ago .22MB

jason/httpd v0.- 76d5e6c143b2 hours ago .22MB

busybox latest 19485c79a9bb weeks ago .22MB

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name myweb --rm -p 80 jason/httpd:v0.2 #Use the custom image built earlier; the httpd service starts automatically when the container starts

[root@node101.yinzhengjie.org.cn ~]# docker inspect myweb | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "172.17.0.2",

"IPAddress": "172.17.0.2",

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# curl 172.17.0.2 #From another terminal, the container's service can be reached normally.

<h1>Busybox httpd server.[Jason Yin dao ci yi you !!!]</h1>

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# iptables -t nat -vnL #Look at the DOCKER chain: so-called port publishing is simply implemented by Docker with a DNAT rule.

Chain PREROUTING (policy ACCEPT packets, bytes)

pkts bytes target prot opt in out source destination

PREROUTING_direct all -- * * 0.0.0.0/0 0.0.0.0/0

PREROUTING_ZONES_SOURCE all -- * * 0.0.0.0/0 0.0.0.0/0

PREROUTING_ZONES all -- * * 0.0.0.0/0 0.0.0.0/0

DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT packets, bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT packets, bytes)

pkts bytes target prot opt in out source destination

OUTPUT_direct all -- * * 0.0.0.0/0 0.0.0.0/0

DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT packets, bytes)

pkts bytes target prot opt in out source destination

MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

RETURN all -- * * 192.168.122.0/24 224.0.0.0/24

RETURN all -- * * 192.168.122.0/24 255.255.255.255

MASQUERADE tcp -- * * 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

MASQUERADE udp -- * * 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

MASQUERADE all -- * * 192.168.122.0/24 !192.168.122.0/24

POSTROUTING_direct all -- * * 0.0.0.0/0 0.0.0.0/0

POSTROUTING_ZONES_SOURCE all -- * * 0.0.0.0/0 0.0.0.0/0

POSTROUTING_ZONES all -- * * 0.0.0.0/0 0.0.0.0/0

MASQUERADE tcp -- * * 172.17.0.2 172.17.0.2 tcp dpt:80

Chain DOCKER ( references)

pkts bytes target prot opt in out source destination

RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

DNAT tcp -- !docker0 * 0.0.0.0/0 0.0.0.0/0 tcp dpt:32768 to:172.17.0.2:80 #See this line? Docker relies on iptables underneath to publish the port.

Chain OUTPUT_direct ( references)

pkts bytes target prot opt in out source destination

Chain POSTROUTING_ZONES ( references)

pkts bytes target prot opt in out source destination

POST_public all -- * ens33 0.0.0.0/0 0.0.0.0/0 [goto]

POST_public all -- * + 0.0.0.0/0 0.0.0.0/0 [goto]

Chain POSTROUTING_ZONES_SOURCE ( references)

pkts bytes target prot opt in out source destination

Chain POSTROUTING_direct ( references)

pkts bytes target prot opt in out source destination

Chain POST_public ( references)

pkts bytes target prot opt in out source destination

POST_public_log all -- * * 0.0.0.0/0 0.0.0.0/0

POST_public_deny all -- * * 0.0.0.0/0 0.0.0.0/0

POST_public_allow all -- * * 0.0.0.0/0 0.0.0.0/0

Chain POST_public_allow ( references)

pkts bytes target prot opt in out source destination

Chain POST_public_deny ( references)

pkts bytes target prot opt in out source destination

Chain POST_public_log ( references)

pkts bytes target prot opt in out source destination

Chain PREROUTING_ZONES ( references)

pkts bytes target prot opt in out source destination

PRE_public all -- ens33 * 0.0.0.0/0 0.0.0.0/0 [goto]

PRE_public all -- + * 0.0.0.0/0 0.0.0.0/0 [goto]

Chain PREROUTING_ZONES_SOURCE ( references)

pkts bytes target prot opt in out source destination

Chain PREROUTING_direct ( references)

pkts bytes target prot opt in out source destination

Chain PRE_public ( references)

pkts bytes target prot opt in out source destination

PRE_public_log all -- * * 0.0.0.0/0 0.0.0.0/0

PRE_public_deny all -- * * 0.0.0.0/0 0.0.0.0/0

PRE_public_allow all -- * * 0.0.0.0/0 0.0.0.0/0

Chain PRE_public_allow ( references)

pkts bytes target prot opt in out source destination

Chain PRE_public_deny ( references)

pkts bytes target prot opt in out source destination

Chain PRE_public_log ( references)

pkts bytes target prot opt in out source destination

[root@node101.yinzhengjie.org.cn ~]#
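The DNAT rule is easier to read with `iptables -t nat -S DOCKER`, which prints each rule as the arguments that would recreate it. The sketch below extracts the published port and the container target from such lines. The sample rule in the test is written in the form Docker typically generates and is an illustration, not captured output. `dnat_summary` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: summarise DNAT rules from "iptables -t nat -S DOCKER" output
# as "hostPort -> containerIP:containerPort".
dnat_summary() {
    awk '/-j DNAT/ {
        port = ""; dest = ""
        for (i = 1; i < NF; i++) {
            if ($i == "--dport") port = $(i + 1)
            if ($i == "--to-destination") dest = $(i + 1)
        }
        print port " -> " dest
    }'
}

# Usage: iptables -t nat -S DOCKER | dnat_summary
```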

[root@node101.yinzhengjie.org.cn ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9c020aca98dc jason/httpd:v0.2 "/bin/httpd -f -h /d…" minutes ago Up minutes 0.0.0.0:32768->80/tcp myweb

[root@node101.yinzhengjie.org.cn ~]#

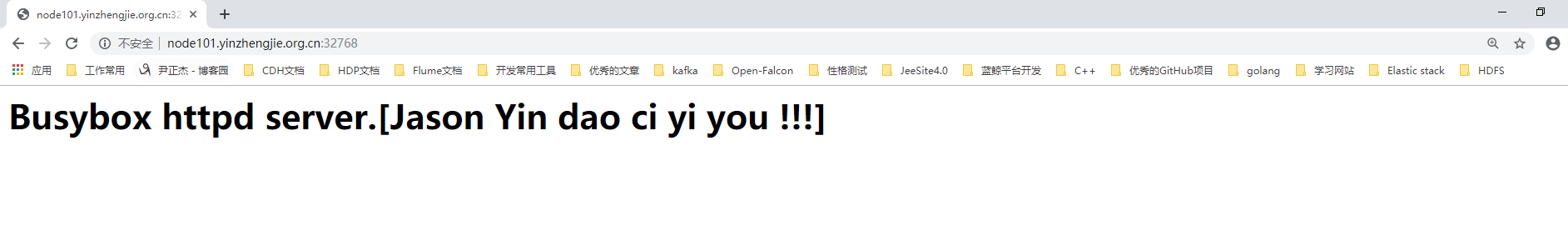

[root@node101.yinzhengjie.org.cn ~]# docker port myweb #Docker created one mapping: httpd in the myweb container is published on port 32768 of all the host's addresses, so it can be reached directly through the host, as shown in the figure.

80/tcp -> 0.0.0.0:32768

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# hostname -i

172.30.1.101

[root@node101.yinzhengjie.org.cn ~]#
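`docker port` prints lines of the form `<containerPort>/<proto> -> <hostIP>:<hostPort>`. When a container port is given as an argument, it prints only `<hostIP>:<hostPort>`. The sketch below extracts the host port and requests the page through the host address reported by `hostname -i` above. It assumes the container is named myweb and publishes port 80. `host_port` is a hypothetical helper. Some Docker versions may also print an IPv6 line; taking only the first line skips it.

```shell
#!/bin/sh
# Sketch: get the host port Docker picked for a published container port.

# Read "docker port" output on stdin; print the port after the last ":"
# in the first line.
host_port() {
    head -n 1 | awk '{ n = split($NF, parts, ":"); print parts[n] }'
}

# Usage (names and addresses as in this example):
#   port=$(docker port myweb 80/tcp | host_port)
#   curl "http://172.30.1.101:$port/"
```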

[root@node101.yinzhengjie.org.cn ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9c020aca98dc jason/httpd:v0.2 "/bin/httpd -f -h /d…" hours ago Up hours 0.0.0.0:32768->80/tcp myweb

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker kill myweb #Once the container stops, its entries in the iptables nat table are removed as well

myweb

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# iptables -t nat -vnL

Chain PREROUTING (policy ACCEPT packets, bytes)

pkts bytes target prot opt in out source destination

PREROUTING_direct all -- * * 0.0.0.0/0 0.0.0.0/0

PREROUTING_ZONES_SOURCE all -- * * 0.0.0.0/0 0.0.0.0/0

PREROUTING_ZONES all -- * * 0.0.0.0/0 0.0.0.0/0

DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT packets, bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT packets, bytes)

pkts bytes target prot opt in out source destination

OUTPUT_direct all -- * * 0.0.0.0/0 0.0.0.0/0

DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT packets, bytes)

pkts bytes target prot opt in out source destination

MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

RETURN all -- * * 192.168.122.0/24 224.0.0.0/24

RETURN all -- * * 192.168.122.0/24 255.255.255.255

MASQUERADE tcp -- * * 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

MASQUERADE udp -- * * 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

MASQUERADE all -- * * 192.168.122.0/24 !192.168.122.0/24

POSTROUTING_direct all -- * * 0.0.0.0/0 0.0.0.0/0

POSTROUTING_ZONES_SOURCE all -- * * 0.0.0.0/0 0.0.0.0/0

POSTROUTING_ZONES all -- * * 0.0.0.0/0 0.0.0.0/0

Chain DOCKER ( references)

pkts bytes target prot opt in out source destination

RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

Chain OUTPUT_direct ( references)

pkts bytes target prot opt in out source destination

Chain POSTROUTING_ZONES ( references)

pkts bytes target prot opt in out source destination

POST_public all -- * ens33 0.0.0.0/0 0.0.0.0/0 [goto]

POST_public all -- * + 0.0.0.0/0 0.0.0.0/0 [goto]

Chain POSTROUTING_ZONES_SOURCE ( references)

pkts bytes target prot opt in out source destination

Chain POSTROUTING_direct ( references)

pkts bytes target prot opt in out source destination

Chain POST_public ( references)

pkts bytes target prot opt in out source destination

POST_public_log all -- * * 0.0.0.0/0 0.0.0.0/0

POST_public_deny all -- * * 0.0.0.0/0 0.0.0.0/0

POST_public_allow all -- * * 0.0.0.0/0 0.0.0.0/0

Chain POST_public_allow ( references)

pkts bytes target prot opt in out source destination

Chain POST_public_deny ( references)

pkts bytes target prot opt in out source destination

Chain POST_public_log ( references)

pkts bytes target prot opt in out source destination

Chain PREROUTING_ZONES ( references)

pkts bytes target prot opt in out source destination

PRE_public all -- ens33 * 0.0.0.0/0 0.0.0.0/0 [goto]

PRE_public all -- + * 0.0.0.0/0 0.0.0.0/0 [goto]

Chain PREROUTING_ZONES_SOURCE ( references)

pkts bytes target prot opt in out source destination

Chain PREROUTING_direct ( references)

pkts bytes target prot opt in out source destination

Chain PRE_public ( references)

pkts bytes target prot opt in out source destination

PRE_public_log all -- * * 0.0.0.0/0 0.0.0.0/0

PRE_public_deny all -- * * 0.0.0.0/0 0.0.0.0/0

PRE_public_allow all -- * * 0.0.0.0/0 0.0.0.0/0

Chain PRE_public_allow ( references)

pkts bytes target prot opt in out source destination

Chain PRE_public_deny ( references)

pkts bytes target prot opt in out source destination

Chain PRE_public_log ( references)

pkts bytes target prot opt in out source destination

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#
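While a container with a published port is running, Docker's iptables backend adds a DNAT rule to the nat table's DOCKER chain. That rule is what disappears when the container is killed: the DOCKER chain above only holds the RETURN rule for docker0. The sketch below prints roughly what such a rule looks like. `docker_dnat_rule` is a hypothetical helper, the container address 172.17.0.2 is an assumed value, and the exact rule text can differ between Docker versions. On a real host, compare it with the output of `iptables -t nat -S DOCKER` while the container is running.

```shell
# Hypothetical helper: print approximately the rule Docker's iptables backend adds
# to the nat table's DOCKER chain for one published TCP port.
# Usage: docker_dnat_rule HOST_IP HOST_PORT CONTAINER_IP CONTAINER_PORT
docker_dnat_rule() {
  local host_ip="$1" host_port="$2" ctr_ip="$3" ctr_port="$4"
  local match_dst=""
  # A concrete host IP narrows the match with -d; 0.0.0.0 (or empty) means any local address
  if [ -n "$host_ip" ] && [ "$host_ip" != "0.0.0.0" ]; then
    match_dst="-d $host_ip/32 "
  fi
  # Packets that do not come from docker0 and target HOST_PORT are rewritten
  # (DNAT) to the container's IP and port
  printf '%s\n' "-A DOCKER ${match_dst}! -i docker0 -p tcp -m tcp --dport $host_port -j DNAT --to-destination $ctr_ip:$ctr_port"
}

docker_dnat_rule 0.0.0.0 80 172.17.0.2 80             # like "-p 80:80"
docker_dnat_rule 172.30.1.101 32768 172.17.0.2 80     # like "-p 172.30.1.101::80"
```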


3>.“-p <hostPort>:<containerPort>” example

[root@node101.yinzhengjie.org.cn ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

jason/httpd v0. 78fb6601880f hours ago .22MB

jason/httpd v0.- 76d5e6c143b2 hours ago .22MB

busybox latest 19485c79a9bb weeks ago .22MB

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name myweb --rm -p 80:80 jason/httpd:v0.2 #runs in the foreground and blocks this terminal; open a second terminal to check the container

[root@node101.yinzhengjie.org.cn ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4e508f5351e9 jason/httpd:v0.2 "/bin/httpd -f -h /d…" About a minute ago Up About a minute 0.0.0.0:80->80/tcp myweb

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker port myweb #port 80 of the container is bound to port 80 on every interface of the host

80/tcp -> 0.0.0.0:80

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

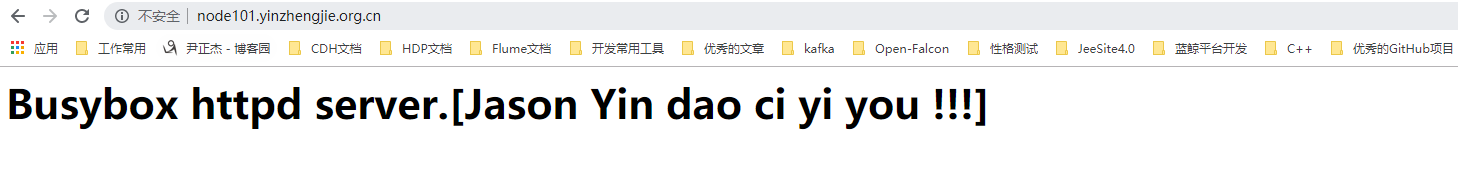

As the figure shows, the service is reachable through port 80 of the host.

[root@node101.yinzhengjie.org.cn ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4e508f5351e9 jason/httpd:v0.2 "/bin/httpd -f -h /d…" minutes ago Up minutes 0.0.0.0:80->80/tcp myweb

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker kill myweb #kill the myweb container

myweb

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@node101.yinzhengjie.org.cn ~]#


4>.“-p <ip>::<containerPort>” example

[root@node101.yinzhengjie.org.cn ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

jason/httpd v0. 78fb6601880f hours ago .22MB

jason/httpd v0.- 76d5e6c143b2 days ago .22MB

busybox latest 19485c79a9bb weeks ago .22MB

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name myweb --rm -p 172.30.1.101::80 jason/httpd:v0.2 #bind to a specific host IP when starting the container; check the port mapping from another terminal

[root@node101.yinzhengjie.org.cn ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9dab293691ef jason/httpd:v0.2 "/bin/httpd -f -h /d…" seconds ago Up seconds 172.30.1.101:32768->80/tcp myweb

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker port myweb #the container's port 80 is now dynamically bound to port 32768 on the 172.30.1.101 interface

80/tcp -> 172.30.1.101:32768

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# curl 172.30.1.101:32768 #the service is still reachable

<h1>Busybox httpd server.[Jason Yin dao ci yi you !!!]</h1>

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker kill myweb #stop the container

myweb

[root@node101.yinzhengjie.org.cn ~]#


5>.“-p <ip>:<hostPort>:<containerPort>” example

[root@node101.yinzhengjie.org.cn ~]# docker run --name myweb --rm -p 172.30.1.101:: jason/httpd:v0.2 #specify both a host IP and a host port to map to the container's port 80; the command blocks this terminal

[root@node101.yinzhengjie.org.cn ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4bb5e9b96599 jason/httpd:v0.2 "/bin/httpd -f -h /d…" About a minute ago Up About a minute 172.30.1.101:->/tcp myweb

[root@node101.yinzhengjie.org.cn ~]#

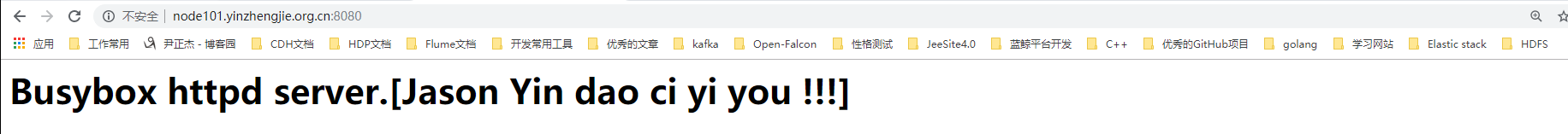

[root@node101.yinzhengjie.org.cn ~]# docker port myweb #the mapping is in effect; the figure shows the page opened from the physical machine

/tcp -> 172.30.1.101:

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#
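The `-p` forms demonstrated above differ only in which fields are filled in. An omitted IP means all host addresses, and an omitted host port lets Docker pick a free one. The function below is a hypothetical illustration of that field layout, not Docker's own parser. It ignores a `/tcp` or `/udp` suffix and does not handle IPv6 addresses. The host port 8080 in the last call is an assumed value.

```shell
# Hypothetical helper: split a "-p" value into host IP, host port and container port.
# Forms: containerPort | hostPort:containerPort | ip::containerPort | ip:hostPort:containerPort
parse_publish() {
  local spec="${1%%/*}"        # drop an optional "/tcp" or "/udp" suffix
  local colons ip="" host_port="" ctr_port="" rest
  colons=$(printf '%s' "$spec" | tr -cd ':')   # keep only the colons to count fields
  case ${#colons} in
    0) ctr_port="$spec" ;;
    1) host_port="${spec%%:*}"; ctr_port="${spec#*:}" ;;
    2) ip="${spec%%:*}"; rest="${spec#*:}"
       host_port="${rest%%:*}"; ctr_port="${rest#*:}" ;;
    *) echo "unsupported spec: $1" >&2; return 1 ;;
  esac
  # Empty IP = all host addresses; empty host port = Docker chooses a free port
  echo "ip=${ip:-0.0.0.0} hostPort=${host_port:-dynamic} containerPort=$ctr_port"
}

parse_publish 80:80                  # section 3>
parse_publish 172.30.1.101::80       # section 4>
parse_publish 172.30.1.101:8080:80   # ip:hostPort:containerPort, 8080 is hypothetical
```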

VI. Examples of shared network namespaces (joined container and host)

1>.Two containers sharing one network namespace

[root@node101.yinzhengjie.org.cn ~]# docker run --name c1 -it --rm busybox #start the first container and note its IP address

/ #

/ # ifconfig

eth0 Link encap:Ethernet HWaddr ::AC:::

inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (656.0 B) TX bytes: (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

/ #

[root@node101.yinzhengjie.org.cn ~]# docker run --name c2 -it --network container:c1 --rm busybox #start a second container that shares c1's network namespace; c2 reports exactly the same IP address as c1

/ #

/ # ifconfig

eth0 Link encap:Ethernet HWaddr ::AC:::

inet addr:172.17.0.2 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (656.0 B) TX bytes: (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

/ #

/ # echo "hello world" > /tmp/index.html

/ #

/ # ls /tmp/

index.html

/ #

/ # httpd -h /tmp/ #start an HTTP server inside c2

/ #

/ # netstat -ntl

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp ::: :::* LISTEN

/ #

[root@node101.yinzhengjie.org.cn ~]# docker run --name c1 -it --rm busybox

/ #

.....

/ # wget -O - -q 127.0.0.1 #c1 can reach the service running in c2 because both share the same network namespace

hello world

/ #

/ # ls /tmp/ #the filesystems are still separate: c1 cannot see the index.html created in c2

/ #
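You can confirm the c1/c2 experiment from the host by comparing namespace identifiers under /proc. Processes in the same network namespace show the same `net:[inode]` link, but their `mnt` links still differ, which is why /tmp/index.html is invisible in c1. The helper names below are hypothetical. Reading another process's /proc/PID/ns entries normally requires root, and the container PIDs come from `docker inspect -f '{{.State.Pid}}'`.

```shell
# Print the namespace identifier of a process, e.g. "net:[4026531992]".
# Usage: ns_id PID TYPE   (TYPE: net, mnt, uts, ipc, pid, ...)
ns_id() {
  readlink "/proc/$1/ns/$2"
}

# Succeed (exit 0) if two processes share the namespace of the given type.
# Usage: same_ns TYPE PID1 PID2
same_ns() {
  local a b
  a=$(ns_id "$2" "$1") || return 2
  b=$(ns_id "$3" "$1") || return 2
  [ "$a" = "$b" ]
}

# Example on the Docker host (as root, with c1 and c2 running):
#   p1=$(docker inspect -f '{{.State.Pid}}' c1)
#   p2=$(docker inspect -f '{{.State.Pid}}' c2)
#   same_ns net "$p1" "$p2" && echo "shared network namespace"
#   same_ns mnt "$p1" "$p2" || echo "separate filesystems"
# For a container started with --network host, "same_ns net 1 <pid>" also succeeds.
```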

2>.A container sharing the host's network namespace

[root@node101.yinzhengjie.org.cn ~]# ifconfig

docker0: flags=<UP,BROADCAST,MULTICAST> mtu

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80:::5aff:fe50:fc34 prefixlen scopeid 0x20<link>

ether ::5a::fc: txqueuelen (Ethernet)

RX packets bytes (4.6 KiB)

RX errors dropped overruns frame

TX packets bytes (8.1 KiB)

TX errors dropped overruns carrier collisions

ens33: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 172.30.1.101 netmask 255.255.255.0 broadcast 172.30.1.255

inet6 fe80::20c:29ff:febe:114d prefixlen scopeid 0x20<link>

ether :0c::be::4d txqueuelen (Ethernet)

RX packets bytes (1.9 MiB)

RX errors dropped overruns frame

TX packets bytes (619.9 KiB)

TX errors dropped overruns carrier collisions

lo: flags=<UP,LOOPBACK,RUNNING> mtu

inet 127.0.0.1 netmask 255.0.0.0

inet6 :: prefixlen scopeid 0x10<host>

loop txqueuelen (Local Loopback)

RX packets bytes (10.7 KiB)

RX errors dropped overruns frame

TX packets bytes (10.7 KiB)

TX errors dropped overruns carrier collisions

virbr0: flags=<UP,BROADCAST,MULTICAST> mtu

inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255

ether :::a9:de:9b txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name c1 --network host -it --rm busybox #the container uses the host's network namespace, so it sees exactly the same interfaces as the host

/ #

/ # ifconfig

docker0 Link encap:Ethernet HWaddr ::5A::FC:

inet addr:172.17.0.1 Bcast:172.17.255.255 Mask:255.255.0.0

inet6 addr: fe80:::5aff:fe50:fc34/ Scope:Link

UP BROADCAST MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (4.6 KiB) TX bytes: (8.1 KiB)

ens33 Link encap:Ethernet HWaddr :0C::BE::4D

inet addr:172.30.1.101 Bcast:172.30.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:febe:114d/ Scope:Link

UP BROADCAST RUNNING MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (2.0 MiB) TX bytes: (637.1 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::/ Scope:Host

UP LOOPBACK RUNNING MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (10.7 KiB) TX bytes: (10.7 KiB)

virbr0 Link encap:Ethernet HWaddr :::A9:DE:9B

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

UP BROADCAST MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

/ #

/ #

/ # echo "hello container" > /tmp/index.html

/ #

/ # ls /tmp/

index.html

/ #

/ # httpd -h /tmp/ #start the HTTPD service

/ #

/ # netstat -tnl

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0.0.0.0: 0.0.0.0:* LISTEN

tcp 0.0.0.0: 0.0.0.0:* LISTEN

tcp 192.168.122.1: 0.0.0.0:* LISTEN

tcp 0.0.0.0: 0.0.0.0:* LISTEN

tcp 127.0.0.1: 0.0.0.0:* LISTEN

tcp 127.0.0.1: 0.0.0.0:* LISTEN

tcp ::: :::* LISTEN

tcp ::: :::* LISTEN

tcp ::: :::* LISTEN

tcp ::: :::* LISTEN

tcp ::: :::* LISTEN

tcp ::: :::* LISTEN

/ #

/ #


[root@node101.yinzhengjie.org.cn ~]# netstat -ntl

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0.0.0.0: 0.0.0.0:* LISTEN

tcp 0.0.0.0: 0.0.0.0:* LISTEN

tcp 192.168.122.1: 0.0.0.0:* LISTEN

tcp 0.0.0.0: 0.0.0.0:* LISTEN

tcp 127.0.0.1: 0.0.0.0:* LISTEN

tcp 127.0.0.1: 0.0.0.0:* LISTEN

tcp6 ::: :::* LISTEN

tcp6 ::: :::* LISTEN

tcp6 ::: :::* LISTEN

tcp6 ::: :::* LISTEN

tcp6 ::: :::* LISTEN

tcp6 ::: :::* LISTEN

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# curl 127.0.0.1:80 #the httpd started inside the container is reachable from the host, because both use the same network namespace

hello container

[root@node101.yinzhengjie.org.cn ~]#

VII. Customizing the network properties of the docker0 bridge

1>.Edit the configuration file

[root@node101.yinzhengjie.org.cn ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# systemctl stop docker

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# vi /etc/docker/daemon.json

[root@node101.yinzhengjie.org.cn ~]#

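[root@node101.yinzhengjie.org.cn ~]# cat /etc/docker/daemon.json #the key option is "bip" (bridge IP), which sets the IP address of the docker0 bridge itself; the subnet and the containers' gateway are derived from it, but the DNS servers must be set separately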

{

"registry-mirrors": ["https://tuv7rqqq.mirror.aliyuncs.com"],

"bip":"192.168.100.254/24",

"dns":["219.141.139.10","219.141.140.10"]

}

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# systemctl start docker

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ifconfig docker0 #after restarting the docker service, the new setting is in effect

docker0: flags=<UP,BROADCAST,MULTICAST> mtu

inet 192.168.100.254 netmask 255.255.255.0 broadcast 192.168.100.255

inet6 fe80:::5aff:fe50:fc34 prefixlen scopeid 0x20<link>

ether ::5a::fc: txqueuelen (Ethernet)

RX packets bytes (4.6 KiB)

RX errors dropped overruns frame

TX packets bytes (8.1 KiB)

TX errors dropped overruns carrier collisions

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

2>.Start a container to verify that the configuration took effect

[root@node101.yinzhengjie.org.cn ~]# docker run --name c1 -it --rm busybox #as expected, the new settings are in effect

/ #

/ # ifconfig

eth0 Link encap:Ethernet HWaddr ::C0:A8::

inet addr:192.168.100.1 Bcast:192.168.100.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (516.0 B) TX bytes: (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

/ #

/ # cat /etc/resolv.conf

search yinzhengjie.org.cn

nameserver 219.141.139.10

nameserver 219.141.140.10

/ #

/ # route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.100.254 0.0.0.0 UG eth0

192.168.100.0 0.0.0.0 255.255.255.0 U eth0

/ #
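As the comment on daemon.json says, the other docker0 values follow from bip. The address becomes the bridge's own IP, and therefore the containers' default gateway. The prefix length fixes the subnet and broadcast address, which matches the 192.168.100.254 gateway and 192.168.100.255 broadcast shown above. Here is a minimal IPv4-only sketch of that arithmetic in POSIX shell. The function names are hypothetical.

```shell
# Convert dotted IPv4 to an integer; IFS is changed only inside the subshell
ip2int() {
  (IFS=.; set -- $1; echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 )) )
}

# Convert an integer back to dotted IPv4 notation
int2ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Derive the values implied by a "bip" setting such as 192.168.100.254/24
bip_info() {
  local addr="${1%/*}" len="${1#*/}" ip mask net bcast
  ip=$(ip2int "$addr")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))   # e.g. /24 -> 255.255.255.0
  net=$(( ip & mask ))                                   # network address
  bcast=$(( net | (~mask & 0xFFFFFFFF) ))                # all host bits set
  echo "gateway=$addr subnet=$(int2ip "$net")/$len broadcast=$(int2ip "$bcast")"
}

bip_info 192.168.100.254/24
```

The DNS servers cannot be derived this way, which is why the `dns` key is still needed in daemon.json.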

VIII. Changing how the docker daemon listens

1>.Check the Unix socket docker listens on by default

[root@node101.yinzhengjie.org.cn ~]# ll /var/run/docker.sock #by default docker listens only on this Unix socket, which supports local communication only; accepting connections from other hosts requires another listener

srw-rw----. root docker Oct : /var/run/docker.sock

[root@node101.yinzhengjie.org.cn ~]#

2>.Edit the configuration file

[root@node101.yinzhengjie.org.cn ~]# docker container ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e249bc41f2fd busybox "sh" minutes ago Up minutes c1

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker kill c1

c1

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker container ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# systemctl stop docker

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# vi /etc/docker/daemon.json

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# cat /etc/docker/daemon.json #"hosts" makes docker listen on TCP (so other hosts can connect) and on the Unix socket (for local clients); daemon.json is strict JSON, so such notes cannot be written inside the file

{

"registry-mirrors": ["https://tuv7rqqq.mirror.aliyuncs.com"],

"bip":"192.168.100.254/24",

"dns":["219.141.139.10","219.141.140.10"],

"hosts":["tcp://0.0.0.0:8888","unix:///var/run/docker.sock"]

}

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# systemctl start docker

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# netstat -ntl | grep 8888

tcp6 :::8888 :::* LISTEN

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# systemctl restart docker #a startup failure I ran into, with the complete troubleshooting steps

Warning: docker.service changed on disk. Run 'systemctl daemon-reload' to reload units.

Job for docker.service failed because the control process exited with error code. See "systemctl status docker.service" and "journalctl -xe" for details.

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# tail -100f /var/log/messages #watch the log while starting the service; it shows the following error

......

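Oct :: node101 dockerd: unable to configure the Docker daemon with file /etc/docker/daemon.json: the following directives are specified both as a flag and in the configuration file: hosts: (from flag: [fd://], from file: [tcp://0.0.0.0:2375 unix:///var/run/docker.sock]) ....

At first this looked like a mistake in the configuration file, but I could not find anything wrong with it. The official documentation (https://docs.docker.com/config/daemon/) describes this situation: on systems where systemd starts dockerd, the unit file already passes the -H flag (here -H fd://). An option may not be set both as a flag and in daemon.json, so adding the "hosts" key causes a conflict and Docker fails to start. The fix is to override ExecStart with a systemd drop-in that starts dockerd without -H: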

[root@node101.yinzhengjie.org.cn ~]# mkdir -pv /etc/systemd/system/docker.service.d/

mkdir: created directory ‘/etc/systemd/system/docker.service.d/’

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# vi /etc/systemd/system/docker.service.d/docker.conf

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# cat /etc/systemd/system/docker.service.d/docker.conf

[Service]

ExecStart=

ExecStart=/usr/bin/dockerd

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# systemctl daemon-reload #this step is required

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# systemctl restart docker #after these steps the problem is solved

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# netstat -ntl | grep 8888

tcp6 :::8888 :::* LISTEN

[root@node101.yinzhengjie.org.cn ~]#
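The log message names the conflict directly: `hosts` arrives once from the `-H fd://` flag in the systemd unit and once from daemon.json. The drop-in above clears `ExecStart` and starts dockerd without `-H`. The hypothetical check below reproduces that reasoning before a restart. It only looks for the `hosts` key and the `-H`/`--host` flags, not for other duplicated options.

```shell
# Hypothetical pre-flight check for the "specified both as a flag and in the
# configuration file" error, limited to the hosts option.
# Usage: check_hosts_conflict /etc/docker/daemon.json "<dockerd command line>"
check_hosts_conflict() {
  local daemon_json="$1" cmdline="$2"
  # No "hosts" key in daemon.json: nothing can conflict
  if ! grep -q '"hosts"[[:space:]]*:' "$daemon_json"; then
    echo ok
    return 0
  fi
  # "hosts" is in the file; does the command line also pass -H / --host?
  case " $cmdline " in
    *" -H "*|*" -H="*|*" --host "*|*" --host="*)
      echo conflict
      return 1 ;;
  esac
  echo ok
}

# Example on the Docker host:
#   check_hosts_conflict /etc/docker/daemon.json "$(systemctl show -p ExecStart docker)"
```

On newer docker-ce packages the stock ExecStart may carry other flags too, such as `--containerd=/run/containerd/containerd.sock`. An override like the one above drops them as well, so compare with `systemctl cat docker` before writing it.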

3>.Verifying the configuration (a docker client connecting to another docker daemon)

[root@node101.yinzhengjie.org.cn ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

redis latest de25a81a5a0b hours ago .2MB

busybox latest 19485c79a9bb weeks ago .22MB

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name c1 -it --rm busybox #run a container on node101.yinzhengjie.org.cn; meanwhile, access this node's docker service from another node

/ #

/ # ifconfig

eth0 Link encap:Ethernet HWaddr ::C0:A8::

inet addr:192.168.100.1 Bcast:192.168.100.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

/ #

/ # cat /etc/resolv.conf

search www.tendawifi.com yinzhengjie.org.cn

nameserver 219.141.139.10

nameserver 219.141.140.10

/ #


[root@node102.yinzhengjie.org.cn ~]# systemctl start docker

[root@node102.yinzhengjie.org.cn ~]#

[root@node102.yinzhengjie.org.cn ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

[root@node102.yinzhengjie.org.cn ~]#

[root@node102.yinzhengjie.org.cn ~]# docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

[root@node102.yinzhengjie.org.cn ~]#

[root@node102.yinzhengjie.org.cn ~]# systemctl stop docker #stop the docker service on node102.yinzhengjie.org.cn

[root@node102.yinzhengjie.org.cn ~]#

[root@node102.yinzhengjie.org.cn ~]# docker -H node101.yinzhengjie.org.cn:8888 image ls #query the docker service on node101.yinzhengjie.org.cn; its images are listed

REPOSITORY TAG IMAGE ID CREATED SIZE

redis latest de25a81a5a0b hours ago .2MB

busybox latest 19485c79a9bb weeks ago .22MB

[root@node102.yinzhengjie.org.cn ~]#

[root@node102.yinzhengjie.org.cn ~]# docker -H node101.yinzhengjie.org.cn:8888 container ls #containers can be listed the same way. Note: the port must be given explicitly; it can be omitted only if the daemon listens on 2375

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

68db1d8192ad busybox "sh" minutes ago Up minutes c1

[root@node102.yinzhengjie.org.cn ~]#

[root@node102.yinzhengjie.org.cn ~]#
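This sketch shows how a value like `node101.yinzhengjie.org.cn:8888` passed to `docker -H` is interpreted. A bare host or host:port means plain TCP, and when the port is omitted the CLI falls back to 2375. That is why the port has to be spelled out here, since the daemon listens on 8888. `normalize_docker_host` is a hypothetical helper, and IPv6 literals are not handled.

```shell
# Hypothetical helper: normalize a -H / DOCKER_HOST value.
normalize_docker_host() {
  local h="$1"
  case $h in
    unix://*|fd://*|ssh://*|npipe://*) echo "$h"; return 0 ;;  # non-TCP schemes pass through
    tcp://*) h="${h#tcp://}" ;;
  esac
  case $h in
    *:*) ;;                    # port given explicitly
    *) h="$h:2375" ;;          # no port: assume Docker's default plain-TCP port
  esac
  echo "tcp://$h"
}

normalize_docker_host node101.yinzhengjie.org.cn:8888
normalize_docker_host node101.yinzhengjie.org.cn

# Equivalent ways to talk to the remote daemon from node102:
#   docker -H tcp://node101.yinzhengjie.org.cn:8888 image ls
#   export DOCKER_HOST=tcp://node101.yinzhengjie.org.cn:8888; docker image ls
```

A plain `tcp://` listener has no authentication or encryption, so anyone who can reach port 8888 can control the daemon. Docker's documentation recommends protecting remote access with TLS.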

IX. Creating custom networks

1>.List the existing networks

[root@node101.yinzhengjie.org.cn ~]# docker network ls #the default networks; a container created without a network option uses "bridge"

NETWORK ID NAME DRIVER SCOPE

7ad23e4ff4b3 bridge bridge local

8aeb2bc6b3fe host host local

7e83f7595aac none null local

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker info | grep Network

Network: bridge host ipvlan macvlan null overlay #besides the default bridge, host and null, docker also supports the ipvlan, macvlan and overlay drivers

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#

2>.Start a container on a custom network

[root@node101.yinzhengjie.org.cn ~]# docker network create --help #show the help for creating a network

Usage: docker network create [OPTIONS] NETWORK

Create a network

Options:

--attachable Enable manual container attachment

--aux-address map Auxiliary IPv4 or IPv6 addresses used by Network driver (default map[])

--config-from string The network from which copying the configuration

--config-only Create a configuration only network

-d, --driver string Driver to manage the Network (default "bridge")

--gateway strings IPv4 or IPv6 Gateway for the master subnet

--ingress Create swarm routing-mesh network

--internal Restrict external access to the network

--ip-range strings Allocate container ip from a sub-range

--ipam-driver string IP Address Management Driver (default "default")

--ipam-opt map Set IPAM driver specific options (default map[])

--ipv6 Enable IPv6 networking

--label list Set metadata on a network

-o, --opt map Set driver specific options (default map[])

--scope string Control the network's scope

--subnet strings Subnet in CIDR format that represents a network segment

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]#


[root@node101.yinzhengjie.org.cn ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

7ad23e4ff4b3 bridge bridge local

8aeb2bc6b3fe host host local

7e83f7595aac none null local

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker network create -d bridge --subnet "192.168.200.0/24" --gateway "192.168.200.254" mybr0 #create a bridge-based network named mybr0

3d42817e3691bc9f4275b6a222ef6d792b1e0817817e97af77d35dfdfbfe7e24

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker network ls #note the new "mybr0" entry

NETWORK ID NAME DRIVER SCOPE

7ad23e4ff4b3 bridge bridge local

8aeb2bc6b3fe host host local

3d42817e3691 mybr0 bridge local

7e83f7595aac none null local

[root@node101.yinzhengjie.org.cn ~]#
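By default Docker names the Linux bridge of a user-defined network `br-` followed by the first 12 hex digits of the network ID. That is why the mybr0 network created above shows up as br-3d42817e3691 in the following ifconfig output. Here is a one-line sketch; `bridge_ifname` is a hypothetical helper name.

```shell
# Hypothetical helper: default interface name of a user-defined bridge network
bridge_ifname() {
  printf 'br-%.12s\n' "$1"     # "%.12s" keeps only the first 12 characters of the ID
}

bridge_ifname 3d42817e3691bc9f4275b6a222ef6d792b1e0817817e97af77d35dfdfbfe7e24

# To choose the interface name when the network is created, instead of renaming
# it afterwards with "ip link", the bridge driver accepts an option, e.g.:
#   docker network create -d bridge -o com.docker.network.bridge.name=docker1 \
#     --subnet 192.168.200.0/24 --gateway 192.168.200.254 mybr0
```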

[root@node101.yinzhengjie.org.cn ~]# ifconfig #note the new interface, whose address is the gateway configured above, "192.168.200.254"

br-3d42817e3691: flags=<UP,BROADCAST,MULTICAST> mtu

inet 192.168.200.254 netmask 255.255.255.0 broadcast 192.168.200.255

ether ::db:6f::5d txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

docker0: flags=<UP,BROADCAST,MULTICAST> mtu

inet 192.168.100.254 netmask 255.255.255.0 broadcast 192.168.100.255

ether :::4f::da txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

enp0s3: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.0.2.15 netmask 255.255.255.0 broadcast 10.0.2.255

ether :::e0:bb: txqueuelen (Ethernet)

RX packets bytes (132.7 MiB)

RX errors dropped overruns frame

TX packets bytes (840.4 KiB)

TX errors dropped overruns carrier collisions

enp0s8: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 172.30.1.101 netmask 255.255.255.0 broadcast 172.30.1.255

ether :::c1:c7: txqueuelen (Ethernet)

RX packets bytes (195.3 KiB)

RX errors dropped overruns frame

TX packets bytes (381.9 KiB)

TX errors dropped overruns carrier collisions

lo: flags=<UP,LOOPBACK,RUNNING> mtu

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ifconfig

br-3d42817e3691: flags=<UP,BROADCAST,MULTICAST> mtu

inet 192.168.200.254 netmask 255.255.255.0 broadcast 192.168.200.255

ether ::db:6f::5d txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

docker0: flags=<UP,BROADCAST,MULTICAST> mtu

inet 192.168.100.254 netmask 255.255.255.0 broadcast 192.168.100.255

ether :::4f::da txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

enp0s3: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.0.2.15 netmask 255.255.255.0 broadcast 10.0.2.255

ether :::e0:bb: txqueuelen (Ethernet)

RX packets bytes (132.7 MiB)

RX errors dropped overruns frame

TX packets bytes (840.4 KiB)

TX errors dropped overruns carrier collisions

enp0s8: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 172.30.1.101 netmask 255.255.255.0 broadcast 172.30.1.255

ether :::c1:c7: txqueuelen (Ethernet)

RX packets bytes (206.9 KiB)

RX errors dropped overruns frame

TX packets bytes (399.0 KiB)

TX errors dropped overruns carrier collisions

lo: flags=<UP,LOOPBACK,RUNNING> mtu

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ifconfig br-3d42817e3691 down #bring the interface down before renaming it

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ip link set dev br-3d42817e3691 name docker1 #rename our custom bridge interface to docker1

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# ifconfig -a

docker0: flags=<UP,BROADCAST,MULTICAST> mtu

inet 192.168.100.254 netmask 255.255.255.0 broadcast 192.168.100.255

ether :::4f::da txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

docker1: flags=<BROADCAST,MULTICAST> mtu

inet 192.168.200.254 netmask 255.255.255.0 broadcast 192.168.200.255

ether ::db:6f::5d txqueuelen (Ethernet)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

enp0s3: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 10.0.2.15 netmask 255.255.255.0 broadcast 10.0.2.255

ether :::e0:bb: txqueuelen (Ethernet)

RX packets bytes (132.7 MiB)

RX errors dropped overruns frame

TX packets bytes (840.4 KiB)

TX errors dropped overruns carrier collisions

enp0s8: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 172.30.1.101 netmask 255.255.255.0 broadcast 172.30.1.255

ether :::c1:c7: txqueuelen (Ethernet)

RX packets bytes (214.4 KiB)

RX errors dropped overruns frame

TX packets bytes (409.6 KiB)

TX errors dropped overruns carrier collisions

lo: flags=<UP,LOOPBACK,RUNNING> mtu

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@node101.yinzhengjie.org.cn ~]#

[root@node101.yinzhengjie.org.cn ~]# docker run --name c1 -it --net mybr0 --rm busybox:latest #create a container named c1 on our custom network mybr0

/ #

/ # ifconfig

eth0 Link encap:Ethernet HWaddr ::C0:A8:C8:

inet addr:192.168.200.1 Bcast:192.168.200.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

/ #

[root@node101.yinzhengjie.org.cn ~]# docker run --name c2 -it --net bridge --rm busybox #create a container named c2 on the default bridge network

/ #

/ # ifconfig

eth0 Link encap:Ethernet HWaddr ::C0:A8::

inet addr:192.168.100.1 Bcast:192.168.100.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU: Metric:

RX packets: errors: dropped: overruns: frame:

TX packets: errors: dropped: overruns: carrier:

collisions: txqueuelen:

RX bytes: (0.0 B) TX bytes: (0.0 B)

/ #

/ # ping 192.168.200.1 #c2 cannot reach c1 on the custom "mybr0" network. If IP forwarding ("/proc/sys/net/ipv4/ip_forward") is enabled, the cause is probably in iptables, and allow rules have to be added manually

PING 192.168.200.1 (192.168.200.1): data bytes
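Here is why c2 cannot reach c1. The address 192.168.200.1 is outside c2's own subnet, so c2 sends the packets to its default gateway on docker0, and the host would have to forward them into mybr0. Docker releases from around this time (iptables backend) deliberately drop traffic between different bridge networks in the filter table's DOCKER-ISOLATION-STAGE-1/2 chains, so enabling forwarding alone is not enough. The check below covers the subnet part; the function names are hypothetical and only IPv4 is handled. The commented commands show how to inspect the isolation rules, or how to attach c2 to mybr0 instead of editing iptables by hand.

```shell
# Convert dotted IPv4 to an integer; IFS is changed only inside the subshell
ip2int() {
  (IFS=.; set -- $1; echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 )) )
}

# Succeed if IPv4 address $1 lies inside CIDR $2
in_subnet() {
  local len="${2#*/}" ip net mask
  ip=$(ip2int "$1")
  net=$(ip2int "${2%/*}")
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# c1 (192.168.200.1) is not inside c2's subnet (192.168.100.0/24), so c2
# cannot reach it directly and must go through its gateway on docker0
in_subnet 192.168.200.1 192.168.100.0/24 || echo "c1 is outside c2's subnet"

# On the Docker host:
#   iptables -S DOCKER-ISOLATION-STAGE-1   # inspect the inter-bridge isolation rules
#   docker network connect mybr0 c2        # give c2 a second interface on mybr0
```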
