DeepFM

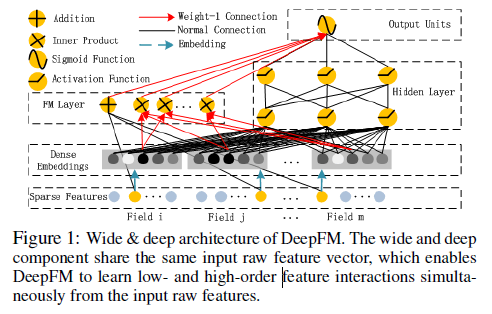

DeepFM integrates the architectures of FM and deep neural networks (DNN). It models low-order feature interactions like FM(二阶组合特征) and models high-order feature interactions like DNN(高阶组合特征). FM部分和Deep部分输入的特征一样,都是one-hot后的特征,共享同一个embedding层,cvr类型的特征需要离散化处理(每个field对应一个embedding层,如何定义layer,使得连接权重全部为常数1,输入节点两两组合进行Inner Product?)

FM部分和Deep部分输入的特征一样,都是one-hot后的特征,共享同一个embedding层,cvr类型的特征需要离散化处理(每个field对应一个embedding层,如何定义layer,使得连接权重全部为常数1,输入节点两两组合进行Inner Product?)

FM部分:

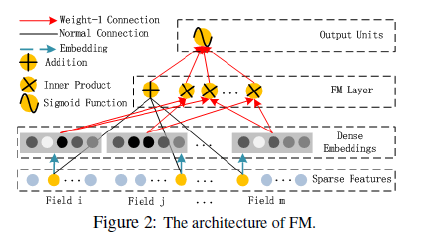

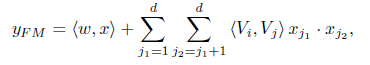

The FM component is a factorization machine,which is proposed to learn feature interactions for recommendation. Besides a linear (order-1) interactions among features, FM models pairwise (order-2) feature interactions as inner product of respective feature latent vectors(FM通过矩阵分解,每个特征对应一个隐向量,任意两个特征组合对应两个隐向量的点积). It can capture order-2 feature interactions much more effectively than previous approaches especially when the dataset is sparse. In previous approaches, the parameter of an interaction of features i and j can be trained only when feature i and feature j both appear in the same data record(两两特征进行组合,训练需要大量的两特征都存在的样本). While in FM, it is measured via the inner product of their latent vectors Vi and Vj . Thanks to this flexible design, FM can train latent vector Vi (Vj ) whenever i (or j) appears in a data record. Therefore, feature interactions, which are never or rarely appeared in the training data, are better learnt by FM.(FM中学习组合特征参数,就是学习特征对应的隐向量,由下面的式子可知,隐向量的训练不需要样本中两个特征同时都出现,这需要隐向量对应的特征存在即可。)

参考: http://www.cnblogs.com/ljygoodgoodstudydaydayup/p/6340129.html

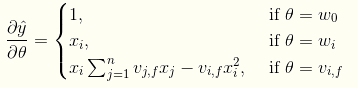

FM训练的时候,要求解的梯度为:

对应的公式:

对应的公式:

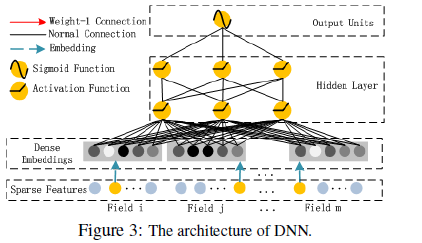

A Normal Connection in black refers to a connection with weight to be learned; a Weight-1 Connection, red arrow, is a connection with weight 1 by default(隐向量在Inner Product神经元中计算点积 ); Embedding,blue dashed arrow, means a latent vector to be learned(每个特征对应一个隐向量,embedding层其实就是一个全连接层,该全连接层有k个神经元,k是隐向量的维度,每个神经元的维度等于输入特征向量的维度。每个field生成一个词向量); Addition means adding all input together; Product, including Innerand Outer-Product, means the output of this unit is the product of two input vector; Sigmoid Function is used as the output function in CTR prediction; Activation Functions, such as relu and tanh, are used for non-linearly transforming the signal.

Deep 部分:

The raw feature input vector for CTR prediction is usually highly sparse, super high-dimensional, categorical-continuous-mixed, and grouped in fields。 This suggests an embedding layer to compress the input vector to a low dimensional, dense real-value vector before further feeding into the first hidden layer, otherwise the network can be overwhelming to train.

每个Field对应一个特征one-hot后的稀疏向量(维度:1 * n),embedding层有k个神经元,神经元的维度为n * 1,Field对应的稀疏向量与这k个神经元都连接,每个神经元上输出一个实数,则embedding层输出的结果为1*k的向量。

1) while the lengths of different input field vectors can be different(不同特征的特征值数目不同,所以one-hot向量的维度也不同),their embeddings are of the same size (k);

2) the latent feature vectors (V ) in FM now server as network weights which are learned and used to compress the input field vectors to the embedding vectors.

It is worth pointing out that FM component and deep component share the same feature embedding, which brings two important benefits: 1) it learns both low- and high-order feature interactions from raw features; 2) there is no need for expertise feature engineering of the input, as required in Wide & Deep 。

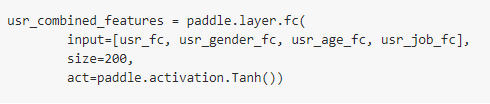

每个field对应一个词向量: http://book.paddlepaddle.org/index.cn.html

usr_age_id = paddle.layer.data(

name='age_id',

type=paddle.data_type.integer_value(

len(paddle.dataset.movielens.age_table)))

usr_age_emb = paddle.layer.embedding(input=usr_age_id, size=16)

usr_age_fc = paddle.layer.fc(input=usr_age_emb, size=16) usr_job_id = paddle.layer.data(

name='job_id',

type=paddle.data_type.integer_value(

paddle.dataset.movielens.max_job_id() + 1))

usr_job_emb = paddle.layer.embedding(input=usr_job_id, size=16)

usr_job_fc = paddle.layer.fc(input=usr_job_emb, size=16)

Relationship with the other Neural Networks:

FNN

PNN

Wide & Deep

Parameter Settings:

1) dropout : 0.5

Dropout refers to the probability that a neuron is kept in the network.Dropout is a regularization technique to compromise the precision and the complexity of the neural network.We set the dropout to be 1.0, 0.9, 0.8, 0.7, 0.6,0.5. The result shows that adding reasonable randomness to model can strengthen model's robustness.

2) network structure: 400-400-400

3) optimizer: Adam

4) activation function: relu https://arxiv.org/pdf/1611.00144.pdf

5) the latent dimension: 10

6) number of hidden layers

As presented in Figure 10, increasing number of hidden layers improves the performance of the models at the beginning, however, their performance is degraded if the number of hidden layers keeps increasing. This phenomenon is also because of overfitting.

注意激活层使用的激活函数以及BN层、 dropout层(隐层中设置dropout值就行了吧?)的位置

https://github.com/Leavingseason/OpenLearning4DeepRecsys

dnn ctr预估模型:

http://www.52cs.org/?p=1046

http://www.52cs.org/?p=1851

DeepFM的更多相关文章

- DeepCTR专题:DeepFM论文学习和实现及感悟

论文地址:https://arxiv.org/pdf/1703.04247.pdf CTR预估我们知道在比较多的应用场景下都有使用.如:搜索排序.推荐系统等都有广泛的应用.并且CTR具有极其重要的 地 ...

- CTR预估算法之FM, FFM, DeepFM及实践

https://blog.csdn.net/john_xyz/article/details/78933253 目录目录CTR预估综述Factorization Machines(FM)算法原理代码实 ...

- 用 tensorflow实现DeepFM

http://www.fabwrite.com/deepfm 文章DeepFM: A Factorization-Machine based Neural Network for CTR Predic ...

- DeepFM算法解析及Python实现

1. DeepFM算法的提出 由于DeepFM算法有效的结合了因子分解机与神经网络在特征学习中的优点:同时提取到低阶组合特征与高阶组合特征,所以越来越被广泛使用. 在DeepFM中,FM算法负责对一阶 ...

- DeepFM模型理论及代码实现

论文地址:DeepFM: A Factorization-Machine based Neural Network for CTR Prediction

- (读论文)推荐系统之ctr预估-DeepFM模型解析

今天第二篇(最近更新的都是Deep模型,传统的线性模型会后面找个时间更新的哈).本篇介绍华为的DeepFM模型 (2017年),此模型在 Wide&Deep 的基础上进行改进,成功解决了一些问 ...

- CTR@DeepFM

1. DeepFM算法 结合FM算法和DNN算法,同时提取低阶特征和高阶特征,然后组合.FM算法负责对一阶特征及由一阶特征两两组合成的二阶特征进行特征提取:DNN算法负责对由输入的一阶特征进行全连接等 ...

- 个性化排序算法实践(三)——deepFM算法

FM通过对于每一位特征的隐变量内积来提取特征组合,最后的结果也不错,虽然理论上FM可以对高阶特征组合进行建模,但实际上因为计算复杂度原因,一般都只用到了二阶特征组合.对于高阶特征组合来说,我们很自然想 ...

- tfserving 调用deepfm 并预测 java 【参考】

https://blog.csdn.net/luoyexuge/article/details/79941565?utm_source=blogxgwz8 首先是libsvm格式数据生成java代码, ...

随机推荐

- Android Studio问题记录

1>Android Studio中module是什么,? 答:Android Studio是基于intellij,跟eclipse不太一样.对应关系如下: intellij的project -- ...

- docker系列之基础命令-1

1.docker基础命令 docker images 显示镜像列表 docker ps 显示容器列表 docker run IMAGE_ID 指定镜像, 运行一个容器 docker start/sto ...

- RN与现有的原生app集成

https://facebook.github.io/react-native/docs/integration-with-existing-apps.html RN可以很好地支持往一个原生的app上 ...

- ios开发中遇到的文件和字符的问题大总结

我今天遇到的NSString问题 今天遇到一个字符串空指针问题,让我明白了许多 其实我们定义一个NSString * string,其实是定义了一个字符串指针,现在string没有指向任何地方,我们必 ...

- Hive 将本地数据导入hive表中

# 导入 load data local inpath '/root/mr/The_Man_of_Property.txt' insert into table article; # 提示 FAILE ...

- spring junit4 单元测试运行正常,但是数据库并无变化

解决方案 http://blog.csdn.net/molingduzun123/article/details/49383235 原因:Spring Juint为了不污染数据,对数据的删除和更新操作 ...

- C++ char数组和string类简单使用总结

使用char数组,进行字符串的操作,是c风格的操作方式. string是C++的风格,感觉string本质上就是一个vector<char> 以下代码详细展示了字符串的常见操作 #incl ...

- TOJ 4244: Sum

4244: Sum Time Limit(Common/Java):3000MS/9000MS Memory Limit:65536KByteTotal Submit: 63 ...

- 3.ruby语法基础,全部变量,实例变量,类变量,局部变量的使用和注意的要点

1.ruby的全局变量的概念和Java的全局变量的概念不同, ruby的全局变量是以$符号开头的,如果给全局变量的初始化值为nil会出现警告. 赋值给全局变量,这是ruby不推荐的,这样会使程序变得很 ...

- SPOJ GSS1 Can you answer these queries I ——线段树

[题目分析] 线段树裸题. 注意update的操作,写结构体里好方便. 嗯,没了. [代码] #include <cstdio> #include <cstring> #inc ...