Spark进阶之路-Standalone模式搭建

Spark进阶之路-Standalone模式搭建

作者:尹正杰

版权声明:原创作品,谢绝转载!否则将追究法律责任。

一.Spark的集群的准备环境

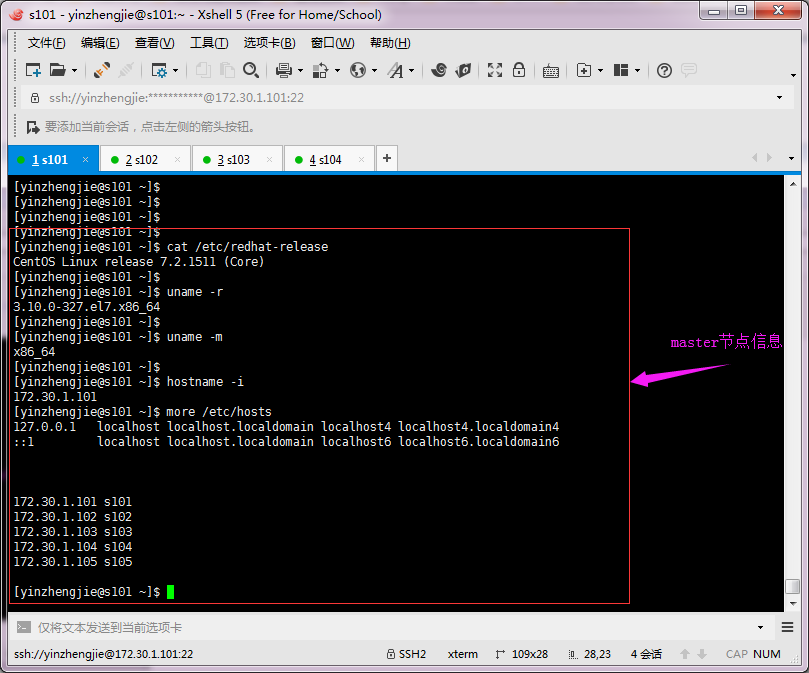

1>.master节点信息(s101)

2>.worker节点信息(s102)

3>.worker节点信息(s103)

4>.worker节点信息(s104)

二.Spark的Standalone模式搭建

1>.下载Spark安装包

Spark下载地址:https://archive.apache.org/dist/spark/

[yinzhengjie@s101 download]$ sudo yum -y install wget

[sudo] password for yinzhengjie:

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.aliyun.com

* extras: mirrors.aliyun.com

* updates: mirrors.aliyun.com

Resolving Dependencies

--> Running transaction check

---> Package wget.x86_64 :1.14-.el7_4. will be installed

--> Finished Dependency Resolution Dependencies Resolved =====================================================================================================================================================================

Package Arch Version Repository Size

=====================================================================================================================================================================

Installing:

wget x86_64 1.14-.el7_4. base k Transaction Summary

=====================================================================================================================================================================

Install Package Total download size: k

Installed size: 2.0 M

Downloading packages:

wget-1.14-.el7_4..x86_64.rpm | kB ::

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : wget-1.14-.el7_4..x86_64 /

Verifying : wget-1.14-.el7_4..x86_64 / Installed:

wget.x86_64 :1.14-.el7_4. Complete!

[yinzhengjie@s101 download]$

安装wget软件包([yinzhengjie@s101 download]$ sudo yum -y install wget)

[yinzhengjie@s101 download]$ wget https://archive.apache.org/dist/spark/spark-2.1.1/spark-2.1.1-bin-hadoop2.7.tgz #下载你想要下载的版本

2>.解压配置文件

[yinzhengjie@s101 download]$ ll

total

-rw-r--r--. yinzhengjie yinzhengjie Aug hadoop-2.7..tar.gz

-rw-r--r--. yinzhengjie yinzhengjie May jdk-8u131-linux-x64.tar.gz

-rw-r--r--. yinzhengjie yinzhengjie Jul spark-2.1.-bin-hadoop2..tgz

-rw-r--r--. yinzhengjie yinzhengjie Jun : zookeeper-3.4..tar.gz

[yinzhengjie@s101 download]$

[yinzhengjie@s101 download]$ tar -xf spark-2.1.-bin-hadoop2..tgz -C /soft/ #加压Spark安装包到指定目录

[yinzhengjie@s101 download]$ ll /soft/

total

lrwxrwxrwx. yinzhengjie yinzhengjie Aug : hadoop -> /soft/hadoop-2.7./

drwxr-xr-x. yinzhengjie yinzhengjie Aug : hadoop-2.7.

lrwxrwxrwx. yinzhengjie yinzhengjie Aug : jdk -> /soft/jdk1..0_131/

drwxr-xr-x. yinzhengjie yinzhengjie Mar jdk1..0_131

drwxr-xr-x. yinzhengjie yinzhengjie Apr spark-2.1.-bin-hadoop2.

lrwxrwxrwx. yinzhengjie yinzhengjie Aug : zk -> /soft/zookeeper-3.4./

drwxr-xr-x. yinzhengjie yinzhengjie Mar : zookeeper-3.4.

[yinzhengjie@s101 download]$ ll /soft/spark-2.1.-bin-hadoop2./ #查看目录结构

total

drwxr-xr-x. yinzhengjie yinzhengjie Apr bin

drwxr-xr-x. yinzhengjie yinzhengjie Apr conf

drwxr-xr-x. yinzhengjie yinzhengjie Apr data

drwxr-xr-x. yinzhengjie yinzhengjie Apr examples

drwxr-xr-x. yinzhengjie yinzhengjie Apr jars

-rw-r--r--. yinzhengjie yinzhengjie Apr LICENSE

drwxr-xr-x. yinzhengjie yinzhengjie Apr licenses

-rw-r--r--. yinzhengjie yinzhengjie Apr NOTICE

drwxr-xr-x. yinzhengjie yinzhengjie Apr python

drwxr-xr-x. yinzhengjie yinzhengjie Apr R

-rw-r--r--. yinzhengjie yinzhengjie Apr README.md

-rw-r--r--. yinzhengjie yinzhengjie Apr RELEASE

drwxr-xr-x. yinzhengjie yinzhengjie Apr sbin

drwxr-xr-x. yinzhengjie yinzhengjie Apr yarn

[yinzhengjie@s101 download]$

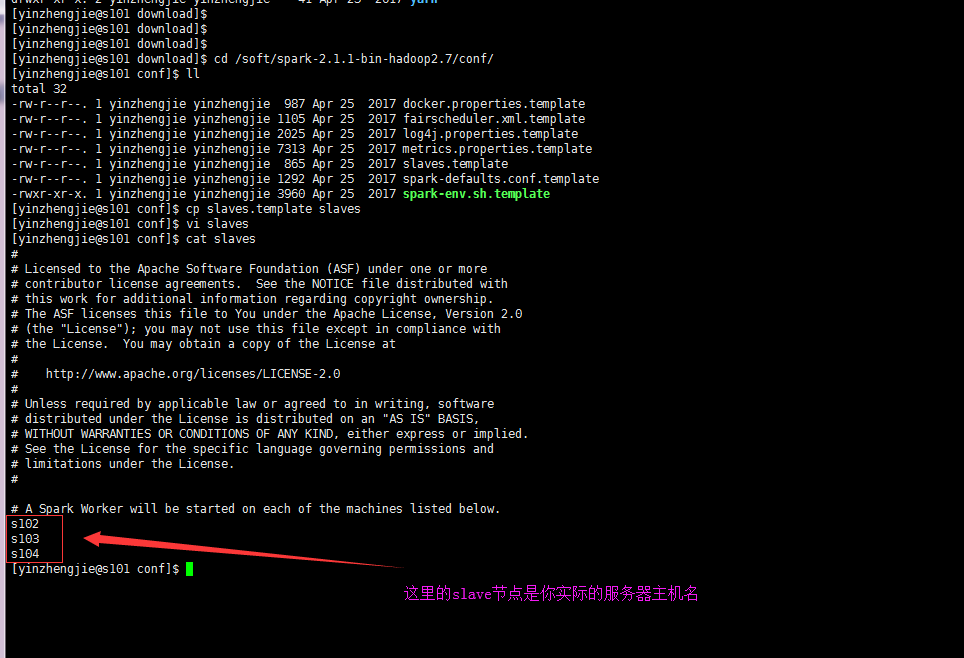

3>.编辑slaves配置文件,将worker的节点主机名输入,默认是localhost

[yinzhengjie@s101 download]$ cd /soft/spark-2.1.-bin-hadoop2./conf/

[yinzhengjie@s101 conf]$ ll

total

-rw-r--r--. yinzhengjie yinzhengjie Apr docker.properties.template

-rw-r--r--. yinzhengjie yinzhengjie Apr fairscheduler.xml.template

-rw-r--r--. yinzhengjie yinzhengjie Apr log4j.properties.template

-rw-r--r--. yinzhengjie yinzhengjie Apr metrics.properties.template

-rw-r--r--. yinzhengjie yinzhengjie Apr slaves.template

-rw-r--r--. yinzhengjie yinzhengjie Apr spark-defaults.conf.template

-rwxr-xr-x. yinzhengjie yinzhengjie Apr spark-env.sh.template

[yinzhengjie@s101 conf]$ cp slaves.template slaves

[yinzhengjie@s101 conf]$ vi slaves

[yinzhengjie@s101 conf]$ cat slaves

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# # A Spark Worker will be started on each of the machines listed below.

s102

s103

s104

[yinzhengjie@s101 conf]$

4>.编辑spark-env.sh文件,指定master节点和端口号

[yinzhengjie@s101 ~]$ cp /soft/spark/conf/spark-env.sh.template /soft/spark/conf/spark-env.sh

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ echo export JAVA_HOME=/soft/jdk >> /soft/spark/conf/spark-env.sh

[yinzhengjie@s101 ~]$ echo SPARK_MASTER_HOST=s101 >> /soft/spark/conf/spark-env.sh

[yinzhengjie@s101 ~]$ echo SPARK_MASTER_PORT= >> /soft/spark/conf/spark-env.sh

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ grep -v ^# /soft/spark/conf/spark-env.sh | grep -v ^$

export JAVA_HOME=/soft/jdk

SPARK_MASTER_HOST=s101

SPARK_MASTER_PORT=

[yinzhengjie@s101 ~]$

5>.将s101的spark配置信息分发到worker节点

[yinzhengjie@s101 ~]$ more `which xrsync.sh`

#!/bin/bash

#@author :yinzhengjie

#blog:http://www.cnblogs.com/yinzhengjie

#EMAIL:y1053419035@qq.com #判断用户是否传参

if [ $# -lt ];then

echo "请输入参数";

exit

fi #获取文件路径

file=$@ #获取子路径

filename=`basename $file` #获取父路径

dirpath=`dirname $file` #获取完整路径

cd $dirpath

fullpath=`pwd -P` #同步文件到DataNode

for (( i=;i<=;i++ ))

do

#使终端变绿色

tput setaf

echo =========== s$i %file ===========

#使终端变回原来的颜色,即白灰色

tput setaf

#远程执行命令

rsync -lr $filename `whoami`@s$i:$fullpath

#判断命令是否执行成功

if [ $? == ];then

echo "命令执行成功"

fi

done

[yinzhengjie@s101 ~]$

需要配置无秘钥登录,之后执行启动脚本进行同步([yinzhengjie@s101 ~]$ more `which xrsync.sh`)

关于配置无秘钥登录请参考我之间的笔记:https://www.cnblogs.com/yinzhengjie/p/9065191.html。配置好无秘钥登录后,直接执行上面的脚本进行同步数据。

[yinzhengjie@s101 ~]$ xrsync.sh /soft/spark-2.1.-bin-hadoop2./

=========== s102 %file ===========

命令执行成功

=========== s103 %file ===========

命令执行成功

=========== s104 %file ===========

命令执行成功

[yinzhengjie@s101 ~]$

6>.修改配置文件,将spark运行脚本添加至系统环境变量

[yinzhengjie@s101 ~]$ ln -s /soft/spark-2.1.-bin-hadoop2./ /soft/spark #这里做一个软连接,方便简写目录名称

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ sudo vi /etc/profile #修改系统环境变量的配置文件

[sudo] password for yinzhengjie:

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ tail - /etc/profile

#ADD SPARK_PATH by yinzhengjie

export SPARK_HOME=/soft/spark

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ source /etc/profile #重写加载系统配置文件,使其变量在当前shell生效。

[yinzhengjie@s101 ~]$

7>.启动spark集群

[yinzhengjie@s101 ~]$ more `which xcall.sh`

#!/bin/bash

#@author :yinzhengjie

#blog:http://www.cnblogs.com/yinzhengjie

#EMAIL:y1053419035@qq.com #判断用户是否传参

if [ $# -lt ];then

echo "请输入参数"

exit

fi #获取用户输入的命令

cmd=$@ for (( i=;i<=;i++ ))

do

#使终端变绿色

tput setaf

echo ============= s$i $cmd ============

#使终端变回原来的颜色,即白灰色

tput setaf

#远程执行命令

ssh s$i $cmd

#判断命令是否执行成功

if [ $? == ];then

echo "命令执行成功"

fi

done

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ more `which xcall.sh`

[yinzhengjie@s101 ~]$ /soft/spark/sbin/start-all.sh #启动spark集群

starting org.apache.spark.deploy.master.Master, logging to /soft/spark/logs/spark-yinzhengjie-org.apache.spark.deploy.master.Master--s101.out

s102: starting org.apache.spark.deploy.worker.Worker, logging to /soft/spark/logs/spark-yinzhengjie-org.apache.spark.deploy.worker.Worker--s102.out

s103: starting org.apache.spark.deploy.worker.Worker, logging to /soft/spark/logs/spark-yinzhengjie-org.apache.spark.deploy.worker.Worker--s103.out

s104: starting org.apache.spark.deploy.worker.Worker, logging to /soft/spark/logs/spark-yinzhengjie-org.apache.spark.deploy.worker.Worker--s104.out

[yinzhengjie@s101 ~]$

[yinzhengjie@s101 ~]$ xcall.sh jps #查看进程master和slave节点是否起来了

============= s101 jps ============

Jps

Master

命令执行成功

============= s102 jps ============

Jps

Worker

命令执行成功

============= s103 jps ============

Jps

Worker

命令执行成功

============= s104 jps ============

Jps

Worker

命令执行成功

[yinzhengjie@s101 ~]$

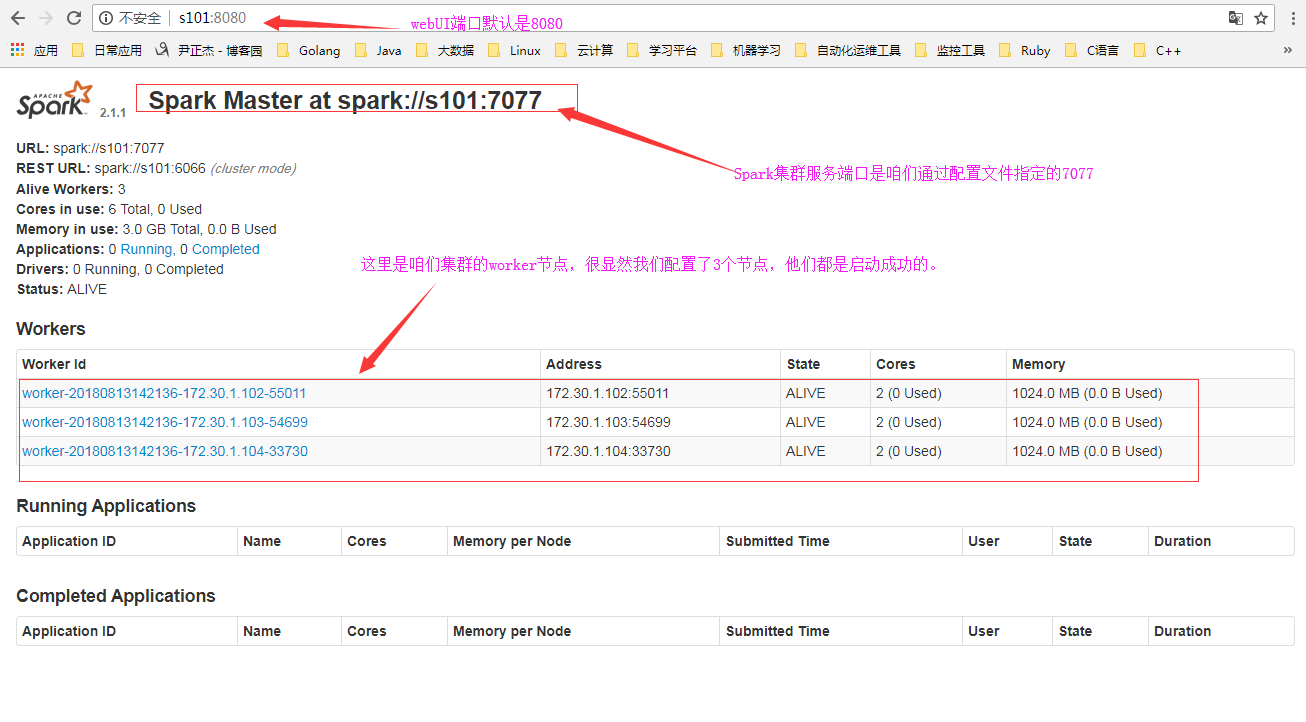

8>.检查Spark的webUI界面

9>.启动spark-shell

三.在Spark集群中执行Wordcount

1>.链接到master集群([yinzhengjie@s101 ~]$ spark-shell --master spark://s101:7077)

2>.登录webUI,查看正在运行的APP

3>.查看应用细节

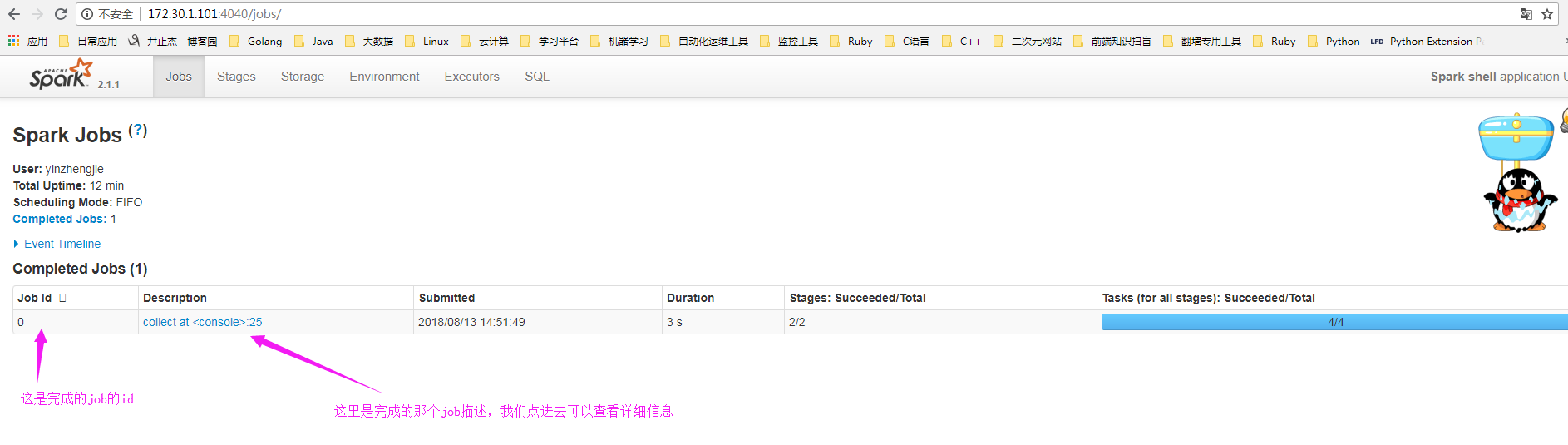

4>.查看job的信息

5>.查看stage

6>.查看具体的详细信息

7>.退出spark-shell

8>.查看spark的完成应用,发现日志没了?

那么问题来了。如果看日志呢?详情请参考:https://www.cnblogs.com/yinzhengjie/p/9410989.html。

Spark进阶之路-Standalone模式搭建的更多相关文章

- Spark进阶之路-Spark HA配置

Spark进阶之路-Spark HA配置 作者:尹正杰 版权声明:原创作品,谢绝转载!否则将追究法律责任. 集群部署完了,但是有一个很大的问题,那就是Master节点存在单点故障,要解决此问题,就要借 ...

- Spark进阶之路-日志服务器的配置

Spark进阶之路-日志服务器的配置 作者:尹正杰 版权声明:原创作品,谢绝转载!否则将追究法律责任. 如果你还在纠结如果配置Spark独立模式(Standalone)集群,可以参考我之前分享的笔记: ...

- Redis进阶:Redis的哨兵模式搭建

Redis进阶:Redis的哨兵模式搭建 哨兵机制介绍 单机版的Redis存在性能瓶颈,Redis通过提高主从复制实现读写分离,提高了了Redis的可用性,另一方便也能实现数据在多个Redis直接的备 ...

- 【SSH进阶之路】Hibernate搭建开发环境+简单实例(二)

Hibernate是很典型的持久层框架,持久化的思想是很值得我们学习和研究的.这篇博文,我们主要以实例的形式学习Hibernate,不深究Hibernate的思想和原理,否则,一味追求,苦学思想和原理 ...

- spark 源码编译 standalone 模式部署

本文介绍如何编译 spark 的源码,并且用 standalone 的方式在单机上部署 spark. 步骤如下: 1. 下载 spark 并且解压 本文选择 spark 的最新版本 2.2.0 (20 ...

- 【Spark】Spark-shell案例——standAlone模式下读取HDFS上存放的文件

目录 可以先用local模式读取一下 步骤 一.先将做测试的数据上传到HDFS 二.开发scala代码 standAlone模式查看HDFS上的文件 步骤 一.退出local模式,重新进入Spark- ...

- Spark环境搭建(七)-----------spark的Local和standalone模式启动

spark的启动方式有两种,一种单机模式(Local),另一种是多机器的集群模式(Standalone) Standalone 搭建: 准备:hadoop001,hadoop002两台安装spark的 ...

- Spark进阶之路-Spark提交Jar包执行

Spark进阶之路-Spark提交Jar包执行 作者:尹正杰 版权声明:原创作品,谢绝转载!否则将追究法律责任. 在实际开发中,使用spark-submit提交jar包是很常见的方式,因为用spark ...

- Spark3.0.1各种集群模式搭建

对于spark前来围观的小伙伴应该都有所了解,也是现在比较流行的计算框架,基本上是有点规模的公司标配,所以如果有时间也可以补一下短板. 简单来说Spark作为准实时大数据计算引擎,Spark的运行需要 ...

随机推荐

- mysql外键关联

主键:是唯一标识一条记录,不能有重复的,不允许为空,用来保证数据完整性 外键:是另一表的主键, 外键可以有重复的, 可以是空值,用来和其他表建立联系用的.所以说,如果谈到了外键,一定是至少涉及到两张表 ...

- QT下opencv的编译和使用

需要的文件 qt-opensource-windows-x86-mingw491_opengl-5.4.0.exe cmake-3.12.0-rc1-win64-x64.msi opencv-2.4. ...

- iOS推送证书生成pem文件(详细步骤)

1.pem文件概述 pem文件是服务器向苹果服务器做推送时候需要的文件,主要是给php向苹果服务器验证时使用,下面介绍一下pem文件的生成. 2.生成pem文件步骤 1.打开钥匙串,选择需要生成的推送 ...

- mybatis分页 -----PageHelper插件

对查询结果进行分页 一,使用limit进行分页 1.mybatis 的sql语句: <if test="page !=null and rows !=null"> li ...

- Using svn in CLI with Batch

del %~n0.txt@echo offsetlocal EnableDelayedExpansionfor /f "delims=" %%i in ('DIR /A:D /B' ...

- Typecho博客迁移

在新的机器上先搭建好一个新的Typecho博客,数据库名称和原博客相同(可以省不少事). 备份原来博客的usr目录. 备份mysql数据库,命令: mysqldump -uroot -p --all- ...

- 暂时刷完leetcode的一点小体会

两年前,在实习生笔试的时候,笔试百度,对试卷上很多问题感到不知所云,毫无悬念的挂了 读研两年,今年代笔百度,发现算法题都见过,或者有思路,但一时之间居然都想不到很好的解法,而且很少手写思路,手写代码, ...

- [转]curl的详细使用

转自:http://www.cnblogs.com/gbyukg/p/3326825.html 下载单个文件,默认将输出打印到标准输出中(STDOUT)中 curl http://www.centos ...

- Oracle数据库导入导出 imp/exp备份还原

Oracle数据导入导出imp/exp Oracle数据导入导出imp/exp 在cmd的dos命令提示符下执行,而不是在sqlplus里面,但是格式一定要类似于: imp/exp 用户名/密 ...

- BZOJ3152[Ctsc2013]组合子逻辑——堆+贪心

题目链接: BZOJ3152 题目大意: 一开始有一个括号包含[1,n],你需要加一些括号,使得每个括号(包括一开始的)所包含的元素个数要<=这个括号左端点那个数的大小,当一个括号包含另一个括号 ...