4、Work-Queue

Work Queues

using the Java Client

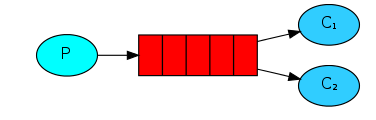

In the first tutorial we wrote programs to send and receive messages from a named queue. In this one we'll create a Work Queue that will be used to distribute time-consuming tasks among multiple workers.

The main idea behind Work Queues (aka Task Queues) is to avoid doing a resource-intensive task immediately and having to wait for it to complete. Instead we schedule the task to be done later. We encapsulate a task as a message and send it to a queue. A worker process running in the background will pop the tasks and eventually execute the job. When you run many workers, the tasks will be shared between them.

This concept is especially useful in web applications where it's impossible to handle a complex task during a short HTTP request window.

Preparation

In the previous part of this tutorial we sent a message containing "Hello World!". Now we'll be sending strings that stand for complex tasks. We don't have a real-world task, like images to be resized or PDF files to be rendered, so let's fake it by pretending we're busy, using the Thread.sleep() function. We'll take the number of dots in the string as its complexity; every dot will account for one second of "work". For example, a fake task described by Hello... will take three seconds.
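
The dot-counting rule described above can be sketched on its own, without any RabbitMQ code. The class and method names here (TaskComplexity, countDots, workMillis) are made up for illustration and do not appear in the tutorial's programs:

```java
/** Illustrative helper (hypothetical names): maps a task string to its fake duration. */
final class TaskComplexity {

    /** Counts the '.' characters in the task description. */
    static int countDots(String task) {
        int dots = 0;
        for (char ch : task.toCharArray()) {
            if (ch == '.') {
                dots++;
            }
        }
        return dots;
    }

    /** Every dot stands for one second (1000 ms) of simulated work. */
    static long workMillis(String task) {
        return countDots(task) * 1000L;
    }

    public static void main(String[] args) {
        // "Hello..." contains three dots, so this prints 3000.
        System.out.println(workMillis("Hello..."));
    }
}
```

A worker would then sleep for workMillis(message) milliseconds to pretend it is busy.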

We will slightly modify the Send.java code from our previous example to allow arbitrary messages to be sent from the command line. This program will schedule tasks to our work queue, so let's name it NewTask.java:

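    import com.rabbitmq.client.Channel;
    import com.rabbitmq.client.Connection;
    import com.rabbitmq.client.ConnectionFactory;
    import com.rabbitmq.client.MessageProperties;

    public class NewTask {

        private static final String TASK_QUEUE_NAME = "task_queue";

        public static void main(String[] argv) throws Exception {
            ConnectionFactory factory = new ConnectionFactory();
            factory.setHost("localhost");
            Connection connection = factory.newConnection();
            Channel channel = connection.createChannel();

            // Second argument (durable = true): the queue survives a broker restart.
            channel.queueDeclare(TASK_QUEUE_NAME, true, false, false, null);

            String message = "message";
            //String message = getMessage(argv);
            // Publish five tasks, each one dot longer than the previous one.
            for (int i = 0; i < 5; i++) {
                message += ".";
                channel.basicPublish("", TASK_QUEUE_NAME,
                        MessageProperties.PERSISTENT_TEXT_PLAIN,
                        message.getBytes("UTF-8"));
                System.out.println(" [x] Sent '" + message + "'");
            }

            channel.close();
            connection.close();
        }
    }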
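
NewTask leaves its getMessage(argv) call commented out and the helper itself is not shown. One plausible way to write it, as a sketch only: join the command-line arguments with spaces. The class name MessageArgs and the "Hello World!" fallback are assumptions made for this example.

```java
/** Hypothetical helper: builds the task string from command-line arguments. */
final class MessageArgs {

    /** Joins the arguments with single spaces; falls back to an assumed default when none are given. */
    static String getMessage(String[] argv) {
        if (argv == null || argv.length == 0) {
            return "Hello World!"; // assumed default, not taken from the post
        }
        return String.join(" ", argv);
    }

    public static void main(String[] argv) {
        System.out.println(getMessage(argv));
    }
}
```

With a helper like this, running the program with the arguments First message... would produce the single task string "First message...".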

Our old Recv.java program also requires some changes: it needs to fake a second of work for every dot in the message body. It will handle delivered messages and perform the task, so let's call it Worker.java:

    import com.rabbitmq.client.AMQP;
    import com.rabbitmq.client.Channel;
    import com.rabbitmq.client.Connection;
    import com.rabbitmq.client.ConnectionFactory;
    import com.rabbitmq.client.Consumer;
    import com.rabbitmq.client.DefaultConsumer;
    import com.rabbitmq.client.Envelope;
    import java.io.IOException;

    public class Worker {

        private static final String TASK_QUEUE_NAME = "task_queue";

        public static void main(String[] argv) throws Exception {
            ConnectionFactory factory = new ConnectionFactory();
            factory.setHost("localhost");
            final Connection connection = factory.newConnection();
            final Channel channel = connection.createChannel();

            channel.queueDeclare(TASK_QUEUE_NAME, true, false, false, null);
            System.out.println(" [*] Waiting for messages. To exit press CTRL+C");

            channel.basicQos(1);

            final Consumer consumer = new DefaultConsumer(channel) {
                @Override
                public void handleDelivery(String consumerTag, Envelope envelope,
                        AMQP.BasicProperties properties, byte[] body) throws IOException {
                    String message = new String(body, "UTF-8");
                    System.out.println(" [x] Received '" + message + "'");
                    try {
                        doWork(message);
                    } finally {
                        System.out.println(" [x] Done");
                        //channel.basicAck(envelope.getDeliveryTag(), false);
                    }
                }
            };
            boolean autoAck = true; // acknowledgment is covered below
            channel.basicConsume(TASK_QUEUE_NAME, autoAck, consumer);
        }

        // Sleeps one second for every dot in the task string.
        private static void doWork(String task) {
            for (char ch : task.toCharArray()) {
                if (ch == '.') {
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException _ignored) {
                        Thread.currentThread().interrupt();
                    }
                }
            }
        }
    }

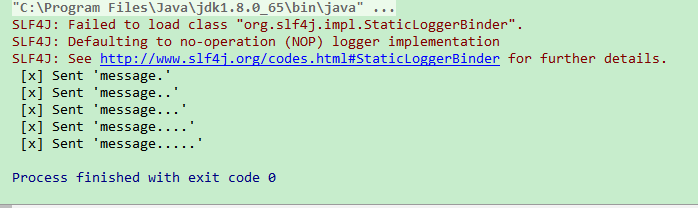

result:

Round-robin dispatching

- One of the advantages of using a Task Queue is the ability to easily parallelise work. If we are building up a backlog of work, we can just add more workers and scale easily that way.

- First, run two worker instances at the same time.

- Second, publish new tasks.

- The result is shown in the figure above.

- By default, RabbitMQ will send each message to the next consumer, in sequence. On average every consumer will get the same number of messages. This way of distributing messages is called round-robin.
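
To make the in-sequence distribution concrete, here is a small stand-alone simulation. It does not talk to RabbitMQ; it only models the rule "message i goes to consumer i mod n" under the assumption that all consumers are connected before publishing starts. The class name RoundRobinSketch is invented for this example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Toy model of round-robin dispatch (not RabbitMQ code). */
final class RoundRobinSketch {

    /** Assigns message i to consumer (i mod consumerCount) and returns each consumer's share. */
    static List<List<String>> dispatch(List<String> messages, int consumerCount) {
        List<List<String>> perConsumer = new ArrayList<>();
        for (int c = 0; c < consumerCount; c++) {
            perConsumer.add(new ArrayList<>());
        }
        for (int i = 0; i < messages.size(); i++) {
            perConsumer.get(i % consumerCount).add(messages.get(i));
        }
        return perConsumer;
    }

    public static void main(String[] args) {
        // The five tasks that NewTask publishes.
        List<String> tasks = Arrays.asList(
                "message.", "message..", "message...", "message....", "message.....");
        List<List<String>> shares = dispatch(tasks, 2);
        System.out.println("worker 1: " + shares.get(0));
        System.out.println("worker 2: " + shares.get(1));
    }
}
```

With two workers and the five tasks above, the first worker receives the 1st, 3rd and 5th messages and the second worker receives the 2nd and 4th.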

Message acknowledgment

- Doing a task can take a few seconds. You may wonder what happens if one of the consumers starts a long task and dies with it only partly done. With our current code, once RabbitMQ delivers a message to the consumer it immediately marks it for deletion. In this case, if you kill a worker we will lose the message it was just processing. We'll also lose all the messages that were dispatched to this particular worker but were not yet handled.

- But we don't want to lose any tasks. If a worker dies, we'd like the task to be delivered to another worker.

- In order to make sure a message is never lost, RabbitMQ supports message acknowledgments. An ack is sent back by the consumer to tell RabbitMQ that a particular message has been received and processed, and that RabbitMQ is free to delete it.

- If a consumer dies (its channel is closed, connection is closed, or TCP connection is lost) without sending an ack, RabbitMQ will understand that a message wasn't processed fully and will re-queue it. If there are other consumers online at the same time, it will then quickly redeliver it to another consumer. That way you can be sure that no message is lost, even if workers occasionally die.

- There aren't any message timeouts; RabbitMQ will redeliver the message when the consumer dies. It's fine even if processing a message takes a very, very long time.

- Manual message acknowledgments are turned on by default. In previous examples we explicitly turned them off via the autoAck=true flag. It's time to set this flag to false and send a proper acknowledgment from the worker, once we're done with a task.

    public static void main(String[] argv) throws Exception {
        ConnectionFactory factory = new ConnectionFactory();
        factory.setHost("localhost");
        final Connection connection = factory.newConnection();
        final Channel channel = connection.createChannel();

        channel.queueDeclare(TASK_QUEUE_NAME, true, false, false, null);
        System.out.println(" [*] Waiting for messages. To exit press CTRL+C");

        channel.basicQos(1);

        final Consumer consumer = new DefaultConsumer(channel) {
            @Override
            public void handleDelivery(String consumerTag, Envelope envelope,
                    AMQP.BasicProperties properties, byte[] body) throws IOException {
                String message = new String(body, "UTF-8");
                System.out.println(" [x] Received '" + message + "'");
                try {
                    doWork(message);
                } finally {
                    System.out.println(" [x] Done");
                    channel.basicAck(envelope.getDeliveryTag(), false);
                }
            }
        };
        // autoAck is now false: the worker acknowledges each message itself.
        channel.basicConsume(TASK_QUEUE_NAME, false, consumer);
    }

- Using this code we can be sure that even if you kill a worker while it is processing a message, nothing will be lost. Soon after the worker dies, all unacknowledged messages will be redelivered.

- An acknowledgment must be sent on the same channel on which the delivery was received. Attempting to acknowledge on a different channel will result in a channel-level protocol exception.

- Forgotten acknowledgments

- It's a common mistake to miss the basicAck. It's an easy error, but the consequences are serious. Messages will be redelivered when your client quits, but RabbitMQ will eat more and more memory as it won't be able to release any unacked messages.
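
The bookkeeping behind acknowledgments can be pictured with a toy in-memory model. This is a sketch of the semantics described above for a single consumer, not RabbitMQ's implementation; the class AckModel and all of its methods are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy single-consumer model of ack bookkeeping (not RabbitMQ code). */
final class AckModel {
    private final Deque<String> ready = new ArrayDeque<>();
    private final Map<Long, String> unacked = new LinkedHashMap<>();
    private long nextTag = 1;

    void publish(String message) {
        ready.addLast(message);
    }

    /** Delivers the next ready message and keeps it as unacked; returns its tag, or -1 if the queue is empty. */
    long deliver() {
        String message = ready.pollFirst();
        if (message == null) {
            return -1;
        }
        long tag = nextTag++;
        unacked.put(tag, message);
        return tag;
    }

    /** The consumer finished the task, so the message can be forgotten. */
    void ack(long deliveryTag) {
        unacked.remove(deliveryTag);
    }

    /** The consumer died: every message it had not acked returns to the front of the queue, in order. */
    void consumerDied() {
        List<String> pending = new ArrayList<>(unacked.values());
        for (int i = pending.size() - 1; i >= 0; i--) {
            ready.addFirst(pending.get(i));
        }
        unacked.clear();
    }

    int readyCount() { return ready.size(); }

    int unackedCount() { return unacked.size(); }

    public static void main(String[] args) {
        AckModel queue = new AckModel();
        queue.publish("a");
        queue.publish("b");
        queue.publish("c");
        long first = queue.deliver();
        queue.deliver();          // "b" is delivered but never acked
        queue.ack(first);         // "a" is done and forgotten
        queue.consumerDied();     // "b" goes back to the queue
        System.out.println(queue.readyCount() + " ready, " + queue.unackedCount() + " unacked");
    }
}
```

In this model a consumer that never calls ack keeps every delivered message in the unacked map, which is the memory growth the "forgotten acknowledgments" note warns about.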

Message durability

- We have learned how to make sure that even if the consumer dies, the task isn't lost. But our tasks will still be lost if the RabbitMQ server stops.

- When RabbitMQ quits or crashes it will forget the queues and messages unless you tell it not to. Two things are required to make sure that messages aren't lost: we need to mark both the queue and the messages as durable.

- First, we need to make sure that RabbitMQ will never lose our queue. In order to do so, we need to declare it as durable:

    boolean durable = true;
    channel.queueDeclare("hello", durable, false, false, null);

- Although this command is correct by itself, it won't work in our present setup. That's because we've already defined a queue called 'hello' which is not durable. RabbitMQ doesn't allow you to redefine an existing queue with different parameters and will return an error to any program that tries to do that. But there is a quick workaround: let's declare a queue with a different name, for example task_queue.

- This queueDeclare change needs to be applied to both the producer and consumer code.

- At this point we're sure that the task_queue queue won't be lost even if RabbitMQ restarts. Now we need to mark our messages as persistent by setting MessageProperties to the value PERSISTENT_TEXT_PLAIN.

    import com.rabbitmq.client.MessageProperties;

    channel.basicPublish("", "task_queue",
            MessageProperties.PERSISTENT_TEXT_PLAIN,
            message.getBytes());

- Note on message persistence

- Marking messages as persistent doesn't fully guarantee that a message won't be lost. Although it tells RabbitMQ to save the message to disk, there is still a short time window when RabbitMQ has accepted a message and hasn't saved it yet. Also, RabbitMQ doesn't do fsync(2) for every message; it may just be saved to cache and not really written to the disk. The persistence guarantees aren't strong, but they are more than enough for our simple task queue.

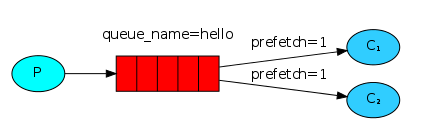

Fair dispatch

- You might have noticed that the dispatching still doesn't work exactly as we want. For example, in a situation with two workers, when all odd messages are heavy and even messages are light, one worker will be constantly busy and the other one will do hardly any work. RabbitMQ doesn't know anything about that and will still dispatch messages evenly.

- This happens because RabbitMQ just dispatches a message when the message enters the queue. It doesn't look at the number of unacknowledged messages for a consumer. It just blindly dispatches every n-th message to the n-th consumer.

- In order to defeat that we can use the basicQos method with the prefetchCount=1 setting. This tells RabbitMQ not to give more than one message to a worker at a time. In other words, don't dispatch a new message to a worker until it has processed and acknowledged the previous one. Instead, RabbitMQ will dispatch it to the next worker that is not still busy.

    int prefetchCount = 1;
    channel.basicQos(prefetchCount);
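
The difference between blind round-robin and prefetchCount=1 can be seen in a small stand-alone simulation. It is a simplified model, not RabbitMQ code: all tasks are assumed to be queued before the workers start, and with prefetch 1 each task goes to whichever worker becomes free first. The class name DispatchComparison and the task durations are made up for this example.

```java
import java.util.Arrays;

/** Toy comparison of round-robin and prefetch=1 dispatch (not RabbitMQ code). */
final class DispatchComparison {

    /** Round-robin: task i always goes to worker (i mod workers), however busy it is. */
    static long[] roundRobin(long[] taskSeconds, int workers) {
        long[] busy = new long[workers];
        for (int i = 0; i < taskSeconds.length; i++) {
            busy[i % workers] += taskSeconds[i];
        }
        return busy;
    }

    /**
     * Prefetch 1: each task goes to the worker that frees up first (lowest index on ties).
     * Workers never idle between tasks here, so "busy until" equals total busy time.
     */
    static long[] prefetchOne(long[] taskSeconds, int workers) {
        long[] busyUntil = new long[workers];
        for (long seconds : taskSeconds) {
            int next = 0;
            for (int w = 1; w < workers; w++) {
                if (busyUntil[w] < busyUntil[next]) {
                    next = w;
                }
            }
            busyUntil[next] += seconds;
        }
        return busyUntil;
    }

    public static void main(String[] args) {
        // Odd-numbered messages are heavy (5 s), even-numbered ones are light (1 s).
        long[] tasks = {5, 1, 5, 1, 5, 1};
        System.out.println("round-robin: " + Arrays.toString(roundRobin(tasks, 2)));
        System.out.println("prefetch 1:  " + Arrays.toString(prefetchOne(tasks, 2)));
    }
}
```

For these six tasks the round-robin split is 15 seconds versus 3 seconds of work, while the prefetch-1 split is 11 versus 7, so the backlog finishes sooner.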

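Summary

- Task Queue

- Round-robin dispatch

- Message acknowledgment

- Message durability

- Queue durability: set when declaring the queue, channel.queueDeclare(...,true,...)

- Message persistence: set through MessageProperties when publishing, channel.basicPublish(...,MessageProperties.PERSISTENT_TEXT_PLAIN,...)

- Fair dispatch (plain even distribution can leave one worker busy while another is idle)

- channel.basicQos(1): the next message is dispatched only after the previous one has been acked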
