Keepalived+HAProxy: ACL-based load balancing for multiple domains on a single IP

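- Compile and install the latest HAProxy LTS release, and compile and install Keepalived

- Enable HAProxy multithreading, with the thread count equal to the number of CPU cores and the threads pinned to CPU cores

- Because there are many services, keep one configuration file per service under /etc/haproxy/conf.d to avoid accidental edits

- Use ACLs to load-balance multiple domains on a single IP; the two service domains are www.yanlinux.org and www.yanlinux.edu

- Set up MySQL master-slave replication

- Serve www.yanlinux.edu with HAProxy+Nginx+Tomcat+MySQL, running the Java application JPress

- Serve www.yanlinux.org with HAProxy+Nginx+PHP+MySQL+Redis, running a PHP application (phpMyAdmin), with sessions stored centrally in Redis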
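As a rough illustration of the ACL requirement above, the sketch below prints an HAProxy frontend that routes on the Host header, plus one backend per service. The helper names `gen_frontend` and `gen_backend`, the section names `web_http`, `web1`, `web2`, and the `bind *:80` line are assumptions made for this example; the server addresses are the nginx hosts used in sections 6 and 7.

```shell
#!/bin/bash
# Hypothetical helpers (not part of the original setup): print HAProxy
# configuration fragments that route requests by Host header.

# Usage: gen_frontend domain=backend [domain=backend ...]
gen_frontend() {
    local pair domain backend
    echo "frontend web_http"
    echo "    bind *:80"
    for pair in "$@"; do
        domain=${pair%%=*}      # text before the first '='
        backend=${pair#*=}      # text after the first '='
        echo "    acl host_${backend} hdr(host) -i ${domain}"
        echo "    use_backend ${backend} if host_${backend}"
    done
}

# Usage: gen_backend name server_addr
gen_backend() {
    printf 'backend %s\n    server %s_1 %s check\n' "$1" "$1" "$2"
}

gen_frontend www.yanlinux.org=web1 www.yanlinux.edu=web2
gen_backend web1 10.0.0.28:80
gen_backend web2 10.0.0.38:80
```

Each fragment could be redirected into its own file under /etc/haproxy/conf.d, which matches the one-file-per-service requirement.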

1 DNS server configuration

On host 10.0.0.7, set up DNS resolution for www.yanlinux.org (VIP: 10.0.0.100) and www.yanlinux.edu (VIP: 10.0.0.200).

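Key points:

- In the main configuration file /etc/named.conf, change 127.0.0.1 in listen-on port 53 { 127.0.0.1; } to localhost. Also do one of the following with allow-query { localhost; };: comment it out by prefixing it with //, change its localhost to any, or append the client subnets to it.

- Each zone file should have permissions 640 and group named.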

# Use a script to set up DNS resolution for www.yanlinux.org automatically

[root@dns ~]$ cat install_dns.sh

#!/bin/bash

DOMAIN=yanlinux.org

HOST=www

HOST_IP=10.0.0.100

CPUS=`lscpu |awk '/^CPU\(s\)/{print $2}'`

. /etc/os-release

color () {

RES_COL=60

MOVE_TO_COL="echo -en \\033[${RES_COL}G"

SETCOLOR_SUCCESS="echo -en \\033[1;32m"

SETCOLOR_FAILURE="echo -en \\033[1;31m"

SETCOLOR_WARNING="echo -en \\033[1;33m"

SETCOLOR_NORMAL="echo -en \E[0m"

echo -n "$1" && $MOVE_TO_COL

echo -n "["

if [ $2 = "success" -o $2 = "0" ] ;then

${SETCOLOR_SUCCESS}

echo -n $" OK "

elif [ $2 = "failure" -o $2 = "1" ] ;then

${SETCOLOR_FAILURE}

echo -n $"FAILED"

else

${SETCOLOR_WARNING}

echo -n $"WARNING"

fi

${SETCOLOR_NORMAL}

echo -n "]"

echo

}

install_dns () {

if [ $ID = 'centos' -o $ID = 'rocky' ];then

yum install -y bind bind-utils

elif [ $ID = 'ubuntu' ];then

color "不支持Ubuntu操作系统,退出!" 1

exit

#apt update

#apt install -y bind9 bind9-utils

else

color "不支持此操作系统,退出!" 1

exit

fi

}

config_dns () {

sed -i -e '/listen-on/s/127.0.0.1/localhost/' -e '/allow-query/s/localhost/any/' /etc/named.conf

cat >> /etc/named.rfc1912.zones <<EOF

zone "$DOMAIN" IN {

type master;

file "$DOMAIN.zone";

};

EOF

cat > /var/named/$DOMAIN.zone <<EOF

\$TTL 1D

@ IN SOA master admin.$DOMAIN (

1 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS master

master A `hostname -I`

$HOST A $HOST_IP

EOF

# Set the permissions and group ownership

chmod 640 /var/named/$DOMAIN.zone

chgrp named /var/named/$DOMAIN.zone

}

start_service () {

systemctl enable --now named

systemctl is-active named.service

if [ $? -eq 0 ] ;then

color "DNS 服务安装成功!" 0

else

color "DNS 服务安装失败!" 1

exit 1

fi

}

install_dns

config_dns

start_service

[root@dns ~]$ sh install_dns.sh

# Contents of the yanlinux.org.zone zone data file

[root@dns ~]$ cat /var/named/yanlinux.org.zone

$TTL 1D

@ IN SOA master admin.yanlinux.org (

1 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS master

master A 10.0.0.7

www A 10.0.0.100

# Next, copy yanlinux.org.zone to create yanlinux.edu.zone. If you create yanlinux.edu.zone from scratch instead, set its permissions to 640 and its group to named afterwards

[root@dns ~]$ cd /var/named

[root@dns named]$ cp -a yanlinux.org.zone yanlinux.edu.zone

# Edit the records for yanlinux.edu

[root@dns named]$ vi yanlinux.edu.zone

$TTL 1D

@ IN SOA master admin.yanlinux.edu (

1 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS master

master A 10.0.0.7

www A 10.0.0.200
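Copying and hand-editing the zone file works for two domains; as a sketch of an alternative, the zone data can be generated from parameters. The function name `gen_zone` and its argument order are assumptions for this example. Unlike the listing above, the SOA mailbox is written with a trailing dot so that the zone name is not appended to it.

```shell
#!/bin/bash
# Hypothetical helper: print a zone data file for one domain.
# Usage: gen_zone DOMAIN HOST HOST_IP NS_IP
gen_zone() {
    local domain=$1 host=$2 host_ip=$3 ns_ip=$4
    cat <<EOF
\$TTL 1D
@ IN SOA master admin.${domain}. (
    1  ; serial
    1D ; refresh
    1H ; retry
    1W ; expire
    3H ) ; minimum
 NS master
master A ${ns_ip}
${host} A ${host_ip}
EOF
}

# Print the data for the second domain used in this article.
gen_zone yanlinux.edu www 10.0.0.200 10.0.0.7
```

The output could be redirected to /var/named/yanlinux.edu.zone, followed by the same chmod 640 and chgrp named steps the install script performs.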

# Both zone files now exist; next declare the zones in /etc/named.rfc1912.zones

[root@dns ~]$ vi /etc/named.rfc1912.zones

......

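# The file should end with the following (the install script already appended the yanlinux.org block, so only the yanlinux.edu block is new)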

zone "yanlinux.org" IN {

type master;

file "yanlinux.org.zone";

};

zone "yanlinux.edu" IN {

type master;

file "yanlinux.edu.zone";

};

# Reload the configuration

[root@dns ~]$ rndc reload

server reload successful

[root@dns ~]$ dig www.yanlinux.org

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> www.yanlinux.org

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 56759

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 1, ADDITIONAL: 2

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; MBZ: 0x0005, udp: 4096

;; QUESTION SECTION:

;www.yanlinux.org. IN A

;; ANSWER SECTION:

www.yanlinux.org. 5 IN A 10.0.0.100

;; AUTHORITY SECTION:

yanlinux.org. 5 IN NS master.yanlinux.org.

;; ADDITIONAL SECTION:

master.yanlinux.org. 5 IN A 10.0.0.7

;; Query time: 0 msec

;; SERVER: 10.0.0.2#53(10.0.0.2)

;; WHEN: Wed Mar 08 21:48:00 CST 2023

;; MSG SIZE rcvd: 98

[root@dns ~]$ dig www.yanlinux.edu

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> www.yanlinux.edu

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 19598

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 1, ADDITIONAL: 2

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; MBZ: 0x0005, udp: 4096

;; QUESTION SECTION:

;www.yanlinux.edu. IN A

;; ANSWER SECTION:

www.yanlinux.edu. 5 IN A 10.0.0.200

;; AUTHORITY SECTION:

yanlinux.edu. 5 IN NS master.yanlinux.edu.

;; ADDITIONAL SECTION:

master.yanlinux.edu. 5 IN A 10.0.0.7

;; Query time: 0 msec

;; SERVER: 10.0.0.2#53(10.0.0.2)

;; WHEN: Wed Mar 08 21:48:06 CST 2023

;; MSG SIZE rcvd: 98

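2 Client configuration

On host 10.0.0.17, set the DNS server's IP as the resolver. Before doing this, make sure the first key point from the DNS server setup above is in place; otherwise the client cannot resolve the domains.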

[root@internet ~]$ cat /etc/sysconfig/network-scripts/ifcfg-eth0

BOOTPROTO="static"

NAME="eth0"

DEVICE="eth0"

IPADDR=10.0.0.17

PREFIX=24

GATEWAY=10.0.0.2

DNS1=10.0.0.7 # set to the DNS server's IP

#DNS2=114.114.114.114

ONBOOT="yes"

# Restart the network service

[root@internet ~]$ systemctl restart network

[root@internet network-scripts]$ cat /etc/resolv.conf

# Generated by NetworkManager

nameserver 10.0.0.7

# Test resolution

[root@internet ~]$ host www.baidu.com

www.baidu.com is an alias for www.a.shifen.com.

www.a.shifen.com has address 36.152.44.95

www.a.shifen.com has address 36.152.44.96

[root@internet ~]$ dig www.yanlinux.org

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> www.yanlinux.org

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 19011

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 1, ADDITIONAL: 2

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;www.yanlinux.org. IN A

;; ANSWER SECTION:

www.yanlinux.org. 86400 IN A 10.0.0.100

;; AUTHORITY SECTION:

yanlinux.org. 86400 IN NS master.yanlinux.org.

;; ADDITIONAL SECTION:

master.yanlinux.org. 86400 IN A 10.0.0.7

;; Query time: 0 msec

;; SERVER: 10.0.0.7#53(10.0.0.7)

;; WHEN: Thu Mar 09 10:40:06 CST 2023

;; MSG SIZE rcvd: 98

[root@internet ~]$ dig www.yanlinux.edu

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7 <<>> www.yanlinux.edu

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 64928

;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 1, ADDITIONAL: 2

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;www.yanlinux.edu. IN A

;; ANSWER SECTION:

www.yanlinux.edu. 86400 IN A 10.0.0.200

;; AUTHORITY SECTION:

yanlinux.edu. 86400 IN NS master.yanlinux.edu.

;; ADDITIONAL SECTION:

master.yanlinux.edu. 86400 IN A 10.0.0.7

;; Query time: 0 msec

;; SERVER: 10.0.0.7#53(10.0.0.7)

;; WHEN: Thu Mar 09 10:40:11 CST 2023

;; MSG SIZE rcvd: 98
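Reading the full dig output by eye gets tedious when several names need checking. The sketch below extracts only the A-record addresses from the ANSWER section of dig output supplied on standard input. The function name `answer_ips` is an assumption for this example, and the sample text fed to it is copied from the output above.

```shell
#!/bin/bash
# Hypothetical helper: print the addresses of A records found in the
# ANSWER section of dig output read from stdin.
answer_ips() {
    awk '
        /^;; ANSWER SECTION:/ { in_answer = 1; next }  # section starts
        /^;;/ || /^$/         { in_answer = 0 }        # any other section or blank line ends it
        in_answer && $4 == "A" { print $5 }
    '
}

# Demo with a fragment of the output shown above.
# On a live client this would be: dig www.yanlinux.org @10.0.0.7 | answer_ips
answer_ips <<'EOF'
;; ANSWER SECTION:
www.yanlinux.org. 86400 IN A 10.0.0.100

;; AUTHORITY SECTION:
yanlinux.org. 86400 IN NS master.yanlinux.org.
EOF
```

For a quick interactive check, `dig +short www.yanlinux.org @10.0.0.7` prints the same address directly.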

3 Deploy the NFS primary and backup servers

- Set up the primary NFS server

[root@NFS ~]$ yum -y install nfs-utils

[root@NFS ~]$ systemctl enable --now nfs-server.service

# Create the user used for file transfer

[root@NFS ~]$ groupadd -g 666 www

[root@NFS ~]$ useradd -u 666 www -g 666

# Create the NFS shared directories

[root@NFS ~]$ mkdir /data/www -p

[root@NFS ~]$ chown -R www. /data/www/

[root@NFS ~]$ mkdir /data/web2

[root@NFS ~]$ chown -R www.www /data/web2/

# Add the export configuration

[root@NFS ~]$ vi /etc/exports

/data/www *(rw,all_squash,anonuid=666,anongid=666) # read-write; all remote users are mapped to the user with UID/GID 666

/data/web2 *(rw,all_squash,anonuid=666,anongid=666)

# Restart

[root@NFS ~]$ systemctl restart nfs-server.service

[root@NFS ~]$ showmount -e 10.0.0.68

Export list for 10.0.0.68:

/data/web2 *

/data/www *

# Use sersync to sync data to the NFS backup server in real time: download it, extract it, and set the PATH variable

[root@NFS ~]$ wget https://storage.googleapis.com/google-code-archive-downloads/v2/code.google.com/sersync/sersync2.5.4_64bit_binary_stable_final.tar.gz

[root@NFS ~]$ tar xf sersync2.5.4_64bit_binary_stable_final.tar.gz

[root@NFS ~]$ cp -a GNU-Linux-x86/ /usr/local/sersync

[root@NFS ~]$ echo 'PATH=/usr/local/sersync:$PATH' > /etc/profile.d/sersync.sh

[root@NFS ~]$ source /etc/profile.d/sersync.sh

# Create the password file

[root@NFS ~]$ echo lgq123456 > /etc/rsync.pas

[root@NFS ~]$ chmod 600 /etc/rsync.pas

# Back up the sersync configuration file, then edit it

[root@NFS ~]$ cp -a /usr/local/sersync/confxml.xml{,.bak}

## Sync configuration for web1 (kodbox)

[root@NFS ~]$ vi /usr/local/sersync/confxml.xml

<?xml version="1.0" encoding="ISO-8859-1"?>

<head version="2.5">

<host hostip="localhost" port="8008"></host>

<debug start="false"/>

<fileSystem xfs="false"/>

<filter start="false">

<exclude expression="(.*)\.svn"></exclude>

<exclude expression="(.*)\.gz"></exclude>

<exclude expression="^info/*"></exclude>

<exclude expression="^static/*"></exclude>

</filter>

<inotify>

<delete start="true"/>

<createFolder start="true"/>

<createFile start="false"/>

<closeWrite start="true"/>

<moveFrom start="true"/>

<moveTo start="true"/>

<attrib start="true"/> <!-- set to true so that attribute changes are synced too -->

<modify start="false"/>

</inotify>

<sersync>

<localpath watch="/data/www"> <!-- edit this line: the source directory to sync -->

<remote ip="10.0.0.78" name="backup"/> <!-- edit this line: the backup server address and the rsync daemon module name; if ssh start is enabled, name is instead the target directory on the remote host -->

<!--<remote ip="192.168.8.39" name="tongbu"/>-->

<!--<remote ip="192.168.8.40" name="tongbu"/>-->

</localpath>

<rsync>

<commonParams params="-artuz"/>

<auth start="true" users="rsyncuser" passwordfile="/etc/rsync.pas"/> <!-- set to true and give the rsync user and password file configured for the backup server -->

......

# Run the sync in the background

[root@NFS ~]$ sersync2 -dro /usr/local/sersync/confxml.xml

## Sync configuration for web2 (JPress)

[root@NFS ~]$ cd /usr/local/sersync/

[root@NFS sersync]$ cp confxml.xml jpress.xml

### Compared with web1, only the two marked lines below need to change

[root@NFS sersync]$ vi jpress.xml

<?xml version="1.0" encoding="ISO-8859-1"?>

<head version="2.5">

<host hostip="localhost" port="8008"></host>

<debug start="false"/>

<fileSystem xfs="false"/>

<filter start="false">

<exclude expression="(.*)\.svn"></exclude>

<exclude expression="(.*)\.gz"></exclude>

<exclude expression="^info/*"></exclude>

<exclude expression="^static/*"></exclude>

</filter>

<inotify>

<delete start="true"/>

<createFolder start="true"/>

<createFile start="false"/>

<closeWrite start="true"/>

<moveFrom start="true"/>

<moveTo start="true"/>

<attrib start="true"/>

<modify start="false"/>

</inotify>

<sersync>

<localpath watch="/data/web2"> <!-- the only change here: the web1 shared directory becomes the web2 one -->

<remote ip="10.0.0.78" name="web2-backup"/> <!-- the rsync daemon module defined for web2 on the backup server -->

<!--<remote ip="192.168.8.39" name="tongbu"/>-->

<!--<remote ip="192.168.8.40" name="tongbu"/>-->

</localpath>

<rsync>

<commonParams params="-artuz"/>

<auth start="true" users="rsyncuser" passwordfile="/etc/rsync.pas"/>

<userDefinedPort start="false" port="874"/><!-- port=874 -->

<timeout start="false" time="100"/><!-- timeout=100 -->

<ssh start="false"/>

</rsync>

# Run the web2 sync as a separate background process

[root@NFS sersync]$ sersync2 -dro /usr/local/sersync/jpress.xml

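# So the manually started sync does not stay down after a reboot, put the commands in a script under /etc/profile.d (note: scripts there run when a login shell starts, not at boot itself)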

[root@NFS ~]$ echo -e "/usr/local/sersync/sersync2 -dro /usr/local/sersync/confxml.xml &> /dev/null\n/usr/local/sersync/sersync2 -dro /usr/local/sersync/jpress.xml &> /dev/null" > /etc/profile.d/sersync2.sh

[root@NFS ~]$ chmod +x /etc/profile.d/sersync2.sh
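Scripts under /etc/profile.d are sourced by login shells, so the commands above run again on every login and do not run until someone logs in. A systemd unit is one alternative. The sketch below only prints such a unit; the helper name `gen_sersync_unit` and the unit contents are assumptions for this example, including the assumption that sersync2 stays in the foreground when started without -d (which is what Type=simple expects).

```shell
#!/bin/bash
# Hypothetical helper: print a systemd unit that runs one sersync instance.
# Usage: gen_sersync_unit /path/to/config.xml
gen_sersync_unit() {
    local conf=$1
    cat <<EOF
[Unit]
Description=sersync real-time sync (${conf})
After=network-online.target
Wants=network-online.target

[Service]
Type=simple
# Assumption: without -d, sersync2 does not daemonize.
ExecStart=/usr/local/sersync/sersync2 -r -o ${conf}
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

gen_sersync_unit /usr/local/sersync/confxml.xml
```

To try it, the output could be saved as, for example, /etc/systemd/system/sersync-www.service (a made-up name), followed by `systemctl daemon-reload` and `systemctl enable --now sersync-www.service`; a second unit would cover jpress.xml.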

- Deploy the NFS backup server

# On the NFS backup server 10.0.0.78, run rsync as a standalone daemon with authentication

[root@NFS-bak ~]$ yum -y install rsync-daemon

# Create the backup directories

[root@NFS-bak ~]$ mkdir /data/backup -p

[root@NFS-bak ~]$ mkdir /data/web2-backup

# Edit the configuration file and add the following

[root@NFS-bak ~]$ vi /etc/rsyncd.conf

# User that accesses the shared directory and owns the files it creates (default: nobody)

uid = www

gid = www

max connections = 0

ignore errors

exclude = lost+found/

log file = /var/log/rsyncd.log

pid file = /var/run/rsyncd.pid

lock file = /var/run/rsyncd.lock

reverse lookup = no

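# Each module name maps to its own path; if a name is repeated, the later module takes effect

[backup]

path = /data/backup/

comment = backup dir

# Default is yes (read-only)

read only = no

# Without auth users, anonymous access is allowed; this is the user configured on the primary server

auth users = rsyncuser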

secrets file = /etc/rsync.pas

[web2-backup]

path = /data/web2-backup/

comment = backup dir

read only = no

auth users = rsyncuser

secrets file = /etc/rsync.pas

# Create the password file

[root@NFS-bak ~]$ echo "rsyncuser:lgq123456" > /etc/rsync.pas

[root@NFS-bak ~]$ chmod 600 /etc/rsync.pas

# Create the transfer user and give it ownership of the backup directories

[root@NFS-bak ~]$ groupadd -g 666 www

[root@NFS-bak ~]$ useradd -u 666 www -g 666

[root@NFS-bak ~]$ chown www.www /data/backup/ -R

[root@NFS-bak ~]$ chown -R www.www /data/web2-backup/

# Start the rsync daemon

[root@NFS-bak ~]$ rsync --daemon

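# Put the command in a file under /etc/profile.d so it is started again after a reboot (scripts there run when a login shell starts)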

[root@NFS-bak ~]$ echo "rsync --daemon" > /etc/profile.d/rsync.sh

[root@NFS-bak ~]$ chmod +x /etc/profile.d/rsync.sh

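- Test that data syncs from the primary to the backup

# Create test.txt in the shared directory on the primary NFS server and check that it appears on the backup server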

[root@NFS ~]$ cd /data/www/

[root@NFS www]$ touch test.txt

[root@NFS www]$ ll

total 0

-rw-r--r-- 1 root root 0 Mar 9 22:23 test.txt

[root@NFS-bak ~]$ ll /data/backup/

total 0

-rw-r--r-- 1 www www 0 Mar 9 22:23 test.txt

4 Set up MySQL master and slave nodes on hosts 10.0.0.48 and 10.0.0.58

- Master node: 10.0.0.48

- Slave node: 10.0.0.58

- Set up the master node

# Install MySQL

[root@mysql-master ~]$ yum -y install mysql-server

# Create the directory for the binary logs; the configuration file then sets this path and the log file name prefix

[root@mysql-master ~]$ mkdir /data/binlog

[root@mysql-master ~]$ chown mysql. /data/binlog/

# Edit the configuration file and start the service

[root@mysql-master ~]$ cat /etc/my.cnf

[mysqld]

server-id=48

log_bin=/data/binlog/mysql-bin

[root@mysql-master ~]$ systemctl enable --now mysqld

# Create the replication user and grant it privileges

[root@mysql-master ~]$ mysql -uroot -plgq123456 -e "create user 'repluser'@'10.0.0.%' identified by 'lgq123456';"

[root@mysql-master ~]$ mysql -uroot -plgq123456 -e "grant replication slave on *.* to 'repluser'@'10.0.0.%';"

# Create the database and account for kodbox

[root@mysql-master ~]$ mysql -uroot -plgq123456 -e "create database kodbox;"

[root@mysql-master ~]$ mysql -uroot -plgq123456 -e "create user kodbox@'10.0.0.%' identified by 'lgq123456';"

[root@mysql-master ~]$ mysql -uroot -plgq123456 -e "grant all on kodbox.* to kodbox@'10.0.0.%';"

# Create the database and user for the web2 service

[root@mysql-master ~]$ mysql -uroot -plgq123456 -e "create database jpress;"

[root@mysql-master ~]$ mysql -uroot -plgq123456 -e "create user jpress@'10.0.0.%' identified by '123456';"

[root@mysql-master ~]$ mysql -uroot -plgq123456 -e "grant all on jpress.* to jpress@'10.0.0.%';"

# Take a full backup

[root@mysql-master ~]$ mysqldump -uroot -plgq123456 -A -F --single-transaction --master-data=1 > full_backup.sql

# Copy the backup to the slave node

[root@mysql-master ~]$ scp full_backup.sql 10.0.0.58:

- Set up the slave node

# Install MySQL

[root@mysql-slave ~]$ yum -y install mysql-server

# Edit the configuration file and start the service

[root@mysql-slave ~]$ vi /etc/my.cnf

# Add the following

[mysqld]

server-id=58

read-only

[root@mysql-slave ~]$ systemctl enable --now mysqld

# Edit the backup file and add the master's connection details to the CHANGE MASTER TO statement

[root@mysql-slave ~]$ vi full_backup.sql

......

CHANGE MASTER TO

MASTER_HOST='10.0.0.48', # add the master's IP address

MASTER_USER='repluser', # add the account created on the master

MASTER_PASSWORD='lgq123456', # add the account's password

MASTER_PORT=3306, # add the port number

MASTER_LOG_FILE='mysql-bin.000003',

MASTER_LOG_POS=157;

......

# Restore the backup

### Temporarily disable binary logging

[root@mysql-slave ~]$ mysql

mysql> set sql_log_bin=0;

### Restore

mysql> source /root/full_backup.sql;

## Start the replication threads that connect to the master

mysql> start slave;

## Check the status

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for source to send event

Master_Host: 10.0.0.48

Master_User: repluser

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: mysql-bin.000003

Read_Master_Log_Pos: 157

Relay_Log_File: mysql-slave-relay-bin.000002

Relay_Log_Pos: 326

Relay_Master_Log_File: mysql-bin.000003

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 157

Relay_Log_Space: 542

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 48

Master_UUID: bdcb41ce-be61-11ed-808a-000c2924e25d

Master_Info_File: mysql.slave_master_info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Replica has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

Master_public_key_path:

Get_master_public_key: 0

Network_Namespace:

1 row in set, 1 warning (0.01 sec)
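The two fields that matter most in the output above are Slave_IO_Running and Slave_SQL_Running. As a sketch, the check can be scripted by parsing the status text; the function name `replication_ok` is an assumption for this example, and it reads the output of `show slave status\G` from standard input.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if both replication threads report "Yes".
# Reads the text of `show slave status\G` on stdin.
replication_ok() {
    awk -F': *' '
        /Slave_IO_Running:/  { io  = $2 }
        /Slave_SQL_Running:/ { sql = $2 }   # does not match Slave_SQL_Running_State
        END { exit !(io == "Yes" && sql == "Yes") }
    '
}

# Demo with the two relevant lines from the output above.
# On the slave this would be: mysql -e 'show slave status\G' | replication_ok
if printf 'Slave_IO_Running: Yes\nSlave_SQL_Running: Yes\n' | replication_ok; then
    echo "replication threads are running"
else
    echo "replication is not healthy"
fi
```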

- Test master-slave synchronization

# Create a test database on the master

mysql> create database t1;

Query OK, 1 row affected (0.00 sec)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| kodbox |

| mysql |

| performance_schema |

| sys |

| t1 |

+--------------------+

6 rows in set (0.00 sec)

# Check on the slave that it exists

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| kodbox |

| mysql |

| performance_schema |

| sys |

| t1 |

+--------------------+

6 rows in set (0.01 sec)

## This shows that master-slave replication is working

5 Deploy Redis on host 10.0.0.88

# Install Redis

[root@redis ~]$ yum -y install redis

# Edit the configuration file

[root@redis ~]$ vi /etc/redis.conf

# Change 127.0.0.1 on the bind line to 0.0.0.0 to allow remote access

bind 0.0.0.0

[root@redis ~]$ systemctl enable --now redis
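The bind change can also be scripted instead of edited by hand. The sketch below rewrites the active bind line of a given configuration file; the function name `set_redis_bind` is an assumption for this example, and the demo runs against a temporary file rather than /etc/redis.conf. Binding to the host's own address (10.0.0.88) instead of 0.0.0.0 is shown only as a narrower option, not as what the setup above uses.

```shell
#!/bin/bash
# Hypothetical helper: replace the active "bind ..." line in a Redis config file.
# Usage: set_redis_bind CONFIG_FILE ADDRESS
set_redis_bind() {
    local conf=$1 addr=$2
    # Only lines that start with "bind" are touched; commented lines are left alone.
    sed -i -E "s/^bind[[:space:]].*/bind ${addr}/" "$conf"
}

# Demo on a throwaway copy.
demo_conf=$(mktemp)
printf '# bind 192.168.1.100 10.0.0.1\nbind 127.0.0.1\nport 6379\n' > "$demo_conf"
set_redis_bind "$demo_conf" 10.0.0.88
cat "$demo_conf"
rm -f "$demo_conf"
```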

6 Set up the www.yanlinux.org web1 service (kodbox)

6.1 Set up nginx and php-fpm on 10.0.0.28

# 1. Compile and install nginx with a one-shot script

[root@web1 ~]$ cat install_nginx.sh

#!/bin/bash

OS_TYPE=`awk -F'[ "]' '/^NAME/{print $2}' /etc/os-release`

OS_VERSION=`awk -F'[".]' '/^VERSION_ID/{print $2}' /etc/os-release`

CPU=`lscpu |awk '/^CPU\(s\)/{print $2}'`

SRC_DIR=/usr/local/src

read -p "$(echo -e '\033[1;32m请输入下载的版本号:\033[0m')" NUM

NGINX_FILE=nginx-${NUM}

NGINX_INSTALL_DIR=/apps/nginx

color () {

RES_COL=60

MOVE_TO_COL="echo -en \\033[${RES_COL}G"

SETCOLOR_SUCCESS="echo -en \\033[1;32m"

SETCOLOR_FAILURE="echo -en \\033[1;31m"

SETCOLOR_WARNING="echo -en \\033[1;33m"

SETCOLOR_NORMAL="echo -en \E[0m"

echo -n "$1" && $MOVE_TO_COL

echo -n "["

if [ $2 = "success" -o $2 = "0" ] ;then

${SETCOLOR_SUCCESS}

echo -n $" OK "

elif [ $2 = "failure" -o $2 = "1" ] ;then

${SETCOLOR_FAILURE}

echo -n $"FAILED"

else

${SETCOLOR_WARNING}

echo -n $"WARNING"

fi

${SETCOLOR_NORMAL}

echo -n "]"

echo

}

# Download the source

wget_package(){

[ -e ${NGINX_INSTALL_DIR} ] && { color "nginx 已安装,请卸载后再安装" 1; exit; }

cd ${SRC_DIR}

if [ -e ${NGINX_FILE}.tar.gz ];then

color "源码包已经准备好" 0

else

color "开始下载源码包" 0

wget http://nginx.org/download/${NGINX_FILE}.tar.gz

[ $? -ne 0 ] && { color "下载 ${NGINX_FILE}.tar.gz文件失败" 1; exit; }

fi

}

# Compile and install

install_nginx(){

color "开始安装nginx" 0

if id nginx &> /dev/null;then

color "nginx用户已经存在" 1

else

useradd -s /sbin/nologin -r nginx

color "nginx用户账号创建完成" 0

fi

color "开始安装nginx依赖包" 0

if [ $OS_TYPE == "Centos" -a ${OS_VERSION} == '7' ];then

yum -y install make gcc pcre-devel openssl-devel zlib-devel perl-ExtUtils-Embed

elif [ $OS_TYPE == "Centos" -a ${OS_VERSION} == '8' ];then

yum -y install make gcc-c++ libtool pcre pcre-devel zlib zlib-devel openssl openssl-devel perl-ExtUtils-Embed

elif [ $OS_TYPE == "Rocky" ];then

yum -y install make gcc libtool pcre pcre-devel zlib zlib-devel openssl openssl-devel perl-ExtUtils-Embed

elif [ $OS_TYPE == "Ubuntu" ];then

apt update

apt -y install make gcc libpcre3 libpcre3-dev openssl libssl-dev zlib1g-dev

else

color '不支持此系统!' 1

exit

fi

# Start compiling and installing

color "开始编译安装nginx" 0

cd $SRC_DIR

tar xf ${NGINX_FILE}.tar.gz

cd ${SRC_DIR}/${NGINX_FILE}

./configure --prefix=${NGINX_INSTALL_DIR} --user=nginx --group=nginx --with-http_ssl_module --with-http_v2_module --with-http_realip_module --with-http_stub_status_module --with-http_gzip_static_module --with-pcre --with-stream --with-stream_ssl_module --with-stream_realip_module

make -j ${CPU} && make install

[ $? -eq 0 ] && color "nginx 编译安装成功" 0 || { color "nginx 编译安装失败,退出!" 1 ;exit; }

ln -s ${NGINX_INSTALL_DIR}/sbin/nginx /usr/sbin/ &> /dev/null

# Create the service file

cat > /lib/systemd/system/nginx.service <<EOF

[Unit]

Description=The nginx HTTP and reverse proxy server

After=network.target remote-fs.target nss-lookup.target

[Service]

Type=forking

PIDFile=${NGINX_INSTALL_DIR}/logs/nginx.pid

ExecStartPre=/bin/rm -f ${NGINX_INSTALL_DIR}/logs/nginx.pid

ExecStartPre=${NGINX_INSTALL_DIR}/sbin/nginx -t

ExecStart=${NGINX_INSTALL_DIR}/sbin/nginx

ExecReload=/bin/kill -s HUP \$MAINPID

KillSignal=SIGQUIT

TimeoutStopSec=5

KillMode=process

PrivateTmp=true

LimitNOFILE=100000

[Install]

WantedBy=multi-user.target

EOF

# Start the service

systemctl enable --now nginx &> /dev/null

systemctl is-active nginx &> /dev/null || { color "nginx 启动失败,退出!" 1 ; exit; }

color "nginx 安装完成" 0

}

wget_package

install_nginx

## Run the script to install nginx

[root@web1 ~]$ sh install_nginx.sh

[root@web1 ~]$ ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

# 2. Install and configure php-fpm

[root@web1 ~]$ yum -y install php-fpm

## Install the packages that php-mysql and php-redis depend on

[root@web1 ~]$ yum -y install php-mysqlnd php-json php-cli php-devel

## Download php-redis

[root@web1 ~]$ wget https://pecl.php.net/get/redis-5.3.7.tgz -P /usr/local/src/

[root@web1 ~]$ cd /usr/local/src/

[root@web1 src]$ tar xf redis-5.3.7.tgz

[root@web1 src]$ cd redis-5.3.7/

[root@web1 redis-5.3.7]$ phpize

Configuring for:

PHP Api Version: 20170718

Zend Module Api No: 20170718

Zend Extension Api No: 320170718

[root@web1 redis-5.3.7]$ ./configure

[root@web1 redis-5.3.7]$ make && make install

## Create the configuration file that enables the redis extension for PHP

[root@web1 redis-5.3.7]$ vi /etc/php.d/31-redis.ini

extension=redis ; add this line

[root@web1 redis-5.3.7]$ cd

# Raise PHP's upload limits

[root@web1 ~]$ vi /etc/php.ini

post_max_size = 200M ; change to 200M

upload_max_filesize = 200M ; change to 200M to allow large file uploads

# Edit the php-fpm pool configuration file

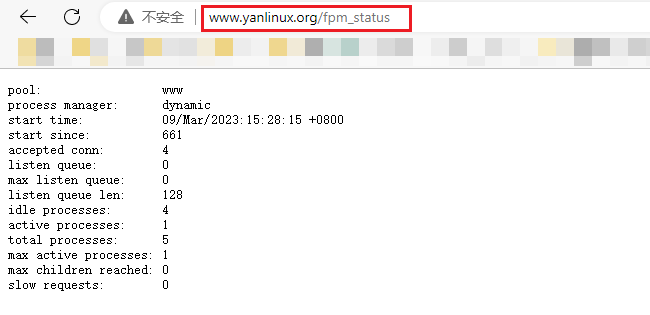

[root@web1 ~]$ vi /etc/php-fpm.d/www.conf

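user = nginx ; change to nginx

group = nginx ; change to nginx

;listen = /run/php-fpm/www.sock ; comment out this line

listen = 127.0.0.1:9000 ; add this line to listen on local port 9000

pm.status_path = /fpm_status ; uncomment and change to /fpm_status so it does not clash with nginx's own status page

ping.path = /ping ; uncomment this line

ping.response = pong ; uncomment this line

## Start the service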

[root@web1 ~]$ systemctl enable --now php-fpm
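The requirements at the top call for PHP sessions to be kept in Redis, and the phpredis extension built above provides a `redis` session handler. One way to use it, sketched here under assumptions, is to set `session.save_handler` and `session.save_path` in the php-fpm pool file. The helper name `gen_php_redis_session` is made up for this example, and the Redis address is the host from section 5. On RHEL-family systems the stock www.conf usually already sets these two values for file-based sessions, so the existing lines would be replaced rather than duplicated.

```shell
#!/bin/bash
# Hypothetical helper: print php-fpm pool settings that store sessions in Redis.
# Usage: gen_php_redis_session REDIS_HOST [REDIS_PORT]
gen_php_redis_session() {
    local redis_host=$1 redis_port=${2:-6379}
    cat <<EOF
php_value[session.save_handler] = redis
php_value[session.save_path] = "tcp://${redis_host}:${redis_port}"
EOF
}

gen_php_redis_session 10.0.0.88
```

After changing /etc/php-fpm.d/www.conf, php-fpm needs a restart for the settings to take effect.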

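# 3. Configure the nginx virtual host

## nginx supports included configuration files, which makes separate services easier to manage

## Create the directory for the included configuration files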

[root@web1 ~]$ mkdir /apps/nginx/conf/conf.d

[root@web1 ~]$ vi /apps/nginx/conf/nginx.conf

include /apps/nginx/conf/conf.d/*.conf; # add this as the last line of the http block

## Create the configuration file for this service

[root@web1 ~]$ cat /apps/nginx/conf/conf.d/www.yanlinux.org.conf

server {

listen 80;

server_name www.yanlinux.org;

client_max_body_size 100M;

server_tokens off;

location / {

root /data/kodbox/;

index index.php index.html index.htm;

}

location ~ \.php$ {

root /data/kodbox/;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

fastcgi_hide_header X-Powered-By;

}

location ~ ^/(ping|fpm_status)$ {

fastcgi_pass 127.0.0.1:9000;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

}

# 4. Restart the services

[root@web1 ~]$ systemctl restart nginx.service php-fpm.service

Open the PHP status page to confirm that the services were set up successfully

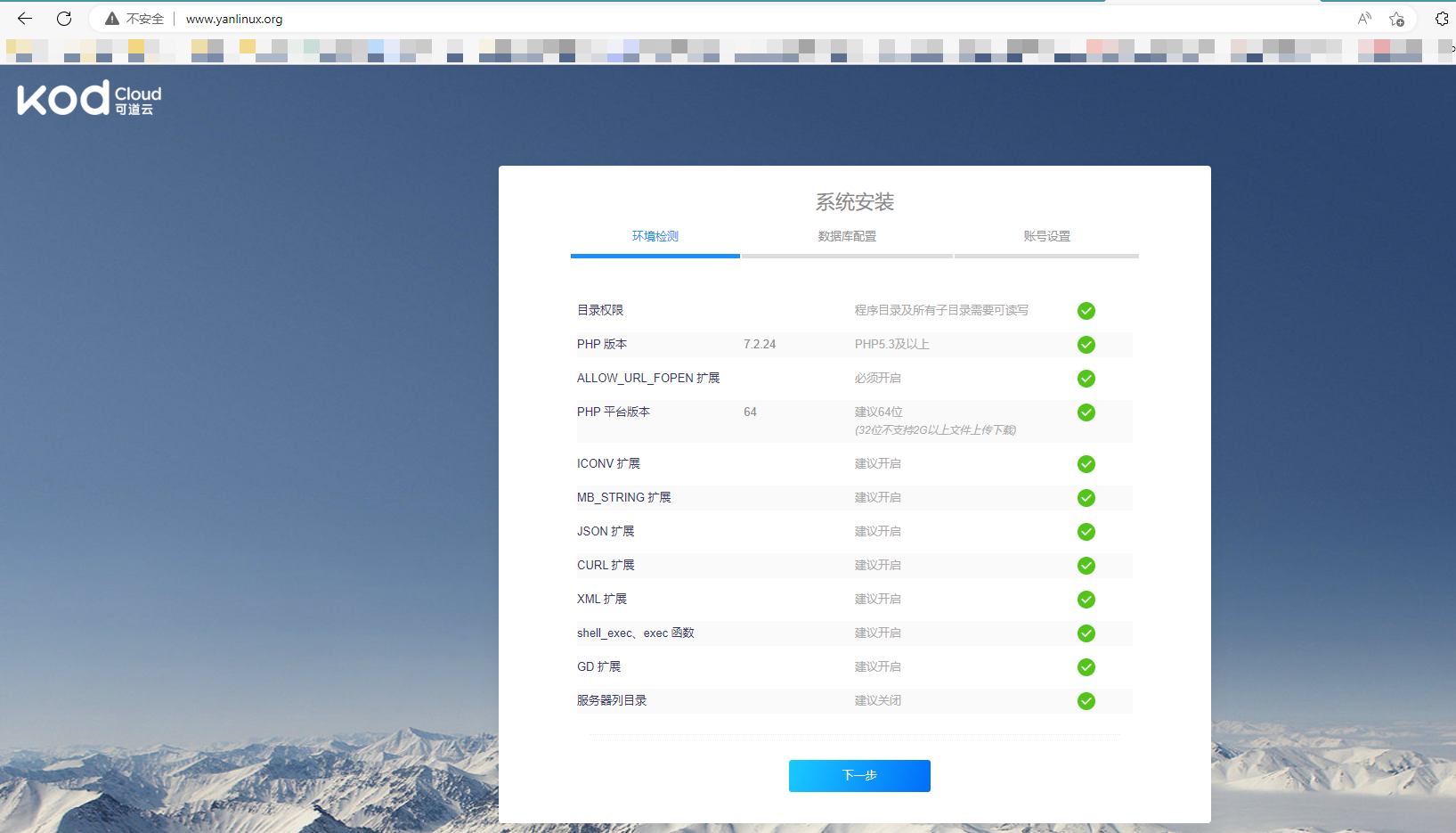

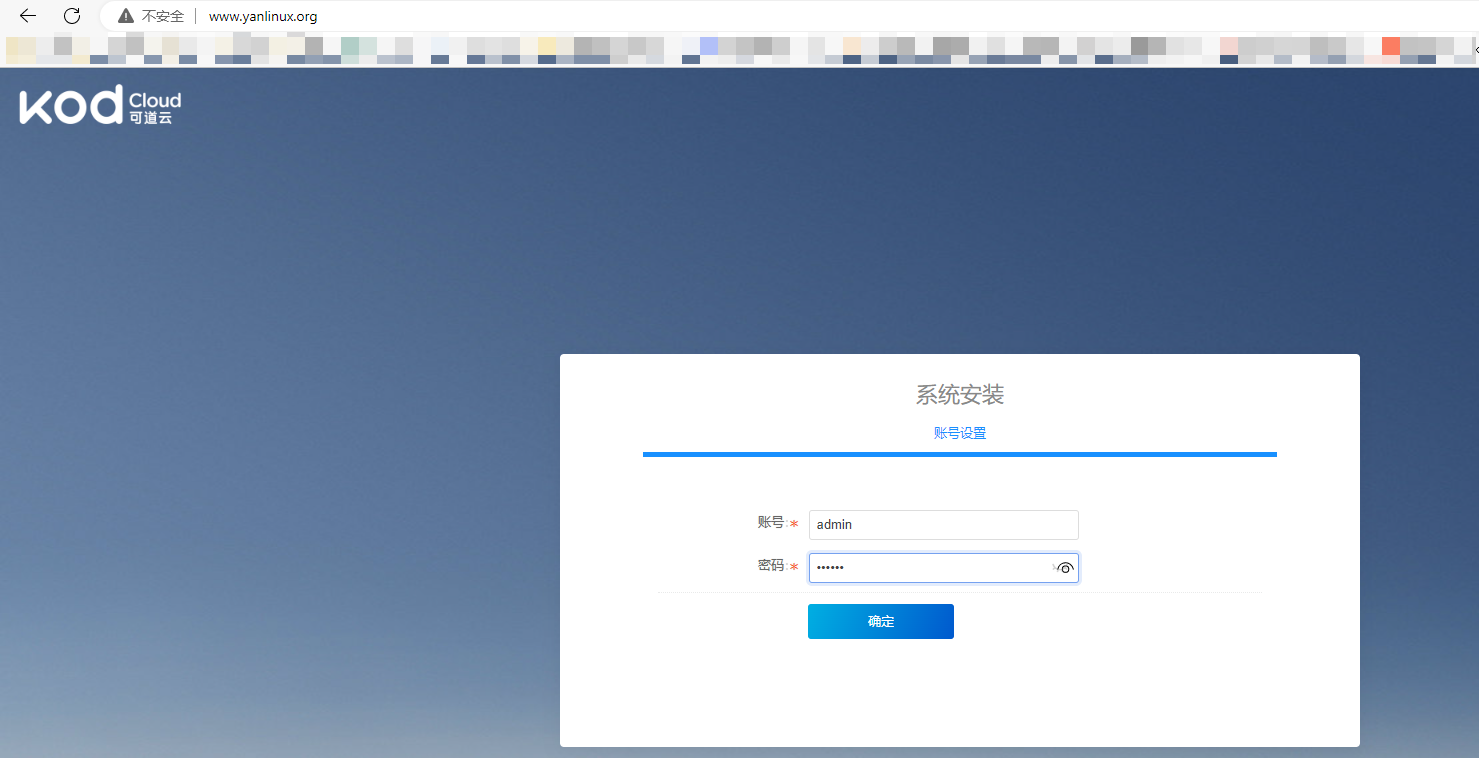

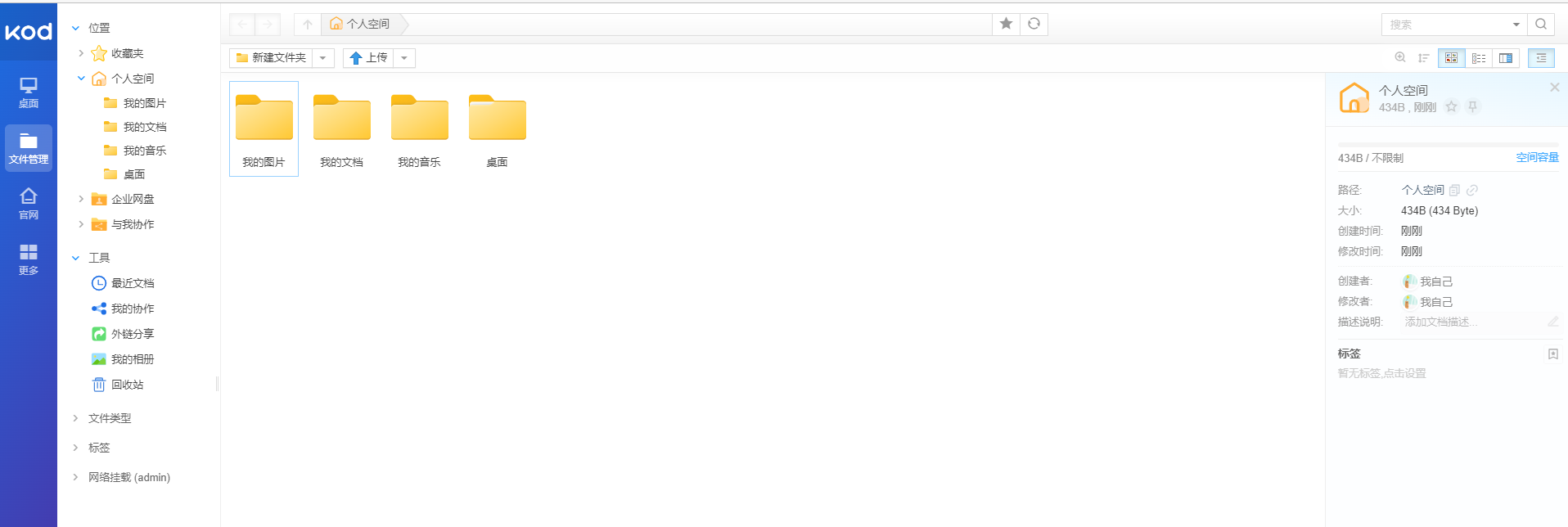

6.2 Deploy kodbox

## Install the packages kodbox depends on

[root@web1 ~]$ yum -y install php-mbstring php-xml php-gd

# Download the source package

[root@web1 ~]$ wget https://static.kodcloud.com/update/download/kodbox.1.35.zip

[root@web1 ~]$ unzip kodbox.1.35.zip -d /data/kodbox

[root@web1 ~]$ chown -R nginx.nginx /data/kodbox/

6.3 Mount the NFS server for remote backup of the site data

# Install nfs-utils so the NFS export can be mounted

[root@web1 ~]$ yum -y install nfs-utils

[root@web1 ~]$ showmount -e 10.0.0.68

Export list for 10.0.0.68:

/data/web2 *

/data/www *

# For a persistent mount, add the entry below. Files uploaded through kodbox are stored under /data/kodbox/data/files, so the NFS export is mounted on that directory

[root@web1 ~]$ vi /etc/fstab

10.0.0.68:/data/www /data/kodbox/data/files nfs _netdev 0 0

[root@web1 ~]$ mount -a

[root@web1 ~]$ df -h|grep data

10.0.0.68:/data/www 70G 2.3G 68G 4% /data/kodbox/data/files
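Editing /etc/fstab by hand is fine for one host; when the same step is repeated on several web servers, an idempotent append avoids duplicate entries. The sketch below is a minimal version of that idea. The function name `add_fstab_entry` is an assumption for this example, and the demo writes to a temporary file instead of the real /etc/fstab.

```shell
#!/bin/bash
# Hypothetical helper: append an NFS entry to an fstab-style file
# unless an entry for the same source and mount point already exists.
# Usage: add_fstab_entry FSTAB_FILE SOURCE MOUNT_POINT
add_fstab_entry() {
    local fstab=$1 src=$2 mnt=$3
    grep -qF "$src $mnt " "$fstab" || echo "$src $mnt nfs _netdev 0 0" >> "$fstab"
}

# Demo: calling it twice still leaves a single line.
demo_fstab=$(mktemp)
add_fstab_entry "$demo_fstab" 10.0.0.68:/data/www /data/kodbox/data/files
add_fstab_entry "$demo_fstab" 10.0.0.68:/data/www /data/kodbox/data/files
cat "$demo_fstab"
rm -f "$demo_fstab"
```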

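Upload the file MyHotkeyScript.ahk to kodbox to test that both the primary and the backup NFS servers receive the data

# Check that the file arrived on the web1 server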

[root@web1 ~]$ ll /data/kodbox/data/files/202303/09_079920df/

total 4

-rwxrwxrwx 1 666 666 1491 Mar 9 22:51 MyHotkeyScript.ahk

# Check on the primary NFS server

[root@NFS ~]$ ll /data/www/202303/09_079920df/

total 4

-rwxrwxrwx 1 www www 1491 Mar 9 22:51 MyHotkeyScript.ahk

# Check on the NFS backup server

[root@NFS-bak ~]$ ll /data/backup/202303/09_079920df/

total 4

-rwxrwxrwx 1 www www 1491 Mar 9 22:51 MyHotkeyScript.ahk

7 Deploy the www.yanlinux.edu web2 service (JPress)

7.1 Set up Tomcat on host 10.0.0.38

# Install the JDK and Tomcat with a one-shot script

[root@web2 ~]$ cat install_tomcat_jdk.sh

#!/bin/bash

DIR=`pwd`

read -p "$(echo -e '\033[1;32m请输入JDK版本号:\033[0m')" JDK_VERSION

read -p "$(echo -e '\033[1;32m请输入Tomcat版本号:\033[0m')" TOMCAT_VERSION

JDK_FILE="jdk-${JDK_VERSION}-linux-x64.tar.gz"

TOMCAT_FILE="apache-tomcat-${TOMCAT_VERSION}.tar.gz"

INSTALL_DIR="/usr/local"

color () {

RES_COL=60

MOVE_TO_COL="echo -en \\033[${RES_COL}G"

SETCOLOR_SUCCESS="echo -en \\033[1;32m"

SETCOLOR_FAILURE="echo -en \\033[1;31m"

SETCOLOR_WARNING="echo -en \\033[1;33m"

SETCOLOR_NORMAL="echo -en \E[0m"

echo -n "$2" && $MOVE_TO_COL

echo -n "["

if [ $1 = "success" -o $1 = "0" ] ;then

${SETCOLOR_SUCCESS}

echo -n $" OK "

elif [ $1 = "failure" -o $1 = "1" ] ;then

${SETCOLOR_FAILURE}

echo -n $"FAILED"

else

${SETCOLOR_WARNING}

echo -n $"WARNING"

fi

${SETCOLOR_NORMAL}

echo -n "]"

echo

}

install_jdk(){

if ! [ -f "${DIR}/${JDK_FILE}" ];then

color 1 "${JDK_FILE}不存在,请去官网下载"

exit;

elif [ -e ${INSTALL_DIR}/jdk ];then

color 1 "JDK已经安装"

exit;

else

[ -d "${INSTALL_DIR}" ] || mkdir -pv ${INSTALL_DIR}

fi

tar xf ${DIR}/${JDK_FILE} -C ${INSTALL_DIR}

cd ${INSTALL_DIR} && ln -s jdk* jdk

cat > /etc/profile.d/jdk.sh <<EOF

export JAVA_HOME=${INSTALL_DIR}/jdk

#export JRE_HOME=\$JAVA_HOME/jre

#export CLASSPATH=.:\$JAVA_HOME/lib/:\$JRE_HOME/lib/

export PATH=\$PATH:\$JAVA_HOME/bin

EOF

. /etc/profile.d/jdk.sh

java -version && color 0 "JDK安装完成" || { color 1 "JDK安装失败"; exit; }

}

install_tomcat(){

if ! [ -f "${DIR}/${TOMCAT_FILE}" ];then

color 1 "${TOMCAT_FILE}不存在,请去官网下载"

exit;

elif [ -e ${INSTALL_DIR}/tomcat ];then

color 1 "tomcat已经安装"

exit;

else

[ -d "${INSTALL_DIR}" ] || mkdir -pv ${INSTALL_DIR}

fi

tar xf ${DIR}/${TOMCAT_FILE} -C ${INSTALL_DIR}

cd ${INSTALL_DIR} && ln -s apache-tomcat-*/ tomcat

echo "PATH=${INSTALL_DIR}/tomcat/bin:"'$PATH' > /etc/profile.d/tomcat.sh

id tomcat &> /dev/null || useradd -r -s /sbin/nologin tomcat

cat > ${INSTALL_DIR}/tomcat/conf/tomcat.conf <<EOF

JAVA_HOME=${INSTALL_DIR}/jdk

EOF

chown -R tomcat.tomcat ${INSTALL_DIR}/tomcat/

cat > /lib/systemd/system/tomcat.service <<EOF

[Unit]

Description=Tomcat

#After=syslog.target network.target remote-fs.target nss-lookup.target

After=syslog.target network.target

[Service]

Type=forking

EnvironmentFile=${INSTALL_DIR}/tomcat/conf/tomcat.conf

ExecStart=${INSTALL_DIR}/tomcat/bin/startup.sh

ExecStop=${INSTALL_DIR}/tomcat/bin/shutdown.sh

RestartSec=3

PrivateTmp=true

User=tomcat

Group=tomcat

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable --now tomcat.service &> /dev/null

systemctl is-active tomcat.service &> /dev/null && color 0 "TOMCAT 安装完成" || { color 1 "TOMCAT 安装失败" ; exit; }

}

install_jdk

install_tomcat

[root@web2 ~]$ sh install_tomcat_jdk.sh

请输入JDK版本号:8u321

请输入Tomcat版本号:9.0.59

java version "1.8.0_321"

Java(TM) SE Runtime Environment (build 1.8.0_321-b07)

Java HotSpot(TM) 64-Bit Server VM (build 25.321-b07, mixed mode)

JDK安装完成 [ OK ]

TOMCAT 安装完成 [ OK ]

# Create the virtual host

[root@web2 ~]$ vi /usr/local/tomcat/conf/server.xml

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

<!-- Add the following lines after the default Host block above -->

<Host name="www.yanlinux.edu" appBase="/data/webapps" unpackWARs="true" autoDeploy="true">

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="jpress_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

<!-- End of the virtual host configuration -->

</Engine>

</Service>

</Server>

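# Prepare the data directory for the virtual host. Tomcat serves the ROOT directory under appBase at the site root, so deploying the application there avoids having to add an application directory to the URL.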

[root@web2 ~]$ mkdir /data/webapps/ROOT -p

[root@web2 ~]$ chown -R tomcat.tomcat /data/webapps

[root@web2 ~]$ systemctl restart tomcat.service

7.2 Deploy nginx

# Install nginx with the script from 6.1

[root@web2 ~]$ sh install_nginx.sh

# Create the directory for the included configuration files

[root@web2 ~]$ mkdir /apps/nginx/conf/conf.d

[root@web2 ~]$ vi /apps/nginx/conf/nginx.conf

# Include that directory from the main configuration file

[root@web2 ~]$ tail -n2 /apps/nginx/conf/nginx.conf

include /apps/nginx/conf/conf.d/*.conf;

}

# Create the configuration file for the web2 service

[root@web2 ~]$ cat /apps/nginx/conf/conf.d/www.yanlinux.edu.conf

server {

listen 80;

server_name www.yanlinux.edu;

location / {

proxy_pass http://127.0.0.1:8080;

proxy_set_header Host $http_host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

}

[root@web2 ~]$ nginx -t

nginx: the configuration file /apps/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /apps/nginx/conf/nginx.conf test is successful

[root@web2 ~]$ nginx -s reload

7.3 Deploy the JPress application

# Download the WAR package from the official site http://www.jpress.io/ and upload it to the server

[root@web2 ~]$ cp jpress-v4.0.7.war /usr/local/tomcat/webapps/jpress.war

[root@web2 ~]$ cd /usr/local/tomcat/webapps/

# A WAR file placed in Tomcat's webapps directory is unpacked automatically

[root@web2 webapps]$ ls

docs examples host-manager jpress jpress.war manager ROOT

# Then copy the contents of jpress/ into the data directory of the Tomcat virtual host www.yanlinux.edu created in 7.1

[root@web2 ~]$ cp -a /usr/local/tomcat/webapps/jpress/* /data/webapps/ROOT/

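# The database account was already created in section 4, so JPress can connect with it directly

Access the site in a browser

7.4 Mount the NFS server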

[root@web2 ~]$ yum -y install nfs-utils

[root@web2 ~]$ showmount -e 10.0.0.68

Export list for 10.0.0.68:

/data/web2 *

/data/www *

# For a persistent mount, add the mount entry

[root@web2 ~]$ vi /etc/fstab

10.0.0.68:/data/web2 /data/webapps/ROOT/attachment/ nfs _netdev 0 0

[root@web2 ~]$ mount -a

[root@web2 ~]$ df -h |grep data

10.0.0.68:/data/web2 70G 2.2G 68G 4% /data/webapps/ROOT/attachment

# Publish an article with an image attached as a test

[root@web2 ~]$ ll /data/webapps/ROOT/attachment/20230310/

total 560

-rw-r--r-- 1 666 666 569655 Mar 10 11:10 2974a6d37fb04ebfab8c7816d0a8dadd.png

# Check on the NFS server

[root@NFS ~]$ ll /data/web2/20230310/

total 560

-rw-r--r-- 1 www www 569655 Mar 10 11:10 2974a6d37fb04ebfab8c7816d0a8dadd.png

# Check on the NFS backup server

[root@NFS-bak ~]$ ll /data/web2-backup/20230310/

total 560

-rw-r--r-- 1 www www 569655 Mar 10 11:10 2974a6d37fb04ebfab8c7816d0a8dadd.png

7.5 Share sessions through Redis

# Download the two JAR files from the project site and upload them to Tomcat's lib directory

## Download page: https://github.com/redisson/redisson/tree/master/redisson-tomcat

[root@web2 ~]$ cd /usr/local/tomcat/lib/

[root@web2 lib]$ ls redisson-*

redisson-all-3.20.0.jar redisson-tomcat-9-3.20.0.jar

# Add the following just before the last line of context.xml

[root@web2 lib]$ vi ../conf/context.xml

<!-- Add the following -->

<Manager className="org.redisson.tomcat.RedissonSessionManager"

configPath="${catalina.base}/conf/redisson.conf"

readMode="REDIS" updateMode="DEFAULT" broadcastSessionEvents="false"

keyPrefix=""/>

<!-- End of the added content -->

</Context>

# Create the redisson.conf file; its address field points at the Redis server (10.0.0.88)

[root@web2 lib]$ vi ../conf/redisson.conf

{

"singleServerConfig":{

"idleConnectionTimeout":10000,

"connectTimeout":10000,

"timeout":3000,

"retryAttempts":3,

"retryInterval":1500,

"password":null,

"subscriptionsPerConnection":5,

"clientName":null,

"address": "redis://10.0.0.88:6379",

"subscriptionConnectionMinimumIdleSize":1,

"subscriptionConnectionPoolSize":50,

"connectionMinimumIdleSize":32,

"connectionPoolSize":64,

"database":0,

"dnsMonitoringInterval":5000

},

"threads":0,

"nettyThreads":0,

"codec":{

"class":"org.redisson.codec.JsonJacksonCodec"

},

"transportMode":"NIO"

}

[root@web2 lib]$ systemctl restart tomcat.service

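8 Build the Keepalived+HAProxy layer to complete the architecture

Compile and install both Keepalived and HAProxy on two Rocky hosts, 10.0.0.8 and 10.0.0.18, for high availability.

- Compile and install Keepalived

# Compile and install on node ka1

# 1. Install the dependencies

## CentOS and Rocky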

[root@ka1 ~]$ yum -y install gcc curl openssl-devel libnl3-devel net-snmp-devel

## On Ubuntu, use one of the following two dependency sets:

## Ubuntu 18.04

[root@ubuntu1804 ~]$ apt -y install gcc curl openssl libssl-dev libpopt-dev daemon build-essential

## Ubuntu 20.04

[root@ubuntu2004 ~]$ apt -y install make gcc ipvsadm build-essential pkg-config automake autoconf libipset-dev libnl-3-dev libnl-genl-3-dev libssl-dev libxtables-dev libip4tc-dev libip6tc-dev libipset-dev libmagic-dev libsnmp-dev libglib2.0-dev libpcre2-dev libnftnl-dev libmnl-dev libsystemd-dev

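# 2. Download the source tarball

[root@ka1 ~]$ wget https://keepalived.org/software/keepalived-2.2.7.tar.gz

##Extract it

[root@ka1 ~]$ tar xf keepalived-2.2.7.tar.gz

# 3. Compile and install

[root@ka1 ~]$ cd keepalived-2.2.7/

#The --disable-fwmark option is passed here to keep keepalived from adding iptables rules, which can leave the VIP unreachable; without it those rules are said to be enabled by default. (keepalived's own configure help describes the switch as building without SO_MARK support.)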

[root@ka1 keepalived-2.2.7]$ ./configure --prefix=/usr/local/keepalived --disable-fwmark

[root@ka1 keepalived-2.2.7]$ make -j 2 && make install

##Verify the version

[root@ka1 keepalived-2.2.7]$ /usr/local/keepalived/sbin/keepalived -v

Keepalived v2.2.7 (01/16,2022)

Copyright(C) 2001-2022 Alexandre Cassen, <acassen@gmail.com>

Built with kernel headers for Linux 4.18.0

Running on Linux 4.18.0-348.el8.0.2.x86_64 #1 SMP Sun Nov 14 00:51:12 UTC 2021

Distro: Rocky Linux 8.5 (Green Obsidian)

configure options: --prefix=/usr/local/keepalived --disable-fwmark

Config options: LVS VRRP VRRP_AUTH VRRP_VMAC OLD_CHKSUM_COMPAT INIT=systemd

System options: VSYSLOG MEMFD_CREATE IPV4_DEVCONF LIBNL3 RTA_ENCAP RTA_EXPIRES RTA_NEWDST RTA_PREF FRA_SUPPRESS_PREFIXLEN FRA_SUPPRESS_IFGROUP FRA_TUN_ID RTAX_CC_ALGO RTAX_QUICKACK RTEXT_FILTER_SKIP_STATS FRA_L3MDEV FRA_UID_RANGE RTAX_FASTOPEN_NO_COOKIE RTA_VIA FRA_PROTOCOL FRA_IP_PROTO FRA_SPORT_RANGE FRA_DPORT_RANGE RTA_TTL_PROPAGATE IFA_FLAGS LWTUNNEL_ENCAP_MPLS LWTUNNEL_ENCAP_ILA NET_LINUX_IF_H_COLLISION LIBIPTC_LINUX_NET_IF_H_COLLISION LIBIPVS_NETLINK IPVS_DEST_ATTR_ADDR_FAMILY IPVS_SYNCD_ATTRIBUTES IPVS_64BIT_STATS VRRP_IPVLAN IFLA_LINK_NETNSID GLOB_BRACE GLOB_ALTDIRFUNC INET6_ADDR_GEN_MODE VRF

# 4. Create the service file

##The source tree already ships a unit file; copying the provided service file to /lib/systemd/system/ is enough

[root@ka1 keepalived-2.2.7]$ cp ./keepalived/keepalived.service /lib/systemd/system/

[root@ka1 keepalived-2.2.7]$ cat /lib/systemd/system/keepalived.service

[Unit]

Description=LVS and VRRP High Availability Monitor

After=network-online.target syslog.target

Wants=network-online.target

Documentation=man:keepalived(8)

Documentation=man:keepalived.conf(5)

Documentation=man:genhash(1)

Documentation=https://keepalived.org

[Service]

Type=forking

PIDFile=/run/keepalived.pid

KillMode=process

EnvironmentFile=-/usr/local/keepalived/etc/sysconfig/keepalived

ExecStart=/usr/local/keepalived/sbin/keepalived $KEEPALIVED_OPTIONS

ExecReload=/bin/kill -HUP $MAINPID

[Install]

WantedBy=multi-user.target

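# 5. Create the configuration file

##The installation generates a sample configuration file. Create a keepalived directory under /etc to hold the configuration, copy the sample there, then delete its sample VRRP and real-server sections so that only the global_defs block remains.

[root@ka1 keepalived-2.2.7]$ mkdir /etc/keepalived

[root@ka1 keepalived-2.2.7]$ cp /usr/local/keepalived/etc/keepalived/keepalived.conf.sample /etc/keepalived/keepalived.conf

##Edit the sample configuration to suit your needs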

[root@ka1 keepalived-2.2.7]$ vi /etc/keepalived/keepalived.conf

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

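router_id ka1 #Unique identifier of each keepalived host. Using the hostname is recommended, because duplicate names across nodes may affect execution of the switch-over scripts. On the other keepalived host, ka2, change this to ka2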

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

vrrp_mcast_group4 230.1.1.1

}

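include /etc/keepalived/conf.d/*.conf #In a complex production environment /etc/keepalived/keepalived.conf grows too large to manage easily. The configuration of each cluster (for example its VIPs) can live in its own sub-configuration file, which the include directive pulls in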

##Create the sub-configuration directory

[root@ka1 keepalived-2.2.7]$ mkdir /etc/keepalived/conf.d/

# 6. Start the service

[root@ka1 keepalived-2.2.7]$ systemctl daemon-reload

[root@ka1 keepalived-2.2.7]$ systemctl enable --now keepalived.service

[root@ka1 keepalived-2.2.7]$ systemctl is-active keepalived

active

# 7. Repeat the ka1 build and install steps on the ka2 node

[root@ka2 ~]$ systemctl is-active keepalived.service

active

[root@ka2 ~]$ /usr/local/keepalived/sbin/keepalived -v

Keepalived v2.2.7 (01/16,2022)

Copyright(C) 2001-2022 Alexandre Cassen, <acassen@gmail.com>

Built with kernel headers for Linux 4.18.0

Running on Linux 4.18.0-348.el8.0.2.x86_64 #1 SMP Sun Nov 14 00:51:12 UTC 2021

Distro: Rocky Linux 8.5 (Green Obsidian)

configure options: --prefix=/usr/local/keepalived --disable-fwmark

Config options: LVS VRRP VRRP_AUTH VRRP_VMAC OLD_CHKSUM_COMPAT INIT=systemd

System options: VSYSLOG MEMFD_CREATE IPV4_DEVCONF LIBNL3 RTA_ENCAP RTA_EXPIRES RTA_NEWDST RTA_PREF FRA_SUPPRESS_PREFIXLEN FRA_SUPPRESS_IFGROUP FRA_TUN_ID RTAX_CC_ALGO RTAX_QUICKACK RTEXT_FILTER_SKIP_STATS FRA_L3MDEV FRA_UID_RANGE RTAX_FASTOPEN_NO_COOKIE RTA_VIA FRA_PROTOCOL FRA_IP_PROTO FRA_SPORT_RANGE FRA_DPORT_RANGE RTA_TTL_PROPAGATE IFA_FLAGS LWTUNNEL_ENCAP_MPLS LWTUNNEL_ENCAP_ILA NET_LINUX_IF_H_COLLISION LIBIPTC_LINUX_NET_IF_H_COLLISION LIBIPVS_NETLINK IPVS_DEST_ATTR_ADDR_FAMILY IPVS_SYNCD_ATTRIBUTES IPVS_64BIT_STATS VRRP_IPVLAN IFLA_LINK_NETNSID GLOB_BRACE GLOB_ALTDIRFUNC INET6_ADDR_GEN_MODE VRF

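- Compile and install HAProxy

Compile and install the HAProxy 2.6 LTS release. More source tarballs are available at http://www.haproxy.org/download/

HAProxy depends on Lua. The Lua shipped with CentOS 7 and earlier releases is older than the minimum version HAProxy requires (5.3), so on those systems a newer Lua must be compiled before HAProxy can be built.

#Install HAProxy on the ka1 node

# 1. Install the build dependencies

##CentOS or Rocky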

[root@ka1 ~]$ yum -y install gcc make gcc-c++ glibc glibc-devel pcre pcre-devel openssl openssl-devel systemd-devel libtermcap-devel ncurses-devel libevent-devel readline-devel

##ubuntu

apt -y install gcc make openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev libreadline-dev libsystemd-dev

# 2. Compile the Lua environment

##Download the source; see http://www.lua.org/start.html

[root@ka1 ~]$ curl -R -O http://www.lua.org/ftp/lua-5.3.5.tar.gz

[root@ka1 ~]$ tar xvf lua-5.3.5.tar.gz -C /usr/local/src

[root@ka1 ~]$ cd /usr/local/src/lua-5.3.5

[root@ka1 lua-5.3.5]$ make all test

[root@ka1 lua-5.3.5]$ pwd

/usr/local/src/lua-5.3.5

[root@ka1 lua-5.3.5]$ ./src/lua -v

Lua 5.3.5 Copyright (C) 1994-2018 Lua.org, PUC-Rio
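Whether a host needs this Lua build at all depends on the version it already has. The helpers below are a minimal sketch of that comparison against the 5.3 minimum stated above. The function names `version_ge` and `lua_version_of` are made up for this illustration, and the sketch assumes GNU `sort -V` is available.

```shell
# version_ge A B: succeed when dotted version A is greater than or equal to B.
# Assumes GNU sort with -V (version sort) is installed.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# lua_version_of TEXT: extract the version field from `lua -v` style output,
# e.g. "Lua 5.3.5 Copyright (C) ..." yields "5.3.5".
lua_version_of() {
    printf '%s\n' "$1" | awk '{print $2; exit}'
}
```

For example, `version_ge "$(lua_version_of "$(./src/lua -v 2>&1)")" 5.3 && echo "Lua is new enough"` could be run from the Lua source directory before moving on to the HAProxy build.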

# 3. Compile and install haproxy

##Download the source; official site: www.haproxy.org

[root@ka1 ~]$ wget https://www.haproxy.org/download/2.6/src/haproxy-2.6.9.tar.gz

[root@ka1 ~]$ tar xvf haproxy-2.6.9.tar.gz -C /usr/local/src

[root@ka1 ~]$ cd /usr/local/src/haproxy-2.6.9

##Compile and install

[root@ka1 haproxy-2.6.9]$ make ARCH=x86_64 TARGET=linux-glibc USE_PCRE=1 USE_OPENSSL=1 USE_ZLIB=1 USE_SYSTEMD=1 USE_CPU_AFFINITY=1 USE_LUA=1 LUA_INC=/usr/local/src/lua-5.3.5/src/ LUA_LIB=/usr/local/src/lua-5.3.5/src/ PREFIX=/apps/haproxy

[root@ka1 haproxy-2.6.9]$ make install PREFIX=/apps/haproxy

##Make the binary reachable through PATH

[root@ka1 haproxy-2.6.9]$ ln -s /apps/haproxy/sbin/haproxy /usr/sbin/

##Verify the haproxy version

[root@ka1 haproxy-2.6.9]$ which haproxy

/usr/sbin/haproxy

[root@ka1 haproxy-2.6.9]$ haproxy -v

HAProxy version 2.6.9-3a3700a 2023/02/14 - https://haproxy.org/

Status: long-term supported branch - will stop receiving fixes around Q2 2027.

Known bugs: http://www.haproxy.org/bugs/bugs-2.6.9.html

Running on: Linux 4.18.0-348.el8.0.2.x86_64 #1 SMP Sun Nov 14 00:51:12 UTC 2021 x86_64

# 4. Create the HAProxy configuration file

[root@ka1 haproxy-2.6.9]$ cd

##Create the configuration directory

[root@ka1 ~]$ mkdir /etc/haproxy

[root@ka1 ~]$ cat > /etc/haproxy/haproxy.cfg <<EOF

global

maxconn 100000

stats socket /var/lib/haproxy/haproxy.sock mode 600 level admin

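uid 99 #User ID that haproxy runs as

gid 99 #Group ID that haproxy runs as

daemon #Run as a daemon

nbthread 2 #Number of threads the haproxy process starts; set to the CPU core count of this host (older releases defaulted to a single thread)

cpu-map 1/all 0-1 ##With nbthread in use (haproxy 2.4 and later), this global option binds the threads to CPU cores 0-1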

pidfile /var/lib/haproxy/haproxy.pid

log 127.0.0.1 local3 info

defaults

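option http-keep-alive #Enable keep-alive with clients

option forwardfor #Pass the client's real IP through to the backend web servers

maxconn 100000

mode http #Default working mode. tcp mode performs better and puts less load on the proxy, but the host-header ACLs used here need http

timeout connect 300000ms #Maximum time to wait for the TCP connection from haproxy to a backend server to be established (before the connection exists); default unit is ms

timeout client 300000ms #Maximum inactivity time between haproxy and the client; default unit is ms; recommended to match timeout server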

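timeout server 300000ms #Maximum time to wait for the backend to process a request once the TCP connection exists; default unit is ms. When it expires the client receives a gateway error (504 in current HAProxy), so a generous value is advisable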

listen stats

mode http #HTTP protocol

bind 10.0.0.8:9999 #Address and port the stats page listens on; IPv4 or IPv6, and several addresses or ports may be given. On ka2 change this to that host's own IP address

stats enable

log global

stats uri /haproxy-status

stats auth admin:123456

EOF
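The `nbthread 2` and `cpu-map 1/all 0-1` values in the global section above are hard-coded for a 2-core host. Since the goal is to keep the thread count equal to the number of CPU cores, the two lines could be derived from `nproc` instead. This is only a sketch: `haproxy_thread_lines` is a hypothetical helper name, and it reproduces the same `1/all` mapping form used in the configuration above.

```shell
# haproxy_thread_lines [CORES]: print the nbthread and cpu-map lines for a
# host with CORES cores. CORES defaults to the output of nproc.
haproxy_thread_lines() {
    local cores="${1:-$(nproc)}"
    echo "nbthread ${cores}"
    if [ "$cores" -gt 1 ]; then
        # Bind the threads of process 1 to cores 0..CORES-1.
        echo "cpu-map 1/all 0-$((cores - 1))"
    else
        echo "cpu-map 1/all 0"
    fi
}
```

Running `haproxy_thread_lines` on each node and pasting the result into the global section keeps ka1 and ka2 correct even if their core counts differ.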

##Create the directory for the socket file

[root@ka1 ~]$ mkdir -p /var/lib/haproxy

# 5. Create the user and group

[root@ka1 ~]$ groupadd -g 99 haproxy

[root@ka1 ~]$ useradd -u 99 -g haproxy -d /var/lib/haproxy -M -r -s /sbin/nologin haproxy

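# 6. Create the systemd service file (the quoted 'EOF' stops the shell from expanding $MAINPID while writing the file)

[root@ka1 ~]$ cat > /lib/systemd/system/haproxy.service <<'EOF'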

[Unit]

Description=HAProxy Load Balancer

After=syslog.target network.target

[Service]

ExecStartPre=/usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q

ExecStart=/usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/lib/haproxy/haproxy.pid

ExecReload=/bin/kill -USR2 $MAINPID

[Install]

WantedBy=multi-user.target

EOF
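One detail of writing a unit file through a here-document is easy to miss: with an unquoted delimiter the shell expands `$MAINPID` itself, usually to an empty string, before systemd ever sees it. The sketch below shows the quoted form that keeps the variable literal. `write_unit_fragment` is a hypothetical helper that writes a single line, not the full unit file.

```shell
# write_unit_fragment FILE: write an ExecReload line to FILE.
# The quoted delimiter ('EOF') disables parameter expansion inside the
# here-document, so the text "$MAINPID" reaches the file unchanged.
write_unit_fragment() {
    cat > "$1" <<'EOF'
ExecReload=/bin/kill -USR2 $MAINPID
EOF
}
```

After writing the real unit file, `grep MAINPID /lib/systemd/system/haproxy.service` is a quick way to confirm the variable survived.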

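# 7. Keep the configuration in sub-configuration files

## When there are many services, putting everything in one configuration file makes maintenance hard. Splitting the configuration by service into separate sub-configuration files keeps it manageable.

##Create the sub-configuration directory

[root@ka1 ~]$ mkdir /etc/haproxy/conf.d

##Add the sub-configuration directory to the service file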

[root@ka1 ~]$ vi /lib/systemd/system/haproxy.service

[Unit]

Description=HAProxy Load Balancer

After=syslog.target network.target

[Service]

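#The next two lines gain "-f /etc/haproxy/conf.d" (the sub-configuration directory). systemd does not accept trailing comments, so this note sits on its own line

ExecStartPre=/usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -f /etc/haproxy/conf.d -c -q

ExecStart=/usr/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -f /etc/haproxy/conf.d -p /var/lib/haproxy/haproxy.pid

ExecReload=/bin/kill -USR2 $MAINPID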

[Install]

WantedBy=multi-user.target

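# 8. Change a kernel parameter so that haproxy can bind to an IP that is not local (the VIP managed by Keepalived). The keepalived backup node does not hold this IP at first, so without the parameter haproxy cannot start there

[root@ka1 ~]$ vi /etc/sysctl.conf

#Add the following line

net.ipv4.ip_nonlocal_bind = 1

#Apply the kernel parameter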

[root@ka1 ~]$ sysctl -p

net.ipv4.ip_nonlocal_bind = 1
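Editing /etc/sysctl.conf by hand on two nodes invites drift, so the same change can be scripted idempotently. The following is a sketch under assumptions: `ensure_sysctl` is a hypothetical helper, it relies on GNU `sed -i -E`, and the dots in the key are treated as regex wildcards, which is harmless for keys like this one.

```shell
# ensure_sysctl KEY VALUE [FILE]: make sure FILE contains exactly one
# "KEY = VALUE" line, updating an existing entry instead of appending twice.
# FILE defaults to /etc/sysctl.conf.
ensure_sysctl() {
    local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
    if grep -Eq "^[[:space:]]*${key}[[:space:]]*=" "$file" 2>/dev/null; then
        # Key already present: rewrite its value in place.
        sed -i -E "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file"
    else
        # Key absent (or file missing): append a new line.
        echo "${key} = ${value}" >> "$file"
    fi
}
```

On each node this would be called as `ensure_sysctl net.ipv4.ip_nonlocal_bind 1 && sysctl -p`.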

# 9. Start the service

[root@ka1 ~]$ systemctl daemon-reload

[root@ka1 ~]$ systemctl enable --now haproxy

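###Per-service configuration files can now be created in the sub-configuration directory.

# 10. Repeat the whole ka1 installation on the ka2 node

- Create the configuration files for the two services

#Configure the ka1 node

##keepalived sub-configuration file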

[root@ka1 ~]$ cd /etc/keepalived/conf.d/

##Create the haproxy check script

[root@ka1 ~]$ cat /etc/keepalived/conf.d/check_haproxy.sh

#!/bin/bash

/usr/bin/killall -0 haproxy || systemctl restart haproxy

##Make it executable

[root@ka1 ~]$ chmod a+x /etc/keepalived/conf.d/check_haproxy.sh

##Create the mail notification script

[root@ka1 ~]$ cat /etc/keepalived/notify.sh

#!/bin/bash

contact='lgq6579@163.com'

email_send='1499214187@qq.com'

email_passwd='zzvjrqnkrkafbaec'

email_smtp_server='smtp.qq.com'

. /etc/os-release

msg_error() {

echo -e "\033[1;31m$1\033[0m"

}

msg_info() {

echo -e "\033[1;32m$1\033[0m"

}

msg_warn() {

echo -e "\033[1;33m$1\033[0m"

}

color () {

RES_COL=60

MOVE_TO_COL="echo -en \\033[${RES_COL}G"

SETCOLOR_SUCCESS="echo -en \\033[1;32m"

SETCOLOR_FAILURE="echo -en \\033[1;31m"

SETCOLOR_WARNING="echo -en \\033[1;33m"

SETCOLOR_NORMAL="echo -en \E[0m"

echo -n "$1" && $MOVE_TO_COL

echo -n "["

if [ $2 = "success" -o $2 = "0" ] ;then

${SETCOLOR_SUCCESS}

echo -n $" OK "

elif [ $2 = "failure" -o $2 = "1" ] ;then

${SETCOLOR_FAILURE}

echo -n $"FAILED"

else

${SETCOLOR_WARNING}

echo -n $"WARNING"

fi

${SETCOLOR_NORMAL}

echo -n "]"

echo

}

install_sendemail () {

if [[ $ID =~ rhel|centos|rocky ]];then

rpm -q sendemail &> /dev/null || yum -y install sendemail

elif [ $ID = 'ubuntu' ];then

dpkg -l | grep -q sendemail || { apt update; apt -y install libio-socket-ssl-perl libnet-ssleay-perl sendemail; }

else

color "Unsupported operating system, exiting!" 1

exit

fi

}

send_mail() {

local email_receive="$1"

local email_subject="$2"

local email_message="$3"

sendemail -f "$email_send" -t "$email_receive" -u "$email_subject" -m "$email_message" -s "$email_smtp_server" -o message-charset=utf-8 -o tls=yes -xu "$email_send" -xp "$email_passwd"

[ $? -eq 0 ] && color "Mail sent successfully" 0 || color "Failed to send mail" 1

}

notify() {

if [[ $1 =~ ^(master|backup|fault)$ ]];then

mailsubject="$(hostname) to be $1, vip floating"

mailbody="$(date +'%F %T'): vrrp transition, $(hostname) changed to be $1"

send_mail "$contact" "$mailsubject" "$mailbody"

else

echo "Usage: $(basename $0) {master|backup|fault}"

exit 1

fi

}

install_sendemail

notify $1

##Make it executable

[root@ka1 ~]$ chmod a+x /etc/keepalived/notify.sh

##Create the sub-configuration file

[root@ka1 conf.d]$ cat web.conf

vrrp_script check_haproxy {

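script "/etc/keepalived/conf.d/check_haproxy.sh" ##Shell command or path of the script

interval 1 #Interval between runs, in seconds; default 1

weight -30 #Default 0. With a negative value, a non-zero exit status of the script adds this value to the node's priority and so lowers it (fall). With a positive value, a zero exit status adds it to the priority and so raises it (rise). A negative value is the usual choice

fall 3 #Number of consecutive failed runs before the check counts as failed; 2 or more is recommended

rise 2 #Number of consecutive successful runs before the check counts as healthy again

timeout 2 #Timeout of the script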

}

vrrp_instance VI_1 {

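state MASTER #Initial state of this node in the virtual router; MASTER here, set BACKUP on ka2

interface eth0 #Physical interface this virtual router is bound to

virtual_router_id 51 #Identifier of the virtual router, range 1-255. All keepalived nodes that belong to one virtual router must use the same value, and the value must be unique on the network, otherwise the service cannot start

priority 100 #Priority of this node in the virtual router, range 1-254; every keepalived node uses a different value. Set 80 on ka2

advert_int 1 #Interval between VRRP advertisements; default 1s

authentication { #Authentication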

auth_type PASS

auth_pass 1111

}

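virtual_ipaddress { #Virtual IPs; a production setup may list hundreds of addresses

10.0.0.200 dev eth0 label eth0:1 #The VIP and its interface label

}

unicast_src_ip 10.0.0.8 #Source IP for the unicast advertisements

unicast_peer {

10.0.0.18 #IP of the peer host that receives the unicast advertisements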

}

notify_master "/etc/keepalived/notify.sh master" #Script run when this node becomes master

notify_backup "/etc/keepalived/notify.sh backup" #Script run when this node becomes backup

notify_fault "/etc/keepalived/notify.sh fault" #Script run when this node enters the fault state

track_script {

check_haproxy #Use the check script defined above

}

}
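The failover in this configuration comes down to simple arithmetic on the values above: ka1 starts at priority 100, and a failed check applies the weight of -30, which drops it below ka2's priority of 80. The sketch below models only that negative-weight case. `effective_priority` is a hypothetical helper for illustration, not part of keepalived.

```shell
# effective_priority BASE WEIGHT STATE: print the priority a node would
# advertise. STATE is "ok" or "failed". Only negative weights are modelled:
# a failed check adds the (negative) weight to the base priority.
effective_priority() {
    local base="$1" weight="$2" state="$3"
    if [ "$state" = "failed" ] && [ "$weight" -lt 0 ]; then
        echo $((base + weight))
    else
        echo "$base"
    fi
}
```

`effective_priority 100 -30 failed` prints 70, which is lower than 80, so ka2 would take over the VIP. Note that the check script above first tries `systemctl restart haproxy`, so the check only fails, and the priority only drops, when that restart itself fails.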

##Create the haproxy sub-configuration file for the services

## Note: sub-configuration files must use the .cfg suffix

[root@ka1 ~]$ cd /etc/haproxy/conf.d/

[root@ka1 conf.d]$ cat web.cfg

frontend http_80

bind 10.0.0.200:80

acl org_domain hdr_dom(host) -i www.yanlinux.org

acl edu_domain hdr_dom(host) -i www.yanlinux.edu

use_backend www.yanlinux.org if org_domain

use_backend www.yanlinux.edu if edu_domain

backend www.yanlinux.org

server 10.0.0.28 10.0.0.28:80 check inter 3000 fall 3 rise 5

backend www.yanlinux.edu

server 10.0.0.38 10.0.0.38:80 check inter 3000 fall 3 rise 5
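Because haproxy is started with `-f /etc/haproxy/conf.d`, only files in that directory ending in .cfg are read, and a file saved as, say, web.conf would be silently ignored. The helper below is a rough way to preview which files qualify. `list_loaded_cfgs` is a hypothetical name, and the sketch approximates the selection rule with a shell glob rather than asking haproxy itself.

```shell
# list_loaded_cfgs DIR: print the names of the regular, non-hidden files in
# DIR that end in .cfg, in the C-locale order produced by the shell glob.
list_loaded_cfgs() {
    local dir="$1" f
    local LC_ALL=C
    for f in "$dir"/*.cfg; do
        if [ -f "$f" ]; then
            basename "$f"
        fi
    done
}
```

`list_loaded_cfgs /etc/haproxy/conf.d` should list web.cfg here. The authoritative check remains `haproxy -c -f /etc/haproxy/haproxy.cfg -f /etc/haproxy/conf.d`, which the service file already runs in ExecStartPre.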

##Restart the services

[root@ka1 ~]$ systemctl restart keepalived.service haproxy.service

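#Configure the ka2 node

##keepalived sub-configuration file for the services

##Copy the mail notification script and the haproxy check script over from the ka1 node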

[root@ka2 ~]$ scp 10.0.0.8:/etc/keepalived/notify.sh /etc/keepalived/

[root@ka2 ~]$ scp 10.0.0.8:/etc/keepalived/conf.d/check_haproxy.sh /etc/keepalived/conf.d/

##Create the sub-configuration file; it is largely the same as on the ka1 node

[root@ka2 ~]$ cat /etc/keepalived/conf.d/web.conf

vrrp_script check_haproxy {

script "/etc/keepalived/conf.d/check_haproxy.sh"

interval 1

weight -30

fall 3

rise 2

timeout 2

}

vrrp_instance VI_1 {

state BACKUP #BACKUP on this node

interface eth0

virtual_router_id 51

priority 80 #80, because this is the backup node

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.200 dev eth0 label eth0:1

}

unicast_src_ip 10.0.0.18

unicast_peer {

10.0.0.8

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

track_script {

check_haproxy

}

}
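The ka2 file differs from ka1's in only four places: the state, the priority, and the two unicast addresses. Instead of retyping it, the ka2 version could be derived from the ka1 file with sed. This is a sketch that assumes the exact values used in this walkthrough (MASTER, 100, 10.0.0.8, 10.0.0.18) and single spaces between keywords and values. `derive_backup_conf` is a hypothetical helper name.

```shell
# derive_backup_conf: read ka1's web.conf on stdin and print the ka2 variant.
# Swaps the role, lowers the priority, and exchanges the unicast source and
# peer addresses. The VIP (10.0.0.200) is left untouched.
derive_backup_conf() {
    sed -e 's/state MASTER/state BACKUP/' \
        -e 's/priority 100/priority 80/' \
        -e 's/unicast_src_ip 10\.0\.0\.8/unicast_src_ip 10.0.0.18/' \
        -e '/unicast_peer/,/}/s/10\.0\.0\.18/10.0.0.8/'
}
```

A possible invocation from ka2 would be `ssh 10.0.0.8 cat /etc/keepalived/conf.d/web.conf | derive_backup_conf > /etc/keepalived/conf.d/web.conf`. Any inline comments copied from ka1 still describe ka1 and are worth a quick review afterwards.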

##Create the haproxy sub-configuration file; copying it from the ka1 node is enough

[root@ka2 ~]$ scp 10.0.0.8:/etc/haproxy/conf.d/web.cfg /etc/haproxy/conf.d

##Restart the services

[root@ka2 ~]$ systemctl restart keepalived.service haproxy.service

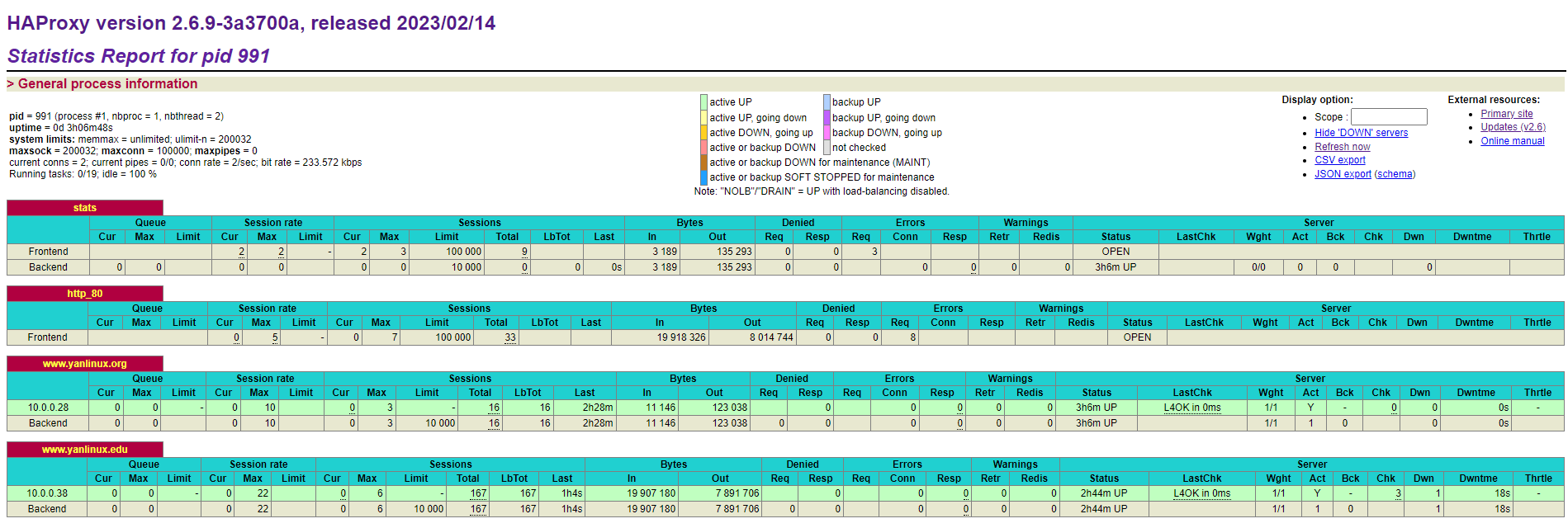

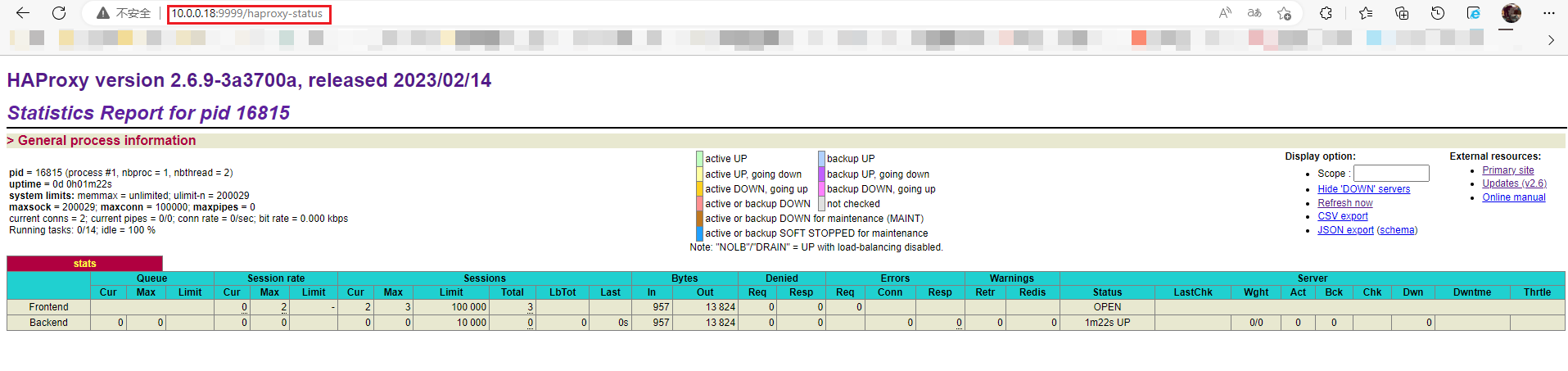

Status pages of the two keepalived nodes; the test shows that the keepalived setup works

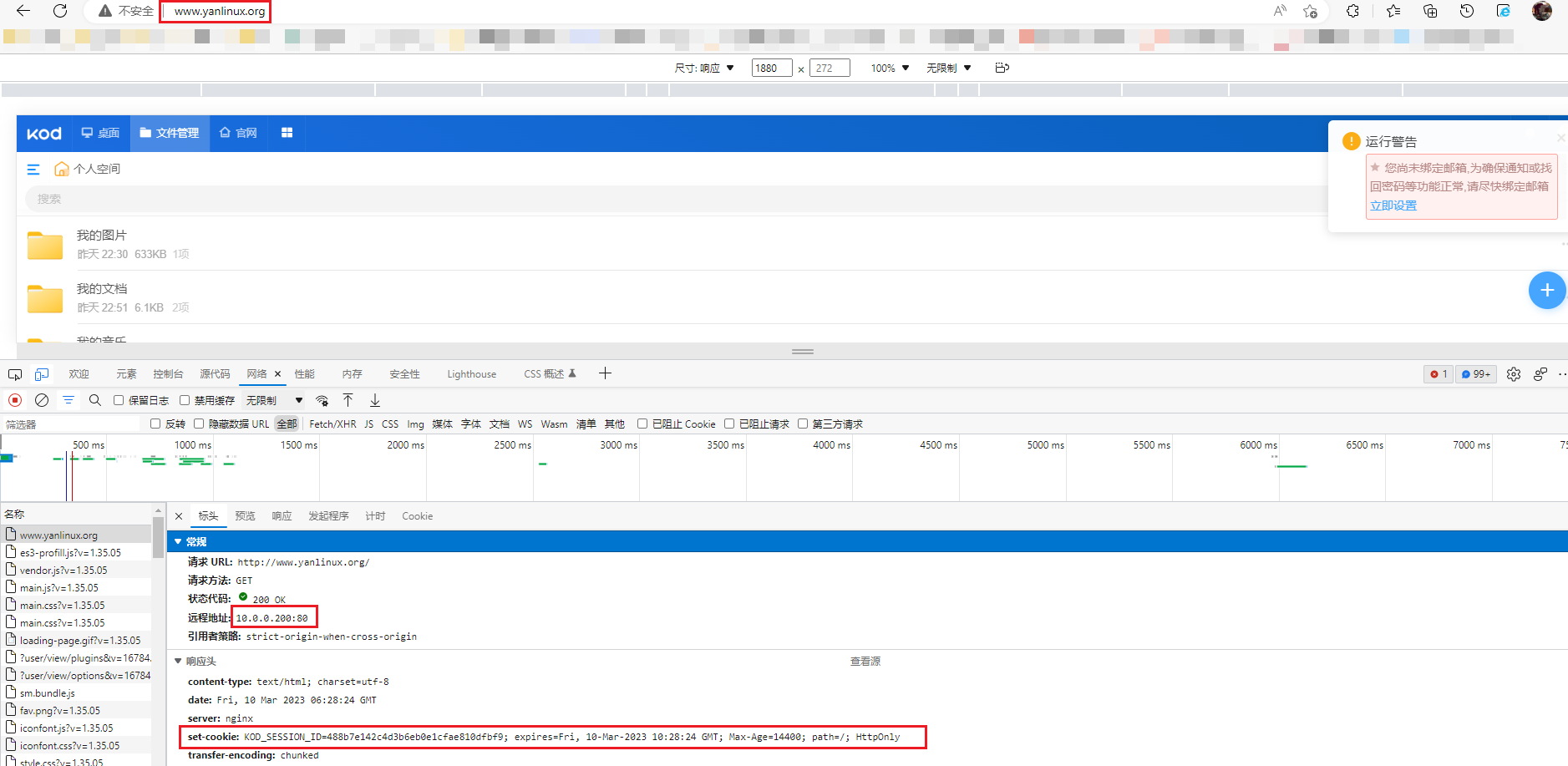

9 Architecture complete: testing access to the services

[root@internet ~]$ curl -I www.yanlinux.org

HTTP/1.1 200 OK

server: nginx

date: Fri, 10 Mar 2023 06:36:29 GMT

content-type: text/html; charset=utf-8

set-cookie: KOD_SESSION_ID=aae53db9278d6386198b98a7a0441608; expires=Fri, 10-Mar-2023 10:36:29 GMT; Max-Age=14400; path=/; HttpOnly

set-cookie: CSRF_TOKEN=FGJc4urT5PVxmrWT; expires=Fri, 17-Mar-2023 06:36:29 GMT; Max-Age=604800; path=/

[root@internet ~]$ curl -I www.yanlinux.edu

HTTP/1.1 200

server: nginx/1.22.1

date: Fri, 10 Mar 2023 06:38:27 GMT

content-type: text/html;charset=UTF-8

set-cookie: csrf_token=c871c9a8e1e34c38a7773ad96cea0f09; Path=/
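The two curl calls above depend on DNS resolving both names. The ACL routing itself can also be exercised without DNS by sending requests straight to the VIP with an explicit Host header, which is the header the `hdr_dom(host)` ACLs inspect. The sketch below assumes the VIP 10.0.0.200 from the haproxy bind line. `probe_vhost` and the overridable `CURL` variable are hypothetical conveniences introduced here so the function can be dry-run.

```shell
# probe_vhost VIP HOST: request http://VIP/ with "Host: HOST" and print only
# the HTTP status code. Set CURL to another command to stub out curl.
probe_vhost() {
    local vip="$1" host="$2"
    "${CURL:-curl}" -s -o /dev/null -w '%{http_code}\n' \
        -H "Host: ${host}" "http://${vip}/"
}
```

Calling `probe_vhost 10.0.0.200 www.yanlinux.org` and `probe_vhost 10.0.0.200 www.yanlinux.edu` should each print a status code from the matching backend. A Host value that matches neither ACL has no backend in this configuration, so an error status would be expected there.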

View in a web browser
