h.264直接预测

直接预测是B帧上一种独有的预测方式,其中直接预测又分为两种模式: 时域直接模式(temporal direct)、空域直接模式(spatial direct)。

在分析这两种模式之前,有一个前提概念需要了解:共同位置4x4子宏块分割块(co-located 4x4 sub-macroblock partitions),下面简称为co-located。

共同位置4x4子宏块分割块,故名思义,该块大小为4x4。co-located的主要功能是得到出该4x4块的运动向量以及参考帧,以供后面的直接预测做后续处理,如果当前宏块进行的是直接预测,无论时域或空域,都会用到该co-located,因此需要先求出该4x4co-located的具体位置。

co-located的定位

1. 定位co-located所在图像

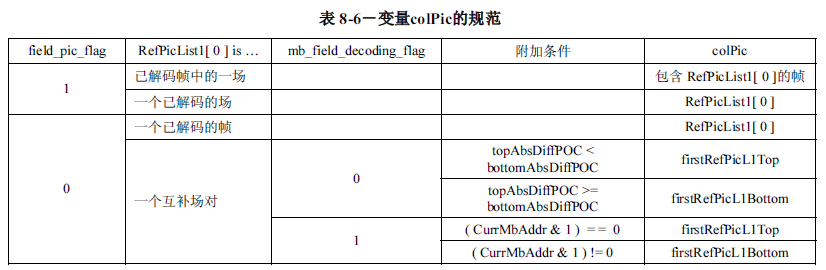

要求co-located的位置,首先需要知道co-located所在的图像colPic的位置,colPic可以确定在当前图像的第一个后向参考图像RefPicList1[ 0 ]内,但是colPic可以为帧或场,这取决于当期图像与参考图像,是以帧图像进行编码,还是以场图像进行编码,或者以互补场对(宏块级帧场自适应)方式进行编码。

- 第一项:field_pic_flag表示当前图像是以帧还是场方式进行编码

- 第二项:代表RefPicList1[0]的编码方式是帧、场、互补场对(两个场)

- 第三项:mb_field_decoding_flag代表当前宏块对是以帧、场方式进行编码(前提条件是当前图像是以帧方式进行编码,也只有当前图像选择了帧编码,才能选择宏块对(宏块帧场自适应)的编码方式)

- 第四项:

$\begin{align*}

topAbsDiffPOC &= |DiffPicOrderCnt( firstRefPicL1Top, CurrPic )|\\

bottomAbsDiffPOC &= |DiffPicOrderCnt( firstRefPicL1Bottom, CurrPic )|

\end{align*}$添加这些条件是为了在采用宏块级帧场自适编码方式时,应选择距离当前图像最近的顶场或底场作为col_Pic

- 第五项:col_Pic的取值,firstRefPicL1Top 和firstRefPicL1Bottom 分别为RefPicList1[ 0 ]中的顶场和底场

注:其实第二项RefPicList1[ 0 ]是一个被动选项,因为它是根据当前图像的编码方式是帧、场或宏块帧场自适应决定的,如果当前图像是场,那么RefPicList1[ 0 ]就有可能是已解码的场或者以解码帧中的一场;如果当前图像是帧(非MBAFF),那么RefPicList1[ 0 ]就是帧;如果当前宏块是帧并且为MBAFF,那么RefPicList1[ 0 ]就是一个互补场对(两个场可以组成一个帧)

2. 得到co-located在colPic内的位置

实际上,如果得到了colPic,就可以通过当前宏块的16个4x4块的绝对位置(以4x4块为单位相对于图像左上角(0,0)的绝对位置( opic_block_x,opic_block_y)),得到co-located在colPic的位置。

- 如果当前图像为帧,colPic为场,则co-located为(opic_block_x,opic_block_y>>1)

- 如果当前图像为场,colPic为帧,则co-located为(opic_block_x,opic_block_y<<1)

- 如果当前图像与colPic同为帧或场,则co-located为(opic_block_x,opic_block_y)

(注:像在jm18.6中,实际计算时,不会出现当前图像为场,colPic为帧的情况,因为场的参考图像都会被分为场后加入参考图像队列,所以最终计算时也会先根据当前场的是顶场或底场挑出参考帧中对应的场作为colPic )

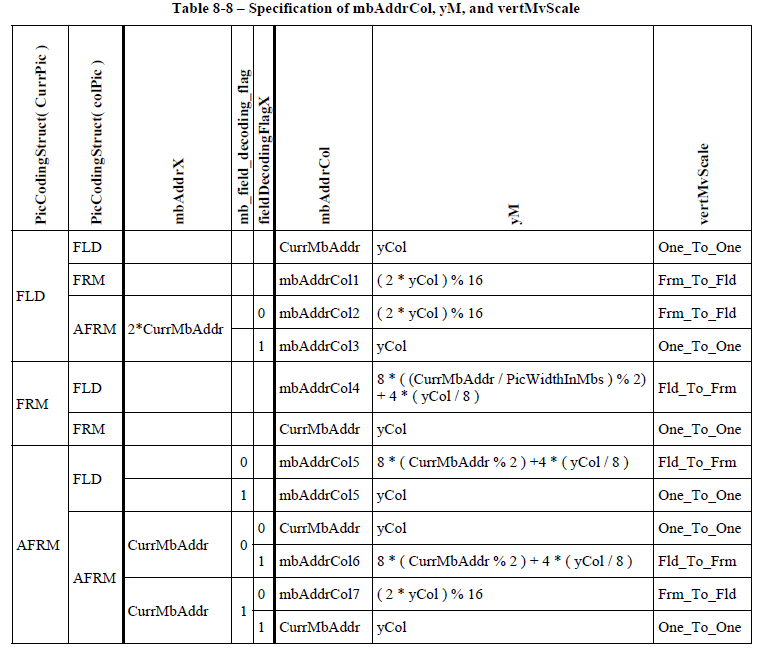

在标准中,先定位co-located所在宏块mbAddrCol,然后再定位co-located在该宏块内的地址(xcol,yM)。由于采用了不同于上述的4x4块定位方法,所以计算方式略复杂,但是得到的结果是一样的,最终会定位到同一个co-located

$\begin{align*}

$\begin{align*}

mbAddrCol1 &= 2 \times PicWidthInMbs \times( CurrMbAddr / PicWidthInMbs ) +( CurrMbAddr \ \% \ PicWidthInMbs ) + PicWidthInMbs \times ( yCol / 8 ) \\

mbAddrCol2 &= 2 \times CurrMbAddr + ( yCol / 8 ) \\

mbAddrCol3 &= 2 \times CurrMbAddr + bottom\_field\_flag \\

mbAddrCol4 &= PicWidthInMbs \times( CurrMbAddr / ( 2 \times PicWidthInMbs ) ) +( CurrMbAddr\ \% \ PicWidthInMbs ) \\

mbAddrCol5 &= CurrMbAddr / 2 \\

mbAddrCol6 &= 2 \times ( CurrMbAddr / 2 ) + ( ( topAbsDiffPOC < bottomAbsDiffPOC ) ? 0 : 1 ) \\

mbAddrCol7 &= 2 \times( CurrMbAddr / 2 ) + ( yCol / 8 )

\end{align*}$

(FAQ:为何表中没有FRM与AFRM配对?因为一个sps内只能定义为FRM或者AFRM(MBAFF),在一个序列内两者是不可能共存的;为何表中可以有FRM与FLD共存?因为sps可以定义PAFF(图像帧场自适应))

- 第一项:PicCodingStruct( CurrPic ),当前图像的编码方式

- 第二项:PicCodingStruct( colPic ),colPic的编码方式

- 第三项:mbAddrX,当colPic为AFRM(宏块帧场自适应时),该项是用于定位colPic内地址为mbAddrX的宏块对,并用该宏块判断第五项fieldDecodingFlagX,即该宏块对是用场编码还是帧编码方式

- 第四项:mb_field_decoding_flag,如果当前图像的编码方式是AFRM,该项用于判断当前宏块对是帧编码还是场编码方式

- 第五项:fieldDecodingFlagX,如果colPic的编码方式是AFRM,该项用于判断mbAddrX所在的宏块对是帧编码还是场编码方式

- 第六项:mbAddrCol,co-located所在的宏块在colPic内的地址,(注:涉及到AFRM的图像都会被当做互补场对来处理,即AFRM帧,而不是colPic)

- 第七项:yM,co-located相对于其所在宏块的地址(xCol, yM),单位为像素,即第一个4x4块为(0,0),最后一个为(12,12),(注:当前4x4块相对于其所在宏块的地址为(xCol,yCol))

- 第八项:VertMvScale,表明CurrPic与colPic的帧场对应关系

另外,为了减少计算量,还可以设定direct_8x8_inference_flag等于1,这样会导致8x8块共用一个4x4的co-located的运动向量,共用方式为一个宏块的4个8x8块分别只用该宏块4个角的4x4块作为co-located

获得co-located的运动向量与参考帧

- 如果co-located是帧内预测方式编码,那么将无法获得运动向量与参考帧,mvCol = 0,refIdxCol = -1

- 如果co-located是帧间预测编码方式,并且存在前向参考帧,那么mvCol将是co-located的前向运动向量,refIdxCol是co-located所在的8x8块中的前向参考索引

- 如果co-located是帧间预测编码方式,并且只存在后向参考帧,那么mvCol将是co-located的后向运动向量,refIdxCol是co-located所在的8x8块中的后向参考索引

(注:标准规定参考图像选择的最小单位为8x8块,也就是说8x8块中的4个4x4块共享一个参考图像索引,请参考h.264语法结构分析中的ref_idx_l0以及ref_idx_l1关键字)

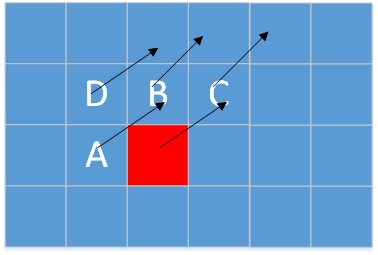

时域直接模式(temporal direct)

该模式是基于下图求出当前4x4块的前后向mvL0与mvL1,以及前向参考帧List0 reference的索引

已知的变量有:

- 后向参考图像List 1 reference (refPicList1[0])及其索引0

- 在计算co-located后得到的mvCol(mvCol需要根据VertMvScale进行调整,乘或除2),以及参考图像refColList0[refIdxCol](即List 0 reference)

- td与tb为图像之间的POC距离,既然List 0 reference、Current B、List 1 reference 已经知道,那么就容易得出td与tb的值

未知的变量有:

- List 0 reference的在当前参考图像列表中的索引

- 前后向运动向量mvL0与mvL1

求解方法:

- List 0 reference的索引,该索引是参考图像列表中图像List 0 reference所在的最小索引值(这部分由于jm18.6中对mbaff采用了独立互补场对参考列表,所以看起来更简单一点,而标准是从refPicList0参考列表开始,然后结合VertMvScale进行推导,看起来比较繁琐,但其实得到的结果是一样的)。

- mvL1 = mvCol/td*(td - tb)

- mvL0 = mvCol - mvL1 (忽略mv方向)

由于这些在标准(8.4.1.2.3)中讲的都非常细致,所以这里简要说明一下而已。

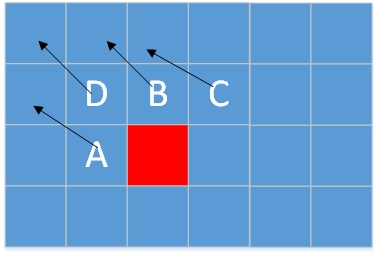

空域直接模式(spatial direct)

空域模式基于一种假设:当前编码宏块与其相邻宏块都向着同一个方向运动,大家有着类似的运动向量与参考帧(如下图)。在这种假设前提上,空域模式主要思想为采用相邻块来对当前宏块的参考索引以及运动向量进行预测。由于标准中8.4.1.3有非常详细的描述,所以在此略过。

但是上述假设也很有可能不成立,有可能当前宏块的相邻块都是运动的,但当前宏块是静止的(如下图)。比如说当前宏块是背景的一部分,而相邻块则是移动着的前景。这时候就需要判断当前宏块是否是运动的,以得到更准确的空域预测,co-located在这里就是用来判断当前宏块是否是运动宏块的。如果co-located的mvCol[0]与mvCol[1]能保证在某个范围之内,则表明当前宏块为静止宏块,那么将把当前宏块的mvL0与mvL1赋值为0,具体在标准8.4.1.2.2中描述得相当详细,不作细述。

jm18.6 mv_direct.c

- /*!

- *************************************************************************************

- * \file mv_direct.c

- *

- * \brief

- * Direct Motion Vector Generation

- *

- * \author

- * Main contributors (see contributors.h for copyright, address and affiliation details)

- * - Alexis Michael Tourapis <alexismt@ieee.org>

- *

- *************************************************************************************

- */

- #include "contributors.h"

- #include <math.h>

- #include <limits.h>

- #include <time.h>

- #include "global.h"

- #include "image.h"

- #include "mv_search.h"

- #include "refbuf.h"

- #include "memalloc.h"

- #include "mb_access.h"

- #include "macroblock.h"

- #include "mc_prediction.h"

- #include "conformance.h"

- #include "mode_decision.h"

- /*!

- ************************************************************************

- * \brief

- * Calculate Temporal Direct Mode Motion Vectors

- ************************************************************************

- */

- void Get_Direct_MV_Temporal (Macroblock *currMB)

- {

- Slice *currSlice = currMB->p_Slice;

- int block_x, block_y, pic_block_x, pic_block_y, opic_block_x, opic_block_y;

- MotionVector *****all_mvs;

- int mv_scale;

- int refList;

- int ref_idx;

- VideoParameters *p_Vid = currMB->p_Vid;

- int list_offset = currMB->list_offset;//如果当前currSlice->mb_aff_frame_flag 且 currMB->mb_field ,list_offset>0

- StorablePicture **list1 = currSlice->listX[LIST_1 + list_offset];

- PicMotionParams colocated;

- //temporal direct mode copy from decoder

- for (block_y = 0; block_y < 4; block_y++)

- {

- pic_block_y = currMB->block_y + block_y;

- opic_block_y = (currMB->opix_y >> 2) + block_y;

- for (block_x = 0; block_x < 4; block_x++)

- {

- pic_block_x = currMB->block_x + block_x;

- opic_block_x = (currMB->pix_x>>2) + block_x;

- all_mvs = currSlice->all_mv;

- if (p_Vid->active_sps->direct_8x8_inference_flag)

- {

- if(currMB->p_Inp->separate_colour_plane_flag && currMB->p_Vid->yuv_format==YUV444)

- colocated = list1[0]->JVmv_info[currMB->p_Slice->colour_plane_id][RSD(opic_block_y)][RSD(opic_block_x)];

- else

- colocated = list1[0]->mv_info[RSD(opic_block_y)][RSD(opic_block_x)];

- if(currSlice->mb_aff_frame_flag && currMB->mb_field && currSlice->listX[LIST_1][0]->coded_frame)

- {//mbaff场宏块

- int iPosBlkY;

- if(currSlice->listX[LIST_1][0]->motion.mb_field[currMB->mbAddrX] )

- //当前宏块是mbaff且为场编码,参考帧宏块为场编码,不过因为此处计算ref_pic用的是frame,即一个场宏块对应两个帧宏块

- //场宏块>>2后*8,可以对比下面帧宏块>>3后再*8

- //>>2代表一个场宏块,

- //*8代表宏块对(两个帧宏块),

- //*4代表选择对应宏块(顶宏块或者底宏块其中之一(field重建回frame时,对于宏块的mv_info,是以宏块对方式组建的?)),

- //&3代表4x4子块

- //另外由于每一个8x8块对应一个参考帧所以最后也有RSD(opic_block_x)

- iPosBlkY = (opic_block_y>>2)*8+4*(currMB->mbAddrX&1)+(opic_block_y&0x03);

- else

- //因为当前是场(mb_field)?而下面参考帧采用的是帧,则代表field->frame,所以*2

- iPosBlkY = RSD(opic_block_y)*2;

- //由于标准规定(8.4.1.2.2最后一段)在colocated中应该在其所在的8x8分割块中选取参考帧,所以需要进行以下步骤

- if(colocated.ref_idx[LIST_0]>=0)//ref_idx>=0说明有前/后向参考帧,但是下面还需要根据当前宏块与colpic进行精确定位(在8x8分割块中选取某块的参考帧)

- colocated.ref_pic[LIST_0] = list1[0]->frame->mv_info[iPosBlkY][RSD(opic_block_x)].ref_pic[LIST_0];

- if(colocated.ref_idx[LIST_1]>=0)

- colocated.ref_pic[LIST_1] = list1[0]->frame->mv_info[iPosBlkY][RSD(opic_block_x)].ref_pic[LIST_1];

- }

- }

- else

- {

- if(currMB->p_Inp->separate_colour_plane_flag && currMB->p_Vid->yuv_format==YUV444)

- colocated = list1[0]->JVmv_info[currMB->p_Slice->colour_plane_id][opic_block_y][opic_block_x];

- else

- colocated = list1[0]->mv_info[opic_block_y][opic_block_x];

- }

- if(currSlice->mb_aff_frame_flag)

- {

- if(!currMB->mb_field && ((currSlice->listX[LIST_1][0]->coded_frame && currSlice->listX[LIST_1][>motion.mb_field[currMB->mbAddrX]) ||

- (!currSlice->listX[LIST_1][0]->coded_frame)))

- {//mbaff帧宏块

- if (iabs(p_Vid->enc_picture->poc - currSlice->listX[LIST_1+4][0]->poc)> iabs(p_Vid->enc_picture->poc -rSlice->listX[LIST_1+2][0]->poc) )

- {

- if ( p_Vid->active_sps->direct_8x8_inference_flag)

- {

- if(currMB->p_Inp->separate_colour_plane_flag && currMB->p_Vid->yuv_format==YUV444)

- colocated = currSlice->listX[LIST_1+2][0]->JVmv_info[currMB->p_Slice->colour_plane_id][RSD(c_block_y)>>1][RSD(opic_block_x)];

- else

- colocated = currSlice->listX[LIST_1+2][0]->mv_info[RSD(opic_block_y)>>1][RSD(opic_block_x)];

- }

- else

- {

- if(currMB->p_Inp->separate_colour_plane_flag && currMB->p_Vid->yuv_format==YUV444)

- colocated = currSlice->listX[LIST_1+2][0]->JVmv_info[currMB->p_Slice->colour_plane_id][(c_block_y)>>1][(opic_block_x)];

- else

- colocated = currSlice->listX[LIST_1+2][0]->mv_info[(opic_block_y)>>1][opic_block_x];

- }

- if(currSlice->listX[LIST_1][0]->coded_frame)

- {//帧宏块,所以下面>>3后再*8,可以对比上面场宏块>>2后再*8

- int iPosBlkY = (RSD(opic_block_y)>>3)*8 + ((RSD(opic_block_y)>>1) & 0x03);

- if(colocated.ref_idx[LIST_0] >=0) // && !colocated.ref_pic[LIST_0])

- colocated.ref_pic[LIST_0] = currSlice->listX[LIST_1+2][0]->frame->mv_info[iPosBlkY][RSD(opic_block_x)].ref_pic[T_0];

- if(colocated.ref_idx[LIST_1] >=0) // && !colocated.ref_pic[LIST_1])

- colocated.ref_pic[LIST_1] = currSlice->listX[LIST_1+2][0]->frame->mv_info[iPosBlkY][RSD(opic_block_x)].ref_pic[T_1];

- }

- }

- else

- {

- if (p_Vid->active_sps->direct_8x8_inference_flag )

- {

- if(currMB->p_Inp->separate_colour_plane_flag && currMB->p_Vid->yuv_format==YUV444)

- colocated = currSlice->listX[LIST_1+4][0]->JVmv_info[currMB->p_Slice->colour_plane_id][RSD(c_block_y)>>1][RSD(opic_block_x)];

- else

- colocated = currSlice->listX[LIST_1+4][0]->mv_info[RSD(opic_block_y)>>1][RSD(opic_block_x)];

- }

- else

- {

- if(currMB->p_Inp->separate_colour_plane_flag && currMB->p_Vid->yuv_format==YUV444)

- colocated = currSlice->listX[LIST_1+4][0]->JVmv_info[currMB->p_Slice->colour_plane_id][(c_block_y)>>1][opic_block_x];

- else

- colocated = currSlice->listX[LIST_1+4][0]->mv_info[(opic_block_y)>>1][opic_block_x];

- }

- if(currSlice->listX[LIST_1][0]->coded_frame)

- {

- int iPosBlkY = (RSD(opic_block_y)>>3)*8 + ((RSD(opic_block_y)>>1) & 0x03)+4;

- if(colocated.ref_idx[LIST_0] >=0) // && !colocated.ref_pic[LIST_0])

- colocated.ref_pic[LIST_0] = currSlice->listX[LIST_1+4][0]->frame->mv_info[iPosBlkY][RSD(opic_block_x)].ref_pic[T_0];

- if(colocated.ref_idx[LIST_1] >=0)// && !colocated.ref_pic[LIST_1])

- colocated.ref_pic[LIST_1] = currSlice->listX[LIST_1+4][0]->frame->mv_info[iPosBlkY][RSD(opic_block_x)].ref_pic[T_1];

- }

- }

- }

- }

- else if(!p_Vid->active_sps->frame_mbs_only_flag && !currSlice->structure && !currSlice->listX[LIST_1][>coded_frame)

- {//图像级帧场自适应帧图像编码(即list1[0]有可能为场编码)

- if (iabs(p_Vid->enc_picture->poc - list1[0]->bottom_field->poc)> iabs(p_Vid->enc_picture->poc -list1[>top_field->poc) )

- {

- colocated = p_Vid->active_sps->direct_8x8_inference_flag ?

- list1[0]->top_field->mv_info[RSD(opic_block_y)>>1][RSD(opic_block_x)] : list1[0]->top_field->mv_info[(c_block_y)>>1][opic_block_x];

- }

- else

- {

- colocated = p_Vid->active_sps->direct_8x8_inference_flag ?

- list1[0]->bottom_field->mv_info[RSD(opic_block_y)>>1][RSD(opic_block_x)] : list1[0]->bottom_field->mv_info[(c_block_y)>>1][opic_block_x];

- }

- }

- else if(!p_Vid->active_sps->frame_mbs_only_flag && currSlice->structure && list1[0]->coded_frame)

- {//场图像编码

- int iPosBlkY;

- int currentmb = 2*(list1[0]->size_x>>4) * (opic_block_y >> 2)+ (opic_block_x>>2)*2 + ((c_block_y>>1) & 0x01);

- if(currSlice->structure!=list1[0]->structure)

- {

- if (currSlice->structure == TOP_FIELD)

- {

- colocated = p_Vid->active_sps->direct_8x8_inference_flag ?

- list1[0]->frame->top_field->mv_info[RSD(opic_block_y)][RSD(opic_block_x)] : list1[>frame->top_field->mv_info[opic_block_y][opic_block_x];

- }

- else

- {

- colocated = p_Vid->active_sps->direct_8x8_inference_flag ?

- list1[0]->frame->bottom_field->mv_info[RSD(opic_block_y)][RSD(opic_block_x)] : list1[>frame->bottom_field->mv_info[opic_block_y][opic_block_x];

- }

- }

- if(!currSlice->listX[LIST_1][0]->frame->mb_aff_frame_flag || !list1[0]->frame->motion.mb_field[currentmb])

- iPosBlkY = 2*(RSD(opic_block_y));

- else

- iPosBlkY = (RSD(opic_block_y)>>2)*8 + (RSD(opic_block_y) & 0x03)+4*(currSlice->structure == BOTTOM_FIELD);

- if(colocated.ref_idx[LIST_0] >=0) // && !colocated.ref_pic[LIST_0])

- colocated.ref_pic[LIST_0] = list1[0]->frame->mv_info[iPosBlkY][RSD(opic_block_x)].ref_pic[LIST_0];

- if(colocated.ref_idx[LIST_1] >=0)// && !colocated.ref_pic[LIST_1])

- colocated.ref_pic[LIST_1] = list1[0]->frame->mv_info[iPosBlkY][RSD(opic_block_x)].ref_pic[LIST_1];

- }

- //最后剩下的是全序列采用帧图像编码(frame_mbs_only_flag = 1),这样的话colocated._pic肯定是存在的,并且不用进行帧场转换,也就是采用原来的就可以,因此不用重新赋值

- //colocated在开头判断direct_8x8_inference_flag的两个分支处即可获得

- refList = ((colocated.ref_idx[LIST_0] == -1 || (p_Vid->view_id && colocated.ref_idx[LIST_0]==list1[0]->ref_pic_na[)? LIST_1 : LIST_0);

- ref_idx = colocated.ref_idx[refList];

- // next P is intra mode

- if (ref_idx == -1 || (p_Vid->view_id && ref_idx==list1[0]->ref_pic_na[refList]))

- {

- all_mvs[LIST_0][0][0][block_y][block_x] = zero_mv;

- all_mvs[LIST_1][0][0][block_y][block_x] = zero_mv;

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_0] = 0;

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_1] = 0;

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 2;

- }

- // next P is skip or inter mode

- else

- {

- int mapped_idx=INVALIDINDEX;

- int iref;

- if (colocated.ref_pic[refList] == NULL)

- {

- printf("invalid index found\n");

- }

- else

- { //得到前向参考帧ref0,即下面的mapped_idx

- //Let refIdxL0Frm be the lowest valued reference index in the current reference picture list RefPicList0 that

- //references the frame or complementary field pair that contains the field refPicCol

- if( (currSlice->mb_aff_frame_flag && ( (currMB->mb_field && colocated.ref_pic[List]->structure==FRAME) || //currSlice :mbaff & mb_field, ref_pic :frame

- (!currMB->mb_field && colocated.ref_pic[refList]->structure!=FRAME))) //currSlice :mb_frame , ref_pic :field

- (!currSlice->mb_aff_frame_flag && ((currSlice->structure==FRAME && colocated.ref_pic[List]->structure!=FRAME)|| //currSlice :frame only , ref_pic :field

- (currSlice->structure!=FRAME && colocated.ref_pic[refList]->structure==FRAME))) //currSlice :field , ref_pic :frame

- {//该判断条件表示currSlice与ref_pic用不同的帧场编码方式

- //! Frame with field co-located

- for (iref = 0; iref < imin(currSlice->num_ref_idx_active[LIST_0], currSlice->listXsize[LIST_0 + list_offset]); f++)

- {

- if (currSlice->listX[LIST_0 + list_offset][iref]->top_field == colocated.ref_pic[refList] ||

- currSlice->listX[LIST_0 + list_offset][iref]->bottom_field == colocated.ref_pic[refList] ||

- currSlice->listX[LIST_0 + list_offset][iref]->frame == colocated.ref_pic[refList] )

- {

- if ((p_Vid->field_picture==1) && (currSlice->listX[LIST_0 + list_offset][iref]->structure != rSlice->structure))

- {//currSlice :field , ref_pic :frame 会进来这里?

- mapped_idx=INVALIDINDEX;

- }

- else

- {

- mapped_idx = iref;

- break;

- }

- }

- else //! invalid index. Default to zero even though this case should not happen

- mapped_idx=INVALIDINDEX;

- }

- }

- else

- {//currSlice 与 ref_pic 采用相同帧场编码方式

- for (iref = 0; iref < imin(currSlice->num_ref_idx_active[LIST_0], currSlice->listXsize[LIST_0 + list_offset]);iref++)

- {

- if(currSlice->listX[LIST_0 + list_offset][iref] == colocated.ref_pic[refList])

- {

- mapped_idx = iref;

- break;

- }

- else //! invalid index. Default to zero even though this case should not happen

- {

- mapped_idx=INVALIDINDEX;

- }

- }

- }

- }

- if (mapped_idx != INVALIDINDEX)

- {

- MotionVector mv = colocated.mv[refList];

- mv_scale = currSlice->mvscale[LIST_0 + list_offset][mapped_idx];

- if((currSlice->mb_aff_frame_flag && !currMB->mb_field && colocated.ref_pic[refList]->structure!=FRAME)

- (!currSlice->mb_aff_frame_flag && currSlice->structure==FRAME && colocated.ref_pic[List]->structure!=FRAME))

- mv.mv_y *= 2;

- else if((currSlice->mb_aff_frame_flag && currMB->mb_field && colocated.ref_pic[List]->structure==FRAME) ||

- (!currSlice->mb_aff_frame_flag && currSlice->structure!=FRAME && colocated.ref_pic[List]->structure==FRAME))

- mv.mv_y /= 2;

- //mvL0,mvL1

- if (mv_scale==9999)

- {

- // forward

- all_mvs[LIST_0][0][0][block_y][block_x] = mv;

- // backward

- all_mvs[LIST_1][0][0][block_y][block_x] = zero_mv;

- }

- else

- {

- //mv_scale = DistScaleFactor (in function compute_colocated)

- // forward

- all_mvs[LIST_0][mapped_idx][0][block_y][block_x].mv_x = (short) ((mv_scale * mv.mv_x + 128) >> 8);

- all_mvs[LIST_0][mapped_idx][0][block_y][block_x].mv_y = (short) ((mv_scale * mv.mv_y + 128) >> 8);

- // backward

- all_mvs[LIST_1][ 0][0][block_y][block_x].mv_x = (short) (((mv_scale - 256) * mv.mv_x + 128) >> 8);

- all_mvs[LIST_1][ 0][0][block_y][block_x].mv_y = (short) (((mv_scale - 256) * mv.mv_y + 128) >> 8);

- }

- //refIdx0,refIdx1

- // Test Level Limits if satisfied.

- if ( out_of_bounds_mvs(p_Vid, &all_mvs[LIST_0][mapped_idx][0][block_y][block_x])|| out_of_bounds_mvs(p_Vid, &all_mvs[T_1][0][0][block_y][block_x]))

- {

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_0] = -1;

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_1] = -1;

- currSlice->direct_pdir[pic_block_y][pic_block_x] = -1;

- }

- else

- {

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_0] = (char) mapped_idx;

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_1] = 0;

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 2;

- }

- }

- else

- {

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_0] = -1;

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_1] = -1;

- currSlice->direct_pdir[pic_block_y][pic_block_x] = -1;

- }

- }

- if (p_Vid->active_pps->weighted_bipred_idc == 1 && currSlice->direct_pdir[pic_block_y][pic_block_x] == 2)

- {

- int weight_sum, i;

- short l0_refX = currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_0];

- short l1_refX = currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_1];

- for (i=0;i< (p_Vid->active_sps->chroma_format_idc == YUV400 ? 1 : 3); i++)

- {

- weight_sum = currSlice->wbp_weight[0][l0_refX][l1_refX][i] + currSlice->wbp_weight[1][l0_refX][l1_refX][i];

- if (weight_sum < -128 || weight_sum > 127)

- {

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_0] = -1;

- currSlice->direct_ref_idx[pic_block_y][pic_block_x][LIST_1] = -1;

- currSlice->direct_pdir [pic_block_y][pic_block_x] = -1;

- break;

- }

- }

- }

- }

- }

- }

- static inline void set_direct_references(const PixelPos *mb, char *l0_rFrame, char *l1_rFrame, PicMotionParams **mv_info)

- {

- if (mb->available)

- {

- char *ref_idx = mv_info[mb->pos_y][mb->pos_x].ref_idx;

- *l0_rFrame = ref_idx[LIST_0];

- *l1_rFrame = ref_idx[LIST_1];

- }

- else

- {

- *l0_rFrame = -1;

- *l1_rFrame = -1;

- }

- }

- static void set_direct_references_mb_field(const PixelPos *mb, char *l0_rFrame, char *l1_rFrame, PicMotionParams **mv_info, Macroblock _data)

- {

- if (mb->available)

- {

- char *ref_idx = mv_info[mb->pos_y][mb->pos_x].ref_idx;

- if (mb_data[mb->mb_addr].mb_field)

- {

- *l0_rFrame = ref_idx[LIST_0];

- *l1_rFrame = ref_idx[LIST_1];

- }

- else

- {

- *l0_rFrame = (ref_idx[LIST_0] < 0) ? ref_idx[LIST_0] : ref_idx[LIST_0] * 2;

- *l1_rFrame = (ref_idx[LIST_1] < 0) ? ref_idx[LIST_1] : ref_idx[LIST_1] * 2;

- }

- }

- else

- {

- *l0_rFrame = -1;

- *l1_rFrame = -1;

- }

- }

- static void set_direct_references_mb_frame(const PixelPos *mb, char *l0_rFrame, char *l1_rFrame, PicMotionParams **mv_info, Macroblock _data)

- {

- if (mb->available)

- {

- char *ref_idx = mv_info[mb->pos_y][mb->pos_x].ref_idx;

- if (mb_data[mb->mb_addr].mb_field)

- {

- *l0_rFrame = (ref_idx[LIST_0] >> 1);

- *l1_rFrame = (ref_idx[LIST_1] >> 1);

- }

- else

- {

- *l0_rFrame = ref_idx[LIST_0];

- *l1_rFrame = ref_idx[LIST_1];

- }

- }

- else

- {

- *l0_rFrame = -1;

- *l1_rFrame = -1;

- }

- }

- static void test_valid_direct(Slice *currSlice, seq_parameter_set_rbsp_t *active_sps, char *direct_ref_idx, short l0_refX, short refX, int pic_block_y, int pic_block_x)

- {

- int weight_sum, i;

- Boolean invalid_wp = FALSE;

- for (i=0;i< (active_sps->chroma_format_idc == YUV400 ? 1 : 3); i++)

- {

- weight_sum = currSlice->wbp_weight[0][l0_refX][l1_refX][i] + currSlice->wbp_weight[1][l0_refX][l1_refX][i];

- if (weight_sum < -128 || weight_sum > 127)

- {

- invalid_wp = TRUE;

- break;

- }

- }

- if (invalid_wp == FALSE)

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 2;

- else

- {

- direct_ref_idx[LIST_0] = -1;

- direct_ref_idx[LIST_1] = -1;

- currSlice->direct_pdir[pic_block_y][pic_block_x] = -1;

- }

- }

- /*!

- *************************************************************************************

- * \brief

- * Temporary function for colocated info when direct_inference is enabled.

- *

- *************************************************************************************

- */

- int get_colocated_info(Macroblock *currMB, StorablePicture *list1, int i, int j)

- {

- if (list1->is_long_term)

- return 1;

- else

- {

- Slice *currSlice = currMB->p_Slice;

- VideoParameters *p_Vid = currMB->p_Vid;

- if( (currSlice->mb_aff_frame_flag) ||

- (!p_Vid->active_sps->frame_mbs_only_flag && ((!currSlice->structure && !list1->coded_frame) || (rSlice->structure!=list1->structure && list1->coded_frame))))

- {

- int jj = RSD(j);

- int ii = RSD(i);

- int jdiv = (jj>>1);

- int moving;

- PicMotionParams *fs = &list1->mv_info[jj][ii];

- if(currSlice->structure && currSlice->structure!=list1->structure && list1->coded_frame)

- {

- if(currSlice->structure == TOP_FIELD)

- fs = list1->top_field->mv_info[jj] + ii;

- else

- fs = list1->bottom_field->mv_info[jj] + ii;

- }

- else

- {

- if( (currSlice->mb_aff_frame_flag && ((!currMB->mb_field && list1->motion.mb_field[currMB->mbAddrX])

- (!currMB->mb_field && !list1->coded_frame)))

- || (!currSlice->mb_aff_frame_flag))

- {

- if (iabs(p_Vid->enc_picture->poc - list1->bottom_field->poc)> iabs(p_Vid->enc_picture->poc -t1->top_field->poc) )

- {

- fs = list1->top_field->mv_info[jdiv] + ii;

- }

- else

- {

- fs = list1->bottom_field->mv_info[jdiv] + ii;

- }

- }

- }

- moving = !((((fs->ref_idx[LIST_0] == 0)

- && (iabs(fs->mv[LIST_0].mv_x)>>1 == 0)

- && (iabs(fs->mv[LIST_0].mv_y)>>1 == 0)))

- || ((fs->ref_idx[LIST_0] == -1)

- && (fs->ref_idx[LIST_1] == 0)

- && (iabs(fs->mv[LIST_1].mv_x)>>1 == 0)

- && (iabs(fs->mv[LIST_1].mv_y)>>1 == 0)));

- return moving;

- }

- else

- {

- PicMotionParams *fs = &list1->mv_info[RSD(j)][RSD(i)];

- int moving;

- if(currMB->p_Vid->yuv_format == YUV444 && !currSlice->P444_joined)

- fs = &list1->JVmv_info[(int)(p_Vid->colour_plane_id)][RSD(j)][RSD(i)];

- moving= !((((fs->ref_idx[LIST_0] == 0)

- && (iabs(fs->mv[LIST_0].mv_x)>>1 == 0)

- && (iabs(fs->mv[LIST_0].mv_y)>>1 == 0)))

- || ((fs->ref_idx[LIST_0] == -1)

- && (fs->ref_idx[LIST_1] == 0)

- && (iabs(fs->mv[LIST_1].mv_x)>>1 == 0)

- && (iabs(fs->mv[LIST_1].mv_y)>>1 == 0)));

- return moving;

- }

- }

- }

- /*!

- *************************************************************************************

- * \brief

- * Colocated info <= direct_inference is disabled.

- *************************************************************************************

- */

- int get_colocated_info_4x4(Macroblock *currMB, StorablePicture *list1, int i, int j)

- {

- if (list1->is_long_term)

- return 1;

- else

- {

- PicMotionParams *fs = &list1->mv_info[j][i];

- int moving = !((((fs->ref_idx[LIST_0] == 0)

- && (iabs(fs->mv[LIST_0].mv_x)>>1 == 0)

- && (iabs(fs->mv[LIST_0].mv_y)>>1 == 0)))

- || ((fs->ref_idx[LIST_0] == -1)

- && (fs->ref_idx[LIST_1] == 0)

- && (iabs(fs->mv[LIST_1].mv_x)>>1 == 0)

- && (iabs(fs->mv[LIST_1].mv_y)>>1 == 0)));

- return moving;

- }

- }

- /*!

- ************************************************************************

- * \brief

- * Calculate Spatial Direct Mode Motion Vectors

- ************************************************************************

- */

- void Get_Direct_MV_Spatial_Normal (Macroblock *currMB)

- {

- Slice *currSlice = currMB->p_Slice;

- VideoParameters *p_Vid = currMB->p_Vid;

- PicMotionParams **mv_info = p_Vid->enc_picture->mv_info;

- char l0_refA, l0_refB, l0_refC;

- char l1_refA, l1_refB, l1_refC;

- char l0_refX,l1_refX;

- MotionVector pmvfw = zero_mv, pmvbw = zero_mv;

- int block_x, block_y, pic_block_x, pic_block_y, opic_block_x, opic_block_y;

- MotionVector *****all_mvs;

- char *direct_ref_idx;

- StorablePicture **list1 = currSlice->listX[LIST_1];

- PixelPos mb[4];

- get_neighbors(currMB, mb, 0, 0, 16);

- set_direct_references(&mb[0], &l0_refA, &l1_refA, mv_info);

- set_direct_references(&mb[1], &l0_refB, &l1_refB, mv_info);

- set_direct_references(&mb[2], &l0_refC, &l1_refC, mv_info);

- l0_refX = (char) imin(imin((unsigned char) l0_refA, (unsigned char) l0_refB), (unsigned char) l0_refC);

- l1_refX = (char) imin(imin((unsigned char) l1_refA, (unsigned char) l1_refB), (unsigned char) l1_refC);

- if (l0_refX >= 0)

- currMB->GetMVPredictor (currMB, mb, &pmvfw, l0_refX, mv_info, LIST_0, 0, 0, 16, 16);

- if (l1_refX >= 0)

- currMB->GetMVPredictor (currMB, mb, &pmvbw, l1_refX, mv_info, LIST_1, 0, 0, 16, 16);

- if (l0_refX == -1 && l1_refX == -1)

- {

- for (block_y=0; block_y<4; block_y++)

- {

- pic_block_y = currMB->block_y + block_y;

- for (block_x=0; block_x<4; block_x++)

- {

- pic_block_x = currMB->block_x + block_x;

- direct_ref_idx = currSlice->direct_ref_idx[pic_block_y][pic_block_x];

- currSlice->all_mv[LIST_0][0][0][block_y][block_x] = zero_mv;

- currSlice->all_mv[LIST_1][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_0] = direct_ref_idx[LIST_1] = 0;

- if (p_Vid->active_pps->weighted_bipred_idc == 1)

- test_valid_direct(currSlice, currSlice->active_sps, direct_ref_idx, 0, 0, pic_block_y, pic_block_x);

- else

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 2;

- }

- }

- }

- else if (l0_refX == 0 || l1_refX == 0)

- {

- int (*get_colocated)(Macroblock *currMB, StorablePicture *list1, int i, int j) =

- p_Vid->active_sps->direct_8x8_inference_flag ? get_colocated_info : get_colocated_info_4x4;

- int is_moving_block;

- for (block_y = 0; block_y < 4; block_y++)

- {

- pic_block_y = currMB->block_y + block_y;

- opic_block_y = (currMB->opix_y >> 2) + block_y;

- for (block_x=0; block_x<4; block_x++)

- {

- pic_block_x = currMB->block_x + block_x;

- direct_ref_idx = currSlice->direct_ref_idx[pic_block_y][pic_block_x];

- opic_block_x = (currMB->pix_x >> 2) + block_x;

- all_mvs = currSlice->all_mv;

- is_moving_block = (get_colocated(currMB, list1[0], opic_block_x, opic_block_y) == 0);

- if (l0_refX < 0)

- {

- all_mvs[LIST_0][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_0] = -1;

- }

- else if ((l0_refX == 0) && is_moving_block)

- {

- all_mvs[LIST_0][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_0] = 0;

- }

- else

- {

- all_mvs[LIST_0][(short) l0_refX][0][block_y][block_x] = pmvfw;

- direct_ref_idx[LIST_0] = (char)l0_refX;

- }

- if (l1_refX < 0)

- {

- all_mvs[LIST_1][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_1] = -1;

- }

- else if((l1_refX == 0) && is_moving_block)

- {

- all_mvs[LIST_1][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_1] = 0;

- }

- else

- {

- all_mvs[LIST_1][(short) l1_refX][0][block_y][block_x] = pmvbw;

- direct_ref_idx[LIST_1] = (char)l1_refX;

- }

- if (direct_ref_idx[LIST_1] == -1)

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 0;

- else if (direct_ref_idx[LIST_0] == -1)

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 1;

- else if (p_Vid->active_pps->weighted_bipred_idc == 1)

- test_valid_direct(currSlice, currSlice->active_sps, direct_ref_idx, l0_refX, l1_refX, pic_block_y, pic_block_x);

- else

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 2;

- }

- }

- }

- else

- {

- for (block_y=0; block_y<4; block_y++)

- {

- pic_block_y = currMB->block_y + block_y;

- for (block_x=0; block_x<4; block_x++)

- {

- pic_block_x = currMB->block_x + block_x;

- direct_ref_idx = currSlice->direct_ref_idx[pic_block_y][pic_block_x];

- all_mvs = currSlice->all_mv;

- if (l0_refX > 0)

- {

- all_mvs[LIST_0][(short) l0_refX][0][block_y][block_x] = pmvfw;

- direct_ref_idx[LIST_0]= (char)l0_refX;

- }

- else

- {

- all_mvs[LIST_0][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_0]=-1;

- }

- if (l1_refX > 0)

- {

- all_mvs[LIST_1][(short) l1_refX][0][block_y][block_x] = pmvbw;

- direct_ref_idx[LIST_1] = (char)l1_refX;

- }

- else

- {

- all_mvs[LIST_1][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_1] = -1;

- }

- if (direct_ref_idx[LIST_1] == -1)

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 0;

- else if (direct_ref_idx[LIST_0] == -1)

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 1;

- else if (p_Vid->active_pps->weighted_bipred_idc == 1)

- test_valid_direct(currSlice, currSlice->active_sps, direct_ref_idx, l0_refX, l1_refX, pic_block_y, pic_block_x);

- else

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 2;

- }

- }

- }

- }

- /*!

- ************************************************************************

- * \brief

- * Calculate Spatial Direct Mode Motion Vectors

- ************************************************************************

- */

- void Get_Direct_MV_Spatial_MBAFF (Macroblock *currMB)

- {

- char l0_refA, l0_refB, l0_refC;

- char l1_refA, l1_refB, l1_refC;

- char l0_refX,l1_refX;

- MotionVector pmvfw = zero_mv, pmvbw = zero_mv;

- int block_x, block_y, pic_block_x, pic_block_y, opic_block_x, opic_block_y;

- MotionVector *****all_mvs;

- char *direct_ref_idx;

- int is_moving_block;

- Slice *currSlice = currMB->p_Slice;

- VideoParameters *p_Vid = currMB->p_Vid;

- PicMotionParams **mv_info = p_Vid->enc_picture->mv_info;

- StorablePicture **list1 = currSlice->listX[LIST_1 + currMB->list_offset];

- int (*get_colocated)(Macroblock *currMB, StorablePicture *list1, int i, int j) =

- p_Vid->active_sps->direct_8x8_inference_flag ? get_colocated_info : get_colocated_info_4x4;

- PixelPos mb[4];

- get_neighbors(currMB, mb, 0, 0, 16);

- if (currMB->mb_field)

- {

- set_direct_references_mb_field(&mb[0], &l0_refA, &l1_refA, mv_info, p_Vid->mb_data);

- set_direct_references_mb_field(&mb[1], &l0_refB, &l1_refB, mv_info, p_Vid->mb_data);

- set_direct_references_mb_field(&mb[2], &l0_refC, &l1_refC, mv_info, p_Vid->mb_data);

- }

- else

- {

- set_direct_references_mb_frame(&mb[0], &l0_refA, &l1_refA, mv_info, p_Vid->mb_data);

- set_direct_references_mb_frame(&mb[1], &l0_refB, &l1_refB, mv_info, p_Vid->mb_data);

- set_direct_references_mb_frame(&mb[2], &l0_refC, &l1_refC, mv_info, p_Vid->mb_data);

- }

- l0_refX = (char) imin(imin((unsigned char) l0_refA, (unsigned char) l0_refB), (unsigned char) l0_refC);

- l1_refX = (char) imin(imin((unsigned char) l1_refA, (unsigned char) l1_refB), (unsigned char) l1_refC);

- if (l0_refX >=0)

- currMB->GetMVPredictor (currMB, mb, &pmvfw, l0_refX, mv_info, LIST_0, 0, 0, 16, 16);

- if (l1_refX >=0)

- currMB->GetMVPredictor (currMB, mb, &pmvbw, l1_refX, mv_info, LIST_1, 0, 0, 16, 16);

- for (block_y=0; block_y<4; block_y++)

- {

- pic_block_y = currMB->block_y + block_y;

- opic_block_y = (currMB->opix_y >> 2) + block_y;

- for (block_x=0; block_x<4; block_x++)

- {

- pic_block_x = currMB->block_x + block_x;

- direct_ref_idx = currSlice->direct_ref_idx[pic_block_y][pic_block_x];

- opic_block_x = (currMB->pix_x >> 2) + block_x;

- is_moving_block = (get_colocated(currMB, list1[0], opic_block_x, opic_block_y) == 0);

- all_mvs = currSlice->all_mv;

- if (l0_refX >=0)

- {

- if (!l0_refX && is_moving_block)

- {

- all_mvs[LIST_0][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_0] = 0;

- }

- else

- {

- all_mvs[LIST_0][(short) l0_refX][0][block_y][block_x] = pmvfw;

- direct_ref_idx[LIST_0] = (char)l0_refX;

- }

- }

- else

- {

- all_mvs[LIST_0][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_0] = -1;

- }

- if (l1_refX >=0)

- {

- if(l1_refX==0 && is_moving_block)

- {

- all_mvs[LIST_1][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_1] = (char)l1_refX;

- }

- else

- {

- all_mvs[LIST_1][(short) l1_refX][0][block_y][block_x] = pmvbw;

- direct_ref_idx[LIST_1] = (char)l1_refX;

- }

- }

- else

- {

- all_mvs[LIST_1][0][0][block_y][block_x] = zero_mv;

- direct_ref_idx[LIST_1] = -1;

- }

- // Test Level Limits if satisfied.

- // Test Level Limits if satisfied.

- if ((out_of_bounds_mvs(p_Vid, &all_mvs[LIST_0][l0_refX < 0? 0 : l0_refX][0][block_y][block_x])

- || out_of_bounds_mvs(p_Vid, &all_mvs[LIST_1][l1_refX < 0? 0 : l1_refX][0][block_y][block_x])))

- {

- direct_ref_idx[LIST_0] = -1;

- direct_ref_idx[LIST_1] = -1;

- currSlice->direct_pdir [pic_block_y][pic_block_x] = -1;

- }

- else

- {

- if (l0_refX < 0 && l1_refX < 0)

- {

- direct_ref_idx[LIST_0] = direct_ref_idx[LIST_1] = 0;

- l0_refX = 0;

- l1_refX = 0;

- }

- if (direct_ref_idx[LIST_1] == -1)

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 0;

- else if (direct_ref_idx[LIST_0] == -1)

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 1;

- else if (p_Vid->active_pps->weighted_bipred_idc == 1)

- test_valid_direct(currSlice, currSlice->active_sps, direct_ref_idx, l0_refX, l1_refX, pic_block_y, pic_block_x);

- else

- currSlice->direct_pdir[pic_block_y][pic_block_x] = 2;

- }

- }

- }

- }

h.264直接预测的更多相关文章

- h.264加权预测

帧间运动是基于视频亮度(luma)不发生改变的一个假设,而在视频序列中经常能遇到亮度变化的场景,比如淡入淡出.镜头光圈调整.整体或局部光源改变等,在这些场景中,简单帧间运动补偿的效果可想而知(实际编码 ...

- h.264宏块与子宏块类型

宏块类型mb_type 宏块类型表示的是宏块不同的分割和编码方式,在h.264的语法结构中,宏块类型在宏块层(macroblock_layer)中用mb_type表示(请参考h.264语法结构分析中的 ...

- h.264语法结构分析

NAL Unit Stream Network Abstraction Layer,简称NAL. h.264把原始的yuv文件编码成码流文件,生成的码流文件就是NAL单元流(NAL unit Stre ...

- H.264 White Paper学习笔记(二)帧内预测

为什么要有帧内预测?因为一般来说,对于一幅图像,相邻的两个像素的亮度和色度值之间经常是比较接近的,也就是颜色是逐渐变化的,不会一下子突变成完全不一样的颜色.而进行视频编码,目的就是利用这个相关性,来进 ...

- 【HEVC帧间预测论文】P1.2 An Efficient Inter Mode Decision Approach for H.264 Video Codin

参考:An Efficient Inter Mode Decision Approach for H.264 Video Coding <HEVC标准介绍.HEVC帧间预测论文笔记>系列博 ...

- H.264学习笔记3——帧间预测

帧间预测主要包括运动估计(运动搜索方法.运动估计准则.亚像素插值和运动矢量估计)和运动补偿. 对于H.264,是对16x16的亮度块和8x8的色度块进行帧间预测编码. A.树状结构分块 H.264的宏 ...

- (转载)H.264码流的RTP封包说明

H.264的NALU,RTP封包说明(转自牛人) 2010-06-30 16:28 H.264 RTP payload 格式 H.264 视频 RTP 负载格式 1. 网络抽象层单元类型 (NALU) ...

- H.264的优势和主要特点

H.264,同时也是MPEG-4第十部分,是由ITU-T视频编码专家组(VCEG)和ISO/IEC动态图像专家组(MPEG)联合组成的联合视频组(JVT,Joint Video Team)提出的高度压 ...

- x264 - 高品质 H.264 编码器

转自:http://www.5i01.cn/topicdetail.php?f=510&t=3735840&r=18&last=48592660 H.264 / MPEG-4 ...

随机推荐

- Hadoop平台提供离线数据和Storm平台提供实时数据流

1.准备工作 2.一个Storm集群的基本组件 3.Topologies 4.Stream 5.数据模型(Data Model) 6.一个简单的Topology 7.流分组策略(Stream grou ...

- Qt 学习之路:模型-视图高级技术

PathView PathView是 QtQuick 中最强大的视图,同时也是最复杂的.PathView允许创建一种更灵活的视图.在这种视图中,数据项并不是方方正正,而是可以沿着任意路径布局.沿着同一 ...

- 转载:JAVA 正则表达式 (超详细)

在Sun的JavaJDK 1.40版本中,Java自带了支持正则表达式的包,本文就抛砖引玉地介绍了如何使用Java.util.regex包. 可粗略估计一下,除了偶尔用Linux的外,其他Linu x ...

- Vim键盘图与命令图解

- iBatis 的修改一个实体

Student.xml <update id="updateStudent" parameterClass="Student" > UPDATE S ...

- java对象与xml相互转换 ---- xstream

XStream是一个Java对象和XML相互转换的工具,很好很强大.提供了所有的基础类型.数组.集合等类型直接转换的支持. XStream中的核心类就是XStream类,一般来说,熟悉这个类基本就够用 ...

- Echarts使用随笔(2)-Echarts中mapType and data

本文出处:http://blog.csdn.net/chenxiaodan_danny/article/details/39081071 series : [ { ...

- asp.net mvc生命周期学习

ASP.NET MVC是一个扩展性非常强的框架,探究其生命周期对用Mock框架来模拟某些东西,达到单元测试效果,和开发扩展我们的程序是很好的. 生命周期1:创建routetable.把URL映射到ha ...

- 在模型中获取网络数据,刷新tableView

model .h #import <Foundation/Foundation.h> #import "AFHTTPRequestOperationManager.h" ...

- C# 枚举

一.在学习枚举之前,首先来听听枚举的优点. 1.枚举能够使代码更加清晰,它允许使用描述性的名称表示整数值. 2.枚举使代码更易于维护,有助于确保给变量指定合法的.期望的值. 3.枚举使代码更易输入. ...