Calculating Stereo Pairs

Written by Paul Bourke

Introduction

The following discusses computer based generation of stereo pairs as used to create a perception of depth. Such depth perception can be useful in many fields, for example scientific visualisation, entertainment, games, and the appreciation of architectural spaces.

Depth cues

There are a number of cues that the human visual system uses that result in a perception of depth. Some of these are present even in two dimensional images, for example:

There are other cues that are not present in 2D images, they are:

While binocular disparity is considered the dominant depth cue in most people, the other cues can have a strong detrimental effect if they are presented incorrectly. To render a stereo pair one needs to create two images, one for each eye, in such a way that when independently viewed they present an acceptable image to the visual cortex, which fuses the images and extracts the depth information as it does in normal viewing. If stereo pairs are created with a conflict of depth cues then one of a number of things may occur: one cue may become dominant and it may not be the correct or intended one, the depth perception will be exaggerated or reduced, the image will be uncomfortable to watch, or the stereo pairs may not fuse at all and the viewer will see two separate images.

Stereographics using stereo pairs is only one of the major three dimensional display technologies; others include holographic and lenticular (barrier strip) systems, both of which are autostereoscopic. Stereo pairs create a "virtual" three dimensional image: binocular disparity and convergence cues are correct but accommodation cues are inconsistent because each eye is looking at a flat image. The visual system will tolerate this conflicting accommodation to a certain extent; the classical measure is normally quoted as a maximum separation on the display of 1/30 of the distance of the viewer to the display.

The case where the object is behind the projection plane is illustrated below. The projection for the left eye is on the left and the projection for the right eye is on the right; the distance between the left and right eye projections is called the horizontal parallax. Since the projections are on the same side as the respective eyes, it is called a positive parallax. Note that the maximum positive parallax occurs when the object is at infinity, at which point the horizontal parallax is equal to the interocular distance.

If an object is located in front of the projection plane then the projection for the left eye is on the right and the projection for the right eye is on the left. This is known as negative horizontal parallax. Note that a negative horizontal parallax equal to the interocular distance occurs when the object is half way between the projection plane and the center of the eyes. As the object moves closer to the viewer the negative horizontal parallax increases to infinity.

If an object lies at the projection plane then its projection onto the focal plane is coincident for both the left and right eye, hence zero parallax.
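The three parallax cases above follow from similar triangles. A minimal sketch, assuming an object on the central axis, with the function and parameter names chosen here for illustration only:

```c
/* Signed horizontal parallax on the projection plane, by similar triangles.
 *   eye_sep     interocular distance
 *   screen_dist distance from the eyes to the projection plane
 *   object_dist distance from the eyes to the object (must be > 0)
 * All three are in the same units. The result is positive for objects
 * behind the projection plane, zero for objects on it, and negative
 * for objects in front of it. */
double horizontal_parallax(double eye_sep, double screen_dist, double object_dist)
{
    return eye_sep * (object_dist - screen_dist) / object_dist;
}
```

With this form the parallax tends to the eye separation as the object distance grows, equals minus the eye separation when the object is half way to the projection plane, and grows without bound as the object approaches the eyes, matching the cases described above.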

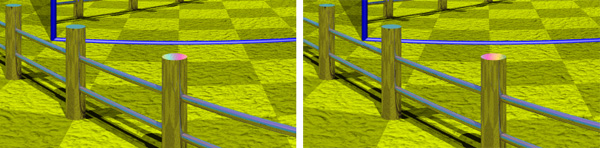

Rendering

There are a couple of methods of setting up a virtual camera and rendering a stereo pair. Many methods are strictly incorrect since they introduce vertical parallax. An example of this is the "toe-in" method; while incorrect, it is still often used because the correct "off-axis" method requires features not always supported by rendering packages. Toe-in is usually identical to methods that involve a rotation of the scene. The toe-in method is still popular for lower cost filming because offset cameras are uncommon and it is easier than using parallel cameras, which requires a subsequent trimming of the stereo pairs.

Toe-in (Incorrect)

In this projection the camera has a fixed and symmetric aperture, and each camera is pointed at a single focal point. Images created using the "toe-in" method will still appear stereoscopic but the vertical parallax it introduces will cause increased discomfort levels. The introduced vertical parallax increases out from the center of the projection plane and becomes more significant as the camera aperture increases.

Off-axis (Correct)

This is the correct way to create stereo pairs. It introduces no vertical parallax and therefore creates the less stressful stereo pairs. Note that it requires a non-symmetric camera frustum; this is supported by some rendering packages, in particular OpenGL.

Objects that lie in front of the projection plane will appear to be in front of the computer screen; objects that are behind the projection plane will appear to be "into" the screen. It is generally easier to view stereo pairs of objects that recede into the screen; to achieve this one would place the focal point closer to the camera than the objects of interest. Note that this doesn't lead to as dramatic an effect as objects that pop out of the screen.

The degree of the stereo effect depends on both the distance of the camera to the projection plane and the separation of the left and right camera. Too large a separation can be hard to resolve and is known as hyperstereo. A good ballpark separation of the cameras is 1/20 of the distance to the projection plane; this is generally the maximum separation for comfortable viewing. Another constraint in general practice is to ensure the negative parallax (projection plane behind the object) does not exceed the eye separation.

A common measure is the parallax angle, defined as theta = 2 atan(DX / (2d)), where DX is the horizontal separation of a projected point between the two eyes and d is the distance of the eye from the projection plane. For easy fusing by the majority of people, the absolute value of theta should not exceed 1.5 degrees for all points in the scene. Note that theta is positive for points behind the screen and negative for points in front of the screen. It is not uncommon to restrict the negative value of theta to some value closer to zero, since negative parallax is more difficult to fuse, especially when objects cut the boundary of the projection plane.

Rule of thumb for rendering software

Getting started with one's first stereo rendering can be a hit and miss affair; the following approach should ensure success. First choose the camera aperture; this should match the "sweet spot" for your viewing setup (typically between 45 and 60 degrees). Next choose a focal length, the distance at which objects in the scene will appear to be at zero parallax. Objects closer than this will appear in front of the screen; objects further than the focal length will appear behind the screen. How close objects can come to the camera depends somewhat on how good the projection system is, but closer than half the focal length should be avoided. Finally, choose the eye separation to be 1/30 of the focal length.
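The rule of thumb reduces to a few lines of arithmetic once the aperture and focal length are chosen. A small sketch; the struct and field names are hypothetical and are not taken from any rendering package:

```c
/* Camera settings derived from the rule of thumb. */
typedef struct {
    double aperture_deg;    /* chosen by the user, typically 45 to 60 */
    double focal_length;    /* distance to zero parallax */
    double eye_sep;         /* 1/30 of the focal length */
    double nearest_object;  /* objects should stay further away than this */
} StereoSetup;

StereoSetup rule_of_thumb(double aperture_deg, double focal_length)
{
    StereoSetup s;
    s.aperture_deg   = aperture_deg;
    s.focal_length   = focal_length;
    s.eye_sep        = focal_length / 30.0;
    s.nearest_object = focal_length / 2.0;
    return s;
}
```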

Creating correct stereo pairs from any raytracer

Written by Paul Bourke

Contribution by Chris Gray: AutoXidMary.mcr, a camera configuration for 3DStudioMax that uses the XidMary Camera.

Introduction

Many rendering packages provide the necessary tools to create correct stereo pairs (eg: OpenGL), and other packages can transform their geometry appropriately (translations and shears) so that stereo pairs can be directly created, but many rendering packages only provide straightforward perspective projections. The following describes a method of creating stereo pairs using such packages. While it is possible to create stereo pairs using PovRay with shear transformations, PovRay will be used to illustrate this technique, which can be applied to any rendering software that supports only perspective projections (this method is often easier than the translate/shear matrix method).

Basic idea

Creating stereo pairs involves rendering the left and right eye views from two positions separated by a chosen eye spacing. The eyes (cameras) look along parallel vectors. The frustum from each eye to each corner of the projection plane is asymmetric. The solution to creating correct stereo pairs using only symmetric frustums is to extend the frustum (horizontally) for each eye (camera), making it symmetric. After rendering, those parts of the image resulting from the extended frustum are trimmed off. The camera geometry is illustrated below; the relevant details can be translated into the camera specification for your favourite rendering package.

All that remains is to calculate the amount of trimming required for a given focal length and eye separation. Since one normally has a target image width, it is usual to render the image larger so that after the trim the image is the desired size. The amount of offset depends on the desired focal length, that is, the distance at which there is no horizontal parallax. The amount by which the images are extended and then trimmed (working not given) is given by the following:
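One form consistent with the symbol definitions in this section and with the value of 46 pixels quoted in Example 1 is the following. It is a reconstruction by similar triangles (an eye separation of $e$ at the zero parallax distance, where the image of width $w$ pixels spans $2 f_o \tan(a/2)$), so treat it as an assumption rather than a quoted formula:

$$
\delta = \frac{w \, e}{2 \, f_o \tan(a/2)}
$$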

Where "w" is the image width, "fo" the focal length (zero parallax), "e" the eye separation, and "a" the intended horizontal aperture. In order to get the final aperture "a" after the image is trimmed, the actual aperture "a'" needs to be modified as follows.
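Widening the image from $w$ to $w + \delta$ pixels about the same camera axis scales the tangent of the half angle by the same ratio, which suggests the following relation (again a reconstruction under that assumption, not a quoted formula):

$$
a' = 2 \arctan\!\left( \frac{(w + \delta) \tan(a/2)}{w} \right)
$$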

Note that not all rendering packages use the horizontal aperture; some use a vertical aperture instead, eg: OpenGL. As expected, as the focal length tends to infinity the amount by which the images are trimmed tends to 0, and similarly as the eye separation tends to 0. To combine the images, delta pixels are trimmed off the left of the left image and delta pixels are trimmed off the right of the right image.

Example 1

Consider a PovRay model scene file for stereo pair rendering where the eye separation is 0.2, the focal length (zero parallax) is 3, the aperture is 60 degrees, and the final image size is to be 800 by 600. In this case delta comes to 46. Example PovRay model files are given for each camera. Note that one could ask PovRay to render just the intended regions of each image by setting a start and end column.

Example 2

Creating stereoscopic images that are easy on the eyes

Written by Paul Bourke

There are some unique considerations when creating effective stereoscopic images, whether as still images, interactive environments, or animations/movies. In the discussion of these given below it will be assumed that correct stereo pairs have been created, that is, perspective projection with parallel cameras resulting in the so-called off-axis projection. It should be noted that not all of these are hard and fast rules and they may be inherent in the type of image content being created.

3D Stereo Rendering

The description of the code presented here will concentrate on the stereo aspects, the example does however create a real, time varying OpenGL object, namely the pulsar model shown on the right. The example also contains examples of mouse and keyboard controls of the camera position. The example is not intended to illustrate more advanced OpenGL techniques and indeed it does not do things particularly efficiently, in particular it should, but does not, use display lists. The example does not use textures as that is a large separate topic and would only confuse the task at hand.

Conventions

The example code conforms to a couple of local conventions. The first is that it can be run in a window or full screen (arcade game) mode. By convention the application runs in a window unless the "-f" command line option is specified. Full screen mode is supported in the most recent versions of the GLUT library. The decision to use full screen mode is made with the following snippet.

    glutCreateWindow("Pulsar model");
    glutReshapeWindow(600,400);
    if (fullscreen)
        glutFullScreen();

It is also useful to be able to run the application in stereo mode or mono mode. The convention is to run in mono unless the command line switch "-s" is supplied. The full usage help information for the example presented here is available by running the application with the "-h" command line option, for example:

    >pulsar -h
    Usage:    pulsar [-h] [-f] [-s] [-c]
              -h   this text
              -f   full screen
              -s   stereo
              -c   show construction lines
    Key Strokes
      arrow keys   rotate left/right/up/down
      left mouse   rotate
    middle mouse   roll
               c   toggle construction lines
               i   translate up
               k   translate down
               j   translate left
               l   translate right
               [   roll clockwise
               ]   roll anti clockwise
               q   quit
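The command line conventions above can be handled with a short loop over argv. The following is a sketch, not the parsing code from the pulsar example itself; the Options struct and parse_options() are names introduced here for illustration.

```c
#include <string.h>

/* Flags corresponding to the documented switches. */
typedef struct {
    int help;          /* -h */
    int fullscreen;    /* -f */
    int stereo;        /* -s */
    int construction;  /* -c */
} Options;

/* Fills *opt from the command line. Every flag defaults to off, so the
 * application runs mono and in a window unless told otherwise.
 * Returns 0 on success or -1 if an unknown option is found. */
int parse_options(int argc, char **argv, Options *opt)
{
    int i;

    memset(opt, 0, sizeof *opt);
    for (i = 1; i < argc; i++) {
        if (strcmp(argv[i], "-h") == 0)
            opt->help = 1;
        else if (strcmp(argv[i], "-f") == 0)
            opt->fullscreen = 1;
        else if (strcmp(argv[i], "-s") == 0)
            opt->stereo = 1;
        else if (strcmp(argv[i], "-c") == 0)
            opt->construction = 1;
        else
            return -1;
    }
    return 0;
}
```

The resulting stereo and fullscreen flags would then drive the display mode selection and the call to glutFullScreen() shown in this section.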

Stereo

The first thing that needs to be done to support stereo is to initialise the GLUT library for stereo operation. If your card/driver combination doesn't support stereo this will fail.

    glutInit(&argc,argv);
    if (!stereo)
        glutInitDisplayMode(GLUT_DOUBLE | GLUT_RGB | GLUT_DEPTH);
    else
        glutInitDisplayMode(GLUT_DOUBLE | GLUT_RGB | GLUT_DEPTH | GLUT_STEREO);

In stereo mode this defines two buffers, namely GL_BACK_LEFT and GL_BACK_RIGHT. The appropriate buffer is selected before operations that would affect it are performed; this is done using the routine glDrawBuffer(). So for example to clear the two buffers:

    glDrawBuffer(GL_BACK_LEFT);
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
    if (stereo) {
        glDrawBuffer(GL_BACK_RIGHT);
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
    }

Note that some cards are optimised to clear both left and right buffers if GL_BACK is cleared, this can be significantly faster. In these cases one clears the buffers as follows.

    glDrawBuffer(GL_BACK);
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

Projection

All that's left now is to render the geometry into the appropriate buffer. There are many ways this can be organised depending on the way the particular application is written, in this example see the Display() handler. Essentially the idea is to select the appropriate buffer and render the scene with the appropriate projection.

Toe-in Method

A common approach is the so called "toe-in" method where the camera for the left and right eye is pointed towards a single focal point and gluPerspective() is used.

    glMatrixMode(GL_PROJECTION);
    glLoadIdentity();
    gluPerspective(camera.aperture,screenwidth/(double)screenheight,0.1,10000.0);

    if (stereo) {

        CROSSPROD(camera.vd,camera.vu,right);
        Normalise(&right);
        right.x *= camera.eyesep / 2.0;
        right.y *= camera.eyesep / 2.0;
        right.z *= camera.eyesep / 2.0;

        glMatrixMode(GL_MODELVIEW);
        glDrawBuffer(GL_BACK_RIGHT);
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
        glLoadIdentity();
        gluLookAt(camera.vp.x + right.x,
                  camera.vp.y + right.y,
                  camera.vp.z + right.z,
                  focus.x,focus.y,focus.z,
                  camera.vu.x,camera.vu.y,camera.vu.z);
        MakeLighting();
        MakeGeometry();

        glMatrixMode(GL_MODELVIEW);
        glDrawBuffer(GL_BACK_LEFT);
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
        glLoadIdentity();
        gluLookAt(camera.vp.x - right.x,
                  camera.vp.y - right.y,
                  camera.vp.z - right.z,
                  focus.x,focus.y,focus.z,
                  camera.vu.x,camera.vu.y,camera.vu.z);
        MakeLighting();
        MakeGeometry();

    } else {

        glMatrixMode(GL_MODELVIEW);
        glDrawBuffer(GL_BACK);
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
        glLoadIdentity();
        gluLookAt(camera.vp.x,
                  camera.vp.y,
                  camera.vp.z,
                  focus.x,focus.y,focus.z,
                  camera.vu.x,camera.vu.y,camera.vu.z);
        MakeLighting();
        MakeGeometry();
    }

    /* glFlush(); This isn't necessary for double buffers */
    glutSwapBuffers();

Correct method

The Toe-in method, while giving workable stereo pairs, is not correct: it introduces vertical parallax which is most noticeable for objects in the outer field of view. The correct method is to use what is sometimes known as the "parallel axis asymmetric frustum perspective projection". In this case the view vectors for each camera remain parallel and a glFrustum() is used to describe the perspective projection.

    /* Misc stuff */
    ratio   = camera.screenwidth / (double)camera.screenheight;
    radians = DTOR * camera.aperture / 2;
    wd2     = near * tan(radians);
    ndfl    = near / camera.focallength;

    if (stereo) {

        /* Derive the two eye positions */
        CROSSPROD(camera.vd,camera.vu,r);
        Normalise(&r);
        r.x *= camera.eyesep / 2.0;
        r.y *= camera.eyesep / 2.0;
        r.z *= camera.eyesep / 2.0;

        /* Right eye */
        glMatrixMode(GL_PROJECTION);
        glLoadIdentity();
        left   = - ratio * wd2 - 0.5 * camera.eyesep * ndfl;
        right  =   ratio * wd2 - 0.5 * camera.eyesep * ndfl;
        top    =   wd2;
        bottom = - wd2;
        glFrustum(left,right,bottom,top,near,far);

        glMatrixMode(GL_MODELVIEW);
        glDrawBuffer(GL_BACK_RIGHT);
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
        glLoadIdentity();
        gluLookAt(camera.vp.x + r.x,camera.vp.y + r.y,camera.vp.z + r.z,
                  camera.vp.x + r.x + camera.vd.x,
                  camera.vp.y + r.y + camera.vd.y,
                  camera.vp.z + r.z + camera.vd.z,
                  camera.vu.x,camera.vu.y,camera.vu.z);
        MakeLighting();
        MakeGeometry();

        /* Left eye */
        glMatrixMode(GL_PROJECTION);
        glLoadIdentity();
        left   = - ratio * wd2 + 0.5 * camera.eyesep * ndfl;
        right  =   ratio * wd2 + 0.5 * camera.eyesep * ndfl;
        top    =   wd2;
        bottom = - wd2;
        glFrustum(left,right,bottom,top,near,far);

        glMatrixMode(GL_MODELVIEW);
        glDrawBuffer(GL_BACK_LEFT);
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
        glLoadIdentity();
        gluLookAt(camera.vp.x - r.x,camera.vp.y - r.y,camera.vp.z - r.z,
                  camera.vp.x - r.x + camera.vd.x,
                  camera.vp.y - r.y + camera.vd.y,
                  camera.vp.z - r.z + camera.vd.z,
                  camera.vu.x,camera.vu.y,camera.vu.z);
        MakeLighting();
        MakeGeometry();

    } else {

        glMatrixMode(GL_PROJECTION);
        glLoadIdentity();
        left   = - ratio * wd2;
        right  =   ratio * wd2;
        top    =   wd2;
        bottom = - wd2;
        glFrustum(left,right,bottom,top,near,far);

        glMatrixMode(GL_MODELVIEW);
        glDrawBuffer(GL_BACK);
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
        glLoadIdentity();
        gluLookAt(camera.vp.x,camera.vp.y,camera.vp.z,
                  camera.vp.x + camera.vd.x,
                  camera.vp.y + camera.vd.y,
                  camera.vp.z + camera.vd.z,
                  camera.vu.x,camera.vu.y,camera.vu.z);
        MakeLighting();
        MakeGeometry();
    }

    /* glFlush(); This isn't necessary for double buffers */
    glutSwapBuffers();

Note that sometimes it is appropriate to use the left eye position when not in stereo mode in which case the above code can be simplified. It seems more elegant and consistent when moving between mono and stereo if the point between the eyes is used when in mono.

On the off chance that you want to write the code differently and would like to test the correctness of the glFrustum() parameters, here's an explicit example.

Passive stereo

Updated in May 2002: sample code to deal with passive stereo, that is, drawing the left eye to the left half of a dual display OpenGL card and the right eye to the right half. pulsar2.c and pulsar2.h

Macintosh OS-X example

Source code and Makefile illustrating stereo under Mac OS-X using "blue line" syncing, contributed by Jamie Cate.

Demonstration stereo application for Mac OS-X from the Apple development site based upon the method and code described above: GLUTStereo. (Also uses blue line syncing)

Python Example contributed by Peter Roesch

python.zip

Cross-eye stereo modification contributed by Todd Marshall

pulsar_cross.c

Offaxis frustums - OpenGL

Written by Paul Bourke

July 2007

The following describes one system (there are a number of alternatives) for creating perspective offset frustums for stereoscopic projection using OpenGL. This is convenient for non observer tracked viewing on a single stereoscopic panel; in these cases there is no clear relationship between the model dimensions and the screen. Note that for multiwall immersive displays the following is not the best approach: the appropriate method requires a knowledge of the screen geometry and the observer position, and generally the model is scaled into real world coordinates.

Camera is defined by its position, view direction, up vector, eye separation, distance to zero parallax (see fo below) and the near and far cutting planes. Position, eye separation, zero parallax distance, and cutting planes are most conveniently specified in model coordinates, direction and up vector are orthonormal vectors. With regard to parameters for adjusting stereoscopic viewing I would argue that the distance to zero parallax is the most natural, not only does it relate directly to the scale of the model and the relative position of the camera, it also has a direct bearing on the stereoscopic result ... namely that objects at that distance will appear to be at the depth of the screen. In order not to burden the operators with multiple stereo controls one can usually just internally set the eye separation to 1/30 of the zero parallax distance (camera.eyesep = camera.fo / 30), this will give acceptable stereoscopic viewing in almost all situations and is independent of model scale.

The above diagram (view from above the two cameras) is intended to illustrate how the amount by which to offset the frustums is calculated. Note there is only horizontal parallax. This is intended to be a guide for OpenGL programmers, as such there are some assumptions that relate to OpenGL that may not be appropriate to other APIs. The eye separation is exaggerated in order to make the diagram clearer.

The half width on the projection plane is given by

widthdiv2 = camera.near * tan(camera.aperture/2)

This is related to world coordinates by similar triangles, the amount D by which to offset the view frustum horizontally is given by

D = 0.5 * camera.eyesep * camera.near / camera.fo

    aspectratio = windowwidth / (double)windowheight;         // Divide by 2 for side-by-side stereo
    widthdiv2   = camera.near * tan(camera.aperture / 2);     // aperture in radians
    cameraright = crossproduct(camera.dir,camera.up);         // Each unit vectors
    cameraright.x *= camera.eyesep / 2.0;                     // Scale to half the eye separation
    cameraright.y *= camera.eyesep / 2.0;
    cameraright.z *= camera.eyesep / 2.0;

Symmetric - non stereo camera

    glMatrixMode(GL_PROJECTION);
    glLoadIdentity();
    glViewport(0,0,windowwidth,windowheight);
    top    =   widthdiv2;
    bottom = - widthdiv2;
    left   = - aspectratio * widthdiv2;
    right  =   aspectratio * widthdiv2;
    glFrustum(left,right,bottom,top,camera.near,camera.far);
    glMatrixMode(GL_MODELVIEW);
    glLoadIdentity();
    gluLookAt(camera.pos.x,camera.pos.y,camera.pos.z,
              camera.pos.x + camera.dir.x,
              camera.pos.y + camera.dir.y,
              camera.pos.z + camera.dir.z,
              camera.up.x,camera.up.y,camera.up.z);
    // Create geometry here in convenient model coordinates

Asymmetric frustum - stereoscopic

    // Right eye
    // cameraright is the right vector scaled to half the eye separation;
    // left and right below are the scalar frustum bounds
    glMatrixMode(GL_PROJECTION);
    glLoadIdentity();
    // For frame sequential, earlier use glDrawBuffer(GL_BACK_RIGHT);
    glViewport(0,0,windowwidth,windowheight);
    // For side by side stereo
    //glViewport(windowwidth/2,0,windowwidth/2,windowheight);
    top    =   widthdiv2;
    bottom = - widthdiv2;
    left   = - aspectratio * widthdiv2 - 0.5 * camera.eyesep * camera.near / camera.fo;
    right  =   aspectratio * widthdiv2 - 0.5 * camera.eyesep * camera.near / camera.fo;
    glFrustum(left,right,bottom,top,camera.near,camera.far);
    glMatrixMode(GL_MODELVIEW);
    glLoadIdentity();
    gluLookAt(camera.pos.x + cameraright.x,camera.pos.y + cameraright.y,camera.pos.z + cameraright.z,
              camera.pos.x + cameraright.x + camera.dir.x,
              camera.pos.y + cameraright.y + camera.dir.y,
              camera.pos.z + cameraright.z + camera.dir.z,
              camera.up.x,camera.up.y,camera.up.z);
    // Create geometry here in convenient model coordinates

    // Left eye
    glMatrixMode(GL_PROJECTION);
    glLoadIdentity();
    // For frame sequential, earlier use glDrawBuffer(GL_BACK_LEFT);
    glViewport(0,0,windowwidth,windowheight);
    // For side by side stereo
    //glViewport(0,0,windowwidth/2,windowheight);
    top    =   widthdiv2;
    bottom = - widthdiv2;
    left   = - aspectratio * widthdiv2 + 0.5 * camera.eyesep * camera.near / camera.fo;
    right  =   aspectratio * widthdiv2 + 0.5 * camera.eyesep * camera.near / camera.fo;
    glFrustum(left,right,bottom,top,camera.near,camera.far);
    glMatrixMode(GL_MODELVIEW);
    glLoadIdentity();
    gluLookAt(camera.pos.x - cameraright.x,camera.pos.y - cameraright.y,camera.pos.z - cameraright.z,
              camera.pos.x - cameraright.x + camera.dir.x,
              camera.pos.y - cameraright.y + camera.dir.y,
              camera.pos.z - cameraright.z + camera.dir.z,
              camera.up.x,camera.up.y,camera.up.z);
    // Create geometry here in convenient model coordinates

Notes

Due to the possibility of extreme negative parallax separation as objects come closer to the camera than the zero parallax distance, it is common practice to link the near cutting plane to the zero parallax distance. The exact relationship depends on the degree of ghosting of the projection system but camera.near = camera.fo / 5 is usually appropriate.

When the camera is adjusted, for example during a flight path, it is important to ensure the direction and up vectors remain orthonormal. This follows naturally when using quaternions and there are ways of ensuring this when using other systems for camera navigation.

Developers are encouraged to support both side-by-side stereo as well as frame sequential (also called quad buffer stereo). The only difference is the viewport parameters, setting the drawing buffer to either the back buffer or the left/right back buffers, and when to clear the back buffer(s).

The camera aperture above is the horizontal field of view, not the vertical field of view that is more conventional in OpenGL. Most end users think in terms of horizontal rather than vertical FOV.
