Sqoop-1.4.7: Deployment and Common Use Cases

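This article builds on the earlier posts Hadoop2.7.6_01_部署 (Hadoop 2.7.6 deployment) and Hive-1.2.1_01_安装部署 (Hive 1.2.1 installation and deployment).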

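1. Preface

In a complete big-data processing system, HDFS + MapReduce + Hive form the core of the analysis system. Several auxiliary systems are also indispensable, including data collection, exporting result data and task scheduling. The Hadoop ecosystem offers convenient open-source frameworks for each of these, as shown in the figure: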

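1.1. Overview

Sqoop is an Apache tool for transferring data between Hadoop and relational database servers.

Import: load data from MySQL, Oracle and similar databases into Hadoop storage systems such as HDFS, Hive and HBase.

Export: move data from the Hadoop file system into a relational database.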

1.2. How it works

Sqoop translates each import or export command into a MapReduce program.

The generated MapReduce job mainly customizes the InputFormat and the OutputFormat.
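Here is a more concrete view of the input side. For a table import, Sqoop looks up the minimum and maximum of a split column, which is the primary key by default or the column named by `--split-by`. It then hands each map task one sub-range as its input split. Because the job is map-only, each mapper writes one output file, so an import with `-m 1` produces a single `part-m-00000`. The Python below is a simplified model of the range-splitting idea only. `split_conditions` is a hypothetical helper, and Sqoop's own splitter classes handle more column types and edge cases.

```python
from typing import List


def split_conditions(column: str, lo: int, hi: int, num_mappers: int) -> List[str]:
    """Simplified model: cut the integer range [lo, hi] of `column` into
    `num_mappers` contiguous WHERE conditions, one per map task."""
    if num_mappers < 1:
        raise ValueError("num_mappers must be at least 1")
    # Boundary values lo, ..., hi; integer division keeps them whole numbers.
    bounds = [lo + (i * (hi - lo)) // num_mappers for i in range(num_mappers)] + [hi]
    conditions = []
    for i in range(num_mappers):
        start, end = bounds[i], bounds[i + 1]
        # All ranges are half-open except the last one, which must include hi.
        upper = "<=" if i == num_mappers - 1 else "<"
        conditions.append(f"{column} >= {start} AND {column} {upper} {end}")
    return conditions


if __name__ == "__main__":
    # In the sample table emp, id runs from 1201 to 1205.
    for condition in split_conditions("id", 1201, 1205, 2):
        print(condition)
```

With `-m 2`, this model gives the first mapper ids 1201 and 1202 and the second mapper ids 1203 to 1205. The examples in this article use one mapper, so there is a single range and a single output file.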

2. Installing Sqoop

2.1. Deploying the software

```
[yun@mini01 software]$ pwd
/app/software
[yun@mini01 software]$ tar xf sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz
[yun@mini01 software]$ mv sqoop-1.4.7.bin__hadoop-2.6.0 /app/sqoop-1.4.7
[yun@mini01 software]$ cd /app/
[yun@mini01 ~]$ ln -s sqoop-1.4.7/ sqoop
[yun@mini01 ~]$ ll
total
…………
lrwxrwxrwx yun yun Aug : sqoop -> sqoop-1.4.7/
drwxr-xr-x yun yun Dec sqoop-1.4.7
```

2.2. Configuration changes

```
[yun@mini01 conf]$ pwd
/app/sqoop/conf
[yun@mini01 conf]$ ll
total
-rw-rw-r-- yun yun Dec oraoop-site-template.xml
-rw-rw-r-- yun yun Dec sqoop-env-template.cmd
-rwxr-xr-x yun yun Dec sqoop-env-template.sh
-rw-rw-r-- yun yun Dec sqoop-site-template.xml
-rw-rw-r-- yun yun Dec sqoop-site.xml
[yun@mini01 conf]$ cp -a sqoop-env-template.sh sqoop-env.sh
[yun@mini01 conf]$ cat sqoop-env.sh
# Licensed to the Apache Software Foundation (ASF) under one or more
………………
# Set Hadoop-specific environment variables here.

#Set path to where bin/hadoop is available          # modified
export HADOOP_COMMON_HOME=${HADOOP_HOME}

#Set path to where hadoop-*-core.jar is available   # modified
export HADOOP_MAPRED_HOME=${HADOOP_HOME}

#set the path to where bin/hbase is available
#export HBASE_HOME=

#Set the path to where bin/hive is available        # modified
export HIVE_HOME=${HIVE_HOME}

#Set the path for where zookeper config dir is
#export ZOOCFGDIR=
```

`${HADOOP_HOME}` and `${HIVE_HOME}` are expanded when Sqoop sources `sqoop-env.sh`. Both variables must therefore already be exported in the user's environment for these settings to take effect.

2.3. Adding the MySQL JDBC driver

```
[yun@mini01 software]$ pwd
/app/software
[yun@mini01 software]$ cp -a mysql-connector-java-5.1..jar /app/sqoop/lib
```

2.4. 加入hive的执行包

- [yun@mini01 lib]$ pwd

- /app/hive/lib

- [yun@mini01 lib]$ cp -a hive-exec-1.2..jar /app/sqoop/lib/

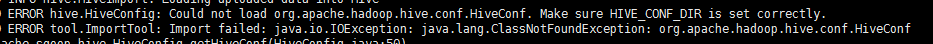

避免出现

2.5. Verifying the installation

```
[yun@mini01 bin]$ pwd
/app/sqoop/bin
[yun@mini01 bin]$ ./sqoop-version
// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.7
Sqoop 1.4.7
git commit id 2328971411f57f0cb683dfb79d19d4d19d185dd8
Compiled by maugli on Thu Dec :: STD
[yun@mini01 bin]$ ./sqoop help      # show help
// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.7
usage: sqoop COMMAND [ARGS]

Available commands:
  codegen            Generate code to interact with database records
  create-hive-table  Import a table definition into Hive
  eval               Evaluate a SQL statement and display the results
  export             Export an HDFS directory to a database table
  help               List available commands
  import             Import a table from a database to HDFS
  import-all-tables  Import tables from a database to HDFS
  import-mainframe   Import datasets from a mainframe server to HDFS
  job                Work with saved jobs
  list-databases     List available databases on a server
  list-tables        List available tables in a database
  merge              Merge results of incremental imports
  metastore          Run a standalone Sqoop metastore
  version            Display version information

See 'sqoop help COMMAND' for information on a specific command.
```

3. Database setup

```
# Create the database
CREATE DATABASE sqoop_test DEFAULT CHARACTER SET utf8 ;
# Create the account (the database runs on host mini03)
grant all on sqoop_test.* to sqoop_test@'%' identified by 'sqoop_test';
grant all on sqoop_test.* to sqoop_test@'mini03' identified by 'sqoop_test';
# Reload the privilege tables
flush privileges;
```

3.1. Table data

Table emp:

| id | name | deg | salary | dept |
|------|----------|--------------|--------|------|
| 1201 | gopal | manager | 50000 | TP |
| 1202 | manisha | Proof reader | 50000 | TP |
| 1203 | khalil | php dev | 30000 | AC |
| 1204 | prasanth | php dev | 30000 | AC |
| 1205 | kranthi | admin | 20000 | TP |

Table emp_add:

| id | hno | street | city |
|------|------|----------|---------|
| 1201 | 288A | vgiri | jublee |
| 1202 | 108I | aoc | sec-bad |
| 1203 | 144Z | pgutta | hyd |
| 1204 | 78B | old city | sec-bad |
| 1205 | 720X | hitec | sec-bad |

Table emp_conn:

| id | phno | email |
|------|---------|-----------------|
| 1201 | 2356742 | gopal@tp.com |
| 1202 | 1661663 | manisha@tp.com |
| 1203 | 8887776 | khalil@ac.com |
| 1204 | 9988774 | prasanth@ac.com |
| 1205 | 1231231 | kranthi@tp.com |

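4. Importing data with Sqoop

The import tool imports a single table from an RDBMS into HDFS. Each row of the table is treated as one record in HDFS. Records are stored as text data in text files, or as binary data in Avro or SequenceFile files.

```
$ sqoop import (generic-args) (import-args)
$ sqoop-import (generic-args) (import-args)
```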
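Here is a rough sketch of what "stored as text data" means for the default text format. Each column value is written as text, fields are separated by a comma, and each record ends with a newline. `--fields-terminated-by` swaps the separator; several later examples use `'\t'`. This is a plain-Python model of the output layout, not Sqoop code, and `to_text_record` is a hypothetical helper. The `null` token for SQL NULL reflects Sqoop's default text representation, which `--null-string` / `--null-non-string` can change.

```python
from typing import Optional, Sequence, Union

Value = Optional[Union[int, float, str]]


def to_text_record(row: Sequence[Value], fields_terminated_by: str = ",",
                   null_token: str = "null") -> str:
    """Render one database row as a delimited text record (model only)."""
    # NULL becomes the null token; everything else is written with str().
    fields = [null_token if value is None else str(value) for value in row]
    return fields_terminated_by.join(fields) + "\n"


# Sample rows from table emp.
emp = [
    (1201, "gopal", "manager", 50000, "TP"),
    (1202, "manisha", "Proof reader", 50000, "TP"),
    (1203, "khalil", "php dev", 30000, "AC"),
    (1204, "prasanth", "php dev", 30000, "AC"),
    (1205, "kranthi", "admin", 20000, "TP"),
]

if __name__ == "__main__":
    print("".join(to_text_record(row) for row in emp), end="")
```

Because the separator is a plain character, a value that itself contains a comma (or a tab, with `'\t'`) would make the record ambiguous. Sqoop's `--enclosed-by` and `--escaped-by` options exist for that case.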

4.1. Importing a table into HDFS

```
[yun@mini01 sqoop]$ pwd
/app/sqoop
[yun@mini01 sqoop]$ bin/sqoop import \
--connect jdbc:mysql://mini03:3306/sqoop_test \
--username sqoop_test \
--password sqoop_test \
--table emp \
-m 1
// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.7
// :: WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.
………………
// :: INFO mapreduce.Job:  map 100% reduce 0%
// :: INFO mapreduce.Job: Job job_1533196573365_0001 completed successfully
………………
// :: INFO mapreduce.ImportJobBase: Transferred 151 bytes in 47.2865 seconds (3.1933 bytes/sec)
// :: INFO mapreduce.ImportJobBase: Retrieved 5 records.
```

Check the imported data. Without `--target-dir`, Sqoop writes to `/user/<username>/<table name>` in HDFS, which here is `/user/yun/emp`:

```
[yun@mini02 ~]$ hadoop fs -ls /user/yun/emp
Found 2 items
-rw-r--r-- yun supergroup -- : /user/yun/emp/_SUCCESS
-rw-r--r-- yun supergroup -- : /user/yun/emp/part-m-00000
[yun@mini02 ~]$ hadoop fs -cat /user/yun/emp/part-m-00000
1201,gopal,manager,50000,TP
1202,manisha,Proof reader,50000,TP
1203,khalil,php dev,30000,AC
1204,prasanth,php dev,30000,AC
1205,kranthi,admin,20000,TP
```
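The transfer statistics in the import log can be cross-checked against the data. 47.2865 seconds at 3.1933 bytes/sec works out to about 151 bytes, which is exactly the size of the five comma-separated lines of table `emp` including their newlines. The Hive import in 4.3 reports 20.6744 seconds at 7.3037 bytes/sec, which is also about 151 bytes. The default Hive field separator (`\001`) is likewise a single byte, so the file size comes out the same. A quick Python check of that arithmetic:

```python
# The five records of part-m-00000, as printed by `hadoop fs -cat` above.
emp_lines = [
    "1201,gopal,manager,50000,TP",
    "1202,manisha,Proof reader,50000,TP",
    "1203,khalil,php dev,30000,AC",
    "1204,prasanth,php dev,30000,AC",
    "1205,kranthi,admin,20000,TP",
]

# File size: every line plus its trailing newline, counted in UTF-8 bytes.
total_bytes = sum(len((line + "\n").encode("utf-8")) for line in emp_lines)

# Duration and rate copied from the HDFS import log (4.1) and the Hive import log (4.3).
hdfs_seconds, hdfs_rate = 47.2865, 3.1933
hive_seconds, hive_rate = 20.6744, 7.3037

if __name__ == "__main__":
    print(total_bytes)                           # 151
    print(round(total_bytes / hdfs_seconds, 4))  # 3.1933
    print(round(total_bytes / hive_seconds, 4))  # 7.3037
```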

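4.2. Importing a table into a specified HDFS directory

```
[yun@mini01 sqoop]$ pwd
/app/sqoop
[yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \
--username sqoop_test --password sqoop_test \
--target-dir /sqoop_test/table_emp/queryresult \
--table emp --num-mappers 1
```

Note: if the target directory does not exist, it is created. If it already exists, the import fails unless `--delete-target-dir` is also given (as in 4.4).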

Check the imported data:

```
[yun@mini02 ~]$ hadoop fs -ls /sqoop_test/table_emp/queryresult
Found 2 items
-rw-r--r-- yun supergroup -- : /sqoop_test/table_emp/queryresult/_SUCCESS
-rw-r--r-- yun supergroup -- : /sqoop_test/table_emp/queryresult/part-m-00000
[yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult/part-m-00000
1201,gopal,manager,50000,TP
1202,manisha,Proof reader,50000,TP
1203,khalil,php dev,30000,AC
1204,prasanth,php dev,30000,AC
1205,kranthi,admin,20000,TP
```

4.3. Importing a relational table into Hive

```
[yun@mini01 sqoop]$ pwd
/app/sqoop
[yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \
--username sqoop_test --password sqoop_test \
--table emp --hive-import \
--num-mappers 1
// :: INFO sqoop.Sqoop: Running Sqoop version: 1.4.7
// :: WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.
………………
// :: INFO mapreduce.ImportJobBase: Transferred 151 bytes in 20.6744 seconds (7.3037 bytes/sec)
// :: INFO mapreduce.ImportJobBase: Retrieved 5 records.
// :: INFO mapreduce.ImportJobBase: Publishing Hive/Hcat import job data to Listeners for table emp
// :: INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `emp` AS t LIMIT 1
// :: WARN hive.TableDefWriter: Column salary had to be cast to a less precise type in Hive
// :: INFO hive.HiveImport: Loading uploaded data into Hive
// :: INFO hive.HiveImport:
// :: INFO hive.HiveImport: Logging initialized using configuration in jar:file:/app/sqoop-1.4.7/lib/hive-exec-1.2.1.jar!/hive-log4j.properties
// :: INFO hive.HiveImport: OK
// :: INFO hive.HiveImport: Time taken: 1.677 seconds
// :: INFO hive.HiveImport: Loading data to table default.emp
// :: INFO hive.HiveImport: Table default.emp stats: [numFiles=, totalSize=]
// :: INFO hive.HiveImport: OK
// :: INFO hive.HiveImport: Time taken: 0.629 seconds
// :: INFO hive.HiveImport: Hive import complete.
// :: INFO hive.HiveImport: Export directory is contains the _SUCCESS file only, removing the directory.
```

Check the imported data:

```
hive (default)> show tables;
OK
emp
Time taken: 0.031 seconds, Fetched: 1 row(s)
hive (default)> select * from emp;
OK
1201 gopal manager 50000.0 TP
1202 manisha Proof reader 50000.0 TP
1203 khalil php dev 30000.0 AC
1204 prasanth php dev 30000.0 AC
1205 kranthi admin 20000.0 TP
Time taken: 0.489 seconds, Fetched: 5 row(s)
```
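The `Column salary had to be cast to a less precise type in Hive` warning and the `50000.0` values above come from Sqoop's SQL-to-Hive type mapping. Roughly, Sqoop 1.4.x turns DECIMAL and NUMERIC columns into Hive `DOUBLE`, and it logs that warning for DECIMAL, NUMERIC, DATE, TIME and TIMESTAMP columns. The Python below reproduces only a subset of that mapping and should be treated as an approximation. The MySQL column types for `emp` are an assumption, since the article does not show the table's DDL; `salary` is guessed to be DECIMAL because of the warning. `--map-column-hive` can override the mapping for individual columns.

```python
# Simplified subset of Sqoop 1.4.x's SQL -> Hive type mapping (approximation).
SQL_TO_HIVE = {
    "INTEGER": "INT", "SMALLINT": "INT", "BIGINT": "BIGINT",
    "VARCHAR": "STRING", "CHAR": "STRING",
    "DATE": "STRING", "TIMESTAMP": "STRING",
    "DECIMAL": "DOUBLE", "NUMERIC": "DOUBLE", "FLOAT": "DOUBLE", "DOUBLE": "DOUBLE",
}
# SQL types whose Hive representation loses precision or meaning.
LESS_PRECISE = {"DECIMAL", "NUMERIC", "DATE", "TIME", "TIMESTAMP"}


def hive_columns(columns):
    """Return Hive column definitions plus the warnings this mapping would log."""
    definitions, warnings = [], []
    for name, sql_type in columns:
        definitions.append(f"`{name}` {SQL_TO_HIVE[sql_type]}")
        if sql_type in LESS_PRECISE:
            warnings.append(f"Column {name} had to be cast to a less precise type in Hive")
    return definitions, warnings


# Hypothetical MySQL types for emp (the article does not show its CREATE TABLE).
EMP_COLUMNS = [("id", "INTEGER"), ("name", "VARCHAR"), ("deg", "VARCHAR"),
               ("salary", "DECIMAL"), ("dept", "VARCHAR")]

if __name__ == "__main__":
    definitions, warnings = hive_columns(EMP_COLUMNS)
    print(", ".join(definitions))
    print("\n".join(warnings))
    print(float(50000))  # a Python float, like a Hive DOUBLE, prints as 50000.0
```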

4.4. Importing a table into a specified Hive database and table

```
[yun@mini01 sqoop]$ pwd
/app/sqoop
[yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \
--username sqoop_test --password sqoop_test \
--table emp \
--delete-target-dir \
--fields-terminated-by '\t' \
--hive-import \
--hive-database sqoop_test \
--hive-table hive_emp \
--num-mappers 1
```

Note: the Hive database sqoop_test must be created first. Otherwise the import fails with: FAILED: SemanticException [Error 10072]: Database does not exist: sqoop_test

Check the imported data:

```
0: jdbc:hive2://mini01:10000> use sqoop_test;
No rows affected (0.049 seconds)
0: jdbc:hive2://mini01:10000> show tables;
+-----------+--+
| tab_name  |
+-----------+--+
| hive_emp  |
+-----------+--+
1 row selected (0.076 seconds)
0: jdbc:hive2://mini01:10000> select * from hive_emp;
+--------------+----------------+---------------+------------------+----------------+--+
| hive_emp.id  | hive_emp.name  | hive_emp.deg  | hive_emp.salary  | hive_emp.dept  |
+--------------+----------------+---------------+------------------+----------------+--+
| 1201         | gopal          | manager       | 50000.0          | TP             |
| 1202         | manisha        | Proof reader  | 50000.0          | TP             |
| 1203         | khalil         | php dev       | 30000.0          | AC             |
| 1204         | prasanth       | php dev       | 30000.0          | AC             |
| 1205         | kranthi        | admin         | 20000.0          | TP             |
+--------------+----------------+---------------+------------------+----------------+--+
5 rows selected (0.162 seconds)
```

4.5. Importing a subset of table data

4.5.1. Importing with a where clause

```
[yun@mini01 sqoop]$ pwd
/app/sqoop
[yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \
--username sqoop_test --password sqoop_test \
--table emp_add \
--where "city ='sec-bad'" \
--target-dir /sqoop_test/table_emp/queryresult2 \
--num-mappers 1
```

Check the imported data:

```
[yun@mini02 ~]$ hadoop fs -ls /sqoop_test/table_emp/queryresult2
Found 2 items
-rw-r--r-- yun supergroup -- : /sqoop_test/table_emp/queryresult2/_SUCCESS
-rw-r--r-- yun supergroup -- : /sqoop_test/table_emp/queryresult2/part-m-00000
[yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult2/part-m-00000
1202,108I,aoc,sec-bad
1204,78B,old city,sec-bad
1205,720X,hitec,sec-bad
```
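`--where` is pushed into the SELECT statement that Sqoop sends to MySQL, so only matching rows leave the database and the mappers never see the other addresses. Below is a toy Python model of the same filter over the `emp_add` sample rows. It assumes the four columns are named `id, hno, street, city`; only `city` appears in the command itself. The SQL string only approximates what Sqoop generates, and `where_import` is a hypothetical helper.

```python
# Sample rows of emp_add; column names other than `city` are assumptions.
COLUMNS = ("id", "hno", "street", "city")
EMP_ADD = [
    (1201, "288A", "vgiri", "jublee"),
    (1202, "108I", "aoc", "sec-bad"),
    (1203, "144Z", "pgutta", "hyd"),
    (1204, "78B", "old city", "sec-bad"),
    (1205, "720X", "hitec", "sec-bad"),
]


def where_import(rows, column, value):
    """Model of --where "<column> ='<value>'": build the query, keep matching rows."""
    sql = f"SELECT {', '.join(COLUMNS)} FROM emp_add WHERE ({column} = '{value}')"
    index = COLUMNS.index(column)
    matched = [row for row in rows if row[index] == value]
    return sql, matched


if __name__ == "__main__":
    sql, matched = where_import(EMP_ADD, "city", "sec-bad")
    print(sql)
    for row in matched:
        # Same comma-separated layout as part-m-00000 above.
        print(",".join(str(v) for v in row))
```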

4.5.2. Importing the result of a free-form query

```
[yun@mini01 sqoop]$ pwd
/app/sqoop
[yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \
--username sqoop_test --password sqoop_test \
--query 'select id,name,deg from emp WHERE id>1203 and $CONDITIONS' \
--split-by id \
--fields-terminated-by '\t' \
--target-dir /sqoop_test/table_emp/queryresult4 \
--num-mappers 1
```

Check the imported data:

```
[yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult4/part-m-00000
1204 prasanth php dev
1205 kranthi admin
```
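With `--query`, Sqoop needs the literal token `$CONDITIONS` in the WHERE clause. It first replaces the token with an always-true condition to compute the MIN/MAX of the `--split-by` column, then replaces it with each mapper's range condition. The query is wrapped in single quotes in the command above so that the shell does not expand `$CONDITIONS`; inside double quotes it would have to be written `\$CONDITIONS`. Below is a simplified Python model of the substitution. `bounding_query` and `mapper_query` are hypothetical helpers, and the exact SQL text Sqoop generates may differ.

```python
QUERY = "select id,name,deg from emp WHERE id>1203 and $CONDITIONS"


def bounding_query(query: str, split_by: str) -> str:
    """Query used to find the split column's range: $CONDITIONS becomes always-true."""
    inner = query.replace("$CONDITIONS", "(1 = 1)")
    return f"SELECT MIN({split_by}), MAX({split_by}) FROM ({inner}) AS t1"


def mapper_query(query: str, split_by: str, lo: int, hi: int) -> str:
    """Query one map task runs: $CONDITIONS becomes that task's range."""
    return query.replace("$CONDITIONS", f"( {split_by} >= {lo} ) AND ( {split_by} <= {hi} )")


if __name__ == "__main__":
    print(bounding_query(QUERY, "id"))
    # Rows with id > 1203 are 1204 and 1205, so a single mapper covers [1204, 1205].
    print(mapper_query(QUERY, "id", 1204, 1205))
```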

4.6. Incremental import

Required parameters:

```
--check-column (col)
--incremental (mode)      # mode is either append or lastmodified
--last-value (value)
```

Example:

```
[yun@mini01 sqoop]$ pwd
/app/sqoop
[yun@mini01 sqoop]$ bin/sqoop import --connect jdbc:mysql://mini03:3306/sqoop_test \
--username sqoop_test --password sqoop_test \
--table emp \
--incremental append \
--check-column id \
--last-value 1202 \
--fields-terminated-by '\t' \
--target-dir /sqoop_test/table_emp/queryresult4 \
--num-mappers 1
```

Check the imported data:

```
[yun@mini02 ~]$ hadoop fs -ls /sqoop_test/table_emp/queryresult4/
Found 3 items
-rw-r--r-- yun supergroup -- : /sqoop_test/table_emp/queryresult4/_SUCCESS
-rw-r--r-- yun supergroup -- : /sqoop_test/table_emp/queryresult4/part-m-00000
-rw-r--r-- yun supergroup -- : /sqoop_test/table_emp/queryresult4/part-m-00001
[yun@mini02 ~]$
[yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult4/part-m-00000
1204 prasanth php dev
1205 kranthi admin
[yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult4/part-m-00001
1203 khalil php dev 30000 AC
1204 prasanth php dev 30000 AC
1205 kranthi admin 20000 TP
```
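In `append` mode, Sqoop imports only rows whose `--check-column` value is greater than `--last-value` and writes them as new files next to the existing ones, which is why an extra `part-m-00001` appears. At the end of the run it prints the value to pass as `--last-value` next time, and a saved job (`sqoop job`) can keep track of that value automatically. The rows 1203 to 1205 in the new file are consistent with a last value of 1202. The Python below models that bookkeeping over the sample `emp` ids. `incremental_append` is a hypothetical helper, and `lastmodified` mode (which compares a timestamp column) is not modelled.

```python
def incremental_append(rows, check_column, last_value):
    """Model of --incremental append: keep rows whose check column exceeds
    last_value and report the last value to use on the next run."""
    new_rows = [row for row in rows if row[check_column] > last_value]
    next_last_value = max((row[check_column] for row in new_rows), default=last_value)
    return new_rows, next_last_value


EMP = [
    {"id": 1201, "name": "gopal"},
    {"id": 1202, "name": "manisha"},
    {"id": 1203, "name": "khalil"},
    {"id": 1204, "name": "prasanth"},
    {"id": 1205, "name": "kranthi"},
]

if __name__ == "__main__":
    imported, last = incremental_append(EMP, "id", 1202)
    print([row["name"] for row in imported])  # ['khalil', 'prasanth', 'kranthi']
    print(last)                                # 1205
    # Running again with the reported value imports nothing new.
    print(incremental_append(EMP, "id", last)) # ([], 1205)
```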

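5. Exporting data with Sqoop

Export moves data from HDFS into an RDBMS.

Before exporting, the target table must already exist in the target database.

- By default, the data in the files is inserted into the table with INSERT statements.

- In update mode (enabled with `--update-key`), Sqoop generates UPDATE statements that update existing rows instead.

```
$ sqoop export (generic-args) (export-args)
$ sqoop-export (generic-args) (export-args)
```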
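The difference between the two export modes can be sketched as the statement built for each input line. By default a line becomes an INSERT. `--update-key <col>` switches to UPDATE statements keyed on that column, and in the default `updateonly` mode a line that matches no row is silently skipped. The Python below is a simplified model with hypothetical helper names. Real Sqoop uses prepared statements and batches many rows per statement and per transaction.

```python
COLUMNS = ["id", "name", "deg", "salary", "dept"]


def export_statement(line, table, columns, sep=",", update_key=None):
    """Turn one delimited HDFS line into (sql, params): INSERT by default,
    UPDATE ... WHERE <update_key> = ? when an update key is given."""
    values = line.rstrip("\n").split(sep)
    row = dict(zip(columns, values))
    if update_key is None:
        placeholders = ", ".join("?" for _ in columns)
        sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
        return sql, [row[c] for c in columns]
    # Update mode: every non-key column goes into SET, the key goes into WHERE.
    set_columns = [c for c in columns if c != update_key]
    assignments = ", ".join(f"{c} = ?" for c in set_columns)
    sql = f"UPDATE {table} SET {assignments} WHERE {update_key} = ?"
    return sql, [row[c] for c in set_columns] + [row[update_key]]


if __name__ == "__main__":
    line = "1201,gopal,manager,50000,TP"
    print(export_statement(line, "employee", COLUMNS))
    print(export_statement(line, "employee", COLUMNS, update_key="id"))
```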

5.1. Example

Source data in HDFS:

```
[yun@mini02 ~]$ hadoop fs -ls /sqoop_test/table_emp/queryresult
Found 2 items
-rw-r--r-- yun supergroup -- : /sqoop_test/table_emp/queryresult/_SUCCESS
-rw-r--r-- yun supergroup -- : /sqoop_test/table_emp/queryresult/part-m-00000
[yun@mini02 ~]$ hadoop fs -cat /sqoop_test/table_emp/queryresult/part-m-00000
1201,gopal,manager,50000,TP
1202,manisha,Proof reader,50000,TP
1203,khalil,php dev,30000,AC
1204,prasanth,php dev,30000,AC
1205,kranthi,admin,20000,TP
```

1. First, manually create the target table in MySQL:

```
MariaDB [(none)]> use sqoop_test;
Database changed
MariaDB [sqoop_test]> CREATE TABLE employee (
id INT NOT NULL PRIMARY KEY,
name VARCHAR(),
deg VARCHAR(),
salary INT,
dept VARCHAR());
Query OK, 0 rows affected (0.00 sec)
MariaDB [sqoop_test]> show tables;
+----------------------+
| Tables_in_sqoop_test |
+----------------------+
| emp                  |
| emp_add              |
| emp_conn             |
| employee             |
+----------------------+
4 rows in set (0.00 sec)
```

2. Then run the export command:

```
[yun@mini01 sqoop]$ pwd
/app/sqoop
[yun@mini01 sqoop]$ bin/sqoop export \
--connect jdbc:mysql://mini03:3306/sqoop_test \
--username sqoop_test --password sqoop_test \
--table employee \
--export-dir /sqoop_test/table_emp/queryresult/
```

3. Verify the result from the MySQL command line:

```
MariaDB [sqoop_test]> select * from employee;
+------+----------+--------------+--------+------+
| id   | name     | deg          | salary | dept |
+------+----------+--------------+--------+------+
| 1201 | gopal    | manager      |  50000 | TP   |
| 1202 | manisha  | Proof reader |  50000 | TP   |
| 1203 | khalil   | php dev      |  30000 | AC   |
| 1204 | prasanth | php dev      |  30000 | AC   |
| 1205 | kranthi  | admin        |  20000 | TP   |
+------+----------+--------------+--------+------+
5 rows in set (0.00 sec)
```


谓词一般有 where和having,check 谓词只计算 TRUE ,FALSE或者UNKNOWN 逻辑表达式 如 AND 和OR 1.IN 谓词的用法 SELECT orderid, em ...