Spark Streaming Official Documentation Study Notes -- Part 2

Accumulators and broadcast variables cannot be recovered from a checkpoint, so when checkpointing is enabled they are created as lazily instantiated singletons that can be re-created after the driver restarts.

```python
# Assumes wordCounts is a DStream of (word, count) pairs defined earlier in the program.

def getWordBlacklist(sparkContext):
    if "wordBlacklist" not in globals():
        globals()["wordBlacklist"] = sparkContext.broadcast(["a", "b", "c"])
    return globals()["wordBlacklist"]

def getDroppedWordsCounter(sparkContext):
    if "droppedWordsCounter" not in globals():
        globals()["droppedWordsCounter"] = sparkContext.accumulator(0)
    return globals()["droppedWordsCounter"]

def echo(time, rdd):
    # Get or register the blacklist Broadcast
    blacklist = getWordBlacklist(rdd.context)
    # Get or register the droppedWordsCounter Accumulator
    droppedWordsCounter = getDroppedWordsCounter(rdd.context)

    # Use blacklist to drop words and use droppedWordsCounter to count them
    def filterFunc(wordCount):
        if wordCount[0] in blacklist.value:
            droppedWordsCounter.add(wordCount[1])
            return False  # drop blacklisted words
        return True       # keep everything else

    counts = "Counts at time %s %s" % (time, rdd.filter(filterFunc).collect())
    print(counts)

wordCounts.foreachRDD(echo)
```
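RDD.filter, like Python's built-in filter, keeps only the elements for which the function returns a truthy value. The plain-Python sketch below involves no Spark: a list stands in for the RDD and a one-element list stands in for the Accumulator, and all names are hypothetical. It shows why a filter function whose branches merely evaluate False/True (without return) drops every record, and what a version that returns the bool does with the dropped-word count.

```python
# Plain-Python stand-ins (assumptions for illustration; no Spark is involved):
# a list replaces the RDD and a one-element list replaces the Accumulator.
BLACKLIST = ["a", "b", "c"]  # same values as the broadcast blacklist

def filter_func_without_return(word_count):
    # Both branches evaluate a bool but never return it, so the call returns None.
    if word_count[0] in BLACKLIST:
        False
    else:
        True

def make_filter_func(dropped):
    def filter_func(word_count):
        if word_count[0] in BLACKLIST:
            dropped[0] += word_count[1]  # what droppedWordsCounter.add(...) accumulates
            return False                 # drop blacklisted words
        return True                      # keep everything else
    return filter_func

word_counts = [("a", 3), ("spark", 2), ("c", 1), ("streaming", 5)]

print(list(filter(filter_func_without_return, word_counts)))  # → []

dropped = [0]
print(list(filter(make_filter_func(dropped), word_counts)))  # → [('spark', 2), ('streaming', 5)]
print(dropped[0])  # → 4
```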

To use DataFrames and SQL on streaming data, create a SparkSession from the SparkContext that the StreamingContext uses. It is created as a lazily instantiated singleton so that it can be re-created after a driver failure.

```python
from pyspark.sql import Row, SparkSession

# Lazily instantiated global instance of SparkSession
def getSparkSessionInstance(sparkConf):
    if "sparkSessionSingletonInstance" not in globals():
        globals()["sparkSessionSingletonInstance"] = SparkSession \
            .builder \
            .config(conf=sparkConf) \
            .getOrCreate()
    return globals()["sparkSessionSingletonInstance"]

...

# DataFrame operations inside your streaming program

words = ...  # DStream of strings

def process(time, rdd):
    print("========= %s =========" % str(time))
    try:
        # Get the singleton instance of SparkSession
        spark = getSparkSessionInstance(rdd.context.getConf())

        # Convert RDD[String] to RDD[Row] to DataFrame
        rowRdd = rdd.map(lambda w: Row(word=w))
        wordsDataFrame = spark.createDataFrame(rowRdd)

        # Creates a temporary view using the DataFrame
        wordsDataFrame.createOrReplaceTempView("words")

        # Do word count on table using SQL and print it
        wordCountsDataFrame = spark.sql("select word, count(*) as total from words group by word")
        wordCountsDataFrame.show()
    except Exception:
        # e.g. an empty batch: schema inference on an empty RDD raises an error
        pass

words.foreachRDD(process)
```
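The lazy-singleton pattern can be checked in plain Python. In this sketch, make_session is a hypothetical stand-in for SparkSession.builder...getOrCreate(), and no Spark is involved. Because the instance is built on first use inside the per-batch function rather than during program setup, it is constructed once per driver process. After a restart it is simply built again on the first batch.

```python
# Plain-Python check of the lazy-singleton pattern (no Spark involved).
# make_session is a hypothetical stand-in for SparkSession.builder...getOrCreate().
created = []

def make_session(conf):
    created.append(conf)  # record every real construction
    return {"conf": conf}

def get_session_instance(conf):
    # Build the instance on first use only, then keep returning the cached one.
    if "sessionSingletonInstance" not in globals():
        globals()["sessionSingletonInstance"] = make_session(conf)
    return globals()["sessionSingletonInstance"]

first = get_session_instance({"app": "demo"})
second = get_session_instance({"app": "demo"})
print(first is second, len(created))  # → True 1
```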

- Metadata checkpointing - Saving of the information defining the streaming computation to fault-tolerant storage like HDFS. This is used to recover from failure of the node running the driver of the streaming application. Metadata includes:

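  - Configuration - The configuration that was used to create the streaming application.
  - DStream operations - The set of DStream operations that define the streaming application.
  - Incomplete batches - Batches whose jobs are queued but have not completed yet.

- Data checkpointing - Saving of the generated RDDs to reliable storage. This is needed by stateful transformations that combine data across multiple batches, whose RDD dependency chains would otherwise keep growing over time.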

- Usage of stateful transformations - If either updateStateByKey or reduceByKeyAndWindow (with inverse function) is used in the application, then the checkpoint directory must be provided to allow for periodic RDD checkpointing.

- Recovering from failures of the driver running the application - Metadata checkpoints are used to recover with progress information.
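Stateful transformations need checkpointing because each batch's state depends on every earlier batch. The sketch below simulates updateStateByKey semantics in plain Python, with no Spark involved. The update function receives the current batch's values for a key plus the previous state (None the first time), and every existing key is updated each batch even when it has no new values. The Spark wiring appears only as comments, and pairs is an assumed DStream name.

```python
# Plain-Python simulation of updateStateByKey semantics (no Spark involved).
# In a real job the update function would be wired up roughly as:
#   ssc.checkpoint(checkpointDirectory)   # required for stateful transformations
#   runningCounts = pairs.updateStateByKey(update_func)
# where pairs is a DStream of (word, 1) pairs (assumed name).

def update_func(new_values, last_sum):
    # new_values: this batch's values for one key; last_sum: previous state or None
    return sum(new_values) + (last_sum or 0)

def run_batches(batches):
    state = {}
    for batch in batches:
        grouped = {}
        for key, value in batch:
            grouped.setdefault(key, []).append(value)
        # Every existing key is updated each batch, even with no new values.
        for key in set(state) | set(grouped):
            state[key] = update_func(grouped.get(key, []), state.get(key))
    return state

print(sorted(run_batches([[("a", 1), ("b", 1)], [("a", 1), ("a", 1)]]).items()))
# → [('a', 3), ('b', 1)]
```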

To make the application recover from driver failures, it should behave as follows:

- When the program is being started for the first time, it will create a new StreamingContext, set up all the streams and then call start().

- When the program is being restarted after failure, it will re-create a StreamingContext from the checkpoint data in the checkpoint directory.

```python
from pyspark import SparkContext
from pyspark.streaming import StreamingContext

checkpointDirectory = ...  # directory in fault-tolerant storage such as HDFS

# Function to create and set up a new StreamingContext
def functionToCreateContext():
    sc = SparkContext(...)  # new context
    ssc = StreamingContext(...)
    lines = ssc.socketTextStream(...)  # create DStreams
    ...
    ssc.checkpoint(checkpointDirectory)  # set checkpoint directory
    return ssc

# Get StreamingContext from checkpoint data or create a new one
context = StreamingContext.getOrCreate(checkpointDirectory, functionToCreateContext)

# Do additional setup on context that needs to be done,
# irrespective of whether it is being started or restarted
# context. ...

# Start the context
context.start()
context.awaitTermination()
```

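A StreamingContext can also be re-created explicitly from existing checkpoint data, and then started, by calling StreamingContext.getOrCreate(checkpointDirectory, None).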
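The first-start versus restart behaviour of getOrCreate can be mimicked in plain Python. This is a hypothetical, heavily simplified analogue, not Spark's implementation. A JSON file stands in for the checkpoint data, and it is written at creation only to represent "checkpoint data now exists", whereas a real job writes checkpoints periodically while running. The create function runs only when no checkpoint data is found.

```python
import json
import os
import tempfile

def get_or_create(checkpoint_dir, create_fn):
    """Hypothetical, simplified analogue of StreamingContext.getOrCreate.

    Returns (context, how): restored from checkpoint_dir if it holds checkpoint
    data, otherwise built by create_fn().
    """
    path = os.path.join(checkpoint_dir, "metadata.json")
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f), "restored"
    context = create_fn()
    # Stand-in for the checkpoint data a running Spark job writes periodically.
    os.makedirs(checkpoint_dir, exist_ok=True)
    with open(path, "w") as f:
        json.dump(context, f)
    return context, "created"

calls = []

def create_context():
    calls.append(1)
    return {"batchInterval": 1, "operations": ["socketTextStream", "flatMap", "reduceByKey"]}

with tempfile.TemporaryDirectory() as tmp:
    ckpt = os.path.join(tmp, "checkpoint")
    print(get_or_create(ckpt, create_context)[1])  # → created (first start)
    print(get_or_create(ckpt, create_context)[1])  # → restored (restart after failure)
    print(len(calls))                              # → 1
```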

Running a Spark Streaming application requires the following:

- Cluster with a cluster manager - This is the general requirement of any Spark application.

- Package the application JAR - If you are using spark-submit to start the application, you do not need to include Spark and Spark Streaming in the JAR. However, if your application uses advanced sources (e.g. Kafka, Flume), you have to package the extra artifact they link to, along with its dependencies, in the JAR that is used to deploy the application.

- Configuring sufficient memory for the executors - The memory requirements depend on the operations the application uses: for example, with 10-minute window operations the system has to keep at least the last 10 minutes of data in memory.

- Configuring checkpointing - If the application requires it, a directory in fault-tolerant storage (e.g. HDFS) must be configured as the checkpoint directory, and the application must be written so that checkpoint information can be used for failure recovery.

- Configuring automatic restart of the application driver:
  - Spark Standalone - The Standalone cluster manager can be instructed to supervise the driver and relaunch it if the driver fails, either due to a non-zero exit code or due to failure of the node running the driver.
  - YARN - YARN supports a similar mechanism for automatically restarting an application.
  - Mesos - Marathon has been used to achieve this with Mesos.

- Configuring write-ahead logs - If enabled, all the data received from a receiver is written into a write-ahead log in the configured checkpoint directory, which prevents data loss on driver recovery.

- Setting the max receiving rate - If the cluster cannot process data as fast as it is received, the receivers can be rate-limited to a maximum number of records per second.
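Several items in the list above map to Spark properties and spark-submit flags. The sketch below assembles a spark-submit command in plain Python. The helper build_conf_args, the master URL spark://master-host:7077, app.py and the numeric rate are hypothetical placeholders. The property names and the --supervise flag (driver restart under the Standalone cluster manager in cluster deploy mode) are real Spark options. For the Kafka direct approach, the per-partition limit spark.streaming.kafka.maxRatePerPartition plays the role of the receiver rate limit.

```python
def build_conf_args(conf):
    """Turn a dict of Spark properties into spark-submit --conf arguments."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args

# Illustrative values only; tune them for your own workload.
streaming_conf = {
    # Write received data to a write-ahead log in the checkpoint directory
    "spark.streaming.receiver.writeAheadLog.enable": "true",
    # Maximum number of records per second each receiver accepts
    "spark.streaming.receiver.maxRate": "10000",
    # Let Spark adapt the receiving rate to the current processing speed (Spark 1.5+)
    "spark.streaming.backpressure.enabled": "true",
}

# --supervise asks the Standalone cluster manager to restart a failed driver.
# The master URL and app.py are hypothetical placeholders.
command = (["spark-submit", "--master", "spark://master-host:7077",
            "--deploy-mode", "cluster", "--supervise"]
           + build_conf_args(streaming_conf) + ["app.py"])
print(" ".join(command))
```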

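A running application can be upgraded with new code in two ways:

- The upgraded application is started and run in parallel with the existing one. Once the new one (receiving the same data as the old one) has been warmed up and is ready for prime time, the old one can be brought down. This requires a data source that can send data to two destinations.

- Graceful shutdown: the existing application is stopped gracefully, ensuring that data that has been received is completely processed before shutdown. Then the upgraded application can be started, which will start processing from the same point where the earlier application left off. This requires a data source that can buffer data while neither application is running.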
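In PySpark a graceful stop is requested with ssc.stop(stopSparkContext=True, stopGraceFully=True). The idea behind it is to stop accepting new data but drain everything already received. The sketch below is a hypothetical plain-Python simulation of that idea using a thread and a queue, not Spark's implementation. It shows that every record handed over before the stop request is still processed.

```python
import queue
import threading

def run_with_graceful_stop(records):
    """Hypothetical simulation: a receiver hands records to a processor thread;
    on stop, the processor drains everything already received before exiting."""
    received = queue.Queue()
    processed = []
    stop_requested = threading.Event()

    def processor():
        # Exit only once a stop was requested AND nothing received is left.
        while not (stop_requested.is_set() and received.empty()):
            try:
                item = received.get(timeout=0.05)
            except queue.Empty:
                continue
            processed.append(item)

    worker = threading.Thread(target=processor)
    worker.start()
    for r in records:        # the "receiver" hands over data
        received.put(r)
    stop_requested.set()     # graceful stop: no new data, drain what was received
    worker.join()
    return processed

print(len(run_with_graceful_stop(list(range(1000)))))  # → 1000
```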

Performance tuning comes down to two things:

- Reducing the processing time of each batch of data by efficiently using cluster resources.

- Setting the right batch size such that the batches of data can be processed as fast as they are received (that is, data processing keeps up with the data ingestion).
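A batch size is sustainable when batches are processed as fast as they arrive, that is, when processing time stays below the batch interval. The toy model below is a simplification, not how Spark measures delay. It assumes batch i becomes ready at i × interval and that batches are processed one after another. When processing is faster than the interval, the queueing delay stays at zero. Otherwise it grows by (processing time − interval) with every batch.

```python
def scheduling_delays(batch_interval, processing_times):
    """How long each batch waits before it starts processing.

    Assumes batch i is ready at i * batch_interval and that batches are
    processed one after another.
    """
    delays = []
    prev_finish = 0.0
    for i, proc_time in enumerate(processing_times):
        ready = i * batch_interval
        start = max(ready, prev_finish)  # wait for the previous batch to finish
        delays.append(start - ready)
        prev_finish = start + proc_time
    return delays

print(scheduling_delays(2.0, [1.5, 1.5, 1.5, 1.5]))  # → [0.0, 0.0, 0.0, 0.0]
print(scheduling_delays(2.0, [3.0, 3.0, 3.0, 3.0]))  # → [0.0, 1.0, 2.0, 3.0]
```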

Spark Streaming官方文档学习--下的更多相关文章

- Spark Streaming官方文档学习--上

官方文档地址:http://spark.apache.org/docs/latest/streaming-programming-guide.html Spark Streaming是spark ap ...

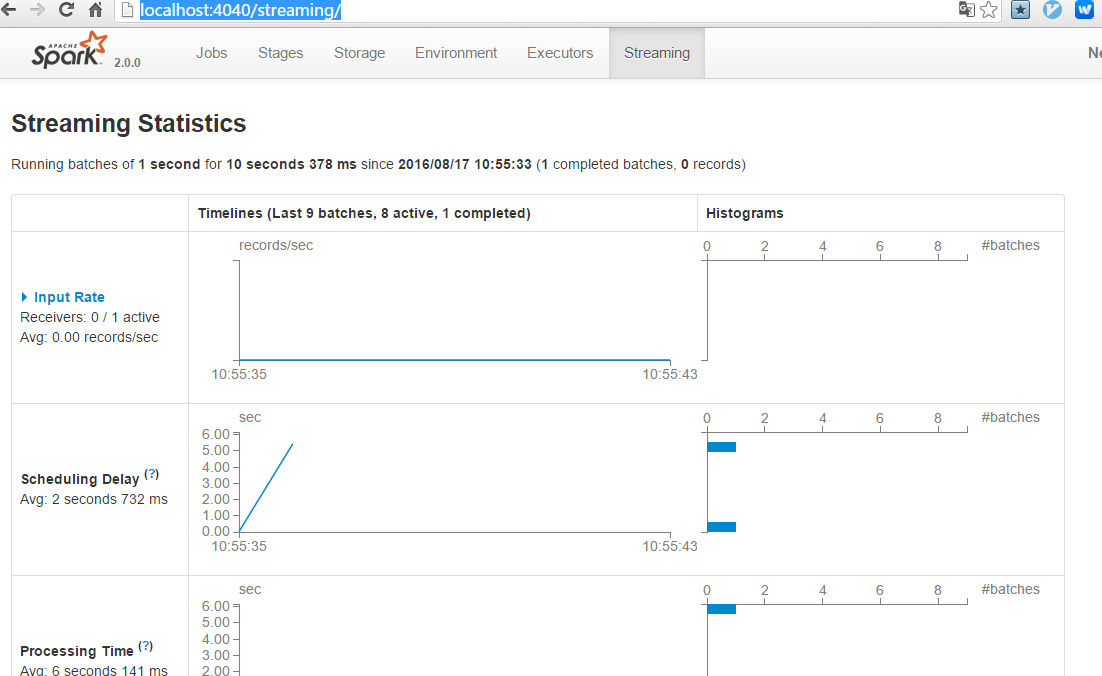

- Spark监控官方文档学习笔记

任务的监控和使用 有几种方式监控spark应用:Web UI,指标和外部方法 Web接口 每个SparkContext都会启动一个web UI,默认是4040端口,用来展示一些信息: 一系列调度的st ...

- Spring 4 官方文档学习(十一)Web MVC 框架

介绍Spring Web MVC 框架 Spring Web MVC的特性 其他MVC实现的可插拔性 DispatcherServlet 在WebApplicationContext中的特殊的bean ...

- Spark SQL 官方文档-中文翻译

Spark SQL 官方文档-中文翻译 Spark版本:Spark 1.5.2 转载请注明出处:http://www.cnblogs.com/BYRans/ 1 概述(Overview) 2 Data ...

- Spring 4 官方文档学习(十二)View技术

关键词:view technology.template.template engine.markup.内容较多,按需查用即可. 介绍 Thymeleaf Groovy Markup Template ...

- Spring 4 官方文档学习(十一)Web MVC 框架之配置Spring MVC

内容列表: 启用MVC Java config 或 MVC XML namespace 修改已提供的配置 类型转换和格式化 校验 拦截器 内容协商 View Controllers View Reso ...

- Spring Data Commons 官方文档学习

Spring Data Commons 官方文档学习 -by LarryZeal Version 1.12.6.Release, 2017-07-27 为知笔记版本在这里,带格式. Table o ...

- Spring 4 官方文档学习(十一)Web MVC 框架之resolving views 解析视图

接前面的Spring 4 官方文档学习(十一)Web MVC 框架,那篇太长,故另起一篇. 针对web应用的所有的MVC框架,都会提供一种呈现views的方式.Spring提供了view resolv ...

- Spring Boot 官方文档学习(一)入门及使用

个人说明:本文内容都是从为知笔记上复制过来的,样式难免走样,以后再修改吧.另外,本文可以看作官方文档的选择性的翻译(大部分),以及个人使用经验及问题. 其他说明:如果对Spring Boot没有概念, ...

随机推荐

- sql基础查询

2.1 指定使用中的资料库 一个资料库伺服器可以建立许多需要的资料库,所以在你执行任何资料库的操作前,通常要先指定使用的资料库.下列是指定资料库的指令: 如果你使用「MySQL Workbench」这 ...

- 从Windows 8 安装光盘安装.NET Framework 3.5.1

在安装一些应用时, 例如安装 Oracle, 可能会缺少了安装 .Net FrameWork 3.5.1 无法继续. 最简单的方法当时是,直接进 控制面板, 在添加删除程序内, 选择增加Windows ...

- scala伴生对象

package com.test.scala.test /** * 伴生对象指的是在类中建立一个object */ class AssociatedObject { private var count ...

- android 常用命令

1.查看当前手机界面的 Activity dumpsys | grep "mFocusedActivity" 查看任务栈 dumpsys | grep "Hist&q ...

- Android使用Application总结

对于application的使用,一般是 在Android源码中对他的描述是; * Base class for those who need to maintain global applicati ...

- webapi获取请求地址的IP

References required: HttpContextWrapper - System.Web.dll RemoteEndpointMessageProperty - System.Serv ...

- greenplum如何激活,同步,删除standby和恢复原始master

在Master失效时,同步程序会停止,Standby可以被在本机被激活,激活Standby时,同步日志被用来恢复Master最后一次事务成功提交时的状态.在激活Standby时还可以指定一个新的Sta ...

- Maven invalid task...

执行maven构建项目报错: Invalid task '‐DgroupId=*': you must specify a valid lifecycle phase, or a goal in th ...

- thinkphp和uploadfiy

上传页面 用的是bootstrap <div class="col-sm-6"> <div style="width: 200px; height: 1 ...

- Distinct<TSource>(IEqualityComparer<TSource> comparer) 根据列名来Distinct

1. DistinctEqualityComparer.cs public class DistinctEqualityComparer<T, V> : IEqualityComparer ...