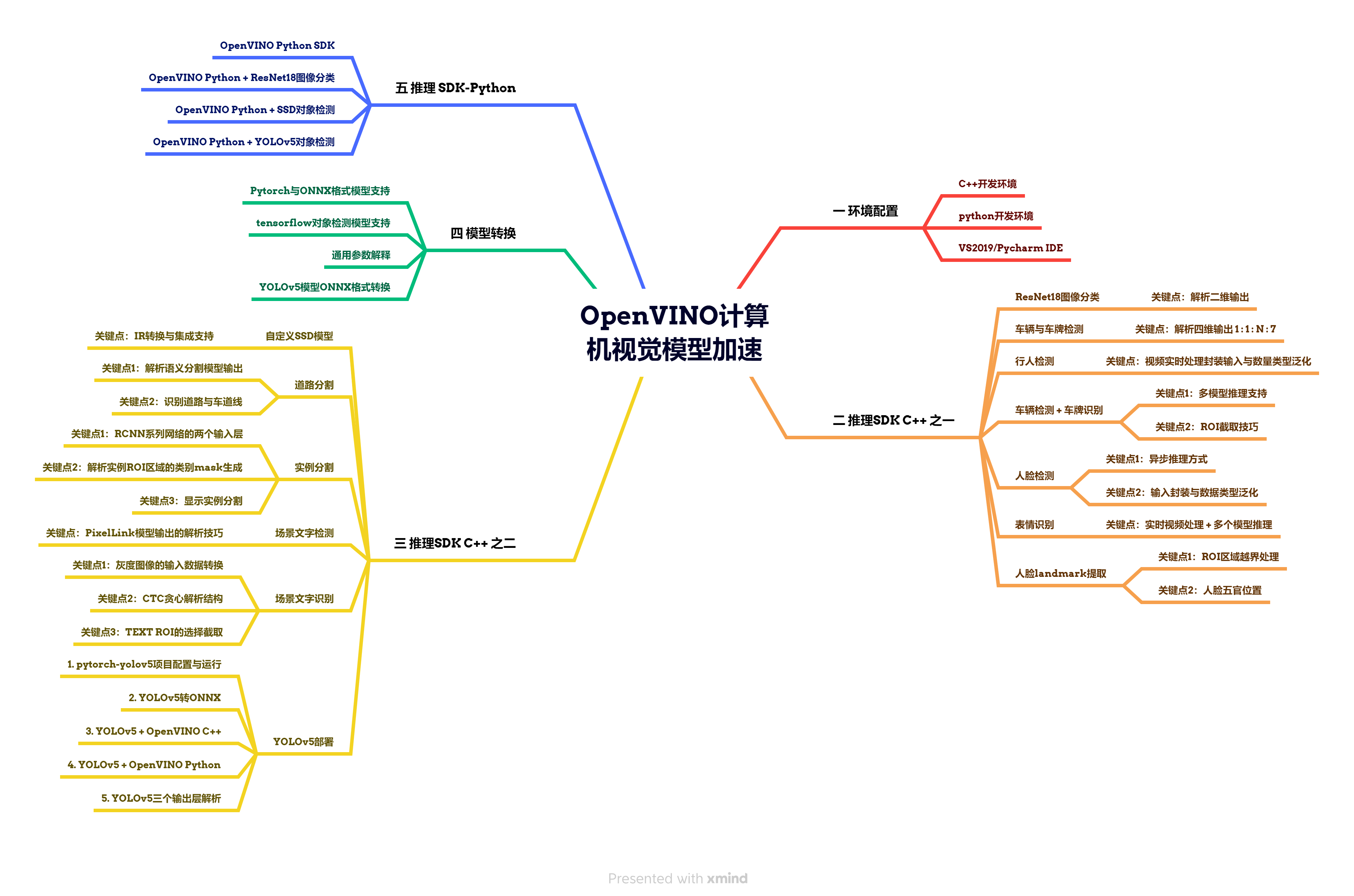

OpenVINO计算机视觉模型加速

OpenVINO计算机视觉模型加速

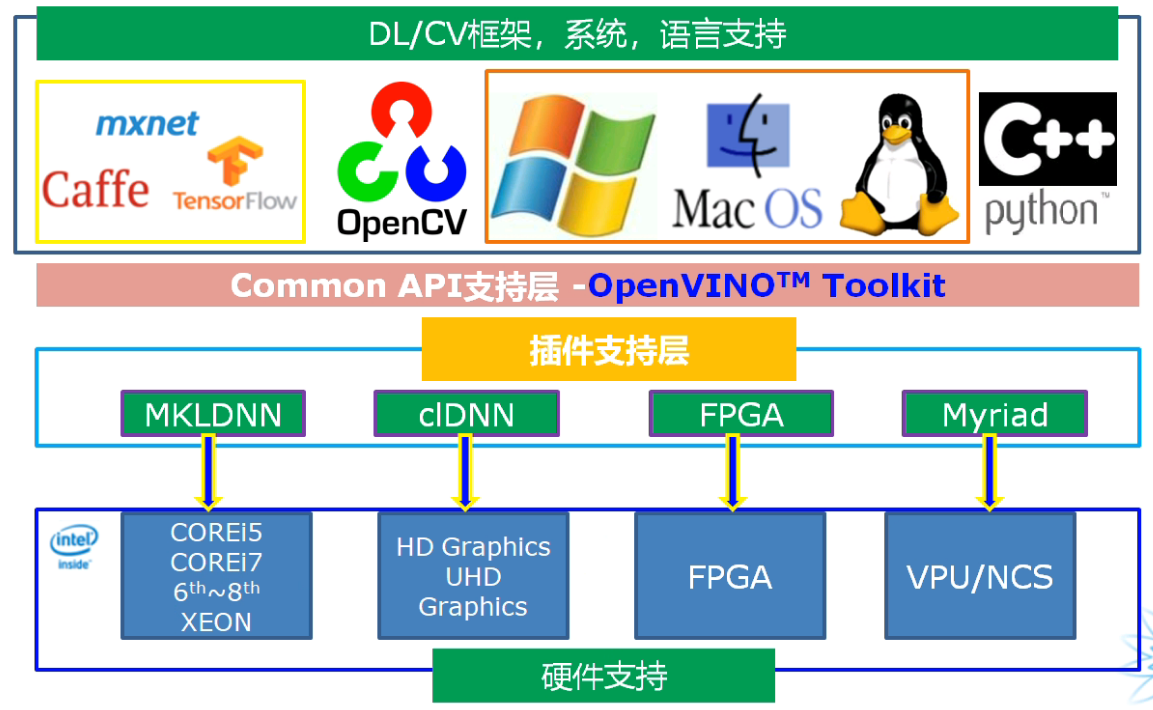

OpenVINO介绍

- 计算机视觉部署框架,支持多种边缘硬件平台

- Intel开发并开源使用的计算机视觉库

- 支持多个场景视觉任务场景的快速演示

四个主要模块:

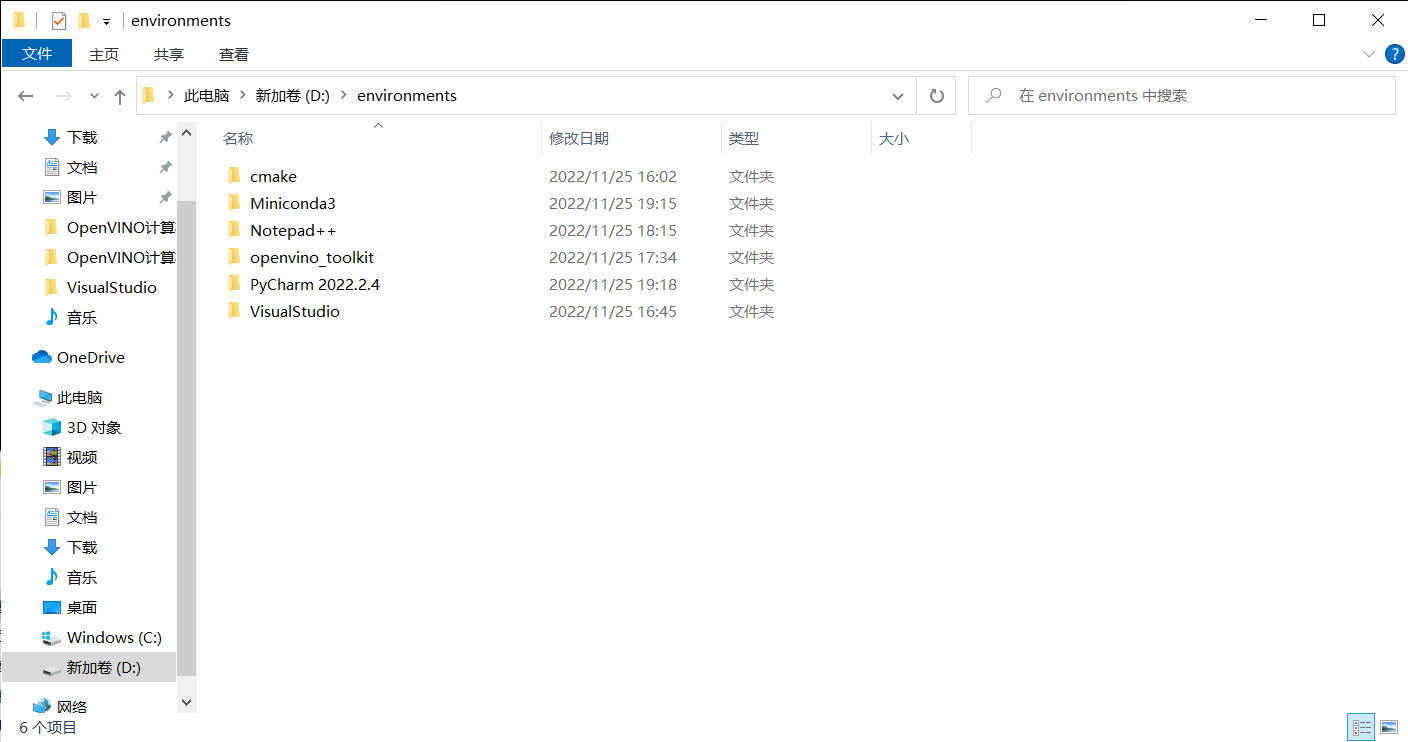

1、开发环境搭建

安装cmake、Miniconda3、Notepad++、PyCharm、VisualStudio 2019

注意:安装Miniconda3一定要设置其自动添加环境变量,需要添加5个环境变量,手动添加很可能会漏掉,排错困难

下载OpenVINO并安装:[Download Intel Distribution of OpenVINO Toolkit](https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit/download-previous-versions.html?operatingsystem=window&distributions=webdownload&version=2021 4.2 LTS&options=offline)

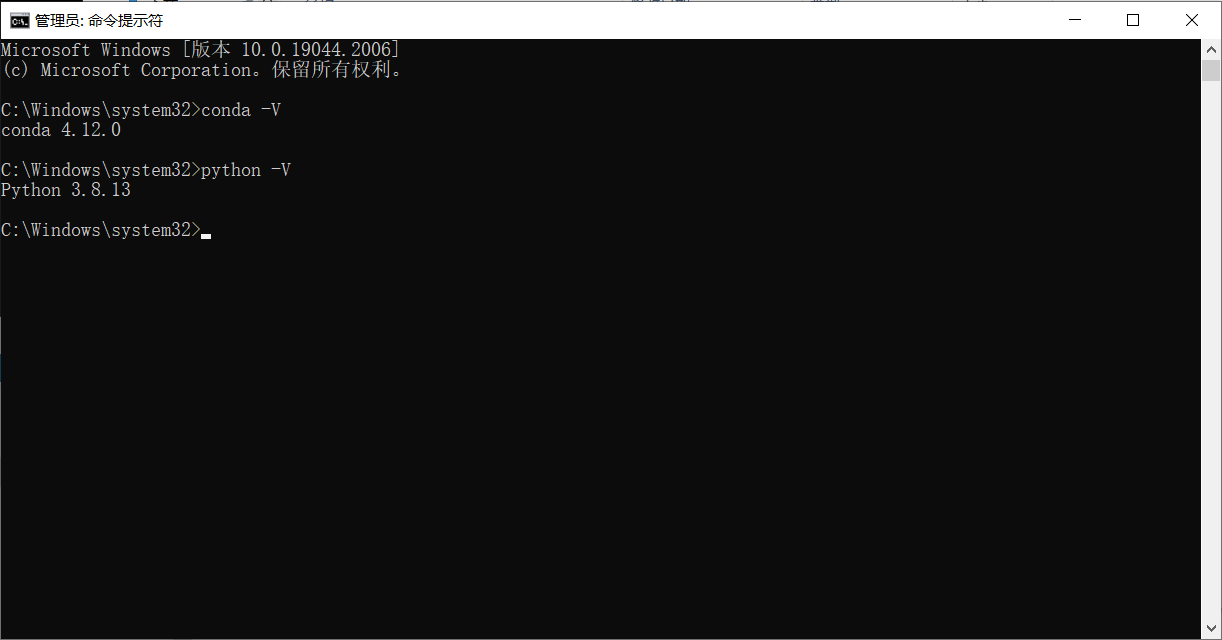

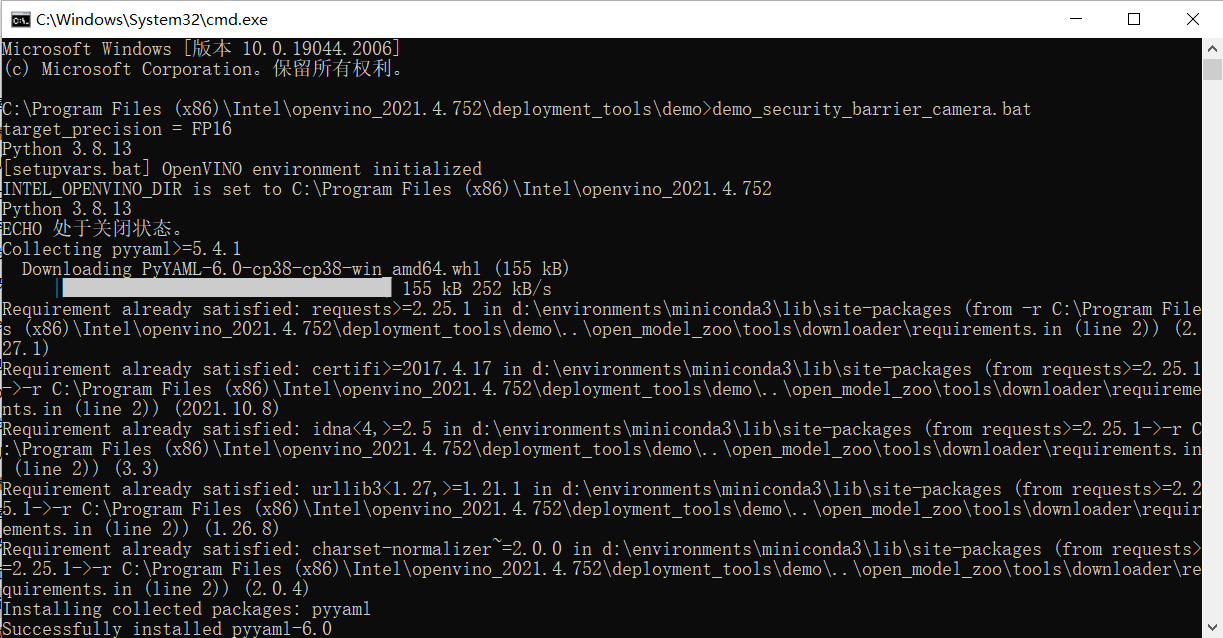

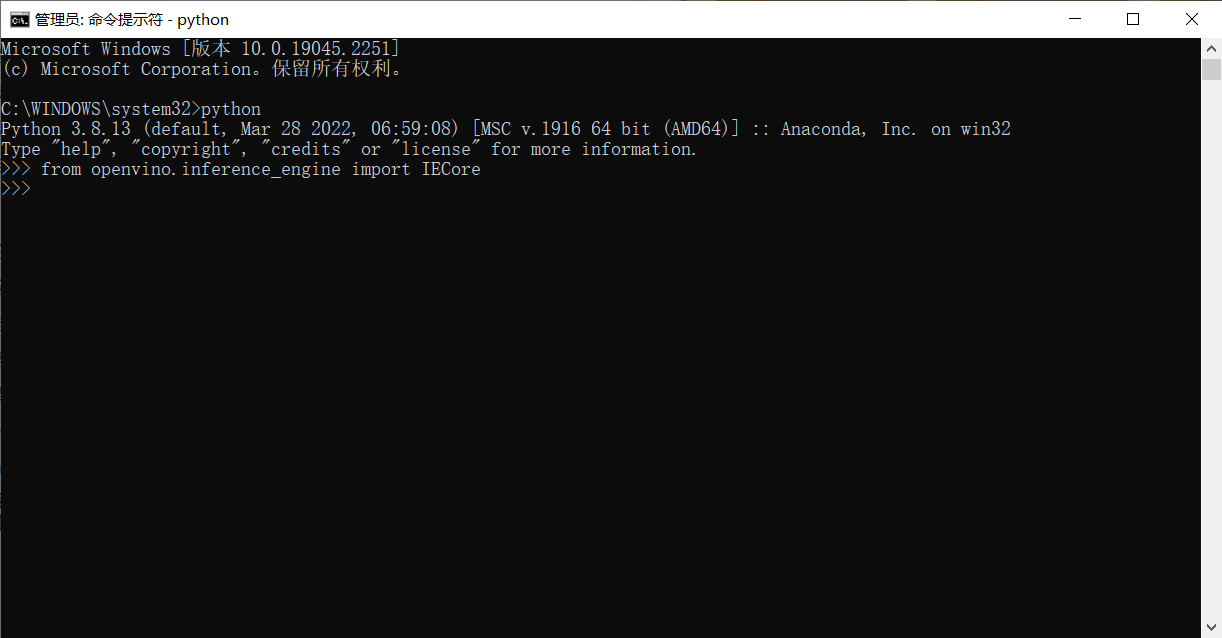

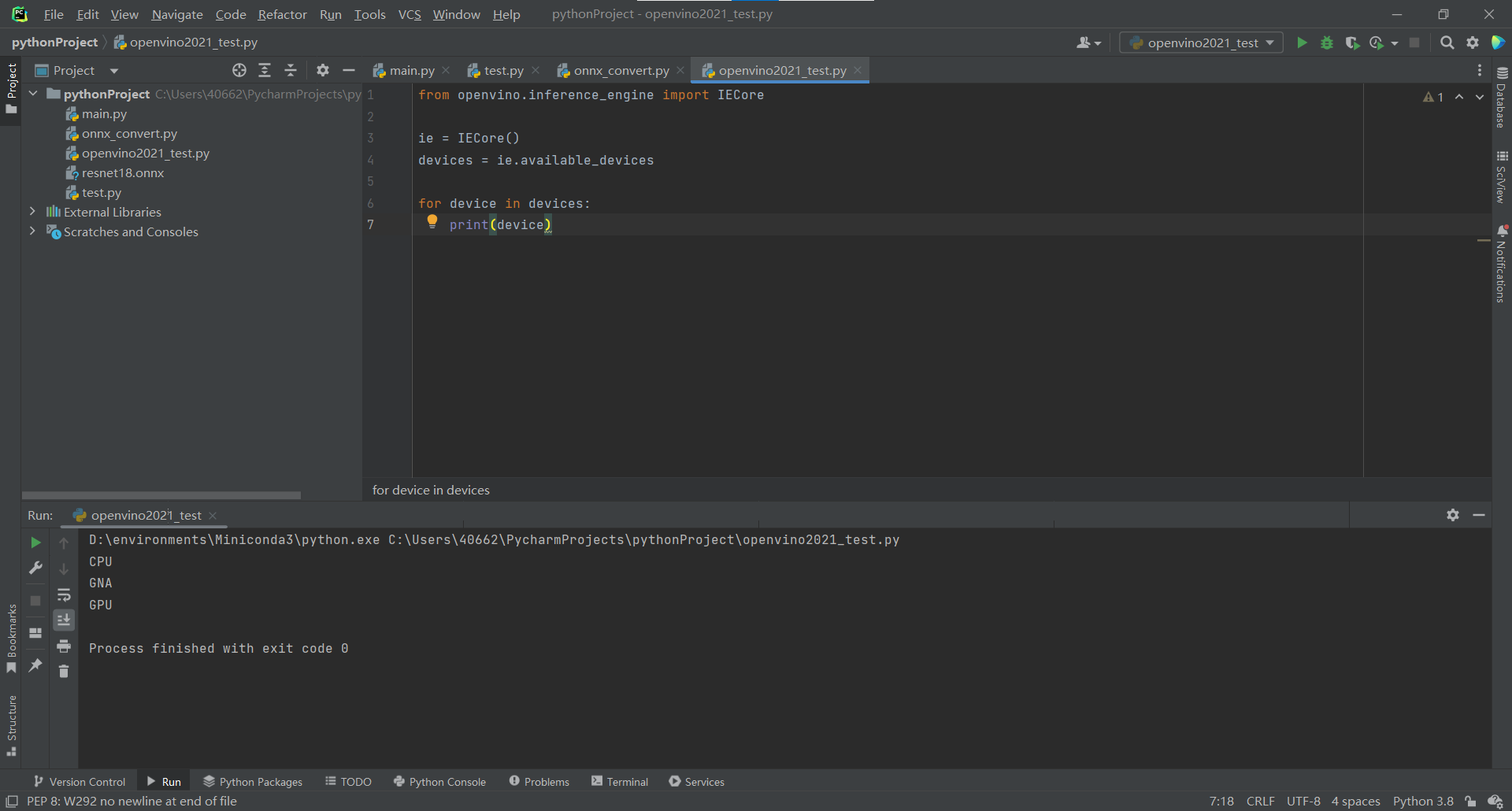

安装完毕后运行测试程序

出现下面运行结果代表安装配置成功

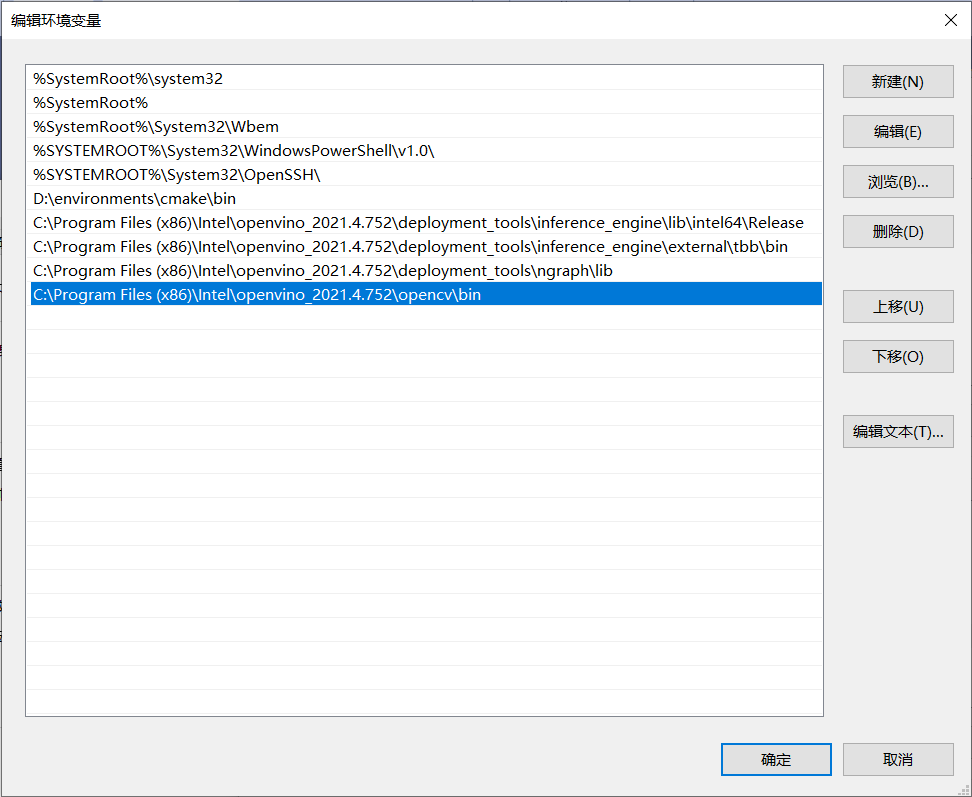

添加OpenVINO环境变量

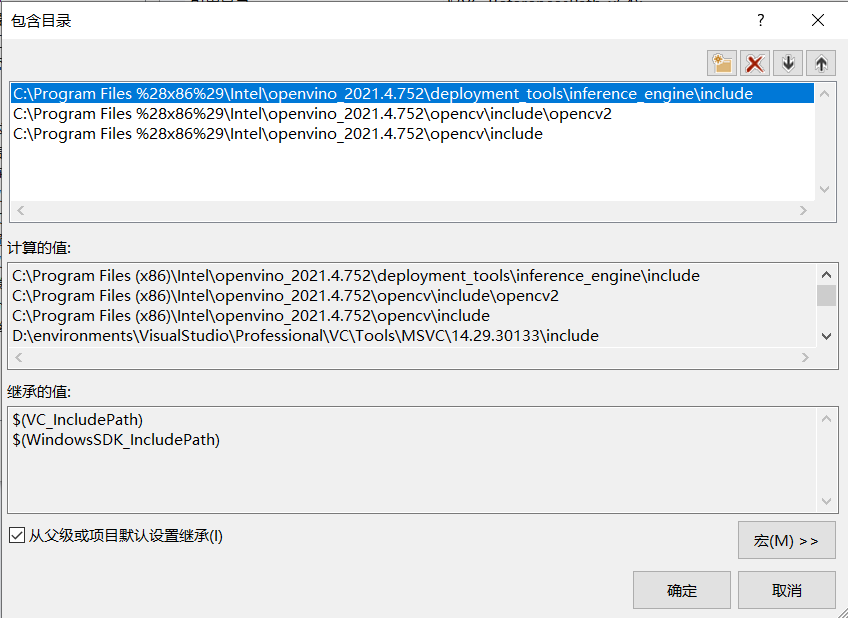

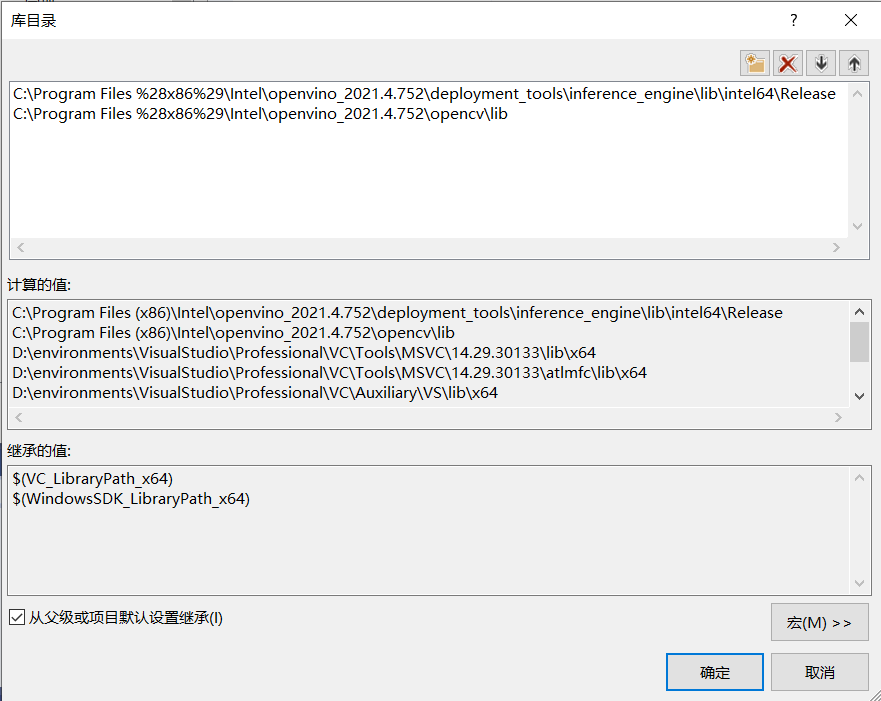

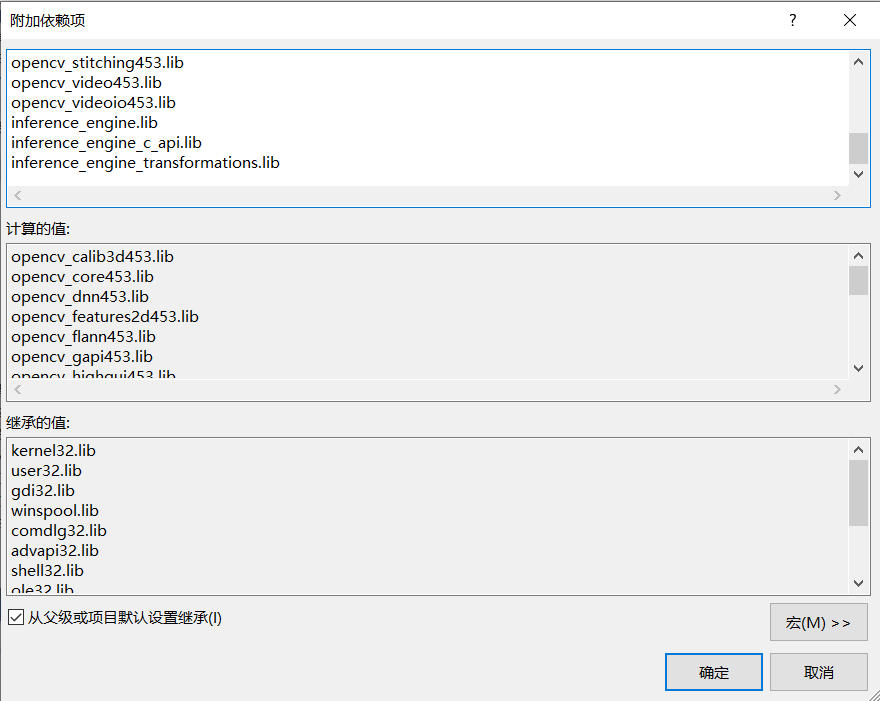

配置VisualStudio包含目录、库目录及附加依赖项

运行以下脚本自动获取附加依赖项

添加附加依赖项

至此,开发环境搭建完毕!

2、SDK介绍与开发流程

inference_engine.dll 推理引擎

依赖支持:inference_engine_transformations.dll, tbb.dll, tbbmalloc.dll, ngraph.dll

一定要把这些dll文件都添加到 C:/Windows/System32 中才可以正常运行OpenVINO程序

InferenceEngine相关API函数支持

- InferenceEngine::Core

- InferenceEngine::Blob, InferenceEngine::TBlob, InferenceEngine::NV12Blob

- InferenceEngine::BlobMap

- InferenceEngine::InputsDataMap, InferenceEngine::InputInfo

- InferenceEngine::OutputsDataMap

- InferenceEngine核心库的包装类

- InferenceEngine::CNNNetwork

- InferenceEngine::ExecutableNetwork

- InferenceEngine::InferRequest

代码实现

#include <inference_engine.hpp>

#include <iostream>

using namespace InferenceEngine;

int main(int argc, char** argv) {

InferenceEngine::Core ie; //使用推理引擎获取可用的设备及cpu全称

std::vector<std::string> devices = ie.GetAvailableDevices();

for (std::string name : devices) {

std::cout << "device name: " << name << std::endl;

}

std::string cpuName = ie.GetMetric("CPU", METRIC_KEY(FULL_DEVICE_NAME)).as<std::string>();

std::cout << "cpu full name: " << cpuName << std::endl;

return 0;

}

效果:

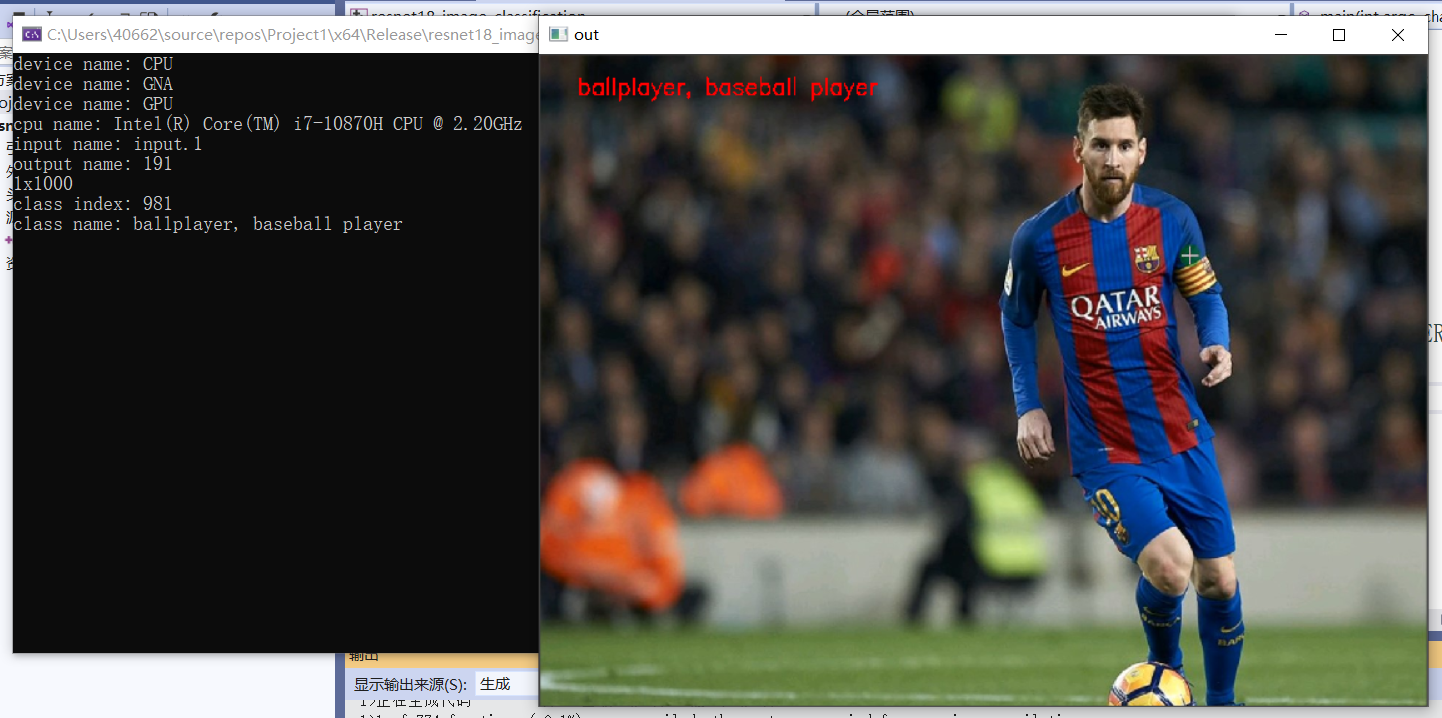

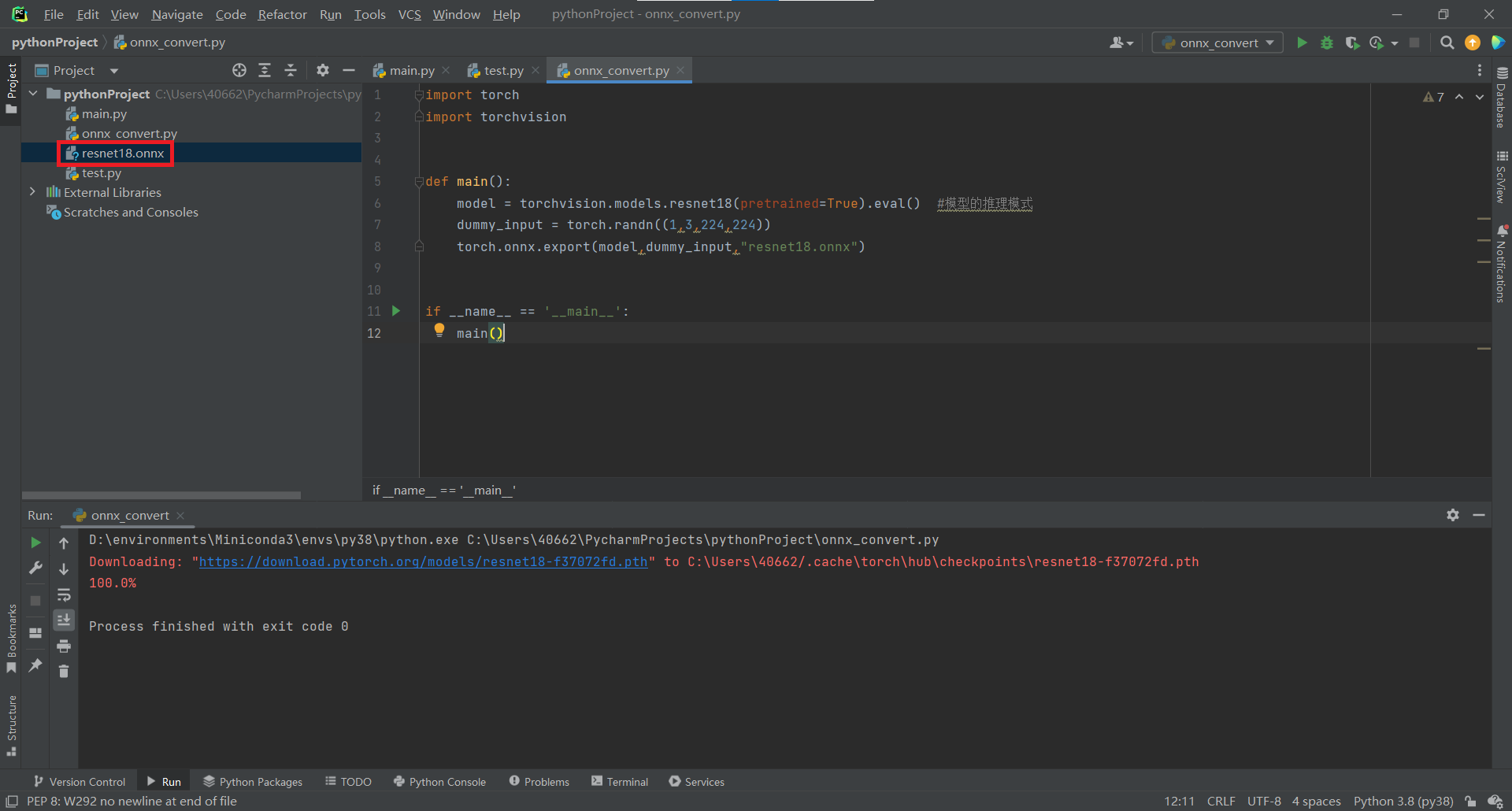

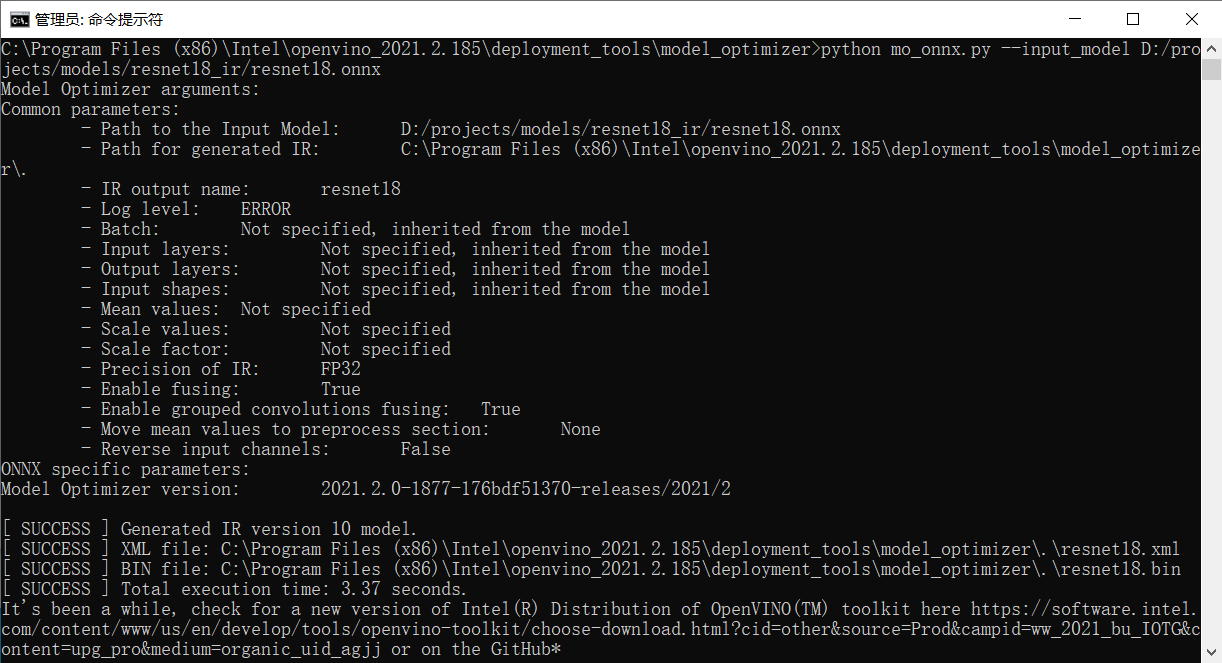

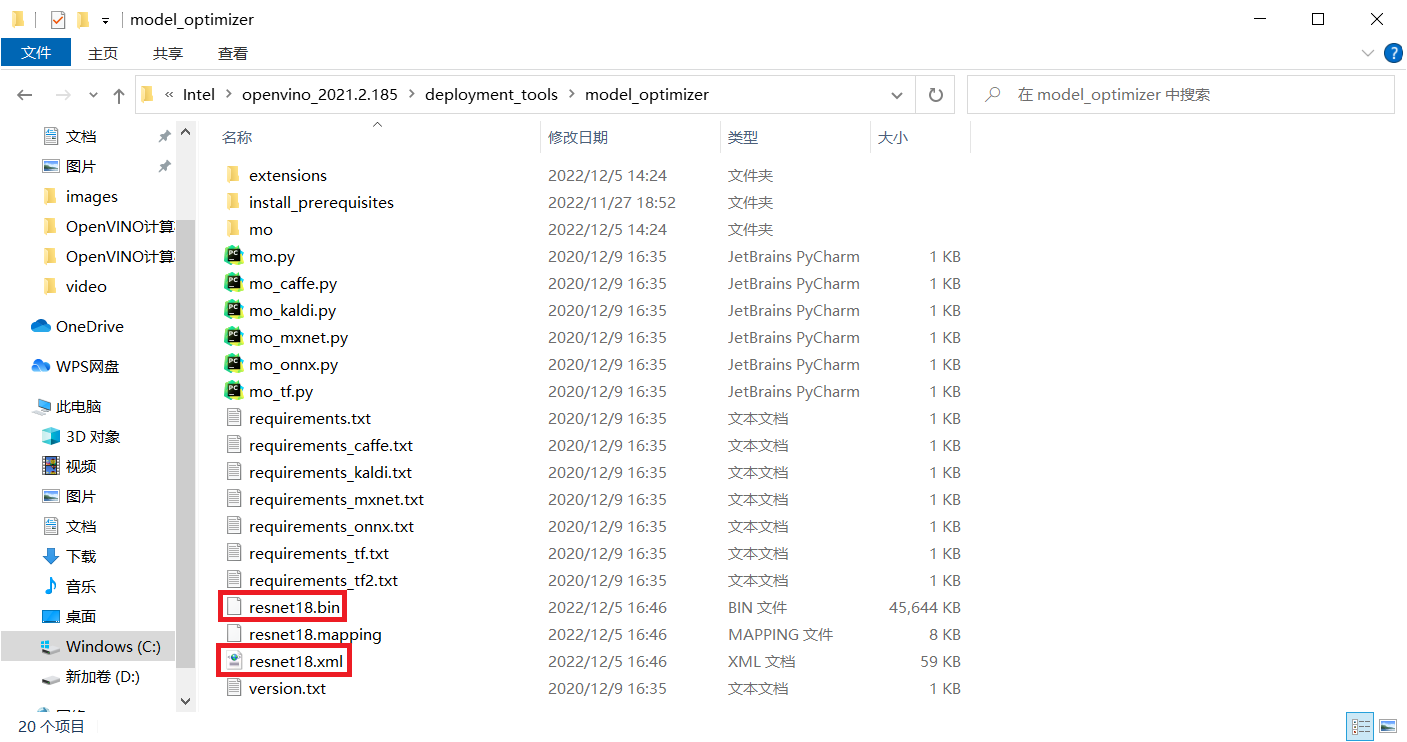

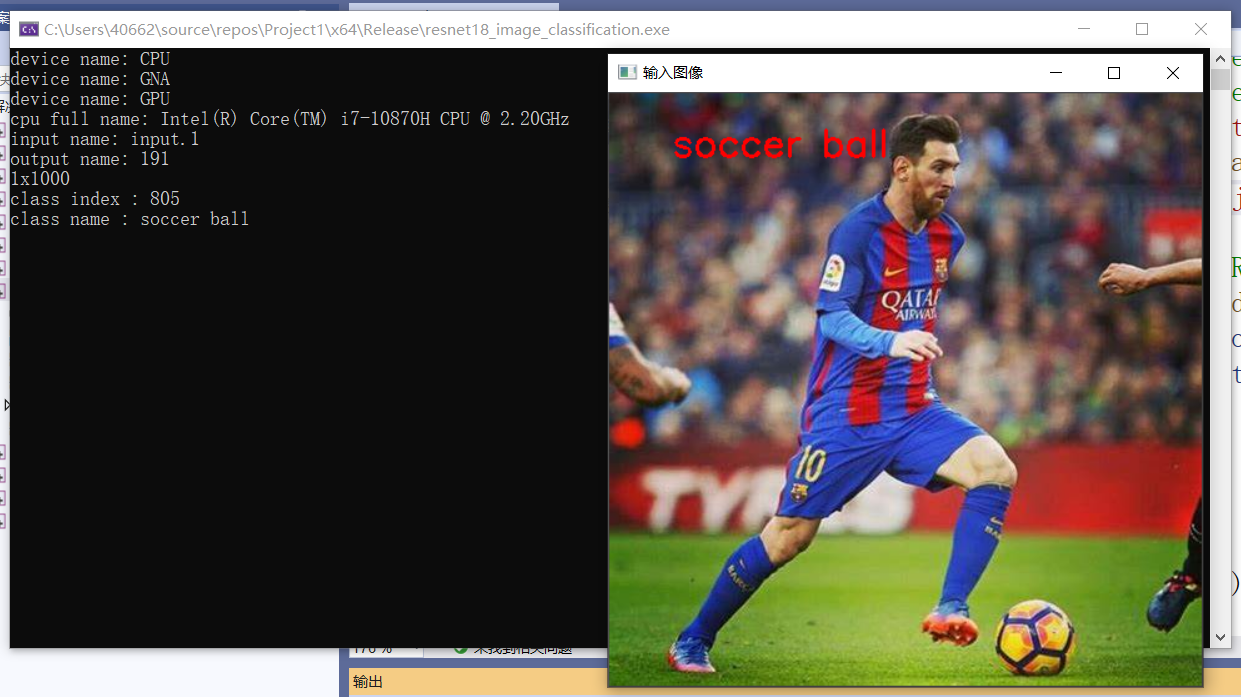

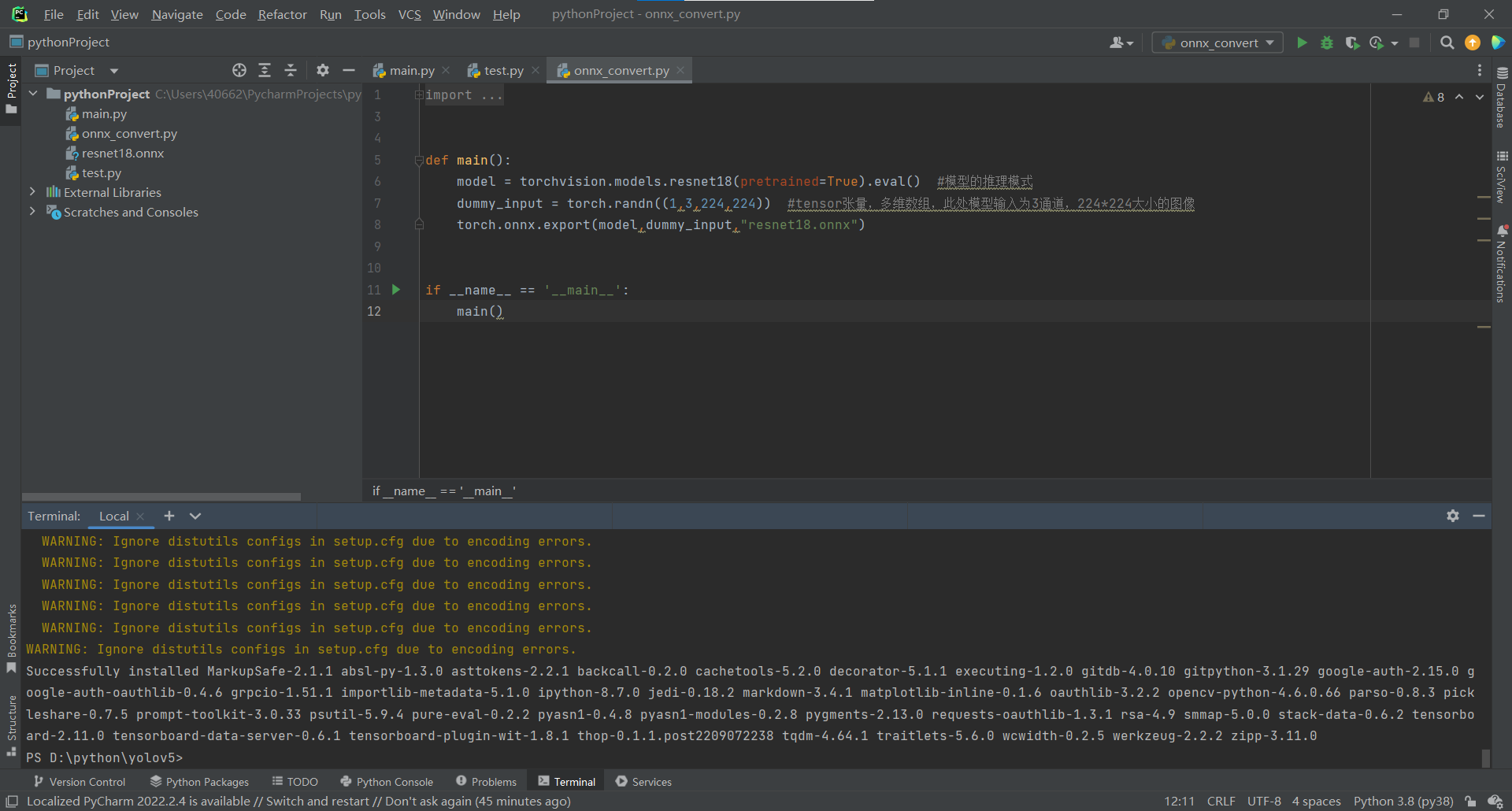

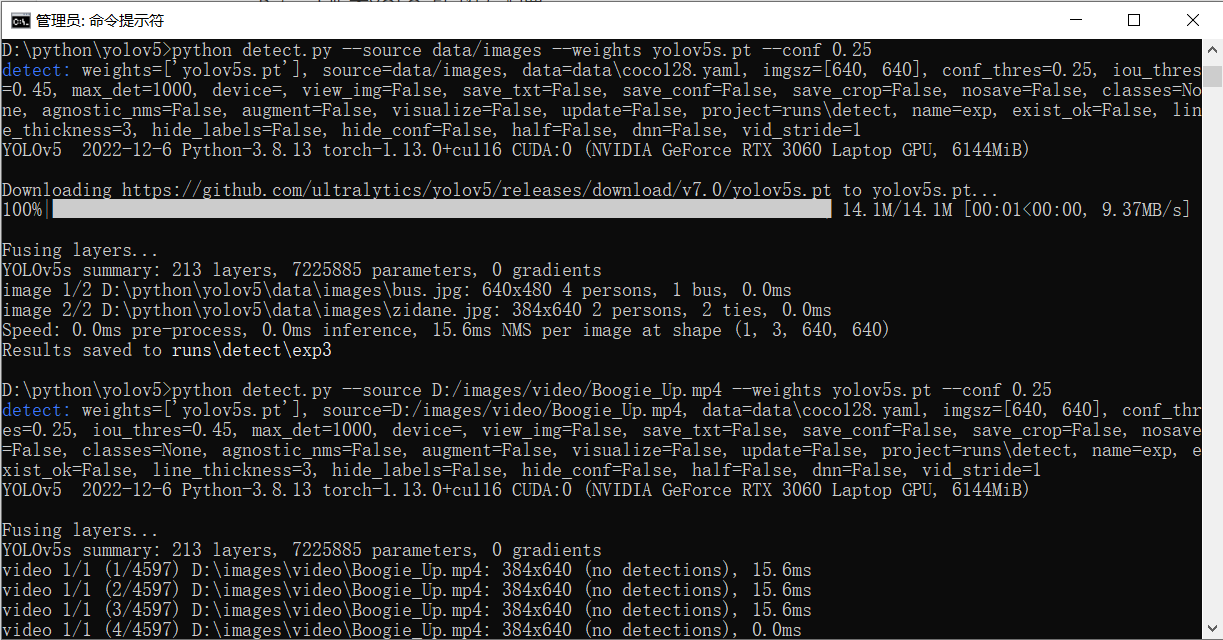

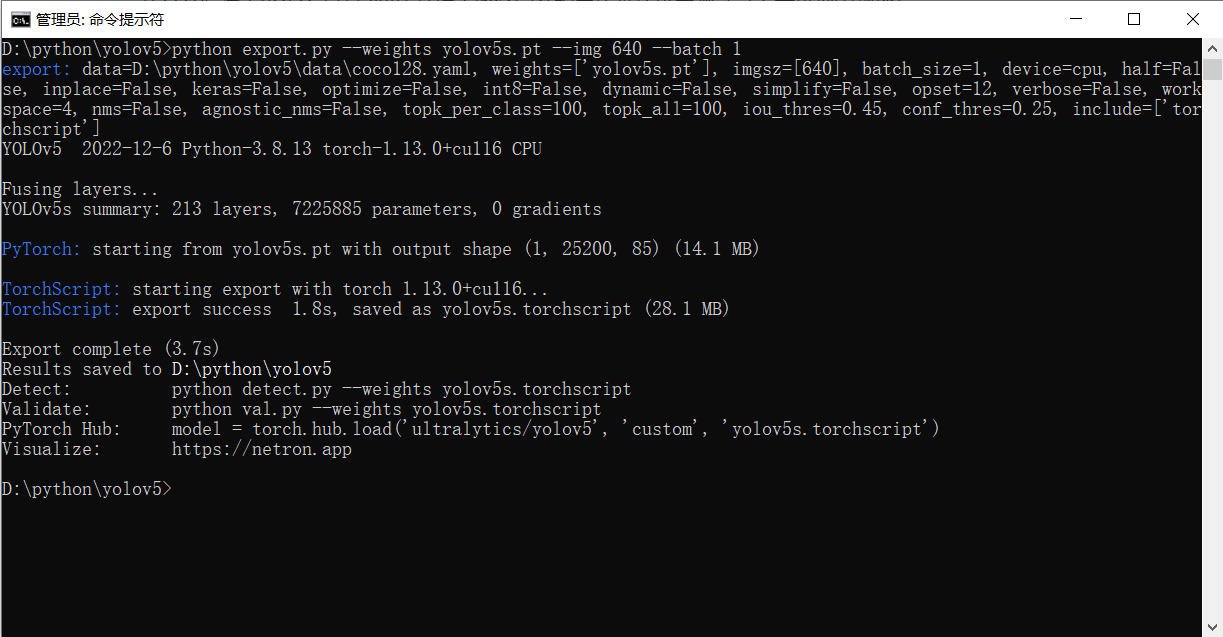

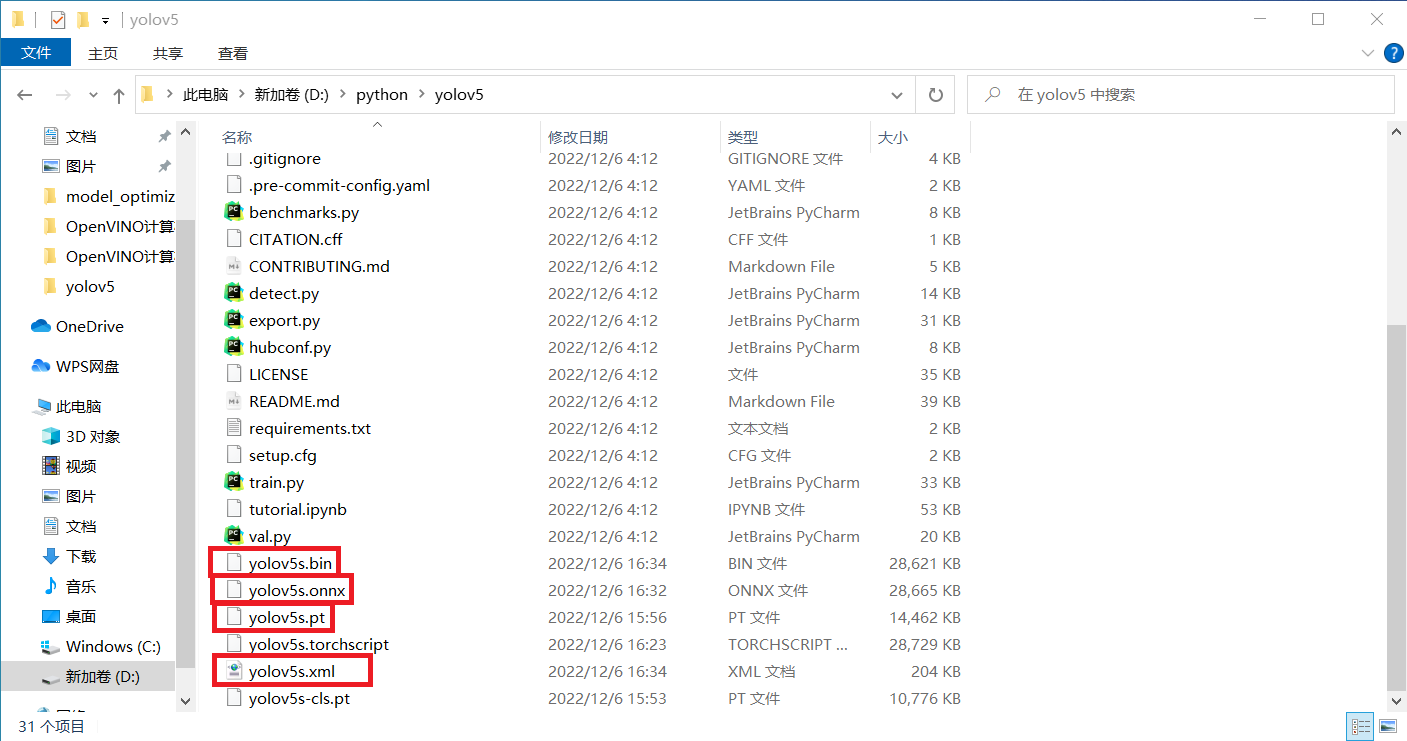

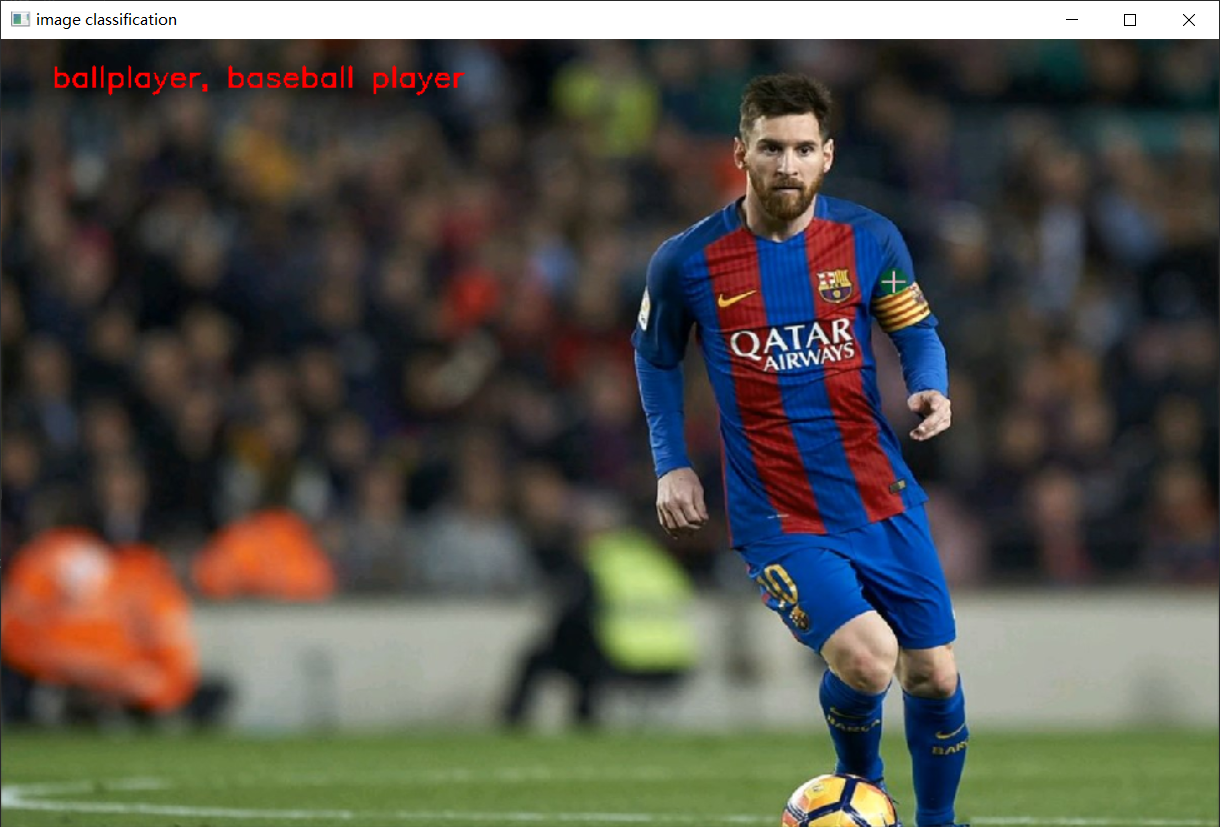

3、ResNet18实现图像分类

预训练模型介绍 - ResNet18

- 预处理图像

- mean = [0.485, 0.456, 0.406], std = [0.229, 0.224, 0.225],图像归一化后再减去均值,除以方差

- 输入:NCHW = 1 * 3 * 224 * 224 (num,channels,height,width)

- 输出格式:1 * 1000

代码实现整体步骤

- 初始化Core ie

- ie.ReadNetwork

- 获取输入与输出格式并设置精度

- 获取可执行网络并链接硬件

- auto executable_network = ie.LoadNetwork(network, "CPU");

- 创建推理请求

- auto infer_request = executable_network.CreateInferRequest();

- 设置输入数据 - 图像数据预处理

- 推理并解析输出

代码实现

#include <inference_engine.hpp>

#include <opencv2/opencv.hpp>

#include <fstream> //fstream文件读写操作,iostream为控制台操作

using namespace InferenceEngine;

std::string labels_txt_file = "D:/projects/models/resnet18_ir/imagenet_classes.txt";

std::vector<std::string> readClassNames();

int main(int argc, char** argv) {

InferenceEngine::Core ie;

std::vector<std::string> devices = ie.GetAvailableDevices();

for (std::string name : devices) {

std::cout << "device name: " << name << std::endl;

}

std::string cpuName = ie.GetMetric("CPU", METRIC_KEY(FULL_DEVICE_NAME)).as<std::string>();

std::cout << "cpu name: " << cpuName << std::endl;

std::string xml = "D:/projects/models/resnet18_ir/resnet18.xml";

std::string bin = "D:/projects/models/resnet18_ir/resnet18.bin";

std::vector<std::string> labels = readClassNames(); //读取标签

cv::Mat src = cv::imread("D:/images/messi.jpg"); //读取图像

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取resnet18网络

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string input_name = "";

for (auto item : inputs) { //auto可以自动推断变量类型

input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

input_data->setPrecision(Precision::FP32);

input_data->setLayout(Layout::NCHW);

input_data->getPreProcess().setColorFormat(ColorFormat::RGB);

std::cout << "input name: " << input_name << std::endl;

}

std::string output_name = "";

for (auto item : outputs) { //auto可以自动推断变量类型

output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32);

//注意:output_data不要设置结构

std::cout << "output name: " << output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

auto infer_request = executable_network.CreateInferRequest(); //设置推理请求

//图像预处理

auto input = infer_request.GetBlob(input_name); //获取网络输入图像信息

size_t num_channels = input->getTensorDesc().getDims()[1]; //size_t 类型表示C中任何对象所能达到的最大长度,它是无符号整数

size_t h = input->getTensorDesc().getDims()[2];

size_t w = input->getTensorDesc().getDims()[3];

size_t image_size = h * w;

cv::Mat blob_image;

cv::resize(src, blob_image, cv::Size(w, h)); //将输入图片大小转换为与网络输入大小一致

blob_image.convertTo(blob_image, CV_32F); //将输入图像转换为浮点数

blob_image = blob_image / 255.0;

cv::subtract(blob_image, cv::Scalar(0.485, 0.456, 0.406), blob_image);

cv::divide(blob_image, cv::Scalar(0.229, 0.224, 0.225), blob_image);

// HWC =》NCHW 将输入图像从HWC格式转换为NCHW格式

float* data = static_cast<float*>(input->buffer()); //将图像放到buffer中,放入input中

for (size_t row = 0; row < h; row++) {

for (size_t col = 0; col < w; col++) {

for (size_t ch = 0; ch < num_channels; ch++) {

//将每个通道变成一张图,按照通道顺序

data[image_size * ch + row * w + col] = blob_image.at<cv::Vec3f>(row, col)[ch];

}

}

}

infer_request.Infer();

auto output = infer_request.GetBlob(output_name);

//转换输出数据

const float* probs = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

std::cout << outputDims[0] << "x" << outputDims[1] << std::endl;

float max = probs[0];

int max_index = 0;

for (int i = 1; i < outputDims[1]; i++) {

if (max < probs[i]) { //找到结果probs中的最大值,获取其下标

max = probs[i];

max_index = i;

}

}

std::cout << "class index: " << max_index << std::endl;

std::cout << "class name: " << labels[max_index] << std::endl;

cv::putText(src, labels[max_index], cv::Point(50, 50), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

cv::namedWindow("out", cv::WINDOW_FREERATIO);

cv::imshow("out", src);

cv::waitKey(0);

return 0;

}

std::vector<std::string> readClassNames() { //读取文件

std::vector<std::string> classNames;

std::ifstream fp(labels_txt_file);

if (!fp.is_open()) {

printf("could not open file...\n");

exit(-1);

}

std::string name;

while (!fp.eof()) { //eof()函数判断是否读到文件末尾

std::getline(fp, name); //逐行读取文件并保存在变量中

if (name.length()) {

classNames.push_back(name);

}

}

fp.close();

return classNames;

}

效果:

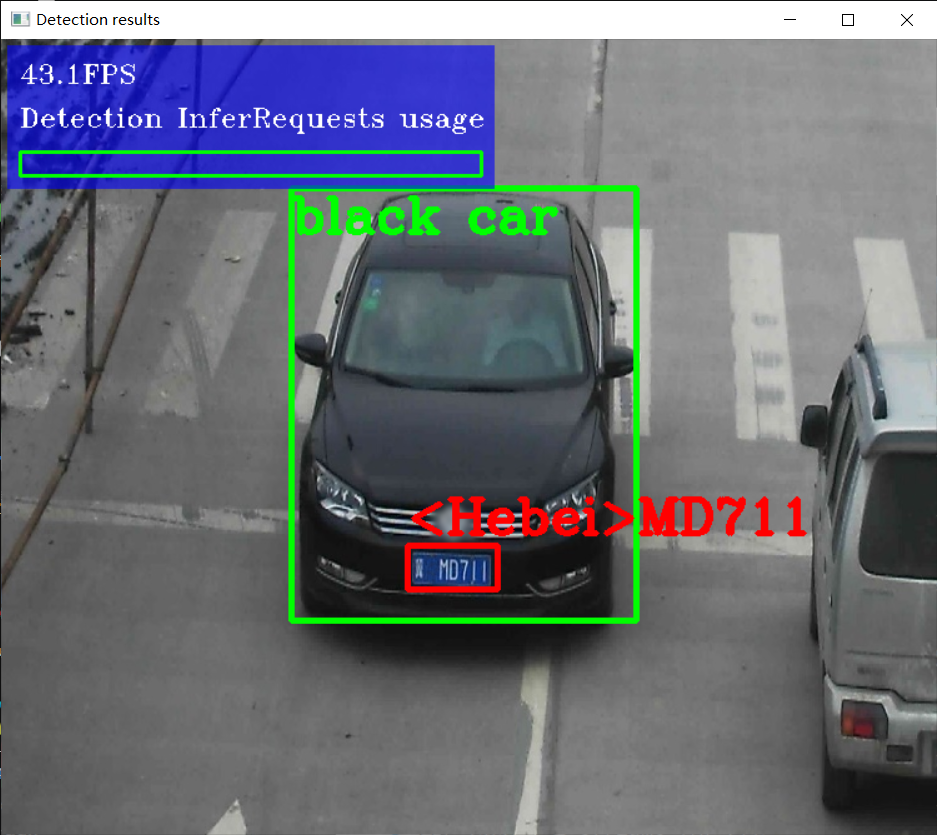

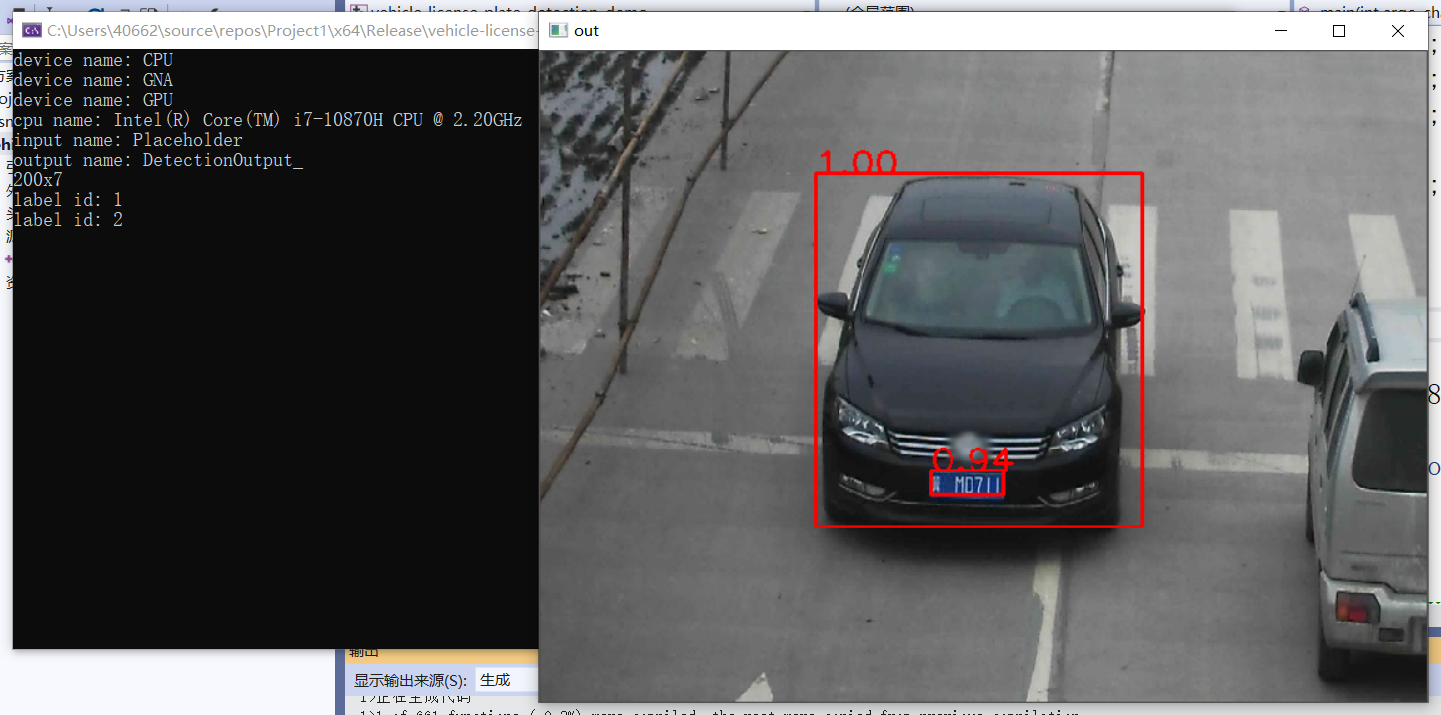

4、车辆检测与车牌识别

模型介绍

- vehicle - license - plate - detection - varrier - 0106

- 基于BIT-Vehicle数据集

- 输入 1 * 3 * 300 * 300 = NCHW

- 输出格式:[1, 1, N, 7]

- 七个值:[image_id, label, conf, x_min, y_min, x_max, y_max]

调用流程

- 加载模型

- 设置输入输出

- 构建输入

- 执行推断

- 解析输出

- 显示结果

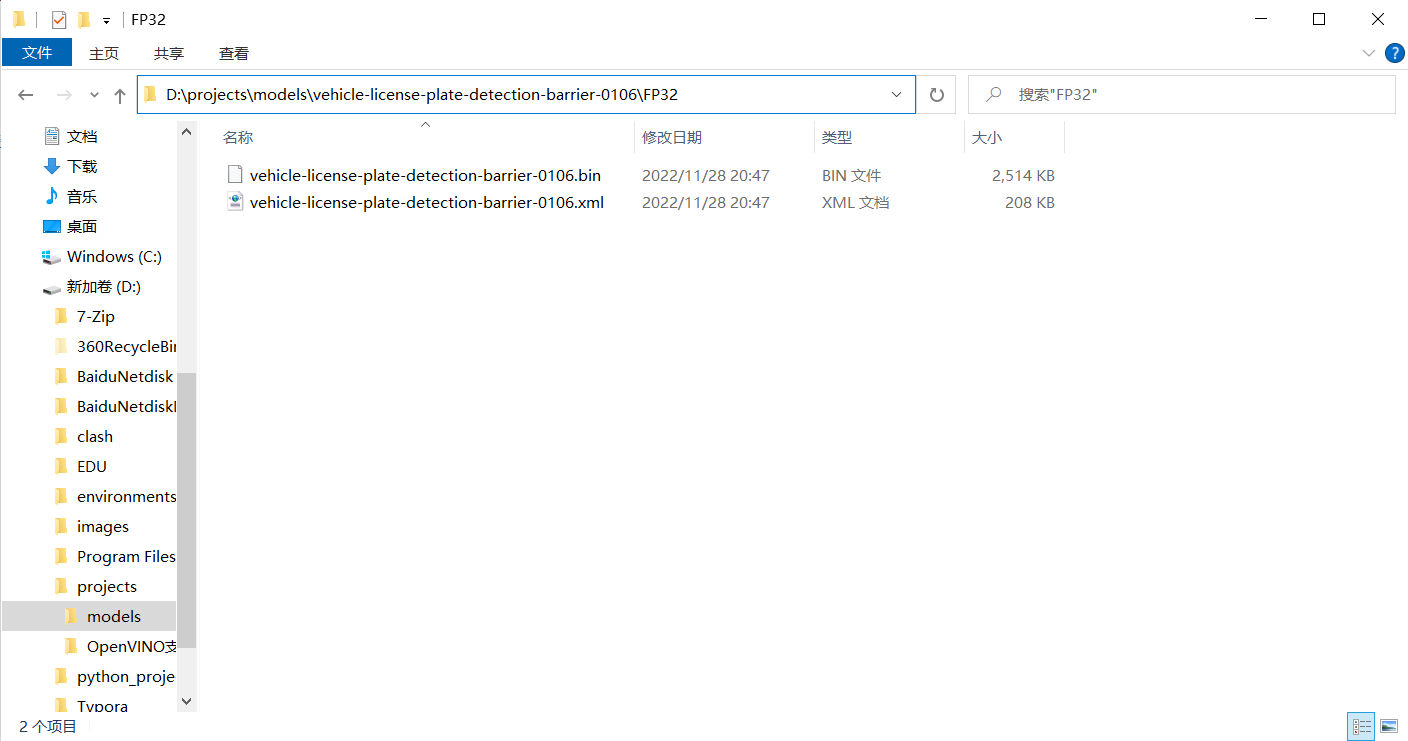

车辆及车牌检测模型下载

cd C:\Program Files (x86)\Intel\openvino_2021.2.185\deployment_tools\open_model_zoo\tools\downloader #以管理员身份运行cmd,切换到downloader文件夹下

python downloader.py --name vehicle-license-plate-detection-barrier-0106 #在该文件夹下执行该脚本,下载模型

出现下图代表下载成功:

将下载的模型文件移动到模型文件夹中:

车辆及车牌检测代码实现

#include <inference_engine.hpp>

#include <opencv2/opencv.hpp>

#include <fstream> //fstream文件读写操作,iostream为控制台操作

using namespace InferenceEngine;

int main(int argc, char** argv) {

InferenceEngine::Core ie;

std::vector<std::string> devices = ie.GetAvailableDevices();

for (std::string name : devices) {

std::cout << "device name: " << name << std::endl;

}

std::string cpuName = ie.GetMetric("CPU", METRIC_KEY(FULL_DEVICE_NAME)).as<std::string>();

std::cout << "cpu name: " << cpuName << std::endl;

std::string xml = "D:/projects/models/vehicle-license-plate-detection-barrier-0106/FP32/vehicle-license-plate-detection-barrier-0106.xml";

std::string bin = "D:/projects/models/vehicle-license-plate-detection-barrier-0106/FP32/vehicle-license-plate-detection-barrier-0106.bin";

cv::Mat src = cv::imread("D:/images/car_1.bmp"); //读取图像

int im_h = src.rows;

int im_w = src.cols;

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取resnet18网络

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string input_name = "";

for (auto item : inputs) { //auto可以自动推断变量类型

input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8

input_data->setLayout(Layout::NCHW);

//input_data->getPreProcess().setColorFormat(ColorFormat::BGR); 默认就是BGR

std::cout << "input name: " << input_name << std::endl;

}

std::string output_name = "";

for (auto item : outputs) { //auto可以自动推断变量类型

output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

//注意:output_data不要设置结构

std::cout << "output name: " << output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

auto infer_request = executable_network.CreateInferRequest(); //设置推理请求

//图像预处理

auto input = infer_request.GetBlob(input_name); //获取网络输入图像信息

size_t num_channels = input->getTensorDesc().getDims()[1]; //size_t 类型表示C中任何对象所能达到的最大长度,它是无符号整数

size_t h = input->getTensorDesc().getDims()[2];

size_t w = input->getTensorDesc().getDims()[3];

size_t image_size = h * w;

cv::Mat blob_image;

cv::resize(src, blob_image, cv::Size(w, h)); //将输入图片大小转换为与网络输入大小一致

//cv::cvtColor(blob_image, blob_image, cv::COLOR_BGR2RGB); //色彩空间转换

// HWC =》NCHW 将输入图像从HWC格式转换为NCHW格式

unsigned char* data = static_cast<unsigned char*>(input->buffer()); //将图像放到buffer中,放入input中

for (size_t row = 0; row < h; row++) {

for (size_t col = 0; col < w; col++) {

for (size_t ch = 0; ch < num_channels; ch++) {

//将每个通道变成一张图,按照通道顺序

data[image_size * ch + row * w + col] = blob_image.at<cv::Vec3b>(row, col)[ch];

}

}

}

infer_request.Infer();

auto output = infer_request.GetBlob(output_name);

//转换输出数据

const float* detection_out = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

//output:[1, 1, N, 7]

//七个参数为:[image_id, label, conf, x_min, y_min, x_max, y_max]

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

std::cout << outputDims[2] << "x" << outputDims[3] << std::endl;

const int max_count = outputDims[2]; //识别出的对象个数

const int object_size = outputDims[3]; //获取对象信息的个数,此处为7个

for (int n = 0; n < max_count; n++) {

float label = detection_out[n * object_size + 1];

float confidence = detection_out[n * object_size + 2];

float xmin = detection_out[n * object_size + 3] * im_w;

float ymin = detection_out[n * object_size + 4] * im_h;

float xmax = detection_out[n * object_size + 5] * im_w;

float ymax = detection_out[n * object_size + 6] * im_h;

if (confidence > 0.5) {

printf("label id: %d \n", static_cast<int>(label));

cv::Rect box;

box.x = static_cast<int>(xmin);

box.y = static_cast<int>(ymin);

box.width = static_cast<int>(xmax - xmin);

box.height = static_cast<int>(ymax - ymin);

cv::rectangle(src, box, cv::Scalar(0, 0, 255), 2, 8);

//box.tl()返回矩形左上角坐标

cv::putText(src, cv::format("%.2f", confidence), box.tl(), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

}

}

//cv::putText(src, labels[max_index], cv::Point(50, 50), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

cv::namedWindow("out", cv::WINDOW_FREERATIO);

cv::imshow("out", src);

cv::waitKey(0);

return 0;

}

效果:

车牌识别

- 模型名称:license-plate-recognition-barrier-0001

- 输入格式:BGR

- 1 * 3 * 24 * 94,88 * 1 = [0, 1, 1, 1, 1, ...... , 1]

- 输出格式:1 * 88 * 1 * 1

下载模型(license-plate-recognition-barrier-0001),下载方法同上,实现思路:1初始化车牌识别网络,提升输入输出值的应用范围;2调用车辆及车牌检测模型进行车牌检测;3将车牌检测的数据输入车牌识别函数,使用车牌识别网络初始化的输入输出值在该函数中进行识别,输出识别到的车牌信息。

车牌识别代码实现

#include <opencv2/opencv.hpp>

#include <inference_engine.hpp>

#include <fstream>

using namespace InferenceEngine;

static std::vector<std::string> items = {

"0","1","2","3","4","5","6","7","8","9",

"< Anhui >","< Beijing >","< Chongqing >","< Fujian >",

"< Gansu >","< Guangdong >","< Guangxi >","< Guizhou >",

"< Hainan >","< Hebei >","< Heilongjiang >","< Henan >",

"< HongKong >","< Hubei >","< Hunan >","< InnerMongolia >",

"< Jiangsu >","< Jiangxi >","< Jilin >","< Liaoning >",

"< Macau >","< Ningxia >","< Qinghai >","< Shaanxi >",

"< Shandong >","< Shanghai >","< Shanxi >","< Sichuan >",

"< Tianjin >","< Tibet >","< Xinjiang >","< Yunnan >",

"< Zhejiang >","< police >",

"A","B","C","D","E","F","G","H","I","J",

"K","L","M","N","O","P","Q","R","S","T",

"U","V","W","X","Y","Z"

};

InferenceEngine::InferRequest plate_request;

std::string plate_input_name1;

std::string plate_input_name2;

std::string plate_output_name;

void load_plate_recog_model();

void fetch_plate_text(cv::Mat &image, cv::Mat &plateROI);

int main(int argc, char** argv) {

InferenceEngine::Core ie;

load_plate_recog_model(); //调用车牌识别模型,模型信息保存到plate_input_name1/name2/output_name中

std::string xml = "D:/projects/models/vehicle-license-plate-detection-barrier-0106/FP32/vehicle-license-plate-detection-barrier-0106.xml";

std::string bin = "D:/projects/models/vehicle-license-plate-detection-barrier-0106/FP32/vehicle-license-plate-detection-barrier-0106.bin";

cv::Mat src = cv::imread("D:/images/car_1.bmp"); //读取图像

int im_h = src.rows;

int im_w = src.cols;

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取resnet18网络

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string input_name = "";

for (auto item : inputs) { //auto可以自动推断变量类型

input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8

input_data->setLayout(Layout::NCHW);

//input_data->getPreProcess().setColorFormat(ColorFormat::BGR); 默认就是BGR

std::cout << "input name: " << input_name << std::endl;

}

std::string output_name = "";

for (auto item : outputs) { //auto可以自动推断变量类型

output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

//注意:output_data不要设置结构

std::cout << "output name: " << output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

auto infer_request = executable_network.CreateInferRequest(); //设置推理请求

//图像预处理

auto input = infer_request.GetBlob(input_name); //获取网络输入图像信息

size_t num_channels = input->getTensorDesc().getDims()[1]; //size_t 类型表示C中任何对象所能达到的最大长度,它是无符号整数

size_t h = input->getTensorDesc().getDims()[2];

size_t w = input->getTensorDesc().getDims()[3];

size_t image_size = h * w;

cv::Mat blob_image;

cv::resize(src, blob_image, cv::Size(w, h)); //将输入图片大小转换为与网络输入大小一致

//cv::cvtColor(blob_image, blob_image, cv::COLOR_BGR2RGB); //色彩空间转换

// HWC =》NCHW 将输入图像从HWC格式转换为NCHW格式

unsigned char* data = static_cast<unsigned char*>(input->buffer()); //将图像放到buffer中,放入input中

for (size_t row = 0; row < h; row++) {

for (size_t col = 0; col < w; col++) {

for (size_t ch = 0; ch < num_channels; ch++) {

//将每个通道变成一张图,按照通道顺序

data[image_size * ch + row * w + col] = blob_image.at<cv::Vec3b>(row, col)[ch];

}

}

}

infer_request.Infer();

auto output = infer_request.GetBlob(output_name);

//转换输出数据

const float* detection_out = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

//output:[1, 1, N, 7]

//七个参数为:[image_id, label, conf, x_min, y_min, x_max, y_max]

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

std::cout << outputDims[2] << "x" << outputDims[3] << std::endl;

const int max_count = outputDims[2]; //识别出的对象个数

const int object_size = outputDims[3]; //获取对象信息的个数,此处为7个

for (int n = 0; n < max_count; n++) {

float label = detection_out[n * object_size + 1];

float confidence = detection_out[n * object_size + 2];

float xmin = detection_out[n * object_size + 3] * im_w;

float ymin = detection_out[n * object_size + 4] * im_h;

float xmax = detection_out[n * object_size + 5] * im_w;

float ymax = detection_out[n * object_size + 6] * im_h;

if (confidence > 0.5) {

printf("label id: %d \n", static_cast<int>(label));

cv::Rect box;

box.x = static_cast<int>(xmin);

box.y = static_cast<int>(ymin);

box.width = static_cast<int>(xmax - xmin);

box.height = static_cast<int>(ymax - ymin);

if (label == 2) { //将车牌用绿色表示

cv::rectangle(src, box, cv::Scalar(0, 255, 0), 2, 8);

//recognize plate

cv::Rect plate_roi;

plate_roi.x = box.x - 5;

plate_roi.y = box.y - 5;

plate_roi.width = box.width + 10;

plate_roi.height = box.height + 10;

cv::Mat roi = src(plate_roi); //需要先初始化Mat&,才能使用

//调用车牌识别方法

fetch_plate_text(src, roi);

}

else {

cv::rectangle(src, box, cv::Scalar(0, 0, 255), 2, 8);

}

//box.tl()返回矩形左上角坐标

cv::putText(src, cv::format("%.2f", confidence), box.tl(), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

}

}

//cv::putText(src, labels[max_index], cv::Point(50, 50), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

cv::namedWindow("out", cv::WINDOW_FREERATIO);

cv::imshow("out", src);

cv::waitKey(0);

return 0;

}

void load_plate_recog_model() {

InferenceEngine::Core ie;

std::string xml = "D:/projects/models/license-plate-recognition-barrier-0001/FP32/license-plate-recognition-barrier-0001.xml";

std::string bin = "D:/projects/models/license-plate-recognition-barrier-0001/FP32/license-plate-recognition-barrier-0001.bin";

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取网络

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

int cnt = 0;

for (auto item : inputs) { //auto可以自动推断变量类型

if (cnt == 0) {

plate_input_name1 = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8

input_data->setLayout(Layout::NCHW);

}

else if (cnt == 1) {

plate_input_name2 = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

input_data->setPrecision(Precision::FP32); //默认为unsigned char对应U8

}

//input_data->getPreProcess().setColorFormat(ColorFormat::BGR); 默认就是BGR

std::cout << "input name: " << (cnt + 1) << ":" << item.first << std::endl;

cnt++;

}

std::string output_name = "";

for (auto item : outputs) { //auto可以自动推断变量类型

plate_output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

//注意:output_data不要设置结构

std::cout << "output name: " << plate_output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

plate_request = executable_network.CreateInferRequest(); //设置推理请求

}

void fetch_plate_text(cv::Mat &image, cv::Mat &plateROI) {

//图像预处理,使用车牌识别的方法中获取的输入输出信息,用于文本获取

auto input1 = plate_request.GetBlob(plate_input_name1); //获取网络输入图像信息

size_t num_channels = input1->getTensorDesc().getDims()[1]; //size_t 类型表示C中任何对象所能达到的最大长度,它是无符号整数

size_t h = input1->getTensorDesc().getDims()[2];

size_t w = input1->getTensorDesc().getDims()[3];

size_t image_size = h * w;

cv::Mat blob_image;

cv::resize(plateROI, blob_image, cv::Size(94, 24)); //将输入图片大小转换为与网络输入大小一致

//cv::cvtColor(blob_image, blob_image, cv::COLOR_BGR2RGB); //色彩空间转换

// HWC =》NCHW 将输入图像从HWC格式转换为NCHW格式

unsigned char* data = static_cast<unsigned char*>(input1->buffer()); //将图像放到buffer中,放入input中

for (size_t row = 0; row < h; row++) {

for (size_t col = 0; col < w; col++) {

for (size_t ch = 0; ch < num_channels; ch++) {

//将每个通道变成一张图,按照通道顺序

data[image_size * ch + row * w + col] = blob_image.at<cv::Vec3b>(row, col)[ch];

}

}

}

//使用车牌识别的方法中获取的输入输出信息,用于文本获取

auto input2 = plate_request.GetBlob(plate_input_name2);

int max_sequence = input2->getTensorDesc().getDims()[0]; //输出字符长度

float* blob2 = input2->buffer().as<float*>();

blob2[0] = 0.0;

std::fill(blob2 + 1, blob2 + max_sequence, 1.0f); //填充起止范围与填充值

plate_request.Infer(); //执行推理

auto output = plate_request.GetBlob(plate_output_name); //获取推理结果

const float* plate_data = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer()); //获取浮点类型输出值plate_data

std::string result;

for (int i = 0; i < max_sequence; i++) {

if (plate_data[i] == -1) { //end

break;

}

result += items[std::size_t(plate_data[i])]; //类型转换,字符串拼接

}

std::cout << result << std::endl;

cv::putText(image, result.c_str(), cv::Point(50, 50), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

}

效果:

5、行人检测、人脸检测及表情识别

视频行人检测

模型介绍

- pedestrian-detection-adas-0002

- SSD MobileNetv1

- 输入格式:[1 * 3 * 384 * 672]

- 输出格式:[1, 1, N, 7]

代码实现

#include <inference_engine.hpp>

#include <opencv2/opencv.hpp>

#include <fstream> //fstream文件读写操作,iostream为控制台操作

using namespace InferenceEngine;

void infer_process(cv::Mat &frame, InferenceEngine::InferRequest &request, std::string &input_name, std::string &output_name);

int main(int argc, char** argv) {

InferenceEngine::Core ie;

std::string xml = "D:/projects/models/pedestrian-detection-adas-0002/FP32/pedestrian-detection-adas-0002.xml";

std::string bin = "D:/projects/models/pedestrian-detection-adas-0002/FP32/pedestrian-detection-adas-0002.bin";

cv::Mat src = cv::imread("D:/images/pedestrians_test.jpg"); //读取图像

int im_h = src.rows;

int im_w = src.cols;

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取车辆检测网络

//获取网络输入输出信息

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string input_name = "";

for (auto item : inputs) { //auto可以自动推断变量类型

input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

// A->B 表示提取A中的成员B

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8

input_data->setLayout(Layout::NCHW);

//input_data->getPreProcess().setColorFormat(ColorFormat::BGR); 默认就是BGR

std::cout << "input name: " << input_name << std::endl;

}

std::string output_name = "";

for (auto item : outputs) { //auto可以自动推断变量类型

output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

//注意:output_data不要设置结构

std::cout << "output name: " << output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

auto infer_request = executable_network.CreateInferRequest(); //设置推理请求

//创建视频流/加载视频文件

cv::VideoCapture capture("D:/images/video/pedestrians_test.mp4");

cv::Mat frame;

while (true) {

bool ret = capture.read(frame);

if (!ret) { //视频帧为空就跳出循环

break;

}

infer_process(frame, infer_request, input_name, output_name);

cv::imshow("frame", frame);

char c = cv::waitKey(1);

if (c == 27) { //ESC

break;

}

}

//cv::putText(src, labels[max_index], cv::Point(50, 50), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

cv::namedWindow("out", cv::WINDOW_FREERATIO);

cv::imshow("out", src);

cv::waitKey(0); //最后的视频画面静止

return 0;

}

void infer_process(cv::Mat& frame, InferenceEngine::InferRequest& request, std::string& input_name, std::string& output_name) {

//图像预处理

auto input = request.GetBlob(input_name); //获取网络输入图像信息

int im_w = frame.cols;

int im_h = frame.rows;

size_t num_channels = input->getTensorDesc().getDims()[1]; //size_t 类型表示C中任何对象所能达到的最大长度,它是无符号整数

size_t h = input->getTensorDesc().getDims()[2];

size_t w = input->getTensorDesc().getDims()[3];

size_t image_size = h * w;

cv::Mat blob_image;

cv::resize(frame, blob_image, cv::Size(w, h)); //将输入图片大小转换为与网络输入大小一致

//cv::cvtColor(blob_image, blob_image, cv::COLOR_BGR2RGB); //色彩空间转换

// HWC =》NCHW 将输入图像从HWC格式转换为NCHW格式

unsigned char* data = static_cast<unsigned char*>(input->buffer()); //将图像放到buffer中,放入input中

for (size_t row = 0; row < h; row++) {

for (size_t col = 0; col < w; col++) {

for (size_t ch = 0; ch < num_channels; ch++) {

//将每个通道变成一张图,按照通道顺序

data[image_size * ch + row * w + col] = blob_image.at<cv::Vec3b>(row, col)[ch];

}

}

}

request.Infer();

auto output = request.GetBlob(output_name);

//转换输出数据

const float* detection_out = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

//output:[1, 1, N, 7]

//七个参数为:[image_id, label, conf, x_min, y_min, x_max, y_max]

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

std::cout << outputDims[2] << "x" << outputDims[3] << std::endl;

const int max_count = outputDims[2]; //识别出的对象个数

const int object_size = outputDims[3]; //获取对象信息的个数,此处为7个

for (int n = 0; n < max_count; n++) {

float label = detection_out[n * object_size + 1];

float confidence = detection_out[n * object_size + 2];

float xmin = detection_out[n * object_size + 3] * im_w;

float ymin = detection_out[n * object_size + 4] * im_h;

float xmax = detection_out[n * object_size + 5] * im_w;

float ymax = detection_out[n * object_size + 6] * im_h;

if (confidence > 0.9) {

printf("label id: %d \n", static_cast<int>(label));

cv::Rect box;

box.x = static_cast<int>(xmin);

box.y = static_cast<int>(ymin);

box.width = static_cast<int>(xmax - xmin);

box.height = static_cast<int>(ymax - ymin);

if (label == 2) { //将车牌与车辆用不同颜色表示

cv::rectangle(frame, box, cv::Scalar(0, 255, 0), 2, 8);

}

else {

cv::rectangle(frame, box, cv::Scalar(0, 0, 255), 2, 8);

}

//cv::rectangle(src, box, cv::Scalar(0, 0, 255), 2, 8);

//box.tl()返回矩形左上角坐标

cv::putText(frame, cv::format("%.2f", confidence), box.tl(), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

}

}

}

效果:

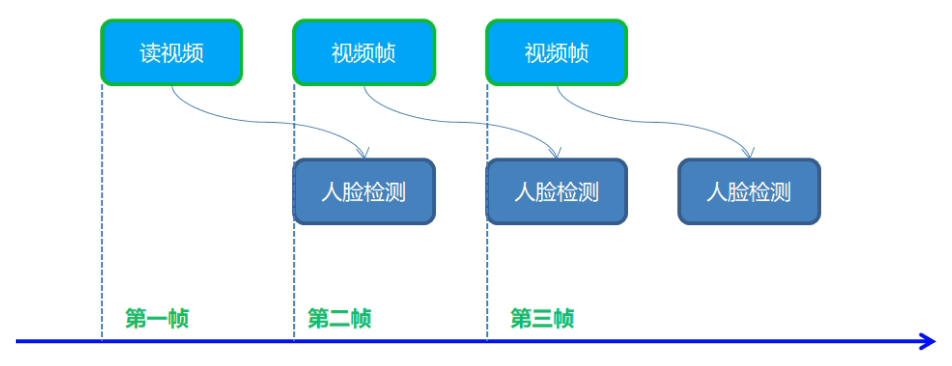

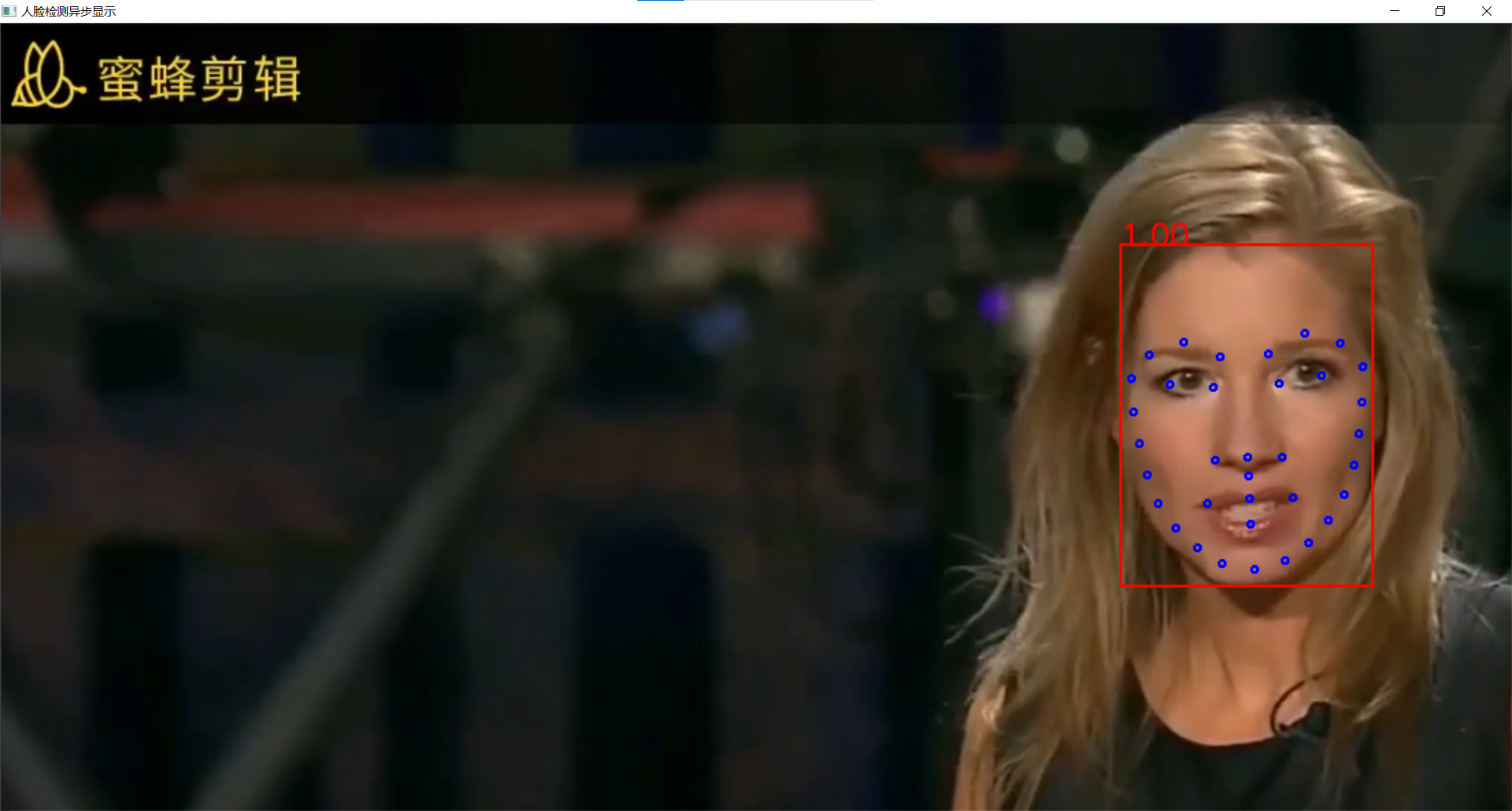

实时人脸检测之异步推理

模型介绍

- 人脸检测:face-detection-0202,SSD-MobileNetv2

- 输入格式:1 * 3 * 384 * 384

- 输出格式:[1, 1, N, 7]

- OpenVINO中人脸检测模型0202~0206

同步与异步执行

代码实现

#include <inference_engine.hpp>

#include <opencv2/opencv.hpp>

#include <fstream> //fstream文件读写操作,iostream为控制台操作

using namespace InferenceEngine;

//图像预处理函数

template <typename T>

void matU8ToBlob(const cv::Mat& orig_image, InferenceEngine::Blob::Ptr& blob, int batchIndex = 0) {

InferenceEngine::SizeVector blobSize = blob->getTensorDesc().getDims();

const size_t width = blobSize[3];

const size_t height = blobSize[2];

const size_t channels = blobSize[1];

InferenceEngine::MemoryBlob::Ptr mblob = InferenceEngine::as<InferenceEngine::MemoryBlob>(blob);

if (!mblob) {

THROW_IE_EXCEPTION << "We expect blob to be inherited from MemoryBlob in matU8ToBlob, "

<< "but by fact we were not able to cast inputBlob to MemoryBlob";

}

// locked memory holder should be alive all time while access to its buffer happens

auto mblobHolder = mblob->wmap();

T* blob_data = mblobHolder.as<T*>();

cv::Mat resized_image(orig_image);

if (static_cast<int>(width) != orig_image.size().width ||

static_cast<int>(height) != orig_image.size().height) {

cv::resize(orig_image, resized_image, cv::Size(width, height));

}

int batchOffset = batchIndex * width * height * channels;

for (size_t c = 0; c < channels; c++) {

for (size_t h = 0; h < height; h++) {

for (size_t w = 0; w < width; w++) {

blob_data[batchOffset + c * width * height + h * width + w] =

resized_image.at<cv::Vec3b>(h, w)[c];

}

}

}

}

void frameToBlob(std::shared_ptr<InferenceEngine::InferRequest>& request, cv::Mat& frame, std::string& input_name) {

//图像预处理,输入数据 ->指针获取成员方法

InferenceEngine::Blob::Ptr input = request->GetBlob(input_name); //获取网络输入图像信息

//该函数template模板类型,需要指定具体类型

matU8ToBlob<uchar>(frame, input); //使用该函数处理输入数据

}

int main(int argc, char** argv) {

InferenceEngine::Core ie;

std::vector<std::string> devices = ie.GetAvailableDevices();

for (std::string name : devices) {

std::cout << "device name: " << name << std::endl;

}

std::string cpuName = ie.GetMetric("CPU", METRIC_KEY(FULL_DEVICE_NAME)).as<std::string>();

std::cout << "cpu name: " << cpuName << std::endl;

std::string xml = "D:/projects/models/face-detection-0202/FP32/face-detection-0202.xml";

std::string bin = "D:/projects/models/face-detection-0202/FP32/face-detection-0202.bin";

//cv::Mat src = cv::imread("D:/images/mmc2.jpg"); //读取图像

//int im_h = src.rows;

//int im_w = src.cols;

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取车辆检测网络

//获取网络输入输出信息并设置

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string input_name = "";

for (auto item : inputs) { //auto可以自动推断变量类型

input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

// A->B 表示提取A中的成员B

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8

input_data->setLayout(Layout::NCHW);

//input_data->getPreProcess().setColorFormat(ColorFormat::BGR); 默认就是BGR

std::cout << "input name: " << input_name << std::endl;

}

std::string output_name = "";

for (auto item : outputs) { //auto可以自动推断变量类型

output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

//注意:output_data不要设置结构

std::cout << "output name: " << output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

//创建指针类型便于后续操作

auto curr_infer_request = executable_network.CreateInferRequestPtr(); //设置推理请求

auto next_infer_request = executable_network.CreateInferRequestPtr(); //设置推理请求

cv::VideoCapture capture("D:/images/video/pedestrians_test.mp4");

cv::Mat curr_frame;

cv::Mat next_frame;

capture.read(curr_frame); //先读取一帧作为当前帧

int im_h = curr_frame.rows;

int im_w = curr_frame.cols;

frameToBlob(curr_infer_request, curr_frame, input_name);

bool first_frame = true; //设置两个bool变量控制线程开启

bool last_frame = false;

//开启两个线程,curr转换显示结果,next预处理图像,预处理后交换给curr

while (true) {

int64 start = cv::getTickCount(); //计时

bool ret = capture.read(next_frame); //读取一帧作为下一帧

if (!ret) {

last_frame = true; //如果下一帧为空,则last_frame为true

}

if (!last_frame) { //如果last_frame为false则预处理下一帧图像

frameToBlob(next_infer_request, next_frame, input_name);

}

if (first_frame) { //如果first_frame为true则开启两个线程,同时修改first_frame为false,避免多次开启线程

curr_infer_request->StartAsync(); //开启线程

next_infer_request->StartAsync();

first_frame = false;

}

else { //如果first_frame与last_frame同为false表示只有下一帧不为空,则开启一个next线程

if (!last_frame) {

next_infer_request->StartAsync();

}

}

//判断当前请求是否预处理完毕

if (InferenceEngine::OK == curr_infer_request->Wait(InferenceEngine::IInferRequest::WaitMode::RESULT_READY)) {

auto output = curr_infer_request->GetBlob(output_name);

//转换输出数据

const float* detection_out = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

//output:[1, 1, N, 7]

//七个参数为:[image_id, label, conf, x_min, y_min, x_max, y_max]

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

std::cout << outputDims[2] << "x" << outputDims[3] << std::endl;

const int max_count = outputDims[2]; //识别出的对象个数

const int object_size = outputDims[3]; //获取对象信息的个数,此处为7个

for (int n = 0; n < max_count; n++) {

float label = detection_out[n * object_size + 1];

float confidence = detection_out[n * object_size + 2];

float xmin = detection_out[n * object_size + 3] * im_w;

float ymin = detection_out[n * object_size + 4] * im_h;

float xmax = detection_out[n * object_size + 5] * im_w;

float ymax = detection_out[n * object_size + 6] * im_h;

if (confidence > 0.5) {

printf("label id: %d \n", static_cast<int>(label));

cv::Rect box;

box.x = static_cast<int>(xmin);

box.y = static_cast<int>(ymin);

box.width = static_cast<int>(xmax - xmin);

box.height = static_cast<int>(ymax - ymin);

cv::rectangle(curr_frame, box, cv::Scalar(0, 0, 255), 2, 8);

//getTickCount()相减得到cpu走过的时钟周期数,getTickFrequency()得到cpu一秒走过的始终周期数

float t = (cv::getTickCount() - start) / static_cast<float>(cv::getTickFrequency());

std::cout << 1.0 / t << std::endl;

//box.tl()返回矩形左上角坐标

cv::putText(curr_frame, cv::format("%.2f", confidence), box.tl(), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

}

}

}

//显示结果

cv::imshow("人脸检测异步显示", curr_frame);

char c = cv::waitKey(1);

if (c == 27) { //ESC

break;

}

if (last_frame) { //如果last_frame为true表示下一帧为空,则跳出循环

break;

}

//异步交换,下一帧复制到当前帧,当前帧请求与下一帧请求交换

next_frame.copyTo(curr_frame);

curr_infer_request.swap(next_infer_request); //指针可以使用swap方法,否则不行

}

cv::waitKey(0);

return 0;

}

效果:

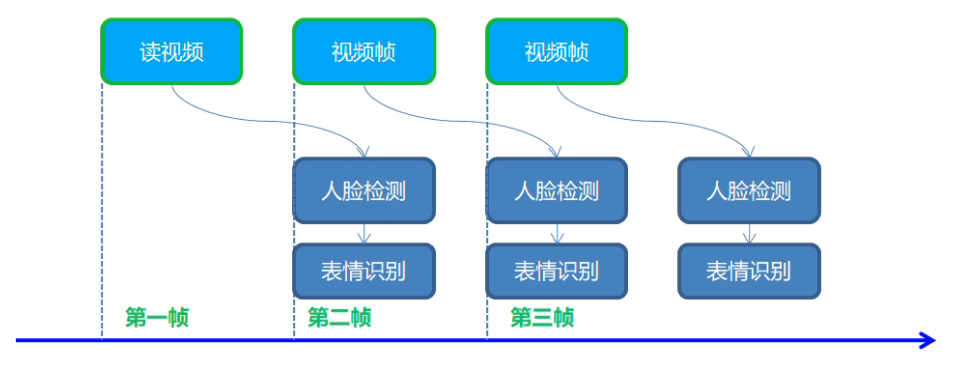

实时人脸表情识别

模型介绍

- 人脸检测:face-detection-0202,SSD-MobileNetv2

- 输入格式:1 * 3 * 384 * 384

- 输出格式:[1, 1, N, 7]

- 表情识别:emotions-recognition-retail-0003

- 1 * 3 * 64 * 64

- [1, 5, 1, 1] - ('neutral', 'happy', 'sad', 'suprise', 'anger')

- 下载模型 emotions-recognition-retail-0003 同前

同步与异步执行

代码实现

#include <inference_engine.hpp>

#include <opencv2/opencv.hpp>

#include <fstream> //fstream文件读写操作,iostream为控制台操作

using namespace InferenceEngine;

static const char *const items[] = {

"neutral","happy","sad","surprise","anger"

};

//图像预处理函数

template <typename T>

void matU8ToBlob(const cv::Mat& orig_image, InferenceEngine::Blob::Ptr& blob, int batchIndex = 0) {

InferenceEngine::SizeVector blobSize = blob->getTensorDesc().getDims();

const size_t width = blobSize[3];

const size_t height = blobSize[2];

const size_t channels = blobSize[1];

InferenceEngine::MemoryBlob::Ptr mblob = InferenceEngine::as<InferenceEngine::MemoryBlob>(blob);

if (!mblob) {

THROW_IE_EXCEPTION << "We expect blob to be inherited from MemoryBlob in matU8ToBlob, "

<< "but by fact we were not able to cast inputBlob to MemoryBlob";

}

// locked memory holder should be alive all time while access to its buffer happens

auto mblobHolder = mblob->wmap();

T* blob_data = mblobHolder.as<T*>();

cv::Mat resized_image(orig_image);

if (static_cast<int>(width) != orig_image.size().width ||

static_cast<int>(height) != orig_image.size().height) {

cv::resize(orig_image, resized_image, cv::Size(width, height));

}

int batchOffset = batchIndex * width * height * channels;

for (size_t c = 0; c < channels; c++) {

for (size_t h = 0; h < height; h++) {

for (size_t w = 0; w < width; w++) {

blob_data[batchOffset + c * width * height + h * width + w] =

resized_image.at<cv::Vec3b>(h, w)[c];

}

}

}

}

void fetch_emotion(cv::Mat& image, InferenceEngine::InferRequest& request, cv::Rect& face_roi, std::string& e_input, std::string& e_output);

void frameToBlob(std::shared_ptr<InferenceEngine::InferRequest>& request, cv::Mat& frame, std::string& input_name) {

//图像预处理,输入数据 ->指针获取成员方法

InferenceEngine::Blob::Ptr input = request->GetBlob(input_name); //获取网络输入图像信息

//该函数template模板类型,需要指定具体类型

matU8ToBlob<uchar>(frame, input); //使用该函数处理输入数据

}

int main(int argc, char** argv) {

InferenceEngine::Core ie;

//load face model

std::string xml = "D:/projects/models/face-detection-0202/FP32/face-detection-0202.xml";

std::string bin = "D:/projects/models/face-detection-0202/FP32/face-detection-0202.bin";

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取车辆检测网络

//获取网络输入输出信息并设置

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string input_name = "";

for (auto item : inputs) { //auto可以自动推断变量类型

input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

// A->B 表示提取A中的成员B

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8

input_data->setLayout(Layout::NCHW);

//input_data->getPreProcess().setColorFormat(ColorFormat::BGR); 默认就是BGR

std::cout << "input name: " << input_name << std::endl;

}

std::string output_name = "";

for (auto item : outputs) { //auto可以自动推断变量类型

output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

//注意:output_data不要设置结构

std::cout << "output name: " << output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

//创建指针类型便于后续操作

auto curr_infer_request = executable_network.CreateInferRequestPtr(); //设置推理请求

auto next_infer_request = executable_network.CreateInferRequestPtr(); //设置推理请求

//load emotion model

std::string em_xml = "D:/projects/models/emotions-recognition-retail-0003/FP32/emotions-recognition-retail-0003.xml";

std::string em_bin = "D:/projects/models/emotions-recognition-retail-0003/FP32/emotions-recognition-retail-0003.bin";

InferenceEngine::CNNNetwork em_network = ie.ReadNetwork(em_xml, em_bin); //读取车辆检测网络

//获取网络输入输出信息并设置

InferenceEngine::InputsDataMap em_inputs = em_network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap em_outputs = em_network.getOutputsInfo(); //DataMap是一个Mat数组

std::string em_input_name = "";

for (auto item : em_inputs) {

em_input_name = item.first;

//循环作用域内的变量可以不重命名,为查看更明确这里重命名

auto em_input_data = item.second;

em_input_data->setPrecision(Precision::U8);

em_input_data->setLayout(Layout::NCHW);

}

std::string em_output_name = "";

for (auto item : em_outputs) { //auto可以自动推断变量类型

em_output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto em_output_data = item.second;

em_output_data->setPrecision(Precision::FP32); //输出还是浮点数

}

auto executable_em_network = ie.LoadNetwork(em_network, "CPU"); //设置运行的设备

//创建指针类型便于后续操作

auto em_request = executable_em_network.CreateInferRequest(); //设置推理请求

cv::VideoCapture capture("D:/images/video/face_detect.mp4");

cv::Mat curr_frame;

cv::Mat next_frame;

capture.read(curr_frame); //先读取一帧作为当前帧

int im_h = curr_frame.rows;

int im_w = curr_frame.cols;

frameToBlob(curr_infer_request, curr_frame, input_name);

bool first_frame = true; //设置两个bool变量控制线程开启

bool last_frame = false;

//开启两个线程,curr转换显示结果,next预处理图像,预处理后交换给curr

while (true) {

int64 start = cv::getTickCount(); //计时

bool ret = capture.read(next_frame); //读取一帧作为下一帧

if (!ret) {

last_frame = true; //如果下一帧为空,则last_frame为true

}

if (!last_frame) { //如果last_frame为false则预处理下一帧图像

frameToBlob(next_infer_request, next_frame, input_name);

}

if (first_frame) { //如果first_frame为true则开启两个线程,同时修改first_frame为false,避免多次开启线程

curr_infer_request->StartAsync(); //开启线程

next_infer_request->StartAsync();

first_frame = false;

}

else { //如果first_frame与last_frame同为false表示只有下一帧不为空,则开启一个next线程

if (!last_frame) {

next_infer_request->StartAsync();

}

}

//判断当前请求是否预处理完毕

if (InferenceEngine::OK == curr_infer_request->Wait(InferenceEngine::IInferRequest::WaitMode::RESULT_READY)) {

auto output = curr_infer_request->GetBlob(output_name);

//转换输出数据

const float* detection_out = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

//output:[1, 1, N, 7]

//七个参数为:[image_id, label, conf, x_min, y_min, x_max, y_max]

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

std::cout << outputDims[2] << "x" << outputDims[3] << std::endl;

const int max_count = outputDims[2]; //识别出的对象个数

const int object_size = outputDims[3]; //获取对象信息的个数,此处为7个

for (int n = 0; n < max_count; n++) {

float label = detection_out[n * object_size + 1];

float confidence = detection_out[n * object_size + 2];

float xmin = detection_out[n * object_size + 3] * im_w;

float ymin = detection_out[n * object_size + 4] * im_h;

float xmax = detection_out[n * object_size + 5] * im_w;

float ymax = detection_out[n * object_size + 6] * im_h;

if (confidence > 0.5) {

printf("label id: %d \n", static_cast<int>(label));

cv::Rect box;

box.x = static_cast<int>(xmin);

box.y = static_cast<int>(ymin);

xmax = xmax > im_w ? im_w : xmax; //通过判断避免越界

ymax = ymax > im_h ? im_h : ymax;

box.width = static_cast<int>(xmax - xmin);

box.height = static_cast<int>(ymax - ymin);

box.x = box.x < 0 ? 0 : box.x; //通过判断避免越界

box.y = box.y < 0 ? 0 : box.y;

box.width = box.x < 0 ? 0 : box.width;

box.height = box.x < 0 ? 0 : box.height;

cv::rectangle(curr_frame, box, cv::Scalar(0, 0, 255), 2, 8);

fetch_emotion(curr_frame, em_request, box, em_input_name, em_output_name); //获取表情

//getTickCount()相减得到cpu走过的时钟周期数,getTickFrequency()得到cpu一秒走过的始终周期数

float fps = static_cast<float>(cv::getTickFrequency()) / (cv::getTickCount() - start);

cv::putText(curr_frame, cv::format("FPS:%.2f", fps), cv::Point(50, 50), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

}

}

}

//显示结果

cv::imshow("人脸检测异步显示", curr_frame);

char c = cv::waitKey(1);

if (c == 27) { //ESC

break;

}

if (last_frame) { //如果last_frame为true表示下一帧为空,则跳出循环

break;

}

//异步交换,下一帧复制到当前帧,当前帧请求与下一帧请求交换

next_frame.copyTo(curr_frame);

curr_infer_request.swap(next_infer_request); //指针可以使用swap方法,否则不行

}

cv::waitKey(0);

return 0;

}

//获取表情

void fetch_emotion(cv::Mat& image, InferenceEngine::InferRequest& request, cv::Rect& face_roi, std::string& e_input, std::string& e_output) {

cv::Mat faceROI = image(face_roi); //获取面部区域

//图像预处理,使用车牌识别的方法中获取的输入输出信息,用于文本获取

auto blob = request.GetBlob(e_input); //获取网络输入图像信息

matU8ToBlob<uchar>(faceROI, blob);

request.Infer(); //执行推理

auto output = request.GetBlob(e_output);

//转换输出数据

const float* probs = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

std::cout << outputDims[0] << "x" << outputDims[1] << std::endl;

float max = probs[0];

int max_index = 0;

for (int i = 1; i < outputDims[1]; i++) {

if (max < probs[i]) { //找到结果probs中的最大值,获取其下标

max = probs[i];

max_index = i;

}

}

std::cout << items[max_index] << std::endl;

cv::putText(image, items[max_index], face_roi.tl(), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

}

效果:

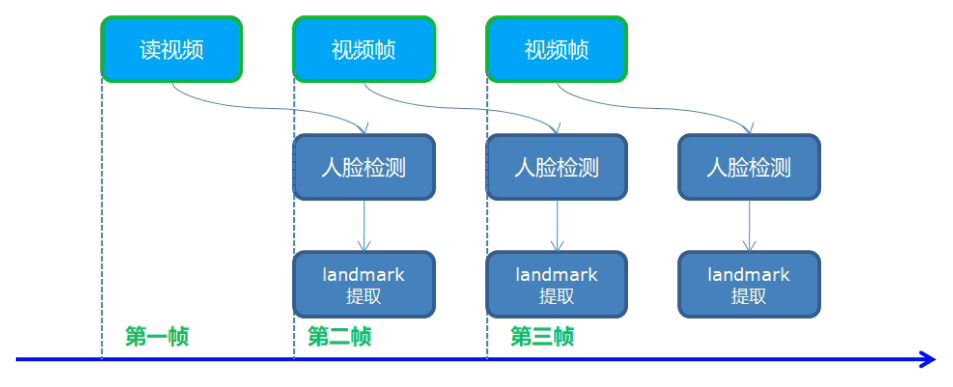

人脸关键点landmark检测

模型介绍

- face-detection-0202 - 人脸检测

- facial-landmarks-35-adas-0002 - landmark提取

- 输入格式:[1 * 3 * 60 * 60]

- 输出格式:[1, 70]

- 输出人脸35个特征点,浮点数坐标

程序流程

代码实现

#include <inference_engine.hpp>

#include <opencv2/opencv.hpp>

#include <fstream> //fstream文件读写操作,iostream为控制台操作

using namespace InferenceEngine;

//图像预处理函数

template <typename T>

void matU8ToBlob(const cv::Mat& orig_image, InferenceEngine::Blob::Ptr& blob, int batchIndex = 0) {

InferenceEngine::SizeVector blobSize = blob->getTensorDesc().getDims();

const size_t width = blobSize[3];

const size_t height = blobSize[2];

const size_t channels = blobSize[1];

InferenceEngine::MemoryBlob::Ptr mblob = InferenceEngine::as<InferenceEngine::MemoryBlob>(blob);

if (!mblob) {

THROW_IE_EXCEPTION << "We expect blob to be inherited from MemoryBlob in matU8ToBlob, "

<< "but by fact we were not able to cast inputBlob to MemoryBlob";

}

// locked memory holder should be alive all time while access to its buffer happens

auto mblobHolder = mblob->wmap();

T* blob_data = mblobHolder.as<T*>();

cv::Mat resized_image(orig_image);

if (static_cast<int>(width) != orig_image.size().width ||

static_cast<int>(height) != orig_image.size().height) {

cv::resize(orig_image, resized_image, cv::Size(width, height));

}

int batchOffset = batchIndex * width * height * channels;

for (size_t c = 0; c < channels; c++) {

for (size_t h = 0; h < height; h++) {

for (size_t w = 0; w < width; w++) {

blob_data[batchOffset + c * width * height + h * width + w] =

resized_image.at<cv::Vec3b>(h, w)[c];

}

}

}

}

void frameToBlob(std::shared_ptr<InferenceEngine::InferRequest>& request, cv::Mat& frame, std::string& input_name) {

//图像预处理,输入数据 ->指针获取成员方法

InferenceEngine::Blob::Ptr input = request->GetBlob(input_name); //获取网络输入图像信息

//该函数template模板类型,需要指定具体类型

matU8ToBlob<uchar>(frame, input); //使用该函数处理输入数据

}

InferenceEngine::InferRequest landmark_request; //提高推理请求作用域

void loadLandmarksRequest(Core& ie, std::string& land_input_name, std::string& land_output_name);

int main(int argc, char** argv) {

InferenceEngine::Core ie;

std::vector<std::string> devices = ie.GetAvailableDevices();

for (std::string name : devices) {

std::cout << "device name: " << name << std::endl;

}

std::string cpuName = ie.GetMetric("CPU", METRIC_KEY(FULL_DEVICE_NAME)).as<std::string>();

std::cout << "cpu name: " << cpuName << std::endl;

std::string xml = "D:/projects/models/face-detection-0202/FP32/face-detection-0202.xml";

std::string bin = "D:/projects/models/face-detection-0202/FP32/face-detection-0202.bin";

//cv::Mat src = cv::imread("D:/images/mmc2.jpg"); //读取图像

//int im_h = src.rows;

//int im_w = src.cols;

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取车辆检测网络

//获取网络输入输出信息并设置

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string input_name = "";

for (auto item : inputs) { //auto可以自动推断变量类型

input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

// A->B 表示提取A中的成员B

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8

input_data->setLayout(Layout::NCHW);

//input_data->getPreProcess().setColorFormat(ColorFormat::BGR); 默认就是BGR

std::cout << "input name: " << input_name << std::endl;

}

std::string output_name = "";

for (auto item : outputs) { //auto可以自动推断变量类型

output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

//注意:output_data不要设置结构

std::cout << "output name: " << output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

//创建指针类型便于后续操作

auto curr_infer_request = executable_network.CreateInferRequestPtr(); //设置推理请求

auto next_infer_request = executable_network.CreateInferRequestPtr(); //设置推理请求

//加载landmark模型

std::string land_input_name = "";

std::string land_output_name = "";

loadLandmarksRequest(ie, land_input_name, land_output_name);

cv::VideoCapture capture("D:/images/video/emotion_detect.mp4");

cv::Mat curr_frame;

cv::Mat next_frame;

capture.read(curr_frame); //先读取一帧作为当前帧

int im_h = curr_frame.rows;

int im_w = curr_frame.cols;

frameToBlob(curr_infer_request, curr_frame, input_name);

bool first_frame = true; //设置两个bool变量控制线程开启

bool last_frame = false;

//开启两个线程,curr转换显示结果,next预处理图像,预处理后交换给curr

while (true) {

int64 start = cv::getTickCount(); //计时

bool ret = capture.read(next_frame); //读取一帧作为下一帧

if (!ret) {

last_frame = true; //如果下一帧为空,则last_frame为true

}

if (!last_frame) { //如果last_frame为false则预处理下一帧图像

frameToBlob(next_infer_request, next_frame, input_name);

}

if (first_frame) { //如果first_frame为true则开启两个线程,同时修改first_frame为false,避免多次开启线程

curr_infer_request->StartAsync(); //开启线程

next_infer_request->StartAsync();

first_frame = false;

}

else { //如果first_frame与last_frame同为false表示只有下一帧不为空,则开启一个next线程

if (!last_frame) {

next_infer_request->StartAsync();

}

}

//判断当前请求是否预处理完毕

if (InferenceEngine::OK == curr_infer_request->Wait(InferenceEngine::IInferRequest::WaitMode::RESULT_READY)) {

auto output = curr_infer_request->GetBlob(output_name);

//转换输出数据

const float* detection_out = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

//output:[1, 1, N, 7]

//七个参数为:[image_id, label, conf, x_min, y_min, x_max, y_max]

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

std::cout << outputDims[2] << "x" << outputDims[3] << std::endl;

const int max_count = outputDims[2]; //识别出的对象个数

const int object_size = outputDims[3]; //获取对象信息的个数,此处为7个

for (int n = 0; n < max_count; n++) {

float label = detection_out[n * object_size + 1];

float confidence = detection_out[n * object_size + 2];

float xmin = detection_out[n * object_size + 3] * im_w;

float ymin = detection_out[n * object_size + 4] * im_h;

float xmax = detection_out[n * object_size + 5] * im_w;

float ymax = detection_out[n * object_size + 6] * im_h;

if (confidence > 0.5) {

printf("label id: %d \n", static_cast<int>(label));

cv::Rect box;

float x1 = std::min(std::max(0.0f, xmin), static_cast<float>(im_w)); //防止目标区域越界

float y1 = std::min(std::max(0.0f, ymin), static_cast<float>(im_h));

float x2 = std::min(std::max(0.0f, xmax), static_cast<float>(im_w));

float y2 = std::min(std::max(0.0f, ymax), static_cast<float>(im_h));

box.x = static_cast<int>(x1);

box.y = static_cast<int>(y1);

box.width = static_cast<int>(x2 - x1);

box.height = static_cast<int>(y2 - y1);

cv::Mat face_roi = curr_frame(box);

auto face_input_blob = landmark_request.GetBlob(land_input_name);

matU8ToBlob<uchar>(face_roi, face_input_blob);

landmark_request.Infer(); //执行推理获取目标区域面部特征点

auto land_output = landmark_request.GetBlob(land_output_name);

const float* blob_out = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(land_output->buffer());

const SizeVector land_dims = land_output->getTensorDesc().getDims();

const int b = land_dims[0];

const int cc = land_dims[1];

//共70个特征参数(x0, y0, x1, y1, ..., x34, y34),所以每次要 +2

for (int i = 0; i < cc; i += 2) {

float x = blob_out[i] * box.width + box.x;

float y = blob_out[i + 1] * box.height + box.y;

cv::circle(curr_frame, cv::Point(x, y), 3, cv::Scalar(255, 0, 0), 2, 8, 0);

}

cv::rectangle(curr_frame, box, cv::Scalar(0, 0, 255), 2, 8);

//getTickCount()相减得到cpu走过的时钟周期数,getTickFrequency()得到cpu一秒走过的始终周期数

float t = (cv::getTickCount() - start) / static_cast<float>(cv::getTickFrequency());

std::cout << 1.0 / t << std::endl;

//box.tl()返回矩形左上角坐标

cv::putText(curr_frame, cv::format("%.2f", confidence), box.tl(), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

}

}

}

//显示结果

cv::imshow("人脸检测异步显示", curr_frame);

char c = cv::waitKey(1);

if (c == 27) { //ESC

break;

}

if (last_frame) { //如果last_frame为true表示下一帧为空,则跳出循环

break;

}

//异步交换,下一帧复制到当前帧,当前帧请求与下一帧请求交换

next_frame.copyTo(curr_frame);

curr_infer_request.swap(next_infer_request); //指针可以使用swap方法,否则不行

}

cv::waitKey(0);

return 0;

}

void loadLandmarksRequest (Core& ie, std::string& land_input_name, std::string& land_output_name) {

//下载模型同前

std::string xml = "D:/projects/models/facial-landmarks-35-adas-0002/FP32/facial-landmarks-35-adas-0002.xml";

std::string bin = "D:/projects/models/facial-landmarks-35-adas-0002/FP32/facial-landmarks-35-adas-0002.bin";

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取网络

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

int cnt = 0;

for (auto item : inputs) { //auto可以自动推断变量类型

land_input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8

input_data->setLayout(Layout::NCHW);

}

for (auto item : outputs) { //auto可以自动推断变量类型

land_output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

landmark_request = executable_network.CreateInferRequest(); //设置推理请求

}

效果:

6、图像语义分割与实例分割

实时道路语义分割

- 识别道路、背景、路边、标志线四个类别

道路分割模型介绍

- 模型:road-segmentation-adas-0001

- 输入格式:[B, C=3, H=512, W=896], BGR

- 输出格式:[B, C=4, H=512, W=896]

- 四个类别:BG, road, curb, mark

程序流程

代码实现

#include <inference_engine.hpp>

#include <opencv2/opencv.hpp>

#include <fstream> //fstream文件读写操作,iostream为控制台操作

using namespace InferenceEngine;

//图像预处理函数

template <typename T>

void matU8ToBlob(const cv::Mat& orig_image, InferenceEngine::Blob::Ptr& blob, int batchIndex = 0) {

InferenceEngine::SizeVector blobSize = blob->getTensorDesc().getDims();

const size_t width = blobSize[3];

const size_t height = blobSize[2];

const size_t channels = blobSize[1];

InferenceEngine::MemoryBlob::Ptr mblob = InferenceEngine::as<InferenceEngine::MemoryBlob>(blob);

if (!mblob) {

THROW_IE_EXCEPTION << "We expect blob to be inherited from MemoryBlob in matU8ToBlob, "

<< "but by fact we were not able to cast inputBlob to MemoryBlob";

}

// locked memory holder should be alive all time while access to its buffer happens

auto mblobHolder = mblob->wmap();

T* blob_data = mblobHolder.as<T*>();

cv::Mat resized_image(orig_image);

if (static_cast<int>(width) != orig_image.size().width ||

static_cast<int>(height) != orig_image.size().height) {

cv::resize(orig_image, resized_image, cv::Size(width, height));

}

int batchOffset = batchIndex * width * height * channels;

for (size_t c = 0; c < channels; c++) {

for (size_t h = 0; h < height; h++) {

for (size_t w = 0; w < width; w++) {

blob_data[batchOffset + c * width * height + h * width + w] =

resized_image.at<cv::Vec3b>(h, w)[c];

}

}

}

}

void frameToBlob(std::shared_ptr<InferenceEngine::InferRequest>& request, cv::Mat& frame, std::string& input_name) {

//图像预处理,输入数据 ->指针获取成员方法

InferenceEngine::Blob::Ptr input = request->GetBlob(input_name); //获取网络输入图像信息

//该函数template模板类型,需要指定具体类型

matU8ToBlob<uchar>(frame, input); //使用该函数处理输入数据

}

int main(int argc, char** argv) {

InferenceEngine::Core ie;

std::string xml = "D:/projects/models/road-segmentation-adas-0001/FP32/road-segmentation-adas-0001.xml";

std::string bin = "D:/projects/models/road-segmentation-adas-0001/FP32/road-segmentation-adas-0001.bin";

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取车辆检测网络

//获取网络输入输出信息并设置

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string input_name = "";

for (auto item : inputs) { //auto可以自动推断变量类型

input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

// A->B 表示提取A中的成员B

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8

input_data->setLayout(Layout::NCHW);

//input_data->getPreProcess().setColorFormat(ColorFormat::BGR); 默认就是BGR

std::cout << "input name: " << input_name << std::endl;

}

std::string output_name = "";

for (auto item : outputs) { //auto可以自动推断变量类型

output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

//注意:output_data不要设置结构

std::cout << "output name: " << output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

//创建指针类型便于后续操作

auto curr_infer_request = executable_network.CreateInferRequestPtr(); //设置推理请求

auto next_infer_request = executable_network.CreateInferRequestPtr(); //设置推理请求

cv::VideoCapture capture("D:/images/video/road_segmentation.mp4");

cv::Mat curr_frame;

cv::Mat next_frame;

capture.read(curr_frame); //先读取一帧作为当前帧

int im_h = curr_frame.rows;

int im_w = curr_frame.cols;

frameToBlob(curr_infer_request, curr_frame, input_name);

bool first_frame = true; //设置两个bool变量控制线程开启

bool last_frame = false;

std::vector<cv::Vec3b> color_tab; //设置分割输出图像中的不同颜色代表不同分类

color_tab.push_back(cv::Vec3b(0, 0, 0)); //背景

color_tab.push_back(cv::Vec3b(255, 0, 0)); //道路

color_tab.push_back(cv::Vec3b(0, 0, 255)); //路边

color_tab.push_back(cv::Vec3b(0, 255, 255)); //路标

//开启两个线程,curr转换显示结果,next预处理图像,预处理后交换给curr

while (true) {

int64 start = cv::getTickCount(); //计时

bool ret = capture.read(next_frame); //读取一帧作为下一帧

if (!ret) {

last_frame = true; //如果下一帧为空,则last_frame为true

}

if (!last_frame) { //如果last_frame为false则预处理下一帧图像

frameToBlob(next_infer_request, next_frame, input_name);

}

if (first_frame) { //如果first_frame为true则开启两个线程,同时修改first_frame为false,避免多次开启线程

curr_infer_request->StartAsync(); //开启线程

next_infer_request->StartAsync();

first_frame = false;

}

else { //如果first_frame与last_frame同为false表示只有下一帧不为空,则开启一个next线程

if (!last_frame) {

next_infer_request->StartAsync();

}

}

//判断当前请求是否预处理完毕

if (InferenceEngine::OK == curr_infer_request->Wait(InferenceEngine::IInferRequest::WaitMode::RESULT_READY)) {

auto output = curr_infer_request->GetBlob(output_name);

//转换输出数据

const float* detection_out = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

//output:[B, C, H, W]

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

//每个像素针对每种分类分别有一个识别结果数值,数值最大的为该像素的分类

//结果矩阵格式为:每种分类各有一个输出图像大小的矩阵,每个像素位置对应其在该分类的可能性

const int out_c = outputDims[1]; //分割识别的类型个数,此处为4

const int out_h = outputDims[2]; //分割网络输出图像的高

const int out_w = outputDims[3]; //分割网络输出图像的宽

cv::Mat result = cv::Mat::zeros(cv::Size(out_w, out_h), CV_8UC3);

int step = out_h * out_w;

for (int row = 0; row < out_h; row++) {

for (int col = 0; col < out_w; col++) {

int max_index = 0; //定义一个变量保存最大分类结果数值的下标

float max_prob = detection_out[row * out_w + col];

for (int cn = 1; cn < out_c; cn++) {

//比较每个像素在四种不同分类矩阵中的可能性,找到最大可能性的分类

float prob = detection_out[cn * step + row * out_w + col];

if (prob > max_prob) {

max_prob = prob;

max_index = cn;

}

}

//在结果矩阵中对应像素位置保存原图中该像素分类对应的颜色

result.at<cv::Vec3b>(row, col) = color_tab[max_index];

}

}

//先初始化一个网络输出结果大小的矩阵保存每个像素点对应的颜色,再将结果矩阵恢复到原图大小,以便最终结果显示

cv::resize(result, result, cv::Size(im_w, im_h));

//在输入图像中对应位置按比例增加结果矩阵中对应的颜色

cv::addWeighted(curr_frame, 0.5, result, 0.5, 0, curr_frame);

}

//getTickCount()相减得到cpu走过的时钟周期数,getTickFrequency()得到cpu一秒走过的始终周期数

float t = (cv::getTickCount() - start) / static_cast<float>(cv::getTickFrequency());

cv::putText(curr_frame, cv::format("FPS: %.2f", 1.0 / t), cv::Point(50, 50), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

//显示结果

cv::imshow("道路分割异步显示", curr_frame);

char c = cv::waitKey(1);

if (c == 27) { //ESC

break;

}

if (last_frame) { //如果last_frame为true表示下一帧为空,则跳出循环

break;

}

//异步交换,下一帧复制到当前帧,当前帧请求与下一帧请求交换

next_frame.copyTo(curr_frame);

curr_infer_request.swap(next_infer_request); //指针可以使用swap方法,否则不行

}

cv::waitKey(0);

return 0;

}

效果:

黄色为路面标志,红色为路边,蓝色为道路,其余部分为背景

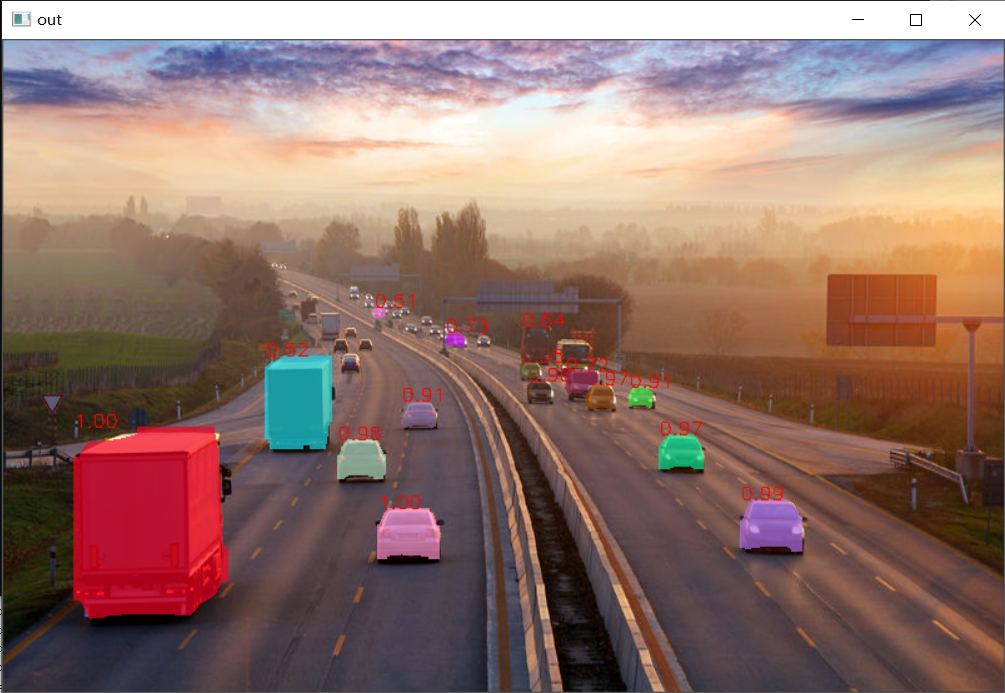

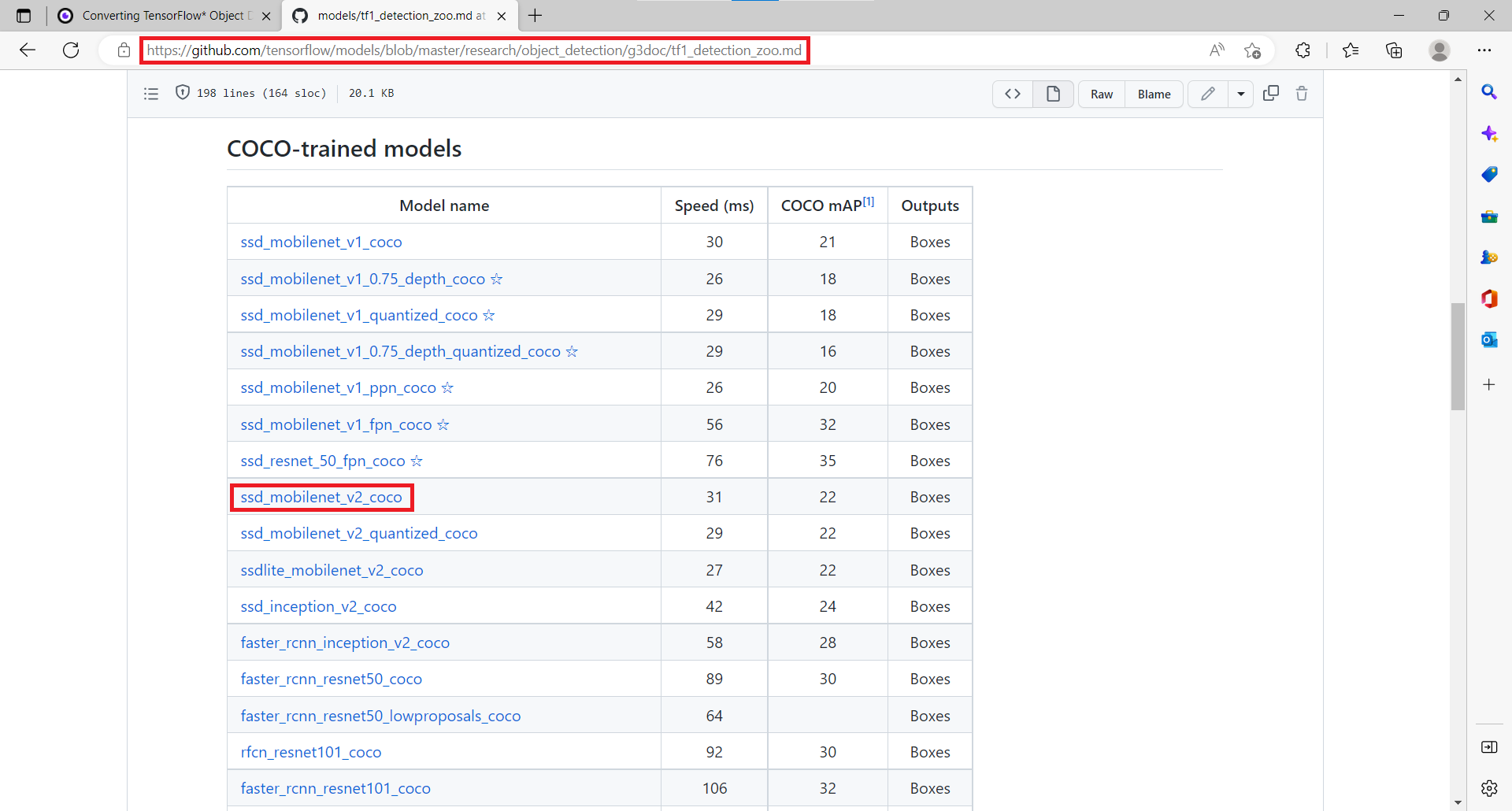

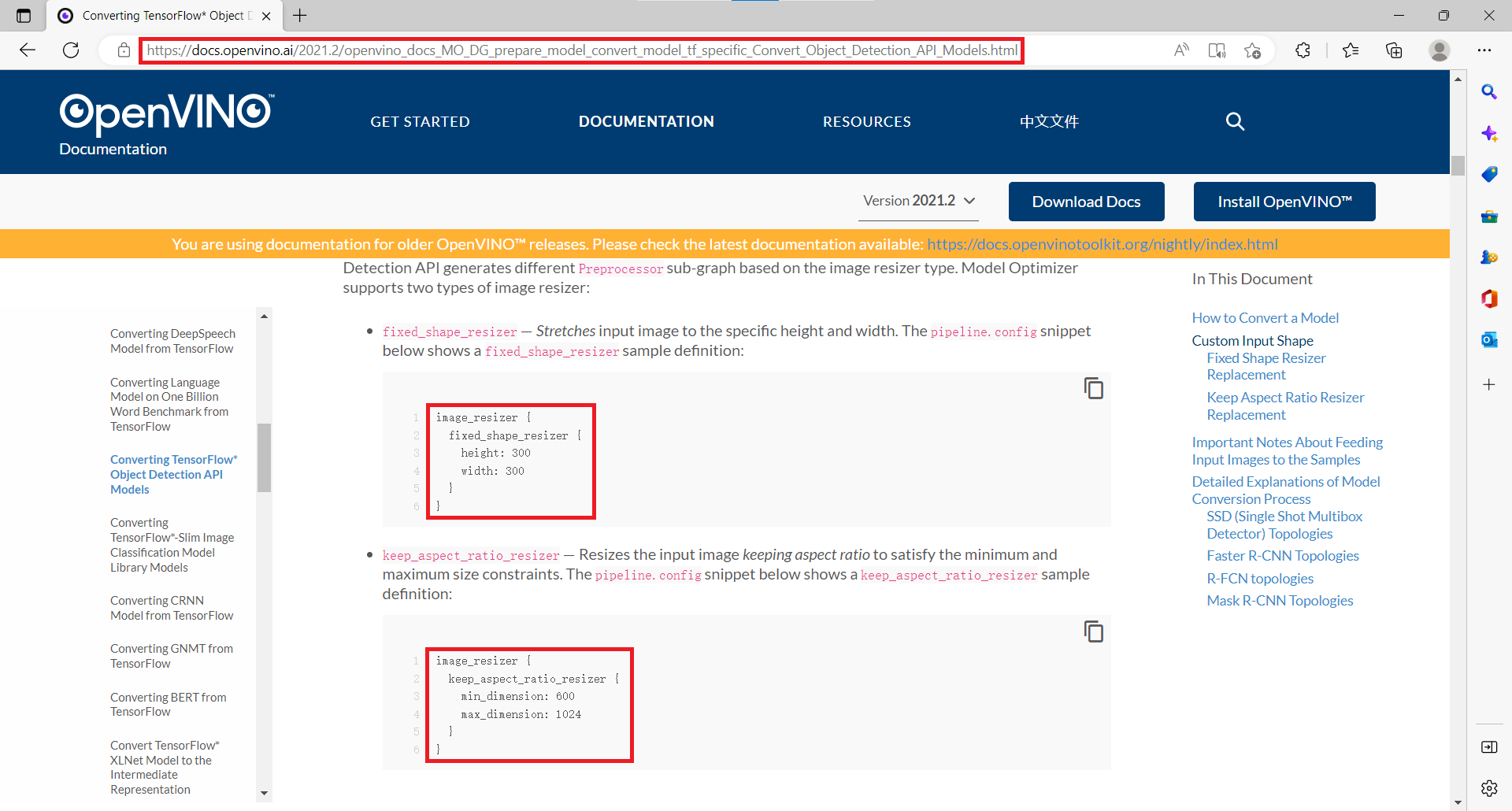

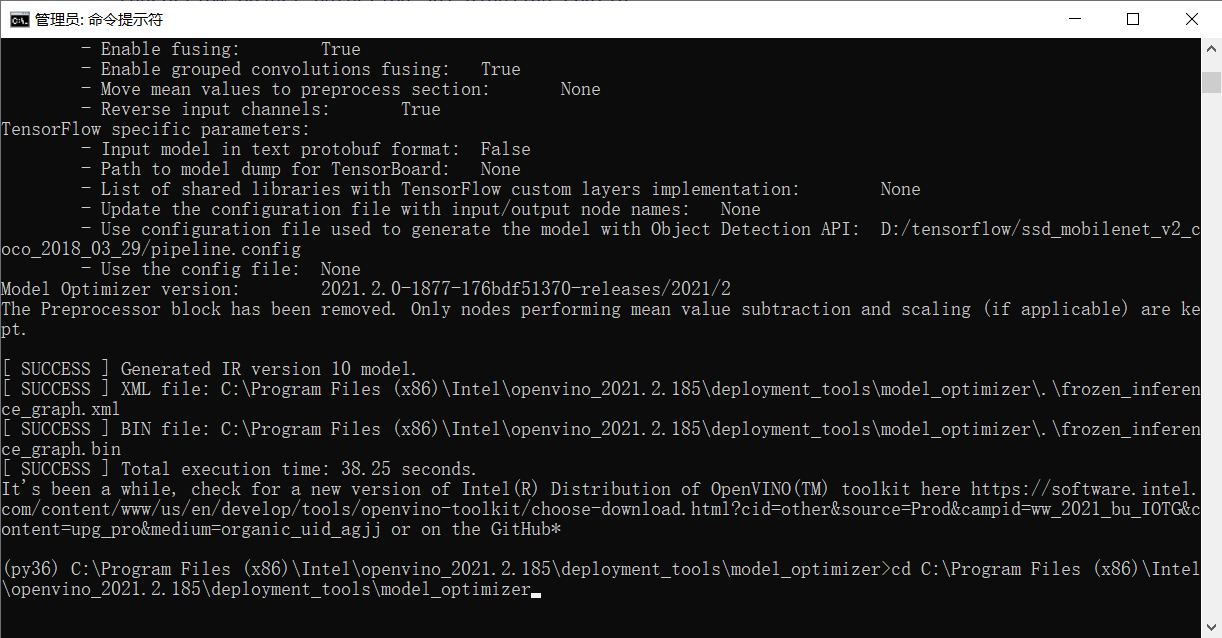

实例分割

实例分割模型介绍(Mask R-CNN)

- instance-segmentation-security-0050

- 有两个输入层:

- im_data: [1 * 3 * 480 * 480],图像数据 1 * C * H * C(num、channels、height、width)

- im_info: [1 * 3],图像信息,宽、高和scale

- 输出格式:

- classes: [100, ],最多100个实例,属于不超过80个分类

- scores: [100, ],每个检测到对象不是背景的概率

- Boxes: [100, 4],每个检测到的对象的位置(左上角及右下角坐标)

- raw_masks: [100, 81, 28, 28],实际是对每个实例都生成一个14*14的mask,对每个实例获取81个类别(80个类别+背景)的概率值,输出81个14 * 14大小的矩阵

- 实际内存中的结果矩阵是 14 * 14 * 81 * 100

代码实现

#include <inference_engine.hpp>

#include <opencv2/opencv.hpp>

#include <fstream> //fstream文件读写操作,iostream为控制台操作

using namespace InferenceEngine;

/*

void read_coco_labels(std::vector<std::string>& labels) {

std::string label_file = "D:/projects/models/coco_labels.txt";

std::ifstream fp(label_file);

if (!fp.is_open())

{

printf("could not open file...\n");

exit(-1);

}

std::string name;

while (!fp.eof())

{

std::getline(fp, name);

if (name.length())

labels.push_back(name);

}

fp.close();

}

*/

//图像预处理函数

template <typename T>

void matU8ToBlob(const cv::Mat& orig_image, InferenceEngine::Blob::Ptr& blob, int batchIndex = 0) {

InferenceEngine::SizeVector blobSize = blob->getTensorDesc().getDims();

const size_t width = blobSize[3];

const size_t height = blobSize[2];

const size_t channels = blobSize[1];

InferenceEngine::MemoryBlob::Ptr mblob = InferenceEngine::as<InferenceEngine::MemoryBlob>(blob);

if (!mblob) {

THROW_IE_EXCEPTION << "We expect blob to be inherited from MemoryBlob in matU8ToBlob, "

<< "but by fact we were not able to cast inputBlob to MemoryBlob";

}

// locked memory holder should be alive all time while access to its buffer happens

auto mblobHolder = mblob->wmap();

T* blob_data = mblobHolder.as<T*>();

cv::Mat resized_image(orig_image);

if (static_cast<int>(width) != orig_image.size().width ||

static_cast<int>(height) != orig_image.size().height) {

cv::resize(orig_image, resized_image, cv::Size(width, height));

}

int batchOffset = batchIndex * width * height * channels;

for (size_t c = 0; c < channels; c++) {

for (size_t h = 0; h < height; h++) {

for (size_t w = 0; w < width; w++) {

blob_data[batchOffset + c * width * height + h * width + w] =

resized_image.at<cv::Vec3b>(h, w)[c];

}

}

}

}

int main(int argc, char** argv) {

InferenceEngine::Core ie;

std::vector<std::string> coco_labels;

//read_coco_labels(coco_labels);

cv::RNG rng(12345);

std::string xml = "D:/projects/models/instance-segmentation-security-0050/FP32/instance-segmentation-security-0050.xml";

std::string bin = "D:/projects/models/instance-segmentation-security-0050/FP32/instance-segmentation-security-0050.bin";

cv::Mat src = cv::imread("D:/images/instance_segmentation.jpg"); //读取图像

int im_h = src.rows;

int im_w = src.cols;

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取车辆检测网络

//获取网络输入输出信息

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string image_input_name = "";

std::string image_info_name = "";

int in_index = 0;

//设置两个网络输入数据的参数

for (auto item : inputs) { //auto可以自动推断变量类型

if (in_index == 0) {

image_input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

// A->B 表示提取A中的成员B

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8,浮点类型则为FP32

input_data->setLayout(Layout::NCHW);

}

else {

image_info_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

// A->B 表示提取A中的成员B

input_data->setPrecision(Precision::FP32); //默认为unsigned char对应U8,浮点类型则为FP32

}

in_index++;

}

for (auto item : outputs) { //auto可以自动推断变量类型

std::string output_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

//注意:output_data不要设置结构

std::cout << "output name: " << output_name << std::endl;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

auto infer_request = executable_network.CreateInferRequest(); //设置推理请求

//图像预处理

auto input = infer_request.GetBlob(image_input_name); //获取网络输入图像信息

//将输入图像转换为网络的输入格式

matU8ToBlob<uchar>(src, input);

//设置网络的第二个输入

auto input2 = infer_request.GetBlob(image_info_name);

auto imInforDim = inputs.find(image_info_name)->second->getTensorDesc().getDims()[1];

InferenceEngine::MemoryBlob::Ptr minput2 = InferenceEngine::as<InferenceEngine::MemoryBlob>(input2);

auto minput2Holder = minput2->wmap();

float* p = minput2Holder.as<InferenceEngine::PrecisionTrait<InferenceEngine::Precision::FP32>::value_type*>();

p[0] = static_cast<float>(inputs[image_input_name]->getTensorDesc().getDims()[2]); //输入图像的高

p[1] = static_cast<float>(inputs[image_input_name]->getTensorDesc().getDims()[3]); //输入图像的宽

p[2] = 1.0f; //scale,前面图像已经转换为480*480,这里保持为1.0就可以

infer_request.Infer();

float w_rate = static_cast<float>(im_w) / 480.0; //用于通过网络输出中的坐标获取原图的坐标

float h_rate = static_cast<float>(im_h) / 480.0;

auto scores = infer_request.GetBlob("scores"); //获取网络输出中的信息

auto boxes = infer_request.GetBlob("boxes");

auto classes = infer_request.GetBlob("classes");

auto raw_masks = infer_request.GetBlob("raw_masks");

//转换输出数据

const float* scores_data = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(scores->buffer()); //强制转换数据类型为浮点型

const float* boxes_data = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(boxes->buffer());

const float* classes_data = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(classes->buffer());

const auto raw_masks_data = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(raw_masks->buffer());

const SizeVector scores_outputDims = scores->getTensorDesc().getDims(); //获取输出维度信息 1*1000

const SizeVector boxes_outputDims = boxes->getTensorDesc().getDims(); //获取输出维度信息 1*1000

const SizeVector raw_masks_outputDims = raw_masks->getTensorDesc().getDims(); //[100, 81, 28, 28]

const int max_count = scores_outputDims[0]; //识别出的对象个数

const int object_size = boxes_outputDims[1]; //获取对象信息的个数,此处为4个

printf("mask NCHW=[%d, %d, %d, %d]\n", raw_masks_outputDims[0], raw_masks_outputDims[1], raw_masks_outputDims[2], raw_masks_outputDims[3]);

int mask_h = raw_masks_outputDims[2];

int mask_w = raw_masks_outputDims[3];

size_t box_stride = mask_h * mask_w * raw_masks_outputDims[1]; //两个mask之间的距离

for (int n = 0; n < max_count; n++) {

float confidence = scores_data[n];

float xmin = boxes_data[n * object_size] * w_rate; //转换为原图中的坐标

float ymin = boxes_data[n * object_size + 1] * h_rate;

float xmax = boxes_data[n * object_size + 2] * w_rate;

float ymax = boxes_data[n * object_size + 3] * h_rate;

if (confidence > 0.5) {

cv::Scalar color(rng.uniform(0, 255), rng.uniform(0, 255), rng.uniform(0, 255));

cv::Rect box;

float x1 = std::min(std::max(0.0f, xmin), static_cast<float>(im_w)); //避免越界

float y1 = std::min(std::max(0.0f, ymin), static_cast<float>(im_h));

float x2 = std::min(std::max(0.0f, xmax), static_cast<float>(im_w));

float y2 = std::min(std::max(0.0f, ymax), static_cast<float>(im_h));

box.x = static_cast<int>(x1);

box.y = static_cast<int>(y1);

box.width = static_cast<int>(x2 - x1);

box.height = static_cast<int>(y2 - y1);

int label = static_cast<int>(classes_data[n]); //第几个实例

//std::cout << "confidence: " << confidence << "class name: " << coco_labels[label] << std::endl;

//解析mask,raw_masks_data表示所有mask起始位置,box_stride*n表示跳过遍历的实例

float* mask_arr = raw_masks_data + box_stride * n + mask_h * mask_w * label; //找到当前实例当前分类mask的起始指针

cv::Mat mask_mat(mask_h, mask_w, CV_32FC1, mask_arr); //从mask_arr指针开始取值构建Mat

cv::Mat roi_img = src(box); //创建src大小的Mat并保留box区域

cv::Mat resized_mask_mat(box.height, box.width, CV_32FC1);

cv::resize(mask_mat, resized_mask_mat, cv::Size(box.width, box.height));

cv::Mat uchar_resized_mask(box.height, box.width, CV_8UC3, color);

roi_img.copyTo(uchar_resized_mask, resized_mask_mat <= 0.5); //resized_mask_mat中像素值<=0.5的像素都不会复制到uchar_resized_mask上

cv::addWeighted(uchar_resized_mask, 0.7, roi_img, 0.3, 0.0f, roi_img);

//cv::rectangle(src, box, cv::Scalar(0, 0, 255), 2, 8);

//box.tl()返回矩形左上角坐标

cv::putText(src, cv::format("%.2f", confidence), box.tl(), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 255), 1, 8);

}

}

//cv::putText(src, labels[max_index], cv::Point(50, 50), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

cv::namedWindow("out", cv::WINDOW_AUTOSIZE);

cv::imshow("out", src);

cv::waitKey(0);

return 0;

}

效果:

7、场景文字检测与识别

场景文字检测

模型介绍

- text-detection-0003

- PixelLink模型库,BGR顺序

- 1个输入层:[B, C, H, W] [1 * 3 * 768 * 1280]

- 2个输出层:

- model/link_logits_/add:[1x16x192x320] - 像素与周围像素的联系

- model/segm_logits/add:[1x2x192x320] - 每个像素所属分类(文本/非文本),只要解析第二个输出就可以获取文本区域

代码实现

#include <inference_engine.hpp>

#include <opencv2/opencv.hpp>

#include <fstream> //fstream文件读写操作,iostream为控制台操作

using namespace InferenceEngine;

//图像预处理函数

template <typename T>

void matU8ToBlob(const cv::Mat& orig_image, InferenceEngine::Blob::Ptr& blob, int batchIndex = 0) {

InferenceEngine::SizeVector blobSize = blob->getTensorDesc().getDims();

const size_t width = blobSize[3];

const size_t height = blobSize[2];

const size_t channels = blobSize[1];

InferenceEngine::MemoryBlob::Ptr mblob = InferenceEngine::as<InferenceEngine::MemoryBlob>(blob);

if (!mblob) {

THROW_IE_EXCEPTION << "We expect blob to be inherited from MemoryBlob in matU8ToBlob, "

<< "but by fact we were not able to cast inputBlob to MemoryBlob";

}

// locked memory holder should be alive all time while access to its buffer happens

auto mblobHolder = mblob->wmap();

T* blob_data = mblobHolder.as<T*>();

cv::Mat resized_image(orig_image);

if (static_cast<int>(width) != orig_image.size().width ||

static_cast<int>(height) != orig_image.size().height) {

cv::resize(orig_image, resized_image, cv::Size(width, height));

}

int batchOffset = batchIndex * width * height * channels;

for (size_t c = 0; c < channels; c++) {

for (size_t h = 0; h < height; h++) {

for (size_t w = 0; w < width; w++) {

blob_data[batchOffset + c * width * height + h * width + w] =

resized_image.at<cv::Vec3b>(h, w)[c];

}

}

}

}

int main(int argc, char** argv) {

InferenceEngine::Core ie;

std::string xml = "D:/projects/models/text-detection-0003/FP32/text-detection-0003.xml";

std::string bin = "D:/projects/models/text-detection-0003/FP32/text-detection-0003.bin";

cv::Mat src = cv::imread("D:/images/text_detection.png"); //读取图像

cv::imshow("input", src);

int im_h = src.rows;

int im_w = src.cols;

InferenceEngine::CNNNetwork network = ie.ReadNetwork(xml, bin); //读取车辆检测网络

//获取网络输入输出信息

InferenceEngine::InputsDataMap inputs = network.getInputsInfo(); //DataMap是一个Mat数组

InferenceEngine::OutputsDataMap outputs = network.getOutputsInfo(); //DataMap是一个Mat数组

std::string image_input_name = "";

//设置两个网络输入数据的参数

for (auto item : inputs) { //auto可以自动推断变量类型

image_input_name = item.first; //第一个参数是name,第二个参数是结构,第二个参数设置精度与结构

auto input_data = item.second;

// A->B 表示提取A中的成员B

input_data->setPrecision(Precision::U8); //默认为unsigned char对应U8,浮点类型则为FP32

input_data->setLayout(Layout::NCHW);

}

std::string output_name1 = "";

std::string output_name2 = "";

int out_index = 0;

for (auto item : outputs) { //auto可以自动推断变量类型

if (out_index == 1) {

output_name2 = item.first;

}

else {

output_name1 = item.first;

}

auto output_data = item.second;

output_data->setPrecision(Precision::FP32); //输出还是浮点数

out_index++;

}

auto executable_network = ie.LoadNetwork(network, "CPU"); //设置运行的设备

auto infer_request = executable_network.CreateInferRequest(); //设置推理请求

//图像预处理

auto input = infer_request.GetBlob(image_input_name); //获取网络输入图像信息

//将输入图像转换为网络的输入格式

matU8ToBlob<uchar>(src, input);

infer_request.Infer();

auto output = infer_request.GetBlob(output_name2); //只解析第二个输出即可

//转换输出数据

const float* detection_out = static_cast<PrecisionTrait<Precision::FP32>::value_type*>(output->buffer());

//output:[B, C, H, W] [1, 2, 192, 320]

const SizeVector outputDims = output->getTensorDesc().getDims(); //获取输出维度信息 1*1000

//每个像素针对每种分类分别有一个识别结果数值,数值最大的为该像素的分类

//结果矩阵格式为:每种分类各有一个输出图像大小的矩阵,每个像素位置对应其在该分类的可能性

const int out_c = outputDims[1]; //分割识别的类型个数,此处为2

const int out_h = outputDims[2]; //分割网络输出图像的高

const int out_w = outputDims[3]; //分割网络输出图像的宽

cv::Mat mask = cv::Mat::zeros(cv::Size(out_w, out_h), CV_32F);

int step = out_h * out_w;

for (int row = 0; row < out_h; row++) {

for (int col = 0; col < out_w; col++) {

float p1 = detection_out[row * out_w + col]; //获取每个像素最大可能的分类类别

float p2 = detection_out[step + row * out_w + col];

if (p1 < p2) {

mask.at<float>(row, col) = p2;

}

}

}

//先初始化一个网络输出结果大小的矩阵保存每个像素点对应的颜色,再将结果矩阵恢复到原图大小,以便最终结果显示

cv::resize(mask, mask, cv::Size(im_w, im_h));

mask = mask * 255;

mask.convertTo(mask, CV_8U); //把mask从浮点数转换为整数,并将范围转换为0-255

cv::threshold(mask, mask, 100, 255, cv::THRESH_BINARY); //将mask按指定范围进行二值化分割

std::vector<std::vector<cv::Point>> contours;

cv::findContours(mask, contours, cv::RETR_EXTERNAL, cv::CHAIN_APPROX_SIMPLE);

for (size_t t = 0; t < contours.size(); t++) { //绘制每个前景区域外轮廓,遍历这些外轮廓并绘制到输入图像中

cv::Rect box = cv::boundingRect(contours[t]);

cv::rectangle(src, box, cv::Scalar(0, 0, 255), 2, 8, 0);

}

//cv::putText(src, labels[max_index], cv::Point(50, 50), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 0, 255), 2, 8);

cv::namedWindow("mask", cv::WINDOW_AUTOSIZE);

cv::imshow("mask", mask);

cv::imshow("场景文字检测", src);

cv::waitKey(0);

return 0;

}

效果:

场景文字识别

模型介绍

- 模型名称:text-recognition-0012

- 输入格式 - BCHW = [1 * 1 * 32 * 120],输入的是单通道灰度图

- 输出层 - WBL = [30, 1, 37], W表示序列长度,每个字符占一行,共30行,每个字符有37种可能,所以占37列