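Scraping Anjuke Property Listings with PyCharm + Scrapy

1. Overview

1.1 Development environment

IDE: PyCharm

Crawler framework: Scrapy

Language: Python 3.6

Third-party libraries to install: Scrapy, pymysql, matplotlib

Database: MySQL 5.5 (listening on 127.0.0.1:3306, user root, password root, database anjuke)

1.2 About the program

This program uses Anjuke's Shenzhen site as its example. Other cities use the same page structure, so porting the crawler to another city is mostly a matter of changing the URLs in start_urls and rules.

It scrapes both new developments (新楼盘) and second-hand homes (二手房). A few minor problems remain, but it is basically usable.

The overall flow is: a CrawlSpider starts crawling from start_urls; whenever a discovered URL matches one of the rules, the corresponding callback is invoked automatically; the callback uses XPath to extract the listing fields into an item; the pipeline writes each item it receives into the database; finally, the report scripts read the data back from the database and draw charts.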
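The rule-matching step can be illustrated without Scrapy. The sketch below applies the two URL patterns used by the new-development spider with plain `re`. The function name `classify` and the sample URLs are made up for illustration; in the real crawl the link extraction and dispatch are done by Scrapy's LinkExtractor and Rule.

```python
import re

# The two patterns used in the rules of the new-development spider.
LISTING_PAGE = re.compile(r"https://sz\.fang\.anjuke\.com/loupan/all/p\d{1,}")
DETAIL_PAGE = re.compile(r"https://sz\.fang\.anjuke\.com/loupan/\d{1,}")


def classify(url):
    """Return which rule (if any) a URL would fall under."""
    if LISTING_PAGE.search(url):
        return "follow"      # listing page: keep crawling, no callback
    if DETAIL_PAGE.search(url):
        return "parse_item"  # detail page: hand the response to the callback
    return "ignore"


# Hypothetical URLs, used only to exercise the patterns.
for url in (
    "https://sz.fang.anjuke.com/loupan/all/p2/",
    "https://sz.fang.anjuke.com/loupan/123456.html",
    "https://sz.fang.anjuke.com/about/",
):
    print(classify(url), url)
```

Porting to another city would then presumably come down to swapping the host part of both patterns and of start_urls.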

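The difference between the two crawls is that new-development pages have no anti-crawler measures, whereas second-hand listings have two. First, the request rate is limited: above a certain rate, a CAPTCHA must be entered before pages can be accessed (I handle this by sending only one request every 5 seconds). Second, the second-hand detail page is rearranged by JavaScript: with JavaScript disabled the information sits under div[4], and with JavaScript enabled it is moved to div[3]. Scrapy does not run JavaScript by default, so the XPath for the JavaScript-disabled page is the one that has to be used.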
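One way to cope with an element that may sit at either position is to try several candidate paths in order. The following is only a sketch of that idea: the standard-library `xml.etree.ElementTree` stands in for Scrapy's selectors, the markup is invented, and `first_text` is a hypothetical helper, not part of the project.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed stand-ins for the same page without and with JavaScript applied.
RAW_PAGE = "<body><div/><div/><div/><div><h3> Sample title </h3></div></body>"
RENDERED_PAGE = "<body><div/><div/><div><h3> Sample title </h3></div></body>"


def first_text(root, candidate_paths):
    """Return the stripped text of the first candidate path that matches, else ''."""
    for path in candidate_paths:
        node = root.find(path)
        if node is not None and node.text and node.text.strip():
            return node.text.strip()
    return ""


# Try the JavaScript-disabled location first, then the JavaScript-enabled one.
TITLE_PATHS = ["div[4]/h3", "div[3]/h3"]

print(first_text(ET.fromstring(RAW_PAGE), TITLE_PATHS))
print(first_text(ET.fromstring(RENDERED_PAGE), TITLE_PATHS))
```

Since Scrapy only ever sees the JavaScript-disabled page, a single path is enough for the crawler itself; the fallback mainly helps when comparing against what a browser shows.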

The project source code is on GitHub: https://github.com/PrettyUp/Anjuke

2. Creating the database schema

SQL to create the tables:

# Host: localhost (Version: 5.5.53)
# Date: 2018-06-06 18:27:08
# Generator: MySQL-Front 5.3 (Build 4.234)

/*!40101 SET NAMES utf8 */;

#
# Structure for table "sz_loupan_info"
#

CREATE TABLE `sz_loupan_info` (
  `loupan_name` varchar(255) DEFAULT NULL,
  `loupan_status` varchar(255) DEFAULT NULL,
  `loupan_price` int(11) DEFAULT NULL,
  `loupan_discount` varchar(255) DEFAULT NULL,
  `loupan_layout` varchar(255) DEFAULT NULL,
  `loupan_location` varchar(255) DEFAULT NULL,
  `loupan_opening` varchar(255) DEFAULT NULL,
  `loupan_transfer` varchar(255) DEFAULT NULL,
  `loupan_type` varchar(255) DEFAULT NULL,
  `loupan_age` varchar(255) DEFAULT NULL,
  `loupan_url` varchar(255) DEFAULT NULL
) ENGINE=MyISAM DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC;

#
# Structure for table "sz_sh_house_info"
#

CREATE TABLE `sz_sh_house_info` (
  `house_title` varchar(255) DEFAULT NULL,
  `house_cost` varchar(255) DEFAULT NULL,
  `house_code` varchar(255) DEFAULT NULL,
  `house_public_time` varchar(255) DEFAULT NULL,
  `house_community` varchar(255) DEFAULT NULL,
  `house_location` varchar(255) DEFAULT NULL,
  `house_build_years` varchar(255) DEFAULT NULL,
  `house_kind` varchar(255) DEFAULT NULL,
  `house_layout` varchar(255) DEFAULT NULL,
  `house_size` varchar(255) DEFAULT NULL,
  `house_face_to` varchar(255) DEFAULT NULL,
  `house_point` varchar(255) DEFAULT NULL,
  `house_price` varchar(255) DEFAULT NULL,
  `house_first_pay` varchar(255) DEFAULT NULL,
  `house_month_pay` varchar(255) DEFAULT NULL,
  `house_decorate_type` varchar(255) DEFAULT NULL,
  `house_agent` varchar(255) DEFAULT NULL,
  `house_agency` varchar(255) DEFAULT NULL,
  `house_url` varchar(255) DEFAULT NULL
) ENGINE=MyISAM DEFAULT CHARSET=utf8;

3. Implementation

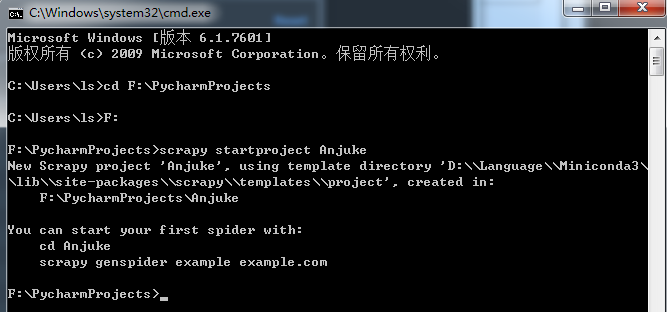

3.1 Create the project with Scrapy

Open cmd, change to the PyCharm projects directory, and run:

scrapy startproject Anjuke

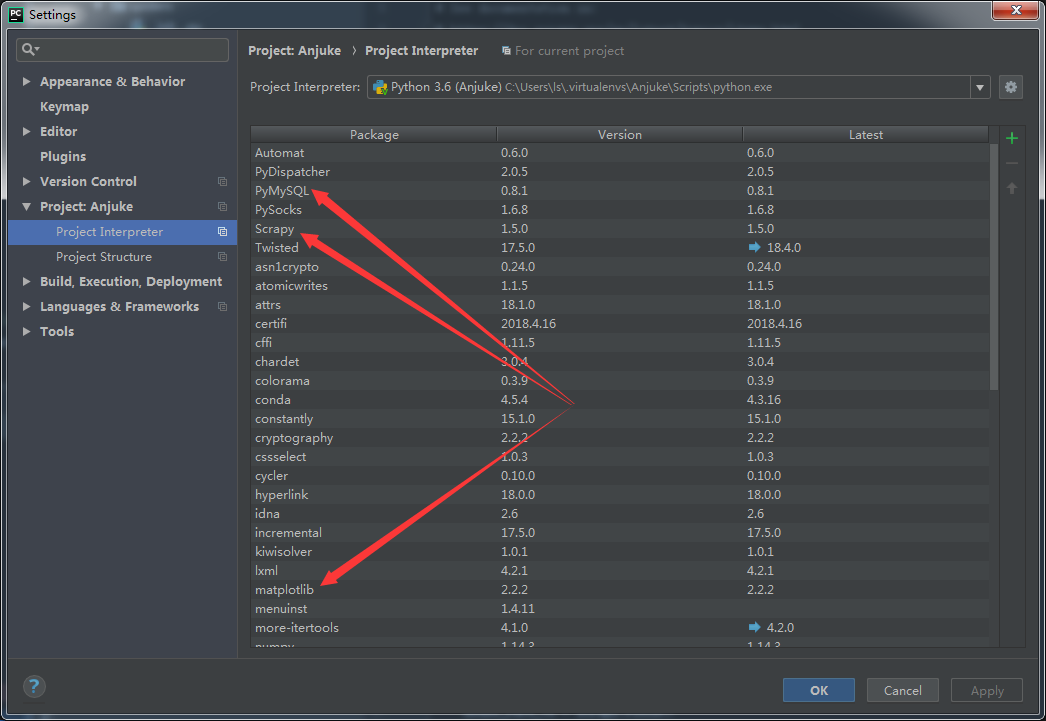

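3.2 Open the project in PyCharm and install the required third-party libraries

Install scrapy, pymysql and matplotlib directly from PyCharm (their dependencies are installed automatically). Also note that once Scrapy is installed, you should copy its cmdline.py into the project's root directory.

3.3 Create the files the program needs

anjuke_sz_spider.py: spider for new developments

anjuke_sz_sh_spider.py: spider for second-hand homes

anjuke_sz_report.py: chart/report script for new developments

anjuke_sz_sh_report.py: chart/report script for second-hand homes

The project directory structure is as follows: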

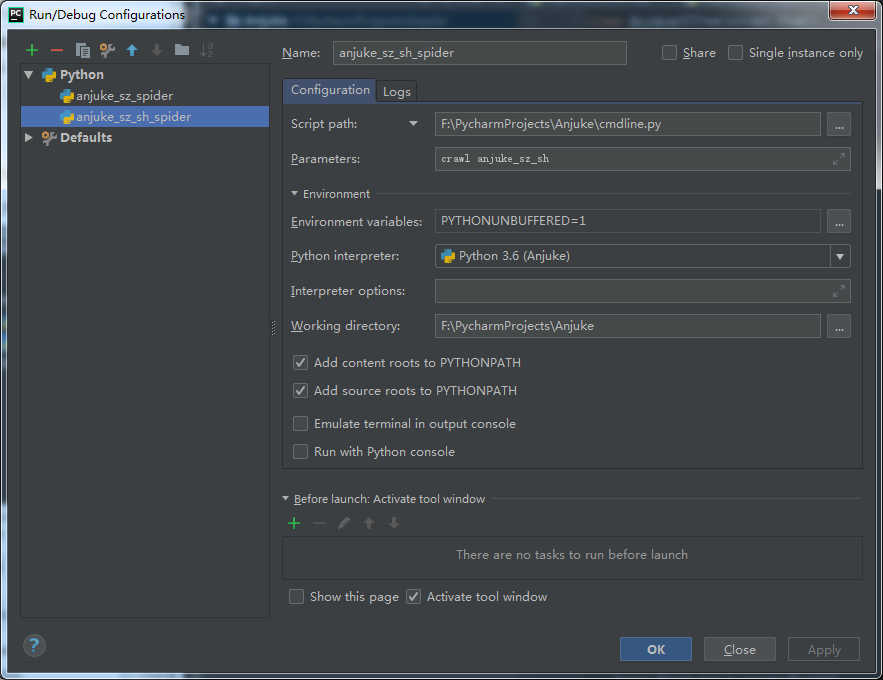

3.4 Set up the Scrapy debug/run configuration

Configure the run parameters for anjuke_sz_spider.py and anjuke_sz_sh_spider.py.
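As an alternative to copying cmdline.py, a small launcher script can start a spider from inside PyCharm. This is a sketch, not the author's setup: `crawl_argv` and `run_spider` are hypothetical names, and it assumes the script is run from the project root (next to scrapy.cfg). `scrapy.cmdline.execute` is the function behind the `scrapy` command.

```python
def crawl_argv(spider_name):
    """Build the argument list for `scrapy crawl <spider_name>`."""
    return ["scrapy", "crawl", spider_name]


def run_spider(spider_name):
    """Start a crawl in the current process (run from the project root)."""
    # Imported lazily so this module can be loaded without Scrapy installed.
    from scrapy.cmdline import execute
    execute(crawl_argv(spider_name))
```

Calling `run_spider("anjuke_sz")` or `run_spider("anjuke_sz_sh")` at the bottom of such a file and running it under the PyCharm debugger should then allow breakpoints inside the spider callbacks.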

3.5 File implementations

settings.py

BOT_NAME = 'Anjuke'

SPIDER_MODULES = ['Anjuke.spiders']
NEWSPIDER_MODULE = 'Anjuke.spiders'

USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:59.0) Gecko/20100101 Firefox/59.0'

ROBOTSTXT_OBEY = False

DOWNLOAD_DELAY = 5

COOKIES_ENABLED = False

ITEM_PIPELINES = {
    'Anjuke.pipelines.AnjukeSZPipeline': 300,
    'Anjuke.pipelines.AnjukeSZSHPipeline': 300,
}

items.py

import scrapy


class AnjukeSZItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    loupan_id = scrapy.Field()
    loupan_name = scrapy.Field()
    loupan_status = scrapy.Field()
    loupan_price = scrapy.Field()
    loupan_discount = scrapy.Field()
    loupan_layout = scrapy.Field()
    loupan_location = scrapy.Field()
    loupan_opening = scrapy.Field()
    loupan_transfer = scrapy.Field()
    loupan_type = scrapy.Field()
    loupan_age = scrapy.Field()
    loupan_url = scrapy.Field()


class AnjukeSZSHItem(scrapy.Item):
    house_title = scrapy.Field()
    house_cost = scrapy.Field()
    house_code = scrapy.Field()
    house_public_time = scrapy.Field()
    house_community = scrapy.Field()
    house_location = scrapy.Field()
    house_build_years = scrapy.Field()
    house_kind = scrapy.Field()
    house_layout = scrapy.Field()
    house_size = scrapy.Field()
    house_face_to = scrapy.Field()
    house_point = scrapy.Field()
    house_price = scrapy.Field()
    house_first_pay = scrapy.Field()
    house_month_pay = scrapy.Field()
    house_decorate_type = scrapy.Field()
    house_agent = scrapy.Field()
    house_agency = scrapy.Field()
    house_url = scrapy.Field()

pipelines.py

import pymysql


class AnjukeSZPipeline(object):
    def __init__(self):
        # Keyword arguments: newer PyMySQL releases no longer accept positional ones
        self.db = pymysql.connect(host="localhost", user="root", password="root", database="anjuke", charset="utf8")
        self.cursor = self.db.cursor()

    def process_item(self, item, spider):
        # Both pipelines are enabled in settings.py, so pass items from the other spider through
        if spider.name != 'anjuke_sz':
            return item
        # Placeholders let the driver quote the values, so a quote in scraped text cannot break the SQL
        sql = ("insert into sz_loupan_info(loupan_name,loupan_status,loupan_price,loupan_discount,loupan_layout,"
               "loupan_location,loupan_opening,loupan_transfer,loupan_type,loupan_age,loupan_url) "
               "values(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)")
        # A missing or non-numeric price is stored as NULL instead of raising in int()
        price = int(item['loupan_price']) if item['loupan_price'].isdigit() else None
        params = (item['loupan_name'], item['loupan_status'], price, item['loupan_discount'], item['loupan_layout'],
                  item['loupan_location'], item['loupan_opening'], item['loupan_transfer'], item['loupan_type'],
                  item['loupan_age'], item['loupan_url'])
        self.cursor.execute(sql, params)
        self.db.commit()
        return item

    def __del__(self):
        self.db.close()


class AnjukeSZSHPipeline(object):
    def __init__(self):
        self.db = pymysql.connect(host="localhost", user="root", password="root", database="anjuke", charset="utf8")
        self.cursor = self.db.cursor()

    def process_item(self, item, spider):
        if spider.name != 'anjuke_sz_sh':
            return item
        sql = ("insert into sz_sh_house_info(house_title,house_cost,house_code,house_public_time,house_community,"
               "house_location,house_build_years,house_kind,house_layout,house_size,house_face_to,house_point,"
               "house_price,house_first_pay,house_month_pay,house_decorate_type,house_agent,house_agency,house_url) "
               "values(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)")
        params = (item['house_title'], item['house_cost'], item['house_code'], item['house_public_time'],
                  item['house_community'], item['house_location'], item['house_build_years'], item['house_kind'],
                  item['house_layout'], item['house_size'], item['house_face_to'], item['house_point'],
                  item['house_price'], item['house_first_pay'], item['house_month_pay'],
                  item['house_decorate_type'], item['house_agent'], item['house_agency'], item['house_url'])
        self.cursor.execute(sql, params)
        self.db.commit()
        return item

    def __del__(self):
        self.db.close()
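Scraped text can contain quote characters, so values are best handed to the database driver as bound parameters instead of being pasted into the SQL string. The sketch below shows the idea with the standard-library `sqlite3` module standing in for MySQL; the three-column table, the sample item and the helper `save_loupan` are invented for the example. Note that sqlite3 uses `?` as its placeholder, whereas pymysql's `cursor.execute(sql, args)` uses `%s`.

```python
import sqlite3

# In-memory SQLite stands in for MySQL here; only the binding idea carries over.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE sz_loupan_info (loupan_name TEXT, loupan_price INTEGER, loupan_url TEXT)")


def save_loupan(conn, item):
    """Insert one item, letting the driver quote every value."""
    conn.execute(
        "INSERT INTO sz_loupan_info (loupan_name, loupan_price, loupan_url) VALUES (?, ?, ?)",
        (item["loupan_name"], item["loupan_price"], item["loupan_url"]),
    )
    conn.commit()


# Hypothetical item whose name contains a single quote.
save_loupan(db, {"loupan_name": "Bay'View", "loupan_price": 52000,
                 "loupan_url": "https://example.com/loupan/1"})
rows = db.execute("SELECT loupan_name, loupan_price FROM sz_loupan_info").fetchall()
print(rows)
```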

anjuke_sz_spider.py

import unicodedata

import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import Rule
from Anjuke.items import AnjukeSZItem


class AnjukeSpider(scrapy.spiders.CrawlSpider):
    name = 'anjuke_sz'
    # The spider attribute is allowed_domains (allow_domains is a LinkExtractor argument)
    allowed_domains = ["anjuke.com"]
    start_urls = [
        'https://sz.fang.anjuke.com/loupan/all/p1/',
    ]
    rules = [
        # Listing pages: followed to discover more links, no callback
        Rule(LinkExtractor(allow=(r"https://sz\.fang\.anjuke\.com/loupan/all/p\d{1,}"))),
        # Detail pages: parsed by parse_item, not followed further
        Rule(LinkExtractor(allow=(r"https://sz\.fang\.anjuke\.com/loupan/\d{1,}")), follow=False, callback='parse_item')
    ]

    def is_number(self, s):
        try:
            float(s)
            return True
        except ValueError:
            pass
        try:
            unicodedata.numeric(s)
            return True
        except (TypeError, ValueError):
            pass
        return False

    # Sold-out ('售罄') developments: the page layout varies, so fall back through several XPaths
    def get_sellout_item(self, response):
        loupan_nodes = {}
        loupan_nodes['loupan_name_nodes'] = response.xpath('//*[@id="j-triggerlayer"]/text()')
        loupan_nodes['loupan_status_nodes'] = response.xpath('/html/body/div[1]/div[3]/div/div[2]/i/text()')
        loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/span/text()')
        if loupan_nodes['loupan_price_nodes']:
            if self.is_number(loupan_nodes['loupan_price_nodes'].extract()[0].strip()):
                loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/text()')
                loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/div/text()')
                loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[4]/span/text()')
                loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/p[1]/span/text()')
                loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/p[2]/span/text()')
                loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[1]/span/text()')
                loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
            else:
                loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[1]/p/em/text()')
                loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/text()')
                loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/div/text()')
                loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/span/text()')
                loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/p[1]/span/text()')
                loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/p[2]/span/text()')
                loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/div[1]/ul[1]/li/span/text()')
                loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
        else:
            loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[1]/p/em/text()')
            if loupan_nodes['loupan_price_nodes']:
                loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/text()')
                loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/div/text()')
                loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/span/text()')
                loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/p[1]/span/text()')
                loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/p[2]/span/text()')
                loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/div[1]/ul[1]/li/span/text()')
                loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
            else:
                loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[1]/p/em/text()')
                if loupan_nodes['loupan_price_nodes']:
                    loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/text()')
                    loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[2]/div/text()')
                    loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[3]/span/text()')
                    loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[1]/span/text()')
                    loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[2]/span/text()')
                    loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/div[1]/ul[1]/li/span/text()')
                    loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
                else:
                    loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[2]/span/text()')
                    loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/text()')
                    loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[3]/div/text()')
                    loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[4]/span/text()')
                    loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[1]/span/text()')
                    loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[2]/span/text()')
                    loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[3]/div[1]/ul[1]/li/span/text()')
                    loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
        if not loupan_nodes['loupan_location_nodes']:
            loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/span/text()')
        loupan_item = self.struct_loupan_item(loupan_nodes)
        return loupan_item

    # Not-yet-on-sale ('待售') developments
    def get_sellwait_item(self, response):
        loupan_nodes = {}
        loupan_nodes['loupan_name_nodes'] = response.xpath('//*[@id="j-triggerlayer"]/text()')
        loupan_nodes['loupan_status_nodes'] = response.xpath('/html/body/div[1]/div[3]/div/div[2]/i/text()')
        loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/span/text()')
        if loupan_nodes['loupan_price_nodes']:
            loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/text()')
            loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/div/text()')
            loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[4]/span/text()')
            loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[1]/text()')
            loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[2]/text()')
            loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[1]/span/text()')
            loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
        else:
            loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[2]/span/text()')
            if loupan_nodes['loupan_price_nodes']:
                loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/text()')
                loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[3]/div/text()')
                loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[4]/span/text()')
                loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[5]/p[1]/text()')
                loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[5]/p[2]/text()')
                loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[1]/span/text()')
                loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
            else:
                loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[1]/p/em/text()')
                loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/text()')
                loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/div/text()')
                loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/span/text()')
                loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[1]/text()')
                loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[2]/text()')
                loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li/span/text()')
                loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
        if not loupan_nodes['loupan_location_nodes']:
            loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/span/text()')
        loupan_item = self.struct_loupan_item(loupan_nodes)
        return loupan_item

    # All other statuses
    def get_common_item(self, response):
        loupan_nodes = {}
        loupan_nodes['loupan_name_nodes'] = response.xpath('//*[@id="j-triggerlayer"]/text()')
        loupan_nodes['loupan_status_nodes'] = response.xpath('/html/body/div[1]/div[3]/div/div[2]/i/text()')
        loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[1]/p/em/text()')
        if loupan_nodes['loupan_price_nodes']:
            loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/text()')
            loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/div/text()')
            loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[4]/span/text()')
            loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[1]/text()')
            loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[2]/text()')
            loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[1]/span/text()')
            loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
        else:
            loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[1]/p/em/text()')
            if loupan_nodes['loupan_price_nodes']:
                loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[2]/a[1]/text()')
                loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[3]/div/text()')
                loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[2]/dl/dd[4]/span/text()')
                loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[5]/p[1]/text()')
                loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[5]/p[2]/text()')
                loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[1]/span/text()')
                loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
            else:
                loupan_nodes['loupan_price_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[2]/span/text()')
                loupan_nodes['loupan_discount_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/text()')
                loupan_nodes['loupan_layout_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[4]/div/text()')
                loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[5]/span/text()')
                loupan_nodes['loupan_opening_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[1]/span/text()')
                loupan_nodes['loupan_transfer_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/p[2]/span/text()')
                loupan_nodes['loupan_type_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[1]/span/text()')
                loupan_nodes['loupan_age_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[4]/div/ul[1]/li[2]/span/text()')
        if not loupan_nodes['loupan_location_nodes']:
            loupan_nodes['loupan_location_nodes'] = response.xpath('/html/body/div[2]/div[1]/div[2]/div[1]/dl/dd[3]/span/text()')
        loupan_item = self.struct_loupan_item(loupan_nodes)
        return loupan_item

    # Turn the selected nodes into an item; a missing field becomes an empty string
    def struct_loupan_item(self, loupan_nodes):
        loupan_item = AnjukeSZItem()
        if loupan_nodes['loupan_name_nodes']:
            loupan_item['loupan_name'] = loupan_nodes['loupan_name_nodes'].extract()[0].strip()
        if loupan_nodes['loupan_status_nodes']:
            loupan_item['loupan_status'] = loupan_nodes['loupan_status_nodes'].extract()[0].strip()
        else:
            loupan_item['loupan_status'] = ''
        if loupan_nodes['loupan_price_nodes']:
            loupan_item['loupan_price'] = loupan_nodes['loupan_price_nodes'].extract()[0].strip()
        else:
            loupan_item['loupan_price'] = ''
        if loupan_nodes['loupan_discount_nodes']:
            loupan_item['loupan_discount'] = loupan_nodes['loupan_discount_nodes'].extract()[0].strip()
        else:
            loupan_item['loupan_discount'] = ''
        if loupan_nodes['loupan_layout_nodes']:
            loupan_item['loupan_layout'] = loupan_nodes['loupan_layout_nodes'].extract()[0].strip()
        else:
            loupan_item['loupan_layout'] = ''
        if loupan_nodes['loupan_location_nodes']:
            loupan_item['loupan_location'] = loupan_nodes['loupan_location_nodes'].extract()[0].strip()
        else:
            loupan_item['loupan_location'] = ''
        if loupan_nodes['loupan_opening_nodes']:
            loupan_item['loupan_opening'] = loupan_nodes['loupan_opening_nodes'].extract()[0].strip()
        else:
            loupan_item['loupan_opening'] = ''
        if loupan_nodes['loupan_transfer_nodes']:
            loupan_item['loupan_transfer'] = loupan_nodes['loupan_transfer_nodes'].extract()[0].strip()
        else:
            loupan_item['loupan_transfer'] = ''
        if loupan_nodes['loupan_type_nodes']:
            loupan_item['loupan_type'] = loupan_nodes['loupan_type_nodes'].extract()[0].strip()
        else:
            loupan_item['loupan_type'] = ''
        if loupan_nodes['loupan_age_nodes']:
            loupan_item['loupan_age'] = loupan_nodes['loupan_age_nodes'].extract()[0].strip()
        else:
            loupan_item['loupan_age'] = ''
        return loupan_item

    def parse_item(self, response):
        loupan_status_nodes = response.xpath('/html/body/div[1]/div[3]/div/div[2]/i/text()')
        # extract_first avoids an IndexError when the status element is missing
        loupan_status = loupan_status_nodes.extract_first(default='').strip()
        if loupan_status == '售罄':
            loupan_item = self.get_sellout_item(response)
        elif loupan_status == '待售':
            loupan_item = self.get_sellwait_item(response)
        else:
            loupan_item = self.get_common_item(response)
        loupan_item['loupan_url'] = response.url
        return loupan_item
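The item-building code repeats one pattern for every field: take the first extracted string and strip it, or fall back to an empty string. A small helper can express that once. This is only a sketch; `first_stripped` is a hypothetical name, and it works on plain lists of strings such as those returned by `.extract()`.

```python
def first_stripped(values, default=""):
    """Return the first value with surrounding whitespace removed, or `default` if empty."""
    return values[0].strip() if values else default


# Hypothetical extraction results: a list of strings, as returned by .extract().
print(repr(first_stripped(["  在售 \n"])))
print(repr(first_stripped([])))
```

Scrapy selector lists also offer `extract_first(default=...)`, which covers the first-or-default part but leaves the stripping to the caller.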

anjuke_sz_sh_spider.py

import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import Rule
from Anjuke.items import AnjukeSZSHItem


class AnjukeSpider(scrapy.spiders.CrawlSpider):
    name = 'anjuke_sz_sh'
    # The spider attribute is allowed_domains (allow_domains is a LinkExtractor argument)
    allowed_domains = ["anjuke.com"]
    start_urls = [
        'https://shenzhen.anjuke.com/sale/p1',
    ]
    rules = [
        # Listing pages: followed to discover more links, no callback
        Rule(LinkExtractor(allow=(r"https://shenzhen\.anjuke\.com/sale/p\d{1,}"))),
        # Detail pages: parsed by parse_item, not followed further
        Rule(LinkExtractor(allow=(r"https://shenzhen\.anjuke\.com/prop/view/A\d{1,}")), follow=False, callback='parse_item')
    ]

    # The XPaths below target the JavaScript-disabled layout (information under div[4])
    def get_house_item(self, response):
        house_nodes = {}
        house_nodes["house_title_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[3]/h3/text()')
        house_nodes["house_cost_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[1]/span[1]/em/text()')
        house_nodes["house_code_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/h4/span[2]/text()')
        house_nodes["house_community_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[1]/dl[1]/dd/a/text()')
        house_nodes["house_location_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[1]/dl[2]/dd/p')
        house_nodes["house_build_years_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[1]/dl[3]/dd/text()')
        house_nodes["house_kind_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[1]/dl[4]/dd/text()')
        house_nodes["house_layout_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[2]/dl[1]/dd/text()')
        house_nodes["house_size_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[2]/dl[2]/dd/text()')
        house_nodes["house_face_to_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[2]/dl[3]/dd/text()')
        house_nodes["house_point_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[2]/dl[4]/dd/text()')
        house_nodes["house_price_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[3]/dl[1]/dd/text()')
        house_nodes["house_first_pay_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[3]/dl[2]/dd/text()')
        house_nodes["house_month_pay_nodes"] = response.xpath('//*[@id="reference_monthpay"]/text()')
        house_nodes["house_decorate_type_nodes"] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[1]/div[3]/div/div[1]/div/div[3]/dl[4]/dd/text()')
        house_nodes['house_agent_nodes'] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[2]/div/div[1]/div[1]/div/div/text()')
        house_nodes['house_agency_nodes'] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[2]/div/div[1]/div[5]/div/p[1]/a/text()')
        if not house_nodes['house_agency_nodes']:
            house_nodes['house_agency_nodes'] = response.xpath('/html/body/div[1]/div[2]/div[4]/div[2]/div/div[1]/div[5]/div/p/text()')
        house_item = self.struct_house_item(house_nodes)
        return house_item

    # Turn the selected nodes into an item; a missing field becomes an empty string
    def struct_house_item(self, house_nodes):
        house_item = AnjukeSZSHItem()
        if house_nodes['house_title_nodes']:
            house_item['house_title'] = house_nodes['house_title_nodes'].extract()[0].strip()
        else:
            house_item['house_title'] = ''
        if house_nodes['house_cost_nodes']:
            house_item['house_cost'] = house_nodes['house_cost_nodes'].extract()[0].strip()
        else:
            house_item['house_cost'] = ''
        if house_nodes['house_code_nodes']:
            # The same text node carries both the listing code and the publish time
            temp_dict = house_nodes['house_code_nodes'].extract()[0].strip().split(',')
            house_item['house_code'] = temp_dict[0]
            house_item['house_public_time'] = temp_dict[1]
        else:
            house_item['house_code'] = ''
            house_item['house_public_time'] = ''
        if house_nodes['house_community_nodes']:
            house_item['house_community'] = house_nodes['house_community_nodes'].extract()[0].strip()
        else:
            house_item['house_community'] = ''
        if house_nodes['house_location_nodes']:
            house_item['house_location'] = house_nodes['house_location_nodes'].xpath('string(.)').extract()[0].strip().replace('\t','').replace('\n','')
        else:
            house_item['house_location'] = ''
        if house_nodes['house_build_years_nodes']:
            house_item['house_build_years'] = house_nodes['house_build_years_nodes'].extract()[0].strip()
        else:
            house_item['house_build_years'] = ''
        if house_nodes['house_kind_nodes']:
            house_item['house_kind'] = house_nodes['house_kind_nodes'].extract()[0].strip()
        else:
            house_item['house_kind'] = ''
        if house_nodes['house_layout_nodes']:
            house_item['house_layout'] = house_nodes['house_layout_nodes'].extract()[0].strip().replace('\t','').replace('\n','')
        else:
            house_item['house_layout'] = ''
        if house_nodes['house_size_nodes']:
            house_item['house_size'] = house_nodes['house_size_nodes'].extract()[0].strip()
        else:
            house_item['house_size'] = ''
        if house_nodes['house_face_to_nodes']:
            house_item['house_face_to'] = house_nodes['house_face_to_nodes'].extract()[0].strip()
        else:
            house_item['house_face_to'] = ''
        if house_nodes['house_point_nodes']:
            house_item['house_point'] = house_nodes['house_point_nodes'].extract()[0].strip()
        else:
            house_item['house_point'] = ''
        if house_nodes['house_price_nodes']:
            house_item['house_price'] = house_nodes['house_price_nodes'].extract()[0].strip()
        else:
            house_item['house_price'] = ''
        if house_nodes['house_first_pay_nodes']:
            house_item['house_first_pay'] = house_nodes['house_first_pay_nodes'].extract()[0].strip()
        else:
            house_item['house_first_pay'] = ''
        if house_nodes['house_month_pay_nodes']:
            house_item['house_month_pay'] = house_nodes['house_month_pay_nodes'].extract()[0].strip()
        else:
            house_item['house_month_pay'] = ''
        if house_nodes['house_decorate_type_nodes']:
            house_item['house_decorate_type'] = house_nodes['house_decorate_type_nodes'].extract()[0].strip()
        else:
            house_item['house_decorate_type'] = ''
        if house_nodes['house_agent_nodes']:
            house_item['house_agent'] = house_nodes['house_agent_nodes'].extract()[0].strip()
        else:
            house_item['house_agent'] = ''
        if house_nodes['house_agency_nodes']:
            house_item['house_agency'] = house_nodes['house_agency_nodes'].extract()[0].strip()
        else:
            house_item['house_agency'] = ''
        return house_item

    def parse_item(self, response):
        house_item = self.get_house_item(response)
        house_item['house_url'] = response.url
        return house_item
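The listing code and the publish time come from one text node that is split on a comma, and indexing the second part fails if no comma is present. Below is a more tolerant sketch of that split. The helper name `split_code_and_time`, the acceptance of a full-width comma, and the sample strings are all assumptions made for illustration; the real page text may be worded differently.

```python
import re


def split_code_and_time(text):
    """Split 'code<comma>publish time' into two stripped parts; the second may be ''."""
    # Accept either an ASCII or a full-width comma as the separator.
    parts = re.split(r"[,,]", text.strip(), maxsplit=1)
    code = parts[0].strip()
    public_time = parts[1].strip() if len(parts) > 1 else ""
    return code, public_time


# Hypothetical header strings, used only to exercise the helper.
print(split_code_and_time("A123456, 2018-06-06"))
print(split_code_and_time("A123456"))
```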

anjuke_sz_report.py

import matplotlib.pyplot as plt
import pymysql
import numpy as np


class AjukeSZReport():
    def __init__(self):
        # Keyword arguments: newer PyMySQL releases no longer accept positional ones
        self.db = pymysql.connect(host='127.0.0.1', user='root', password='root', database='anjuke', charset='utf8')
        self.cursor = self.db.cursor()

    def export_result_piture(self):
        district = ['南山','宝安','福田','罗湖','光明','龙华','龙岗','坪山','盐田','大鹏','深圳','惠州','东莞']
        x = np.arange(len(district))
        house_price_avg = []
        for district_temp in district:
            # '深圳' stands for the whole city: every listing not marked as surrounding area ('周边')
            if district_temp == '深圳':
                sql = "select avg(loupan_price) from sz_loupan_info where loupan_location not like %s and loupan_price > 5000"
                pattern = '%周边%'
            else:
                sql = "select avg(loupan_price) from sz_loupan_info where loupan_location like %s and loupan_price > 5000"
                pattern = '%' + district_temp + '%'
            self.cursor.execute(sql, (pattern,))
            results = self.cursor.fetchall()
            # avg() is NULL when no row matches; plot such districts as 0
            house_price_avg.append(float(results[0][0] or 0))
        bars = plt.bar(x, house_price_avg)
        plt.xticks(x, district)
        # SimHei lets the Chinese district names render
        plt.rcParams['font.sans-serif'] = ['SimHei']
        # Label each bar with its average price
        for bar, avg in zip(bars, house_price_avg):
            plt.text((bar.get_x() + bar.get_width() / 2), bar.get_height(), '%d' % avg, ha='center', va='bottom')
        plt.show()

    def __del__(self):
        self.db.close()


if __name__ == '__main__':
    anjukeSZReport = AjukeSZReport()
    anjukeSZReport.export_result_piture()
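The per-district query is easy to try out in isolation. In the sketch below, the standard-library `sqlite3` module stands in for MySQL, the rows are invented, and `district_average` is a hypothetical helper. It also shows one detail worth handling: `AVG` yields NULL (None in Python) when no row matches, which a `'%d'` format cannot print.

```python
import sqlite3

# In-memory SQLite stands in for the MySQL table; the rows are invented.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE sz_loupan_info (loupan_location TEXT, loupan_price INTEGER)")
db.executemany(
    "INSERT INTO sz_loupan_info VALUES (?, ?)",
    [("[ 南山 ] 示例路1号", 60000), ("[ 南山 ] 示例路2号", 80000), ("[ 宝安 ] 示例路3号", 3000)],
)


def district_average(conn, district):
    """Average price of listings above 5000 in a district, or 0.0 when none match."""
    row = conn.execute(
        "SELECT AVG(loupan_price) FROM sz_loupan_info "
        "WHERE loupan_location LIKE ? AND loupan_price > 5000",
        ("%" + district + "%",),
    ).fetchone()
    return float(row[0]) if row[0] is not None else 0.0


print(district_average(db, "南山"))
print(district_average(db, "宝安"))
```

With pymysql the placeholder would be `%s` rather than `?`; the pattern is still passed as a separate argument.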

anjuke_sz_sh_report.py

import matplotlib.pyplot as plt
import pymysql
import numpy as np


class AjukeSZSHReport():
    def __init__(self):
        # Keyword arguments: newer PyMySQL releases no longer accept positional ones
        self.db = pymysql.connect(host='127.0.0.1', user='root', password='root', database='anjuke', charset='utf8')
        self.cursor = self.db.cursor()

    def export_result_piture(self):
        district = ['南山','宝安','福田','罗湖','光明','龙华','龙岗','坪山','盐田','大鹏','深圳','惠州','东莞']
        x = np.arange(len(district))
        house_price_avg = []
        for district_temp in district:
            # '深圳' stands for the whole city: every listing not marked as surrounding area ('周边')
            if district_temp == '深圳':
                sql = "select house_price from sz_sh_house_info where house_location not like %s"
                pattern = '%周边%'
            else:
                sql = "select house_price from sz_sh_house_info where house_location like %s"
                pattern = '%' + district_temp + '%'
            self.cursor.execute(sql, (pattern,))
            results = self.cursor.fetchall()
            house_price_sum = 0
            house_num = 0
            for result in results:
                # house_price is stored as text; the number is the part before the first space
                house_price_dict = result[0].split(' ')
                house_price_sum += int(house_price_dict[0])
                house_num += 1
            # Avoid dividing by zero for districts with no listings
            house_price_avg.append(house_price_sum / house_num if house_num else 0)
        bars = plt.bar(x, house_price_avg)
        plt.xticks(x, district)
        # SimHei lets the Chinese district names render
        plt.rcParams['font.sans-serif'] = ['SimHei']
        # Label each bar with its average price
        for bar, avg in zip(bars, house_price_avg):
            plt.text((bar.get_x() + bar.get_width() / 2), bar.get_height(), '%d' % avg, ha='center', va='bottom')
        plt.show()

    def __del__(self):
        self.db.close()


if __name__ == '__main__':
    anjukeSZReport = AjukeSZSHReport()
    anjukeSZReport.export_result_piture()
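Because house_price is stored as text, the averaging step has to parse each value and cope with rows that are empty or malformed. A minimal sketch of a tolerant version follows; `average_unit_price` is a hypothetical helper and the "number, space, unit" format of the sample strings is an assumption about what the spider stored.

```python
def average_unit_price(rows):
    """Average the leading integer of each price string; 0.0 if none is usable."""
    prices = []
    for (raw,) in rows:
        head = raw.split(" ")[0] if raw else ""
        if head.isdecimal():
            prices.append(int(head))
    return sum(prices) / len(prices) if prices else 0.0


# Hypothetical rows shaped like cursor.fetchall() output for a one-column SELECT.
sample = [("50000 元/m²",), ("70000 元/m²",), ("",), (None,)]
print(average_unit_price(sample))
print(average_unit_price([]))
```

Returning 0.0 for an empty district keeps the bar chart drawable, at the cost of showing a zero-height bar where there is simply no data.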

The other files are unmodified; leave them exactly as Scrapy generated them.

三、项目结果演示

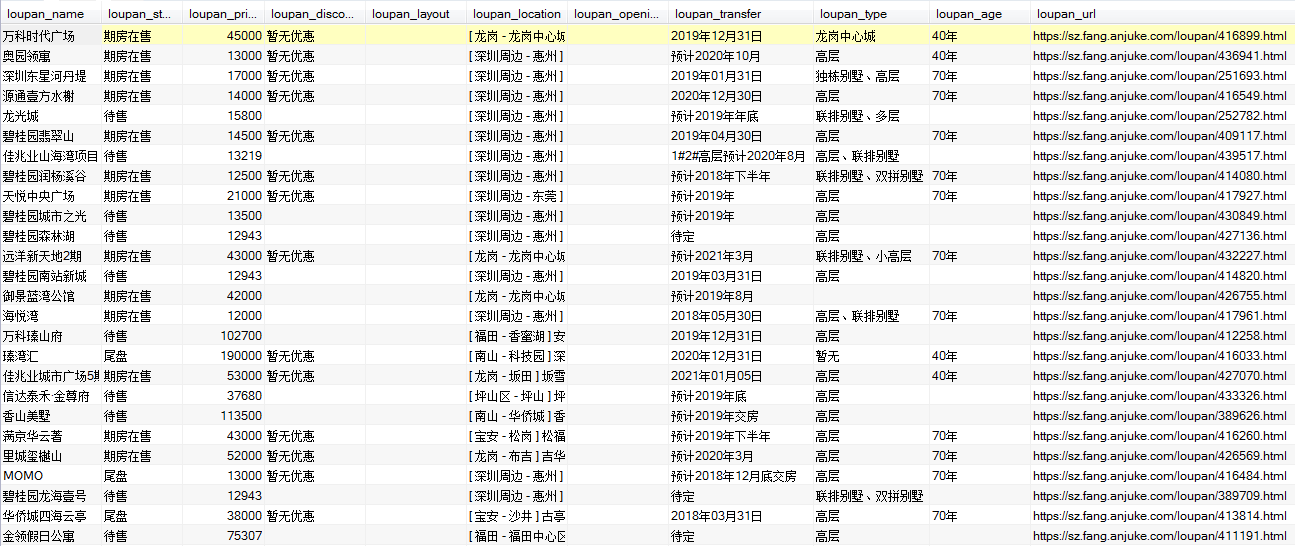

anjuke_sz_spider.py收集部份楼盘数据截图

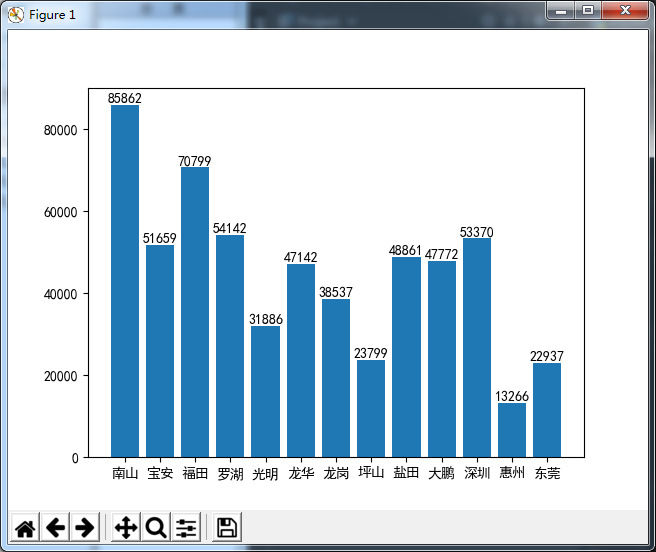

anjuke_sz_report.py生成图表截图:

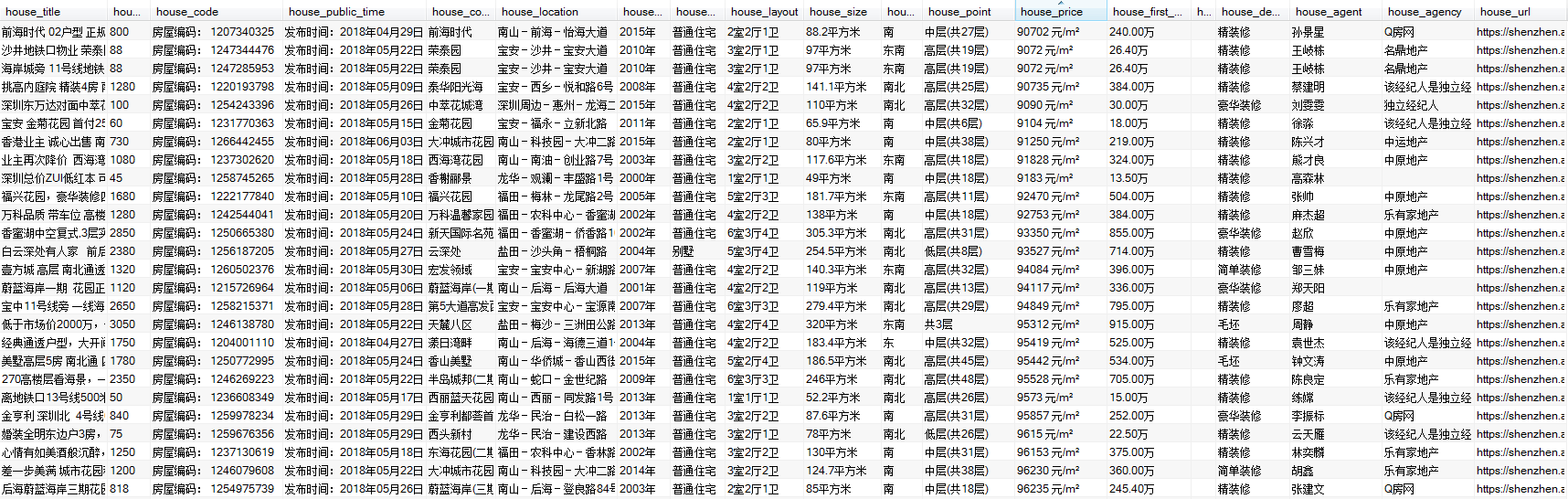

anjuke_sz_sh_spider.py收集部份二手房数据截图:

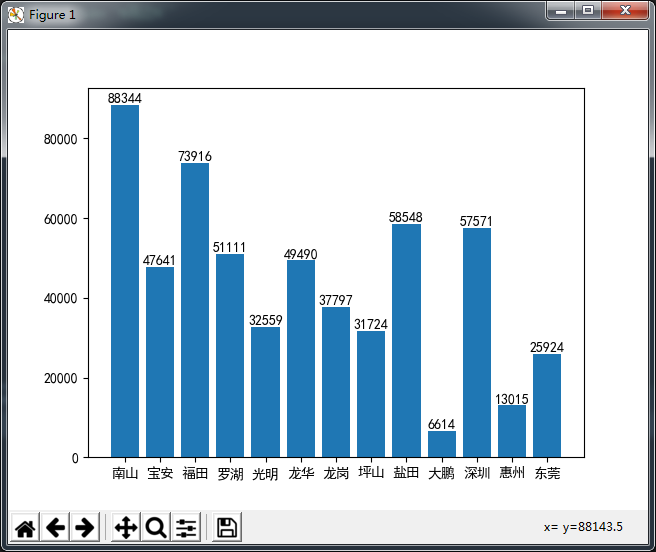

anjuke_sz_sh_report.py生成报表截图:
