How to fix "FATAL Fatal error during KafkaServer startup. Prepare to shutdown (kafka.server.KafkaServer) java.io.IOException: Permission denied" when starting Kafka (illustrated walkthrough)

First, a note: my Kafka server.properties is the one described in my earlier post

Kafka server.properties configuration reference examples (illustrated, multiple approaches)

Problem details

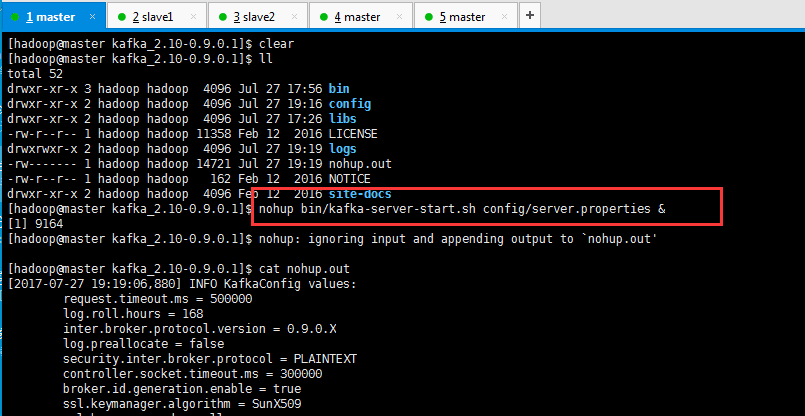

When I then started Kafka, I got the following:

```
[hadoop@master kafka_2.-0.9.0.1]$ nohup bin/kafka-server-start.sh config/server.properties &
[]
[hadoop@master kafka_2.-0.9.0.1]$ nohup: ignoring input and appending output to `nohup.out'
[hadoop@master kafka_2.-0.9.0.1]$ cat nohup.out
[-- ::,] INFO KafkaConfig values:
request.timeout.ms =
log.roll.hours =
inter.broker.protocol.version = 0.9..X
log.preallocate = false
security.inter.broker.protocol = PLAINTEXT
controller.socket.timeout.ms =
broker.id.generation.enable = true
ssl.keymanager.algorithm = SunX509
ssl.key.password = null
log.cleaner.enable = false
ssl.provider = null
num.recovery.threads.per.data.dir =
background.threads =
unclean.leader.election.enable = true
sasl.kerberos.kinit.cmd = /usr/bin/kinit
replica.lag.time.max.ms =
ssl.endpoint.identification.algorithm = null
auto.create.topics.enable = false
zookeeper.sync.time.ms =
ssl.client.auth = none
ssl.keystore.password = null
log.cleaner.io.buffer.load.factor = 0.9
offsets.topic.compression.codec =
log.retention.hours =
log.dirs = /data/kafka-log/log/
ssl.protocol = TLS
log.index.size.max.bytes =
sasl.kerberos.min.time.before.relogin =
log.retention.minutes = null
connections.max.idle.ms =
ssl.trustmanager.algorithm = PKIX
offsets.retention.minutes =
max.connections.per.ip =
replica.fetch.wait.max.ms =
metrics.num.samples =
port =
offsets.retention.check.interval.ms =
log.cleaner.dedupe.buffer.size =
log.segment.bytes =
group.min.session.timeout.ms =
producer.purgatory.purge.interval.requests =
min.insync.replicas =
ssl.truststore.password = null
log.flush.scheduler.interval.ms =
socket.receive.buffer.bytes =
leader.imbalance.per.broker.percentage =
num.io.threads =
zookeeper.connect = master:,slave1:,slave2:
queued.max.requests =
offsets.topic.replication.factor =
replica.socket.timeout.ms =
offsets.topic.segment.bytes =
replica.high.watermark.checkpoint.interval.ms =
broker.id =
ssl.keystore.location = null
listeners = PLAINTEXT://:9092
log.flush.interval.messages =
principal.builder.class = class org.apache.kafka.common.security.auth.DefaultPrincipalBuilder
log.retention.ms = null
offsets.commit.required.acks = -
sasl.kerberos.principal.to.local.rules = [DEFAULT]
group.max.session.timeout.ms =
num.replica.fetchers =
advertised.listeners = null
replica.socket.receive.buffer.bytes =
delete.topic.enable = false
log.index.interval.bytes =
metric.reporters = []
compression.type = producer
log.cleanup.policy = delete
controlled.shutdown.max.retries =
log.cleaner.threads =
quota.window.size.seconds =
zookeeper.connection.timeout.ms =
offsets.load.buffer.size =
zookeeper.session.timeout.ms =
ssl.cipher.suites = null
authorizer.class.name =
sasl.kerberos.ticket.renew.jitter = 0.05
sasl.kerberos.service.name = null
controlled.shutdown.enable = true
offsets.topic.num.partitions =
quota.window.num =
message.max.bytes =
log.cleaner.backoff.ms =
log.roll.jitter.hours =
log.retention.check.interval.ms =
replica.fetch.max.bytes =
log.cleaner.delete.retention.ms =
fetch.purgatory.purge.interval.requests =
log.cleaner.min.cleanable.ratio = 0.5
offsets.commit.timeout.ms =
zookeeper.set.acl = false
log.retention.bytes =
offset.metadata.max.bytes =
leader.imbalance.check.interval.seconds =
quota.consumer.default =
log.roll.jitter.ms = null
reserved.broker.max.id =
replica.fetch.backoff.ms =
advertised.host.name = null
quota.producer.default =
log.cleaner.io.buffer.size =
controlled.shutdown.retry.backoff.ms =
log.dir = /tmp/kafka-logs
log.flush.offset.checkpoint.interval.ms =
log.segment.delete.delay.ms =
num.partitions =
num.network.threads =
socket.request.max.bytes =
sasl.kerberos.ticket.renew.window.factor = 0.8
log.roll.ms = null
ssl.enabled.protocols = [TLSv1., TLSv1., TLSv1]
socket.send.buffer.bytes =
log.flush.interval.ms =
ssl.truststore.location = null
log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308
default.replication.factor =
metrics.sample.window.ms =
auto.leader.rebalance.enable = true
host.name =
ssl.truststore.type = JKS
advertised.port = null
max.connections.per.ip.overrides =
replica.fetch.min.bytes =
ssl.keystore.type = JKS
(kafka.server.KafkaConfig)
[-- ::,] INFO starting (kafka.server.KafkaServer)
[-- ::,] INFO Connecting to zookeeper on master:,slave1:,slave2: (kafka.server.KafkaServer)
[-- ::,] INFO Starting ZkClient event thread. (org.I0Itec.zkclient.ZkEventThread)
[-- ::,] INFO Client environment:zookeeper.version=3.4.-, built on // : GMT (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:host.name=master (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:java.version=1.7.0_79 (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:java.vendor=Oracle Corporation (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:java.home=/home/hadoop/app/jdk1..0_79/jre (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:java.class.path=/home/hadoop/app/jdk/lib:.:/home/hadoop/app/jdk/lib:/home/hadoop/app/jdk/jre/lib:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/scala-library-2.10..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/kafka_2.-0.9.0.1-test.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/validation-api-1.1..Final.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/slf4j-api-1.7..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jetty-io-9.2..v20150709.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/aopalliance-repackaged-2.4.-b31.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jopt-simple-3.2.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/javax.servlet-api-3.1..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jersey-client-2.22..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jersey-guava-2.22..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/kafka_2.-0.9.0.1.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/slf4j-log4j12-1.7..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/javax.annotation-api-1.2.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/connect-file-0.9.0.1.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/metrics-core-2.2..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/javax.inject-.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jersey-server-2.22..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/argparse4j-0.5..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/kafka-tools-0.9.0.1.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jackson-annotations-2.5..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jersey-common-2.22..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/connect-json-0.9.0.1.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jersey-media-jaxb-2.22..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jetty-util-9.2..v20150709.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/osgi-resource-locator-1.0..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/kafka_2.-0.9.0.1-sources.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/hk2-utils-2.4.-b31.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jetty-http-9.2..v20150709.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/kafka-clients-0.9.0.1.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/kafka_2.-0.9.0.1-javadoc.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jackson-databind-2.5..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/javassist-3.18.-GA.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jackson-jaxrs-base-2.5..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jackson-module-jaxb-annotations-2.5..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jackson-jaxrs-json-provider-2.5..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/javax.inject-2.4.-b31.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/connect-runtime-0.9.0.1.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jersey-container-servlet-2.22..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/kafka-log4j-appender-0.9.0.1.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/log4j-1.2..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/javax.ws.rs-api-2.0..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/zookeeper-3.4..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/hk2-api-2.4.-b31.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jetty-server-9.2..v20150709.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jetty-servlet-9.2..v20150709.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jersey-container-servlet-core-2.22..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/hk2-locator-2.4.-b31.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/connect-api-0.9.0.1.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jetty-security-9.2..v20150709.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/zkclient-0.7.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/jackson-core-2.5..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/lz4-1.2..jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/snappy-java-1.1.1.7.jar:/home/hadoop/app/kafka_2.-0.9.0.1/bin/../libs/kafka_2.-0.9.0.1-scaladoc.jar (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:java.io.tmpdir=/tmp (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:java.compiler=<NA> (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:os.name=Linux (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:os.arch=amd64 (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:os.version=2.6.-.el6.x86_64 (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:user.name=hadoop (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:user.home=/home/hadoop (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Client environment:user.dir=/home/hadoop/app/kafka_2.-0.9.0.1 (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Initiating client connection, connectString=master:,slave1:,slave2: sessionTimeout= watcher=org.I0Itec.zkclient.ZkClient@ (org.apache.zookeeper.ZooKeeper)
[-- ::,] INFO Waiting for keeper state SyncConnected (org.I0Itec.zkclient.ZkClient)
[-- ::,] INFO Opening socket connection to server slave1/192.168.80.146:. Will not attempt to authenticate using SASL (unknown error) (org.apache.zookeeper.ClientCnxn)
[-- ::,] INFO Socket connection established to slave1/192.168.80.146:, initiating session (org.apache.zookeeper.ClientCnxn)
[-- ::,] INFO Session establishment complete on server slave1/192.168.80.146:, sessionid = 0x25d831488090000, negotiated timeout = (org.apache.zookeeper.ClientCnxn)
[-- ::,] INFO zookeeper state changed (SyncConnected) (org.I0Itec.zkclient.ZkClient)
```

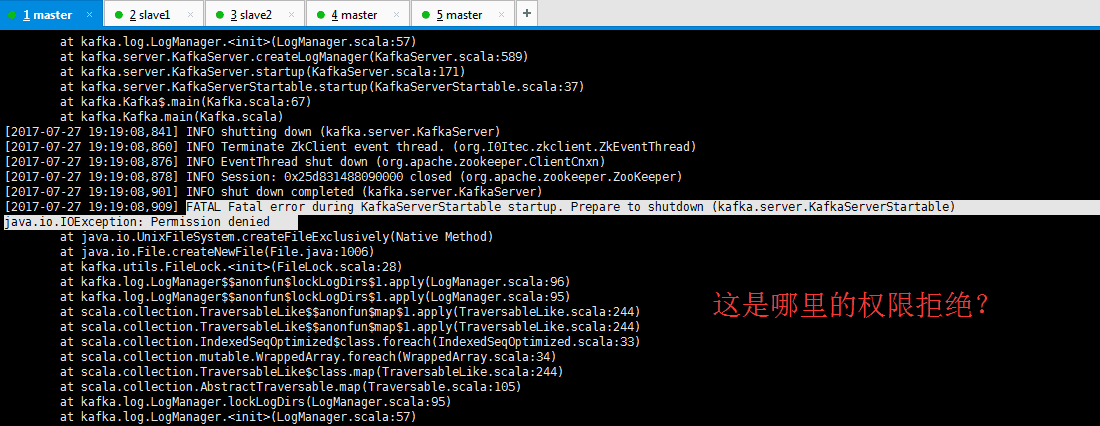

```
[-- ::,] FATAL Fatal error during KafkaServer startup. Prepare to shutdown (kafka.server.KafkaServer)
java.io.IOException: Permission denied
    at java.io.UnixFileSystem.createFileExclusively(Native Method)
    at java.io.File.createNewFile(File.java:)
    at kafka.utils.FileLock.<init>(FileLock.scala:)
    at kafka.log.LogManager$$anonfun$lockLogDirs$.apply(LogManager.scala:)
    at kafka.log.LogManager$$anonfun$lockLogDirs$.apply(LogManager.scala:)
    at scala.collection.TraversableLike$$anonfun$map$.apply(TraversableLike.scala:)
    at scala.collection.TraversableLike$$anonfun$map$.apply(TraversableLike.scala:)
    at scala.collection.IndexedSeqOptimized$class.foreach(IndexedSeqOptimized.scala:)
    at scala.collection.mutable.WrappedArray.foreach(WrappedArray.scala:)
    at scala.collection.TraversableLike$class.map(TraversableLike.scala:)
    at scala.collection.AbstractTraversable.map(Traversable.scala:)
    at kafka.log.LogManager.lockLogDirs(LogManager.scala:)
    at kafka.log.LogManager.<init>(LogManager.scala:)
    at kafka.server.KafkaServer.createLogManager(KafkaServer.scala:)
    at kafka.server.KafkaServer.startup(KafkaServer.scala:)
    at kafka.server.KafkaServerStartable.startup(KafkaServerStartable.scala:)
    at kafka.Kafka$.main(Kafka.scala:)
    at kafka.Kafka.main(Kafka.scala)
[-- ::,] INFO shutting down (kafka.server.KafkaServer)
[-- ::,] INFO Terminate ZkClient event thread. (org.I0Itec.zkclient.ZkEventThread)
```
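The trace shows where startup dies. LogManager.lockLogDirs builds a kafka.utils.FileLock for each log directory, and that calls java.io.File.createNewFile, which is refused. You can reproduce the same kind of check by hand with the small shell function below, run as the user that starts Kafka. This is a sketch, not part of Kafka: the function name and the probe file name are made up for illustration. It uses mktemp, which creates the file exclusively, as a stand-in for createNewFile.

```shell
# probe_create_file DIR  (hypothetical helper, not shipped with Kafka)
# Succeeds if the current user can create a brand-new file in DIR. This is
# roughly what Kafka's FileLock needs when it creates the lock file in each
# log directory. The probe file is removed again afterwards.
probe_create_file() {
  local dir=$1 probe
  # mktemp creates the file atomically and fails if it cannot (e.g. EACCES),
  # similar in spirit to java.io.File.createNewFile()
  probe=$(mktemp "$dir/.kafka-write-probe.XXXXXX" 2>/dev/null) || return 1
  rm -f -- "$probe"
}
```

Logged in as hadoop, run `probe_create_file /data/kafka-log/log/ && echo writable || echo "cannot create files here"`. While the directory is still owned by root:root with mode drwxr-xr-x, this should report that hadoop cannot create files there, which matches the IOException above.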

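Problem analysis

The trace shows where startup fails. LogManager.lockLogDirs creates a kafka.utils.FileLock for every log directory, and the underlying File.createNewFile call is refused with Permission denied. In other words, the user running Kafka cannot create a file inside the configured log directory (log.dirs = /data/kafka-log/log/).

A Permission denied at this point comes down to one of two things: the user that runs the startup command, or the permissions on the directory Kafka writes its logs to.

In my case it was the directory permissions. Kafka runs as hadoop (user.name=hadoop in the log), but I had created the log directory as root in a hurry and forgot to chown it.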
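server.properties decides which directories matter. The config dump above shows both log.dirs = /data/kafka-log/log/ and log.dir = /tmp/kafka-logs. When log.dirs is set it takes precedence, and log.dir (default /tmp/kafka-logs) is only the fallback. The sketch below reads those keys and reports whether each directory exists and is writable by the current user. It is a simplified parser that assumes plain `key=value` lines. Java properties files also allow `:` or whitespace as separators, plus line continuations, and this parser ignores those. All function names are hypothetical.

```shell
# prop_value FILE KEY  (hypothetical helper)
# Prints the value of the last "KEY=value" line in FILE, skipping # and ! comments.
prop_value() {
  awk -v key="$2" '
    /^[ \t]*[#!]/ { next }
    {
      i = index($0, "=")
      if (i == 0) next
      k = substr($0, 1, i - 1)
      v = substr($0, i + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) val = v
    }
    END { print val }
  ' "$1"
}

# kafka_log_dirs FILE: one directory per line (log.dirs, else log.dir, else /tmp/kafka-logs)
kafka_log_dirs() {
  local value
  value=$(prop_value "$1" log.dirs)
  [ -n "$value" ] || value=$(prop_value "$1" log.dir)
  [ -n "$value" ] || value=/tmp/kafka-logs
  # log.dirs is a comma-separated list; trim blanks around each entry
  printf '%s\n' "$value" | tr ',' '\n' | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' | grep -v '^$'
}

# check_log_dirs FILE: prints OK / NOT OK per directory, non-zero exit if any is unusable
check_log_dirs() {
  kafka_log_dirs "$1" | (
    status=0
    while IFS= read -r dir; do
      if [ -d "$dir" ] && [ -w "$dir" ]; then
        printf 'OK      %s\n' "$dir"
      else
        printf 'NOT OK  %s (missing or not writable by %s)\n' "$dir" "$(id -un)"
        status=1
      fi
    done
    exit "$status"
  )
}
```

Before the fix, running `check_log_dirs config/server.properties` as hadoop should flag /data/kafka-log/log/. A missing directory is also reported as NOT OK, even though Kafka may be able to create it itself when the parent is writable.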

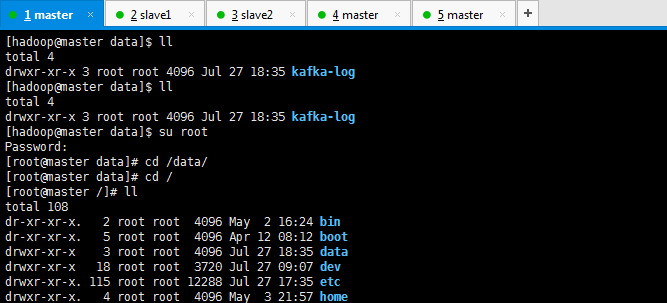

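Solution

In /data, kafka-log belongs to root:root with mode drwxr-xr-x, so hadoop can read it but not write to it. Switch to root and give /data to hadoop: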

[hadoop@master data]$ ll

total

drwxr-xr-x root root Jul : kafka-log

[hadoop@master data]$ ll

total

drwxr-xr-x root root Jul : kafka-log

[hadoop@master data]$ su root

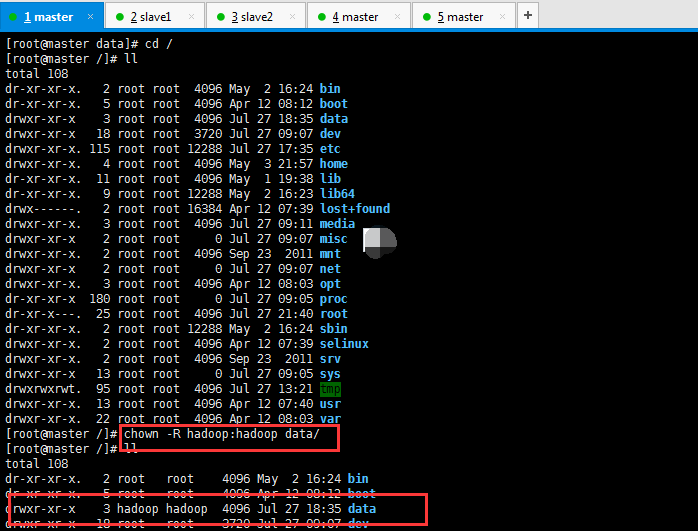

Password:

[root@master data]# cd /data/

[root@master data]# cd /

[root@master /]# ll

total

dr-xr-xr-x. root root May : bin

dr-xr-xr-x. root root Apr : boot

drwxr-xr-x root root Jul : data

drwxr-xr-x root root Jul : dev

drwxr-xr-x. root root Jul : etc

drwxr-xr-x. root root May : home

dr-xr-xr-x. root root May : lib

dr-xr-xr-x. root root May : lib64

drwx------. root root Apr : lost+found

drwxr-xr-x. root root Jul : media

drwxr-xr-x root root Jul : misc

drwxr-xr-x. root root Sep mnt

drwxr-xr-x root root Jul : net

drwxr-xr-x. root root Apr : opt

dr-xr-xr-x root root Jul : proc

dr-xr-x---. root root Jul : root

dr-xr-xr-x. root root May : sbin

drwxr-xr-x. root root Apr : selinux

drwxr-xr-x. root root Sep srv

drwxr-xr-x root root Jul : sys

drwxrwxrwt. root root Jul : tmp

drwxr-xr-x. root root Apr : usr

drwxr-xr-x. root root Apr : var

[root@master /]# chown -R hadoop:hadoop data/

[root@master /]# ll

total

dr-xr-xr-x. root root May : bin

dr-xr-xr-x. root root Apr : boot

drwxr-xr-x hadoop hadoop Jul : data

drwxr-xr-x root root Jul : dev

drwxr-xr-x. root root Jul : etc

drwxr-xr-x. root root May : home

dr-xr-xr-x. root root May : lib

dr-xr-xr-x. root root May : lib64

drwx------. root root Apr : lost+found

drwxr-xr-x. root root Jul : media

drwxr-xr-x root root Jul : misc

drwxr-xr-x. root root Sep mnt

drwxr-xr-x root root Jul : net

drwxr-xr-x. root root Apr : opt

dr-xr-xr-x root root Jul : proc

dr-xr-x---. root root Jul : root

dr-xr-xr-x. root root May : sbin

drwxr-xr-x. root root Apr : selinux

drwxr-xr-x. root root Sep srv

drwxr-xr-x root root Jul : sys

drwxrwxrwt. root root Jul : tmp

drwxr-xr-x. root root Apr : usr

drwxr-xr-x. root root Apr : var

[root@master /]#
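The chown above hands the whole /data tree to hadoop. If /data is shared with other services, a narrower option is to create the Kafka data directory up front and give only that subtree to the Kafka user. The sketch below assumes the broker runs as hadoop:hadoop, and the helper name is hypothetical. Run it as root (or via sudo) when the target owner is not the current user.

```shell
# prepare_log_dir OWNER GROUP DIR  (hypothetical helper)
# Creates DIR (with parents) if needed, gives the whole subtree to OWNER:GROUP,
# and prints the resulting directory entry so the owner can be checked.
prepare_log_dir() {
  local owner=$1 group=$2 dir=$3
  mkdir -p -- "$dir" || return 1
  chown -R -- "$owner:$group" "$dir" || return 1
  ls -ld -- "$dir"
}
```

For this setup that would be `prepare_log_dir hadoop hadoop /data/kafka-log/log` as root. The parent directories only need to stay traversable (the x bit) for hadoop, which drwxr-xr-x already provides.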

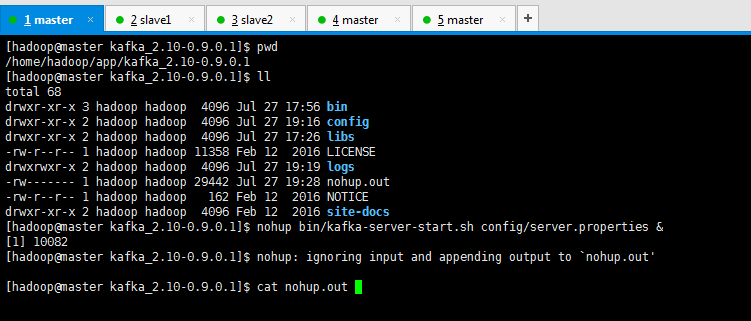

Then start Kafka again:

[hadoop@master kafka_2.-0.9.0.1]$ pwd

/home/hadoop/app/kafka_2.-0.9.0.1

[hadoop@master kafka_2.-0.9.0.1]$ ll

total

drwxr-xr-x hadoop hadoop Jul : bin

drwxr-xr-x hadoop hadoop Jul : config

drwxr-xr-x hadoop hadoop Jul : libs

-rw-r--r-- hadoop hadoop Feb LICENSE

drwxrwxr-x hadoop hadoop Jul : logs

-rw------- hadoop hadoop Jul : nohup.out

-rw-r--r-- hadoop hadoop Feb NOTICE

drwxr-xr-x hadoop hadoop Feb site-docs

[hadoop@master kafka_2.-0.9.0.1]$ nohup bin/kafka-server-start.sh config/server.properties &

[]

[hadoop@master kafka_2.-0.9.0.1]$ nohup: ignoring input and appending output to `nohup.out' [hadoop@master kafka_2.-0.9.0.1]$ cat nohup.out

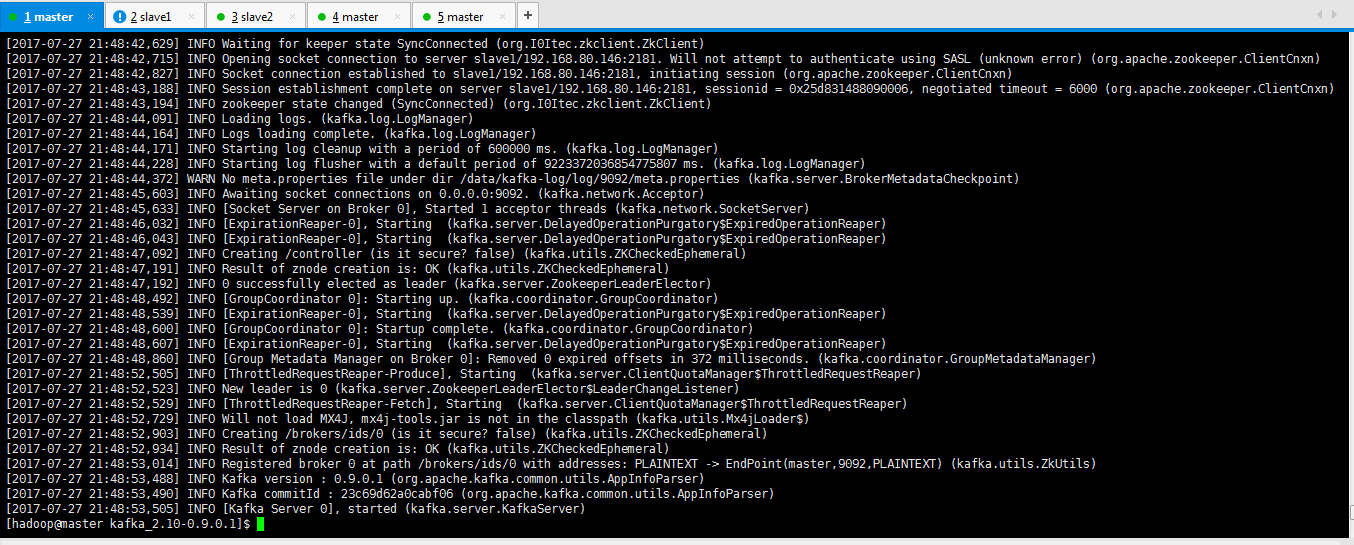

[-- ::,] INFO Waiting for keeper state SyncConnected (org.I0Itec.zkclient.ZkClient)

[-- ::,] INFO Opening socket connection to server slave1/192.168.80.146:. Will not attempt to authenticate using SASL (unknown error) (org.apache.zookeeper.ClientCnxn)

[-- ::,] INFO Socket connection established to slave1/192.168.80.146:, initiating session (org.apache.zookeeper.ClientCnxn)

[-- ::,] INFO Session establishment complete on server slave1/192.168.80.146:, sessionid = 0x25d831488090006, negotiated timeout = (org.apache.zookeeper.ClientCnxn)

[-- ::,] INFO zookeeper state changed (SyncConnected) (org.I0Itec.zkclient.ZkClient)

[-- ::,] INFO Loading logs. (kafka.log.LogManager)

[-- ::,] INFO Logs loading complete. (kafka.log.LogManager)

[-- ::,] INFO Starting log cleanup with a period of ms. (kafka.log.LogManager)

[-- ::,] INFO Starting log flusher with a default period of ms. (kafka.log.LogManager)

[-- ::,] WARN No meta.properties file under dir /data/kafka-log/log//meta.properties (kafka.server.BrokerMetadataCheckpoint)

[-- ::,] INFO Awaiting socket connections on 0.0.0.0:. (kafka.network.Acceptor)

[-- ::,] INFO [Socket Server on Broker ], Started acceptor threads (kafka.network.SocketServer)

[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO Creating /controller (is it secure? false) (kafka.utils.ZKCheckedEphemeral)

[-- ::,] INFO Result of znode creation is: OK (kafka.utils.ZKCheckedEphemeral)

[-- ::,] INFO successfully elected as leader (kafka.server.ZookeeperLeaderElector)

[-- ::,] INFO [GroupCoordinator ]: Starting up. (kafka.coordinator.GroupCoordinator)

[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO [GroupCoordinator ]: Startup complete. (kafka.coordinator.GroupCoordinator)

[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[-- ::,] INFO [Group Metadata Manager on Broker ]: Removed expired offsets in milliseconds. (kafka.coordinator.GroupMetadataManager)

[-- ::,] INFO [ThrottledRequestReaper-Produce], Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)

[-- ::,] INFO New leader is (kafka.server.ZookeeperLeaderElector$LeaderChangeListener)

[-- ::,] INFO [ThrottledRequestReaper-Fetch], Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)

[-- ::,] INFO Will not load MX4J, mx4j-tools.jar is not in the classpath (kafka.utils.Mx4jLoader$)

[-- ::,] INFO Creating /brokers/ids/ (is it secure? false) (kafka.utils.ZKCheckedEphemeral)

[-- ::,] INFO Result of znode creation is: OK (kafka.utils.ZKCheckedEphemeral)

[-- ::,] INFO Registered broker at path /brokers/ids/ with addresses: PLAINTEXT -> EndPoint(master,,PLAINTEXT) (kafka.utils.ZkUtils)

[-- ::,] INFO Kafka version : 0.9.0.1 (org.apache.kafka.common.utils.AppInfoParser)

[-- ::,] INFO Kafka commitId : 23c69d62a0cabf06 (org.apache.kafka.common.utils.AppInfoParser)

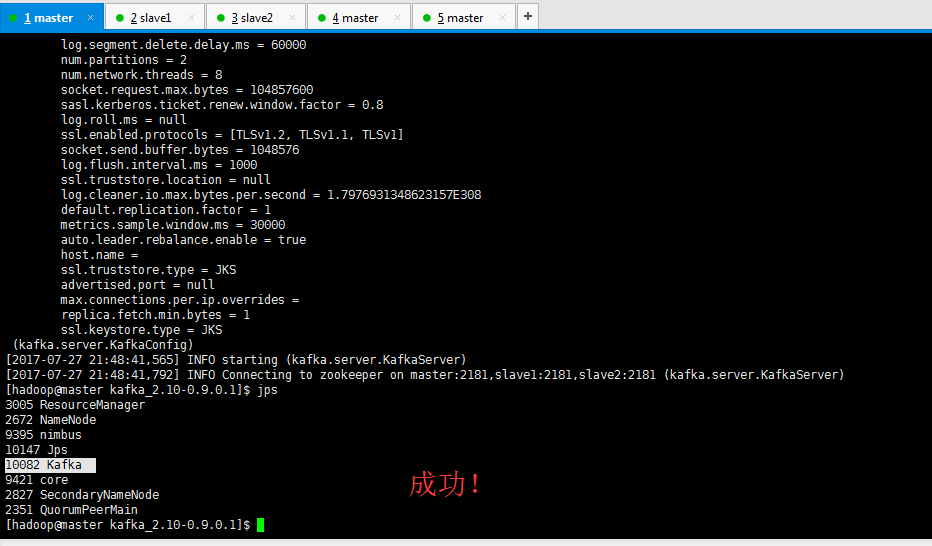

[-- ::,] INFO [Kafka Server 0], started (kafka.server.KafkaServer)

[hadoop@master kafka_2.-0.9.0.1]$
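nohup appends to nohup.out (note the "appending output to nohup.out" message). After a successful restart, the file therefore still contains the FATAL from the failed attempt, so grepping for FATAL alone is misleading. The sketch below looks only at the most recent startup marker. Both patterns are taken from the log lines in this post, and the function name is hypothetical.

```shell
# kafka_start_status LOGFILE  (hypothetical helper)
# Prints "started", "failed" or "unknown", based only on the most recent
# startup marker in LOGFILE (e.g. nohup.out, which nohup appends to).
kafka_start_status() {
  local last
  last=$(grep -E 'started \(kafka\.server\.KafkaServer\)|FATAL Fatal error during KafkaServer startup' "$1" 2>/dev/null | tail -n 1)
  case $last in
    *FATAL*) echo failed ;;
    *started*) echo started ;;
    *) echo unknown ;;
  esac
}
```

After the restart shown above, `kafka_start_status nohup.out` prints `started`, because the last marker in the file is the "[Kafka Server 0], started" line.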

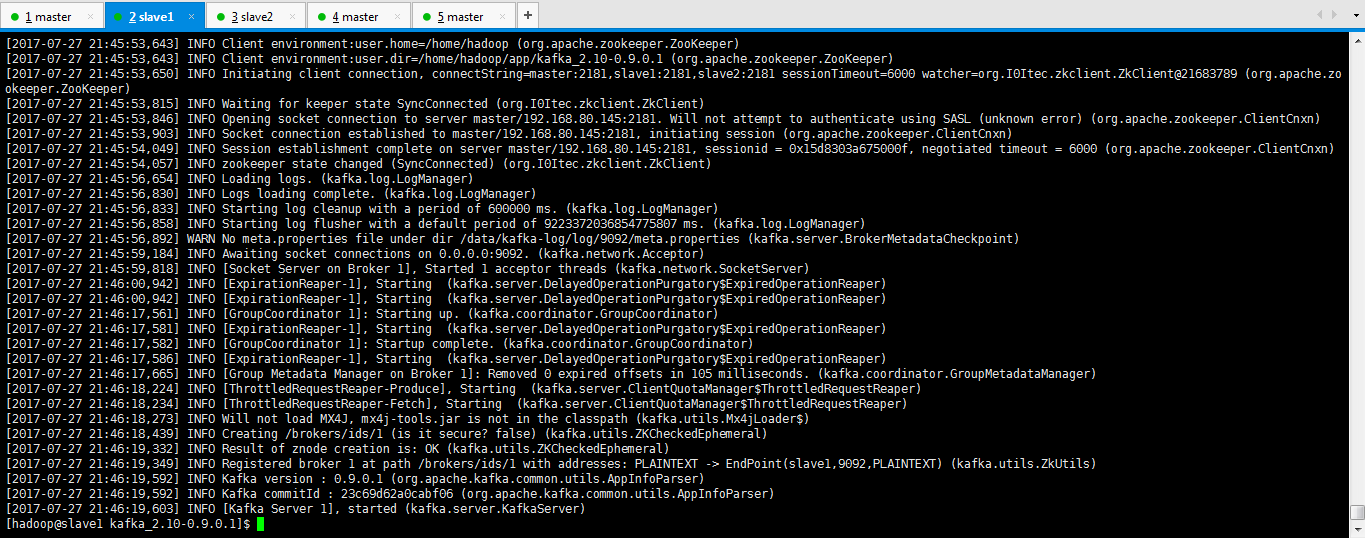

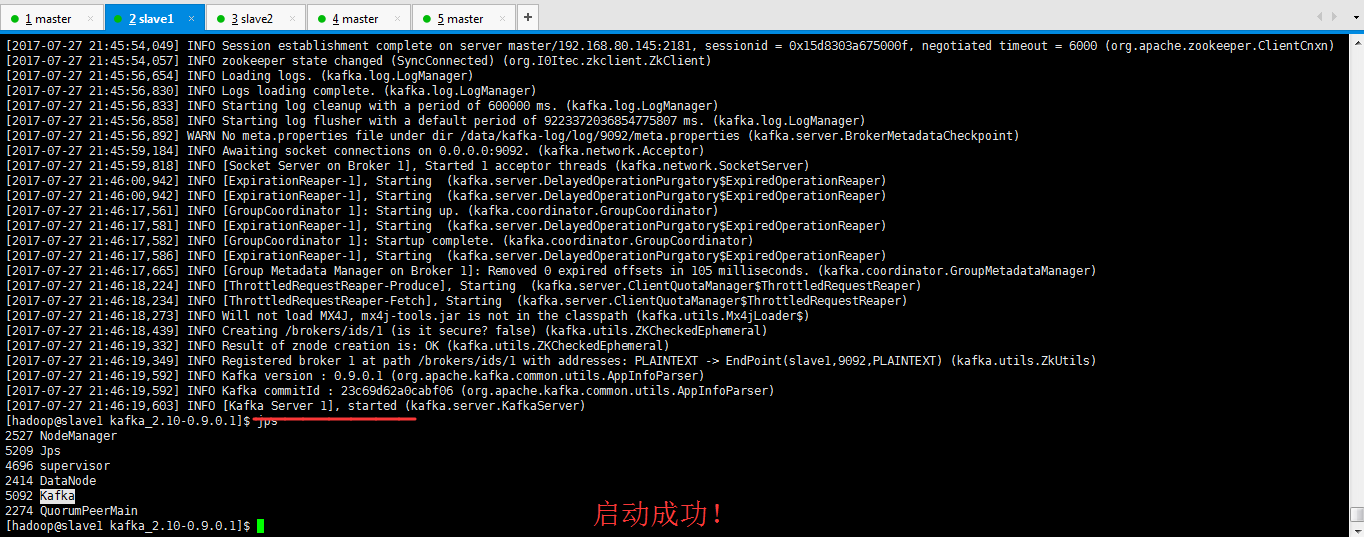

On slave1:

```
[-- ::,] INFO Session establishment complete on server master/192.168.80.145:, sessionid = 0x15d8303a675000f, negotiated timeout = (org.apache.zookeeper.ClientCnxn)
[-- ::,] INFO zookeeper state changed (SyncConnected) (org.I0Itec.zkclient.ZkClient)
[-- ::,] INFO Loading logs. (kafka.log.LogManager)
[-- ::,] INFO Logs loading complete. (kafka.log.LogManager)
[-- ::,] INFO Starting log cleanup with a period of ms. (kafka.log.LogManager)
[-- ::,] INFO Starting log flusher with a default period of ms. (kafka.log.LogManager)
[-- ::,] WARN No meta.properties file under dir /data/kafka-log/log//meta.properties (kafka.server.BrokerMetadataCheckpoint)
[-- ::,] INFO Awaiting socket connections on 0.0.0.0:. (kafka.network.Acceptor)
[-- ::,] INFO [Socket Server on Broker ], Started acceptor threads (kafka.network.SocketServer)
[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[-- ::,] INFO [GroupCoordinator ]: Starting up. (kafka.coordinator.GroupCoordinator)
[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[-- ::,] INFO [GroupCoordinator ]: Startup complete. (kafka.coordinator.GroupCoordinator)
[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[-- ::,] INFO [Group Metadata Manager on Broker ]: Removed expired offsets in milliseconds. (kafka.coordinator.GroupMetadataManager)
[-- ::,] INFO [ThrottledRequestReaper-Produce], Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)
[-- ::,] INFO [ThrottledRequestReaper-Fetch], Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)
[-- ::,] INFO Will not load MX4J, mx4j-tools.jar is not in the classpath (kafka.utils.Mx4jLoader$)
[-- ::,] INFO Creating /brokers/ids/ (is it secure? false) (kafka.utils.ZKCheckedEphemeral)
[-- ::,] INFO Result of znode creation is: OK (kafka.utils.ZKCheckedEphemeral)
[-- ::,] INFO Registered broker at path /brokers/ids/ with addresses: PLAINTEXT -> EndPoint(slave1,,PLAINTEXT) (kafka.utils.ZkUtils)
[-- ::,] INFO Kafka version : 0.9.0.1 (org.apache.kafka.common.utils.AppInfoParser)
[-- ::,] INFO Kafka commitId : 23c69d62a0cabf06 (org.apache.kafka.common.utils.AppInfoParser)
[-- ::,] INFO [Kafka Server ], started (kafka.server.KafkaServer)
[hadoop@slave1 kafka_2.-0.9.0.1]$ jps
NodeManager
Jps
supervisor
DataNode
Kafka
QuorumPeerMain
[hadoop@slave1 kafka_2.-0.9.0.1]$
```

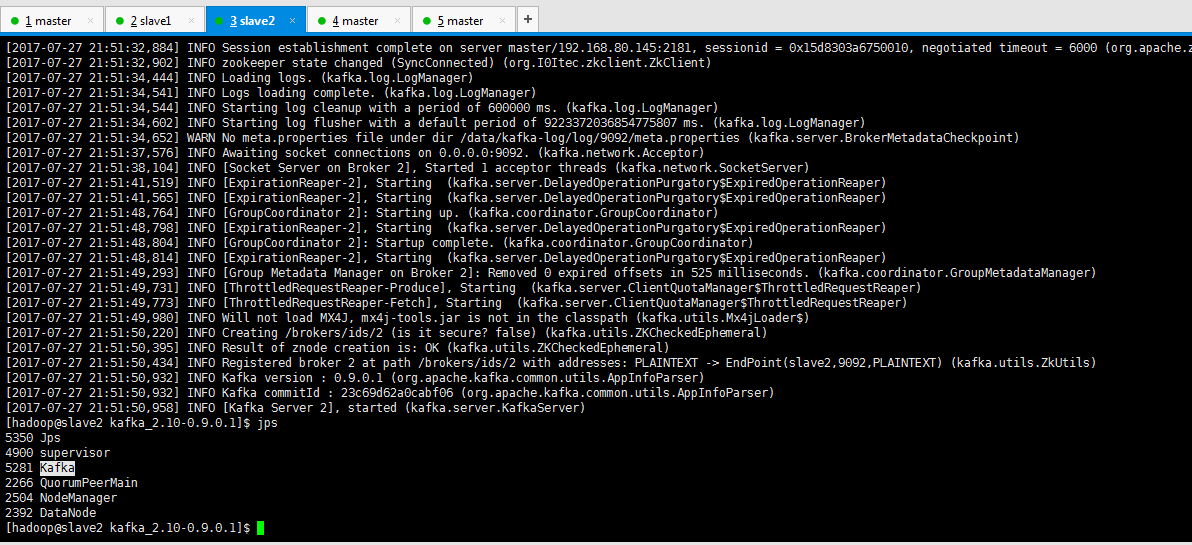

On slave2:

```
[-- ::,] INFO Session establishment complete on server master/192.168.80.145:, sessionid = 0x15d8303a6750010, negotiated timeout = (org.apache.zookeeper.ClientCnxn)
[-- ::,] INFO zookeeper state changed (SyncConnected) (org.I0Itec.zkclient.ZkClient)
[-- ::,] INFO Loading logs. (kafka.log.LogManager)
[-- ::,] INFO Logs loading complete. (kafka.log.LogManager)
[-- ::,] INFO Starting log cleanup with a period of ms. (kafka.log.LogManager)
[-- ::,] INFO Starting log flusher with a default period of ms. (kafka.log.LogManager)
[-- ::,] WARN No meta.properties file under dir /data/kafka-log/log//meta.properties (kafka.server.BrokerMetadataCheckpoint)
[-- ::,] INFO Awaiting socket connections on 0.0.0.0:. (kafka.network.Acceptor)
[-- ::,] INFO [Socket Server on Broker ], Started acceptor threads (kafka.network.SocketServer)
[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[-- ::,] INFO [GroupCoordinator ]: Starting up. (kafka.coordinator.GroupCoordinator)
[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[-- ::,] INFO [GroupCoordinator ]: Startup complete. (kafka.coordinator.GroupCoordinator)
[-- ::,] INFO [ExpirationReaper-], Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)
[-- ::,] INFO [Group Metadata Manager on Broker ]: Removed expired offsets in milliseconds. (kafka.coordinator.GroupMetadataManager)
[-- ::,] INFO [ThrottledRequestReaper-Produce], Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)
[-- ::,] INFO [ThrottledRequestReaper-Fetch], Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)
[-- ::,] INFO Will not load MX4J, mx4j-tools.jar is not in the classpath (kafka.utils.Mx4jLoader$)
[-- ::,] INFO Creating /brokers/ids/ (is it secure? false) (kafka.utils.ZKCheckedEphemeral)
[-- ::,] INFO Result of znode creation is: OK (kafka.utils.ZKCheckedEphemeral)
[-- ::,] INFO Registered broker at path /brokers/ids/ with addresses: PLAINTEXT -> EndPoint(slave2,,PLAINTEXT) (kafka.utils.ZkUtils)
[-- ::,] INFO Kafka version : 0.9.0.1 (org.apache.kafka.common.utils.AppInfoParser)
[-- ::,] INFO Kafka commitId : 23c69d62a0cabf06 (org.apache.kafka.common.utils.AppInfoParser)
[-- ::,] INFO [Kafka Server ], started (kafka.server.KafkaServer)
[hadoop@slave2 kafka_2.-0.9.0.1]$ jps
Jps
supervisor
Kafka
QuorumPeerMain
NodeManager
DataNode
[hadoop@slave2 kafka_2.-0.9.0.1]$
```
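Once the broker is up, jps on each node lists a Kafka process. The sketch below checks all three nodes from one place. It assumes passwordless ssh between master, slave1 and slave2 as the hadoop user, and that jps is on the PATH of a non-interactive ssh session. Often it is not, and in that case use the full path to jps in the JDK's bin directory. The helper names are hypothetical.

```shell
# has_kafka_process  (hypothetical helper)
# Reads `jps` output on stdin and succeeds if a process named "Kafka" is listed.
# jps prints "<pid> <name>", so the name is the last field of each line.
has_kafka_process() {
  awk '$NF == "Kafka" { found = 1 } END { exit !found }'
}

# check_cluster HOST...  (hypothetical helper; assumes passwordless ssh and jps on PATH)
check_cluster() {
  local host
  for host in "$@"; do
    # -n: do not let ssh read from this script's stdin
    if ssh -n "$host" jps | has_kafka_process; then
      echo "$host: Kafka running"
    else
      echo "$host: Kafka NOT running"
    fi
  done
}
```

For this cluster the call would be `check_cluster master slave1 slave2`.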

Success!
