A Python 3 Implementation of KNN

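I was lucky enough to come across this article on KNN. It is well written, so I won't repeat the explanation here and will just post the code, with small changes. (The original was written for Python 2; I converted it to Python 3, and the change is mostly in print.)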
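Since the port mostly comes down to print, here is a minimal sketch of that one change. The values of `testX` and `outputLabel` are placeholders chosen for illustration, not results from the code below.

```python
import io

testX = [1.2, 1.0]
outputLabel = "A"  # placeholder value for illustration only

# Python 2 (statement form, a SyntaxError under Python 3):
#     print "Your input is:", testX, "and classified to class: ", outputLabel

# Python 3 (function form): the same arguments go inside parentheses.
print("Your input is:", testX, "and classified to class: ", outputLabel)

# print() is an ordinary function in Python 3, so keyword arguments such as
# sep, end and file are available; here the text is captured in a buffer.
buffer = io.StringIO()
print("class", outputLabel, sep="=", file=buffer)
captured = buffer.getvalue()
```

Everything else in the scripts below (NumPy calls, dictionaries, loops) runs unchanged on both versions.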

Environment: Windows 7 32-bit + Spyder + Anaconda 3.5

1. Basics

    # -*- coding: utf-8 -*-
    """
    Created on Sun Nov 6 16:09:00 2016
    @author: Administrator
    """
    # Input:
    #   newInput: the data point to classify (1xM)
    #   dataSet:  the known data (NxM)
    #   labels:   the labels of the known data (1xN)
    #   k:        the number of nearest neighbors to use
    #
    # Output:
    #   the class label of the data point to classify

    from numpy import *

    # create a dataset which contains 4 samples with 2 classes
    def createDataSet():
        # create a matrix: each row is a sample
        group = array([[1.0, 0.9], [1.0, 1.0], [0.1, 0.2], [0.0, 0.1]])
        labels = ['A', 'A', 'B', 'B']
        return group, labels

    # classify using KNN
    def KNNClassify(newInput, dataSet, labels, k):
        numSamples = dataSet.shape[0]  # row number

        # step 1: calculate Euclidean distance
        # tile(A, reps): construct an array by repeating A reps times
        diff = tile(newInput, (numSamples, 1)) - dataSet
        squareDiff = diff ** 2
        squareDist = sum(squareDiff, axis=1)  # sum is performed by row
        distance = squareDist ** 0.5

        # step 2: sort the distance
        # argsort() returns the indices that would sort an array in ascending order
        sortedDistIndices = argsort(distance)

        classCount = {}
        for i in range(k):
            # step 3: choose the min k distance
            voteLabel = labels[sortedDistIndices[i]]

            # step 4: count the times labels occur
            # when the key voteLabel is not in dictionary classCount,
            # get() will return 0
            classCount[voteLabel] = classCount.get(voteLabel, 0) + 1

        # step 5: the max vote class will return
        # (loop variables renamed so they no longer shadow the parameter k)
        maxCount = 0
        for label, count in classCount.items():
            if count > maxCount:
                maxCount = count
                maxIndex = label
        return maxIndex

    # test
    dataSet, labels = createDataSet()

    testX = array([1.2, 1.0])
    k = 3
    outputLabel = KNNClassify(testX, dataSet, labels, k)
    print("Your input is:", testX, "and classified to class: ", outputLabel)

    testX = array([0.1, 0.3])
    k = 3
    outputLabel = KNNClassify(testX, dataSet, labels, k)
    print("Your input is:", testX, "and classified to class: ", outputLabel)

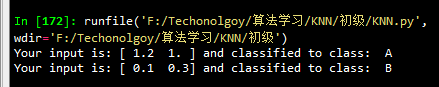

运行结果:
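The distance step in KNNClassify repeats the query point with tile before subtracting. As an alternative sketch (not the code from the article this post follows), NumPy broadcasting can do the same subtraction directly, and `collections.Counter` can handle the vote. The names `knn_classify`, `group_labels`, `label_1` and `label_2` are hypothetical and only used for this illustration.

```python
from collections import Counter

import numpy as np


def knn_classify(new_input, data_set, labels, k):
    """Hypothetical broadcasting-based variant of KNNClassify."""
    # (N, M) - (M,) broadcasts the query point across every row,
    # so no explicit tile() call is needed.
    diff = np.asarray(data_set) - np.asarray(new_input)
    distances = np.sqrt((diff ** 2).sum(axis=1))
    # Indices of the k smallest distances.
    nearest = np.argsort(distances)[:k]
    # Count how often each label occurs among the k neighbors.
    votes = Counter(labels[i] for i in nearest)
    # most_common(1) returns [(label, count)] for the top-voted label.
    return votes.most_common(1)[0][0]


group = np.array([[1.0, 0.9], [1.0, 1.0], [0.1, 0.2], [0.0, 0.1]])
group_labels = ['A', 'A', 'B', 'B']

label_1 = knn_classify(np.array([1.2, 1.0]), group, group_labels, 3)
label_2 = knn_classify(np.array([0.1, 0.3]), group, group_labels, 3)
print(label_1, label_2)  # A B
```

With the four sample points from createDataSet, the first query has two 'A' neighbors among its three nearest and the second has two 'B' neighbors, which is why the labels come out as A and B.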

2. Advanced

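The handwriting recognition dataset used in this part can be downloaded here. The blog post mentioned above already describes the data clearly.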
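Judging only from the loader code in this section, each sample appears to be a plain-text file of 32 lines with 32 digit characters per line, and the class label is the number before the underscore in a file name such as "1_18.txt". The sketch below makes that assumption explicit by writing a synthetic sample to a temporary directory and flattening it. The helper names `img_to_vector` and `label_from_filename` and the fabricated sample are hypothetical and are not part of the dataset.

```python
import os
import tempfile

import numpy as np


def img_to_vector(path, rows=32, cols=32):
    """Flatten a rows x cols text image of digit characters into a 1 x (rows*cols) array."""
    vector = np.zeros((1, rows * cols))
    with open(path) as file_in:
        for row in range(rows):
            line = file_in.readline()
            for col in range(cols):
                vector[0, row * cols + col] = int(line[col])
    return vector


def label_from_filename(filename):
    """'1_18.txt' -> 1: the number before the underscore is taken as the label."""
    return int(filename.split('_')[0])


# Synthetic sample (assumed format): all zeros except a vertical stroke in column 16.
lines = ['0' * 16 + '1' + '0' * 15 for _ in range(32)]

with tempfile.TemporaryDirectory() as tmp_dir:
    sample_name = '1_18.txt'
    sample_path = os.path.join(tmp_dir, sample_name)
    with open(sample_path, 'w') as f:
        f.write('\n'.join(lines) + '\n')
    vec = img_to_vector(sample_path)
    label = label_from_filename(sample_name)

print(vec.shape, int(vec.sum()), label)  # (1, 1024) 32 1
```

Each image therefore becomes one row of 1024 features, which matches the `zeros((numSamples, 1024))` arrays allocated in loadDataSet.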

    # -*- coding: utf-8 -*-
    """
    Created on Sun Nov 6 16:09:00 2016
    @author: Administrator
    """
    # Input:
    #   newInput: the data point to classify (1xM)
    #   dataSet:  the known data (NxM)
    #   labels:   the labels of the known data (1xN)
    #   k:        the number of nearest neighbors to use
    #
    # Output:
    #   the class label of the data point to classify

    import os  # needed for os.listdir() in loadDataSet
    from numpy import *

    # classify using KNN
    def KNNClassify(newInput, dataSet, labels, k):
        numSamples = dataSet.shape[0]  # row number

        # step 1: calculate Euclidean distance
        # tile(A, reps): construct an array by repeating A reps times
        diff = tile(newInput, (numSamples, 1)) - dataSet
        squareDiff = diff ** 2
        squareDist = sum(squareDiff, axis=1)  # sum is performed by row
        distance = squareDist ** 0.5

        # step 2: sort the distance
        # argsort() returns the indices that would sort an array in ascending order
        sortedDistIndices = argsort(distance)

        classCount = {}
        for i in range(k):
            # step 3: choose the min k distance
            voteLabel = labels[sortedDistIndices[i]]

            # step 4: count the times labels occur
            # when the key voteLabel is not in dictionary classCount,
            # get() will return 0
            classCount[voteLabel] = classCount.get(voteLabel, 0) + 1

        # step 5: the max vote class will return
        # (loop variables renamed so they no longer shadow the parameter k)
        maxCount = 0
        for label, count in classCount.items():
            if count > maxCount:
                maxCount = count
                maxIndex = label
        return maxIndex

    # convert image to vector
    def img2vector(filename):
        rows = 32
        cols = 32
        imgVector = zeros((1, rows * cols))
        # the with-block closes the file once the image has been read
        with open(filename) as fileIn:
            for row in range(rows):
                lineStr = fileIn.readline()
                for col in range(cols):
                    imgVector[0, row * cols + col] = int(lineStr[col])
        return imgVector

    # load dataSet
    def loadDataSet():
        ## step 1: Getting training set
        print("---Getting training set...")
        dataSetDir = 'F:\\Techonolgoy\\算法学习\\KNN\\进阶\\'
        trainingFileList = os.listdir(dataSetDir + 'trainingDigits')  # load the training set
        numSamples = len(trainingFileList)

        train_x = zeros((numSamples, 1024))
        train_y = []
        for i in range(numSamples):
            filename = trainingFileList[i]

            # get train_x
            train_x[i, :] = img2vector(dataSetDir + 'trainingDigits/%s' % filename)

            # get label from file name such as "1_18.txt"
            label = int(filename.split('_')[0])  # gives 1 for "1_18.txt"
            train_y.append(label)

        ## step 2: Getting testing set
        print("---Getting testing set...")
        testingFileList = os.listdir(dataSetDir + 'testDigits')  # load the testing set
        numSamples = len(testingFileList)

        test_x = zeros((numSamples, 1024))
        test_y = []
        for i in range(numSamples):
            filename = testingFileList[i]

            # get test_x
            test_x[i, :] = img2vector(dataSetDir + 'testDigits/%s' % filename)

            # get label from file name such as "1_18.txt"
            label = int(filename.split('_')[0])  # gives 1 for "1_18.txt"
            test_y.append(label)

        return train_x, train_y, test_x, test_y

    # test hand writing class
    def testHandWritingClass():
        ## step 1: load data
        print("step 1: load data...")
        train_x, train_y, test_x, test_y = loadDataSet()

        ## step 2: training...
        print("step 2: training...")
        pass  # KNN has no training phase; the training set is used directly

        ## step 3: testing
        print("step 3: testing...")
        numTestSamples = test_x.shape[0]
        matchCount = 0
        for i in range(numTestSamples):
            predict = KNNClassify(test_x[i], train_x, train_y, 3)
            if predict == test_y[i]:
                matchCount += 1
        accuracy = float(matchCount) / numTestSamples

        ## step 4: show the result
        print("step 4: show the result...")
        print('The classify accuracy is: %.2f%%' % (accuracy * 100))

    testHandWritingClass()

Output:
