XCTest (Part 2)

New tool sets are making it easier and easier to engage in genuine agile development on iOS. In particular, true test-driven development—which was formerly a hard, upstream slog on iOS—is becoming increasingly attainable. Here’s how we’re doing it here at the HowAboutWe offices in DUMBO, Brooklyn.

Agile development has long been all the rage; indeed, in most modern development shops the great agile methodologies are old hat. If you come from a software background like Ruby on Rails, Python, or certain Java niches, you may—until recently—have experienced a small jolt of culture shock when encountering the deep obstacles that agile development practices faced on the iOS platform.

The lengthy iOS app approval and manual user update process runs against the grain of frequent delivery. IDE support for key agile coding practices like refactoring is weak. And more glaring than anything else, the dearth of great tools and established practices for automated testing has made the agile ideal of true test-driven development (TDD) hard to attain.

All this is changing, though, and rapidly.

This article outlines our experience using TDD to build HowAboutWe Dating for iPad and iPhone. We'll describe the stack of tools we use for testing and continuous integration and how we use them to speed the delivery of quality software.

When we made automated tests a requirement for completing a feature or bug-fix ticket, our QA churn dropped radically; our crash instances plummeted; developer confidence improved because we saw the risk of making changes go down; and we could better predict our release readiness without emergency feature cuts.

Our most important tools are Kiwi for unit testing our model and controller logic (what Xcode calls "logic tests"), KIF for integration testing of user-facing behavior, and CruiseControl.rb for continuous integration to keep us honest. We also have some key practices that guide our use of these tools.

Tool Number One: Kiwi for Unit Testing

If you've ever used RSpec, you're familiar with the likes of:

```ruby
describe RingOfPower do
  it 'takes a name in the constructor' do
    my_precious = RingOfPower.new('The One Ring')
    my_precious.name.should eq('The One Ring')
  end
end
```

Allen Ding's Kiwi is a testing framework for iOS with an RSpec-inspired syntax. It makes slick use of Objective-C blocks and lends itself to readable, contextualized tests.

```objc
#import <Kiwi/Kiwi.h>
// Sting and Orc are illustrative classes; import their headers here too.

SPEC_BEGIN(StingSpec)

describe(@"Sting", ^{
    it(@"does not glow, normally", ^{
        [[theValue([Sting uniqueInstance].isGlowing) should] beFalse];
    });

    context(@"there's an orc about", ^{
        __block Orc *anOrc = nil;

        beforeEach(^{
            anOrc = [[Orc alloc] init];
        });

        it(@"glows", ^{
            [[theValue([Sting uniqueInstance].isGlowing) should] beTrue];
        });
    });
});

SPEC_END
```

Kiwi is a very complete framework, with many of the levers and knobs you'd reach for regularly in RSpec, like:

- Nestable contexts

- Blocks to call before and after each or all specs in the context

- A rich set of expectations

- Mocks and stubs

- Asynchronous testing

In addition, Kiwi is built on top of OCUnit, which means that it integrates seamlessly with Xcode logic tests and that you can reuse your old OCUnit tests, if you want to do a whole-hog migration to Kiwi. We prefer Kiwi to raw OCUnit, mainly for the elegant syntax—the nested blocks are easy to scan, and the specs are about as smooth to write as you could hope for in Objective-C.

How We Use Kiwi

With our models (most of which are subclassed from NSManagedObject), we test all the code not generated for us. This includes parsing JSON from our API into Objective-C instances; all model-level internal logic, such as converting a user's gender and orientation into a complementary set of genders and orientations to search for; and important inter-model interactions, as between messages and message threads.
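
To illustrate the kind of model-level logic meant here, the sketch below treats a search preference as a complementary match: each person's gender must be in the set the other person is seeking. It is written in plain C, which compiles unchanged in an Objective-C `.m` file. The `Gender`/`Orientation` enums, the bitmask encoding, and the function names are hypothetical simplifications, not the app's actual schema.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical, deliberately simplified model types. */
typedef enum { GENDER_MAN = 1 << 0, GENDER_WOMAN = 1 << 1 } Gender;
typedef enum { ORIENTATION_STRAIGHT, ORIENTATION_GAY, ORIENTATION_BI } Orientation;

/* Bitmask of the genders a person with this gender and orientation is seeking. */
static unsigned sought_genders(Gender g, Orientation o) {
    assert(g == GENDER_MAN || g == GENDER_WOMAN);
    Gender other = (g == GENDER_MAN) ? GENDER_WOMAN : GENDER_MAN;
    switch (o) {
    case ORIENTATION_STRAIGHT: return other;
    case ORIENTATION_GAY:      return g;
    case ORIENTATION_BI:       return GENDER_MAN | GENDER_WOMAN;
    }
    return 0;
}

/* A candidate belongs in the user's results only if each is seeking the other's gender. */
static bool is_complementary(Gender user_g, Orientation user_o,
                             Gender cand_g, Orientation cand_o) {
    return (sought_genders(user_g, user_o) & cand_g) != 0
        && (sought_genders(cand_g, cand_o) & user_g) != 0;
}
```

The search set for a user is every (gender, orientation) pair for which `is_complementary` holds. Small, pure functions like these are exactly what a spec can pin down exhaustively.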

Helpers and categories are another place where Kiwi and TDD shine. We've test-driven a set of CGRect helper functions that aid us in smart photo cropping; a photo cache; and a category of time- and sanity-saving methods on NSLayoutConstraint.
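
The article doesn't show its cropping helpers. As a hedged example of the genre, here is a centered aspect-ratio crop: a pure geometric function that is easy to test-drive. It swaps in a simple centered crop for whatever "smart" logic the app actually uses. `Rect` is a hypothetical stand-in with the same fields as `CGRect`, so the sketch builds without CoreGraphics; in an app you would take and return `CGRect`.

```c
#include <assert.h>

/* Stand-in with the same fields as CGRect (origin.x/y, size.width/height). */
typedef struct { double x, y, width, height; } Rect;

/* Largest rect with the given aspect ratio (width / height) centered inside src. */
static Rect centered_crop(Rect src, double aspect) {
    assert(src.width > 0 && src.height > 0 && aspect > 0);
    Rect crop = src;
    if (src.width / src.height > aspect) {
        /* Source is too wide: keep the full height, trim left and right equally. */
        crop.width = src.height * aspect;
        crop.x = src.x + (src.width - crop.width) / 2.0;
    } else {
        /* Source is too tall (or exact): keep the full width, trim top and bottom. */
        crop.height = src.width / aspect;
        crop.y = src.y + (src.height - crop.height) / 2.0;
    }
    return crop;
}
```

For example, a 400×300 photo cropped to a square becomes the 300×300 region starting at x = 50.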

We've also been driving toward thinning out our view controllers, and a lot of that involves factoring complex code into separate, single-responsibility objects. An example: In our app's messaging module, we offer an Inbox, a Sent messages folder, and an Archive folder. The three boxes have different behaviors (e.g., you can only archive a thread from the Inbox), and an earlier revision of the messaging view controller had a lot of if-inbox-then-do-X-else-if-sent-do-Y-else, plus a lot of code to make sure the correct message folder was loaded and visible, that Sent and Inbox were properly synced but sorted slightly differently, different empty state strings were displayed for each folder, etc.

Fat controllers and repeated if-else chains are both code smells, and we used Kiwi tests to drive out a single solution to both of them: a separate MessageStore object that handled the juggling of messages and threads. The messaging view controller tells the MessageStore when the user switches modes and queries the MessageStore for the contents of the current folder, appropriate loading and empty strings, and for yes/no answers to behavioral questions like, "Should I expose an Archive button?" The controller is slimmed and the chained if-else-if statements are replaced by data structures that will be easily extensible if we decide to add a fourth folder.

Kiwi specs were integral to building the MessageStore with minimalism and correctness. To give you a taste, here are two specs that cover message-archiving behavior:

```objc
beforeEach(^{ messageStore = [[MessageStore alloc] init]; }); // uninitialized
afterEach(^{ messageStore = nil; });

it(@"says whether you can archive messages in the current folder", ^{
    [[theBlock(^{ [messageStore canArchiveThreads]; }) should] raise];

    messageStore.mode = MessageStoreInbox;
    [[theValue(messageStore.canArchiveThreads) should] equal:theValue(YES)];

    messageStore.mode = MessageStoreSent;
    [[theValue(messageStore.canArchiveThreads) should] equal:theValue(NO)];

    messageStore.mode = MessageStoreArchive;
    [[theValue(messageStore.canArchiveThreads) should] equal:theValue(NO)];
});
```

This first spec tells us that if no mode has been set (the "uninitialized" state from the beforeEach), the MessageStore should throw an exception when asked whether archiving behavior should be exposed; otherwise, it should give a boolean answer appropriate to its current mode. If the user requests that a thread be archived, the MessageStore handles that, as defined in the second spec:

```objc
it(@"lets you archive an item from the inbox only", ^{
    messageStore.mode = MessageStoreInbox;
    [messageStore addThreadIDs:@[@123, @234, @345]];

    messageStore.mode = MessageStoreSent;
    [[theBlock(^{ [messageStore archiveThreadAtIndex:1u to:1u]; }) should] raise];

    messageStore.mode = MessageStoreArchive;
    [messageStore addThreadIDs:@[@456, @567, @678]];
    [[theBlock(^{ [messageStore archiveThreadAtIndex:1u to:1u]; }) should] raise];

    messageStore.mode = MessageStoreInbox;
    [[theBlock(^{ [messageStore archiveThreadAtIndex:10u to:1u]; }) should] raise];
    [[theBlock(^{ [messageStore archiveThreadAtIndex:1u to:10u]; }) should] raise];

    [messageStore archiveThreadAtIndex:1u to:2u];
    [[messageStore.collection should] equal:@[@123, @345]];

    messageStore.mode = MessageStoreArchive;
    [[messageStore.collection should] equal:@[@456, @567, @234, @678]];
});
```

This spec sets up an Inbox containing message objects (here represented by some dummy NSNumber objects—the MessageStore does not actually care about the type of the objects it is holding) and mimics various requests to pull an object from the Inbox and insert it into a particular place in the archive folder. For modes where the user should not be allowed to archive messages (as defined in the previous spec) or when invalid indices in the Inbox or archive collections are specified, an exception should be thrown; otherwise, the appropriate object should change folders.

The full spec is about 250 LoC, and canonical red-green-refactor TDD drove out an implementation of about 200 LoC. Visible, facile metrics like this scare some people off TDD, because they just see the cost of more code; I see this and know that I've written and tested a well-specified, tight bundle of logic, and I took one of the flakier, harder-to-maintain pieces of our app and broke it into solid, loosely-coupled modules that work reliably. The test-driven MessageStore and the concomitant simplification of the messaging view controller purged a whole class of hard-to-diagnose bugs from our issue tracker. When it comes to stabilizing the most-used parts of your app, 250 lines of straightforward, declarative test code is cheap.
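
The behavior pinned down by those two specs can be sketched in plain C to show the table-driven shape that replaced the if-else chains. This is a hypothetical analogue, not the app's MessageStore. Where the Objective-C class raises, these functions return `false`. Thread IDs are `int`s here (the real store holds arbitrary objects), and the empty-state strings are placeholders.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

typedef enum { MODE_NONE = -1, MODE_INBOX, MODE_SENT, MODE_ARCHIVE, MODE_COUNT } StoreMode;

/* Per-folder behavior is data, not an if/else chain: a fourth folder is one more row. */
typedef struct { bool can_archive; const char *empty_text; } FolderTraits;

static const FolderTraits kTraits[MODE_COUNT] = {
    [MODE_INBOX]   = { true,  "Your inbox is empty" },   /* placeholder copy */
    [MODE_SENT]    = { false, "No sent messages" },
    [MODE_ARCHIVE] = { false, "Nothing archived yet" },
};

#define FOLDER_CAPACITY 64

typedef struct { int ids[FOLDER_CAPACITY]; size_t count; } Folder;
typedef struct { StoreMode mode; Folder folders[MODE_COUNT]; } MessageStore;

static void store_init(MessageStore *s) {
    assert(s != NULL);
    memset(s, 0, sizeof *s);
    s->mode = MODE_NONE;  /* "uninitialized": no folder selected yet */
}

/* Returns false when no mode is set (where the spec expects a raise). */
static bool store_can_archive(const MessageStore *s, bool *answer) {
    if (s->mode == MODE_NONE) return false;
    *answer = kTraits[s->mode].can_archive;
    return true;
}

static const char *store_empty_text(const MessageStore *s) {
    return s->mode == MODE_NONE ? NULL : kTraits[s->mode].empty_text;
}

/* Appends thread IDs to the current folder. */
static bool store_add(MessageStore *s, const int *ids, size_t n) {
    if (s->mode == MODE_NONE) return false;
    Folder *f = &s->folders[s->mode];
    if (n > FOLDER_CAPACITY - f->count) return false;
    memcpy(&f->ids[f->count], ids, n * sizeof *ids);
    f->count += n;
    return true;
}

/* Moves the thread at index `from` in the current folder to index `to` in the archive. */
static bool store_archive(MessageStore *s, size_t from, size_t to) {
    if (s->mode == MODE_NONE || !kTraits[s->mode].can_archive) return false;
    Folder *src = &s->folders[s->mode];
    Folder *dst = &s->folders[MODE_ARCHIVE];
    if (from >= src->count || to > dst->count || dst->count == FOLDER_CAPACITY) return false;
    int id = src->ids[from];
    memmove(&src->ids[from], &src->ids[from + 1], (src->count - from - 1) * sizeof(int));
    src->count--;
    memmove(&dst->ids[to + 1], &dst->ids[to], (dst->count - to) * sizeof(int));
    dst->ids[to] = id;
    dst->count++;
    return true;
}
```

Walking the second spec's steps through this sketch leaves the inbox as 123, 345 and the archive as 456, 567, 234, 678, matching the Kiwi expectations.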

One limitation of Kiwi is that it's not so good for testing UIKit-derived classes or anything that touches them. This is actually a limitation of Xcode logic tests—they don't fire up a UIApplication instance and don't play nicely with UIKit. To test elements of the project that can't be separated from the UI, we use automated integration tests.

Tool Number Two: KIF for Integration Testing

Kiwi helps us keep our lovely abstractions lovely, but what of the user-facing parts of the app? And how do we know that all the pieces work together? For integration tests of user-visible behavior, we use Square's KIF library. It uses the iOS accessibility framework to simulate user interaction with the app.

Testing every facet of the app by automatically driving the app through every possible user action would be insanely costly, and it would rapidly get to the point of diminishing returns. In addition, the fact that the tests run in the simulator by faking user behavior means that the tests run at human-ish speeds, not as fast as the CPU can run through them. Integration testing in the sim requires a number of additional practices and judgment calls to make it sane and valuable.

First, the tests have to be decoupled from the outside world. We've used method swizzling to stub out all our network calls and give back dynamically generated, predictable data to drive the app. To wit:

```objc
#import <objc/runtime.h>

// Swap the implementations of origM and newM on the given class.
+ (void)swapMethod:(SEL)origM withMethod:(SEL)newM inClass:(Class)class {
    Method origMethod = class_getInstanceMethod(class, origM);
    Method newMethod = class_getInstanceMethod(class, newM);

    // If origM is only inherited, give this class its own origM that runs the
    // stub's implementation, then point newM at the original implementation.
    if (class_addMethod(class, origM, method_getImplementation(newMethod), method_getTypeEncoding(newMethod))) {
        class_replaceMethod(class, newM, method_getImplementation(origMethod), method_getTypeEncoding(origMethod));
    } else {
        // origM is defined on this class itself: exchange the two directly.
        method_exchangeImplementations(origMethod, newMethod);
    }
}

+ (void)swizzleAPICalls {
    [HAWStubs swapMethod:@selector(getUserSearchResultsPage:search:delegate:)
              withMethod:@selector(stubGetUserSearchResultsPage:search:delegate:)
                 inClass:[HAWUserSearchClient class]];

    [HAWStubs swapMethod:@selector(getUserProfilePageForID:delegate:)
              withMethod:@selector(stubGetUserProfilePageForID:delegate:)
                 inClass:[HAWUserProfileClient class]];

    // and so on…
}
```

Each of those stub methods returns a simulated API response, based on responses recorded in an actual session. This keeps us from having to stand up a web server to test the client app, and puts the inputs to the tests entirely under our control. We frequently have the stubs respond to different inputs by returning different data or exceptions so that we can simulate behaviors like paging data, network failure modes, etc.
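
The sketch below shows input-dependent stubs without the Objective-C runtime. It uses a function pointer as a plain-C stand-in for swizzling. Client code always calls through `fetch_page`, and test setup swaps in a deterministic stub whose response depends only on its arguments. A later page yields end-of-results, and a hypothetical `"offline"` query yields a network error. All names and fixture file names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef enum { FETCH_OK, FETCH_END_OF_RESULTS, FETCH_NETWORK_ERROR } FetchStatus;
typedef struct { FetchStatus status; const char *fixture; } FetchResult;

/* Client code always calls through this pointer, so a test can replace the
   implementation: a plain-C stand-in for method swizzling. */
typedef FetchResult (*FetchPageFn)(const char *query, int page);

static FetchResult live_fetch_page(const char *query, int page) {
    (void)query; (void)page;
    /* Placeholder: the real client would make a network request here. */
    return (FetchResult){ FETCH_NETWORK_ERROR, NULL };
}

static FetchPageFn fetch_page = live_fetch_page;

/* Deterministic stub: responses depend only on the inputs. The fixture names
   stand for responses recorded from a real session (hypothetical files). */
static FetchResult stub_fetch_page(const char *query, int page) {
    assert(query != NULL);
    if (strcmp(query, "offline") == 0)
        return (FetchResult){ FETCH_NETWORK_ERROR, NULL };
    switch (page) {
    case 1:  return (FetchResult){ FETCH_OK, "search_page_1.json" };
    case 2:  return (FetchResult){ FETCH_OK, "search_page_2.json" };
    default: return (FetchResult){ FETCH_END_OF_RESULTS, NULL };
    }
}
```

Test setup assigns `fetch_page = stub_fetch_page;` once, the same way `swizzleAPICalls` runs before the scenarios. Paging and failure modes then become ordinary inputs.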

Second, the integration tests have to be decoupled from each other. If you run 50 integration tests one after the other and make a change to the fourth test that alters the app's state in a persistent way, you risk breaking the next 46 tests. To mitigate this risk, we bundle the tests into related modules and run steps to log the test user out and clear the database between modules. Where it's important that an intermodule dependency be tested (e.g., a message sent from a user profile should show up in the logged-in user's Sent folder), we write a test for it, but otherwise we try to keep the KIF test scenarios limited to one screen or a small set of related screens, each testing a limited but meaningful set of user behavior.
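
The module-level reset can be modeled in a few lines. This hypothetical plain-C sketch is not KIF code. `reset_app` stands in for the log-out and clear-database steps. Because the reset runs before every module, leftover state can only leak between scenarios that share a module.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for persistent app state (session + database). */
typedef struct { int logged_in; int db_rows; } AppState;

typedef int (*ScenarioFn)(AppState *app);  /* nonzero means the scenario passed */

typedef struct { const char *name; const ScenarioFn *scenarios; size_t count; } TestModule;

/* Stand-in for the steps that log the test user out and clear the database. */
static void reset_app(AppState *app) { app->logged_in = 0; app->db_rows = 0; }

/* Resets before every module and returns the number of failed scenarios. */
static size_t run_modules(AppState *app, const TestModule *mods, size_t n) {
    assert(app != NULL);
    size_t failures = 0;
    for (size_t m = 0; m < n; m++) {
        reset_app(app);
        for (size_t i = 0; i < mods[m].count; i++)
            if (!mods[m].scenarios[i](app)) failures++;
    }
    return failures;
}

/* Two hypothetical scenarios: one leaves data behind, one expects a clean slate. */
static int scenario_send_message(AppState *app) { app->logged_in = 1; app->db_rows++; return 1; }
static int scenario_expects_empty_db(AppState *app) { return app->db_rows == 0; }
```

If these two scenarios sit in separate modules, both pass. If they share a module, the second fails because of state the first left behind. That is the coupling the module boundaries are meant to contain.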

Third, a lot of judgment needs to be exercised in what gets tested. It is impossible to test every possible user input, but you want to hit all your major error states as well as at least one valid input. It is impossible to test every path through the code, but you want to reasonably simulate the things a user is likely to do and spend a little more effort on the parts of the code that matter most to the user experience.

If you've read about the pros and cons of integration testing, you've heard some version of the issues I've described above (coupling-induced fragility, impossibility of total coverage, etc.) cited as reasons why integration tests are a bad thing. Certainly, we've found them costlier to write and maintain than unit tests. The way we've applied them, though, has given us far too much value to even consider discarding them: Where we've used unit tests to write quality code, the integration tests have been invaluable in helping us maintain it. They form our "regression firewall": if the test board is green, then the developers, product managers, and QA all know that none of the big stuff has gone wrong. Bugs still get through, but they tend to be minor problems around the edges.

In the rare case something big gets through, we add it to the suite. It happened recently that a release made it into the wild with a 100% reproducible crash when the subscription screen was reached by a certain path. We translated the repro into a set of KIF steps:

```objc
[scenario addStep:[KIFTestStep stepToWaitForTappableViewWithAccessibilityLabel:@"Date Card" value:@"date_stream_user_0" traits:UIAccessibilityTraitNone]];
[scenario addStep:[KIFTestStep stepToTapViewWithAccessibilityLabel:@"Date Card" value:@"date_stream_user_0" traits:UIAccessibilityTraitNone atPoint:CGPointMake(1, 1)]];
[scenario addStep:[KIFTestStep stepToWaitForTappableViewWithAccessibilityLabel:@"View All Dates"]];
[scenario addStep:[KIFTestStep stepToTapViewWithAccessibilityLabel:@"View All Dates"]];
[scenario addStep:[KIFTestStep stepToWaitForTappableViewWithAccessibilityLabel:NSLocalizedString(@"Ask_Out", @"")]];
[scenario addStep:[KIFTestStep stepToTapViewWithAccessibilityLabel:NSLocalizedString(@"Ask_Out", @"")]];
[scenario addStep:[KIFTestStep stepToWaitForViewWithAccessibilityLabel:@"Upgrade to connect\nwith date_stream_user_0"]];
```

From this short repro, you can get the feel of KIF tests: Check the state of the screen (mostly via the accessibilityLabel and accessibilityValue properties of screen elements), interact with screen elements, check the state, interact, and so on.

Our crash occurred on the last line of the repro above. We added the steps to the suite, ran it, watched it crash; then we fixed the bug, ran the test, watched it pass; then we ran the rest of the upgrade-related test module to make sure we didn't break anything. This is a much longer process than just diving in and fixing the bug as soon as you've diagnosed it, but it boosts confidence among everyone who builds or inspects the app in two ways: They trust that we haven't broken existing functionality (thanks to existing tests), and they trust that the bug being addressed in the new test won't return.

One KIF trick that comes in handy is defining our own one-off steps. Any parameterizable process that gets used more than once gets a factory method in our own category on the KIFTestStep class, but sometimes the code is made more comprehensible when a task that only happens once is defined inline with a block:

```objc
[scenario addStep:[KIFTestStep stepWithDescription:@"HAWMessageThreads table should be empty" executionBlock:^(KIFTestStep *step, NSError **error) {
    NSArray *threads = [HAWMessageThread MR_findAll];
    KIFTestCondition(threads.count == 0, error, @"Threads table should have no threads");
    return KIFTestStepResultSuccess;
}]];
```

The Cloud Inside the Silver Lining

There are two major downsides to KIF. The first is the syntax: all those addStep: calls build the test suite rather than run it, so there's no clean way to set a breakpoint at a particular instance of a step (unless you've defined the step yourself). We tolerate it because KIF is the best thing we've found for this type of testing. We feel it has yet to achieve true maturity, and we've extended it quite a bit for our own purposes, but it largely does what it says it will and has served as one of the pillars of our testing strategy.
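
The build-then-run split is easier to see in miniature. Below is a hypothetical plain-C model of it, not KIF's actual implementation. `add_step` only records a step, and every recorded step later executes inside one shared loop. A breakpoint on the loop therefore stops on every step, while a breakpoint on an `add_step` call fires before anything has run.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_STEPS 32

typedef int (*StepFn)(void *ctx);  /* nonzero means the step passed */

typedef struct { const char *description; StepFn run; } Step;
typedef struct { Step steps[MAX_STEPS]; size_t count; } Scenario;

/* Like addStep:, this only records the step; nothing executes yet. */
static void add_step(Scenario *s, const char *description, StepFn run) {
    assert(s->count < MAX_STEPS);
    s->steps[s->count++] = (Step){ description, run };
}

/* All steps execute here later; pausing on one particular step would need a
   conditional breakpoint on `i`. Returns the index of the first failing step,
   or the step count if every step passed. */
static size_t run_scenario(const Scenario *s, void *ctx) {
    for (size_t i = 0; i < s->count; i++)
        if (!s->steps[i].run(ctx)) return i;
    return s->count;
}

/* Two hypothetical steps for the usage example. */
static int step_increment(void *ctx) { ++*(int *)ctx; return 1; }
static int step_fail(void *ctx) { (void)ctx; return 0; }
```

A one-off step defined with its own block (as above) sidesteps the problem, because its body is a distinct piece of code you can break inside.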

The other pain point is the run time of the tests. Our full suite takes nearly 15 minutes to run, which makes it useless for fast-iterating styles of TDD/BDD. Our usual method of handling this goes something like:

- Run only the test scenario related to the feature or bug being addressed.

- Once the central test passes, run related test modules to make sure nothing was broken.

- In cases where confidence is low or a change is far-reaching, run the full suite at the developer bench. Otherwise, merge your change (after review: See below) and be ready to jump back on it if the CI board (again: See below) goes red.

This is another one of those times when developer judgment plays a key role. Running the full suite is a major break in your rhythm, especially when you're making (what feels like) a small change. The value of moving on to the next thing needs to be weighed against the risk inherent in the change being made, and the evaluation of that risk depends on one's intimacy with the code and seasoning as a programmer. In the section on practices below, I go into how we buttress individual judgment with the collective wisdom of the team.

Tool Number Three: CruiseControl.rb for Continuous Integration

CruiseControl.rb is a darling of the Rails community, but it's not just for Rails apps. It's quick to set up—including on a Mac, which is required to run our Xcode-based tests—and can run and extract results from arbitrary build-and-test scripts. CC.rb handles polling our GitHub repositories for changes to projects; our custom scripts do the rest, and CC.rb reports red/green for each project by checking standard Unix return values from the scripts.

First the common library shared by our iPhone and iPad projects gets built, and its Kiwi tests are run:

```bash
#!/bin/bash
git submodule init
git submodule update
xcodebuild -scheme HAWCommonTestsCL -sdk iphonesimulator \
  TEST_AFTER_BUILD=YES -arch i386 clean build | grep "BUILD SUCCEEDED"
```

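It's that simple. With TEST_AFTER_BUILD=YES, the Kiwi tests run as part of the build, so xcodebuild prints "BUILD SUCCEEDED" only when they pass, and the exit status of the final grep is the success or failure that CruiseControl.rb reports.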

Running the KIF tests for the iPhone and iPad projects is a bit more of a production. We have to do extra steps to build the common library prior to the main project, and we have to use Waxsim (we prefer Jonathan Penn's excellent fork) to run the simulator from the command line and capture console output, and sift through that console output for success or failure messages. The end result is the same, though: The return value of the script is reported as the test outcome by CC.rb.
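
The "sift through console output" step boils down to mapping a log to a Unix exit status. Here is a hedged plain-C sketch of that mapping, not the script we actually run. `SUMMARY_MARKER` is an assumed summary line; substitute whatever your KIF version prints. A missing summary counts as a failure, since it usually means the app crashed or hung before finishing.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Assumed summary marker: substitute the summary line your KIF version prints. */
#define SUMMARY_MARKER "TESTS FINISHED: "

/* Maps captured simulator console output to a Unix exit status, which is all
   CC.rb inspects: 0 (green) only if a summary line reports zero failures. */
static int exit_status_from_log(const char *log) {
    assert(log != NULL);
    const char *summary = strstr(log, SUMMARY_MARKER);
    if (summary == NULL) return 1;  /* no summary: the app crashed or hung */
    int failures = -1;
    if (sscanf(summary + strlen(SUMMARY_MARKER), "%d", &failures) != 1) return 1;
    return failures == 0 ? 0 : 1;
}
```

A small `main` that reads the captured Waxsim log and returns `exit_status_from_log(buffer)` would play the same role in a build script that `grep` plays in the common-library script.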

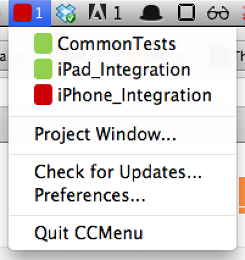

Continuous integration, of course, is only as good as the speed with which it gives you feedback. We run our CI on a Mac mini with Screen Sharing enabled and the CC.rb dashboard exposed on a convenient port. CruiseControl.rb can be set up to send email, but our inboxes are plenty cluttered already. We get the results through two main channels. On the developer desktop, CCMenu keeps the latest test results an eye flick away.

To broadcast status to management and the wider team, we keep CruiseControl.rb's web dashboard on a large screen mounted on a wall overlooking the developers' corner of the shop.

The public display of results is an important driver of good testing habits. As soon as a team gets used to meeting high expectations for test reliability, a red test suite on display for all to see becomes a distracting irritant. While we don't generally suggest introducing distracting irritants into technical workflows, in this case the irritation is confluent with the worthy goal of maintaining a reliable test suite that consistently inspires confidence in everyone who builds or depends on the software.

Our Best Practices: The Rules to Guide the Tools

Tools are only valuable when they are used well. We surround our tools with processes to get the most out of them, and tune those processes as we go in response to real-world feedback. Here are three simple rules that guide our use of the tools described above:

A: TDD

The core rhythm of TDD (and its cousins like BDD) is often described as "red-green-refactor":

- Red: You write a test describing the behavior you want, run it, and watch it fail.

- Green: You nudge your code into a state where the test passes.

- Refactor: You inspect your code (and your tests) for duplication and other issues, and remove them. The tests keep you from breaking anything.

The last step is probably the least-understood, most-skipped one in the process. People carry a lot of weird, fuzzy definitions in their heads for the word refactor, often having to do with larger re-architecture of code. In the TDD context, it has a very specific meaning: Refactoring is changing code without changing behavior. An important sidebar to this is that you have to verify the constancy of the behavior, or you're not really refactoring—you're just changing stuff. Automated tests are one (relatively cheap) way to do this—you can have confidence that the behavior being tested hasn't changed.

Skipping the refactoring step is a sure path to technical debt. The refactoring step is doing the dishes after dinner, pouring water on the ashes of your campfire, cleaning your rifle after you've fired it. After you've added or altered code, any duplicated bits should be factored out into methods (and tests run again); any ugly or slow bits should be reworked (and tests run again); any dead or commented-out code should be removed . . . You get the picture. Professional software development isn't a game of seeing how quickly we can deliver working code; there is the cost of future change to consider, too, and leaving your code clean makes life better for the next person who touches it (and that will as often as not be you).

All that to say: The automated tests don't increase your product quality. They provide the support and confidence for you to apply your skill and judgment toward improving the quality of your code.

B: Tests Should Always Be Green

Tests do not inspire confidence when they're failing. Above, I mentioned the social incentive built into making our test results public, but the practical value is important, too. The tests exist to convince us that we haven't broken anything. The moment you allow yourself to get comfortable with broken or inconsistent tests, you've lost sight of why you built them to begin with. There may be situations where you allow them to be red for a short time, even a few days during a large re-architecture, but these should be extreme situations, and you should not get comfortable with them.

We foster a culture of personal responsibility around the tests. If your name is on the failing commit, it's yours to fix. In the event that the committer can't address it right then, the next person with a free hand is responsible for investigating the issue.

C: 2 > 1

A minimum of two people must look at every piece of our code before it gets merged into the master branch. We have two ways to meet this requirement: pair programming and GitHub pull requests. In both cases, the goal is to have a second active collaborator devoting attention to the problem, to overcome the individual tendency to code to the happy path, and to bring different skills and perspectives to the question of the best way to implement something.

In the case of pull requests, the programmer inspecting the code is expected to run both automated and manual tests, as well as applying a critical eye to the code. With both practices (pairing and pull requests), both parties are expected to be active collaborators; no one should be rubber-stamping someone else's decisions.

Conclusion

iOS culture, even in many large organizations with skilled engineers, is behind on up-to-date testing practices. Aggressive mobile strategies up against lengthy App Store release cycles and manual user app updates create pressure to jettison code and best practices that might be seen as "extras."

It’s ironic that iOS development, the catalyst of the consumer web explosion of the past few years, has been a reluctant latecomer to TDD, perhaps the most cherished methodology of the agile web development culture that is building the consumer Internet.

Over the platform's short history, agile methodology and TDD have been at odds in iOS development culture. The agile desire for speed has taken precedence over other concerns due to a past dearth of high-powered automated testing frameworks, and the results have often been high crash rates, long QA cycles, and a whole series of tribulations that the modern developer associates with the antiquities of waterfall development.

Our experience building HowAboutWe Dating for iPhone and iPad has shown that TDD and CI on iOS are well worth the effort. The tools are young but rapidly maturing. It is possible! Our move to a genuine culture of TDD on iOS has transformed the quality of our software and how quickly and predictably we can deliver it. So we're believers that any organization not already employing these practices should dive in and measure the results for themselves.

A version of this article will appear on the HowAboutWe blog. Liked this? Check out the HowAboutWe Team’s last technical post about 7 Key Questions To Ask When Taking Your App From iOS To Android.

HowAboutWe is the modern love company and has launched a series of products designed to help people fall in love and stay in love. Aaron Schildkrout is co-founder and co-CEO of HowAboutWe, where he runs product.

Brad Heintz is the Lead iOS Developer at HowAboutWe. When he's not bringing people better love through TDD, he's tinkering, painting, or playing the Chapman Stick.

James Paolantonio is a mobile engineer at HowAboutWe, specializing in iOS applications. He has been developing mobile apps since the launch of the first iPhone SDK. Besides coding, James enjoys watching sports, going to the beach, and scuba diving.

[Image: Flickr user Greg Westfall]
